Abstract

Our objective is to develop a video reflection removal algorithm that is both computationally simple and effective. Unlike previous methods that depend on machine learning, our approach is a local image reflection removal technique that combines image completion with lighting compensation. We use MSER (maximally stable extremal region) feature matching to reduce image processing time and to shrink the spatial area over which layer separation is performed. To improve adaptability, image completion and lighting compensation are used to interpolate layers and update the motion field data in real time. The approach is simple and efficient, quickly producing reflection-free video sequences under a variety of lighting conditions, and restoring the processed frames to video enables real-time detection. Experiments confirm the efficacy of the method and show performance comparable to that of advanced methods.

1. Introduction

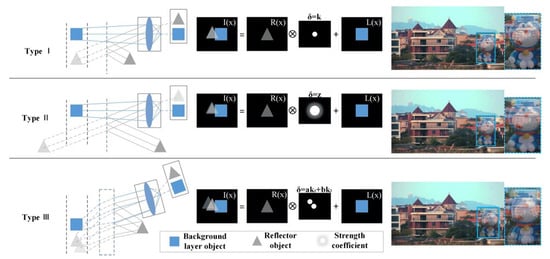

Reflection removal separates an image into a background layer and a reflection layer. Existing approaches include single image reflection removal (SIRR) [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15], multi-image reflection removal [16,17,18,19,20,21], video reflection removal [22,23,24,25], light field camera reflection removal [26,27,28,29], and reflection removal with special-function cameras (sensors) [30,31]. The image formation models underlying reflection removal are shown in Figure 1.

Figure 1.

Physical (left) and mathematical (middle) image formation models for reflection removal, and the actual experimental scenario (right). Types I and II ignore the refraction effect of thick glass. Type III arises with thicker glass, which reflects and refracts light from objects in front of the glass to different locations with different intensities, producing a superimposed (ghosted) copy of those objects in the reflection layer.
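As a toy illustration of the layer superposition in Figure 1, the sketch below composes a background and a reflection under two assumed forms: an additive model for Types I and II, and, for Type III, a reflection to which thick glass adds a shifted, attenuated ghost copy. The exact models are those shown in Figure 1; the function names, the clipping to [0, 1], and the single-shift ghost kernel are illustrative assumptions.

```python
def superimpose(background, reflection, alpha=1.0):
    """Assumed Types I/II model: I = B + alpha * R, clipped to [0, 1].

    Images are grayscale 2-D lists of floats in [0, 1].
    """
    return [
        [min(1.0, b + alpha * r) for b, r in zip(b_row, r_row)]
        for b_row, r_row in zip(background, reflection)
    ]


def ghosted(reflection, shift=1, attenuation=0.5):
    """Assumed Type III model: thick glass adds a copy of R that is shifted
    `shift` pixels to the right and scaled by `attenuation`."""
    height, width = len(reflection), len(reflection[0])
    out = [row[:] for row in reflection]
    for y in range(height):
        for x in range(shift, width):
            out[y][x] += attenuation * reflection[y][x - shift]
    return out


# Example: a bright reflected spot and its ghost over a flat background.
B = [[0.2, 0.2, 0.2, 0.2]]
R = [[0.0, 0.4, 0.0, 0.0]]
I_type3 = superimpose(B, ghosted(R))
```

The ghost appears one pixel to the right of the reflected spot, which is the superimposed-image phenomenon that the Type III caption describes.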

Recent research has shown that well-designed machine learning networks [9,10] can learn the distinguishing features needed for reflection removal from large training sets. For instance, by using image features for semantic segmentation [6] or by extracting a reflection dynamics core module [5], usually from a VGG network, these networks better predict the motion of the background and reflection layers and identify the reflection parts of an image. Prediction is typically carried out in two stages [7,9,11]. Another study reduced the problem of under- and overexposed pixels in captured images by using multiple polarized images taken at different exposure times [16] and introducing a weighted non-negative matrix factorization with total variation regularization. However, these methods often require long training times.

In this paper, we propose a training-free method for removing reflection regions from videos, based on an image completion strategy and a lighting correction mechanism. The method has two essential components: (1) recognition of reflection regions, which compares two consecutive video frames and uses lighting correction to lower the recognition error rate; and (2) pixel substitution during reflection removal, which covers the target reflection area and simplifies the removal procedure. Coupling these two components ensures both the detection speed of the target region recognition step and the completeness of reflection removal. In contrast to [4,16,26,28], our technique requires neither a complicated machine learning network nor a large amount of training data, and image acquisition is straightforward. Our contributions are summarized as follows:

- (1) We propose a video reflection removal method that combines image completion with illumination compensation; compared with the same method without illumination compensation, it achieves 5.2% higher reflection region removal accuracy and operation speed.

- (2) We propose an illumination compensation mechanism that combines luminance adjustment and gamma correction and is used jointly with MSER region matching for reflective region extraction.

The rest of this paper is organized as follows. Section 2 reviews related work. Section 3 introduces the illumination compensation mechanism based on brightness adjustment and gamma correction and the reflection region extraction method based on MSER region matching, focusing on the reflection region removal method that fuses image completion and illumination compensation, and analyzes its effectiveness. Comparative experiments between the image completion-based method and a relative motion-based reflection region removal method then demonstrate the superiority of our approach.

2. Related Works

Single Image Reflection Removal (SIRR). Early work usually relied on priors for image reflection removal, such as layer sparsity [2] and smoothness [3]. These methods depend mainly on an assumed asymmetry between the reflection and background layers, so their applicability is limited to special cases. In recent years, machine learning-based methods have prevailed: well-designed networks learn the properties needed for reflection removal from large training sets [4]. Auxiliary cues are generally used to ease training. For example, image features are used to extract a reflection dynamics core module [5], typically from a VGG network, which helps predict the motion of the background and reflection layers and locate the reflection part of the image. Some methods use hybrid networks [15] for image deblurring or enhancement and then exploit gradient information [1,4,6,7] and edge information [10]; this information can be fused with reflection formation models [8], alpha blending masks [14], and other cues to predict image reflections as a linear expression, usually in two stages [7,9,11]. Although machine learning-based methods achieve good reflection removal results, they all approach the problem from the perspective of a single image.

Multi-image reflection removal. Multi-image methods generally capture multiple images of a scene in a predefined manner, which makes the reflection removal problem easier to handle. Most of them rely on motion cues [17,18,19], exploiting the difference in motion between the two layers in images of the same scene captured from different viewpoints. Some methods combine a color illumination reflection model with sparse non-negative matrix factorization (NMF) optimization in a multi-image framework [20]; both the synthetic data and the real images of non-dielectric materials used in that work conform to the proposed reflection model. Others combine image information and multiscale gradient information with loss functions inspired by human perception [21] to remove reflections with machine learning. Some studies use multiple polarized images taken at different exposure times to achieve accurate reflection removal in high dynamic range scenes [16], minimizing the under- and overexposed pixel problem by introducing a weighted non-negative matrix factorization (WNMF) with total variation regularization. Because images from different viewpoints are available, these multi-image methods achieve better reflection removal results. However, image acquisition is time-consuming and requires expertise, which limits their applicability for non-professional users.

Video reflection removal. Motion cues are also often used for video reflection removal [15,16,17,18,19,20,21,22]. Information about the relative displacement of the environment (i.e., reflection dynamics), captured in the image sequence and separated from the video frames, helps separate the reflection layer, and temporal coherence constraints reduce reflection artifacts. One study proposed a recursive reflection removal method based on a prediction-correction model [25], which effectively decomposes the reflected image into reflection and background layers according to the principle of a real centrifuge. Other studies separated reflections and dirt by averaging images with prior-based algorithms [23], or introduced optical flow into specular reflection removal with high-speed cameras and stroboscopes [24], using optical flow to compensate for the noise at specular reflection locations caused by inter-frame differences when the high-speed camera moves. Unlike real experimental videos that contain both object and camera motion, our experiments only need to feed two frames of the video sequence into the model and use feature extraction to find the same region in both frames, which defines the range of reflection removal; changing reflections in the video can then be removed with the inter-frame difference method.

Light field camera reflection removal. Although layer separation plays no role in conventional camera imaging, studies [26,27,28] demonstrate that light field imaging can help with reflection removal. One study proposed a reflection-free flash-only cue [27] that makes reflections easier to distinguish, with a dedicated framework that avoids introducing artifacts when the flash-only image is used. Other studies based on light field (LF) imaging [26,28] use epipolar plane images to retrieve scene depth maps from low-frequency images, divide the image gradient points into background and reflection layers according to their depth values, and reconstruct the background image with sparse optimization. The drawback of these methods is that a special light field camera is required. By contrast, our method acquires environmental information with an ordinary camera and needs no flash processing of the acquired images, which simplifies data acquisition.

Special function camera/sensor reflection removal. Special-format images acquired by special cameras yield better reflection removal results. One study [30] proposed a learning-based reflection removal method for RGB images in which active near-infrared (NIR) images captured by an NIR camera guide reflection suppression. Another study removes reflections using the data available on dual-pixel (DP) sensors [29], simplifying the assignment of image gradients to the background layer by exploiting the defocus-disparity cues that appear in the two sub-aperture views. However, most camera APIs currently do not provide access to these data, and such cameras are expensive.

3. Method

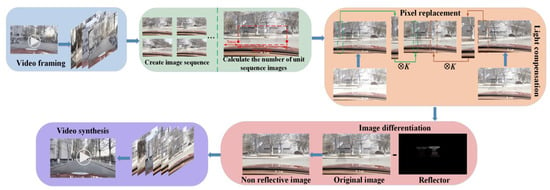

This paper proposes a reflection removal method for black box (dashcam) videos that combines image completion and illumination compensation, treating reflection removal as the gradient reconstruction and completion of the transmitted image. Some reflections in the video shake because of vehicle bumps, and traditional feature matching region extraction methods cannot fully extract the target region. We therefore decompose the video into frames, combine them into an image sequence, and extract regions with the MSER feature region extraction method. The method exploits the fact that the position and overall shape of reflections on the windshield do not change as the vehicle drives and depend only on the direction of sunlight. Differences between reflections under different lighting conditions are compensated for by adding a loss function. Finally, the image is reconstructed with a motion gradient method through image completion to remove the reflection. Our approach has two key parts: (1) extraction of the reflection region, which involves illumination adjustment and MSER region matching between consecutive frames; and (2) removal of reflections from the images, where image differencing is combined with the iteratively reweighted least squares (IRLS) method and a penalty function for motion map decomposition to produce a background layer sequence. Figure 2 gives an overview of our approach.

Figure 2.

An overview of our method. The blue module is used for video framing operations, selecting images with reflective areas, calculating the number of images used in the image sequence (represented by the green module), and then adding lighting compensation for image completion (represented by the orange module). Reflection removal is achieved through the difference between the image and the reflection layer (represented by the pink module). Finally, image collection and video restoration (represented by the purple module) are carried out.

3.1. Light Compensation

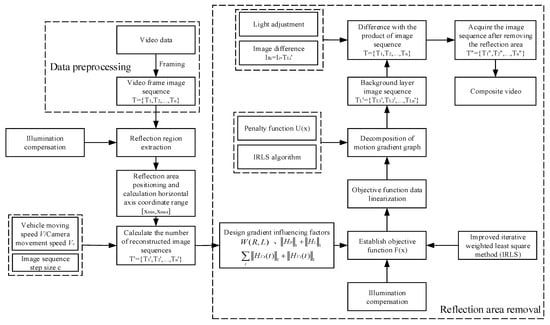

Recognition of reflection areas is usually influenced by the background layer and by lighting intensity; changes in lighting intensity have an especially significant impact on the accuracy of real-time reflection area recognition in black box cameras. To identify reflection areas more easily, we use a light compensation algorithm that combines brightness adjustment and gamma correction to increase or decrease the illumination of areas where the reflection is inconspicuous because of lighting changes. The gradient difference of the image determines whether brightness adjustment and light compensation are needed. Figure 3 shows the overall process of the algorithm.

Figure 3.

A flowchart of the reflective region removal method based on the combination of image completion and illumination compensation.

3.1.1. Image Gradient Metric

To standardize the gradient metric, we define the gradient value of each pixel of the acquired image, captured within a given exposure time, by Equation (1); the total image gradient value is given by Equation (2).

The image gradient is normalized by Equation (3) to accelerate convergence and improve computational efficiency. A Gaussian function is used to simulate the influence of different brightness components on the image, and weighting these brightness influence factors differently yields a brightness estimate of the gradient value. The formulation also involves an activation threshold and a weight coefficient that adjusts the intensity.
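Equations (1) to (3) are not reproduced here, so the sketch below only illustrates the kind of computation described: a per-pixel gradient magnitude (forward differences, an assumption), the total gradient as a sum over pixels, and min-max normalization to [0, 1]. The `needs_compensation` rule, which compares the mean normalized gradient inside a region with the mean outside it against a threshold, is a hypothetical stand-in for the paper's gradient-difference test.

```python
import math


def pixel_gradients(img):
    """Gradient magnitude per pixel using forward differences (zero at the far edges)."""
    h, w = len(img), len(img[0])
    grad = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = img[y][x + 1] - img[y][x] if x + 1 < w else 0.0
            gy = img[y + 1][x] - img[y][x] if y + 1 < h else 0.0
            grad[y][x] = math.hypot(gx, gy)
    return grad


def total_gradient(grad):
    """Total image gradient: the sum of all per-pixel magnitudes."""
    return sum(sum(row) for row in grad)


def normalize(grad):
    """Min-max normalization to [0, 1]; a constant map becomes all zeros."""
    lo = min(min(row) for row in grad)
    hi = max(max(row) for row in grad)
    if hi == lo:
        return [[0.0] * len(row) for row in grad]
    return [[(g - lo) / (hi - lo) for g in row] for row in grad]


def needs_compensation(norm_grad, mask, threshold):
    """Hypothetical rule: compensate when the region's mean gradient exceeds
    the mean gradient of the rest of the image by less than `threshold`."""
    inside = [g for gr, mr in zip(norm_grad, mask) for g, m in zip(gr, mr) if m]
    outside = [g for gr, mr in zip(norm_grad, mask) for g, m in zip(gr, mr) if not m]
    if not inside or not outside:
        return False
    diff = sum(inside) / len(inside) - sum(outside) / len(outside)
    return diff < threshold
```

Normalizing first makes the threshold independent of the absolute intensity range of each frame, which is the practical point of the normalization step.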

3.1.2. An Illumination Compensation Mechanism Based on Luminance Adjustment and Gamma Correction

To keep illumination from degrading the accuracy of reflective region recognition, we propose an illumination compensation mechanism based on brightness adjustment and gamma correction. The video captured by the black box camera is processed frame by frame, and the resulting image sequence is taken as the input. Each image is converted from RGB to YUV space, and its Y component is extracted with Equation (4), in which the three coefficients are the proportions of the R, G, and B channels.

In the log-mean luminance computation, the average runs over all rows and columns of the image, and a small adjustment factor avoids pixel singularities (for example, the logarithm of zero-valued pixels). The luminance adjustment function is built on the logarithmic mean luminance of the image so as to capture both its global and local features.

The normalized luminance is expressed by Equations (6) and (7). Adjusting the regional luminance of the original image in this way yields the processed image.
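The quantities behind the Y extraction and the log-mean luminance can be illustrated as follows. The BT.601 luma weights (0.299, 0.587, 0.114) are an assumption standing in for the paper's channel proportions, `delta` plays the role of the adjustment factor that keeps the logarithm finite for black pixels, and `global_adapt` is one common log-mean-based luminance mapping, given as a hypothetical example rather than the paper's Equations (6) and (7).

```python
import math


def luma(rgb):
    """Y component of YUV from an RGB image (2-D list of (r, g, b) in [0, 1]).
    BT.601 weights are assumed."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def log_average(y, delta=1e-4):
    """Logarithmic mean luminance: exp(mean(log(delta + Y))) over all M x N pixels."""
    n = sum(len(row) for row in y)
    return math.exp(sum(math.log(delta + v) for row in y for v in row) / n)


def global_adapt(y, delta=1e-4):
    """Hypothetical global adaptation: log(Y/Lavg + 1) / log(Ymax/Lavg + 1).
    A concave, monotone mapping: dark pixels gain relatively more than bright
    ones, and the brightest pixel maps to 1."""
    l_avg = log_average(y, delta)
    y_max = max(max(row) for row in y)
    if y_max == 0:
        return [[0.0] * len(row) for row in y]
    denom = math.log(y_max / l_avg + 1.0)
    return [[math.log(v / l_avg + 1.0) / denom for v in row] for row in y]
```

Using the log mean rather than the arithmetic mean keeps a few very bright pixels (such as a strong reflection) from dominating the reference level.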

Luminance adjustment alone cannot achieve accurate light compensation of the reflective region. Gamma correction of the tone curve is also needed: nonlinear tone editing separates the dark and bright regions of the image and increases their contrast, providing a better detection environment for reflective region identification.

First, the image is converted from RGB to HSV space, yielding the H, S, and V channels. The multi-scale Gaussian function is given by Equation (8).

The luminance estimate of the original image is obtained by convolution, as shown in Equation (9).

To capture the global and local features of the image, a Gaussian function is used to simulate the influence of the various luminance components, and these components are weighted differently to obtain the luminance estimate of the image,

where each Gaussian has its own scale factor and normalization constant, and each luminance component has its own weight.

The convolution kernel size is set according to the scale factor and is proportional to it; the larger the scale factor, the better the estimate captures the global brightness features of the image. The convolution is applied to the V component of the input image in HSV space. After the luminance components are extracted, the two-dimensional gamma correction function of Equation (11) is constructed from the image luminance distribution.
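A minimal sketch of the multi-scale luminance estimate, assuming the Gaussian form F(x, y) = λ·exp(-(x² + y²)/σ²) with λ chosen so that the kernel sums to 1, a kernel radius of ⌈3σ⌉ (proportional to σ, as the text states), replicate padding at the borders, and equal weights by default. The scales in the defaults are illustrative, not the paper's values.

```python
import math


def gaussian_kernel(sigma):
    """Normalized kernel lam * exp(-(x^2 + y^2) / sigma^2) with radius ceil(3 * sigma)."""
    radius = max(1, math.ceil(3 * sigma))
    kernel = [
        [math.exp(-(dx * dx + dy * dy) / (sigma * sigma)) for dx in range(-radius, radius + 1)]
        for dy in range(-radius, radius + 1)
    ]
    total = sum(map(sum, kernel))  # equals 1 / lam
    return [[v / total for v in row] for row in kernel], radius


def convolve(img, kernel, radius):
    """2-D convolution with replicate padding (the kernel is symmetric)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(-radius, radius + 1):
                yy = min(max(y + dy, 0), h - 1)
                for dx in range(-radius, radius + 1):
                    xx = min(max(x + dx, 0), w - 1)
                    acc += kernel[dy + radius][dx + radius] * img[yy][xx]
            out[y][x] = acc
    return out


def multiscale_luminance(v, sigmas=(1.0, 2.0, 4.0), weights=None):
    """Weighted sum of Gaussian-blurred copies of the V channel (weights sum to 1)."""
    if weights is None:
        weights = [1.0 / len(sigmas)] * len(sigmas)
    est = [[0.0] * len(v[0]) for _ in v]
    for sigma, weight in zip(sigmas, weights):
        kernel, radius = gaussian_kernel(sigma)
        blurred = convolve(v, kernel, radius)
        for y, row in enumerate(blurred):
            for x, value in enumerate(row):
                est[y][x] += weight * value
    return est
```

Small scales preserve local structure while large scales follow the global illumination, which is why the estimate combines several of them.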

Nonlinear tone editing improves the contrast between the two parts of the image. Equation (12) gives the gamma-corrected brightness value in HSV space, after which the corrected image in RGB space is obtained by color space conversion.

In Equation (12), the reference level is the average value of the luminance estimate, and the V-channel value in HSV space is obtained from the input image by color space conversion.
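Equations (11) and (12) are not reproduced here. A widely used two-dimensional gamma form, assumed here rather than taken from the paper, sets γ(x, y) = (1/2)^((m − I(x, y))/m), where I is the luminance estimate and m its mean, and maps the V channel as V' = V^γ for values in [0, 1]. Pixels lit below the mean get γ < 1 and are brightened; pixels lit above it get γ > 1 and are darkened.

```python
def gamma_2d(v, illum):
    """Adaptive 2-D gamma correction of the V channel (assumed form).

    v:     V channel, 2-D list of floats in [0, 1]
    illum: luminance estimate of the same size (e.g. a multi-scale Gaussian blur of v)
    """
    n = sum(len(row) for row in illum)
    m = sum(sum(row) for row in illum) / n  # average of the luminance estimate
    if m == 0:
        return [row[:] for row in v]  # no reference level: leave unchanged
    out = []
    for v_row, i_row in zip(v, illum):
        # gamma < 1 where illumination is below the mean (brighten), > 1 above it (darken)
        out.append([val ** (0.5 ** ((m - i) / m)) for val, i in zip(v_row, i_row)])
    return out
```

Because γ varies per pixel with the illumination estimate, the same V value is treated differently in shadowed and brightly lit parts of the frame.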

Finally, Equation (13) combines the brightness-adjusted image and the gamma-corrected image of the target region as a weighted sum, with one weight for the input image and one for the corrected image. The light compensation mechanism improves the visibility of images captured under low light or with strong lighting differences without reducing image sharpness. Adjusting brightness and contrast makes dark areas clearer and richer in detail, reducing both the incompleteness of images after reflection removal and the misrecognition rate of obstacles. The method can be applied to real-time image processing or to large image datasets, providing real-time, reflection-free information about the vehicle's operating environment.

The mechanism has limitations. Simple brightness adjustment and gamma correction cannot solve all lighting problems; in more complex lighting scenes, the mechanism may fail to suppress lighting differences or restore details. An excessive increase in brightness or contrast may also cause loss of detail or overexposure, reducing image quality. Finally, the mechanism is a global adjustment and cannot treat different regions individually. Therefore, in this paper the feasibility of the method is tested and validated only under relatively uniform lighting. To address the lack of regional adjustment, we add a reflection region extraction process that recognizes and extracts local reflection regions through MSER region matching.
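Equation (13) is described as a weighted combination of the brightness-adjusted and gamma-corrected images. A minimal sketch follows, assuming pixel-wise weights that sum to 1 and a final clip to [0, 1]; the clip is a guard against the overexposure risk noted above, not part of the paper's formula.

```python
def fuse(adjusted, corrected, w_adjusted=0.5, w_corrected=0.5):
    """Pixel-wise weighted sum of two same-size images with values in [0, 1]."""
    if abs(w_adjusted + w_corrected - 1.0) > 1e-9:
        raise ValueError("weights are assumed to sum to 1")
    return [
        [min(1.0, max(0.0, w_adjusted * a + w_corrected * c)) for a, c in zip(ra, rc)]
        for ra, rc in zip(adjusted, corrected)
    ]
```

Shifting weight toward the gamma-corrected image increases contrast between dark and bright regions; shifting it toward the brightness-adjusted image keeps the result closer to the globally normalized luminance.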

3.2. Target Area Extraction

The first step of reflection removal is to extract the reflection region to be removed. Because the position of the reflection region changes only with the position of the light source while the vehicle travels, the reflection on the front windshield generally does not change. However, the reflection region cannot be completely identified from light intensity alone; this phenomenon is shown in Figure 4. We define the region of interest (ROI) by converting the detection area to grayscale, presetting a selection threshold range, and selecting regions with similar gray values whose area exceeds a set threshold. We then compute the edge gradient difference of the target area in each image of the sequence and compare it with a set range threshold. If the difference is below the threshold, we reduce the brightness of the ROI outside the target reflection area and reassign the image weights; otherwise, no lighting compensation is needed. The MSER algorithm is then used to compute the region range difference and the region set spacing [32] of the extracted maximally stable extremal regions. The specific procedure is as follows.

Figure 4.

A diagram of the unchanged reflection area.

Step 1: Video framing. The acquired video is split into frames to obtain the image sequence.

Step 2: Region of interest (ROI) extraction. The detection region is converted to grayscale, a selection threshold range is preset, and regions with similar gray values are selected. A selected region whose area exceeds the set threshold is defined as the region of interest.

Step 3: Illumination compensation. The edge gradient difference of the target reflective region in each image of the sequence is computed and compared with the set range threshold. If the gradient difference is below the threshold, the luminance of the ROI outside the target reflective region is reduced and the luminance is normalized. The luminance component coefficients are set from the luminance influence factors to obtain the luminance estimate. Luminance adjustment is then combined with the gamma correction function, and weights are assigned to the images obtained in YUV and HSV space to produce the processed image.

Step 4: The MSER algorithm is used to extract the maximally stable extremal regions. Steps 5 to 7 describe the MSER matching operations.

Step 5: Region range difference calculation. For the two frames acquired in the experiment, consider the sets of MSER regions in the previous and next frames. For the ith MSER region in the previous frame, compute the set of range differences between it and the unmatched regions in the next frame, and normalize this set.

Step 6: Region set spacing calculation. For the same two sets of MSER regions, compute the set of distances between the ith MSER region in the previous image and the unmatched regions in the next image, and normalize this set.

Step 7: Matching region extraction. Form the set of matching values of the ith MSER from the two normalized sets, and take the MSER with the smallest matching value as the matching region.

Step 8: Initialize the set of core objects as an empty set, the number of clusters as zero, the dataset to be processed as the full dataset, and the classification result as empty.

Step 9: Traverse the dataset to find the core points. For each point, find its neighborhood dataset according to the distance measure; if the neighborhood contains at least the minimum number of points (the density threshold), add the point to the set of core points. If no core point is found after the traversal, i.e., the set of core points is empty, the algorithm terminates. This indicates that the threshold and the neighborhood radius are unreasonable; usually the neighborhood radius should be enlarged or the threshold reduced.

Step 10: While the set of core points is not empty, DBSCAN clustering continues. A core point is selected at random from the set. Using direct density reachability and density reachability, all data points belonging to the same cluster as this point are found, a new cluster is established from them, and the related points are removed from the set of core points. Step 10 is repeated until the set of core points is empty, and the classification result is then output.
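Steps 5 to 10 can be sketched as follows. The sketch makes several assumptions that the text does not fix: each MSER region is summarized as `(area, (cx, cy))`, the region range difference is the absolute area difference, the region set spacing is the Euclidean distance between centroids, each set is normalized by its maximum, and the matching value is their unweighted sum. Extracting the MSERs themselves (for example with OpenCV's `cv2.MSER_create`) is outside the sketch. The clustering follows the textbook core-point formulation of DBSCAN in Steps 8 to 10, with a deterministic seed instead of a random one.

```python
import math


def normalize(values):
    """Scale a set of non-negative values into [0, 1] by dividing by its maximum."""
    top = max(values)
    return [v / top if top > 0 else 0.0 for v in values]


def match_region(region, candidates):
    """Steps 5-7: index of the unmatched candidate with the smallest matching value.

    region and each candidate are (area, (cx, cy)) summaries of MSER regions.
    """
    area, (cx, cy) = region
    range_diff = normalize([abs(area - a) for a, _ in candidates])                 # Step 5
    spacing = normalize([math.hypot(cx - x, cy - y) for _, (x, y) in candidates])  # Step 6
    scores = [d + s for d, s in zip(range_diff, spacing)]                          # Step 7
    return min(range(len(candidates)), key=scores.__getitem__)


def dbscan(points, eps, min_pts):
    """Steps 8-10: label each point with a cluster id, or -1 for noise."""
    neighbours = [[j for j, q in enumerate(points) if math.dist(p, q) <= eps] for p in points]
    core = {i for i, nb in enumerate(neighbours) if len(nb) >= min_pts}  # Step 9
    labels = [-1] * len(points)
    unvisited = set(range(len(points)))
    cluster = 0
    while core:  # Step 10; if Step 9 found no core point, every label stays -1
        seed = min(core)  # the paper picks a core point at random
        queue, members = [seed], {seed}
        unvisited.discard(seed)
        while queue:
            q = queue.pop()
            if len(neighbours[q]) >= min_pts:  # expand only through core points
                for j in neighbours[q]:
                    if j in unvisited:
                        unvisited.discard(j)
                        members.add(j)
                        queue.append(j)
        for j in members:
            labels[j] = cluster
        core -= members
        cluster += 1
    return labels
```

If every label comes back as -1, Step 9's advice applies: enlarge `eps` (the neighborhood radius) or lower `min_pts` (the density threshold).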

3.3. Reflection Removal

For removing reflection regions from video recorded by a black box camera, we propose a reflection region removal method based on image completion. First, the target video is divided into frames. Second, based on the area covered by the reflection in each frame, the reflection layer image is obtained from a custom layer difference between adjacent frames, and the reflection region extraction method based on fast MSER feature matching and illumination compensation is used to extract the images containing the reflection region. Finally, illumination compensation is applied to the frames that need brightness adjustment. The reflection area to be removed is covered by pixel substitution to obtain the reflection layer image, which is subtracted from each frame of the video frame sequence in turn to remove the reflection area from the video.

3.3.1. Reflective Region Removal Method Based on Image Complementation

Reflection removal from video data belongs to the category of dynamic reflection removal. We first split the video into frames to form an image sequence and perform optical flow processing on two of its frames, while identifying and extracting the reflection regions in the sequence with the reflection region extraction algorithm of Section 3.2. We then update the reflection regions by pixel replacement. For the area occluded by the reflection region, the vehicle motion and camera parameters (vehicle/camera speed, field of view, camera height, etc.) can be preset according to the test requirements.

First, the objective function is established over the video frame image sequence as follows,

where the two velocity sets are the layer movement velocities of the reflection and background layers, respectively. To limit the loss of reflection removal accuracy caused by image blurring, image priors are imposed on the decomposed layers and the layer motion fields. Natural image gradients are assumed to follow a heavy-tailed distribution, and the detected gradient is assumed to follow a Gaussian distribution. Then, to allow complete separation of the background layer from the reflection layer, we simplify the image model in the presence of a reflection region by assuming that objects in the background layer do not duplicate objects in the reflection layer; that is, the strong gradients of an object exist in only one of the two layers, never in both. This gradient exclusivity assumption is defined as

A sparsity penalty is then applied to the gradients of the motion fields of the two layers so that these gradients are minimized. Combining all of the above factors, the reflection removal objective function constructed in this paper is given by Equation (16).
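Equations (14) to (16) are not reproduced in this version of the text. For intuition, the sketch below evaluates the kinds of terms described, in a form similar to obstruction-removal objectives in the literature: an L1 data residual, L1 gradient sparsity on each layer (a common surrogate for the heavy-tailed prior), an exclusion term that penalizes strong gradients appearing in both layers at the same pixel, and L1 sparsity of the motion-field gradients. To stay short, it assumes the frame is already aligned with both layers (no warping by the motion fields), and the weights `lam` are arbitrary placeholders; it is not the paper's objective.

```python
def grads(img):
    """Forward differences (gx, gy) of a 2-D list; zero on the last column/row."""
    h, w = len(img), len(img[0])
    gx = [[img[y][x + 1] - img[y][x] if x + 1 < w else 0.0 for x in range(w)] for y in range(h)]
    gy = [[img[y + 1][x] - img[y][x] if y + 1 < h else 0.0 for x in range(w)] for y in range(h)]
    return gx, gy


def l1_gradient(img):
    """Sum of |gx| + |gy|: a sparsity prior on a layer or on one motion component."""
    gx, gy = grads(img)
    return sum(abs(a) + abs(b) for ra, rb in zip(gx, gy) for a, b in zip(ra, rb))


def exclusion(background, reflection):
    """Sum over pixels of |grad B|^2 * |grad R|^2: zero when edges never coincide."""
    bx, by = grads(background)
    rx, ry = grads(reflection)
    return sum(
        (bx[y][x] ** 2 + by[y][x] ** 2) * (rx[y][x] ** 2 + ry[y][x] ** 2)
        for y in range(len(background))
        for x in range(len(background[0]))
    )


def energy(frame, background, reflection, flow_u, flow_v, lam=(0.1, 0.1, 1.0, 0.05)):
    """Illustrative objective for one aligned frame (warping by the motion fields omitted)."""
    data = sum(
        abs(i - b - r)
        for ri, rb, rr in zip(frame, background, reflection)
        for i, b, r in zip(ri, rb, rr)
    )
    return (
        data
        + lam[0] * l1_gradient(background)
        + lam[1] * l1_gradient(reflection)
        + lam[2] * exclusion(background, reflection)
        + lam[3] * (l1_gradient(flow_u) + l1_gradient(flow_v))
    )
```

The exclusion term is what encodes the assumption that a strong edge belongs to exactly one layer: it is zero when the edges of B and R fall on different pixels and grows when they coincide.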

The gradients of the two selected adjacent video frames are computed and sparsified. Using pixel replacement, pixels of the later frame are overlaid onto the earlier frame to fill in the background partially occluded by the reflective region, giving an updated video frame. The image subtraction algorithm is then applied between the updated frame and the original frame to obtain the partially completed motion image gradient. We compute the average gradient over this region and subtract it from the extracted motion map of the reflection region, obtaining the motion gradient map with the reflection region removed. Finally, the background and reflection layers are solved for by alternating gradient descent. The solution steps are as follows:
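The pixel replacement step can be illustrated with a simplified sketch. It assumes that the reflection mask is identical in every frame (the reflection is fixed on the windshield) and, purely for illustration, that the background translates horizontally by a known `shift` pixels per frame; real dashcam motion is not a pure translation, and the paper obtains motion from optical flow. Each masked pixel of frame t is filled from the first later frame in which the same background point is visible outside the mask. The function name and parameters are hypothetical.

```python
def complete_background(current, later_frames, mask, shift):
    """Fill the masked (reflection) pixels of `current` by pixel replacement.

    current:      frame t as a 2-D list
    later_frames: frames t+1, t+2, ... of the same size
    mask:         2-D list of bools, True inside the reflection region (same in all frames)
    shift:        assumed horizontal background displacement in pixels per frame
    Returns the completed frame and the set of (y, x) pixels that could not be filled.
    """
    width = len(current[0])
    out = [row[:] for row in current]
    todo = {(y, x) for y, row in enumerate(mask) for x, m in enumerate(row) if m}
    for k, frame in enumerate(later_frames, start=1):
        for y, x in sorted(todo):
            xs = x + k * shift  # where background point (y, x) of frame t lies in frame t+k
            if 0 <= xs < width and not mask[y][xs]:
                out[y][x] = frame[y][xs]
                todo.discard((y, x))
    return out, todo
```

For a one-row frame `[0.1, 0.2, 0.6, 0.7, 0.5, 0.6]` whose reflection covers columns 2 and 3, a single later frame with `shift=1` fills only column 3, because the background behind column 2 is still hidden by the reflection; a second later frame fills it. This is why the method has to determine how many frames are needed (Step 3 of Section 3.3.2).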

Step 1: Simplify the objective function. When the inverse problem is solved for each layer (background and reflection), the relative displacement between the two consecutive frames, i.e., the running speed, can be ignored. The objective function for image reflection removal can therefore be simplified as

Step 2: Design an improved iteratively reweighted least squares (IRLS) method. IRLS is used to optimize the unconstrained objective containing both 1-norm and 2-norm terms, where R, L, and A denote the reflection layer component, the background layer component, and the alpha map of the final iteration. Linearizing the data term of the objective and smoothing the function expression produces a more natural removal result and avoids abrupt changes in image levels.

Step 3: Add a compensation (penalty) function weighted by a penalty coefficient. The penalty function reduces, in real time, poor reflection removal caused by external factors such as vehicle bumps and wind.

Step 4: Correct the R, L, and A parameters from Step 2 and compute the layer gradients. Equation (17) is then used together with the IRLS algorithm for the quadratic decomposition of the motion map,

where the two gradient terms are the gradient values of the reflection layer and the background layer, respectively.
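To make the IRLS idea of Steps 2 and 4 concrete, the sketch below applies it to a generic 1-D problem rather than to the paper's layer decomposition: minimize ½‖x − b‖² + λ‖Dx‖₁, where D takes forward differences. Each iteration replaces |d| by the quadratic d²/(2|d_prev|), which turns the problem into the tridiagonal linear system (I + λDᵀWD)x = b with W = diag(1/max(|d_prev|, ε)), solved here with the Thomas algorithm. The ε floor and the iteration count are assumptions.

```python
def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm. Row i reads lower[i]*x[i-1] + diag[i]*x[i] + upper[i]*x[i+1] = rhs[i];
    lower[0] and upper[-1] are ignored."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0] if n > 1 else 0.0
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / m if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / m
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def irls_l1_smooth(b, lam, iters=50, eps=1e-6):
    """Approximately minimize 0.5*||x - b||^2 + lam*sum|x[i+1] - x[i]| by IRLS."""
    n = len(b)
    x = list(b)
    for _ in range(iters):
        # Reweighting: w_i = 1 / |d_i| from the previous iterate, floored at eps.
        w = [1.0 / max(abs(x[i + 1] - x[i]), eps) for i in range(n - 1)]
        # Assemble I + lam * D^T W D (tridiagonal) and solve for the new iterate.
        diag = [1.0] * n
        lower = [0.0] * n
        upper = [0.0] * n
        for i, wi in enumerate(w):
            diag[i] += lam * wi
            diag[i + 1] += lam * wi
            upper[i] = -lam * wi
            lower[i + 1] = -lam * wi
        x = solve_tridiagonal(lower, diag, upper, b)
    return x
```

On a noisy step such as `[0.1, -0.1, 0.05, 1.0, 0.9, 1.1]` with `lam=0.1`, the small fluctuations are flattened while the large jump survives. That edge-preserving behavior is why an L1 gradient penalty solved by IRLS suits layer separation better than a plain quadratic smoother.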

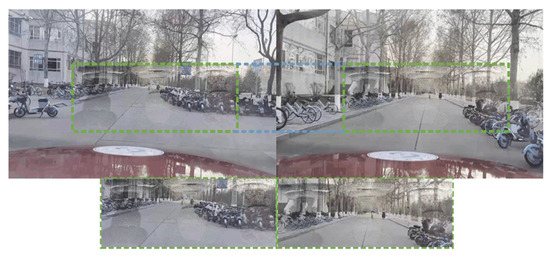

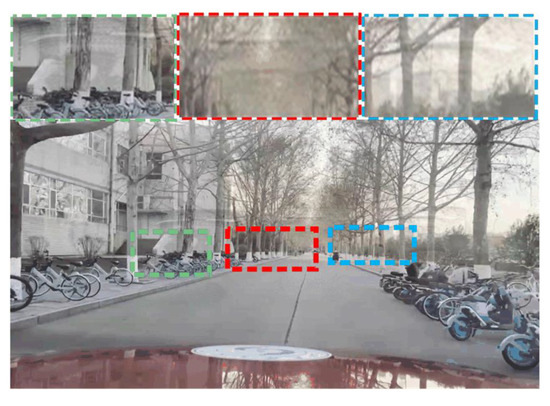

However, the method above cannot obtain a cleanly separated background layer for reflective regions with different gradients; the separation results are shown in Figure 5. To solve this problem, we propose a reflective region removal method based on improved image completion combined with illumination compensation.

Figure 5.

The results of the image completion-based reflective region removal method.

3.3.2. A Reflective Region Removal Method Based on the Combination of Improved Image Complementation and Illumination Compensation

This method adds the illumination compensation mechanism to the image completion-based reflection region removal method. First, video frames are selected according to the position of the reflection region to form an image sequence, and illumination compensation is applied to the reflection area so that the illumination intensity of the reflection region stays consistent across the sequence, which avoids reflection removal errors. Second, with the lighting of the reflective region unified, the background layer behind the reflective region in the image is completed by image completion (pixel overlay), so that this background region contains no reflection. Differencing the original image and the completed image gives the reflection layer image at time t. Finally, the reflective region is completely removed from the video by differencing this reflection layer image with the image sequence. The specific reflection region removal steps are as follows:
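The two differencing operations in this paragraph can be sketched as below, assuming grayscale frames in [0, 1], non-negative reflections, and, as the method requires, a reflection that stays fixed with unified illumination across the selected frames, so that one reflection layer estimated at time t can be subtracted from every frame. Clipping at zero is an added assumption, and the function names are hypothetical.

```python
def estimate_reflection(frame, completed):
    """Reflection layer at time t: observed frame minus its completed background, clipped at 0."""
    return [[max(0.0, f - c) for f, c in zip(rf, rc)] for rf, rc in zip(frame, completed)]


def remove_reflection(sequence, reflection):
    """Subtract the same reflection layer from every frame of the sequence, clipped at 0."""
    return [
        [[max(0.0, p - r) for p, r in zip(rp, rr)] for rp, rr in zip(frame, reflection)]
        for frame in sequence
    ]
```

Reusing one reflection layer across frames is only valid under the stated assumptions; if the lighting changes, the layer must be re-estimated, which is the role of the illumination compensation.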

Step 1: Video framing. The driving video recorded by the black box camera is split into frames to obtain the video frame image sequence.

Step 2: Reflection region extraction. First, the detection region is pre-processed for ROI extraction, which improves the accuracy with which the reflection region is extracted. When the edge gradient difference of the reflective region, as reflected in image brightness, falls below the threshold, the light compensation mechanism proposed in this paper adjusts the brightness to enlarge that edge gradient difference, so that the reflective region can be extracted more reliably. Finally, based on the principle that the mapping of a reflective region is invariant under the same illumination conditions, the regions in two frames are matched with the MSER algorithm, which accommodates the uncertain shape of the reflective region. The DBSCAN clustering algorithm is then applied to the acquired data points to determine the reflective region.
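The clustering part of Step 2 can be illustrated with a plain DBSCAN. In the paper the candidate points come from MSER region matching; here they are simply given as `(x, y)` pairs, and `eps`, `min_pts`, and taking the bounding box of the largest cluster as the reflective region are assumptions. The quadratic neighbour search keeps the sketch short but would be too slow for full-resolution frames.

```python
import math


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    labels = [None] * len(points)

    def neighbours(i):
        # Includes the point itself, as in the standard DBSCAN definition.
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise becomes a border point
                continue
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbours = neighbours(j)
            if len(j_neighbours) >= min_pts:  # core point: keep expanding
                queue.extend(j_neighbours)
    return labels


def largest_cluster_bbox(points, labels):
    """(x_min, y_min, x_max, y_max) of the most populated non-noise cluster, or None."""
    sizes = {}
    for lab in labels:
        if lab != -1:
            sizes[lab] = sizes.get(lab, 0) + 1
    if not sizes:
        return None
    best = max(sizes, key=sizes.get)
    xs = [p[0] for p, lab in zip(points, labels) if lab == best]
    ys = [p[1] for p, lab in zip(points, labels) if lab == best]
    return min(xs), min(ys), max(xs), max(ys)
```

DBSCAN suits this step because it does not need the number of regions in advance and tolerates irregular cluster shapes, which matches the shape uncertainty mentioned above.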

Step 3: Calculate the number of images required for reflection region removal and construct an image sequence. Step 2 yields the range of horizontal coordinates of the pixels at the edge of the reflection region. The number of images required for removal is calculated according to Equation (19), and this number is an integer. The time the reflection region needs to cover its distance from the image edge can be deduced from the camera's running speed; this time is used as the step length for selecting images from the image sequence to form a new image sequence.
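Equation (19) is not reproduced in the text, so the sketch below only illustrates the idea of Step 3 under a deliberately simplified assumption: the background drifts horizontally across the image by a constant `shift_px_per_frame` pixels per frame, a value one would derive from the camera's running speed. The step length is then the number of frames the background needs to move by the width of the reflection region, and frames are sampled at that stride. `frame_step` and `select_frames` are hypothetical helpers, not the paper's formula.

```python
import math


def frame_step(x_min, x_max, shift_px_per_frame):
    """Frames needed for the background to move past a region spanning columns x_min..x_max."""
    if shift_px_per_frame <= 0:
        raise ValueError("shift_px_per_frame must be positive")
    width = x_max - x_min + 1
    return max(1, math.ceil(width / shift_px_per_frame))


def select_frames(total_frames, start, step, count):
    """Indices start, start + step, ... (at most `count`) that exist in the video."""
    return list(range(start, total_frames, step))[:count]
```

For a region spanning columns 100 to 159 and an assumed drift of 25 px per frame, the step is 3 frames, so frames 0, 3, 6, 9, ... would form the new sequence.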

Step 4: Establish the objective function. The objective function is constructed according to the form in which the gradients occur. We assume that the detected gradients conform to a Gaussian distribution and satisfy a heavy-tailed distribution, and we define the gradient influence factors and the light compensation term as shown in Equation (19). In this equation, the background and reflection layers at moment t appear as separate terms, one coefficient gives the proportion of the image area occupied by the moving layer, and two sets contain the layer movement velocities of the reflection and background layers, respectively. A sparsity operation is applied to the gradients of the two layers' motion fields, that is, to the gradients of the reflection and background layer velocities at moment t, which minimizes these gradients. A gradient constraint is added to keep the layers consistent.
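Equation (19) itself is not recoverable from the text, but the reason for a heavy-tailed gradient prior can be shown numerically. The sketch below evaluates a generic two-layer energy on 1D signals: a data term plus a sparsity penalty `|g|^p` on each layer's gradients. The 1D setting, the exponent `p`, and the weights `lam_b` and `lam_r` are assumptions, not the paper's objective. With `p < 1` the penalty prefers one sharp edge over two half-height edges; with `p = 2` (the Gaussian case) it prefers the opposite.

```python
def gradient(signal):
    """Forward differences of a 1D signal."""
    return [b - a for a, b in zip(signal, signal[1:])]


def sparsity_penalty(grads, p):
    """Sum of |g|^p; p < 1 is heavy-tailed (sparse), p = 2 is Gaussian."""
    return sum(abs(g) ** p for g in grads)


def two_layer_energy(observed, background, reflection, lam_b=0.5, lam_r=0.5, p=0.8):
    """Generic energy: squared data misfit plus sparse-gradient penalties on both layers."""
    data = sum((i - b - r) ** 2 for i, b, r in zip(observed, background, reflection))
    return (data
            + lam_b * sparsity_penalty(gradient(background), p)
            + lam_r * sparsity_penalty(gradient(reflection), p))
```

For gradients `[1, 0]` versus `[0.5, 0.5]`, the `p = 0.8` penalty is 1 versus about 1.15, while the `p = 2` penalty is 1 versus 0.5; this preference for a few large gradients is the usual motivation for heavy-tailed priors in layer separation.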

Step 5: Pixel replacement and -function decomposition. First, for the image sequence obtained in Step 3, pixels in the overlapping parts of adjacent frames are replaced; because the viewpoint and distance differ between frames, the image points must first be multiplied by the perspective transformation matrix. Then, holding the layer velocity components and the layer components fixed in turn, the IRLS algorithm is applied with an added penalty function to perform the motion map decomposition, which yields the background layer image sequence.
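The pixel replacement in Step 5 maps image points between adjacent frames through a perspective transformation matrix. The sketch below assumes the 3x3 matrix `H` is already known (in practice it would be estimated from matched regions), uses nearest-neighbour sampling, and fills each reflection pixel `(x, y)` of the target frame from the source pixel at `H(x, y)` unless that pixel lies outside the source frame or inside the source frame's own reflection region. The IRLS decomposition that follows is not shown, and the helper names are hypothetical.

```python
def apply_homography(H, x, y):
    """Map pixel (x, y) through a 3x3 perspective matrix H using homogeneous coordinates."""
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return xh / w, yh / w


def replace_region_pixels(target, source, region, H, source_region=()):
    """Fill region pixels (x = column, y = row) of target with mapped source pixels."""
    out = [list(row) for row in target]
    height, width = len(source), len(source[0])
    blocked = set(source_region)  # source pixels that are themselves reflective
    for x, y in region:
        sx, sy = apply_homography(H, x, y)
        ix, iy = round(sx), round(sy)  # nearest-neighbour sampling
        if 0 <= iy < height and 0 <= ix < width and (ix, iy) not in blocked:
            out[y][x] = source[iy][ix]
    return out
```

Pixels that cannot be filled from this frame keep their original values, so in a full pipeline they would be filled from other frames in the sequence.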

Step 6: Reflection layer image acquisition. The reflection layer image is obtained by the image difference formula, which is calculated as

Step 7: Reflection removal. The image sequence is multiplied and differenced using the reflection layer image obtained in Step 6 to obtain the image sequence with the reflection region removed; finally, the image sequence is synthesized back into a video, achieving video reflection removal.
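The illumination compensation described in the overview of this method and Steps 6 and 7 all reduce to per-pixel arithmetic once the background layer is known. The sketch below assumes the additive model observed = background + reflection. It realizes "consistent illumination" as a per-frame gain that matches the mean intensity of the reflection region to a reference frame, clips negative differences to zero, and reads the "multiply" in Step 7 as a scalar weight `alpha` on the reflection layer. These choices and all function names are assumptions, not the paper's formulas. Frames are nested lists of grey levels, and the region is a list of `(row, column)` pairs.

```python
def region_mean(frame, region):
    """Mean grey level over a list of (row, col) pixels."""
    return sum(frame[r][c] for r, c in region) / len(region)


def compensate_region_brightness(frames, region, ref_index=0, max_value=255.0):
    """Scale each frame's region so its mean matches the reference frame's region mean."""
    target = region_mean(frames[ref_index], region)
    compensated = []
    for frame in frames:
        current = region_mean(frame, region)
        gain = target / current if current > 0 else 1.0
        out = [list(row) for row in frame]  # pixels outside the region are left untouched
        for r, c in region:
            out[r][c] = min(max_value, frame[r][c] * gain)
        compensated.append(out)
    return compensated


def reflection_layer(frame, background):
    """Step 6 sketch: per-pixel difference frame - background, negatives clipped to zero."""
    return [[max(0.0, i - b) for i, b in zip(f_row, b_row)]
            for f_row, b_row in zip(frame, background)]


def remove_reflection(frame, reflection, alpha=1.0, max_value=255.0):
    """Step 7 sketch: subtract alpha * reflection and clip to [0, max_value]."""
    return [[min(max_value, max(0.0, i - alpha * r)) for i, r in zip(f_row, r_row)]
            for f_row, r_row in zip(frame, reflection)]
```

With `alpha = 1` the output equals the estimated background wherever the frame is at least as bright as it; smaller values of `alpha` leave part of the reflection in place.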

4. Experiments

In this section, front-windshield reflections seen from inside a vehicle driving in a campus environment were recorded with the vehicle's black box camera during real-vehicle tests. The proposed method is compared with other methods to summarize its real-time advantages, evaluate its accuracy, and analyze the limitations of its usage scenarios.

4.1. Experimental Setup

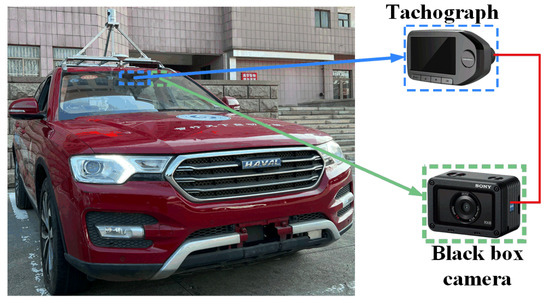

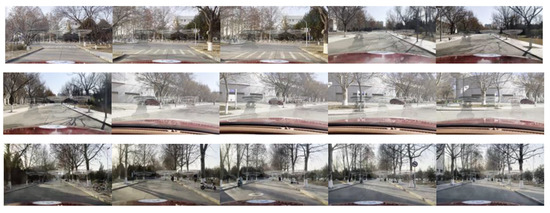

In this paper, a HAVAL vehicle is used as the test vehicle, and the driving environment and the reflections on the front windshield are recorded with a SONY RX0 black box camera whose pose is fixed; the experimental vehicle is shown in Figure 6. The image sequences were processed on a laptop with an Intel Core i7-6500U and 1 TB RAM. The image data are processed with five methods: a machine learning method, a Static Video Reflection Removal Method, an image completion-based reflection region removal method, the proposed method combining image completion and lighting compensation, and a relative motion-based reflection region removal method. The dataset contains 2318 reflection images collected in the experiments, part of which is shown in Figure 7; each image is 2216 × 1244 pixels. The accuracy and detection speed of the five methods are compared and analyzed. All image processing was performed in MATLAB R2020a.

Figure 6.

Experimental equipment.

Figure 7.

Partial dataset.

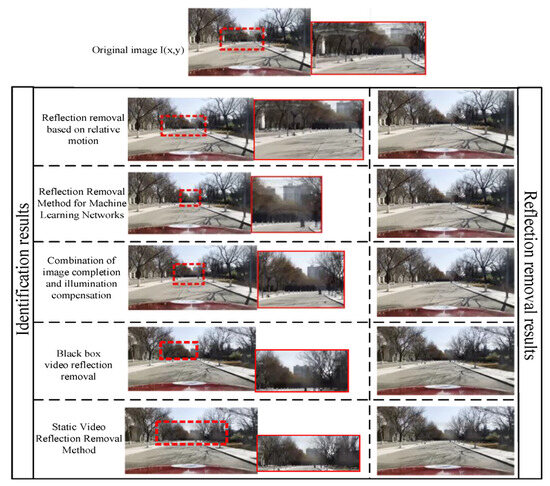

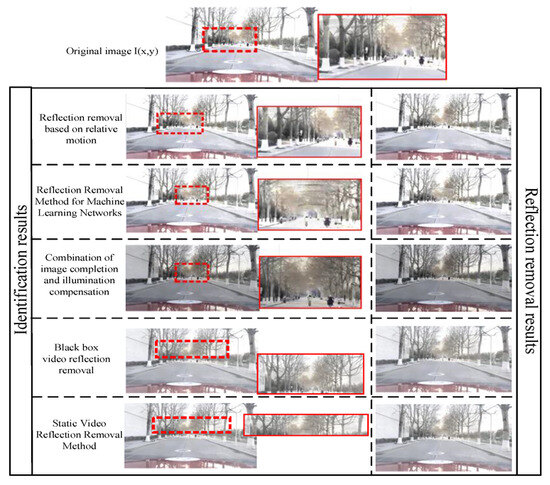

4.2. Comparison with Advanced Technology

For a fair comparison, we compared only three methods: the relative motion-based reflection region removal method [22], the machine learning network-based reflection region removal method [4], and the proposed method combining image completion and lighting compensation. Note that our method is not designed to achieve the optimal response time; rather, it emphasizes a simple structure and adaptability to changing environments.

Discriminant analysis. We validated the adaptability of each method in two environments with different light intensities. A real-vehicle video frame sequence was collected for the experiment, giving a dataset of 2318 reflection images. As shown in Table 1, our method reduces the recognition and decision-making time by 1.34% on average compared with the other two methods, demonstrating its practical value. However, it still lags behind machine learning networks in reflection region detection time.

Table 1.

A comparison of reflection area recognition and detection time.

Because evaluating the accuracy of reflection area removal resembles the mechanism of obstacle recognition, this paper assesses reflection removal methods by two criteria: the interference of the reflection area with normal obstacle recognition, and the traditional normalized cross-correlation (NCC) criterion for image matching. The accuracy evaluation indices for reflection area recognition are RT and DT.

Table 2 lists, for the same video, the number of frames in which each method detects a reflection area, divided into frames in which the reflection area is detected and frames in which it is not. Given a threshold on the number of pixels in the reflection area, four counts are defined: samples in which the reflection area is recognized and its pixel count exceeds the threshold; samples that have a reflection area whose pixel count is below the threshold; samples whose reflection area exceeds the threshold but for which the detection result reports no reflection area; and samples whose reflection area is below the threshold and for which the detection result reports no reflection area.
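The four counts above form a 2 × 2 split by whether the detector reports a reflection area and whether the reflection area's pixel count is above the threshold. A minimal tally is sketched below, assuming per-frame records of `(reflection_pixels, detected)`, reading the second count as the detected-but-below-threshold case, and treating a pixel count equal to the threshold as "above" (the text does not say). The dictionary keys are descriptive stand-ins because the original symbols are not recoverable, and the RT and DT formulas are not reproduced.

```python
def tally_detections(frames, threshold):
    """Count frames by detection outcome and by reflection-area size relative to threshold."""
    counts = {"detected_above": 0, "detected_below": 0,
              "undetected_above": 0, "undetected_below": 0}
    for reflection_pixels, detected in frames:
        size = "above" if reflection_pixels >= threshold else "below"
        status = "detected" if detected else "undetected"
        counts[f"{status}_{size}"] += 1
    return counts
```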

Table 2.

Comparison of the results of five reflection region removal methods.

Adaptability comparison. As Table 2 shows, we evaluated the algorithms by computing the normalized cross-correlation (NCC) between the recovered layers and the ground-truth decomposition. The NCCs of the separated reflection layers were 0.89236, 0.86982, 0.91563, 0.90642, and 0.88959, and those of the background layers were 0.86624, 0.87431, 0.90875, 0.90433, and 0.89746, respectively. The RT, DT, and NCC of the reflection region removal method based on image completion and lighting compensation are higher than those of the other four methods. We observed that differences in lighting intensity across driving environments can cause false and missed detections. To address this issue, the proposed method uses lighting compensation to adjust image brightness automatically and to enlarge the gradient difference between layers, which lowers the missed recognition rate and makes reflection region removal more complete. Figure 8, Figure 9, Figure 10 and Figure 11 show the reflection removal effect under different lighting conditions.
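For reference, the zero-mean NCC used for these comparisons can be computed as below. This is the standard definition applied to two equally sized grey-level images given as nested lists; the function names are illustrative.

```python
import math


def flatten(image):
    """Row-major list of pixel values."""
    return [v for row in image for v in row]


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equally sized images."""
    x, y = flatten(a), flatten(b)
    if len(x) != len(y) or not x:
        raise ValueError("images must be non-empty and the same size")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    num = sum((u - mx) * (v - my) for u, v in zip(x, y))
    den = math.sqrt(sum((u - mx) ** 2 for u in x) * sum((v - my) ** 2 for v in y))
    return num / den if den > 0 else 0.0
```

NCC ranges from -1 to 1 and is unchanged by a positive gain or a constant offset in either image, which makes it a reasonable similarity measure when lighting differs between runs.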

Figure 8.

A comparison of five reflection removal methods under a normal driving environment.

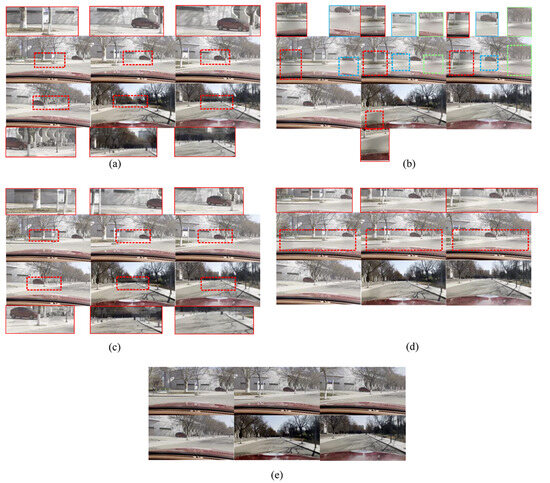

Figure 9.

A comparison of five reflection removal methods under normal lighting conditions.

Figure 10.

Results of five reflection removal methods under lighting conditions: (a) the relative motion-based reflection region removal method; (b) black box video reflection removal; (c) the image completion-based reflection region removal method; (d) the Static Video method; and (e) the reflection region removal method combining image completion and light compensation.

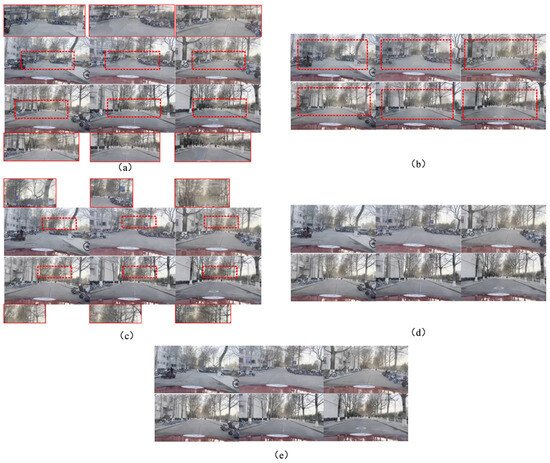

Figure 11.

Results of five reflection removal methods in a normal driving environment: (a) the relative motion-based reflection region removal method; (b) black box video reflection removal; (c) the image completion-based reflection region removal method; (d) the Static Video method; and (e) the reflection region removal method combining image completion and light compensation.

Figure 10 and Table 2 show that under lighting conditions, machine learning methods may remove video reflections incompletely because of training factors and sudden changes in the environment. The relative motion-based method cannot completely eliminate the reflection area under lighting conditions. The reflection removal methods based on black box videos and static videos make significant errors when selecting ROI regions because of lighting, which blurs the reflection area and increases the false detection rate of obstacles. The proposed method removes video reflections effectively: it achieves reflection removal by reducing the weight of lighting brightness and angle. The results indicate that under lighting conditions, local reflection areas can be completely eliminated, which reduces false obstacle detections caused by radiation. Table 3 compares popular methods from recent years; the proposed method shows the highest adaptability, that is, the best reflection removal effect, both with and without light.

Table 3.

A comparison of reflection removal methods with and without illumination (NCC).

Figure 11 shows that every method removes reflections better without light than with it, although local reflections still lead to incomplete removal. The black box video and still video methods suppress the shadow problem that arises during reflection removal under lighting conditions. The proposed method effectively removes local reflections under weak illumination; because weaker lighting makes the reflection area less pronounced, its impact on vehicle obstacle detection is small. The experiments show that the proposed method effectively removes reflections from vehicle-mounted videos both with and without light.

5. Conclusions

This paper proposes a reflective region removal method that combines image completion and illumination compensation. During reflective region extraction, illumination compensation keeps the brightness of the reflective region stable in real time, and fast MSER-based image region matching replaces ellipse region fitting and SIFT or ASIFT feature point extraction. Combining the MSER-based fast image region matching method, the illumination compensation mechanism, and the image completion-based reflective region removal method reduces the spatial and temporal complexity of handling the reflective region of the vehicle's front windshield and improves the speed and accuracy of reflective region extraction. The removal process (1) re-extracts the video frame image sequence and forms custom image sequences; (2) applies illumination compensation to the reflective region mapped in each image so that the illumination intensity of the reflective region is consistent across the custom sequence; (3) restores the background layer covered by the reflective region by combining the non-reflective parts of each image with the same reflective region; and (4) removes the reflective region from the video image sequence by image difference. This process demonstrates the feasibility of combining image completion and illumination compensation.
To address the incomplete extraction of reflective regions caused by real-time luminance changes, the light compensation step combines luminance adjustment with gamma correction: the gamma function raises the contrast between the dark and bright parts of the image, which mitigates the incomplete identification of reflective regions that occurs with luminance adjustment alone.
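Luminance adjustment followed by gamma correction is conveniently implemented as a 256-entry lookup table. The sketch below assumes 8-bit grey levels, a multiplicative `gain` for the luminance adjustment, and the usual power-law curve `out = 255 * (in / 255) ** gamma`; the paper's actual gamma function and parameter values are not given, so `gamma` and `gain` here are placeholders.

```python
def gamma_lut(gamma, gain=1.0, max_value=255):
    """Lookup table: scale by `gain` (luminance adjustment), clip, then apply the gamma curve."""
    lut = []
    for v in range(max_value + 1):
        adjusted = min(max_value, v * gain) / max_value
        lut.append(max_value * adjusted ** gamma)
    return lut


def apply_lut(image, lut):
    """Map every integer grey level of a nested-list image through the table."""
    return [[lut[int(v)] for v in row] for row in image]
```

With `gamma < 1` dark pixels are lifted more than bright ones (grey level 64 maps to about 127.7 for `gamma = 0.5`), stretching contrast in the shadows; `gamma > 1` does the opposite.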

The reflective region identification and removal process of the proposed method, which combines image completion and illumination compensation, is illustrated through real-vehicle tests. The image completion-based reflective region removal method, the relative motion-based method, and the proposed method were compared in an outdoor real-vehicle test, with DT, RT, and NCC as evaluation indices. The results show that the proposed method is highly accurate, and the reasons for this accuracy were analyzed. A comparison of the removal speed of the image completion-based method, the relative motion-based method, and the proposed method showed that the proposed method processes faster, and the reasons for this were also analyzed. However, the method is slow in decision making, which may delay the handling of the reflective region. Future research will focus on designing a reflection region removal decision system that reduces this delay while still removing the reflective region completely.

Author Contributions

Conceptualization, J.N. and Y.X.; methodology, S.D.; software, Y.X., S.D.; validation, S.D., X.K. and S.S.; investigation, S.D.; resources, Y.X. and S.D.; data curation, Y.X., S.D.; writing—original draft preparation, S.D.; writing—review and editing, Y.X. and S.D.; supervision, Y.X., S.D. and J.N.; project administration, Y.X.; funding acquisition, Y.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grants 51905320 and 52102465, the Natural Science Foundation of Shandong Province under Grant ZR2022MF230, the Experiment Technology Upgrading Project under Grant 2022003, and the Shandong Provincial Programme of Introducing and Cultivating Talents of Discipline to Universities: Research and Innovation Team of Intelligent Connected Vehicle Technology.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as potential conflicts of interest.

References

- Chen, J. Single Image Reflection Removal with Camera Calibration. Master’s Thesis, Nanyang Technological University, Singapore, 2021; pp. 1–62.

- Levin, A.; Weiss, Y. User assisted separation of reflections from a single image using a sparsity prior. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1647–1654.

- Li, Y.; Brown, M.S. Single image layer separation using relative smoothness. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2752–2759.

- Zhang, Y.N.; Shen, L.; Li, Q. Content and Gradient Model-driven Deep Network for Single Image Reflection Removal. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 6802–6812.

- Zheng, Q.; Qiao, X.; Cao, Y.; Guo, S.; Zhang, L.; Lau, R.W. Distilling Reflection Dynamics for Single-Image Reflection Removal. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1886–1894.

- Han, B.J.; Sim, J.Y. Single image reflection removal using non-linearly synthesized glass images and semantic context. IEEE Access 2019, 7, 170796–170806.

- Li, T.; Lun, D.P.K. Single-image reflection removal via a two-stage background recovery process. IEEE Signal Process. Lett. 2019, 26, 1237–1241.

- Chi, Z.; Wu, X.; Shu, X.; Gu, J. Single image reflection removal using deep encoder-decoder network. arXiv 2018, arXiv:1802.00094.

- Li, Y.; Liu, M.; Yi, Y.; Li, Q.; Ren, D.; Zuo, W. Two-Stage Single Image Reflection Removal with Reflection-Aware Guidance. arXiv 2020, arXiv:2012.00945.

- Chang, Y.C.; Lu, C.N.; Cheng, C.C.; Chiu, W.C. Single image reflection removal with edge guidance, reflection classifier, and recurrent decomposition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual Conference, 5–9 January 2021; pp. 2033–2042.

- Fan, Q.; Yang, J.; Hua, G.; Chen, B.; Wipf, D. A Generic Deep Architecture for Single Image Reflection Removal and Image Smoothing. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Yang, J.; Gong, D.; Liu, L.; Shi, Q. Seeing deeply and bidirectionally: A deep learning approach for single image reflection removal. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 654–669.

- Li, C.; Yang, Y.; He, K.; Lin, S.; Hopcroft, J.E. Single image reflection removal through cascaded refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3565–3574.

- Wen, Q.; Tan, Y.; Qin, J.; Liu, W.; Han, G.; He, S. Single image reflection removal beyond linearity. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3771–3779.

- Ren, W. Low-Light Image Enhancement via a Deep Hybrid Network. IEEE Trans. Image Process. 2019, 28, 4364–4375.

- Aizu, T.; Matsuoka, R. Reflection Removal Using Multiple Polarized Images with Different Exposure Times. In Proceedings of the 30th European Signal Processing Conference (EUSIPCO), Belgrade, Serbia, 29 August–2 September 2022; pp. 498–502.

- Szeliski, R.; Avidan, S.; Anandan, P. Layer extraction from multiple images containing reflections and transparency. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2000), Hilton Head, SC, USA, 15 June 2000; Volume 1, pp. 246–253.

- Li, Y.; Brown, M.S. Exploiting reflection change for automatic reflection removal. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 2432–2439.

- Sun, C.; Liu, S.; Yang, T.; Zeng, B.; Wang, Z.; Liu, G. Automatic reflection removal using gradient intensity and motion cues. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 466–470.

- Bonekamp, J. Multi-Image Optimization Based Specular Reflection Removal from Non-Dielectric Surfaces. Master’s Thesis, Delft University of Technology, Delft, The Netherlands, 2021.

- Wan, R.; Shi, B.; Duan, L.Y.; Tan, A.H. CRRN: Multi-scale guided concurrent reflection removal network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 19–21 June 2018; pp. 4777–4785.

- Xue, T.; Rubinstein, M.; Liu, C.; Freeman, W.T. A computational approach for obstruction-free photography. ACM Trans. Graph. 2015, 34, 1–11.

- Cheong, J.Y.; Simon, C.; Kim, C.-S.; Park, I.K. Reflection removal under fast forward camera motion. IEEE Trans. 2017, 26, 6061–6073.

- Iwata, S.; Ogata, K.; Sakaino, S.; Tsuji, T. Specular reflection removal with high-speed camera for video imaging. In Proceedings of the 41st Annual Conference of the IEEE Industrial Electronics Society (IECON 2015), Yokohama, Japan, 9–12 November 2015; pp. 001735–001740.

- Alayrac, J.B.; Carreira, J.; Zisserman, A. The visual centrifuge: Model-free layered video representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2457–2466.

- Li, T.; Lun, D.P.K. A novel reflection removal algorithm using the light field camera. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018; pp. 1–5.

- Lei, C.; Chen, Q. Robust reflection removal with reflection-free flash-only cues. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14811–14820.

- Li, T.; Lun, D.P.K.; Chan, Y.H. Robust reflection removal based on light field imaging. IEEE Trans. Image Process. 2018, 28, 1798–1812.

- Punnappurath, A.; Brown, M.S. Reflection removal using a dual-pixel sensor. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1556–1565.

- Hong, Y.; Lyu, Y.; Li, S.; Shi, B. Near-infrared image guided reflection removal. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020; pp. 1–6.

- Sheridan, K.; Puranik, T.G.; Mangortey, E.; Pinon, O.J.; Kirby, M.; Mavris, D. An Application of DBSCAN Clustering for Flight Anomaly Detection during the Approach Phase. In Proceedings of the AIAA SciTech Forum, Orlando, FL, USA, 6–10 January 2020; p. 1851.

- Jiang, G.; Xu, Y.; Gong, X.; Gao, S.; Sang, X.; Zhu, R.; Wang, L.; Wang, Y. An Obstacle Detection and Distance Measurement Method for Sloped Roads Based on VIDAR. J. Robot. 2022, 2022, 5264347.

- Nandoriya, A.; Elgharib, M.; Kim, C.; Hefeeda, M.; Matusik, W. Video reflection removal through spatio-temporal optimization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2411–2419.

- Simon, C.; Park, I.K. Reflection removal for in-vehicle black box videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4231–4239.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).