Abstract

For the visual measurement of moving arm holes under complex working conditions, histogram equalization can be used to improve image contrast. To mitigate the brightness shift, over-enhancement, and gray-level merging that occur with traditional histogram equalization, a dual histogram equalization algorithm based on adaptive image correction (AICHE) is proposed. To prevent luminance shifts during equalization, AICHE preserves the mean luminance of the input image by splitting the histogram with an improved Otsu algorithm. A local grayscale correction step then adjusts the gray values to prevent the over-enhancement and gray-level merging that arise with the traditional algorithm. Experiments show that AICHE markedly improves histogram segmentation and enhances contrast and detail while preserving the mean brightness of the input image, thereby significantly improving image quality.

1. Introduction

In industrial settings, machine vision systems inevitably face environmental disturbances (e.g., light, fog, smoke, dust), limitations of the imaging equipment, poor lighting, and other factors that produce low-quality, low-contrast images. Such images hinder subsequent processing, so image enhancement is necessary. The main enhancement methods are histogram equalization, homomorphic filtering [1], Retinex-based enhancement, and deep learning. For homomorphic filtering, Gong et al. [2] proposed a method based on combination and addition in HSV space; however, that work addressed only underground image data and had limited efficiency. Retinex-based enhancement performs poorly on high-brightness images (such as hazy photos) and produces visible halos at light/dark boundaries, which is unacceptable for industrial measurement. Deep learning can improve image quality, but data availability and the generalization of deep learning systems remain open issues [3].

Histogram equalization is widely used because it is fast, simple, and effective. It computes the histogram of the input image's pixel values and redistributes the gray levels so that the output histogram is approximately uniform. However, traditional histogram equalization [4] can shift the mean brightness of the image through over-stretching, which degrades the enhancement, and it can merge gray levels, which causes detail loss and over-enhancement. These problems impair subsequent image processing and hinder the extraction of target information. Therefore, many improved histogram equalization algorithms have been proposed [5,6,7,8].

To address the mean brightness shift, Kim proposed brightness preserving bi-histogram equalization (BBHE) [9]. BBHE divides the input histogram into two sub-histograms at the mean gray level of the input image, equalizes each separately, and then merges them. Many researchers subsequently refined this approach. Wang et al. proposed dualistic sub-image histogram equalization (DSIHE) [10], which splits the histogram at the median gray level instead of the mean and equalizes the two parts separately. Recursive sub-image histogram equalization (RSIHE) [11] and recursive mean-separate histogram equalization (RMSHE) [12] extend DSIHE and BBHE, respectively, by applying the split recursively. Chen and Ramli [13] proposed minimum mean brightness error bi-histogram equalization (MMBEBHE). It tests every candidate intensity and selects the separation point that minimizes the absolute difference between the mean brightness of the input and output images. He et al. [14] combined improved L-C saliency detection with dual-region histogram equalization to improve the visual quality of infrared images and highlight detail; the foreground and background regions are obtained by adaptively segmenting the saliency map with the K-means algorithm. Although K-means works well when the samples are dense and the classes are well separated, the value of K is difficult to choose, and choosing it blindly leads to inaccurate segmentation.
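The split-then-equalize idea behind BBHE and DSIHE can be sketched in Python with NumPy. This is a minimal illustration under our own assumptions, not the reference code of [9] or [10]. It assumes an 8-bit grayscale input and maps each sub-histogram onto its own gray range, [0, t] and [t+1, 255]. The function names are hypothetical.

```python
import numpy as np

def equalize_range(img, mask, lo, hi):
    """Equalize the pixels selected by `mask` into the gray range [lo, hi]."""
    out = np.zeros(img.shape, dtype=np.float64)
    vals = img[mask]
    if vals.size == 0:
        return out
    hist = np.bincount(vals, minlength=256)[lo:hi + 1]
    cdf = np.cumsum(hist) / vals.size      # CDF restricted to this sub-histogram
    lut = lo + (hi - lo) * cdf             # map the CDF onto [lo, hi]
    out[mask] = lut[vals - lo]
    return out

def bi_histogram_equalize(img, t):
    """Split at gray level t, equalize [0, t] and [t+1, 255] separately, merge."""
    img = np.asarray(img, dtype=np.uint8)
    low = img <= t
    out = equalize_range(img, low, 0, t) + equalize_range(img, ~low, t + 1, 255)
    return np.round(out).astype(np.uint8)

def bbhe(img):
    # BBHE: the split point is the mean gray level of the input image
    return bi_histogram_equalize(img, int(np.mean(img)))

def dsihe(img):
    # DSIHE: the split point is the median gray level of the input image
    return bi_histogram_equalize(img, int(np.median(img)))
```

Each part is mapped only within its own range, so no pixel crosses the split point. This is what limits the shift in mean brightness compared with global equalization.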

All these methods compute a suitable threshold, split the original histogram, and equalize each sub-histogram separately. They preserve the mean brightness of the input image. However, if a sub-histogram is too narrow the enhancement is weak, and if it is too wide the result contains noise, artifacts, and other defects. To reduce detail loss, adaptive (local) histogram equalization (AHE) was proposed for contrast enhancement. AHE is computationally complex and slow and generates considerable noise and blocking artifacts, so it was refined into contrast-limited adaptive histogram equalization (CLAHE) [15].

In recent years, several methods have targeted mean brightness change and the detail loss caused by gray-level merging. Stark [16] proposed adaptive histogram equalization that partitions the image, equalizes each region separately, and finally merges the local results; this preserves some detail but also introduces noise. To reduce over-enhancement, Maitra et al. [17] proposed a pre-processing algorithm (ARAN) for pectoral muscle detection and suppression that uses contrast-limited adaptive histogram equalization to enhance the contrast of digital mammograms. Bi-histogram equalization with a plateau limit (BHEPL) [18] uses the average bin count of each sub-histogram as its plateau limit. Aquino-Morínigo et al. [19] proposed bi-histogram equalization with two plateau limits (BHE2PL). Singh and Kapoor [20] proposed exposure-based sub-image histogram equalization (ESIHE), which clips the histogram and divides it into two parts using a pre-computed exposure threshold. Paul [21] proposed adaptive tri-plateau limit tri-histogram equalization, which uses a separation threshold parameter to initially split the input histogram into three sub-histograms. Huang et al. [22] proposed contrast-limited dynamic quadri-histogram equalization (CLDQHE) to overcome the over-enhancement and over-smoothing of traditional histogram equalization. Although these algorithms improve contrast well, they do not fully maintain brightness or preserve fine structures.

Hence, this study proposes a dual histogram equalization algorithm based on adaptive image correction (AICHE) for image enhancement in the machine vision measurement of moving arm holes under complex working conditions. AICHE divides the global histogram into two sub-histograms to prevent mean luminance shift and clips the sub-histograms at two plateau limits to avoid over-enhancement. It then applies a local grayscale correction algorithm that counteracts over-enhancement and gray-level merging. As a result, contrast is improved and image detail is protected while the mean brightness of the input image is maintained.

2. Histogram Equalization

The main idea of histogram equalization is to spread out the probability density function (PDF) of the gray levels over the whole image and remap the gray level of each pixel in the original image. First, the histogram of the original image F is normalized and its cumulative histogram is constructed; the mapping function is built from this cumulative distribution function (CDF). Then, the cumulative histogram is quantized to the gray levels of the output image. The three steps are as follows:

Count the proportion of pixels at each gray value to obtain the PDF of the histogram:

$$p(r_k) = \frac{n_k}{N}, \quad k = 0, 1, \ldots, L-1 \tag{1}$$

where $r_k$ is the $k$th gray level of the input image, $L$ is the number of gray levels, $N$ is the total number of pixels in the input image, and $n_k$ is the number of pixels with gray level $r_k$.

Accumulate the PDF over the gray levels to obtain the CDF of the histogram:

$$c(r_k) = \sum_{j=0}^{k} p(r_j) \tag{2}$$

Quantize the CDF and map it to the output image (the result is rounded to the nearest integer gray level):

$$f(r_k) = \mathrm{start} + (\mathrm{end} - \mathrm{start})\, c(r_k) \tag{3}$$

where start and end denote the minimum and maximum gray levels of the mapping interval, respectively.
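The three steps (Equations (1)–(3)) can be written as a minimal NumPy sketch. It assumes an 8-bit grayscale image and rounds the mapped values to the nearest integer. `histogram_equalize` and its parameters are illustrative names, not part of the original method.

```python
import numpy as np

def histogram_equalize(img, start=0, end=255, levels=256):
    """Classical HE: PDF -> CDF -> linear map of the CDF onto [start, end]."""
    img = np.asarray(img, dtype=np.uint8)
    n_k = np.bincount(img.ravel(), minlength=levels)  # pixels per gray level
    pdf = n_k / img.size                               # p(r_k) = n_k / N
    cdf = np.cumsum(pdf)                               # c(r_k) = sum_{j<=k} p(r_j)
    lut = start + (end - start) * cdf                  # f(r_k)
    return np.round(lut[img]).astype(np.uint8)
```

For example, the 2×2 image `[[0, 0], [128, 255]]` has CDF values 0.5, 0.75, and 1.0 at its three gray levels. It is therefore mapped to `[[128, 128], [191, 255]]`, so the dominant level 0 is pushed far up the gray scale, which illustrates the brightness shift discussed below.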

Because the mapping is driven by the CDF, traditional histogram equalization stretches the gray levels that contain many pixels and widens the overall gray-level range. However, it over-enhances high-frequency gray levels and merges gray levels that contain few pixels, causing loss of detail; this is the main drawback of traditional histogram equalization.

3. Proposed AICHE Transformation

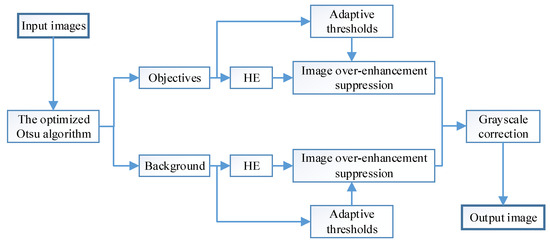

In this study, we propose the AICHE algorithm, which uses an improved Otsu method to split the image histogram into target and background sub-histograms. Each sub-histogram is then equalized separately, so the mean brightness of the original image is not shifted. In addition, the algorithm processes the sub-histograms with adaptively computed thresholds, which effectively avoids over-enhancement and limits detail loss to a certain extent. The flowchart of the AICHE algorithm is shown in Figure 1.

Figure 1.

Overall flowchart of AICHE algorithm.

3.1. Histogram Segmentation

For most images, the gray levels are not uniformly distributed, so the mean brightness shifts during equalization. Histogram segmentation addresses this problem. If a segmented sub-histogram is too narrow, the equalization effect is reduced; if it is too wide, it leads to over-enhancement and loss of detail. Threshold selection is therefore critical, and an improper threshold directly degrades the quality of the equalized image.

First, let a threshold $t$ be the segmentation point, and divide the image by gray level into target region A and background region B. Region A consists of pixels with gray values in $[l_{\min}, t]$, and region B consists of pixels with gray values in $[t+1, l_{\max}]$. The proportions of class A and class B, $\omega_A(t)$ and $\omega_B(t)$, are

$$\omega_A(t) = \sum_{i=l_{\min}}^{t} p_i, \qquad \omega_B(t) = \sum_{i=t+1}^{l_{\max}} p_i = 1 - \omega_A(t)$$

where $l_{\min}$ and $l_{\max}$ denote the initial and final gray levels of the histogram, respectively, and $p_i$ denotes the probability of gray value $i$.

The mean gray levels of the two classes, $\mu_A(t)$ and $\mu_B(t)$, are calculated as follows:

$$\mu_A(t) = \frac{1}{\omega_A(t)} \sum_{i=l_{\min}}^{t} i\, p_i, \qquad \mu_B(t) = \frac{1}{\omega_B(t)} \sum_{i=t+1}^{l_{\max}} i\, p_i$$

where $\omega_A(t)$ and $\omega_B(t)$ are the probabilities of class A and class B, respectively.

The mean gray level of the input image, $\mu$, is then

$$\mu = \omega_A(t)\,\mu_A(t) + \omega_B(t)\,\mu_B(t)$$

The between-class variance is defined as

$$\sigma_B^2(t) = \omega_A(t)\,\left[\mu_A(t) - \mu\right]^2 + \omega_B(t)\,\left[\mu_B(t) - \mu\right]^2$$

The traditional Otsu algorithm is simple and convenient and is not affected by image brightness. It takes the threshold at which the between-class variance of the target and background reaches its maximum as the optimal segmentation threshold: $t_{\mathrm{Otsu}} = \arg\max_{t} \sigma_B^2(t)$. The smaller the distance between the pixels of each region and the class center, the better the pixel cohesion of that region. Because the traditional Otsu algorithm does not consider the spatial correlation of pixels, its segmentation is less effective. To measure pixel cohesion, a within-class cohesion measure is defined and calculated as follows:

This measure is based on a distance metric between each pixel and its class center. The average within-class variances of the target and background regions are calculated as follows:

Clearly, the smaller these average within-class variances of the target and background regions, the better the segmentation; on this basis, a new threshold criterion is constructed.

The value of $t$ at which this criterion reaches its maximum is taken as the optimal threshold $t^*$, obtained as follows:

According to the threshold $t^*$, the histogram is divided into two sub-histograms: the first covers gray levels $[l_{\min}, t^*]$ and the second covers $[t^* + 1, l_{\max}]$.
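For reference, the classical Otsu threshold that the improved criterion builds on can be computed directly from the histogram. The sketch below assumes an 8-bit grayscale image and maximizes only the between-class variance. The paper's additional within-class cohesion term is not reproduced in this text, so it is deliberately left out; `otsu_threshold` is a hypothetical helper name.

```python
import numpy as np

def otsu_threshold(img, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Class A = gray levels [0, t], class B = [t + 1, levels - 1].
    """
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=levels).astype(np.float64)
    n = hist.sum()
    i = np.arange(levels)
    w_a = np.cumsum(hist)                 # pixel count of class A for every t
    w_b = n - w_a                         # pixel count of class B
    s_a = np.cumsum(i * hist)             # sum of gray values in class A
    s_total = s_a[-1]
    mu = s_total / n                      # global mean gray level
    with np.errstate(divide="ignore", invalid="ignore"):
        mu_a = s_a / w_a                  # class means (NaN when a class is empty)
        mu_b = (s_total - s_a) / w_b
        sigma_b2 = (w_a / n) * (mu_a - mu) ** 2 + (w_b / n) * (mu_b - mu) ** 2
    sigma_b2 = np.nan_to_num(sigma_b2)    # an empty class gives no separation
    return int(np.argmax(sigma_b2))
```

Pixels with gray level at most the returned `t` form region A and the rest form region B. Working with integer pixel counts rather than accumulated probabilities keeps the empty-class cases exact, so they reduce cleanly to zero variance.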

3.2. Adaptive Local Grayscale Correction

The input image is divided into two sub-histograms as described above, and each sub-histogram is equalized separately to limit the brightness shift. In addition, this study uses a new histogram allocation algorithm to solve the over-enhancement and gray-level merging problems. The algorithm consists of two parts: over-enhancement suppression and local gray-level correction.

3.2.1. Image Over-Enhancement Suppression

During equalization, gray levels with high frequencies tend to be over-enhanced, whereas gray levels with low frequencies are merged, causing loss of image detail. Therefore, AICHE suppresses the over-enhanced gray levels by setting a clipping threshold T for each of the two sub-histograms. The procedure is as follows.

- First, let the input image be F, and obtain, for each gray level i, the sets of non-zero bins of the two sub-histograms.

- Apply one-dimensional median filtering to the two sets of non-zero bins, and calculate the clipping thresholds of the two sub-histograms from the peaks of the two filtered sub-histograms.

- Equalize the two sub-histograms independently according to Equations (1)–(3) to obtain the equalized image. The equalization uses the number of pixels at each gray level, the equalized histogram value at each gray level, and the total numbers of gray levels in region A and region B.

- Clip the equalized histogram according to Equation (16), which gives the clipped histogram value at each gray level, to obtain the enhanced image.
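The clipping idea in this procedure can be illustrated with a plateau-limited equalization of each sub-histogram. This is a sketch under stated assumptions. The authors' threshold formula (based on the peaks of the median-filtered sub-histograms) and Equation (16) are not reproduced here. The hypothetical `hypothetical_plateau` therefore uses a stand-in rule, the mean of the median-filtered non-zero bins, and the caller supplies the split point `t`, e.g., from the segmentation step.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def median_filter_1d(x, k=3):
    """1-D median filter with edge padding (k odd)."""
    pad = k // 2
    xp = np.pad(x, pad, mode="edge")
    return np.median(sliding_window_view(xp, k), axis=1)

def hypothetical_plateau(hist, lo, hi):
    # HYPOTHETICAL rule: mean of the median-filtered non-zero bins of [lo, hi].
    # The paper's own peak-based threshold formula is not reproduced here.
    nz = hist[lo:hi + 1]
    nz = nz[nz > 0].astype(np.float64)
    return float(np.mean(median_filter_1d(nz))) if nz.size else 0.0

def clipped_subhist_lut(hist, lo, hi, plateau):
    """Clip hist[lo:hi+1] at `plateau`, then map its CDF onto [lo, hi]."""
    sub = np.minimum(hist[lo:hi + 1].astype(np.float64), plateau)
    total = sub.sum()
    if total == 0:
        return np.arange(lo, hi + 1, dtype=np.float64)  # empty range: identity
    cdf = np.cumsum(sub) / total
    return lo + (hi - lo) * cdf

def clipped_bi_equalize(img, t):
    """Plateau-clipped equalization of [0, t] and [t+1, 255] separately."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)
    lut = np.empty(256)
    lut[:t + 1] = clipped_subhist_lut(hist, 0, t, hypothetical_plateau(hist, 0, t))
    lut[t + 1:] = clipped_subhist_lut(
        hist, t + 1, 255, hypothetical_plateau(hist, t + 1, 255))
    return np.round(lut[img]).astype(np.uint8)
```

Clipping before building the CDF caps how much gray-level range any single dominant bin can claim, which is the mechanism behind suppressing over-enhancement. The choice of plateau decides how strongly this happens.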

3.2.2. Local Gray Level Correction

To prevent gray levels from merging after equalization, AICHE corrects the equalized image. First, the input image and the equalized image are convolved with Sobel operators to obtain gradient values and locate where the gradient is markedly reduced. Second, the gray values at those locations are modified with reference to the original image to raise the local gradient and protect image detail. The specific process is as follows.

- The gradient matrices of the input image F and of the equalized image are obtained by convolving each image with Sobel operators in four directions. The four convolution kernels correspond to the directions 0°, 45°, 135°, and 180°, respectively, and the gradient matrix is computed from their responses. The four convolution kernels are

- Local grayscale correction is performed according to Equation (19) to enhance the local information of the image. Equation (19) computes the gray value of the center pixel of the output image. Its inputs are the center pixels of image F and of the equalized image, and the mean gray values of a window centered on that pixel in the input image and in the equalized image, respectively.

- The corrected image is output as the final result.
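The gradient-comparison step can be sketched as follows. The four kernels and Equation (19) are not reproduced in this text, so the kernels below are an assumption: a common set of Sobel-type kernels for 0°, 45°, 90°, and 135°. The text lists 180° instead of 90°, but a 180° kernel is the negative of the 0° kernel and gives the same absolute response. The `ratio` test used to flag a "markedly reduced" gradient is also a hypothetical criterion. The sketch only locates candidate pixels and does not implement the authors' correction formula.

```python
import numpy as np

# ASSUMED Sobel-type kernels for 0°, 45°, 90° and 135°; the paper's own
# four kernels are not reproduced in the text.
KERNELS = [
    np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64),   # 0°
    np.array([[0, 1, 2], [-1, 0, 1], [-2, -1, 0]], dtype=np.float64),   # 45°
    np.array([[1, 2, 1], [0, 0, 0], [-1, -2, -1]], dtype=np.float64),   # 90°
    np.array([[2, 1, 0], [1, 0, -1], [0, -1, -2]], dtype=np.float64),   # 135°
]

def filter3x3(img, k):
    """3x3 correlation with edge padding.

    For these antisymmetric kernels, true convolution only flips the sign,
    which does not matter once the absolute value is taken.
    """
    p = np.pad(np.asarray(img, dtype=np.float64), 1, mode="edge")
    h, w = p.shape[0] - 2, p.shape[1] - 2
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += k[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out

def gradient_4dir(img):
    """Sum of absolute responses over the four directional kernels."""
    return sum(np.abs(filter3x3(img, k)) for k in KERNELS)

def reduced_gradient_mask(f, g, ratio=0.5):
    # HYPOTHETICAL criterion: flag pixels whose gradient in the equalized
    # image g falls below `ratio` times the gradient in the input image f.
    gf, gg = gradient_4dir(f), gradient_4dir(g)
    return (gf > 0) & (gg < ratio * gf)
```

Pixels flagged by the mask are where merged gray levels have flattened an edge. Those are the locations at which a local correction such as Equation (19) would act.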

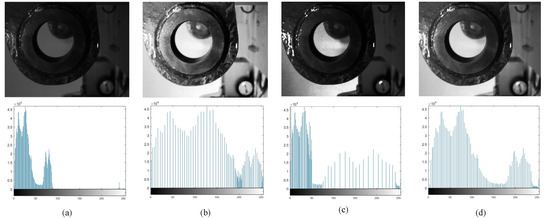

Figure 2 shows the processed images and their grayscale histograms at each stage of the HE and AICHE algorithms. Although HE improves contrast, the image is overexposed because of over-stretching. After histogram segmentation, the mean brightness of the image is preserved, but gray-level merging and loss of detail remain. After adaptive local gray-level correction, the mean brightness of the input image is preserved while contrast and detail are enhanced, and image quality is significantly improved.

Figure 2.

Image enhancement effect of AICHE algorithm: (a) original image; (b) effect of HE algorithm; (c) effect of histogram segmentation; and (d) effect of adaptive local grayscale correction.

4. Analysis of Algorithm Results

4.1. Improved Image Segmentation Effect of Otsu Algorithm

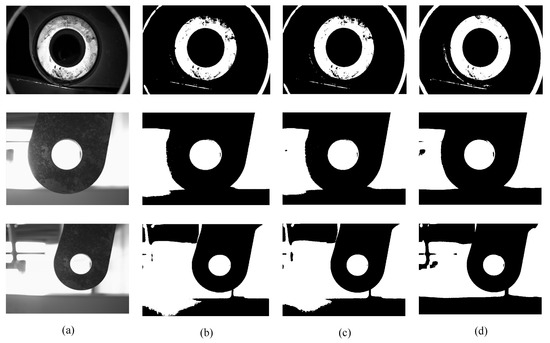

Figure 3 compares the improved Otsu algorithm with other segmentation algorithms in three scenarios. Compared with the traditional Otsu and K-means algorithms, the improved Otsu algorithm segments the image more reasonably, yielding a more complete moving arm profile and preserving more image detail.

Figure 3.

Comparison of segmentation results between the improved Otsu algorithm and other image segmentation algorithms: (a) original image; (b) segmentation by the traditional Otsu algorithm; (c) segmentation by the K-means algorithm; and (d) segmentation by the improved Otsu algorithm.

4.2. AICHE Algorithm Effect

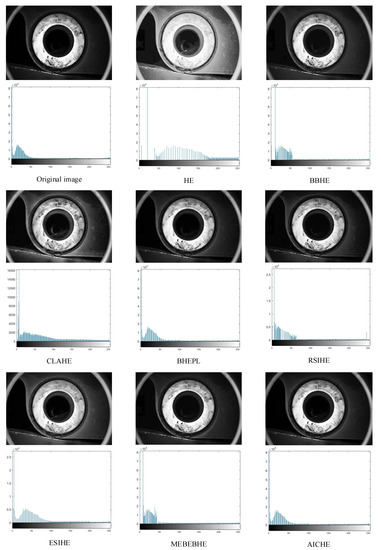

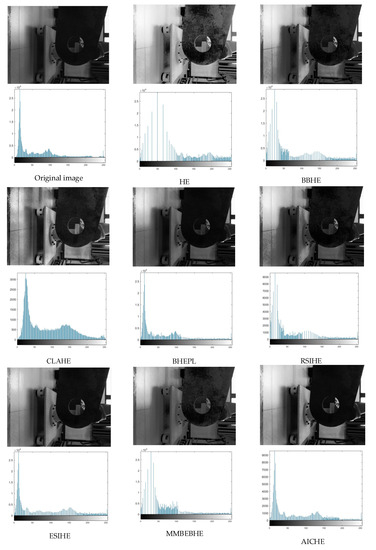

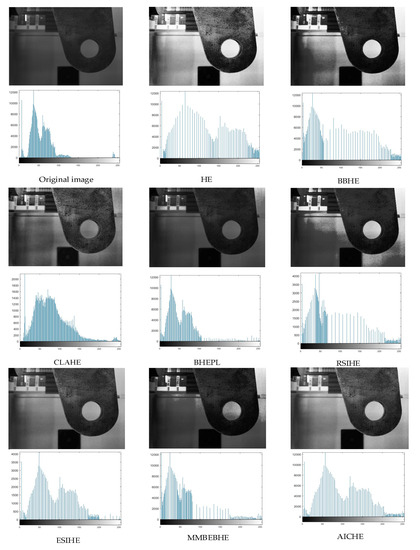

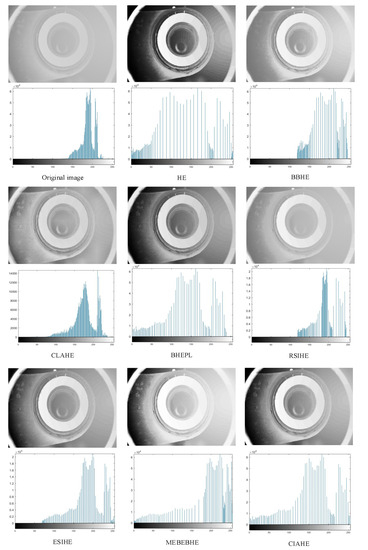

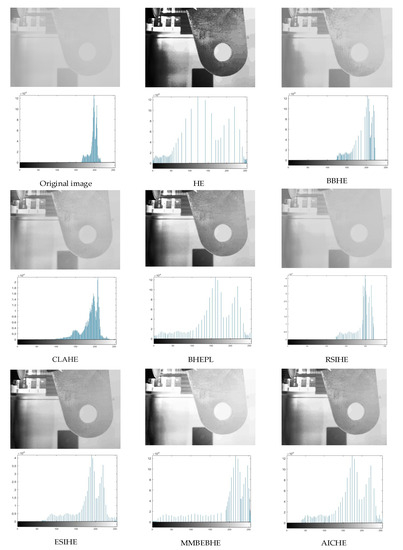

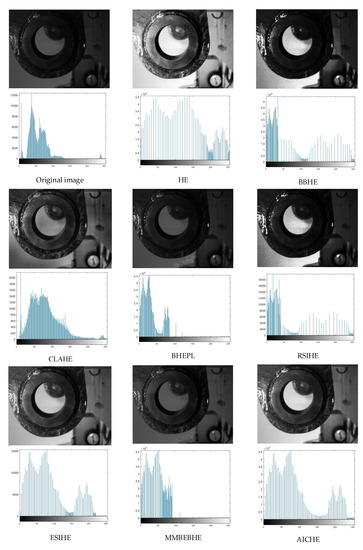

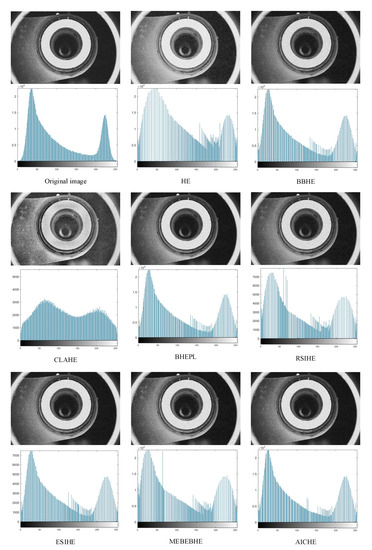

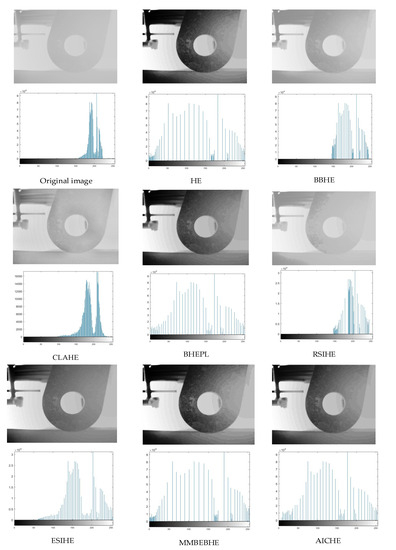

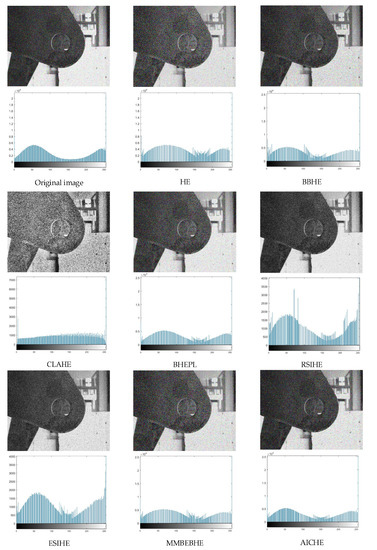

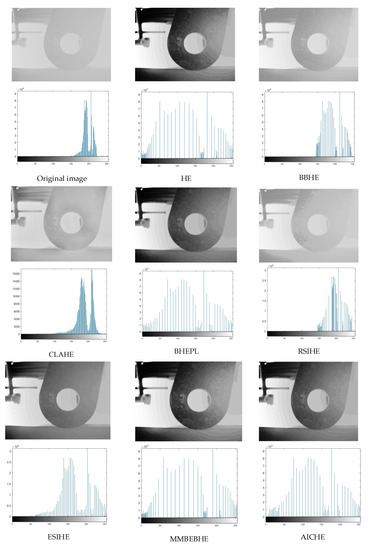

To demonstrate the effectiveness of AICHE, Figures 4–13 simulate insufficient light, fog, and smoke. They compare the enhancement results of seven histogram equalization algorithms with those of AICHE: the classical algorithms HE, BBHE, and CLAHE and the more recent algorithms BHEPL, RSIHE, ESIHE, and MMBEBHE.

Figure 4.

The simulation results (above) of the ‘scene 1’ image are presented along with its corresponding histogram (below).

Figure 5.

The simulation results (above) of the ‘scene 2’ image are presented along with its corresponding histogram (below).

Figure 6.

The simulation results (above) of the ‘scene 3’ image are presented along with its corresponding histogram (below).

Figure 7.

The simulation results (above) of the ‘scene 4’ image are presented along with its corresponding histogram (below).

Figure 8.

The simulation results (above) of the ‘scene 5’ image are presented along with its corresponding histogram (below).

Figure 9.

The simulation results (above) of the ‘scene 6’ image are presented along with its corresponding histogram (below).

Figure 10.

The simulation results (above) of the ‘scene 7’ image are presented along with its corresponding histogram (below).

Figure 11.

The simulation results (above) of the ‘scene 8’ image are presented along with its corresponding histogram (below).

Figure 12.

The simulation results (above) of the ‘scene 9’ image are presented along with its corresponding histogram (below).

Figure 13.

The simulation results (above) of the ‘scene 10’ image are presented along with its corresponding histogram (below).

4.3. Objective Evaluation Indicators

Four objective evaluation indicators are selected in this study, which are detailed below.

4.3.1. Structure Similarity Index Measure

The structural similarity index measure (SSIM) compares the similarity of two images based on three characteristics: luminance, contrast, and structure. Luminance is measured by the mean gray value, contrast by the standard deviation of the gray values, and structure by the correlation coefficient. The three components are calculated as follows:

$$l(X,Y) = \frac{2\mu_X\mu_Y + C_1}{\mu_X^2 + \mu_Y^2 + C_1}, \quad c(X,Y) = \frac{2\sigma_X\sigma_Y + C_2}{\sigma_X^2 + \sigma_Y^2 + C_2}, \quad s(X,Y) = \frac{\sigma_{XY} + C_3}{\sigma_X\sigma_Y + C_3}$$

where $\mu$ is the mean gray value, $\sigma$ is the standard deviation of the gray values, $\sigma_{XY}$ is the covariance, and $C_1$, $C_2$, and $C_3$ are small positive constants that prevent division by zero.

SSIM is consistent with human visual perception in evaluating image quality. For typical images its value lies in [0, 1]. A higher value indicates stronger similarity between the two images, which is taken here to reflect higher image quality. With $C_3 = C_2/2$, it is calculated as follows:

$$\mathrm{SSIM}(X,Y) = \frac{(2\mu_X\mu_Y + C_1)(2\sigma_{XY} + C_2)}{(\mu_X^2 + \mu_Y^2 + C_1)(\sigma_X^2 + \sigma_Y^2 + C_2)}$$

where X and Y denote the input and output images, respectively; $\sigma_X$ and $\sigma_Y$ are the standard deviations of X and Y; $\mu_X$ and $\mu_Y$ are the mean gray values of X and Y; $\sigma_{XY}$ is the covariance of the two images; and $C_1$ and $C_2$ are small constants that stabilize the division.
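A simplified, single-window version of this formula can be computed in NumPy. Note the assumption: standard SSIM averages the formula over local windows, whereas this sketch evaluates it once over the whole image. It uses the commonly used constants K1 = 0.01 and K2 = 0.03 with an 8-bit data range. For numbers comparable with the literature, a library implementation such as scikit-image's `structural_similarity` is preferable.

```python
import numpy as np

def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM over the whole image (a simplification)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (k1 * data_range) ** 2          # stabilizing constants
    c2 = (k2 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))
```

Identical images give 1, while an inverted image (negative covariance) drives the value below zero, which is why SSIM is only "typically" within [0, 1].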

4.3.2. Peak Signal-to-Noise Ratio

The peak signal-to-noise ratio (PSNR) is used here to compare the enhancement results. PSNR is based on the mean square error (MSE), the average of the squared differences between two images over all pixels:

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N} \left[X(i) - Y(i)\right]^2$$

PSNR is derived from MSE and is non-negative for 8-bit images. The larger the value, the less distortion in the output image and the more obvious the enhancement effect. It is calculated as follows:

$$\mathrm{PSNR} = 10 \log_{10} \frac{(L-1)^2}{\mathrm{MSE}}$$

where $N$ is the total number of pixels; $X(i)$ and $Y(i)$ denote the gray values of pixel $i$ in the input image and output image, respectively; $L - 1 = 255$ is the maximum gray value of an 8-bit image; and MSE is the mean square error.
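MSE and PSNR as defined above, in a short NumPy sketch that assumes 8-bit images (peak value 255):

```python
import numpy as np

def mse(x, y):
    """Mean of squared pixel differences between two equally sized images."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    return float(np.mean((x - y) ** 2))

def psnr(x, y, peak=255.0):
    """PSNR in dB; infinite when the two images are identical (MSE = 0)."""
    e = mse(x, y)
    return float("inf") if e == 0 else float(10.0 * np.log10(peak ** 2 / e))
```

As a sanity check, an all-black image compared with an all-white one has MSE = 255² and therefore PSNR = 0 dB. Two images that differ by one gray level everywhere give 20·log10(255) ≈ 48.13 dB.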

4.3.3. Absolute Mean Brightness Error

The absolute mean brightness error (AMBE) is the absolute difference between the mean brightness of the input image and that of the resulting image; it measures how well the original brightness is preserved. AMBE is non-negative, and the smaller its value, the better the brightness preservation. It is calculated as follows:

$$\mathrm{AMBE}(X,Y) = \left|\mu_X - \mu_Y\right|$$

where X and Y denote the input image and the resulting image, respectively, and $\mu_X$ and $\mu_Y$ are their mean brightness values.
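A minimal NumPy sketch of AMBE; `ambe` is an illustrative name:

```python
import numpy as np

def ambe(x, y):
    """Absolute mean brightness error |mu_X - mu_Y| between two images."""
    return abs(float(np.mean(x)) - float(np.mean(y)))
```

For instance, an input with mean 15 and an output with mean 35 give AMBE = 20. A brightness-preserving method such as BBHE aims to keep this value small.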

4.3.4. Information Entropy

Information entropy (E) measures the information richness of an image and is non-negative. The larger the value of E, the more information and detail the image contains; however, a very large value of E may also indicate significant noise. It is calculated as follows:

$$E = -\sum_{i=0}^{L-1} p(i)\,\log_2 p(i)$$

where $p(i)$ denotes the proportion of pixels with gray value $i$ in the image (terms with $p(i) = 0$ are omitted).
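A minimal NumPy sketch of E for an 8-bit grayscale image. It uses log base 2 so that E is in bits, which is an assumption; the base only rescales E.

```python
import numpy as np

def entropy(img, levels=256):
    """Information entropy E (in bits) of an 8-bit grayscale image."""
    img = np.asarray(img, dtype=np.uint8)
    p = np.bincount(img.ravel(), minlength=levels) / img.size  # p(i)
    p = p[p > 0]                                               # 0 * log 0 := 0
    return float(-np.sum(p * np.log2(p)))
```

An image whose pixels are spread equally over four gray levels has E = 2 bits, and a constant image has E = 0. Merging gray levels during equalization therefore lowers E directly.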

4.4. Evaluation Results

In this study, the performance of each algorithm is measured using SSIM, E, PSNR, AMBE, and running time.

As Figures 4–13 show, HE produces obvious brightness changes and detail loss. Under low-light conditions, BBHE, RSIHE, and MMBEBHE preserve the mean brightness. However, their unreasonable histogram segmentation produces unevenly equalized histograms, handles detail poorly, and distorts local regions. CLAHE protects image details but introduces a large amount of noise, which significantly degrades the visual quality, especially in Figures 10 and 13. AICHE improves contrast while preserving the mean brightness of the image, does not introduce excessive noise, and, to a certain extent, retains the original shape of the histogram.

Tables 1 and 2 show that AICHE has the highest PSNR and an SSIM closest to 1. The information entropy in Table 3 reflects the richness of detail in each image. The entropy of the images processed by CLAHE clearly exceeds that of the original images, which indicates that CLAHE introduces noise and produces blocking artifacts. Apart from CLAHE, AICHE has the highest information entropy, indicating that gray-level merging is effectively avoided during equalization and image information is preserved. ESIHE does not maintain the mean image brightness well, as its AMBE values in Table 4 also clearly show.

Table 1.

SSIM of different algorithms.

Table 2.

PSNR of different algorithms.

Table 3.

E of different algorithms.

Table 4.

AMBE of different algorithms.

Based on these results, the proposed AICHE algorithm achieves good enhancement under all three working conditions. It preserves the mean brightness of the image and reduces gray-level merging while improving contrast, and it is robust. However, as shown in Table 5, its computation time is relatively long.

Table 5.

Time of different algorithms.

5. Conclusions

In this study, the AICHE algorithm is proposed for image enhancement under complex working conditions. It effectively addresses the mean brightness shift, over-enhancement, and gray-level merging of traditional histogram equalization. The algorithm splits the image histogram with an improved Otsu method to prevent mean luminance shift and adaptively obtains thresholds that suppress over-enhanced gray levels. It then applies local gray-level correction to avoid gray-level merging. The experimental analysis shows that the adaptive local correction effectively avoids over-enhancement and detail loss and enhances contrast and detail. Compared with other improved algorithms, AICHE significantly improves PSNR and information entropy and better preserves gray levels without introducing noise; its SSIM is closer to 1, and its visual quality is better.

Because the method prioritizes measurement accuracy, it is relatively slow; it is therefore better suited to industrial image enhancement, where accuracy matters more than speed. Improving its time efficiency will be a key issue in future research.

Author Contributions

Methodology, B.L. and S.J.; software, B.Y.; resources, S.Y. and D.Z.; writing—original draft preparation, B.Y.; writing—review and editing, B.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Guangxi Science and Technology Major Special Project, grant number AA22068064; Guangxi Science and Technology Programs, grant number AD22080042; Guangxi Key R&D Program Projects, grant number AB22035066.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We appreciate the support of the Guangxi College Students Innovation and Entrepreneurship Program.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Tarawneh, A.S.; Hassanat, A.B.; Elkhadiri, I.; Chetverikov, D.; Almohammadi, K. Automatic gamma correction based on root-mean-square-error maximization. Int. Conf. Comput. Inf. Technol. 2020, 1, 448–452.

2. Gong, Y.; Xie, X. Research on coal mine underground image recognition technology based on homomorphic filtering method. Coal Sci. Technol. 2023, 51, 241–250.

3. Chen, Z.; Pawar, K.; Ekanayake, M.; Pain, C.; Zhong, S.; Egan, G.F. Deep learning for image enhancement and correction in magnetic resonance imaging-state-of-the-art and challenges. J. Digit. Imaging 2023, 36, 204–230.

4. Castleman, K.R. Digital Image Processing; Prentice Hall Press: Upper Saddle River, NJ, USA, 1996.

5. Ding, C.; Luo, Z.; Hou, Y.; Chen, S.; Zhang, W. An effective method of infrared maritime target enhancement and detection with multiple maritime scene. Remote Sens. 2023, 15, 3623.

6. Zhou, J.; Pang, L.; Zhang, D.; Zhang, W. Underwater image enhancement method via multi-interval subhistogram perspective equalization. IEEE J. Ocean. Eng. 2023, 48, 474–488.

7. Yuan, Z.; Zeng, J.; Wei, Z.; Jin, L.; Zhao, S.; Liu, X.; Zhang, Y.; Zhou, G. CLAHE-based low-light image enhancement for robust object detection in overhead power transmission system. IEEE Trans. Power Deliv. 2023, 38, 2240–2243.

8. Dyke, R.M.; Hormann, K. Histogram equalization using a selective filter. Vis. Comput. 2022, 69, 284–302.

9. Kim, Y.-T. Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans. Consum. Electron. 1997, 43, 1–8.

10. Wang, Y.; Chen, Q.; Zhang, B. Image enhancement based on equal area dualistic sub-image histogram equalization method. IEEE Trans. Consum. Electron. 1999, 45, 68–75.

11. Sim, K.S.; Tso, C.P.; Tan, Y.Y. Recursive sub-image histogram equalization applied to gray scale images. Pattern Recognit. Lett. 2007, 28, 1209–1221.

12. Chen, S.-D.; Ramli, A.R. Contrast enhancement using recursive mean-separate histogram equalization for scalable brightness preservation. IEEE Trans. Consum. Electron. 2003, 49, 1301–1309.

13. Chen, S.-D.; Ramli, A.R. Minimum mean brightness error bi-histogram equalization in contrast enhancement. IEEE Trans. Consum. Electron. 2003, 49, 1310–1319.

14. He, Z.; Zeng, X.; Deng, C. Infrared image enhancement based on local entropy-LC and dual-area histogram equalization. Infrared Technol. 2023, 45, 582–598.

15. Pisano, E.D.; Zong, S.; Hemminger, B.M.; DeLuca, M.; Johnston, R.E.; Muller, K.; Braeuning, M.P.; Pizer, S.M. Contrast limited adaptive histogram equalization image processing to improve the detection of simulated spiculations in dense mammograms. J. Digit. Imaging 1998, 11, 193–200.

16. Stark, J.A. Adaptive image contrast enhancement using generalizations of histogram equalization. IEEE Trans. Image Process. 2000, 9, 889–896.

17. Maitra, I.K.; Nag, S.; Bandyopadhyay, S.K. Technique for preprocessing of digital mammogram. Comput. Methods Programs Biomed. 2012, 107, 175–188.

18. Ooi, C.H.; Isa, N.A.M. Adaptive contrast enhancement methods with brightness preserving. IEEE Trans. Consum. Electron. 2010, 56, 2543–2551.

19. Aquino-Morínigo, P.B.; Lugo-Solís, F.R.; Pinto-Roa, D.P.; Ayala, H.L.; Noguera, J.L.V. Bi-histogram equalization using two plateau limits. Signal Image Video Process. 2017, 11, 857–864.

20. Singh, K.; Kapoor, R. Image enhancement using exposure based sub image histogram equalization. Pattern Recognit. Lett. 2014, 36, 10–14.

21. Paul, A. Adaptive tri-plateau limit tri-histogram equalization algorithm for digital image enhancement. Vis. Comput. 2023, 39, 297–318.

22. Huang, Z.; Wang, Z.; Zhang, J.; Li, Q.; Shi, Y. Image enhancement with the preservation of brightness and structures by employing contrast limited dynamic quadri-histogram equalization. Optik 2021, 226, 165877.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).