Improving Software Defect Prediction in Noisy Imbalanced Datasets

Abstract

1. Introduction

- (1) US-PONR offers a new PSM-based method for data oversampling and noise reduction, which reduces the overfitting noise introduced when minority-class samples are generated.

- (2) It also offers a new data pre-processing method for software defect prediction that combines undersampling, oversampling, and noise reduction.

- (3) US-PONR achieves state-of-the-art (SOTA) performance in software defect prediction experiments under different noise settings, and the experiments demonstrate that the method can effectively identify, label, and remove noisy samples.

2. Background

2.1. Software Defect Prediction (SDP) and Metrics

2.2. Class Imbalance

2.3. Noise Reduction

3. Materials and Methods

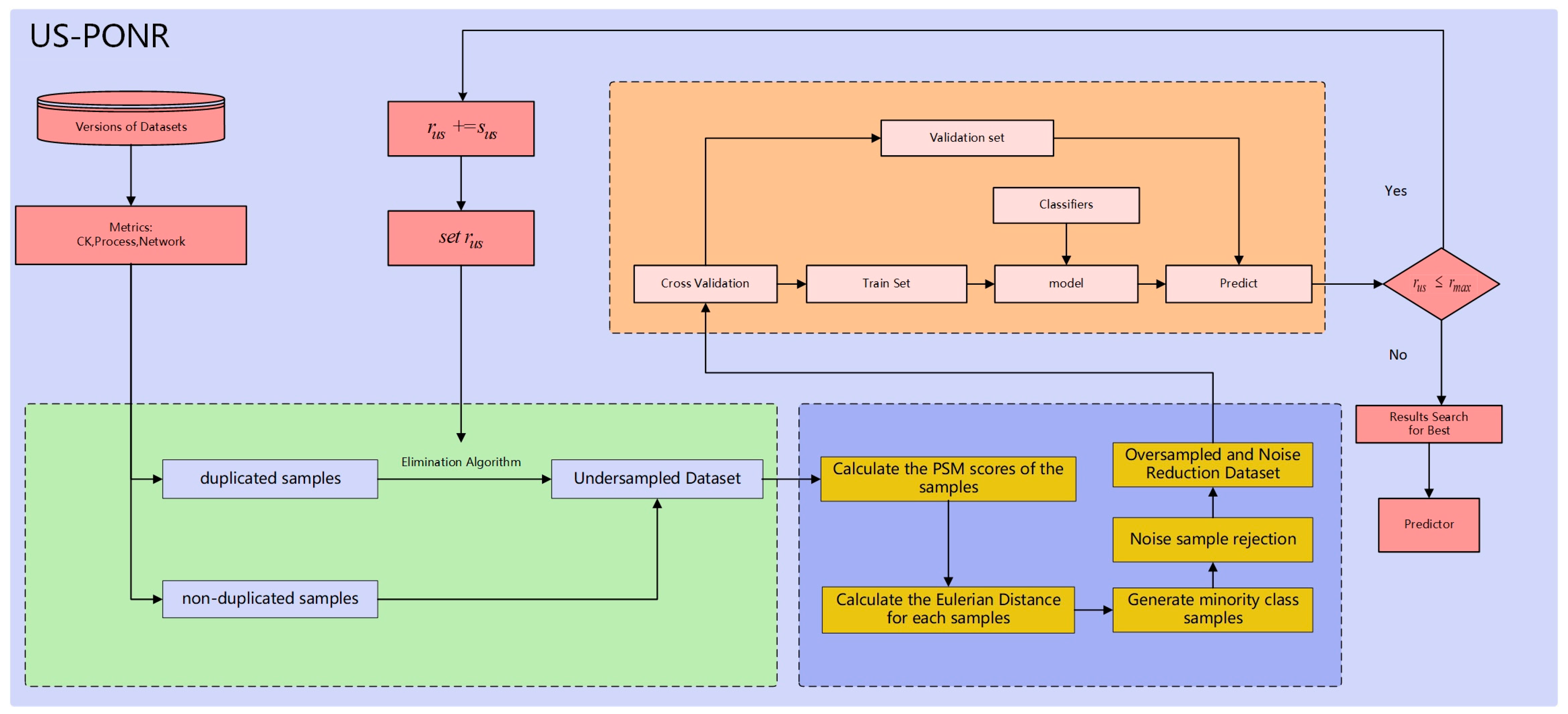

3.1. Framework

3.2. Undersampling

| Algorithm 1: Undersampling |

| input |

| output |

| 1. : undersample ratio, initialize to 1 |

| 2. , , initialize to null |

| 3. for in |

| 4. if |

| 5. then if in |

| 6. then ← |

| 7. ← |

| 8. end if |

| 9. else |

| 10. ← |

| 11. end if |

| 12. end for |

| 13. do { |

| 14. r = / |

| 15. while r ≤ |

| 16. ← xj (randomly selected from ) |

| 17. end while |

| 18. = + |

| 19. ← and |

| 20. += |

| 21. }while ( ≤ ) |

| 22. return |
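
The undersampling step can be illustrated with a minimal sketch. It is not a reconstruction of Algorithm 1, whose symbols did not survive in this copy. It only shows the general idea of randomly discarding majority-class samples until a target undersample ratio is reached. The function name `random_undersample`, the parameter `target_ratio`, and the label convention (1 = defective minority, 0 = non-defective majority) are assumptions made for illustration.

```python
import random

def random_undersample(samples, labels, target_ratio, seed=0):
    """Randomly drop majority-class (label 0) samples until
    len(majority) / len(minority) <= target_ratio."""
    rng = random.Random(seed)
    minority = [i for i, y in enumerate(labels) if y == 1]
    majority = [i for i, y in enumerate(labels) if y == 0]
    if not minority:
        raise ValueError("no minority-class samples to balance against")
    # Largest number of majority samples allowed by the target ratio.
    keep_n = min(len(majority), int(target_ratio * len(minority)))
    kept_majority = rng.sample(majority, keep_n)
    kept = sorted(minority + kept_majority)  # preserve original order
    return [samples[i] for i in kept], [labels[i] for i in kept]

# Toy data: 2 defective and 10 non-defective modules (ratio 5).
X = [[i] for i in range(12)]
y = [1, 1] + [0] * 10
X_us, y_us = random_undersample(X, y, target_ratio=2.0)
print(y_us.count(0), y_us.count(1))
```

With a target ratio of 2.0, four of the ten majority samples are kept and both minority samples are retained.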

3.3. PONR

3.4. Predictor Construction

| Algorithm 2: CV-based aggregation |

| input StratifiedKFold Grouped Dataset , |

| undersampling ratio , Classifiers, Model Score Error Threshold |

| output defect prediction result, best undersampling ratio |

| 1. for in : |

| 2. for in : |

| 3. ← |

| 4. ← |

| 5. for classifier i in Classifiers |

| 6. if classifier i needs hyperparameter or threshold optimization: |

| 7. optimize hyperparameter or threshold |

| 8. end if |

| 9. ← train predictor by and |

| 10. if < : |

| 11. = |

| 12. end if |

| 13. ← |

| 14. end for |

| 15. += |

| 16. += |

| 17. end for |

| 18. = ; = |

| 19. Record and corresponding to each different |

| 20. end for |

| 21. calculate (max( calculated by diff )) |

| 22. = with max () |

| 23. = with max() |

| 24. for result in |

| 25. if |-result| < |

| 26. add the classifier (with its prediction result) into |

| 27. end for |

| 28. defect prediction result = best prediction result by classifiers in |

| 29. return defect prediction result and |
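
The final selection step of Algorithm 2 (steps 21 to 28) can be sketched as follows, under stated assumptions. The sketch takes per-fold scores that are already computed, averages them per classifier, and keeps every classifier whose mean score lies within the model score error threshold of the best one. The function name `select_classifiers`, the dictionary layout, and the example scores are hypothetical and are not taken from the paper.

```python
def select_classifiers(cv_scores, error_threshold):
    """Keep classifiers whose mean CV score is within error_threshold of the best.

    cv_scores: dict mapping classifier name -> list of per-fold scores (e.g. AUC).
    Returns (best_mean_score, list_of_selected_names).
    """
    mean_scores = {name: sum(s) / len(s) for name, s in cv_scores.items()}
    best = max(mean_scores.values())
    selected = [name for name, m in mean_scores.items()
                if abs(best - m) < error_threshold]
    return best, selected

# Illustrative per-fold scores for three of the candidate classifiers.
cv_scores = {
    "RF": [0.90, 0.92, 0.91],
    "LoR": [0.88, 0.90, 0.89],
    "GNB": [0.70, 0.72, 0.71],
}
best, selected = select_classifiers(cv_scores, error_threshold=0.03)
print(selected)
```

Here RF and LoR are retained because their mean scores differ by about 0.02, while GNB falls outside the threshold.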

4. Experiment

- defect prediction in SDP datasets without noise (for RQ1);

- defect prediction in SDP datasets in different noise environments (for RQ2);

- validation of the proportion of labeled noisy samples in the dataset before and after the use of the data pre-processing method (for RQ3).
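
The second and third experiments rely on datasets with a known share of mislabeled samples. A minimal sketch of how such a setting could be simulated and measured is given below. It assumes uniform random label flipping on binary labels, which may differ from the noise generation procedure actually used. The names `inject_label_noise` and `noise_proportion` are hypothetical.

```python
import random

def inject_label_noise(labels, noise_rate, seed=0):
    """Flip a fraction noise_rate of binary labels chosen uniformly at random.

    Returns the noisy labels and the set of flipped indices.
    """
    rng = random.Random(seed)
    n_flip = round(noise_rate * len(labels))
    flipped = set(rng.sample(range(len(labels)), n_flip))
    noisy = [1 - y if i in flipped else y for i, y in enumerate(labels)]
    return noisy, flipped

def noise_proportion(kept_indices, flipped):
    """Share of the retained samples whose label was flipped."""
    kept = list(kept_indices)
    return sum(i in flipped for i in kept) / len(kept)

labels = [0] * 80 + [1] * 20
noisy, flipped = inject_label_noise(labels, noise_rate=0.10)
before = noise_proportion(range(len(labels)), flipped)

# Pretend a pre-processing method removed five of the flipped samples.
removed = set(sorted(flipped)[:5])
kept = [i for i in range(len(labels)) if i not in removed]
after = noise_proportion(kept, flipped)
print(before, round(after, 4))
```

Comparing `before` and `after` mirrors the third experiment: a lower proportion after pre-processing indicates that noisy samples were removed.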

4.1. Research Questions

- RQ1: Is US-PONR effective in SDP datasets?

- RQ2: Can US-PONR perform better compared to the benchmark methods in unbalanced SDP datasets with noise?

- RQ3: Is US-PONR especially good at eliminating label noise samples?

4.2. Datasets

4.2.1. PROMISE Public Dataset

4.2.2. Noise Dataset Generation

4.3. Experiment Settings

4.4. Comparison Methods

5. Results

5.1. Answer to RQ1: Is US-PONR Effective in SDP Datasets?

- Determining the optimization parameter.

- Comparing the results of US-PONR with those of US (undersampling) alone or PONR alone.

- Comparing US-PONR with the SOTA data pre-processing method.

5.1.1. Determining the Optimization Parameter

5.1.2. Comparing US-PONR with US and PONR

5.1.3. Comparing US-PONR with the SOTA Data Pre-Processing Method—SMOTUNED

5.2. Answer to RQ2: Can US-PONR Perform Better Compared to the Benchmark Methods in Unbalanced SDP Datasets with Noise?

5.3. Answer to RQ3: Is US-PONR Especially Good at Eliminating Label Noise Samples?

6. Threats to Validity

6.1. Datasets

6.2. Model Hyperparameters

6.3. Evaluation

7. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Name | Description |

|---|---|

| WMC | Weighted methods per class |

| DIT | Depth of inheritance tree |

| NOC | Number of children |

| CBO | Coupling between object classes |

| RFC | Response for a class |

| LCOM | Lack of cohesion in methods |

| LOC | Lines of code |

| Name | Description |

|---|---|

| REVISIONS | Number of revisions of a file. |

| AUTHORS | Number of distinct authors that checked a file into the repository. |

| LOC_ADDED | Sum over all revisions of the lines of code added to a file. |

| MAX_LOC_ADDED | Maximum number of lines of code added for all revisions. |

| AVE_LOC_ADDED | Average lines of code added per revision. |

| LOC_DELETED | Sum over all revisions of the lines of code deleted from a file. |

| MAX_LOC_DELETED | Maximum number of lines of code deleted for all revisions. |

| AVE_LOC_DELETED | Average lines of code deleted per revision. |

| CODECHURN | Sum of (added lines of code - deleted lines of code) over all revisions. |

| MAX_CODECHURN | Maximum CODECHURN for all revisions. |

| AVE_CODECHURN | Average CODECHURN per revision. |
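
The churn metrics in the table above follow directly from a file's revision history. The sketch below computes them from a list of per-revision (added, deleted) line counts. The function name `churn_metrics` and the input layout are assumptions for illustration, and the AUTHORS metric is omitted because it needs author information rather than line counts.

```python
def churn_metrics(revisions):
    """Compute churn metrics for one file.

    revisions: list of (lines_added, lines_deleted) tuples, one per revision.
    """
    if not revisions:
        raise ValueError("at least one revision is required")
    added = [a for a, _ in revisions]
    deleted = [d for _, d in revisions]
    churn = [a - d for a, d in revisions]  # per-revision code churn
    n = len(revisions)
    return {
        "REVISIONS": n,
        "LOC_ADDED": sum(added),
        "MAX_LOC_ADDED": max(added),
        "AVE_LOC_ADDED": sum(added) / n,
        "LOC_DELETED": sum(deleted),
        "MAX_LOC_DELETED": max(deleted),
        "AVE_LOC_DELETED": sum(deleted) / n,
        "CODECHURN": sum(churn),
        "MAX_CODECHURN": max(churn),
        "AVE_CODECHURN": sum(churn) / n,
    }

# Three revisions: (+10, -2), (+5, -5), (+0, -8).
metrics = churn_metrics([(10, 2), (5, 5), (0, 8)])
print(metrics["CODECHURN"], metrics["MAX_CODECHURN"], metrics["AVE_LOC_ADDED"])
```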

| Name | Description | Name | Description |

|---|---|---|---|

| Funcount | The number of internal functions of the class node. | Katz_centrality | The relative influence of a node within a network. |

| Indegree | The total number of connections other nodes point to it. | Load_centrality | The fraction of all shortest paths that pass through that node. |

| Outdegree | The total number of connections it points to other nodes. | PageRank | A ranking of the nodes in the graph G based on the structure of the incoming links. |

| Insidelinks | The total number of connections within the internal functions of the node. | Average_neighbor_degree | The average degree of the neighborhood of each node. |

| Out_degree_centrality | The fraction of nodes its outgoing edges are connected to. | Number_of_cliques | The number of maximal cliques for each node. |

| In_degree_centrality | The fraction of nodes its incoming edges are connected to. | Core_number | The largest value k of a k-core containing that node. |

| Degree_centrality | The fraction of nodes it is connected to. | Brokerage | The number of pairs not directly connected. |

| Closeness_centrality | The reciprocal of the sum of the shortest path distances from v to all other nodes. | EffSize | Effective size of network. |

| Betweenness_centrality | The sum of the fraction of all-pair shortest paths that pass through node v. | Constraint | Measures how strongly a module is constrained by its neighbors. |

| Eccentricity | The maximum distance from v to all other nodes in G. | Hierarchy | Measures how the constraint measure is distributed across neighbors. |

| Communicability_centrality | A broader measure of connectivity, which assumes that information could flow along all possible paths between two nodes. | TwoStepReach | The percentage of nodes that are two steps away. |
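
The simplest of the network metrics above can be computed from a plain edge list. The sketch below uses the standard directed-graph convention in which indegree counts incoming edges and outdegree counts outgoing edges, and it normalizes the centralities by n - 1, the number of other nodes. That normalization and the function name `degree_metrics` are assumptions for illustration. The sketch also assumes a simple graph without duplicate edges.

```python
from collections import defaultdict

def degree_metrics(edges):
    """Compute in/out degree and degree centralities for a directed graph.

    edges: list of (source, target) pairs; an edge means source depends on target.
    """
    nodes = {n for edge in edges for n in edge}
    if len(nodes) < 2:
        raise ValueError("need at least two nodes to normalize centralities")
    indeg, outdeg = defaultdict(int), defaultdict(int)
    for src, dst in edges:
        outdeg[src] += 1   # edge leaves src
        indeg[dst] += 1    # edge arrives at dst
    denom = len(nodes) - 1  # number of other nodes
    return {
        n: {
            "Indegree": indeg[n],
            "Outdegree": outdeg[n],
            "In_degree_centrality": indeg[n] / denom,
            "Out_degree_centrality": outdeg[n] / denom,
        }
        for n in sorted(nodes)
    }

# Class A calls B and C; class B calls C.
result = degree_metrics([("A", "B"), ("A", "C"), ("B", "C")])
print(result["C"])
```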

| Name | Description |

|---|---|

| LiR | Linear regression |

| RR | Ridge regression |

| LoR | Logistic regression |

| LDA | Linear discriminant analysis |

| QDA | Quadratic discriminant analysis |

| KR | Kernel ridge |

| SVC | C-Support vector classification |

| SGDC | Linear classifier (SVM) trained with stochastic gradient descent |

| KNN | K-Nearest neighbors vote classifier |

| GNB | Gaussian naïve Bayes |

| DT | Decision tree classifier |

| RF | Random forest classifier |

| ET | Extra trees classifier |

| AB | AdaBoost classifier |

| Projects | Versions | Total Instances | Defective Instances | Unbalance Ratio r |

|---|---|---|---|---|

| ant | 1.3, 1.4, 1.5, 1.6 | 947 | 184 | 4.147 |

| camel | 1.0, 1.2, 1.4, 1.6 | 2784 | 562 | 3.954 |

| poi | 2 | 314 | 37 | 7.486 |

| synapse | 1.0, 1.1, 1.2 | 635 | 162 | 2.920 |

| log4j | 1 | 138 | 34 | 2.971 |

| jedit | 3.2, 4.0, 4.1, 4.2, 4.3 | 1749 | 303 | 4.772 |

| PDE | 1 | 1497 | 209 | 6.163 |

| JDT | 1 | 997 | 206 | 3.840 |

| velocity | 1.6 | 229 | 78 | 1.936 |

| xerces | 1.2, 1.3 | 893 | 140 | 5.379 |

| mylyn | 1 | 1862 | 245 | 6.600 |
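
The unbalance ratio r in the table above appears to be the number of non-defective instances divided by the number of defective instances. This formula is inferred from the tabulated values rather than quoted from a definition, and it reproduces, for example, the ant and mylyn rows. The helper name `unbalance_ratio` is hypothetical.

```python
def unbalance_ratio(total_instances, defective_instances):
    """Ratio of non-defective to defective instances."""
    if defective_instances <= 0:
        raise ValueError("defective_instances must be positive")
    return (total_instances - defective_instances) / defective_instances

print(round(unbalance_ratio(947, 184), 3))   # ant
print(round(unbalance_ratio(1862, 245), 3))  # mylyn
```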

| Project | US-PONR rus | US-PONR AUC |
|---|---|---|
| ant | 3.8 | 0.8218 |
| camel | 3.9 | 0.8612 |
| JDT | 3.4 | 0.9257 |
| jedit | 4.4 | 0.9381 |
| log4j | 2.2 | 0.8716 |
| mylyn | 5.4 | 0.9116 |
| PDE | 5 | 0.9292 |
| poi | 5.8 | 0.9416 |
| synapse | 2.4 | 0.7811 |
| velocity | 1.9 | 0.7842 |
| xerces | 4.8 | 0.9442 |

| Project | AUC (US-PONR) | AUC (SMOTUNED) | Time (US-PONR) | Time (SMOTUNED) |
|---|---|---|---|---|
| ant | 0.8218 | 0.8896 | 11.12 s | 378 s |
| camel | 0.8612 | 0.9290 | 37.63 s | 6364 s |
| JDT | 0.9257 | 0.8631 | 9.96 s | 585 s |
| jedit | 0.9381 | 0.9445 | 22.77 s | 1635 s |
| log4j | 0.8716 | 0.8766 | 1.04 s | 82 s |
| mylyn | 0.9116 | 0.9003 | 23.25 s | 1594 s |
| PDE | 0.9292 | 0.9061 | 17.52 s | 3029 s |
| poi | 0.9416 | 0.9346 | 2.95 s | 111 s |
| synapse | 0.7811 | 0.8315 | 5.71 s | 598 s |
| velocity | 0.7826 | 0.8399 | 1.64 s | 150 s |
| xerces | 0.9442 | 0.9325 | 9.09 s | 502 s |

| | US-PONR | SOMO | MWMOTE | SMOTE_IPF | SMOTE_RSB | SMOTE_FRST_2T | SMOTE | SMOTE_TomekLinks | Borderline_SMOTE2 | ADASYN | MSMOTE | Gaussian_SMOTE | CCR |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ant | |||||||||||||

| 10% noise | 0.8676 | 0.8624 | 0.8571 | 0.8630 | 0.8630 | 0.8716 | 0.7355 | 0.7481 | 0.6997 | 0.7201 | 0.7338 | 0.7628 | 0.8355 |

| 20% noise | 0.7952 | 0.7012 | 0.7338 | 0.7287 | 0.7015 | 0.7080 | 0.6980 | 0.7290 | 0.6792 | 0.7133 | 0.7116 | 0.7457 | 0.7780 |

| 30% noise | 0.7717 | 0.7359 | 0.7461 | 0.7185 | 0.7438 | 0.7314 | 0.7461 | 0.7344 | 0.7008 | 0.6929 | 0.7185 | 0.6945 | 0.7618 |

| camel | |||||||||||||

| 10% noise | 0.8522 | 0.8549 | 0.8508 | 0.8470 | 0.8528 | 0.8433 | 0.7653 | 0.7678 | 0.7443 | 0.7730 | 0.7353 | 0.7864 | 0.7523 |

| 20% noise | 0.7985 | 0.7354 | 0.7423 | 0.7610 | 0.7459 | 0.7112 | 0.7251 | 0.7678 | 0.7232 | 0.7366 | 0.7398 | 0.7264 | 0.7021 |

| 30% noise | 0.7774 | 0.7155 | 0.7366 | 0.7508 | 0.7208 | 0.6633 | 0.6990 | 0.7389 | 0.6849 | 0.7309 | 0.7105 | 0.7105 | 0.6809 |

| JDT | |||||||||||||

| 10% noise | 0.8960 | 0.7035 | 0.8271 | 0.7648 | 0.7091 | 0.7596 | 0.7989 | 0.8139 | 0.7406 | 0.8004 | 0.7775 | 0.8766 | 0.8859 |

| 20% noise | 0.8166 | 0.6599 | 0.7896 | 0.7251 | 0.7402 | 0.7452 | 0.7993 | 0.7962 | 0.7220 | 0.7685 | 0.7540 | 0.8253 | 0.7100 |

| 30% noise | 0.8020 | 0.6447 | 0.7643 | 0.7181 | 0.7091 | 0.7201 | 0.7738 | 0.7858 | 0.7207 | 0.7758 | 0.7410 | 0.8027 | 0.7028 |

| jedit | |||||||||||||

| 10% noise | 0.8865 | 0.8073 | 0.8829 | 0.8762 | 0.8364 | 0.8443 | 0.8720 | 0.8771 | 0.8437 | 0.8824 | 0.8308 | 0.9016 | 0.9061 |

| 20% noise | 0.8882 | 0.8169 | 0.8856 | 0.8479 | 0.8273 | 0.8173 | 0.8558 | 0.8730 | 0.8525 | 0.8724 | 0.8155 | 0.8720 | 0.8816 |

| 30% noise | 0.8804 | 0.8201 | 0.8622 | 0.8383 | 0.7960 | 0.8470 | 0.8350 | 0.8670 | 0.8086 | 0.8375 | 0.7995 | 0.8614 | 0.8589 |

| log4j | |||||||||||||

| 10% noise | 0.8684 | 0.7838 | 0.8354 | 0.7949 | 0.7792 | 0.7838 | 0.7532 | 0.8519 | 0.8052 | 0.7586 | 0.7805 | 0.7805 | 0.7887 |

| 20% noise | 0.8108 | 0.8101 | 0.8205 | 0.7397 | 0.7632 | 0.7576 | 0.7342 | 0.8000 | 0.7442 | 0.7750 | 0.7317 | 0.7436 | 0.7619 |

| 30% noise | 0.7945 | 0.7619 | 0.7805 | 0.7536 | 0.7654 | 0.7805 | 0.7686 | 0.7632 | 0.7541 | 0.7394 | 0.7541 | 0.7541 | 0.7448 |

| mylyn | |||||||||||||

| 10% noise | 0.8538 | 0.7403 | 0.7889 | 0.7314 | 0.6851 | 0.6731 | 0.8018 | 0.8067 | 0.7526 | 0.7632 | 0.7287 | 0.8688 | 0.8848 |

| 20% noise | 0.7542 | 0.6035 | 0.6871 | 0.6870 | 0.5676 | 0.6823 | 0.7504 | 0.7795 | 0.7277 | 0.7651 | 0.6651 | 0.8293 | 0.8472 |

| 30% noise | 0.7508 | 0.5664 | 0.7027 | 0.6454 | 0.5589 | 0.6546 | 0.6588 | 0.6254 | 0.6196 | 0.7123 | 0.6639 | 0.7699 | 0.8211 |

| PDE | |||||||||||||

| 10% noise | 0.7800 | 0.7001 | 0.8040 | 0.7598 | 0.7373 | 0.6843 | 0.8148 | 0.8099 | 0.7282 | 0.7693 | 0.7168 | 0.8900 | 0.8862 |

| 20% noise | 0.7438 | 0.7211 | 0.7776 | 0.7251 | 0.7336 | 0.6602 | 0.7696 | 0.8044 | 0.7509 | 0.7891 | 0.7171 | 0.8529 | 0.8416 |

| 30% noise | 0.7282 | 0.7202 | 0.7522 | 0.7052 | 0.7144 | 0.6699 | 0.7642 | 0.7681 | 0.7402 | 0.7773 | 0.6932 | 0.7798 | 0.8035 |

| poi | |||||||||||||

| 10% noise | 0.7259 | 0.7087 | 0.6812 | 0.6777 | 0.6557 | 0.7867 | 0.7717 | 0.7626 | 0.7134 | 0.6479 | 0.6667 | 0.7671 | 0.8056 |

| 20% noise | 0.6612 | 0.6825 | 0.7107 | 0.6614 | 0.5912 | 0.6897 | 0.7813 | 0.7143 | 0.7273 | 0.7412 | 0.6013 | 0.7432 | 0.7285 |

| 30% noise | 0.6947 | 0.6914 | 0.5882 | 0.6301 | 0.6739 | 0.6582 | 0.7647 | 0.6747 | 0.6452 | 0.6905 | 0.6897 | 0.6667 | 0.6410 |

| synapse | |||||||||||||

| 10% noise | 0.8037 | 0.5630 | 0.7304 | 0.7009 | 0.6281 | 0.7139 | 0.7295 | 0.7358 | 0.7176 | 0.7586 | 0.7107 | 0.7715 | 0.7988 |

| 20% noise | 0.7866 | 0.6379 | 0.7361 | 0.6988 | 0.6490 | 0.6910 | 0.7330 | 0.6845 | 0.7077 | 0.7431 | 0.6833 | 0.7539 | 0.7818 |

| 30% noise | 0.7510 | 0.6140 | 0.7190 | 0.7335 | 0.6199 | 0.7224 | 0.6846 | 0.6792 | 0.6585 | 0.6732 | 0.6815 | 0.6254 | 0.6649 |

| velocity | |||||||||||||

| 10% noise | 0.8496 | 0.7757 | 0.8333 | 0.7724 | 0.7628 | 0.7439 | 0.8171 | 0.7987 | 0.7642 | 0.7561 | 0.7561 | 0.8272 | 0.8067 |

| 20% noise | 0.8190 | 0.7686 | 0.7716 | 0.7371 | 0.7353 | 0.7672 | 0.7457 | 0.7959 | 0.7371 | 0.7802 | 0.7802 | 0.7489 | 0.7974 |

| 30% noise | 0.8163 | 0.7600 | 0.7908 | 0.7398 | 0.7245 | 0.7806 | 0.7398 | 0.7911 | 0.7500 | 0.7194 | 0.7653 | 0.7199 | 0.7449 |

| xerces | |||||||||||||

| 10% noise | 0.8616 | 0.7928 | 0.8544 | 0.8087 | 0.7309 | 0.7814 | 0.8477 | 0.8542 | 0.8108 | 0.8298 | 0.8033 | 0.8793 | 0.8734 |

| 20% noise | 0.8535 | 0.7667 | 0.8242 | 0.7849 | 0.7330 | 0.7790 | 0.8316 | 0.8398 | 0.7993 | 0.8379 | 0.7824 | 0.8711 | 0.8700 |

| 30% noise | 0.8448 | 0.6484 | 0.7942 | 0.7928 | 0.7225 | 0.7647 | 0.8321 | 0.8318 | 0.7880 | 0.8100 | 0.7818 | 0.8303 | 0.8441 |

| | US-PONR | SOMO | MWMOTE | SMOTE_IPF | SMOTE_RSB | SMOTE_FRST_2T | SMOTE | SMOTE_TomekLinks | Borderline_SMOTE2 | ADASYN | MSMOTE | Gaussian_SMOTE | CCR |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ant | |||||||||||||

| 10% noise | 0.0177 | 0.0995 | 0.0675 | 0.0670 | 0.0867 | 0.0662 | 0.0644 | 0.0600 | 0.0643 | 0.0644 | 0.0647 | 0.0643 | 0.0672 |

| 20% noise | 0.0435 | 0.1996 | 0.1479 | 0.1457 | 0.1679 | 0.1448 | 0.1417 | 0.1340 | 0.1415 | 0.1415 | 0.1408 | 0.1413 | 0.1530 |

| 30% noise | 0.0926 | 0.2844 | 0.2416 | 0.2407 | 0.2639 | 0.2405 | 0.2355 | 0.2180 | 0.2355 | 0.2339 | 0.2347 | 0.2354 | 0.2193 |

| camel | |||||||||||||

| 10% noise | 0.0185 | 0.0996 | 0.0677 | 0.0650 | 0.0884 | 0.0685 | 0.0682 | 0.0597 | 0.0678 | 0.0682 | 0.0683 | 0.0675 | 0.0637 |

| 20% noise | 0.0496 | 0.1987 | 0.1470 | 0.1439 | 0.1894 | 0.1510 | 0.1480 | 0.1318 | 0.1476 | 0.1480 | 0.1499 | 0.1468 | 0.1468 |

| 30% noise | 0.1002 | 0.2998 | 0.2420 | 0.2390 | 0.2538 | 0.2512 | 0.2432 | 0.2222 | 0.2426 | 0.2406 | 0.2447 | 0.2418 | 0.2132 |

| JDT | |||||||||||||

| 10% noise | 0.0181 | 0.0939 | 0.0682 | 0.0678 | 0.0985 | 0.0671 | 0.0682 | 0.0614 | 0.0677 | 0.0683 | 0.0682 | 0.0678 | 0.0650 |

| 20% noise | 0.0472 | 0.1890 | 0.1483 | 0.1475 | 0.1986 | 0.1475 | 0.1220 | 0.1317 | 0.1475 | 0.1478 | 0.1479 | 0.1481 | 0.1510 |

| 30% noise | 0.0929 | 0.2851 | 0.2435 | 0.2419 | 0.2982 | 0.2416 | 0.2431 | 0.2249 | 0.2425 | 0.2422 | 0.2422 | 0.2435 | 0.2170 |

| jedit | |||||||||||||

| 10% noise | 0.0156 | 0.0998 | 0.0657 | 0.0653 | 0.0993 | 0.0665 | 0.0658 | 0.0580 | 0.0658 | 0.0658 | 0.0657 | 0.0659 | 0.0674 |

| 20% noise | 0.0399 | 0.1997 | 0.1434 | 0.1434 | 0.1773 | 0.1445 | 0.1438 | 0.1312 | 0.1440 | 0.1441 | 0.1452 | 0.1433 | 0.1531 |

| 30% noise | 0.0872 | 0.3000 | 0.2381 | 0.2369 | 0.2869 | 0.2396 | 0.2371 | 0.2164 | 0.2380 | 0.2375 | 0.2388 | 0.2378 | 0.2188 |

| log4j | |||||||||||||

| 10% noise | 0.0198 | 0.0873 | 0.0750 | 0.0725 | 0.0879 | 0.0689 | 0.0737 | 0.0654 | 0.0729 | 0.0747 | 0.0703 | 0.0680 | 0.0829 |

| 20% noise | 0.0435 | 0.1996 | 0.1479 | 0.1457 | 0.1679 | 0.1448 | 0.1417 | 0.1340 | 0.1415 | 0.1415 | 0.1408 | 0.1413 | 0.1530 |

| 30% noise | 0.0926 | 0.2844 | 0.2416 | 0.2407 | 0.2639 | 0.2405 | 0.2355 | 0.2180 | 0.2355 | 0.2339 | 0.2347 | 0.2354 | 0.2193 |

| mylyn | US-PONR | SOMO | MWMOTE | SMOTE_IPF | SMOTE_RSB | SMOTE_FRST_2T | SMOTE | SMOTE_TomekLinks | Borderline_SMOTE2 | ADASYN | MSMOTE | Gaussian_SMOTE | CCR |

| 10% noise | 0.0119 | 0.0776 | 0.0634 | 0.0617 | 0.0995 | 0.0694 | 0.0630 | 0.0596 | 0.0633 | 0.0628 | 0.0631 | 0.0629 | 0.0612 |

| 20% noise | 0.0343 | 0.1632 | 0.1386 | 0.1369 | 0.1997 | 0.1531 | 0.1388 | 0.1289 | 0.1385 | 0.1384 | 0.1389 | 0.1383 | 0.1277 |

| 30% noise | 0.0782 | 0.2722 | 0.2317 | 0.2295 | 0.2988 | 0.2571 | 0.2326 | 0.2159 | 0.2321 | 0.2311 | 0.2329 | 0.2318 | 0.2205 |

| PDE | US-PONR | SOMO | MWMOTE | SMOTE_IPF | SMOTE_RSB | SMOTE_FRST_2T | SMOTE | SMOTE_TomekLinks | Borderline_SMOTE2 | ADASYN | MSMOTE | Gaussian_SMOTE | CCR |

| 10% noise | 0.0109 | 0.0999 | 0.0642 | 0.0626 | 0.0993 | 0.0624 | 0.0635 | 0.0592 | 0.0634 | 0.0633 | 0.0636 | 0.0634 | 0.0612 |

| 20% noise | 0.0331 | 0.1953 | 0.1403 | 0.1384 | 0.1989 | 0.1395 | 0.1398 | 0.1330 | 0.1395 | 0.1398 | 0.1398 | 0.1396 | 0.1387 |

| 30% noise | 0.0753 | 0.2956 | 0.2339 | 0.2312 | 0.2989 | 0.2339 | 0.2334 | 0.2216 | 0.2332 | 0.2326 | 0.2330 | 0.2326 | 0.2208 |

| poi | US-PONR | SOMO | MWMOTE | SMOTE_IPF | SMOTE_RSB | SMOTE_FRST_2T | SMOTE | SMOTE_TomekLinks | Borderline_SMOTE2 | ADASYN | MSMOTE | Gaussian_SMOTE | CCR |

| 10% noise | 0.0084 | 0.0963 | 0.0681 | 0.0572 | 0.0920 | 0.0639 | 0.0630 | 0.0551 | 0.0690 | 0.0632 | 0.0646 | 0.0595 | 0.0608 |

| 20% noise | 0.0284 | 0.1927 | 0.1414 | 0.1338 | 0.1921 | 0.1457 | 0.1385 | 0.1219 | 0.1432 | 0.1424 | 0.1379 | 0.1374 | 0.1295 |

| 30% noise | 0.0718 | 0.2898 | 0.2295 | 0.2167 | 0.2895 | 0.2492 | 0.2359 | 0.2143 | 0.2242 | 0.2306 | 0.2363 | 0.2329 | 0.2192 |

| synapse | US-PONR | SOMO | MWMOTE | SMOTE_IPF | SMOTE_RSB | SMOTE_FRST_2T | SMOTE | SMOTE_TomekLinks | Borderline_SMOTE2 | ADASYN | MSMOTE | Gaussian_SMOTE | CCR |

| 10% noise | 0.0263 | 0.0988 | 0.0715 | 0.0716 | 0.0938 | 0.0704 | 0.0722 | 0.0556 | 0.0718 | 0.0714 | 0.0727 | 0.0721 | 0.0756 |

| 20% noise | 0.0614 | 0.1954 | 0.1551 | 0.1526 | 0.1715 | 0.1539 | 0.1548 | 0.1375 | 0.1539 | 0.1547 | 0.1550 | 0.1540 | 0.1473 |

| 30% noise | 0.1148 | 0.2930 | 0.2505 | 0.2497 | 0.2704 | 0.2506 | 0.2493 | 0.2222 | 0.2503 | 0.2507 | 0.2498 | 0.2502 | 0.2838 |

| velocity | US-PONR | SOMO | MWMOTE | SMOTE_IPF | SMOTE_RSB | SMOTE_FRST_2T | SMOTE | SMOTE_TomekLinks | Borderline_SMOTE2 | ADASYN | MSMOTE | Gaussian_SMOTE | CCR |

| 10% noise | 0.0365 | 0.0964 | 0.0790 | 0.0791 | 0.0948 | 0.0784 | 0.0775 | 0.0662 | 0.0790 | 0.0791 | 0.0794 | 0.0775 | 0.0695 |

| 20% noise | 0.0885 | 0.1891 | 0.1685 | 0.1614 | 0.1927 | 0.1693 | 0.1694 | 0.1465 | 0.1669 | 0.1719 | 0.1642 | 0.1642 | 0.1925 |

| 30% noise | 0.1460 | 0.2933 | 0.2649 | 0.2595 | 0.2915 | 0.2743 | 0.2669 | 0.2470 | 0.2645 | 0.2665 | 0.2665 | 0.2627 | 0.3000 |

| xerces | US-PONR | SOMO | MWMOTE | SMOTE_IPF | SMOTE_RSB | SMOTE_FRST_2T | SMOTE | SMOTE_TomekLinks | Borderline_SMOTE2 | ADASYN | MSMOTE | Gaussian_SMOTE | CCR |

| 10% noise | 0.0134 | 0.0818 | 0.0649 | 0.0641 | 0.0924 | 0.0718 | 0.0643 | 0.0435 | 0.0645 | 0.0640 | 0.0647 | 0.0648 | 0.0634 |

| 20% noise | 0.0367 | 0.1670 | 0.1437 | 0.1409 | 0.1878 | 0.1641 | 0.1419 | 0.1062 | 0.1418 | 0.1420 | 0.1418 | 0.1417 | 0.1412 |

| 30% noise | 0.0718 | 0.2553 | 0.2357 | 0.2340 | 0.2724 | 0.2654 | 0.2349 | 0.1903 | 0.2356 | 0.2354 | 0.2352 | 0.2355 | 0.2222 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shi, H.; Ai, J.; Liu, J.; Xu, J. Improving Software Defect Prediction in Noisy Imbalanced Datasets. Appl. Sci. 2023, 13, 10466. https://doi.org/10.3390/app131810466