Towards Feasible Capsule Network for Vision Tasks

Abstract

1. Introduction

- We explore deeper architectures to unlock the capabilities of CapsNets;

- By leveraging the strengths of various backbone models, we propose a capsule head wrapping (CapsHead) approach and carefully experiment with modifications to the capsule head and routing mechanism;

- We aim to enhance the expressivity and performance of CapsHead in tasks such as classification, medical image segmentation, and semantic segmentation.

2. Related Works

3. Methods

3.1. Preliminaries

1. Dynamic routing [14]: The agreement between the prediction vector $\hat{\mathbf{u}}_{j|i}$ of child capsule $i$ and the output $\mathbf{v}_j$ of parent capsule $j$ is measured by their scalar product. The routing logits $b_{ij}$ and coupling coefficients $c_{ij}$ are updated as follows:

   $$b_{ij} \leftarrow b_{ij} + \hat{\mathbf{u}}_{j|i} \cdot \mathbf{v}_j, \qquad c_{ij} = \frac{\exp(b_{ij})}{\sum_{k} \exp(b_{ik})}.$$

2. EM routing [28]: The coupling coefficients are estimated as mixture coefficients with the expectation–maximization (EM) algorithm. This relies on the clustering assumption that the votes for a parent capsule are distributed around that parent capsule.

3. Max–min routing [50]: SoftMax limits the range of the routing coefficients and tends to produce mostly uniform probabilities. Zhao et al. [50] therefore replace it with max–min normalization, which rescales the routing logits by their minimum and maximum. This makes the normalization invariant to the scale of the logits.

4. Fuzzy routing [21]: To reduce the computational complexity of EM routing, Vu et al. [21] introduce a routing method based on fuzzy clustering, in which the coupling between capsules is represented by fuzzy coefficients. This approach is a more efficient alternative to EM routing: it lowers the computational cost while still enabling effective information flow between capsules.
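Dynamic routing and max–min routing differ mainly in how the routing logits are turned into coupling coefficients. The NumPy sketch below illustrates this difference and is not the authors' implementation. It runs the routing-by-agreement loop of [14] with a pluggable normalizer. The names `route`, `softmax_over_parents`, and `max_min_over_parents` are hypothetical. Three details of the max–min normalizer are our assumptions rather than details taken from [50]: it normalizes over the parent capsules, it maps to [0, 1], and it falls back to equal coefficients when all logits of a capsule are equal (as at initialization).

```python
import numpy as np


def squash(s, axis=-1, eps=1e-8):
    """Squash nonlinearity of [14]: keeps the direction of s, maps its norm into [0, 1)."""
    sq_norm = np.sum(s * s, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)


def softmax_over_parents(b):
    """Dynamic routing: c_ij = exp(b_ij) / sum_k exp(b_ik), so each row sums to 1."""
    e = np.exp(b - b.max(axis=1, keepdims=True))  # subtract max for numerical stability
    return e / e.sum(axis=1, keepdims=True)


def max_min_over_parents(b, eps=1e-8):
    """Max-min variant (assumed form): rescale each child's logits to [0, 1].

    Rows whose logits are all equal (e.g. the all-zero initialization) have no
    range to rescale, so we assume equal coefficients of 1 for them.
    """
    b_min = b.min(axis=1, keepdims=True)
    b_range = b.max(axis=1, keepdims=True) - b_min
    scaled = (b - b_min) / np.maximum(b_range, eps)
    return np.where(b_range > eps, scaled, 1.0)


def route(u_hat, num_iters=3, normalize=softmax_over_parents):
    """Routing-by-agreement loop.

    u_hat: prediction vectors u_hat[i, j] of child i for parent j,
           shape (num_children, num_parents, pose_dim).
    Returns parent outputs v (num_parents, pose_dim) and the last couplings c.
    """
    b = np.zeros(u_hat.shape[:2])                  # routing logits b_ij
    for _ in range(num_iters):
        c = normalize(b)                           # coupling coefficients c_ij
        s = np.einsum("ij,ijd->jd", c, u_hat)      # s_j = sum_i c_ij * u_hat_j|i
        v = squash(s)                              # parent capsule outputs v_j
        b = b + np.einsum("ijd,jd->ij", u_hat, v)  # agreement: u_hat_j|i . v_j
    return v, c
```

With the SoftMax normalizer, each row of `c` sums to 1. With the max–min normalizer, the coefficients lie in [0, 1] without that constraint, so a child capsule can couple strongly to several parents at once.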

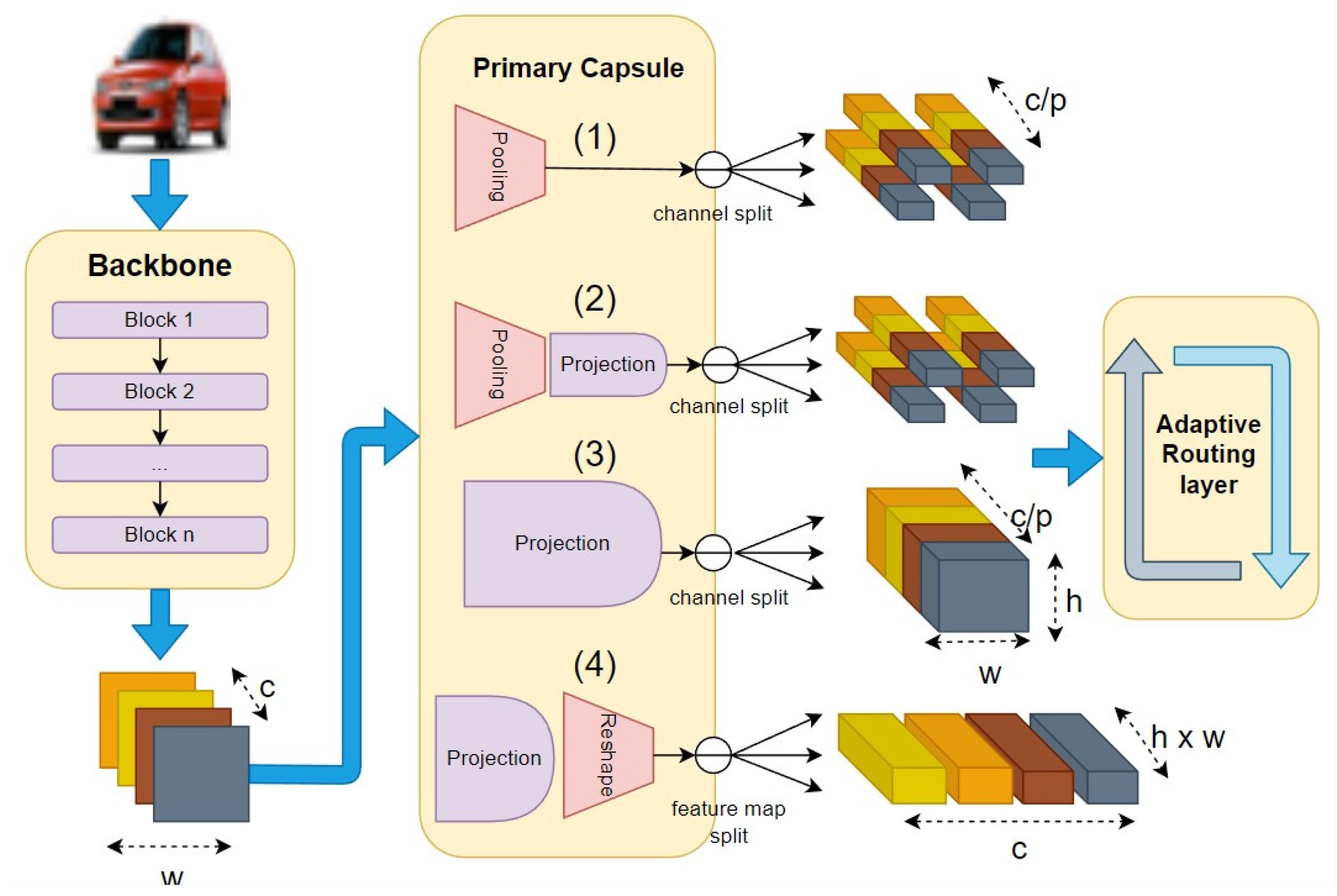

3.2. Hybrid-Architecture Capsule Head

- (1) In the first design, we add adaptive average pooling to reduce the spatial dimensions of the backbone feature maps, followed by a fully connected capsule (FCCaps) layer. This configuration transforms backbone features into capsules through the primary capsule layer and then routes them through the FCCaps layer.

- (2) In the second design, we again apply average pooling after backbone feature extraction. This is followed by a projection that increases the capacity of the embedding space. The projected features are then split along the channel dimension to form capsules, and routing is applied to these capsules to obtain the next-layer capsules.

- (3) In the third design, we remove the average pooling but keep the projection and channel splitting. Without pooling, the routing layer can capture spatial information, which makes this design well suited to segmentation tasks. For classification tasks, we additionally apply capsule pooling, which reduces the number of class capsules to the desired target.

- (4) Finally, the fourth design splits the feature maps directly, then applies projection and adaptive capsule routing. This allows a more adaptive and flexible routing mechanism based on the spatial characteristics of the feature maps.
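The NumPy sketch below tracks tensor shapes to make the difference between designs (2) and (3) concrete. It is a simplified illustration under stated assumptions, not the authors' code. The projection is a single hypothetical linear map `proj`, since the text only says that a projection is used. Capsules are formed by reshaping the projected channel dimension into consecutive groups of `caps_dim`. Routing and capsule pooling are omitted. The function name `primary_capsules` is hypothetical.

```python
import numpy as np


def primary_capsules(features, proj, caps_dim, spatial_pool):
    """Form primary capsules from a backbone feature map (designs (2) and (3)).

    features:     backbone output of shape (C, H, W)
    proj:         hypothetical linear projection of shape (C, P)
    caps_dim:     capsule pose size; P must be divisible by it
    spatial_pool: True  -> design (2), global average pooling before projection
                  False -> design (3), project every spatial location separately
    Returns an array of shape (num_capsules, caps_dim).
    """
    c, h, w = features.shape
    if proj.shape[1] % caps_dim != 0:
        raise ValueError("projected width must be divisible by caps_dim")
    if spatial_pool:
        x = features.mean(axis=(1, 2))[None, :]  # (1, C): one pooled vector
    else:
        x = features.reshape(c, h * w).T         # (H*W, C): one vector per pixel
    z = x @ proj                                 # projection: (locations, P)
    return z.reshape(-1, caps_dim)               # split channels into capsules
```

Consider a 512 × 4 × 4 feature map projected to 256 channels with 16-dimensional capsules. Design (2) yields 16 capsules. Design (3) yields 16 capsules at each of the 16 locations, 256 in total. This is why design (3) retains spatial information and needs capsule pooling for classification.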

- Firstly, we consider whether the feature maps are taken from the backbone before or after its pooling layer. After the pooling layer, the features are one-dimensional: high-level features condensed into a single vector that can be used directly for linear evaluation and analysis. Before the pooling layer, the feature maps are two-dimensional and retain rich contextual information. This is particularly beneficial for interpreting the entire model or visualizing the learned features.

- The second criterion concerns the interpretation of capsules, which can be seen as encapsulating either channels or feature maps. In the channel-based interpretation, a capsule is the pose vector at a single pixel location, with the channel dimension serving as the capsule pose. The total number of capsules therefore equals the number of pixel locations. In the feature-map-based interpretation, each feature map constitutes a capsule, and we use adaptive average pooling to obtain the desired pose dimension. In this case, the number of channels equals the number of capsules.

- Lastly, we consider how feature vectors are mapped to the primary capsule space. We can either use the feature space spanned by the backbone model directly, or add a non-linear projection head that maps the feature vectors to the primary capsule space. The projection head allows a representation better tailored to the capsules. In this study, the projection head is a multi-layer perceptron with two to three layers and non-linear activation functions such as ReLU.
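The two capsule interpretations and the optional projection head can be sketched in NumPy as follows. This is an illustration under our own assumptions, not the authors' implementation. In the feature-map-based view, we assume each H × W map is flattened and reduced to the pose dimension with 1-D adaptive average pooling, using the same bin edges as PyTorch's adaptive pooling. The projection head is a plain MLP given as lists of weight matrices and biases. All function names are hypothetical.

```python
import numpy as np


def channel_capsules(fmap):
    """Channel-based view: one capsule per pixel location, pose = the C channel values.

    (C, H, W) -> (H*W, C)
    """
    c, h, w = fmap.shape
    return fmap.reshape(c, h * w).T


def adaptive_avg_pool1d(x, out_len):
    """Average-pool the last axis into out_len bins with edges
    [floor(i * L / out_len), ceil((i + 1) * L / out_len))."""
    n = x.shape[-1]
    out = np.empty(x.shape[:-1] + (out_len,))
    for i in range(out_len):
        start = (i * n) // out_len
        end = -((-(i + 1) * n) // out_len)  # ceiling division
        out[..., i] = x[..., start:end].mean(axis=-1)
    return out


def feature_map_capsules(fmap, pose_dim):
    """Feature-map-based view (assumed pooling): each of the C maps becomes one
    capsule whose pose is its flattened H*W values pooled to pose_dim.

    (C, H, W) -> (C, pose_dim)
    """
    c, h, w = fmap.shape
    return adaptive_avg_pool1d(fmap.reshape(c, h * w), pose_dim)


def projection_head(x, weights, biases):
    """MLP projection head: ReLU between layers, linear final layer."""
    for i, (wt, b) in enumerate(zip(weights, biases)):
        x = x @ wt + b
        if i < len(weights) - 1:
            x = np.maximum(x, 0.0)  # ReLU on hidden layers only
    return x
```

For a (C, H, W) feature map, the channel-based view gives H × W capsules of size C. The feature-map-based view gives C capsules of size `pose_dim`. Either set of capsules can be passed through `projection_head` before routing.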

4. Experiments

4.1. Dataset

- CIFAR10: CIFAR10 is an image classification dataset containing a total of 60,000 images in 10 classes, with 6000 images per class. Each image is a 32 × 32 color image. The dataset is a widely used benchmark for evaluating image classification algorithms.

- CIFAR100: CIFAR100 extends the CIFAR10 setting with finer-grained labels. It comprises a total of 60,000 images across 100 classes, with 600 images per class. This makes it a more challenging benchmark for fine-grained image classification.

- LungCT-Scan: The LungCT-Scan dataset is designed specifically for lung image analysis in medical imaging research. It consists of computed tomography (CT) scan images of the lungs and is intended for image segmentation. We used images for training and images for validation.

- VOC-2012: VOC-2012, from the PASCAL VOC challenge, is a benchmark dataset for object detection, segmentation, and classification. It consists of approximately images in total. Its annotations include object bounding boxes and pixel-level segmentation masks for various object categories. In this study, we use images for training and images for validation.

4.2. Configurations

4.3. Results

4.3.1. Linear Evaluation of the Classification Task

4.3.2. Performance on Segmentation Task

4.3.3. Pretrained and Fine-Tuned Evaluation

- CapsNet with a pre-trained backbone: The pre-trained model serves as the starting point, and we subsequently fine-tune the entire network, including the CapsHead.

- CapsNet without a pre-trained backbone: The entire network, including the capsule structures, is trained from scratch on the target downstream task.

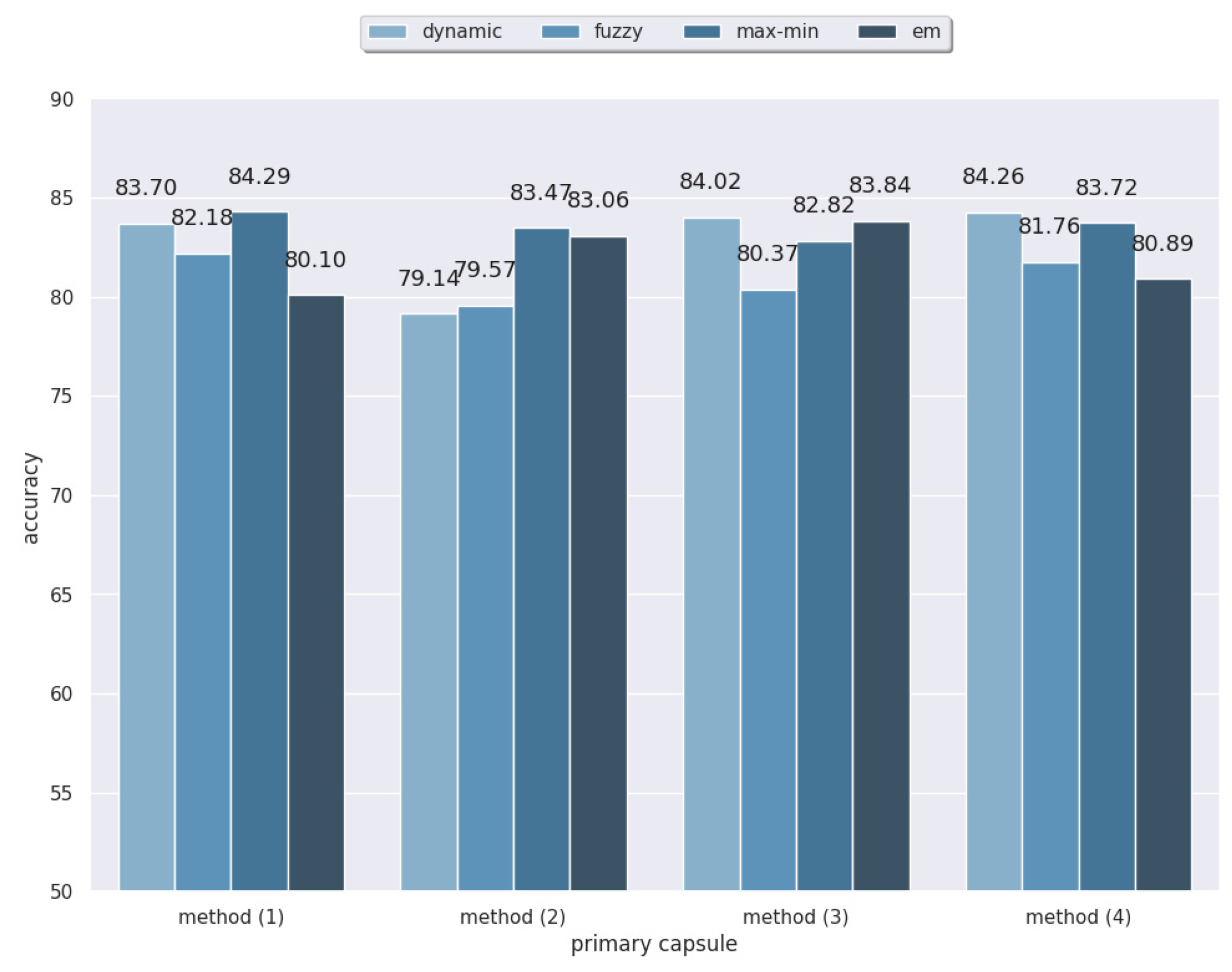

4.3.4. Ablation Study

4.3.5. Limitation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN(s) | Convolutional neural network(s) |
| CapsNet(s) | Capsule network(s) |
| CapsHead(s) | Capsule head(s) |

References

1. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

2. Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

3. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

4. Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

5. Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

6. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019.

7. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

8. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

9. Dang, W.; Xiang, L.; Liu, S.; Yang, B.; Liu, M.; Yin, Z.; Yin, L.; Zheng, W. A Feature Matching Method based on the Convolutional Neural Network. J. Imaging Sci. Technol. 2023, 67, 030402.

10. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2017.

11. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

12. Ruderman, A.; Rabinowitz, N.C.; Morcos, A.S.; Zoran, D. Pooling is neither necessary nor sufficient for appropriate deformation stability in CNNs. arXiv 2018, arXiv:1804.04438.

13. Hinton, G.E.; Krizhevsky, A.; Wang, S.D. Transforming auto-encoders. In Proceedings of the Artificial Neural Networks and Machine Learning–ICANN 2011: 21st International Conference on Artificial Neural Networks, Espoo, Finland, 14–17 June 2011.

14. Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2017.

15. Kulkarni, T.D.; Whitney, W.; Kohli, P.; Tenenbaum, J.B. Deep convolutional inverse graphics network. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2015.

16. Hahn, T.; Pyeon, M.; Kim, G. Self-routing capsule networks. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2019.

17. Marchisio, A.; Nanfa, G.; Khalid, F.; Hanif, M.A.; Martina, M.; Shafique, M. CapsAttacks: Robust and imperceptible adversarial attacks on capsule networks. arXiv 2019, arXiv:1901.09878.

18. Nguyen, H.H.; Yamagishi, J.; Echizen, I. Capsule-forensics: Using Capsule Networks to Detect Forged Images and Videos. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 2307–2311.

19. Shahroudnejad, A.; Afshar, P.; Plataniotis, K.N. Improved Explainability of Capsule Networks: Relevance Path by Agreement. arXiv 2018, arXiv:1802.10204.

20. Deliège, A.; Cioppa, A.; Droogenbroeck, M.V. HitNet: A neural network with capsules embedded in a Hit-or-Miss layer, extended with hybrid data augmentation and ghost capsules. arXiv 2018, arXiv:1806.06519.

21. Dang, T.V.; Vo, H.T.; Yu, G.H.; Kim, J.Y. Capsule network with shortcut routing. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2021, 8, 1043–1050.

22. Mazzia, V.; Salvetti, F.; Chiaberge, M. Efficient-CapsNet: Capsule network with self-attention routing. Sci. Rep. 2021, 11, 14634.

23. Xi, E.; Bing, S.; Jin, Y. Capsule Network Performance on Complex Data. arXiv 2017, arXiv:1712.03480.

24. Li, H.; Guo, X.; Dai, B.; Ouyang, W.; Wang, X. Neural network encapsulation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

25. Nair, P.; Doshi, R.; Keselj, S. Pushing the Limits of Capsule Networks. arXiv 2021, arXiv:2103.08074.

26. Xiong, Y.; Su, G.; Ye, S.; Sun, Y.; Sun, Y. Deeper capsule network for complex data. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019.

27. Patrick, M.K.; Adekoya, A.F.; Mighty, A.A.; Edward, B.Y. Capsule Networks – A survey. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 1295–1310.

28. Hinton, G.E.; Sabour, S.; Frosst, N. Matrix capsules with EM routing. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

29. Yang, Z.; Wang, X. Reducing the dilution: An analysis of the information sensitiveness of capsule network with a practical improvement method. arXiv 2019, arXiv:1903.10588.

30. O'Neill, J. Siamese Capsule Networks. arXiv 2018, arXiv:1805.07242.

31. Xiang, C.; Zhang, L.; Tang, Y.; Zou, W.; Xu, C. MS-CapsNet: A Novel Multi-Scale Capsule Network. IEEE Signal Process. Lett. 2018, 25, 1850–1854.

32. Chen, Z.; Crandall, D. Generalized Capsule Networks with Trainable Routing Procedure. arXiv 2018, arXiv:1808.08692.

33. Jiménez-Sánchez, A.; Albarqouni, S.; Mateus, D. Capsule Networks against Medical Imaging Data Challenges. arXiv 2018, arXiv:1807.07559.

34. Phaye, S.S.R.; Sikka, A.; Dhall, A.; Bathula, D. Dense and Diverse Capsule Networks: Making the Capsules Learn Better. arXiv 2018, arXiv:1805.04001.

35. Jia, B.; Huang, Q. DE-CapsNet: A diverse enhanced capsule network with disperse dynamic routing. Appl. Sci. 2020, 10, 884.

36. Gugglberger, J.; Peer, D.; Rodríguez-Sánchez, A. Training Deep Capsule Networks with Residual Connections. arXiv 2021, arXiv:2104.07393.

37. Mandal, B.; Sarkhel, R.; Ghosh, S.; Das, N.; Nasipuri, M. Two-phase Dynamic Routing for Micro and Macro-level Equivariance in Multi-Column Capsule Networks. Pattern Recognit. 2021, 109, 107595.

38. Mobiny, A.; Nguyen, H.V. Fast CapsNet for Lung Cancer Screening. arXiv 2018, arXiv:1806.07416.

39. Amer, M.; Maul, T. Path Capsule Networks. Neural Process. Lett. 2020, 52, 545–559.

40. Kosiorek, A.R.; Sabour, S.; Teh, Y.W.; Hinton, G.E. Stacked Capsule Autoencoders. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2019.

41. Wang, J.; Guo, S.; Huang, R.; Li, L.; Zhang, X.; Jiao, L. Dual-Channel Capsule Generation Adversarial Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–16.

42. Zhang, W.; Tang, P.; Zhao, L. Remote Sensing Image Scene Classification Using CNN-CapsNet. Remote Sens. 2019, 11, 494.

43. Duarte, K.; Rawat, Y.; Shah, M. VideoCapsuleNet: A Simplified Network for Action Detection. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2018.

44. Saqur, R.; Vivona, S. CapsGAN: Using Dynamic Routing for Generative Adversarial Networks. arXiv 2018, arXiv:1806.03968.

45. Jaiswal, A.; AbdAlmageed, W.; Wu, Y.; Natarajan, P. CapsuleGAN: Generative Adversarial Capsule Network. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

46. LaLonde, R.; Bagci, U. Capsules for object segmentation. arXiv 2018, arXiv:1804.04241.

47. Afshar, P.; Mohammadi, A.; Plataniotis, K.N. Brain tumor type classification via capsule networks. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018.

48. Tsai, Y.-H.H.; Srivastava, N.; Goh, H.; Salakhutdinov, R. Capsules with Inverted Dot-Product Attention Routing. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

49. Zhang, S.; Zhou, Q.; Wu, X. Fast dynamic routing based on weighted kernel density estimation. In Proceedings of the International Symposium on Artificial Intelligence and Robotics, Nanjing, China, 24–25 November 2018.

50. Zhao, Z.; Kleinhans, A.; Sandhu, G.; Patel, I.; Unnikrishnan, K.P. Capsule Networks with Max-Min Normalization. arXiv 2019, arXiv:1903.09662.

51. Bahadori, M.T. Spectral Capsule Networks. In Proceedings of the International Conference on Learning Representations Workshops, Vancouver, BC, Canada, 30 April–3 May 2018.

52. Rajasegaran, J.; Jayasundara, V.; Jayasekara, S.; Jayasekara, H.; Seneviratne, S.; Rodrigo, R. DeepCaps: Going Deeper with Capsule Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

53. Zhang, L.; Edraki, M.; Qi, G.-J. CapProNet: Deep Feature Learning via Orthogonal Projections onto Capsule Subspaces. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2018.

54. Lenssen, J.E.; Fey, M.; Libuschewski, P. Group Equivariant Capsule Networks. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2018.

| Dataset | Classifier | Settings | Accuracy (%) |
|---|---|---|---|
| CIFAR10 | SVM | | 89.2 |
| | FC | 2 hidden layers | 89.1 |
| | FC | 1 layer | 87.97 |
| | CapsHead | Max–Min, setting (3) | 89.18 |
| CIFAR100 | SVM | | 68.02 |
| | FC | 2 hidden layers | 66.82 |
| | FC | 1 layer | 66.43 |
| | CapsHead | Dynamic, setting (3) | 69.47 |

| Dataset | Backbone | Head | Dice score |
|---|---|---|---|
| LungCT-Scan | FCN | CNN | 96.06 |
| | DeepLab | CNN | 97.69 |
| | FCN | CapsHead | 97.59 |
| | DeepLab | CapsHead | 97.70 |
| VOC-2012 | FCN | CNN | 80.24 |
| | DeepLab | CNN | 80.27 |
| | FCN | CapsHead | 86.58 |
| | DeepLab | CapsHead | 86.11 |

| Dataset | Backbone | Scratch | With Pre-Trained |
|---|---|---|---|
| CIFAR10 | ResNet18 | 83.7 | 94.08 |
| | DenseNet | 86.03 | 94.97 |
| CIFAR100 | ResNet18 | 54.29 | 73.03 |
| | DenseNet | 55.08 | 79.91 |

| Tuning | Value | Accuracy (%) | Params of Capsule Head |
|---|---|---|---|
| Primary Capsule | (1) | 83.7 | 700 K |
| | (2), 2 hidden layers | 83.21 | 1.5 M |
| | (3), 2 hidden layers | 84.02 | 5.6 M |
| | (4), 2 hidden layers | 84.26 | 1 M |
| Routing Method | Dynamic | 83.7 | |
| | Max–Min | 80.64 | |
| | EM | 80.1 | |
| | Fuzzy | 82.18 | |

| Dataset | Study | Accuracy (%) |
|---|---|---|
| CIFAR10 | HitNet [20] | 73.3 |
| | Two-phase routing [37] | 75.82 |
| | KDE Routing [49] | 84.6 |
| | DCNET++ [34] | 89.32 |
| | Self-Routing [16] | 92.14 |
| | DeepCaps [52] | 92.74 |
| | DE-CapsNet [35] | 92.96 |
| | Encapsulation [24] | 95.45 |
| | CapsHead (ours) | 94.97 |
| CIFAR100 | Encapsulation [24] | 73.33 |
| | CapsHead (ours) | 79.91 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vu, D.T.; An, L.B.T.; Kim, J.Y.; Yu, G.H. Towards Feasible Capsule Network for Vision Tasks. Appl. Sci. 2023, 13, 10339. https://doi.org/10.3390/app131810339