Abstract

When a robotic manipulator interacts with its environment, the end-effector forces need to be measured to assess if a task has been completed successfully and for safety reasons. Traditionally, these forces are either measured directly by a 6-dimensional (6D) force–torque sensor (mounted on a robot’s wrist) or obtained by estimation methods based on observers, which require knowledge of the robot’s exact model. Contrary to this, the proposed approach is based on using an array of low-cost 1-dimensional (1D) strain gauge sensors mounted beneath the robot’s base in conjunction with time series neural networks, to estimate both the end-effector 3-dimensional (3D) interaction forces and the robot joint torques. The method does not require knowledge of robot dynamics. For comparison, the same approach was also applied to measurements from a 6D force–torque sensor mounted beneath the robot’s base. The trained networks showed reasonably good performance, using the long short-term memory (LSTM) architecture, with a root mean squared error (RMSE) of 1.945 N (vs. 2.004 N; 6D force–torque sensor-based) for end-effector force estimation and 3.006 Nm (vs. 3.043 Nm; 6D force–torque sensor-based) for robot joint torque estimation. The obtained results for an array of 1D strain gauges were comparable with those obtained with a robot’s built-in sensor, demonstrating the validity of the proposed approach.

1. Introduction

Over the past few decades, robots have become extremely prevalent and can be found in various sectors, including automated industrial processes and beyond. As a result, the robotics industry has experienced rapid growth and has become a popular field of research, constantly developing new and innovative robotic applications. Recent reports have emphasised the significance of this industry, estimating the global robotics market’s value to be nearly 28 billion dollars in 2020 [1]. Furthermore, forecasts predict that the market value will reach approximately 74 billion dollars by 2026, largely driven by the high demand for robots in industries such as automotive and logistics. This increased interest in robots of all types can be attributed to advancements in automation and robotics, which have made it possible to utilise them in demanding applications while simultaneously reducing operating costs.

A series of pre-programmed trajectories are often repeatedly executed by robotic manipulators in a so-called position control scheme. However, this control scheme is ineffective in circumstances where knowledge of the robotic manipulator’s configuration does not assure accurate task completion and in situations where a robot is interacting dynamically with its environment. For such situations, force control, also known as interaction control, is required [2]. In such cases, force–torque sensors are used to measure interaction forces and torques in order to interpret the interaction [3,4]. Additionally, force control is frequently appropriate when the robot collaborates with or interacts with a human. Force control, however, is not advantageous when the robot is moving through open space (i.e., there is no environmental interaction). Therefore, a mix of force and position control is often employed. A force–torque sensor is mounted at the robot’s tip in the majority of contemporary applications, i.e., in the wrist of the robotic arm. Measuring interaction forces using standard 6-dimensional (6D) force–torque sensors, however, can occasionally be difficult, particularly when using robots with small payloads and when cost-effectiveness is of high importance.

Since the workspace of the robotic manipulator is constrained, it can be expanded by mounting the manipulator on a mobile platform. The term mobile manipulator applies to this type of robot [5,6]. Due to weight restrictions, it is typically convenient to mount small robotic manipulators onto moving platforms. However, the payload of these manipulators is frequently insufficient for a force sensor to fit on the end-effector while still allowing them to carry out their intended action.

In our earlier research, we suggested the techniques [7,8] for estimating forces acting on the robot end-effector by detecting forces with a 6D force–torque sensor mounted beneath the robot base. This approach used neural networks for data-driven implicit modelling of robot dynamics. In order to infer the end-effector forces using a neural network, the method used forces obtained by the base-mounted sensor and robot kinematics data (joint positions, velocities, and accelerations) provided by the robot controller. This strategy has the advantage of not consuming any payload and leaving all payload available for a specific task. This study builds on that approach by extending it so that force–torque sensors can be replaced with a more cost-effective alternative in the form of an array of simple 1-dimensional (1D) strain gauges [9]. Thus, this paper aims to prove that this research is viable in real-world setups for the estimation of end-effector forces and robot joint torques. It is based on the hypothesis that a set of 1D strain gauges positioned off-center beneath the robot base can provide sufficient data to reliably and accurately estimate needed physical quantities.

The rest of the paper is organised as follows. Section 2 reviews the current state of the art and identifies manuscript contributions in relation to it. The preliminary information and experimental setups are presented in Section 3; the obtained results are reported, analysed, and discussed in Section 4. In Section 5, conclusions are drawn and recommendations for subsequent research on the subject are given.

2. Related Work and Contributions

The small available payload in some robots makes it advantageous to try to remove the force sensor from the robot while trying to keep some type of sensor measurement. One way of trying to deal with the issue is to use small weight (and size) force–torque sensors (like https://www.ati-ia.com/products/ft/ft_ModelListing.aspx, accessed on 25 August 2023). However, their small size introduces new challenges in their positioning and mounting on the robot, which, in turn, can result in a weight increase due to the need for an adapter plate and wiring. Using force estimation methods in place of direct measurements made with physical sensors is another way to solve the issue, according to [10]. Estimated forces may also be used to supplement physical measurements, because combining the two provides additional information. An excellent illustration is being able to differentiate between the effects of inertial forces brought on by the acceleration of a heavy tool and contact forces with the environment, as both of these forces would be detected by a force sensor at the robot’s wrist, as presented in [11].

2.1. Neural Networks for Force Estimation in Robotics

Force estimation in robotics was mostly based on force observers in the past [11,12,13,14,15]. With knowledge of the control forces and torques, these estimates are deduced using the measured pose data. The multilayer perceptron (MLP) network [16] and recurrent neural networks [17] are two examples of neural networks that have been incorporated into certain force observer-based methods. Deep neural networks are making a comeback in a variety of fields, including robotics, where they have been used to address a variety of issues, including grasping and control [18,19]. However, force estimation in robotics has not been extensively addressed by deep learning. Still, there are a few interesting studies on the subject.

Neural networks were used in [20] as an extension of the force observer, which needed to have available the precise dynamic model of a manipulator, which is usually known but is unreliable, or inaccurate. The results of the force estimation are, therefore, unsatisfactory in that case. The inverse dynamics of a robotic manipulator could be resolved, according to the authors, using neural networks. The developed neural network model, as was demonstrated in the paper, was more precise than classical force observers. Furthermore, it was simpler to implement since understanding the dynamic model of a robotic manipulator was not required. In [17], a deep neural network-based observer was used to estimate non-contact forces arising from inertia and which interfere with measurements of contact forces. It was shown that in a highly dynamic motion, the proposed neural network outperformed the analytical method based on the identified inertial parameters. A similar motivation was behind the work in [21], where a neural network was used to estimate free space motion robot joint torques. These values were then subtracted from the ones measured by joint motors, and, based on that, tool interaction forces were estimated. The proposed method was able to estimate outside forces within 10% normalised root mean squared error (NRMSE) compared to an external force sensor, outperforming model-based approaches. There are also methods based on neural networks developed with particular applications in mind. For instance, in robotic surgery [22], the force was estimated without force sensors using visual feedback and neural networks. There are also approaches, like the one in [23], which combine visual information with robot state information in order to increase neural network estimation accuracy (to 0.488 N root mean squared error (RMSE)) to the point of outperforming physics-based models.

There have recently been advances made in neural network-based techniques that can learn the inverse dynamic models of robots [24,25]. These techniques incorporate prior knowledge of the system being modelled while simultaneously learning it. For physical systems, such prior knowledge can be formulated through the energy conservation law, which establishes constraints that improve performance. It was emphasised that deep learning had excelled in every area of application, with the exception of physical systems (at the time) [24]. So, the Deep Lagrangian Network (DeLaN), a novel model that made use of system prior knowledge, was constructed. The Euler–Lagrange equation was utilised as the underlying physics prior in order to obtain more precise models and guarantee physical validity, while ensuring that the models can effectively extrapolate to new samples. Multilayer perceptrons sometimes overfit training data, since they are not required to follow the energy conservation law and, therefore, need more training data to generalise correctly in these situations. On the other hand, the Euler–Lagrange equations were applied as a neural network in [24] to achieve more realistic performance. The Lagrangian neural network (LNN), a more versatile technique for learning arbitrary Lagrangians, was introduced in [25]. The paper also gave an overview of physical dynamics models based on neural networks. It was demonstrated that the model created using this method successfully complies with energy conservation: in contrast to standard neural networks, an LNN almost strictly conserves energy.

2.2. Strain Gauges for Force Measurements in Robotics

Simple strain gauges and load cells have found their application in a number of areas including robotics. In [26], four simple 1D 50 N load cells were used to construct force-sensing shoes for the Nao robot. The mechanical construction consisted of two plates between which four load cells were sandwiched, a design similar to that of standard force plates. The sensors were calibrated and were used to measure the centre of pressure and ground reaction forces beneath each foot. Obtained results showed significant improvement in accuracy and precision in both targeted parameters over the robot’s built-in force-sensing resistor system. A similar sandwich-based design with two four-load-cell configurations with an 8 N measurement range was used in [27]. The authors designed a 3-axis force sensor for use with robot-assisted minimally invasive surgery applications in a teleoperation setting. The sensor achieved an average error below 0.15 N in all directions, and an average grip force error of 0.156 N.

Simple load cells can also be used in legged robots for ground reaction force estimation while being embedded in their mechanical construction, as was the case in [28]. In this research, the authors used two 10 kg load cells (TAL220), positioned at fixed angles and rigidly mounted to machine-milled pieces which were part of the robot legs. Measured data were then processed by a neural network which estimated the required 2-dimensional (2D) ground reaction forces. These forces were estimated with an accuracy of above %. Simple strain gauges can also be used for different applications within wheeled mobile robots, like in [29], where authors used several bent sensors to measure 3-dimensional (3D) forces of payload placed on a mobile robot platform. Several different designs were tested in simulation only, and the best one measured payload mass with an accuracy of kg, and lever lengths with an accuracy of m.

More complex sensor structures can be constructed out of simple (single) strain gauge cells, as was carried out in [30], where four strain gauges were used to measure 2D forces and torque. The obtained results demonstrated that force and torque measurements could be decoupled by combining measurements from different strain gauge sets. In their conclusion, the authors state that this type of sensor might be appropriate for robotic applications. Another such example, but of a more complex sensor structure, is presented in [31], where the authors proposed the development of a complex humanoid robot. One part of its sensing and control structure was a 6-axis sensor which made use of load cells mounted on all sides of each spoke, in a three-spoke design. The complex sensor body was machined in order to obtain a linear response in the strain–stress relationship. The sensor performance was not tested in the manuscript.

The closest research to our own (in the hardware part) can be found in [32], where a very similar design (with three load cells and four strain gauges in four-spoke architecture) was used to measure robot base forces/torques with the aim of automated tool-changing application. However, the signal processing part is significantly different and more complex since it is based on an accurate robot model with the crucial step of identification of the dynamic parameters. Based on the model, two custom calibration procedures were developed. Also, the authors stated that the mechanical design used is robot-specific, which is not the case in our approach, where neural networks are robot-specific. The paper demonstrated the successful implementation of the approach in tool-changing tasks but did not provide a numerical comparison between estimated and measured forces.

Based on the initial hypothesis and presented state-of-the-art review, the following contributions of the manuscript can be defined:

- Development of a general and simple method for estimation of end-effector interaction forces for complex real robots using an array of 1D strain gauges and deep neural networks. The method does not require special calibration nor the knowledge of the (exact) robot model.

- Estimation of robot joint torques through the implicit robot model, learned via deep neural networks using the developed method.

- Experimental verification of the proposed approach in comparison to the 6-axis force–torque sensor, through extensive testing.

3. Materials and Methods

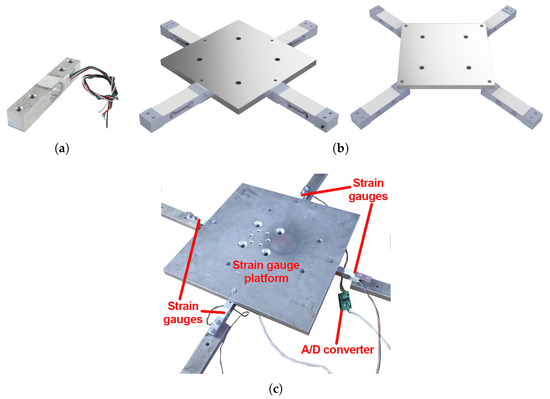

In this paper, we propose an approach in which a platform with four generic 20 kg one-dimensional force sensors based on strain gauges (Figure 1a) is used instead of the 6D force–torque sensor. These one-dimensional force sensors were put into an approximately rectangular configuration, as shown in Figure 1b, on top of which an aluminium plate with a robot mounted on it was placed. Note that strain gauge sensors do not need to be placed in the strictly and precisely rectangular configuration. This is due to neural networks’ ability to learn from data as is. Therefore, the only strict requirement is that once sensors are in place, their placement should not be changed during the experiment. Finally, note that the platform shown in Figure 1c was for presentation purposes and was not used in the actual experiments.

Figure 1.

The conceptual presentation of a base force measurement platform with multiple strain gauges. (a) Strain gauge. (b) Two possible placements of four strain gauges relative to the platform (the right one was used in the experiment). (c) Practical realisation of the left concept from subfigure (b).

Two experiments were performed in this research, and the results of each of them are presented in a separate subsection of the paper. Both experiments were conducted on the described platform and strain gauge measurements were made available by using the HX711 24-bit analog-digital (A/D) converter and Arduino Uno (the same Arduino board was used to acquire data from all of the strain gauge sensors simultaneously). Please note that, during the study, raw A/D converter outputs (i.e., number of levels) were used as neural network inputs, without the need for any additional calibration or data processing.

The experiments consisted of the data collection phase and the neural networks training/testing phase. All relevant data were collected at once (i.e., they were time-stamped for later software synchronisation) and were used both for training neural networks for end-effector force estimation and joint-side torque estimation. Please note that the 6D force–torque sensor had a sampling rate of 100 Hz, while the strain gauge-based subsystem had a sampling rate of 10 Hz.
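The software synchronisation of the two time-stamped streams can be illustrated with a minimal sketch. The paper does not state which alignment rule was used, so the nearest-timestamp matching below is an assumption, as are the function name `align_to_slow_stream` and the dummy signals; the idea is simply that each 10 Hz strain gauge sample is paired with the 100 Hz sample closest to it in time.

```python
import numpy as np

def align_to_slow_stream(t_fast, x_fast, t_slow):
    """For each slow-stream timestamp, pick the fast-stream sample nearest in time.

    t_fast must be sorted in ascending order and contain at least two samples.
    """
    t_fast = np.asarray(t_fast, dtype=float)
    x_fast = np.asarray(x_fast, dtype=float)
    t_slow = np.asarray(t_slow, dtype=float)
    # Index of the first fast timestamp that is >= each slow timestamp.
    right = np.searchsorted(t_fast, t_slow, side="left")
    right = np.clip(right, 1, len(t_fast) - 1)
    left = right - 1
    # Choose whichever of the two neighbours is closer in time.
    use_left = (t_slow - t_fast[left]) <= (t_fast[right] - t_slow)
    idx = np.where(use_left, left, right)
    return x_fast[idx]

# Dummy example: 1 s of a 100 Hz three-axis stream and a 10 Hz stream.
t_ft = np.arange(100) / 100.0
ft = np.column_stack([t_ft * 10.0, -t_ft, np.ones_like(t_ft)])
t_sg = np.arange(10) / 10.0
ft_at_sg = align_to_slow_stream(t_ft, ft, t_sg)
print(ft_at_sg.shape)
```

Linear interpolation between the two neighbouring samples would be an equally plausible choice; nearest-sample matching is used here only because it keeps every aligned value an actually measured one.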

3.1. Data Collection

The conducted experiments were performed using a real-world UR5e, a 6 degrees of freedom (DoF) collaborative robotic manipulator. The robot was mounted on the platform and fixed (bolted) on a flat surface (i.e., aluminium table). Furthermore, in this experiment, along with the proposed force platform based on strain gauges, the 6D force–torque sensor (JR3 model 45E) was used in order to compare the quality of the obtained results using two different force-sensing devices on the same dataset. In all experiments, data obtained from the UR5e robot and its controller (e.g., joint positions, joint velocities, tool forces/torques, joint motor currents) [33] were used as ground truth for the neural network training and are referred to hereafter as measured values. These data are often used as the ground truth for different applications in the literature [4,34].

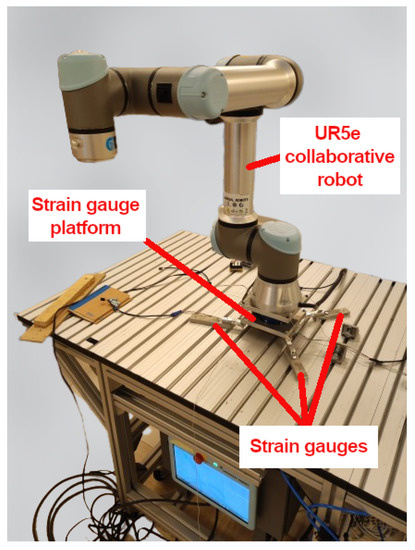

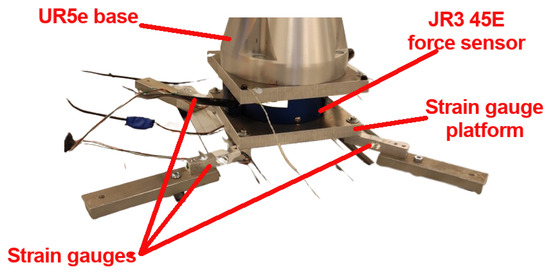

The experimental setup for this experiment is shown in Figure 2, while the close-up view of the force sensing setup is given in Figure 3 (where the aluminium table was removed by image-processing tool, for clarity reasons). As can be seen from the figures, the whole sensor setup has a sandwich-like structure where strain gauges are fixed to a flat aluminium table via small pieces of aluminium blocks on one side (in order to be elevated and, thus, function properly), and to the lower base plate (marked “Strain gauge platform”) on the other side. On top of the lower base plate, the JR3 sensor is mounted with the upper base plate mounted on top of the JR3 sensor. Finally, on top of the upper base plate, the UR5e robot manipulator was securely mounted. All mounting was performed using nuts and bolts of appropriate diameters. It should be noted that, due to individual hardware component dimensions and shapes (6D force–torque sensor and strain gauge platform), this was the only viable way of connecting them. However, this configuration has a disadvantage in that, due to small strain gauge deformations during the measurement, the platform can move slightly, affecting 6D sensor measurement, since its bottom part is connected to it. Thus, this should be kept in mind when interpreting and comparing obtained results. Finally, since the 6D sensor was placed on top of the strain gauge platform, it had an effect on the robot model implicitly learned by the network (i.e., the same network would not work if the sensor was removed and the robot placed directly on the platform). This additional complexity added to neural network learning can, in our view, have only negative effects on accuracy, thus making the obtained results a kind of worst-case scenario for the strain gauge platform.

Figure 2.

Experimental setup with force sensor and strain gauge platform bolted to a flat aluminium table.

Figure 3.

The close-up view of the force sensing setup with UR5e robotic manipulator (without the surface it is bolted to).

The approach to data collection was the same in both experiments and was conducted as follows. The robot was programmed to execute random motions using UR Script and PolyScope software. First, a random valid goal was chosen (via random joint angles for all manipulator joints within the manufacturer-specified angle ranges) and the trajectory from the current robot pose to the goal robot pose was planned and then executed. If the random goal was not a valid one (for example, generating a trajectory that results in self-collision) it was discarded, and a new random one was generated from the start. Additionally, targeted maximal joint speed and acceleration (as the percentage of maximal allowed joint velocity/acceleration) were selected from the safe operational range (i.e., high velocities and accelerations were not selected due to robot and human experimenter safety, and since they are not normally used during human–robot interaction [35]). Note that joint velocities were programmed to finalise their movements all at the same time. Between two successive motions, the robot was at a standstill for a short time, roughly about 1 s. Each of the measuring instances contained multiple trajectories and lasted from 45 to 65 s. In total, 100 measurement instances were executed. With a mean duration of 55 s, this gives a total of 550,000 measurement points for the 6D sensor, and 55,000 for the strain gauge platform.
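The goal selection described above amounts to rejection sampling, which can be sketched as follows. This is only an illustration in Python, not the UR Script program used in the experiments: the joint range of ±2π rad, the function names, and in particular the toy rule inside `is_valid_goal` (a stand-in for a real self-collision check) are all assumptions. The last lines reproduce the dataset size arithmetic from the text.

```python
import numpy as np

# Assumed joint range of +/- 2*pi rad for all six joints; the actual limits
# should be taken from the manufacturer's specification.
JOINT_LIMITS = np.tile([-2 * np.pi, 2 * np.pi], (6, 1))

def is_valid_goal(q):
    """Hypothetical validity check standing in for a self-collision test."""
    # Toy rule only: reject goals in which the third joint is folded too far.
    return abs(q[2]) < 2.8

def sample_valid_goal(rng, max_tries=1000):
    """Draw uniform random joint goals until one passes the validity check."""
    for _ in range(max_tries):
        q = rng.uniform(JOINT_LIMITS[:, 0], JOINT_LIMITS[:, 1])
        if is_valid_goal(q):
            return q  # invalid goals are simply discarded and redrawn
    raise RuntimeError("no valid goal found")

rng = np.random.default_rng(0)
goal = sample_valid_goal(rng)

# Expected dataset size: runs x mean duration (s) x sampling rate (Hz).
n_runs, mean_duration_s = 100, 55
n_force_torque = n_runs * mean_duration_s * 100   # 100 Hz stream
n_strain_gauge = n_runs * mean_duration_s * 10    # 10 Hz stream
```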

Simultaneously, the force was applied to the robot end-effector by the experimenter using hands, both while the robot was in motion and while it was at a standstill. Along with strain gauge readings, data about robot kinematics and dynamics were collected using MATLAB Data Acquisition Toolbox. These data are provided by the robot controller and are joint positions, velocities and accelerations, end-effector forces, and joint motor currents. The last two are of particular importance, since they are used later as ground truths for training neural networks. Note that the UR5e robot controller provides joint motor currents rather than joint-side torques, but this should pose no additional challenges for estimation since the relationship between joint-side torque and current is linear; thus, joint torques are relatively easy to compute, once motor current is measured, via the equation $\tau = r\,k_t\,i$, where $\tau$ is joint-side torque, $i$ is estimated motor current, and $r$ and $k_t$ are gear reduction ratio and motor torque constant, respectively. These parameters are available in the literature [36] and were used in our experiment for the calculation of joint torques using the provided equation.
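The linear current-to-torque conversion (joint-side torque as the product of gear reduction ratio, motor torque constant, and motor current) can be sketched per joint as below. The numerical values of `GEAR_RATIO` and `TORQUE_CONSTANT` are illustrative placeholders, not the parameters from [36], and the function name is hypothetical.

```python
import numpy as np

def joint_torque_from_current(current, gear_ratio, torque_constant):
    """Joint-side torque = gear ratio * motor torque constant * motor current."""
    return (np.asarray(gear_ratio, dtype=float)
            * np.asarray(torque_constant, dtype=float)
            * np.asarray(current, dtype=float))

# Placeholder parameters for a 6-joint arm (assumed values, for illustration only).
GEAR_RATIO = np.full(6, 101.0)                                      # dimensionless
TORQUE_CONSTANT = np.array([0.10, 0.10, 0.10, 0.08, 0.08, 0.08])    # N m / A

currents = np.array([1.0, -2.0, 0.5, 0.0, 0.2, -0.1])               # A
tau = joint_torque_from_current(currents, GEAR_RATIO, TORQUE_CONSTANT)
print(tau)
```

Because the mapping is a fixed per-joint scaling, a network trained on torques obtained this way is equivalent, up to that scaling, to one trained directly on motor currents.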

3.2. Neural Networks

The chosen neural network architecture for training was long short-term memory (LSTM), which, as was shown in our previous research [8], is the most accurate for the task of force estimation compared to MLP and convolutional neural networks (CNNs). However, the exact network hyperparameters were obtained using the hyperparameter optimisation procedure. The hyperparameters that were tuned were the number of LSTM layers and the number of cells per layer, and similarly for fully-connected layers, as well as the activation function used in the fully-connected layers. The possible values for each hyperparameter are summarised in Table 1. For a (naive) brute force grid search approach, this would yield 1728 neural network configurations to explore.

Table 1.

Hyperparameters search space.

The hyperparameter optimisation search space was the same for both experiments, but the optimisation was conducted in each experiment for each neural network separately. For all LSTM neural networks, a sequence length of 10 samples was used, and the networks were trained with the Adam optimiser [37] and mean-squared-error loss function, while the root mean squared error was used as a metric for reporting network performance. The network training was implemented with an early stopping condition (i.e., training was halted when there was no improvement for 10 epochs), which resulted in a reasonably short training time of about 2–3 min. Available data were split in an 80%–20% ratio between training (and validation) and testing. TensorFlow and Keras [38,39] were used for training neural networks and the optimisation was performed using the KerasTuner framework [40] and Hyperband algorithm [41].
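The data preparation implied by these settings can be sketched with NumPy alone. Several details are assumptions rather than facts from the paper: that each window of 10 samples is paired with the target of its last sample, that the 80%–20% split is chronological rather than shuffled, and that 22 input features are used (four strain gauge readings plus positions, velocities, and accelerations of six joints). The function names are hypothetical.

```python
import numpy as np

def make_windows(features, targets, seq_len=10):
    """Build overlapping input windows of seq_len consecutive samples."""
    features = np.asarray(features, dtype=float)
    targets = np.asarray(targets, dtype=float)
    n_windows = len(features) - seq_len + 1
    # Window k covers samples k .. k + seq_len - 1 and is paired with the
    # target of its last sample (an assumed convention).
    X = np.stack([features[k:k + seq_len] for k in range(n_windows)])
    y = targets[seq_len - 1:]
    return X, y

def split_train_test(X, y, train_fraction=0.8):
    """Chronological split without shuffling (assumed)."""
    n_train = int(round(train_fraction * len(X)))
    return (X[:n_train], y[:n_train]), (X[n_train:], y[n_train:])

def rmse(y_true, y_pred):
    """Root mean squared error over all samples and output channels."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))

# Dummy data: 109 time steps, 22 input features, 3 force components.
rng = np.random.default_rng(0)
features = rng.normal(size=(109, 22))
targets = rng.normal(size=(109, 3))
X, y = make_windows(features, targets)
(train_X, train_y), (test_X, test_y) = split_train_test(X, y)
print(X.shape, train_X.shape, test_X.shape)
```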

4. Results and Discussion

4.1. End-Effector Force Estimation

The first experiment was performed in order to train neural networks and directly compare the performance of the proposed system using strain gauges with the performance of the system when a 6-axis force sensor is used.

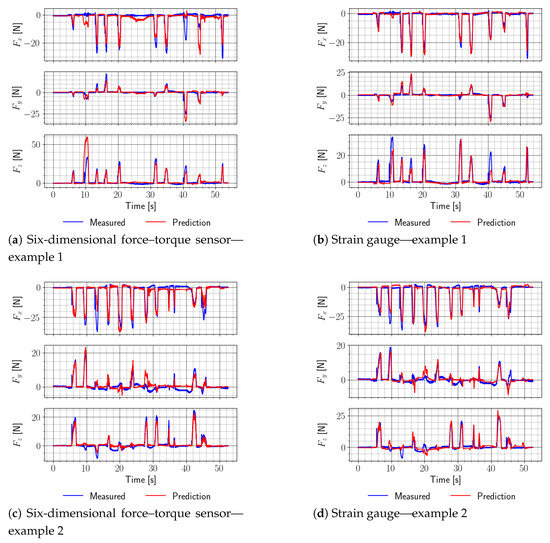

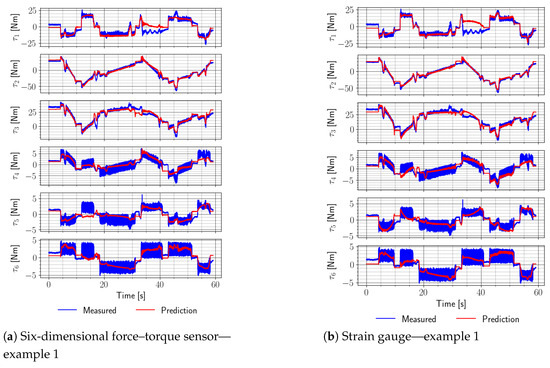

The performance of the two systems appears to be similar, since the validation and test set root mean squared error (RMSE) values are close. The values are summarised in Table 2 and show that the system with strain gauges performs marginally better than the one with the force sensor. This is somewhat surprising, given the difference in price/accuracy between the 6-axis force–torque sensor and our custom-built platform. However, the results might be explained by the earlier note about the mounting configuration (small movements of the strain gauge platform can affect the 6D sensor measurements). Nevertheless, it demonstrates the validity of the proposed strain gauge-based approach. Showcase examples of the obtained force estimates using both methods are shown in Figure 4 for randomly chosen measurement runs. From the figures, it can be concluded that the performance is also visually similar, i.e., both approaches follow the reference force reasonably well.

Table 2.

Trained neural networks performance for end-effector force estimation.

Figure 4.

Examples of the end-effector force estimation performance of the two systems using a real-world UR5e robotic manipulator.

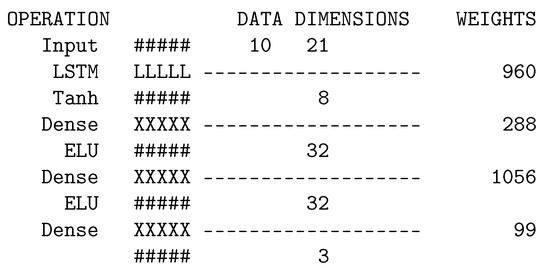

The LSTM networks obtained with hyperparameter optimisation were architecturally similar: both had a single LSTM layer and two fully connected layers but differed in the number of units per layer. The network trained with the 6D force–torque sensor data had an LSTM layer with just 8 processing units, followed by two fully connected layers with 32 units each, as shown in Figure 5. On the other hand, the network trained with the strain gauge data had an LSTM layer with 24 units, followed by two fully connected layers with 16 units each, as shown in Figure 6. This suggests that, in sheer numbers (and interpreted naively), the neural network for strain gauge-based measurements had 16 units fewer than its force-based counterpart (56 versus 72). Both networks used the exponential linear unit (ELU) as an activation function.

Figure 5.

Neural network for end-effector force estimation based on force sensor.

Figure 6.

Neural network for end-effector force estimation based on multiple strain gauge sensors.
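For orientation, the two reported architectures can be written down as a Keras sketch. The layer sizes, ELU activation, sequence length, Adam optimiser, and mean-squared-error loss come from the text; the additional linear output layer with three units (one per force component), the number of input features, and the names `ARCHITECTURES`, `total_units`, and `build_model` are assumptions made for illustration.

```python
# Layer sizes reported for the two end-effector force estimation networks.
ARCHITECTURES = {
    "force_torque_sensor": {"lstm_units": 8, "dense_units": (32, 32)},
    "strain_gauges": {"lstm_units": 24, "dense_units": (16, 16)},
}

def total_units(arch):
    """Naive size measure: LSTM units plus fully connected units."""
    return arch["lstm_units"] + sum(arch["dense_units"])

def build_model(arch, n_features, n_outputs=3, seq_len=10):
    """Build and compile a Keras model for the given layer sizes."""
    # Imported lazily so that total_units() can be used without TensorFlow.
    from tensorflow import keras

    layers = [
        keras.Input(shape=(seq_len, n_features)),
        keras.layers.LSTM(arch["lstm_units"]),
    ]
    layers += [keras.layers.Dense(u, activation="elu") for u in arch["dense_units"]]
    # Assumed linear output layer, one unit per estimated force component.
    layers.append(keras.layers.Dense(n_outputs))
    model = keras.Sequential(layers)
    model.compile(optimizer="adam", loss="mse",
                  metrics=[keras.metrics.RootMeanSquaredError()])
    return model

# Possible usage (requires TensorFlow; n_features=22 is an assumed input size):
# model = build_model(ARCHITECTURES["strain_gauges"], n_features=22)
# stop = keras.callbacks.EarlyStopping(patience=10)
# model.fit(train_X, train_y, validation_split=0.2, callbacks=[stop])
```

In the study itself, these layer sizes were selected by KerasTuner with the Hyperband algorithm rather than fixed by hand as in this sketch.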

It might be concluded from the obtained results in this experiment that the usage of a custom-made force platform based on strain gauges does not negatively impact the performance of the system. On the contrary, it even slightly improves the performance (with a smaller network size), which is notable given that the price of the strain gauges is negligible compared to the price of a 6-axis force–torque sensor. Also, when compared to the force sensing accuracy of N RMSE obtained during human–robot interaction in [42], our results demonstrate the practical applicability of the approach.

4.2. Joint-Side Torque Estimation

The goal of this experiment was to train a neural network for joint-side torque estimation and to assess its performance. Training this network may be thought of as learning of the inverse dynamic model of the robot from collected data about robot kinematics and dynamics in the presence of external forces acting on the robot.

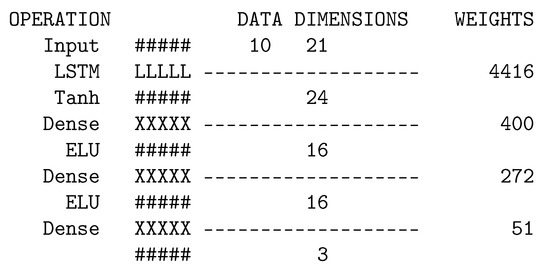

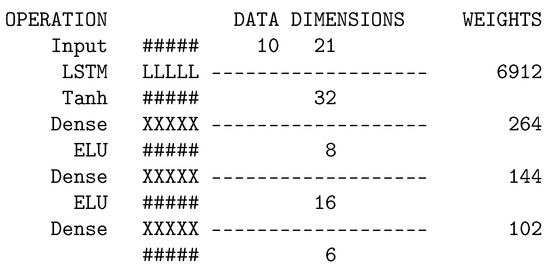

In this experiment, similarly to the previous one, two networks were optimised: one with 6D force–torque sensor measurements, and one with the proposed force-measuring platform. Both of the optimised architectures have the same number of LSTM layers (1 layer), the same number of fully-connected layers (2 layers), and the same activation function (ELU), all as in the previous experiment. However, this time, the two networks also ended up with the same number of neurons in each layer (LSTM: 32 neurons; fully-connected layers: 8 and 16 neurons), as shown in Figure 7. Thus, the only difference between them is that the one that uses strain gauge readings as input has one more input, since the force–torque sensor produces three readings at each time instance, whereas the strain gauges produce four (since there are four of them installed on the platform). The validation and test set RMSE metrics for both networks are reported in Table 3.

Figure 7.

Neural network for joint torque estimation (the same network was obtained in both analysed cases).

Table 3.

Trained neural networks performance for joint torques estimation.

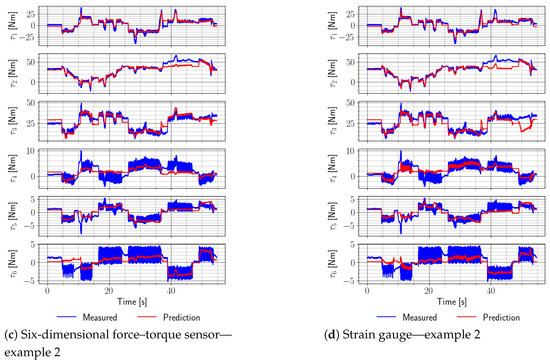

The obtained metrics again demonstrate marginally better performance when using the newly proposed system (which again brings to mind the construction-related note mentioned earlier). Showcase examples may be examined in Figure 8, from which it is evident that ground truth data are somewhat noisy, especially the lower joints data. The neural networks mostly managed to filter out the noise and produce fair estimates, both the network that used force–torque sensor data as input and the one that used strain gauge data as input, although the latter did so to a lesser degree, especially in joint 4. This might be due to the sensitivity of the 24-bit A/D converter used to acquire data from strain gauges, as strain gauge readings have been fed to the neural networks in their raw form, i.e., they have not been preprocessed in any way (e.g., by filtering or calibration).

Figure 8.

Examples of the joint torque estimation performance of the two systems using a real-world UR5e robotic manipulator.

Another issue that should be taken into account when developing neural network-based models, both for the torque and for the force estimation, is the effect of the long-term operation of the mobile manipulator. It is safe to assume that during the robot’s lifetime, there will be changes in robot dynamic parameters (due to normal wear-and-tear and possibly the replacement of defective parts) which will affect the accuracy of the learned models or any other model, including the analytical one (thus, this is not an issue inherent only to our approach but one present in all model-based approaches). However, this issue can, in our view, be dealt with by the occasional retraining of the model (something like transfer learning): an approach that requires significantly fewer data points than the original neural network training and one that is much simpler than performing full manipulator identification, as needed in other methods/approaches.

5. Conclusions

This paper presented a new, generic platform as an alternative to more expensive 6D force–torque sensors, which uses multiple 1D strain gauges instead. The platform was then used for robot end-effector force estimation and joint-side torque estimation, demonstrating good performance. The data were collected on a real-world robot to train neural networks for these two tasks. Since the experimental setup included both the custom-built platform and the force–torque sensor, it was possible to directly compare the neural networks obtained using one source against the other, which demonstrated that networks trained with data from strain gauges performed marginally better on the same dataset.

Therefore, it may be concluded that the proposed force-measuring platform works well in applications where there is a need to cost-efficiently estimate end-effector forces, joint-side torques, or both, while freeing up the robot’s payload. Compared to some similar research from the literature (such as [32]), our approach does not require any calibration, specialised hardware, or knowledge of accurate robot models. However, it does rely on a robot-specific neural network. Such a network is obtained through training, which requires large amounts of data and significant effort to collect them. This effort can be reduced by learning from simulation (for a particular robot, as was demonstrated in [43]) and later applying transfer learning principles to a smaller amount of real-world data.

In the future, this work will be extended to take into account the slope that arises when the robot is not mounted on a flat surface or, more realistically, when the robot is mounted on a mobile platform for applications in real-world outdoor scenarios. One step in this direction was already taken in our previous work [43] (only in simulation and only with the force–torque sensor, not the proposed platform based on strain gauges), and we plan to conduct real-world experiments both with force sensors and with the platform. Additionally, the generic platform will be augmented with additional 1D strain gauges to explore whether this improves the accuracy of the method.

Author Contributions

Conceptualisation, S.K. and J.M.; formal analysis, S.K., J.M. and R.K.; methodology, S.K., J.M. and R.K.; software, S.K.; supervision, V.P.; validation, J.M.; visualisation, S.K.; writing—original draft, S.K.; writing—review and editing, J.M., R.K. and V.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors would like to express their gratitude to Ivo Stančić for his patience in discussing the topic and for giving useful pointers in the analysis.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| 1D | 1-dimensional |
| 2D | 2-dimensional |
| 3D | 3-dimensional |
| 6D | 6-dimensional |
| A/D | Analog-to-Digital |
| CNN | Convolutional Neural Network |
| DeLaN | Deep Lagrangian Network |
| DoF | Degrees of Freedom |
| ELU | Exponential Linear Unit |
| LNN | Lagrangian Neural Network |
| LSTM | Long-short Term Memory |
| MLP | Multilayer Perceptron |
| MSE | Mean Square Error |
| NRMSE | Normalised Root Mean Square Error |
| ReLU | Rectified Linear Unit |
| RMSE | Root Mean Square Error |

References

- Mordor Intelligence. Global Robotics Market-Growth, Trends, COVID-19 Impact, And Forecasts (2021–2026). 2020. Available online: https://www.mordorintelligence.com/industry-reports/robotics-market (accessed on 21 October 2021).

- Siciliano, B.; Villani, L. Robot Force Control; Springer: Greer, SC, USA, 1999.

- Liu, X.; Zuo, G.; Zhang, J.; Wang, J. Sensorless force estimation of end-effect upper limb rehabilitation robot system with friction compensation. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419856132.

- Wahrburg, A.; Bos, J.; Listmann, K.D.; Dai, F.; Matthias, B.; Ding, H. Motor-Current-Based Estimation of Cartesian Contact Forces and Torques for Robotic Manipulators and Its Application to Force Control. IEEE Trans. Autom. Sci. Eng. 2018, 15, 879–886.

- Sereinig, M.; Werth, W.; Faller, L.M. A review of the challenges in mobile manipulation: Systems design and RoboCup challenges. Electr. Comput. Eng. 2020, 137, 297–308.

- Feng, Z.; Hu, G.; Sun, Y.; Soon, J. An overview of collaborative robotic manipulation in multi-robot systems. Annu. Rev. Control 2020, 49, 113–127.

- Kružić, S.; Musić, J.; Kamnik, R.; Papić, V. Estimating Robot Manipulator End-effector Forces using Deep Learning. In Proceedings of the 2020 43rd International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, 28 September–2 October 2020.

- Kružić, S.; Musić, J.; Kamnik, R.; Papić, V. End-Effector Force and Joint Torque Estimation of a 7-DoF Robotic Manipulator Using Deep Learning. Electronics 2021, 10, 2963.

- Hoffmann, K. An Introduction to Measurement Using Strain Gauges; Hottinger Baldwin: Darmstadt, Germany, 1989.

- Sebastian, G.; Li, Z.; Crocher, V.; Kremers, D.; Tan, Y.; Oetomo, D. Interaction Force Estimation Using Extended State Observers: An Application to Impedance-Based Assistive and Rehabilitation Robotics. IEEE Robot. Autom. Lett. 2019, 4, 1156–1161.

- Alcocer, A.; Robertsson, A.; Valera, A.; Johansson, R. Force estimation and control in robot manipulators. IFAC Proc. Vol. 2003, 36, 55–60.

- Veil, C.; Müller, D.; Sawodny, O. Nonlinear disturbance observers for robotic continuum manipulators. Mechatronics 2021, 78, 102518.

- Sariyildiz, E.; Oboe, R.; Ohnishi, K. Disturbance Observer-Based Robust Control and Its Applications: 35th Anniversary Overview. IEEE Trans. Ind. Electron. 2020, 67, 2042–2053.

- Stolt, A.; Linderoth, M.; Robertsson, A.; Johansson, R. Force controlled robotic assembly without a force sensor. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, St. Paul, MN, USA, 14–18 May 2012.

- Yu, X.; He, W.; Li, Q.; Li, Y.; Li, B. Human-Robot Co-Carrying Using Visual and Force Sensing. IEEE Trans. Ind. Electron. 2021, 68, 8657–8666.

- Liu, S.; Wang, L.; Wang, X.V. Sensorless force estimation for industrial robots using disturbance observer and neural learning of friction approximation. Robot. Comput. Integr. Manuf. 2021, 71, 102168.

- El Dine, K.M.; Sanchez, J.; Corrales, J.A.; Mezouar, Y.; Fauroux, J.C. Force-Torque Sensor Disturbance Observer Using Deep Learning. In Springer Proceedings in Advanced Robotics; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 364–374.

- Levine, S.; Pastor, P.; Krizhevsky, A.; Ibarz, J.; Quillen, D. Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection. Int. J. Robot. Res. 2017, 37, 421–436.

- Jin, L.; Li, S.; Yu, J.; He, J. Robot manipulator control using neural networks: A survey. Neurocomputing 2018, 285, 23–34.

- Smith, A.C.; Hashtrudi-Zaad, K. Application of neural networks in inverse dynamics based contact force estimation. In Proceedings of the 2005 IEEE Conference on Control Applications, Sydney, Australia, 4–7 July 2005.

- Yilmaz, N.; Wu, J.Y.; Kazanzides, P.; Tumerdem, U. Neural Network based Inverse Dynamics Identification and External Force Estimation on the da Vinci Research Kit. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 1387–1393.

- Aviles, A.I.; Alsaleh, S.; Sobrevilla, P.; Casals, A. Sensorless force estimation using a neuro-vision-based approach for robotic-assisted surgery. In Proceedings of the 2015 7th International IEEE/EMBS Conference on Neural Engineering (NER), Montpellier, France, 22–24 April 2015.

- Chua, Z.; Jarc, A.M.; Okamura, A.M. Toward Force Estimation in Robot-Assisted Surgery using Deep Learning with Vision and Robot State. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 12335–12341.

- Lutter, M.; Ritter, C.; Peters, J. Deep Lagrangian Networks: Using Physics as Model Prior for Deep Learning. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Cranmer, M.; Greydanus, S.; Hoyer, S.; Battaglia, P.; Spergel, D.; Ho, S. Lagrangian Neural Networks. arXiv 2020, arXiv:2003.04630.

- Han, Y.; Li, R.; Chirikjian, G.S. Look at my new blue force-sensing shoes! In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 2891–2896.

- Chua, Z.; Okamura, A.M. A Modular 3-Degrees-of-Freedom Force Sensor for Robot-Assisted Minimally Invasive Surgery Research. Sensors 2023, 23, 5230.

- Schulze, J.S.; Fisher, C. Prototype of an Ultralow-Cost 2-D Force Sensor for Robotic Applications. IEEE Sens. Lett. 2021, 5, 2500404.

- Andersson, T.; Kihlberg, A. Differential Drive Robot Platform with External Force Sensing Capabilities Intended for Logistic Tasks Set in a Hospital Environment. Master’s Thesis, Mälardalen University, Västerås, Sweden, 2021. Available online: https://diva-portal.org/smash/get/diva2:1717478/FULLTEXT01.pdf (accessed on 28 August 2023).

- Hoang, P.H.; Thang, V.D.T. Design and simulation of flexure-based planar force/torque sensor. In Proceedings of the 2010 IEEE Conference on Robotics, Automation and Mechatronics, Singapore, 28–30 June 2010; pp. 194–198.

- Tsagarakis, N.G.; Metta, G.; Sandini, G.; Vernon, D.; Beira, R.; Becchi, F.; Righetti, L.; Santos-Victor, J.; Ijspeert, A.J.; Carrozza, M.C.; et al. iCub: The design and realization of an open humanoid platform for cognitive and neuroscience research. Adv. Robot. 2007, 21, 1151–1175.

- Gattringer, H.; Müller, A.; Hoermandinger, P. Design and Calibration of Robot Base Force/Torque Sensors and Their Application to Non-Collocated Admittance Control for Automated Tool Changing. Sensors 2021, 21, 2895.

- Universal Robots. e-Series. Built to Do More. Brochure, 2021. Available online: https://www.universal-robots.com/media/1809365/05_2021_e-series_brochure_english_web_rgb_din-a4.pdf (accessed on 28 August 2023).

- Schäfer, M.B.; Meiringer, J.G.; Nawratil, J.; Worbs, L.; Giacoppo, G.A.; Pott, P.P. Estimating Gripping Forces During Robot-Assisted Surgery Based on Motor Current. Curr. Dir. Biomed. Eng. 2022, 8, 105–108.

- Rojas, R.A.; Garcia, M.A.R.; Gualtieri, L.; Rauch, E. Combining safety and speed in collaborative assembly systems—An approach to time optimal trajectories for collaborative robots. Procedia CIRP 2021, 97, 308–312.

- Raviola, A.; Guida, R.; Martin, A.D.; Pastorelli, S.; Mauro, S.; Sorli, M. Effects of Temperature and Mounting Configuration on the Dynamic Parameters Identification of Industrial Robots. Robotics 2021, 10, 83.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

- Chollet, F. Keras. 2015. Available online: https://keras.io (accessed on 28 August 2023).

- Kapoor, A.; Gulli, A.; Pal, S.; Chollet, F. Deep Learning with TensorFlow and Keras: Build and Deploy Supervised, Unsupervised, Deep, and Reinforcement Learning Models; Packt Publishing, Limited: Birmingham, UK, 2022.

- O’Malley, T.; Bursztein, E.; Long, J.; Chollet, F.; Jin, H.; Invernizzi, L. KerasTuner. 2019. Available online: https://github.com/keras-team/keras-tuner (accessed on 28 August 2023).

- Li, L.; Jamieson, K.; DeSalvo, G.; Rostamizadeh, A.; Talwalkar, A. Hyperband: A novel bandit-based approach to hyperparameter optimization. J. Mach. Learn. Res. 2018, 18, 1–52.

- Kim, U.; Jo, G.; Jeong, H.; Park, C.H.; Koh, J.S.; Park, D.I.; Do, H.; Choi, T.; Kim, H.S.; Park, C. A Novel Intrinsic Force Sensing Method for Robot Manipulators During Human–Robot Interaction. IEEE Trans. Robot. 2021, 37, 2218–2225.

- Kružić, S.; Musić, J.; Stančić, I.; Papić, V. Neural Network-based End-effector Force Estimation for Mobile Manipulator on Simulated Uneven Surfaces. In Proceedings of the 2022 International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Split, Croatia, 22–24 September 2022; pp. 1–6.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).