Abstract

Medical simulations have proven to be highly valuable in the education of healthcare professionals. This was particularly evident during the COVID-19 pandemic, when simulators provided a safe and effective means of training healthcare practitioners in the principles of lung ultrasonography without exposing them to the risk of infection, which highlights a further advantage of simulation in medical education. This paper presents the principles of ultrasound simulation in the context of inflammatory lung conditions. The propagation of sound waves in this environment is discussed, with a specific focus on key diagnostic artifacts in lung imaging. The simulated medium was modeled by assigning appropriate acoustic characteristics to the tissue components present in the simulated study. A simulation engine was developed to meet two requirements: easy accessibility through a web browser and high-performance simulation through GPU-based computing. The obtained images were compared with real-world examples. The selection of simulation parameters was analyzed to achieve real-time simulation while maintaining high visual quality. The research findings demonstrate the feasibility of real-time, high-quality visualization in ultrasound simulation, providing valuable insights for the development of educational tools and diagnostic training in the field of medical imaging.

1. Introduction

Lung ultrasonography (LUS), also known as lung ultrasound or chest ultrasound, is a non-invasive imaging technique that allows real-time assessment of pulmonary structures. Research evidence indicates that this technique is a significant tool for both the diagnosis and the treatment planning of a wide range of lung diseases. The use of LUS is steadily increasing, and it has been shown to be effective in conditions including pneumonia [1], pleural effusion, lung consolidation [2], pneumothorax [3], and many other common etiologies of respiratory failure. Lung ultrasonography is recommended as a “point of care” examination in patients with dyspnea, chest pain, and any symptoms in the chest [4]. Moreover, LUS played a significant role during the COVID-19 pandemic [5,6]. In [7], the authors present machine learning-based diagnostic tools for COVID-19 detection that are based, among other factors, on LUS examination. Although ultrasonographic methods offer many valuable advantages, such as portability (bedside usage), no exposure to ionizing radiation, efficiency, and low cost [8], their main limitation, operator dependency, should also be mentioned. The appropriate interpretation of lung ultrasound images requires adequate training, experience and expertise to ensure the accuracy and reliability of the results [9].

Lung ultrasound has been shown to be a technique that personnel without previous experience can learn in a relatively short period of time [10,11,12]. Chiem et al. found that after a brief 30-min training, clinicians achieved a 95% success rate in obtaining adequate images and demonstrated B-line interpretation sensitivity and specificity comparable to those of expert sonographers [11]. Several approaches are used to achieve effective training: traditional education based on lectures and seminars [13], e-learning courses with online lectures [14,15,16], and hands-on sessions involving healthy live models [16,17] or phantoms [17,18]. Each method has certain advantages; for example, learning from online lectures is distinguished by high accessibility, flexibility, and affordability, while hands-on learning on phantoms simulates the process of a real examination without exposing the patient to risks [19]. A promising solution that combines a number of these advantages is a web-based LUS simulator, which visualizes US images on the screen, allows users to manipulate the probe, and presents different disease scenarios.

The development of an LUS simulator requires a comprehensive understanding of the fundamentals of ultrasound physics and the phenomena used in lung ultrasound diagnosis. LUS diagnosis relies on interpreting ultrasound reverberation artifacts, generally referred to as A-lines and B-lines.

A-lines are horizontal, hyperechoic (bright) lines that are evenly spaced and parallel to the pleural line. These artifacts are caused by subpleural air, which results in a large difference in acoustic impedance between the chest wall and the alveoli of the lung. The sound waves reflect repeatedly between the pleural line and the transducer, producing a sequence of horizontal lines that is a typical finding in air-filled tissues. B-lines (also known as ultrasound lung comets) are vertical, hyperechoic lines that originate from the pleural line and extend without fading to the bottom of the screen. They replace normal A-lines and are synchronized with lung sliding. B-lines are the consequence of thickened interlobular septa surrounded by air-filled alveoli, which creates a significant acoustic impedance gradient that produces the reverberation artifacts. B-lines are associated with various lung diseases, including pulmonary edema, interstitial lung disease and pneumonia [20,21,22,23].

In this work, we present a web-based LUS simulator that was created to provide a comprehensive learning tool that visualizes ultrasound images, allows probe manipulation, and presents various disease scenarios. The simulator includes artifacts that are commonly seen during lung exams, such as shadows, A-lines, B-lines, pleural breaks, pleural sliding, and consolidation. The simulator has been tested for compatibility and performance. Its usability and effectiveness in providing practical experience in lung ultrasound interpretation have been demonstrated.

2. Simulation Method

The Lung Ultrasound Simulator was intended to be a web-based application, available for free using only a web browser. This requirement had a significant impact on the selected technology and method of algorithm implementation. Particularly, instead of relying on common 3D libraries, the Lung Ultrasound Simulator had to utilize WebGL technology based on OpenGL ES. WebGL is specifically designed for web-based applications and comes with certain limitations compared to the full OpenGL standard [24]. For example, it may lack support for certain advanced features, such as compute shaders for parallel computations, and it may have restrictions on certain texture types or dimensions.

The first stage of the work consisted of collecting anonymized images from real ultrasound examinations, together with detailed descriptions, and then analyzing and defining the requirements for the lung simulator project. A database of real US examinations was created and used as a source of reference images. The images were prepared for educational purposes and supplied by the Department of Coronary Disease and Heart Failure at John Paul II Hospital, Kraków, Poland.

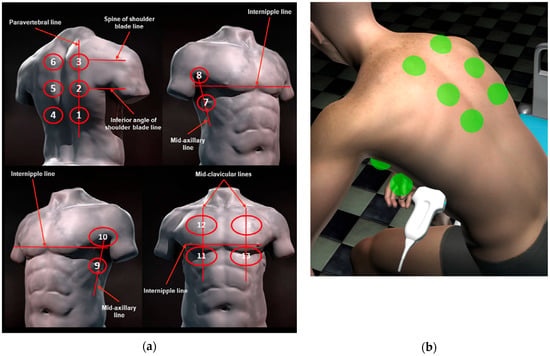

The next stage was to define how the simulator works as a learning tool. The chosen approach assumes the existence of 14 predefined standard application points for the ultrasound probe on each patient, following the established guidelines presented in Figure 1 [25]. Each of these points corresponds to a specific observation that the user should identify on the ultrasound image. After noting all the observed phenomena and considering additional patient information, the user is asked to select the most probable diagnosis. In the subsequent step, the simulator provides feedback so that the user can verify the accuracy of their observations.

Figure 1. Schematic representation of the acquisition landmarks on chest anatomic lines. (a) From guidelines [22]; (b) represented on the virtual patient in the simulator.

The next stage was to prepare scenarios: different pathologies and diseases were selected, and virtual patients were created to reflect defined conditions. For each of the 14 landmarks, the corresponding pathology was selected, along with the appropriate artifacts that would be visible when the ultrasound probe was positioned over that point.
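A scenario of this kind can be pictured as a small data structure. The following is a minimal sketch only: the class names, field names and the `check_answers` helper are hypothetical and are not taken from the simulator; only the number of landmarks (14) and the 0 to 1 B-line strength range come from the text.

```python
from dataclasses import dataclass, field

NUM_LANDMARKS = 14  # standard probe application points per patient (from the text)


@dataclass
class LandmarkState:
    """Expected findings at one probe application point (hypothetical fields)."""
    a_lines: bool = True
    b_line_strength: float = 0.0  # 0 = no B-lines, 1 = multiple coalescent B-lines
    pleural_break: bool = False
    consolidation: bool = False


@dataclass
class Scenario:
    """One virtual patient: a diagnosis plus one state per landmark."""
    diagnosis: str
    landmarks: list = field(
        default_factory=lambda: [LandmarkState() for _ in range(NUM_LANDMARKS)]
    )


def check_answers(scenario, observed, chosen_diagnosis):
    """Return (number of landmarks whose observation matches, diagnosis correct?)."""
    matches = sum(
        1 for expected, seen in zip(scenario.landmarks, observed) if expected == seen
    )
    return matches, chosen_diagnosis == scenario.diagnosis
```

Under these assumptions, the feedback step reduces to comparing the user's per-landmark observations and chosen diagnosis with the stored scenario.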

2.1. Simulation Model

There are typically two main models used in ultrasound simulations: vector-based and volumetric. The vector-based model is created by 3D graphic artists and often relies on CT data as a foundation. This model involves the generation of geometric shapes to simulate ultrasound wave propagation.

On the other hand, the volumetric model is derived directly from CT data, allowing for a more accurate representation of the scanned anatomy. By utilizing the actual CT data, the volumetric model offers a higher level of realism, anatomical details and precision in simulating ultrasound images [26,27,28,29,30,31,32,33,34].

Both approaches have their strengths and are commonly employed in ultrasound simulation technology. Vector-based (or triangle-based) models are easier to edit, animate and apply interactions to. The ultrasound simulation can then be performed using a cross-section of that 3D model or using a ray-tracing technique [35], where rays are sent from the transducer position and travel through the model, simulating wavefront propagation.

On the other hand, the volumetric model may have lower temporal resolution, may be corrupted by different artifacts, is more challenging to process, and occupies more memory space. A volume model can be sampled using a ray-marching technique, which can implement most of the required phenomena to produce a realistic final ultrasound image.

2.2. Ultrasound Simulation

The first step was the creation of a 3D vector model of a patient and the internal structures. The authors decided to use the Daz 3D [36] software and male and female model assets with internal organs available in the Daz 3D Assets Store [37]. To meet the simulation requirements, the models were adjusted and animated; in particular, the motion of the lungs was added. The animation was manually implemented to reflect the lung movement visible in the acquired ultrasound videos. Rib movement was not implemented because the complexity of modeling it outweighs the impact of this animation on the final outcome. The speed and the range of the lung animation vary depending on the selected patient, which reflects the breathing speed and the depth of inhalation.

In the following step, the Unity framework was used to create a simulation platform for the Lung Ultrasound Simulator. The Unity framework is widely utilized for developing 2D and 3D games, and it has also found extensive application in the medical field [38,39,40,41]. Moreover, it serves as a solid foundation for creating web-based 3D applications, offering a versatile platform for delivering immersive experiences over the Internet.

The previously prepared models were imported into the Unity framework, and then a virtual examination room and virtual US probe were created. Users can manipulate the probe and position it on the patient’s body using a computer mouse.

Next, a disease scenario was implemented for each patient by configuring the landmark states to the appropriate values based on the expected observations.

Then, the simulation algorithm is used to render the simulated US image:

- According to the virtual probe position and orientation, the 3D model of the patient and its internal structures is rendered using a virtual camera and a custom shader program. Only the cross-section is visible and rendered as an image into the framebuffer. This is an approximation of an ultrasound image in which individual tissues are rendered using a custom shader (called Material) that, among other things, encodes the required physical parameters (such as the absorption coefficient and acoustic impedance) as a color. Additionally, the cross-section is completed with a simulation of the tissue structure. This is achieved using a position-dependent pseudorandom value function [42].

- The same virtual camera renders a UV map of the moving tissues. UV value is a vertex attribute required to correctly map a texture on an object. However, in this case, this step is used to simulate pulmonary pleurae movement and related artifacts. The values rendered on the UV map correspond to the local position of the lung surface. The lung’s movement corresponds to the changes in these values.

- Another virtual camera is employed to render the ultrasound (US) sector. This camera utilizes a shader program that incorporates the modified ray-marching method to simulate wave propagation. This step was challenging because instead of a compute shader, better suited for this problem, a regular fragment shader had to be used. Compute shaders were not supported in the web browser environment at the time of writing. In the resulting image, each column corresponds to a ray, and each row is a ray marching step.

- A further shader program extends the results of the previous steps with additional wave phenomena, including A-lines and noise.

- A final shader program transforms the results into the selected sector view.
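The last step of the list above, turning the ray image (each column a ray, each row a marching step) into a sector view, can be illustrated on the CPU. This is a minimal NumPy sketch under stated assumptions, not the simulator's shader: the function name `scan_convert`, the 60° sector angle, the output size and the nearest-neighbour lookup are all illustrative choices.

```python
import numpy as np


def scan_convert(ray_image, sector_angle_deg=60.0, out_size=128):
    """Map a ray image (rows = marching steps, columns = rays) onto a sector view.

    Uses a nearest-neighbour lookup; pixels outside the sector stay 0.
    """
    n_steps, n_rays = ray_image.shape
    half = np.radians(sector_angle_deg) / 2.0

    # Output pixel grid: transducer at the top centre, depth increasing downwards.
    ys, xs = np.mgrid[0:out_size, 0:out_size]
    x = (xs - (out_size - 1) / 2.0) / (out_size - 1)  # lateral, in [-0.5, 0.5]
    z = ys / (out_size - 1)                           # depth, in [0, 1]

    r = np.hypot(x, z)         # normalized distance from the transducer
    theta = np.arctan2(x, z)   # angle measured from the probe axis

    inside = (r <= 1.0) & (np.abs(theta) <= half)
    col = np.rint((theta + half) / (2.0 * half) * (n_rays - 1)).astype(int)
    row = np.rint(r * (n_steps - 1)).astype(int)
    col = np.clip(col, 0, n_rays - 1)
    row = np.clip(row, 0, n_steps - 1)

    out = np.zeros((out_size, out_size), dtype=ray_image.dtype)
    out[inside] = ray_image[row[inside], col[inside]]
    return out
```

In a fragment shader the same mapping would typically be evaluated once per output pixel, which is why this sketch works backwards from the output pixel to the ray image rather than forwards from each ray.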

2.3. Artifacts Simulation

In order to create an effective learning tool, it is crucial to simulate various ultrasound artifacts that are commonly encountered during lung examinations. These artifacts play a significant role in the interpretation and analysis of ultrasound images. By accurately replicating these artifacts in the Lung Ultrasound Simulator, users can develop the necessary skills to identify and interpret them in real-life scenarios.

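The artifacts can be rendered according to the classical wave equation [43,44]:

$$\nabla^2 p - \frac{1}{c^2}\frac{\partial^2 p}{\partial t^2} = 0 \qquad (1)$$

where:

$p$ is the acoustic pressure,

$c$ is the speed of sound in the medium,

$t$ is time,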

and with a relaxation term:

where:

—is a relaxation coefficient.

In cases where there is negligible or no scattering, it leads to (1-D):

$$I(x) = I_0 e^{-\alpha x} \qquad (3)$$

where:

$I(x)$ is the remaining intensity at the distance $x$ to the wave source,

$I_0$ is the initial intensity,

$\alpha$ is the absorption coefficient, a product of the medium attenuation coefficient and the frequency of the ultrasound wave.
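As a worked illustration of the exponential attenuation law, the sketch below assumes the usual form $I(x) = I_0 e^{-\alpha x}$, with $\alpha$ being the product of an attenuation coefficient and the frequency. The function names and numerical values (0.1 per cm per MHz, 5 MHz, 4 cm) are illustrative assumptions, not parameters of the simulator. The second function shows why the law suits ray marching: multiplying the per-step factors gives the same result as the closed form.

```python
import math


def remaining_intensity(i0, attenuation, frequency_mhz, distance_cm):
    """Closed form: I(x) = I0 * exp(-alpha * x), with alpha = attenuation * frequency."""
    alpha = attenuation * frequency_mhz  # absorption coefficient, per cm
    return i0 * math.exp(-alpha * distance_cm)


def marched_intensity(i0, alphas, step_cm):
    """Apply the same law step by step; alphas holds one coefficient per marching step."""
    intensity = i0
    for alpha in alphas:
        intensity *= math.exp(-alpha * step_cm)
    return intensity


# Illustrative values only: alpha = 0.1 * 5 = 0.5 per cm, so over 4 cm the
# exponent is -2 and about 13.5% of the initial intensity remains.
closed = remaining_intensity(1.0, 0.1, 5.0, 4.0)
stepped = marched_intensity(1.0, [0.5] * 400, 0.01)
```

Because the per-step form accepts a different coefficient at every step, it extends directly to a ray that crosses several tissues.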

To solve Equation (2), the ultrasound wave can be simplified as a ray that is generated at the transducer and marched over a 2D cross-section plane. During that process, the ray interacts with the medium (tissue characteristics set up with a custom material) and renders the intensity image. The attenuation is implemented using Equation (3). No reflection or refraction rays are implemented. However, the reflected intensity is calculated as follows:

$$I_r = I_i \left(\frac{Z_2 - Z_1}{Z_2 + Z_1}\right)^2 \qquad (4)$$

where:

$I_r$ is the reflected intensity,

$I_i$ is the incoming intensity,

$Z_1$, $Z_2$ are the acoustic impedances of the two media, each defined as a product of tissue density and speed of sound.
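To make the marching procedure concrete, the following sketch marches a single ray through per-step samples, assuming the standard intensity reflection form $I_r = I_i\,((Z_2 - Z_1)/(Z_2 + Z_1))^2$ and exponential attenuation per step. It is a CPU illustration under stated assumptions, not the simulator's shader: the function names are hypothetical, the density and sound-speed values are rough textbook approximations for soft tissue and air, and reducing the onward intensity by the reflected fraction is an assumption of this sketch.

```python
import numpy as np


def reflected_fraction(z1, z2):
    """Fraction of the incoming intensity reflected at a z1 -> z2 interface."""
    return ((z2 - z1) / (z2 + z1)) ** 2


def march_ray(impedance, absorption, step_cm, i0=1.0):
    """March one ray and return the echo intensity recorded at each step."""
    echoes = np.zeros(len(impedance))
    intensity = i0
    for k in range(1, len(impedance)):
        intensity *= np.exp(-absorption[k] * step_cm)  # attenuation over one step
        r = reflected_fraction(impedance[k - 1], impedance[k])
        echoes[k] = intensity * r                      # echo from this interface
        intensity *= 1.0 - r                           # assumption: the rest travels on
    return echoes


# Approximate textbook values (assumptions): impedance = density * speed of sound.
Z_TISSUE = 1060.0 * 1540.0  # soft tissue, about 1.63e6 Rayl
Z_AIR = 1.2 * 343.0         # air, about 4.1e2 Rayl

impedance = np.array([Z_TISSUE] * 4 + [Z_AIR] * 2 + [Z_TISSUE] * 4)
absorption = np.full(len(impedance), 0.05)  # per cm, illustrative
echoes = march_ray(impedance, absorption, step_cm=0.1)
```

With these values almost all of the intensity is reflected at the first tissue-air interface, so the echo from the next interface is several orders of magnitude weaker. This is the mechanism behind both the bright pleural line and the shadow behind strongly reflecting structures.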

Key artifacts that should be simulated include shadows, A-lines, B-lines, pleural breaks, and pleural sliding. The strength of each artifact can be adjusted as needed and defined for each standard probe application point.

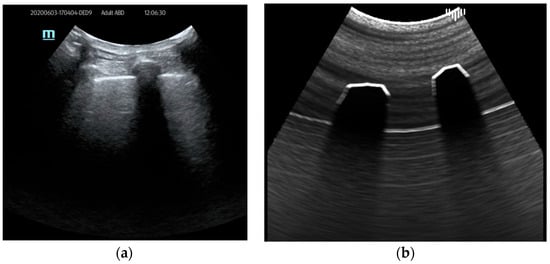

- Shadows are dark areas that occur when ultrasound waves are blocked or absorbed by dense structures, such as ribs or air-filled spaces (Figure 2). To simulate shadows, first, the cross-section image is rendered with Material attributes defined for each tissue. Then, a ray marching technique utilizes these values to compute shadows for each ray.

Figure 2. Ultrasound shadows example: (a) real examination; (b) the LUS simulation.


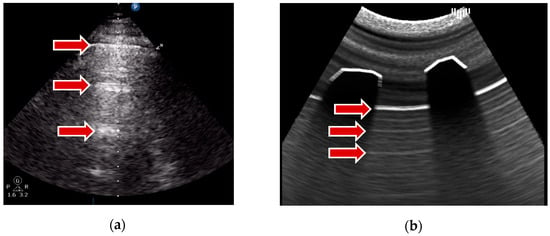

- A-lines are horizontal, parallel lines seen in normal lung tissue and are created by the multiple reflections of ultrasound waves between the pleural line and the transducer. This is achieved during the ray marching step without a time-consuming ray generation: the input frame buffer, which stores the image of the first render pass, is repeatedly sampled relative to the distance of a pleural line to the transducer. The results can be seen in Figure 3.

Figure 3. A-lines example: (a) real examination; (b) the LUS simulation.


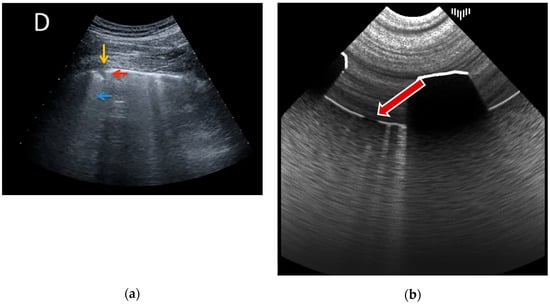

- B-lines, on the other hand, are vertical lines that represent thickened or edematous lung interstitial spaces and are commonly associated with pulmonary edema or interstitial lung diseases. To simulate this artifact, the intensity of the response is randomly increased at the pleural line, leaving a bright trail interpreted as a B-line. A pseudorandom value is calculated from the UV map (rendered in the previous step) for each ray of the ray marching algorithm. Then, a strength parameter associated with the patient’s landmark determines the visibility of the B-lines. This parameter ranges from 0 (no B-lines) to 1 (multiple coalescent B-lines). Radial blur, added in the final step, increases the visual similarity to real artifacts. An example of this artifact is shown in Figure 4.

Figure 4. B-lines example: (a) real examination; (b) the LUS simulation.


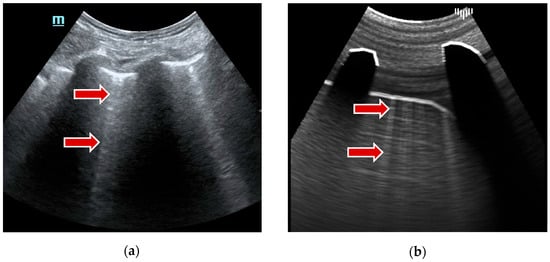

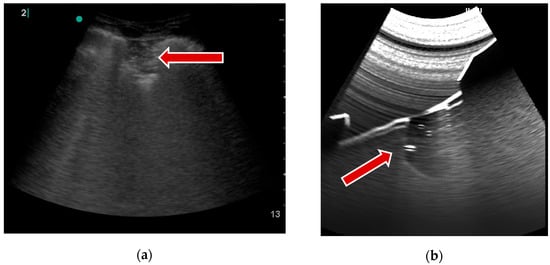

- Pleural breaks, also known as pleural irregularities or pleural abnormalities, appear as disruptions in the smooth pleural line. They are simulated by varying the pleural reflectance parameter as a function of the distance to the axis of the probe standard application point (landmark). Figure 5 shows this simulated artifact.

Figure 5. Pleural break example: (a) real examination [18]; (b) the LUS simulation.


- Lastly, pleural sliding refers to the dynamic movement of the visceral and parietal pleura during respiration. As mentioned before, it was created using the UV maps of the animated lungs model and calculating pseudorandom values on that basis. The sliding is then achieved by modifying the intensity of the outer part of the pleural line according to the random values.
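The A-line step described in the list above (repeatedly sampling the first-pass image relative to the pleural depth instead of generating reflection rays) can be sketched for a single column. This is a simplified illustration under stated assumptions: the function name, the geometric `decay` factor and the `max_repeats` limit are hypothetical and are not taken from the simulator.

```python
import numpy as np


def add_a_lines(column, pleura_row, decay=0.5, max_repeats=4):
    """Add copies of the pleural echo at integer multiples of the pleural depth.

    column: echo intensities along one ray (index = marching step).
    pleura_row: marching step at which the pleural line was hit.
    """
    out = column.astype(float).copy()
    for n in range(2, max_repeats + 2):
        row = n * pleura_row
        if row >= len(out):
            break  # the next reverberation would fall below the image
        out[row] += column[pleura_row] * decay ** (n - 1)
    return out
```

For example, with the pleural line at step 20 of a 100-step column, the sketch adds progressively weaker copies at steps 40, 60 and 80, which reproduces the evenly spaced pattern of A-lines.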

The attenuation phenomenon is also applied during ray marching, according to the traversed tissue attenuation values. Furthermore, pleural fluid was implemented using a custom 3D object and appropriate material parameters.
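The B-line mechanism described earlier (a per-ray pseudorandom value derived from the UV map, compared against a strength parameter between 0 and 1) can be sketched as follows. This is an illustration only: `hash01` is a common shader-style sine hash chosen for this sketch and is not claimed to be the function used in the simulator, and the function names and the `gain` parameter are hypothetical.

```python
import numpy as np


def hash01(u):
    """Deterministic pseudorandom value in [0, 1) computed from a coordinate."""
    fractional, _ = np.modf(np.abs(np.sin(u * 12.9898) * 43758.5453))
    return fractional


def add_b_lines(image, pleura_row, ray_u, strength, gain=1.0):
    """Brighten selected rays from the pleural line down to the bottom of the image.

    image: rows = marching steps, columns = rays.
    ray_u: per-ray UV coordinate sampled where the ray meets the lung surface.
    strength: 0 selects no rays, 1 selects every ray.
    """
    out = image.astype(float).copy()
    hit = hash01(np.asarray(ray_u, dtype=float)) < strength
    out[pleura_row:, hit] += gain  # bright trail that does not fade with depth
    return out
```

Because the random value depends only on the UV coordinate of the lung surface, a selected position keeps its B-line as the animated lung moves under the probe, which is consistent with B-lines being synchronized with lung sliding.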

In addition to the artifacts mentioned above, which are highly important during lung ultrasound examinations, it was crucial for educational purposes to simulate the presence of consolidation. Consolidations are regions of lung tissue filled with liquid instead of air. They result from the accumulation of inflammatory cellular exudate within the alveoli and contiguous ducts. The image of consolidation was simulated using a predefined mesh object created in 3D software (3D Studio Max, Autodesk) and positioned inside the virtual patient. Material attributes are assigned to the consolidation object to increase attenuation and reflectance, and the object is then rendered in the final image (Figure 6).

Figure 6. Consolidation example: (a) real examination; (b) the LUS simulation.

Finally, image noise and radial blur are applied to the image.

By simulating these artifacts within the Lung Ultrasound Simulator, users can develop a comprehensive understanding of their appearance, characteristics, and clinical significance. This simulation enables medical professionals and students to gain practical experience in identifying and interpreting these artifacts, enhancing their diagnostic capabilities and preparing them for real-world lung ultrasound examinations.

Due to their lesser importance and the complexity involved in their implementation, other artifacts have not been incorporated into the system at this stage.

3. Results

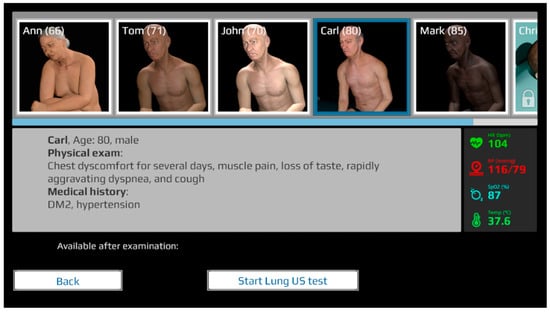

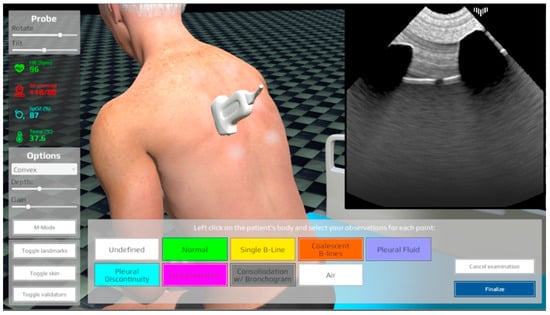

The LUS simulator is available for free at https://lus.mstech.eu/ (accessed on 20 August 2023). Using only a web browser and a computer mouse, the user can examine a selected virtual patient (5 cases available) and afterward choose the most probable diagnosis (Figure 7 and Figure 8). The simulator shows the correct answers and allows one to revisit all the points to check the correct observations.

Figure 7. The LUS simulator, patient selection screen.

Figure 8. The LUS simulator, patient examination screen.

The simulator underwent thorough testing on various machines, operating systems, and web browsers, demonstrating its compatibility without encountering significant issues. Performance testing was conducted by measuring the simulator’s performance with different frame buffer sizes, and the findings are summarized in Table 1. The results indicate that the size of the framebuffers has an impact on performance, with the largest framebuffer making the simulator unusable. Furthermore, a resolution of 512 by 512 pixels was selected as it provides the optimal balance between image quality and rendering efficiency, ensuring that the resulting images maintain sufficient visual clarity.

Table 1. Frames per second (FPS) for different sizes of framebuffers.

4. Discussion

Lung ultrasonography (LUS) is a non-invasive imaging technique that allows real-time assessment of pulmonary structures. It has proven to be effective in diagnosing and treating various lung diseases, including pneumonia, pleural effusion, lung consolidation, and pneumothorax. LUS is recommended as a point-of-care examination for patients with symptoms in the chest. We presented a web-based LUS simulator developed to provide a comprehensive learning tool that visualizes ultrasound images, allows probe manipulation, and presents different disease scenarios. The simulator utilizes WebGL technology and a combination of vector-based and ray-marching techniques to simulate ultrasound waves and generate realistic ultrasound images. It incorporates various artifacts commonly encountered during lung examinations, such as shadows, A-lines, B-lines, pleural breaks, pleural sliding, and consolidation. By simulating these artifacts, the simulator helps users develop skills in identifying and interpreting ultrasound findings. The simulator has been tested for compatibility and performance, demonstrating its usability and effectiveness in providing practical experience in lung ultrasound interpretation.

The LUS simulator developed as part of the COVID-19 Rapid Response Projects and made available free of charge constituted a significant stride in responding to the COVID-19 pandemic while simultaneously aiming to encourage the utilization of ultrasonography in diagnosing COVID-19 patients. Faced with the challenges imposed by the pandemic, this innovative tool facilitated the learning and practice of ultrasonography from the comfort of one’s own home, offering immense value to aspiring medical professionals and physicians. The unrestricted access to the simulator not only democratized the acquisition of this crucial skill but also facilitated the commencement of ultrasonography education, which gained paramount importance in the context of COVID-19.

5. Conclusions

In conclusion, the LUS simulator stands as a remarkable innovation in the field of medical education, particularly within the context of the COVID-19 pandemic. While its quality may not rival that of top-tier commercial counterparts, its distinct advantage lies in its accessibility, simplicity, and cost-free availability. The online platform offers an easy-to-use interface that incorporates essential learning features, making it an invaluable resource for aspiring medical practitioners.

In the future, research on the effectiveness of learning using the developed simulator is planned. Additionally, there is an intention to enhance its capabilities through improved implementations of both simulation algorithms and 3D models. Furthermore, the simulator will be supplemented with additional scenarios.

Author Contributions

Conceptualization, K.S.; methodology, K.S.; software, K.S.; validation, K.S.; formal analysis, K.S.; investigation, K.S.; resources, K.S., A.P. and J.L.; data curation, K.S. and J.L.; writing—original draft preparation, K.S. and J.L.; writing—review and editing, K.S.; visualization, K.S.; supervision, A.P.; project administration, K.S.; funding acquisition, A.P. and K.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by EIT Health, grant number 20880, “Ultrasonographic Lung Simulator (20880)”, “COVID-19 Rapid Response Projects”. The project is also partially funded by the Doctoral School of the University of Science and Technology, Cracow, Poland, as a part of the Industrial Doctoral Program “Selected phenomena simulation using ray trace technique”.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The simulator is available at https://lus.mstech.eu/ (accessed on 20 August 2023).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chavez, M.A.; Shams, N.; Ellington, L.E.; Naithani, N.; Gilman, R.H.; Steinhoff, M.C.; Santosham, M.; Black, R.E.; Price, C.; Gross, M.; et al. Lung Ultrasound for the Diagnosis of Pneumonia in Adults: A Systematic Review and Meta-Analysis. Respir. Res. 2014, 15, 50. [Google Scholar] [CrossRef]

- Hansell, L.; Milross, M.; Delaney, A.; Tian, D.H.; Ntoumenopoulos, G. Lung Ultrasound Has Greater Accuracy than Conventional Respiratory Assessment Tools for the Diagnosis of Pleural Effusion, Lung Consolidation and Collapse: A Systematic Review. J. Physiother. 2021, 67, 41–48. [Google Scholar] [CrossRef] [PubMed]

- Alrajab, S.; Youssef, A.M.; Akkus, N.I.; Caldito, G. Pleural Ultrasonography versus Chest Radiography for the Diagnosis of Pneumothorax: Review of the Literature and Meta-Analysis. Crit. Care 2013, 17, R208. [Google Scholar] [CrossRef] [PubMed]

- Demi, L.; Wolfram, F.; Klersy, C.; De Silvestri, A.; Ferretti, V.V.; Muller, M.; Miller, D.; Feletti, F.; Wełnicki, M.; Buda, N.; et al. New International Guidelines and Consensus on the Use of Lung Ultrasound. J. Ultrasound Med. 2023, 42, 309–344. [Google Scholar] [CrossRef] [PubMed]

- European Society of Radiology (ESR); Clevert, D.-A.; Sidhu, P.S.; Lim, A.; Ewertsen, C.; Mitkov, V.; Piskunowicz, M.; Ricci, P.; Bargallo, N.; Brady, A.P. The Role of Lung Ultrasound in COVID-19 Disease. Insights Imaging 2021, 12, 81. [Google Scholar] [CrossRef]

- Lê, M.P.; Jozwiak, M.; Laghlam, D. Current Advances in Lung Ultrasound in COVID-19 Critically Ill Patients: A Narrative Review. J. Clin. Med. 2022, 11, 5001. [Google Scholar] [CrossRef]

- La Salvia, M.; Torti, E.; Secco, G.; Bellazzi, C.; Salinaro, F.; Lago, P.; Danese, G.; Perlini, S.; Leporati, F. Machine-Learning-Based COVID-19 and Dyspnoea Prediction Systems for the Emergency Department. Appl. Sci. 2022, 12, 10869. [Google Scholar] [CrossRef]

- Raheja, R.; Brahmavar, M.; Joshi, D.; Raman, D. Application of Lung Ultrasound in Critical Care Setting: A Review. Cureus 2019, 11, e5233. [Google Scholar] [CrossRef]

- Tsou, P.; Chen, K.P.; Wang, Y.; Fishe, J.; Gillon, J.; Lee, C.; Deanehan, J.K.; Kuo, P.; Yu, D.T.Y. Diagnostic Accuracy of Lung Ultrasound Performed by Novice Versus Advanced Sonographers for Pneumonia in Children: A Systematic Review and Meta-analysis. Acad. Emerg. Med. 2019, 26, 1074–1088. [Google Scholar] [CrossRef]

- Russell, F.M.; Ferre, R.; Ehrman, R.R.; Noble, V.; Gargani, L.; Collins, S.P.; Levy, P.D.; Fabre, K.L.; Eckert, G.J.; Pang, P.S. What Are the Minimum Requirements to Establish Proficiency in Lung Ultrasound Training for Quantifying B-lines? ESC Heart Fail. 2020, 7, 2941–2947. [Google Scholar] [CrossRef]

- Chiem, A.T.; Chan, C.H.; Ander, D.S.; Kobylivker, A.N.; Manson, W.C. Comparison of Expert and Novice Sonographers’ Performance in Focused Lung Ultrasonography in Dyspnea (FLUID) to Diagnose Patients with Acute Heart Failure Syndrome. Acad. Emerg. Med. 2015, 22, 564–573. [Google Scholar] [CrossRef] [PubMed]

- International Liaison Committee on Lung Ultrasound (ILC-LUS) for the International Consensus Conference on Lung Ultrasound (ICC-LUS); Volpicelli, G.; Elbarbary, M.; Blaivas, M.; Lichtenstein, D.A.; Mathis, G.; Kirkpatrick, A.W.; Melniker, L.; Gargani, L.; Noble, V.E.; et al. International Evidence-Based Recommendations for Point-of-Care Lung Ultrasound. Intensive Care Med. 2012, 38, 577–591. [Google Scholar] [CrossRef] [PubMed]

- Noble, V.E.; Lamhaut, L.; Capp, R.; Bosson, N.; Liteplo, A.; Marx, J.-S.; Carli, P. Evaluation of a Thoracic Ultrasound Training Module for the Detection of Pneumothorax and Pulmonary Edema by Prehospital Physician Care Providers. BMC Med. Educ. 2009, 9, 3. [Google Scholar] [CrossRef] [PubMed]

- Cuca, C.; Scheiermann, P.; Hempel, D.; Via, G.; Seibel, A.; Barth, M.; Hirche, T.O.; Walcher, F.; Breitkreutz, R. Assessment of a New E-Learning System on Thorax, Trachea, and Lung Ultrasound. Emerg. Med. Int. 2013, 2013, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Edrich, T.; Stopfkuchen-Evans, M.; Scheiermann, P.; Heim, M.; Chan, W.; Stone, M.B.; Dankl, D.; Aichner, J.; Hinzmann, D.; Song, P.; et al. A Comparison of Web-Based with Traditional Classroom-Based Training of Lung Ultrasound for the Exclusion of Pneumothorax. Anesth. Analg. 2016, 123, 123–128. [Google Scholar] [CrossRef]

- Heiberg, J.; Hansen, L.; Wemmelund, K.; Sørensen, A.; Ilkjaer, C.; Cloete, E.; Nolte, D.; Roodt, F.; Dyer, R.; Swanevelder, J.; et al. Point-of-Care Clinical Ultrasound for Medical Students. Ultrasound Int. Open 2015, 1, E58–E66. [Google Scholar] [CrossRef]

- Breitkreutz, R.; Dutiné, M.; Scheiermann, P.; Hempel, D.; Kujumdshiev, S.; Ackermann, H.; Seeger, F.H.; Seibel, A.; Walcher, F.; Hirche, T.O. Thorax, Trachea, and Lung Ultrasonography in Emergency and Critical Care Medicine: Assessment of an Objective Structured Training Concept. Emerg. Med. Int. 2013, 2013, 1–9. [Google Scholar] [CrossRef]

- Rojas-García, A.; Moreno-Blanco, D.; Otero-Arteseros, M.; Rubio-Bolívar, F.J.; Peinado, H.; Elorza-Fernández, D.; Gómez, E.J.; Quintana-Díaz, M.; Sánchez-Gonzalez, P. SIMUNEO: Control and Monitoring System for Lung Ultrasound Examination and Treatment of Neonatal Pneumothorax and Thoracic Effusion. Sensors 2023, 23, 5966. [Google Scholar] [CrossRef]

- Pietersen, P.I.; Madsen, K.R.; Graumann, O.; Konge, L.; Nielsen, B.U.; Laursen, C.B. Lung Ultrasound Training: A Systematic Review of Published Literature in Clinical Lung Ultrasound Training. Crit. Ultrasound J. 2018, 10, 23. [Google Scholar] [CrossRef]

- Marini, T.J.; Rubens, D.J.; Zhao, Y.T.; Weis, J.; O’Connor, T.P.; Novak, W.H.; Kaproth-Joslin, K.A. Lung Ultrasound: The Essentials. Radiol. Cardiothorac. Imaging 2021, 3, e200564. [Google Scholar] [CrossRef]

- Lichtenstein, D.A.; Mezière, G.A.; Lagoueyte, J.-F.; Biderman, P.; Goldstein, I.; Gepner, A. A-Lines and B-Lines. Chest 2009, 136, 1014–1020. [Google Scholar] [CrossRef] [PubMed]

- Bhoil, R.; Ahluwalia, A.; Chopra, R.; Surya, M.; Bhoil, S. Signs and Lines in Lung Ultrasound. J. Ultrason. 2021, 21, e225–e233. [Google Scholar] [CrossRef] [PubMed]

- Demi, M.; Soldati, G.; Ramalli, A. Lung Ultrasound Artifacts Interpreted as Pathology Footprints. Diagnostics 2023, 13, 1139. [Google Scholar] [CrossRef] [PubMed]

- WebGL Overview—The Khronos Group Inc. Available online: https://www.khronos.org/webgl/ (accessed on 28 June 2023).

- Soldati, E.; Roseren, F.; Guenoun, D.; Mancini, L.; Catelli, E.; Prati, S.; Sciutto, G.; Vicente, J.; Iotti, S.; Bendahan, D.; et al. Multiscale Femoral Neck Imaging and Multimodal Trabeculae Quality Characterization in an Osteoporotic Bone Sample. Mater. Basel Switz. 2022, 15, 8048. [Google Scholar] [CrossRef]

- Borzęcki, M.; Skurski, A.; Kamiński, M.; Napieralski, A.; Kasprzak, J.; Lipiec, P. Applications of Ray-Casting in Medical Imaging. In Information Technologies in Biomedicine, Volume 3; Piętka, E., Kawa, J., Wieclawek, W., Eds.; Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2014; Volume 283, pp. 3–14. ISBN 978-3-319-06592-2. [Google Scholar]

- Piórkowski, A.; Kempny, A. The Transesophageal Echocardiography Simulator Based on Computed Tomography Images. IEEE Trans. Biomed. Eng. 2013, 60, 292–299.

- Zhu, M.; Salcudean, S.E. Real-Time Image-Based B-Mode Ultrasound Image Simulation of Needles Using Tensor-Product Interpolation. IEEE Trans. Med. Imaging 2011, 30, 1391–1400.

- Shams, R.; Hartley, R.; Navab, N. Real-Time Simulation of Medical Ultrasound from CT Images. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2008; Lecture Notes in Computer Science; Metaxas, D., Axel, L., Fichtinger, G., Székely, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; Volume 5242, pp. 734–741. ISBN 978-3-540-85989-5.

- Kutter, O.; Shams, R.; Navab, N. Visualization and GPU-Accelerated Simulation of Medical Ultrasound from CT Images. Comput. Methods Programs Biomed. 2009, 94, 250–266.

- Reichl, T.; Passenger, J.; Acosta, O.; Salvado, O. Ultrasound Goes GPU: Real-Time Simulation Using CUDA; Miga, M.I., Wong, K.H., Eds.; Society of Photo-Optical Instrumentation Engineers (SPIE): Lake Buena Vista, FL, USA, 2009; p. 726116.

- Dillenseger, J.-L.; Laguitton, S.; Delabrousse, É. Fast Simulation of Ultrasound Images from a CT Volume. Comput. Biol. Med. 2009, 39, 180–186.

- Bamber, J.C.; Dickinson, R.J. Ultrasonic B-Scanning: A Computer Simulation. Phys. Med. Biol. 1980, 25, 463–479.

- Gjerald, S.U.; Brekken, R.; Hergum, T.; D’hooge, J. Real-Time Ultrasound Simulation Using the GPU. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2012, 59, 885–892.

- Burger, B.; Bettinghausen, S.; Radle, M.; Hesser, J. Real-Time GPU-Based Ultrasound Simulation Using Deformable Mesh Models. IEEE Trans. Med. Imaging 2013, 32, 609–618.

- Daz 3D—3D Models and 3D Software. Available online: https://www.daz3d.com/ (accessed on 28 June 2023).

- Anatomy 4 Pro Bundle. Available online: https://www.daz3d.com/anatomy-4-pro-bundle (accessed on 28 June 2023).

- Unity Real-Time Development Platform. Available online: https://www.unity.com (accessed on 28 June 2023).

- Von Haxthausen, F.; Rüger, C.; Sieren, M.M.; Kloeckner, R.; Ernst, F. Augmenting Image-Guided Procedures through In Situ Visualization of 3D Ultrasound via a Head-Mounted Display. Sensors 2023, 23, 2168.

- Korzeniowski, P.; Plotka, S.; Brawura-Biskupski-Samaha, R.; Sitek, A. Virtual Reality Simulator for Fetoscopic Spina Bifida Repair Surgery. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; IEEE: Kyoto, Japan, 2022; pp. 401–406.

- Korzeniowski, P.; Chacon, C.S.; Russell, V.R.; Clarke, S.A.; Bello, F. Virtual Reality Simulator for Pediatric Laparoscopic Inguinal Hernia Repair. J. Laparoendosc. Adv. Surg. Tech. A 2021, 31, 1322–1330.

- Gustavson, S. Simplex Noise Demystified; Linköping University: Linköping, Sweden, 2005.

- Huijssen, J.; Verweij, M.D. An Iterative Method for the Computation of Nonlinear, Wide-Angle, Pulsed Acoustic Fields of Medical Diagnostic Transducers. J. Acoust. Soc. Am. 2010, 127, 33–44.

- Soulioti, D.E. Ultrasound in Reverberating and Aberrating Environments: Applications to Human Transcranial, Transabdominal, and Super-Resolution Imaging. Doctoral Dissertation, Department of Biomedical Engineering of the University of North Carolina at Chapel Hill and North Carolina State University, Chapel Hill, NC, USA, 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).