Abstract

The widespread popularity of digital technology has enabled the rapid dissemination of news. However, it has also led to the emergence of “fake news” and the development of a media ecosystem with serious prejudices. Early warnings about the sources of fake news make it easier to prevent its spread. Therefore, the issue of understanding and evaluating the credibility of media has received increasing attention. This work proposes a model for evaluating news media credibility called MiBeMC, which mimics the structure of human verification behavior online. Specifically, we first construct an intra-module feature extractor to simulate the semantic analysis that humans perform when reading information. Then, we design a similarity module to mimic the process of obtaining additional information. We also construct an aggregation module, which simulates human verification of correlated content. Finally, we apply a regularized adversarial training strategy to train the MiBeMC model. The ablation study results demonstrate the effectiveness of MiBeMC. On the CLEF-2023 CheckThat! Lab Task 4 development and test datasets, MiBeMC outperforms state-of-the-art baseline methods.

1. Introduction

With the widespread use of social networks by the public, the digital dissemination of information has subtly changed the overall structure of the public media space. Users can easily obtain a large amount of information via WeChat, Sina Weibo, personal media, and other network platforms. Meanwhile, with the continuous improvement of modern technologies such as the Internet of Things and its related services [1], the channels for information acquisition and dissemination will become more diverse and convenient. However, while enjoying this convenience, the public must also face a series of problems brought on by purposeful dissemination of information [2]. Nowadays, the spread of false, biased, and promotional content online has made it impossible for fact-checkers to verify every suspicious article. Therefore, many researchers are shifting their attention to analyzing entire news organizations, assessing the probability of credibility of the content at the time of news release by examining the reliability of the source of the news.

There is currently insufficient in-depth research in this field, which is mainly related to false information, false news detection, and media bias. Researchers in the field of natural language processing have made many attempts to solve this problem, such as stance detection [3], rumor detection [4], and the use of fact-checking websites such as Snopes, FactCheck, and Politifact [5]. However, the verification speed of these methods is far from adequate given the current quantity and dissemination speed of information. They cannot recognize credibility in the early stage of information dissemination and therefore cannot effectively block the early spread of false information.

The majority of audiences base their evaluations of media credibility on their own cognitive processes and on information retrieval. Additionally, some readers may rely on the results of third-party evaluation, such as those provided by Ad Fontes Media or Media Bias/Fact Check [6]. Although these websites and the humans behind them often provide effective credibility labels, they also require subjective explanations from media producers and may not be suitable for other media ecosystems (for instance, the methods used for evaluation in the United States may not be applicable to other countries, such as China), and they can only expand at the speed at which human evaluators assess media websites. Therefore, people are increasingly interested in finding more effective computational methods for evaluating media credibility.

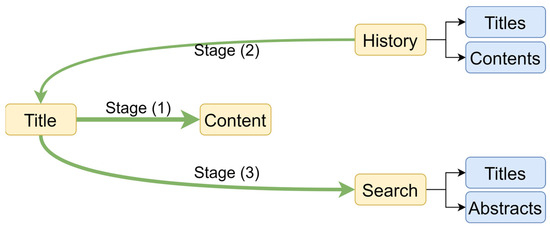

Inspired by [7], we propose the MiBeMC model to better mimic human behavior in evaluating media credibility on the internet. In creating MiBeMC, we started from the belief that information modules typically have high affinity and mutual support, which is consistent with previous research on modal similarity or consistency [8,9]. Figure 1 shows the stages in which humans evaluate media credibility on the internet, using a news example with two components, i.e., title and body content. The evaluation behavior can be divided into three stages: (1) the reader reads the title and content on a page of a website; (2) after paying attention to the news outlet, the reader associates the credibility of the current article with news from the same outlet that they have seen before; and (3) the reader searches for other news on related information based on the title. In stage (1), readers generally conduct a preliminary evaluation of the credibility of the information published by the media based on the title and content. In stage (2), readers generally further evaluate the credibility of the media outlet based on historical articles. In stage (3), readers generally search for relevant topic information and complete their final evaluation of media information based on relevant titles and content. After going through multiple rounds of fact verification on the information released by the same media outlet, readers often make the final judgment on the credibility of the media. Thus, the media credibility verification behavior of readers can be described as proceeding from the information itself to historical information to relevant information, i.e., title, content, historical information, similar information (as shown in Figure 2).

Figure 1.

Three stages of evaluating the credibility of news media by a human user.

Figure 2.

Media credibility verification behavior of humans.

Based on the above observations, we propose the MiBeMC model, which includes intra-module feature extractors, a similarity module, an interaction module, an aggregation module, and a credibility evaluation module. The feature extractor module performs semantic analysis on information. The similarity module mimics searching for and recalling information in order to obtain relevant additional information. The interaction module uses an inter-component feature extractor, which aims to simulate how readers understand and validate each pair of news components. The goal of the aggregation module is to simulate the human user’s verification process from local parts to the entire content. Finally, we complete the credibility evaluation of news media according to the features produced by the aggregation module.

Our main contributions can be summarized as follows:

- To the best of our knowledge, this is the first work to mimic the human process of verifying news outlets in media credibility evaluation.

- We proposed the MiBeMC model by simulating the factual verification behavior of humans in order to examine the factuality of the reporting of the news media. Due to the finer granularity of modules compared to modalities, this method is more suitable for news media credibility evaluation in complex scenarios. The experimental results show the superiority of the MiBeMC performance over other algorithms on the CLEF Task 4 development and the test dataset.

- We introduced an algorithm framework based on regularized adversarial training, and we used the same strategy to experimentally analyze the performance of RoBERTa [10] and DeBERTa [11] within our proposed method. The experiments demonstrated the effectiveness of the regularized training strategy in this media-level task.

- The title of a news report often reflects the author’s high level of semantic understanding of the content. The opening content is a further expansion of the title, while the ending content usually includes a re-summary of the author’s attitude. Based on this discovery, we experimentally compared the differences in input combinations of different large language models on different content.

2. Related Work

Due to the appearance of “fake news” and other phenomena as well as the development of the media ecosystem with serious prejudice, humans have refocused on understanding media credibility. In earlier work, the credibility evaluation of news media sources was usually based on detecting the textual content of various news media websites to determine the credibility of their news. Due to the lack of credibility labels for media level [6], further research on this task has been delayed.

In 2018, Baly et al. used labels from Media Bias/Fact Check (MBFC) and were the first to propose a media credibility evaluation task [5]. They treated all news published by a news website as a whole and used ordinal regression models to evaluate media credibility and political bias. They also validated the impact of different features on performance via many experiments. They found that text content is the feature with the greatest impact on performance, and that the use of multitask joint models can also significantly improve overall performance. Specifically, their experimental results on manually fact-checked and classified datasets showed that using text representations achieves a credibility prediction accuracy of only 65–71% and a bias prediction accuracy of 70–85%.

In addition to textual content information, it is also possible to use relevant features, such as domain names, certificates, and hosting attributes of news media websites [12], webpage design [13], and websites linked [14], in order to estimate the credibility of news media. By using the social background information of news media websites, the internal connection between news websites and social background information was studied [15]. Hounsel et al. made predictions based on the domain, the certificate, and the hosting information from the website infrastructure as potential indicators of source reliability [12]. Bozhanova et al. predicted the factuality of news outlets using observations about user attention in their YouTube channels [16].

Other research used the similarity of news media websites to evaluate the credibility of news outlets. A reliable feature for identifying similarity is how closely the audiences of different news media overlap [17]. Panayotov et al. proposed a model based on graph neural networks, which models audience overlap between news outlets to predict credibility and stance [18].

Some news media credibility evaluation works used prior knowledge. Hardalov et al. believed that the public is more inclined to believe in the results of manual factual verification [19]. Therefore, they proposed to verify whether the input content has been verified by a professional factual auditor and return an article explaining its evaluation results as a pipeline for performing automatic factual verification.

In recent research, Leburu et al. explored the effectiveness of stylometric features combined with a Random Forest classifier to evaluate the factuality of news media reporting. They proposed to leverage writing styles as a distinctive marker to differentiate between accurate and less factual news sources [20]. Tran et al. aimed to maximize the amount of training data and developed a RoBERTa model that learns the factual reporting patterns of news articles and news sources [21].

3. Methodology

The architecture of the proposed MiBeMC for media credibility evaluation is presented in this section. MiBeMC evaluates the credibility of media by simulating the human’s process of reading and verifying information, which can effectively extract and integrate features within and between different parts.

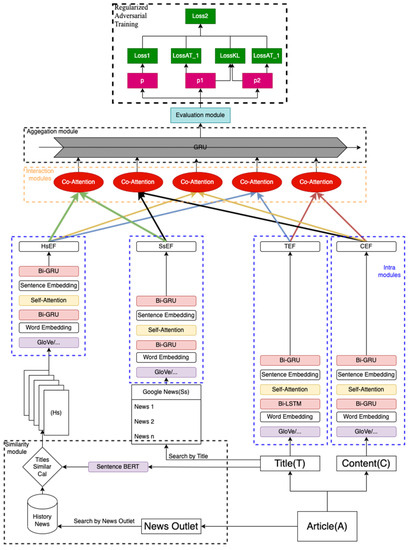

As shown in Figure 3, MiBeMC has six modules: two different intra-module feature extractors, one similarity module, one interaction module, one aggregation module, and one media credibility evaluation module. Specifically, these modules model the three stages of human evaluation behavior as the following five steps: (1) the intra-module feature extractors extract the latent semantics of each component of the information, (2) the similarity module obtains additional information through search operations, (3) the interaction module simulates the reading and understanding behavior between intra modules, (4) the aggregation module integrates these interaction features by mimicking human verification behavior, and (5) the media credibility evaluation module predicts the factuality label of the news outlet based on the output of the aggregation module.

Figure 3.

MiBeMC architecture.

3.1. Problem Formulation

To better simulate the human user’s process of reading information and verifying operation, we divide a news article into two components: the title and the content.

Following the definition in CLEF 2023 CheckThat! Task 4 [22], we consider news media credibility evaluation as an ordinal classification task that corresponds to three levels of credibility at the media level: low, mixed, and high. A news article ($X$) can be divided into two elements: title ($T$) and content ($C$), which are formally written as $X = \{T, C\}$. Our task is to find an evaluator $f$ that can model and integrate the information between different components, thereby evaluating the media into three levels of credibility: low ($y = 0$), mixed ($y = 1$), and high ($y = 2$), i.e., $f(X) \rightarrow y \in \{0, 1, 2\}$.

3.2. Data Preprocessing

For the noise in news content, we use Clean-Text [23] to clear the newline characters, ASCII codes, URLs, e-mail addresses, and punctuation in the title and the content, replace all numbers with the special token [number], and convert all uppercase letters to lowercase ones.
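The cleaning steps above can be approximated with the Python standard library. The following is a minimal sketch and not the Clean-Text implementation used in this work: the regular expressions and the function name `preprocess` are illustrative assumptions, and the removal of ASCII codes is not covered.

```python
import re
import string

# Illustrative patterns (assumptions, not the Clean-Text internals).
URL_RE = re.compile(r"https?://\S+|www\.\S+")
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
NUMBER_RE = re.compile(r"\d+(?:[.,]\d+)*")
PUNCT_TO_SPACE = str.maketrans({ch: " " for ch in string.punctuation})
_SENTINEL = "\x00"  # placeholder that survives punctuation stripping


def preprocess(text: str) -> str:
    """Lowercase, drop URLs/e-mails/punctuation/newlines, mask numbers."""
    text = text.lower()
    text = URL_RE.sub(" ", text)
    text = EMAIL_RE.sub(" ", text)
    # Mark numbers first so that the brackets of [number] are not stripped.
    text = NUMBER_RE.sub(f" {_SENTINEL} ", text)
    text = text.translate(PUNCT_TO_SPACE)
    text = text.replace(_SENTINEL, "[number]")
    # Splitting on whitespace also removes newline characters.
    return " ".join(text.split())
```

URLs and e-mail addresses are removed before numbers are masked, so digits inside a URL do not produce stray `[number]` tokens.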

3.3. Information Association Similarity Module

This module simulates the operation of humans obtaining additional information from search pages and historical memories.

For the content related to the search pages, we use the title as the search term in order to obtain the results returned from the first page of Google News. We only use the title and the truncation content on the results page. We use the special separator [CLS] to connect each news article.

For historical memories, we utilize the historical news released by the same media. We first vectorize all titles using Sentence-BERT [24], and then we choose the 10 most similar articles based on cosine similarity. For similar search results, we use the special separator [CLS] to connect each news article. In addition, considering the characteristics of news text itself, we found that the beginning and end parts of the news also provide relatively important semantic information. In particular, the first sentence or paragraph of a news article often serves as the introduction to the entire article, briefly revealing the content of the article and reinterpreting the essential semantics of the title. The last sentence or the last paragraph of the news contains a summary of the entire news article or the major points that the author wants to convey. Therefore, we adopted three different combinations of content truncation methods in order to recombine the text and to feed it into the subsequent network.

- Head truncation. The content of each news article from the media is truncated from its start to a specified position, and the resulting segments are connected with the special separator [SEP].

- Tail truncation. The content of each news article from the media is truncated from a specified position counted backward from its end up to the end, and the resulting segments are connected with the special separator [SEP].

- Middle content truncation. The content of each news article from the media is truncated from a specified position for a fixed length, and the resulting segments are connected with the special separator [SEP].
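The similarity-based selection and the three truncation methods can be sketched in plain Python as follows. This is a hedged illustration: the function names, the character-level truncation, and the centering of the middle window when no start position is given are assumptions. The title vectors are taken as given (for example, from Sentence-BERT).

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def top_k_similar(query_vec, candidate_vecs, k=10):
    """Indices of the k candidates most similar to the query."""
    order = sorted(
        range(len(candidate_vecs)),
        key=lambda i: cosine(query_vec, candidate_vecs[i]),
        reverse=True,
    )
    return order[:k]


def truncate(content, head=0, tail=0, mid_len=0, mid_start=None):
    """Keep head, middle, and/or tail characters of one article."""
    parts = []
    if head:
        parts.append(content[:head])
    if mid_len:
        # Assumption: center the middle window unless a start is given.
        start = mid_start if mid_start is not None else max((len(content) - mid_len) // 2, 0)
        parts.append(content[start:start + mid_len])
    if tail:
        parts.append(content[-tail:])
    return " ".join(parts)


def build_input(contents, **kwargs):
    """Truncate every article and connect the pieces with [SEP]."""
    return " [SEP] ".join(truncate(c, **kwargs) for c in contents)
```

For articles shorter than the requested windows, the head, middle, and tail segments may overlap; a production version would likely deduplicate them.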

3.4. Feature Extraction Intra Module

In this work, we use two different intra-module feature extractors to mine the latent semantics of the title, content, search news results, and similar historical news; the resulting extractors are written as TEF, CEF, SsEF, and HsEF, respectively. Specifically, each feature extractor has one attention layer and, at most, two Recurrent Neural Network (RNN) layers.

Because all textual articles consist of sentences and words, we naturally write the title and content as $T = \{t_1, \dots, t_I\}$ and $C = \{c_1, \dots, c_J\}$, where $I$ and $J$ are the numbers of title and content sentences, and $t_i = \{w_1^i, \dots, w_{M_i}^i\}$ is a sentence with $M_i$ words. Similarly, $c_j = \{w_1^j, \dots, w_{M_j}^j\}$ represents a sentence containing $M_j$ words. In this work, we limit the title length to one, i.e., $I = 1$. In addition, we introduce Glove [25] to vectorize each word with dimension $d$.

Many studies have shown the significant ability of hierarchical structures to extract features [9,26], so we constructed a hierarchical attention network for TEF, CEF, SsEF, and HsEF in order to capture the internal semantic information from words to sentences. Just as humans read a news text, it reads the entire text from local words to whole sentences.

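Taking content elements as an instance and considering information loss during long sentence input, we use two Bi-GRU [27] layers to encode words and sentences separately. The first layer is used for word-level encoding and mimics the human forward and reverse reading process:

$$h_t^j = \left[\overrightarrow{\mathrm{GRU}}\left(w_t^j\right), \overleftarrow{\mathrm{GRU}}\left(w_t^j\right)\right], \quad t \in \{1, \dots, M_j\} \tag{1}$$

where $j$ denotes the $j$-th sentence of the content, $M_j$ is the number of words in that sentence, and $h_t^j$ is the output feature of the Bi-GRU in both directions before and after the word $w_t^j$.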

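Due to the strong ability of the attention mechanism in natural language processing [26,28], we use an attention layer to redistribute the weight of words according to their importance. Each word’s weight can be calculated as follows:

$$u_t^j = \tanh\left(W_w h_t^j + b_w\right) \tag{2}$$

$$\alpha_t^j = \frac{\exp\left(\left(u_t^j\right)^{\top} u_w\right)}{\sum_{k=1}^{M_j} \exp\left(\left(u_k^j\right)^{\top} u_w\right)} \tag{3}$$

where $W_w$, $b_w$, and $u_w$ are the learnable parameters, $\alpha_t^j$ is the attention weight of the word $w_t^j$ (the $t$-th of the $M_j$ words in sentence $j$), and $u_t^j$ is a hidden representation of the word feature $h_t^j$ achieved from a fully connected layer with $\tanh$ activation. Therefore, the weighted sentence embedding can be calculated as follows:

$$v^j = \sum_{t=1}^{M_j} \alpha_t^j h_t^j \tag{4}$$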

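After the attention layer, we apply the second Bi-GRU layer to explore the contextual information of sentences by simulating the human reading process. Therefore, the feature of the $j$-th sentence can be expressed as follows:

$$s^j = \left[\overrightarrow{\mathrm{GRU}}\left(v^j\right), \overleftarrow{\mathrm{GRU}}\left(v^j\right)\right], \quad j \in \{1, \dots, J\} \tag{5}$$

where $v^j$ is the weighted embedding of the $j$-th sentence and $s^j$ is the output of the Bi-GRU in two directions around that sentence.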
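The word-level attention pooling described above can be illustrated with a small, dependency-free sketch. It assumes the standard hierarchical-attention form (a tanh projection, a dot product with a context vector, a softmax, and a weighted sum). The names `attention_pool`, `W`, `b`, and `u_w` are illustrative, and in the actual model these parameters would be learned.

```python
import math


def attention_pool(hidden, W, b, u_w):
    """Pool word features into one sentence vector with attention.

    hidden: list of word feature vectors (each of length d)
    W:      k x d projection matrix, b: bias of length k
    u_w:    context vector of length k
    Returns (attention weights, pooled sentence vector).
    """
    scores = []
    for h in hidden:
        # Hidden representation u = tanh(W h + b).
        u = [
            math.tanh(sum(W[r][c] * h[c] for c in range(len(h))) + b[r])
            for r in range(len(W))
        ]
        scores.append(sum(ui * ci for ui, ci in zip(u, u_w)))
    # Numerically stable softmax over the words of the sentence.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    # Weighted sum of the word features.
    dim = len(hidden[0])
    pooled = [sum(a * h[c] for a, h in zip(alphas, hidden)) for c in range(dim)]
    return alphas, pooled
```

With all-zero parameters every word receives the same weight, so the pooled vector reduces to the mean of the word features, which is a convenient sanity check.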

The structures of SsEF and HsEF are the same as that of CEF. TEF can be considered a variant of CEF. To give readers a direct understanding of the article, a news editor often needs to describe the entire story in language that is as concise as possible. Therefore, a news title usually contains the most critical semantic information, which is a highly condensed form of the overall content. For this reason, for a news title, we use Bi-LSTM instead of Bi-GRU because the LSTM unit contains more parameters.

3.5. Intra Feature Interaction Module

This module simulates the reading and understanding process of humans and comprises five interaction modules over the four intra-module features. As shown in Figure 1, different news articles on the internet can complement and verify each other, which is also one of the bases that humans use to distinguish fake news and low-credibility news outlets more effectively [29,30]. In addition, research has shown that mining information from two different components is effective [31,32]. Therefore, replicating the human reading and understanding process of news content components, we combine the components in pairs and then use feature extractors between the components to separately mine the interaction affinity of each combination.

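In this work, we use parallel co-attention [30] as the intra-feature interaction module and simulate the understanding process of each combination according to its interaction affinity, such as the complementarity of the title and the content. The parallel attention operation computes attention weights for each of the two inputs based on its interaction affinity with the other. The higher the interaction affinity, the higher the media credibility to the human. The specific module for two different intra modules is shown in Equations (6)–(13). We calculate the interaction matrix $F$ to measure the interaction affinity between two different intra modules:

$$F = \tanh\left(A^{\top} W_l B\right) \tag{6}$$

where $A \in \mathbb{R}^{d \times N}$ and $B \in \mathbb{R}^{d \times Q}$ are the inputs from the two different modules, $N$ and $Q$ are their respective numbers of sentences, and $W_l \in \mathbb{R}^{d \times d}$ is a learnable matrix. For the case where four intra modules are accessible, $A$ and $B$ are taken from the outputs of TEF, CEF, SsEF, and HsEF. The new feature representations of $A$ and $B$, written $\hat{A}$ and $\hat{B}$, are calculated as follows:

$$H^A = \tanh\left(W_A A + \left(W_B B\right) F^{\top}\right) \tag{7}$$

$$H^B = \tanh\left(W_B B + \left(W_A A\right) F\right) \tag{8}$$

$$a^A = \mathrm{softmax}\left(w_{hA}^{\top} H^A\right) \tag{9}$$

$$a^B = \mathrm{softmax}\left(w_{hB}^{\top} H^B\right) \tag{10}$$

$$\hat{A} = \sum_{n=1}^{N} a_n^A A_n \tag{11}$$

$$\hat{B} = \sum_{q=1}^{Q} a_q^B B_q \tag{12}$$

$$m = \left[\hat{A}, \hat{B}\right] \tag{13}$$

where $W_A, W_B \in \mathbb{R}^{k \times d}$ and $w_{hA}, w_{hB} \in \mathbb{R}^{k}$ are the learnable weights, $a^A \in \mathbb{R}^{N}$ and $a^B \in \mathbb{R}^{Q}$, respectively, represent the interaction co-attention scores of $A$ and $B$, $\hat{A}$ and $\hat{B}$ are the outputs of $A$ and $B$, which contain the weighted information of $A$ and $B$, and $m$ is the feature output of the attention operation with two different inputs.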

3.6. Aggregation Module

We connect the outputs of the interaction modules in a deliberately chosen order and pass them to the aggregation module. By this operation, we mimic the fact-verification behavior of humans, namely, the order shown in Figure 2. Therefore, the news content feature representation can be formalized as $M = [m_1, m_2, \dots, m_5]$, where $m_i$ is the output of the $i$-th interaction module in that order. We use a GRU layer as the aggregator to aggregate the features of the different combinations in order to reflect the reading and verification sequences of humans. Because GRUs suffer from long-term dependency issues, we use the reverse order of human validation behavior as input in order to reduce the impact of long-term dependency from the perspective of data input:

$$h = \mathrm{GRU}\left(m_5, m_4, \dots, m_1\right)$$

where $h$ represents the final news content feature embedding that integrates the different interaction modules.

3.7. Media Credibility Evaluation Module

We apply a three-layer MLP to evaluate the credibility level of the news media through the aggregated feature $h$:

$$\hat{y} = \mathrm{MLP}(h)$$

where $\hat{y}$ represents the evaluation probability of credibility, and $\mathrm{MLP}$ represents the three MLP layers used to detect labels.

In real scenarios of media credibility evaluation, high-credibility media often account for the majority, while mixed- and low-credibility media account for a relatively small proportion, which is also reflected in the public dataset. To address this, we used the multi-category Focal Loss [33] in order to alleviate the problem of category imbalance. This loss function can effectively reduce the weight of simple samples in training so that the model can pay more attention to hard and error-prone samples. We provide each category with a different α coefficient value in Focal Loss, such as a high category α coefficient of 0.35:

$$FL(p) = -\alpha \left(1 - p\right)^{\gamma} \log(p)$$

where $p$ is the prediction probability for the credibility level label of the sample, $\alpha$ is the weight coefficient corresponding to each category, and $\gamma$ is used to control the relative importance of the loss for different samples, reflecting the difference between hard samples and easy samples.
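As a worked example of the multi-category Focal Loss, the sketch below evaluates the loss for a single sample. The function name and the clamping constant are assumptions. The class weights $\alpha = [0.1, 0.5, 0.4]$ and $\gamma = 2$ are the values reported in the implementation details, and the labels follow the dataset convention (0 = low, 1 = mixed, 2 = high).

```python
import math


def focal_loss(probs, label, alpha, gamma=2.0):
    """Focal Loss for one sample.

    probs: predicted class probabilities (summing to 1)
    label: index of the gold class
    alpha: per-class weight coefficients
    gamma: focusing parameter; larger values down-weight easy samples
    """
    # Clamp to avoid log(0); the constant is an illustrative choice.
    p_t = min(max(probs[label], 1e-12), 1.0)
    return -alpha[label] * (1.0 - p_t) ** gamma * math.log(p_t)


ALPHA = [0.1, 0.5, 0.4]

# An easy sample (gold class predicted with p = 0.7) contributes far
# less than a hard one (gold class predicted with p = 0.1).
easy = focal_loss([0.1, 0.2, 0.7], label=2, alpha=ALPHA)
hard = focal_loss([0.7, 0.2, 0.1], label=2, alpha=ALPHA)
```

Here the easy sample yields about 0.0128 and the hard sample about 0.746, which shows how the $(1 - p)^{\gamma}$ factor shifts the training signal toward hard samples.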

3.8. Regularized Adversarial Training

Despite data preprocessing on the input text, many of the articles in news media frequently contain a lot of duplicate information. To improve the model’s generalization capability and to lessen the effect of redundant information on the performance of the model, we include adversarial training. Adversarial training is a training strategy that improves model robustness by introducing adversarial samples into the training process. It also has an obvious disadvantage in that it can easily lead to model performance degradation due to inconsistencies between the training and the testing stages. Ni et al. proposed a regularization training strategy R-AT [34], which introduces Dropout and regularizes the output probability distribution of different submodules to maintain consistency under the same adversarial sample. Specifically, R-AT generates adversarial samples by dynamically perturbing the input embedding vector, and then passes the adversarial samples to two submodules referencing Dropout in order to generate two probability distributions. By reducing the bidirectional KL divergence of two output probability distributions, R-AT regularizes model predictions.

In this work, we adopt the R-AT strategy and use the Focal Loss function to replace the original cross-entropy loss function. In each training step, the forward propagation and backward calculation of the original samples are completed first. Following the original R-AT strategy configuration, we use the Fast Gradient Method (FGM) [35] proposed by Miyato et al. in order to perturb the language model layer of the model and to obtain two adversarial samples. We then calculate the corresponding classification loss and the KL divergence of the adversarial samples as follows:

$$\mathcal{L}_{FL} = \frac{1}{2}\left[FL\left(P_1, y\right) + FL\left(P_2, y\right)\right]$$

$$\mathcal{L}_{KL} = \frac{1}{2}\left[D_{KL}\left(P_1 \,\|\, P_2\right) + D_{KL}\left(P_2 \,\|\, P_1\right)\right]$$

Finally, the loss of the second backward propagation is obtained:

$$\mathcal{L} = \mathcal{L}_{FL} + k \cdot \mathcal{L}_{KL}$$

where $P_1$ and $P_2$ are the outputs of the model using FGM, $y$ is the gold label, and $D_{KL}$ denotes the KL distance function. We rewrite the original loss function of R-AT and change the original fixed weight parameter into an adaptive parameter $k$, which is used to adjust the weights of the different loss functions during the second forward propagation.
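To make the regularization term concrete, the following sketch computes the bidirectional KL divergence between two output distributions and combines it with two classification losses. The combination shown (the mean classification loss plus `k` times the symmetric KL term) is an assumption based on the description of R-AT, not a confirmed reproduction of the loss used here. All function names are illustrative.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def bidirectional_kl(p, q):
    """Symmetric KL term that pulls the two sub-model outputs together."""
    return 0.5 * (kl_divergence(p, q) + kl_divergence(q, p))


def regularized_loss(cls_loss_1, cls_loss_2, p1, p2, k):
    """Assumed combination: mean classification loss + k * symmetric KL."""
    return 0.5 * (cls_loss_1 + cls_loss_2) + k * bidirectional_kl(p1, p2)


# Two Dropout sub-models seeing the same adversarial sample.
p1 = [0.2, 0.3, 0.5]
p2 = [0.1, 0.3, 0.6]
loss = regularized_loss(0.8, 0.6, p1, p2, k=0.3)
```

When the two distributions coincide, the KL term vanishes and only the classification loss remains, which is the consistency that the regularizer encourages.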

4. Experiment and Parameter Setup

4.1. Dataset

In previous work, the credibility of news media was usually estimated from the labels of known true/false information published by the target media, because media-level credibility labels were lacking [36]. In recent years, with the improvement of data labels, this problem can be studied as an independent task, and the CheckThat! Lab provides a comprehensive dataset and research platform for it. In this article, we used the CheckThat! Task 4 dataset in CLEF 2023 [22] to focus on media credibility assessment research, which requires predicting the level of credibility at the media level, including low, mixed, and high levels. Each record in this dataset includes the news media, the news article list, the corresponding credibility label text (high, mixed, low), and the label number (2, 1, 0). The dataset consists of 1189 media outlet sources and 10k news articles. The news article list contains the title and the content, which are used to predict the credibility of their source. The label distributions of the training, development, and test partitions are shown in Table 1.

Table 1.

CLEF-2023 CheckThat! Lab task 4 dataset.

4.2. Evaluation Metric

Following the CLEF-2023 CheckThat! Lab Task 4, we treat media credibility evaluation as an ordinal classification task, and, thus, we use Mean Absolute Error (MAE) as the evaluation metric. The smaller the MAE value, the smaller the difference between the predicted label and the gold label, indicating that the predicted results are more accurate.

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| \hat{y}_i - y_i \right|$$

where $\hat{y}_i$ is the predicted label, $y_i$ is the ground-truth label, and $n$ is the number of media outlets.
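A short worked example shows how MAE behaves on the ordinal labels (0 = low, 1 = mixed, 2 = high). The function name and the label values are made up for illustration.

```python
def mean_absolute_error(predicted, gold):
    """Mean absolute difference between predicted and gold labels."""
    if len(predicted) != len(gold) or not gold:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - g) for p, g in zip(predicted, gold)) / len(gold)


# Four hypothetical outlets: two exact hits and two off-by-one errors.
score = mean_absolute_error([2, 1, 0, 2], [2, 0, 0, 1])  # 0.5
```

Because the labels are ordinal, predicting high for a low-credibility outlet costs 2, whereas predicting mixed costs 1, so the metric penalizes distant errors more heavily than accuracy would.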

4.3. Compared Models

We used seven compared models as benchmarks for this task, including four baseline models provided in CLEF-2023, which are Mid-label, Majority, Random, and N-gram. The other three models, named CUCPLUS, Accenture, and UBCS, are from the leaderboard. The CUCPLUS model is from our previous work [37] and achieved first place.

Although this competition has ended, some models on the leaderboard are currently unknown and not publicly available, so we only chose the three whose work has been published in the workshop notes in order to compare the results. We list all of the compared models with a brief description of each in Table 2.

Table 2.

Important comparison of the three models with a brief description.

4.4. Implementation Details

The proposed model is trained, tested, and evaluated inside the AutoDL platform. The PyTorch and scikit-learn libraries are used to create all models. CountVectorizer and Clean-Text libraries are used for data preprocessing.

We implemented all of the compared models and the proposed MiBeMC on an NVIDIA GeForce RTX 3090. We first encoded the text using Glove [25]. The word dimension was 300, the Bi-GRU unit’s dimension was 100, and the intra module output feature dimension was 768. We used the Adam optimizer [38]. The learning rate was $5 \times 10^{-4}$ and was adjusted by the cosine annealing algorithm, and the weight decay was $5 \times 10^{-3}$. The loss function was a multi-category Focal Loss with γ = 2 and α = [0.1, 0.5, 0.4]. The batch size was 8. The maximum text input length was 1024. During training, each batch of samples was randomly selected from the dataset across different media.
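For reference, the cosine annealing schedule mentioned above can be written in a few lines. The base learning rate of $5 \times 10^{-4}$ comes from the text, while the total number of steps and the minimum learning rate of 0 are assumptions, since they are not reported here.

```python
import math


def cosine_annealing_lr(step, total_steps, base_lr=5e-4, min_lr=0.0):
    """Learning rate at `step` under a single cosine annealing cycle."""
    cosine = math.cos(math.pi * step / total_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + cosine)


# The rate starts at base_lr, reaches half of it at the midpoint,
# and decays to min_lr at the end of the cycle.
schedule = [cosine_annealing_lr(s, total_steps=100) for s in (0, 50, 100)]
```

In PyTorch, the same curve is provided by `torch.optim.lr_scheduler.CosineAnnealingLR`, where `T_max` plays the role of `total_steps` and `eta_min` the role of `min_lr`.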

We also used the RoBERTa and DeBERTa instead of Glove to encode the text input. In this case, the word dimension was 768.

5. Results and Discussions

In this section, we conduct experiments on the CLEF 2023 Task 4 dataset [22] to evaluate the effectiveness of MiBeMC in evaluating media credibility. Specifically, we propose the following four research questions to guide the experiment:

- RQ1: Does MiBeMC perform better than the baseline and state-of-the-art methods in evaluating media credibility?

- RQ2: Does content truncation have an impact on model performance?

- RQ3: Is regularized adversarial training effective in the proposed method?

- RQ4: How effective is each module of MiBeMC in improving its performance?

5.1. Performance Comparison (RQ1)

In the previous sections, we described the proposed MiBeMC model, a public dataset for media credibility evaluation, and a performance evaluation metric. To answer RQ1, we conducted a comprehensive experiment on the CLEF-2023 task 4 dataset (a publicly available benchmark dataset). Table 3 shows the performance of each of the models. The experimental results show that the MiBeMC model has lower MAE values than the others. In this comparison experiment, the MiBeMC settings are as follows: using Glove to encode the text input, the hyperparameter k of the R-AT strategy is 0.3, and the content truncation strategy is to retain 50 characters at the beginning and the end of the article content, as well as the middle 100 characters. The other settings are the same as the default configurations.

Table 3.

Comparison of the performance of the proposed model with the compare models on the CLEF-2023 Task 4 dataset.

Our proposed model reduces the MAE by 0.606, 0.539, 0.339, and 0.198 compared to the baseline models Random, Middle class, Majority class, and N-gram, and by 0.022, 0.194, and 0.268 compared to the state-of-the-art models CUCPLUS, Accenture, and UBCS.

Compared to the modal-based models (CUCPLUS and Accenture), the MiBeMC model achieves better performance. This may be because these modal-based methods can lead to sub-optimal outcomes since they overlook human behavior in the reading and verification process. From the experiment results, it can be concluded that modeling the media credibility evaluation process from a component perspective combined with the human’s reading, understanding, and verification processes is effective because it can achieve an excellent performance compared to the modal-based methods.

In addition, the MiBeMC provides a complete solution for evaluating the credibility of the news outlet. Even if the number of history articles published by media organizations is small, or even if it is only one, the proposed model can obtain additional information based on the title and the content of the current article. The retrieval information based on the title and the history information based on the cosine similarity provide reliable additional relevant information. At the same time, regularized adversarial training is also used in order to reduce the impact of redundant data and to improve the performance of MiBeMC.

In the end, we also examined the performance of different pre-trained language models combined with our proposed model. Table 4 shows the algorithm performance after using RoBERTa and DeBERTa instead of Glove.

Table 4.

Performance of RoBERTa and DeBERTa instead of Glove on the CLEF-2023 Task 4 dataset.

The results in Table 4 show that after replacing Glove with a pre-trained language model, the MAE values are all decreased. We suggest that this phenomenon is due to the pre-trained language models having a stronger language processing ability and being more suitable for handling the complex task of media credibility evaluation. Through more careful observation, we find that DeBERTa is better than RoBERTa. This may be because DeBERTa’s virtual adversarial training strategy is consistent with the goal of the regularized adversarial training strategy that we used, both to reduce the impact of data redundancy and the mismatch between the training and the prediction. RoBERTa also preferred to focus on the token itself, whereas DeBERTa preferred special character dominance due to its enhanced mask decoder. This makes it effective for us to select special characters, such as [CLS] and [SEP], as feature vectors in data preprocessing as the context representation of the input sequence.

5.2. Study on Content Truncation (RQ2)

As mentioned in Section 3.3, the historical content published by news media can range from a few to tens of thousands of articles. To enable the model to focus on the most useful information, we use cosine similarity to limit the news articles to a maximum of 10. However, a single article contains many words, so we combined three different content truncation methods to reassemble the news content. To answer RQ2, we designed experiments with different truncation combinations to analyze the impact of content truncation on the performance of MiBeMC. Specifically, we conducted experiments on MiBeMC-RoBERTa and MiBeMC-DeBERTa, and the results are shown in Table 5. T represents the content being replaced by its title text, F represents the header truncation, I represents the middle content truncation, and L represents the tail truncation.
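The cosine-similarity cap on historical articles described above can be sketched as follows. This is an illustration only: it assumes each article has already been mapped to a fixed-length embedding vector, and the function names `cosine_similarity` and `select_top_k` are hypothetical, not taken from the authors' implementation.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def select_top_k(current_vec, history_vecs, k=10):
    """Return indices of the k historical articles most similar to the current one."""
    scored = [(cosine_similarity(current_vec, h), i)
              for i, h in enumerate(history_vecs)]
    # Sort by descending similarity; ties are broken by original position.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [i for _, i in scored[:k]]


# Toy 2-D embeddings (assumed values) for one current article and four past ones.
current = [1.0, 0.0]
history = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
kept = select_top_k(current, history, k=2)
```

When an outlet has fewer than k articles, the slice simply returns all of them, which matches the case of a media organization with very little history.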

Table 5.

Performance of the MiBeMC-RoBERTa and the MiBeMC-DeBERTa with a combination of different truncated methods on the CLEF-2023 Task 4 dataset.

As shown in Table 5, using the title alone as input already achieves good performance, and DeBERTa performs better than RoBERTa in most cases. Most truncation combinations greatly reduce the performance of the model, and only a few specific combinations improve it. However, it should be noted that for several combinations the improvement is significant, with the MAE values achieved ranging from 0.268 to 0.243. We believe that this is related to the characteristics of news articles themselves. The title of an article already summarizes its content, while the beginning and the ending are usually shaped by the writing habits of the media editors. The middle content is often the main basis for judging untrustworthy content. Therefore, it is necessary to determine the truncation combination of news content. Content truncation also indirectly reduces redundant information, so that the input better represents the core information of the whole piece of news.
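The truncation combinations discussed above can be illustrated with a short sketch. It assumes that F keeps the first n tokens of the content, I keeps the n tokens around the middle, L keeps the last n tokens, and T inserts the title; this interpretation, the token budget n, and the function names are assumptions made for illustration, not details confirmed by the paper.

```python
def truncate(tokens, mode, n):
    """Keep n tokens from the head (F), middle (I), or tail (L) of a token list."""
    if n <= 0:
        return []
    if len(tokens) <= n:
        return list(tokens)
    if mode == "F":
        return tokens[:n]
    if mode == "L":
        return tokens[-n:]
    if mode == "I":
        start = (len(tokens) - n) // 2
        return tokens[start:start + n]
    raise ValueError(f"unknown truncation mode: {mode!r}")


def build_input(title_tokens, content_tokens, combo, n):
    """Assemble one input sequence from a combination such as ("T", "F", "L")."""
    parts = []
    for mode in combo:
        if mode == "T":
            parts.extend(title_tokens)  # T: use the title text
        else:
            parts.extend(truncate(content_tokens, mode, n))
    return parts


# Toy example with integer "tokens" standing in for words.
content = list(range(10))
example = build_input(["title"], content, ("T", "F", "L"), n=2)
```

Under these assumptions, comparing rows of Table 5 amounts to calling `build_input` with different `combo` tuples while holding the rest of the pipeline fixed.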

5.3. Study on R-AT (RQ3)

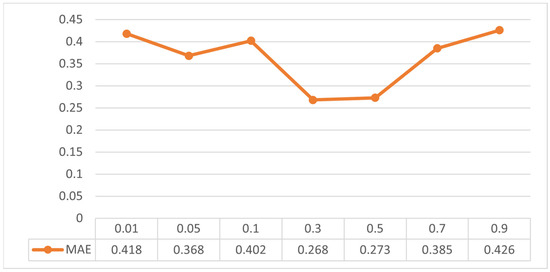

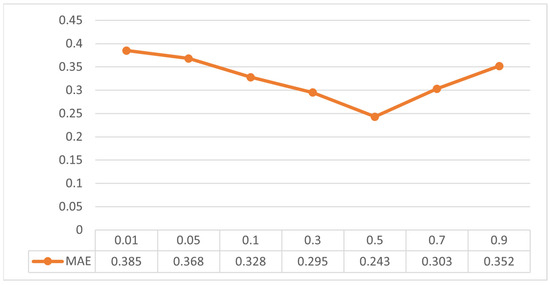

For a deep learning model, preventing overfitting and improving generalization are essential. Adversarial training is a commonly used method for improving generalization. In this work, we apply the R-AT strategy and use the hyperparameter k to adjust the weights of the different loss functions during the second forward propagation. To answer RQ3 and demonstrate the effect of the R-AT strategy on the performance of MiBeMC, we conducted experiments with different values of k. The results are shown in Figure 4 and Figure 5, where k represents the ratio between the loss and the KL loss during the second forward propagation in regularized adversarial training.

Figure 4.

Performance of MiBeMC-RoBERTa with different k values.

Figure 5.

Performance of MiBeMC-DeBERTa with different k values.

The experimental results show that the model remains stable across different k values. With k = 0.3, MiBeMC-RoBERTa achieves its best performance, with an MAE value of 0.268. With k = 0.5, MiBeMC-DeBERTa achieves its best performance, with an MAE value of 0.243. However, the k value should not be too large (such as above 0.7), as a higher k value implies that randomness is too high, which makes model optimization more difficult. These results also indicate that the implicit gradient regularization of the R-AT strategy steers the model toward a flatter minimum, which improves its generalization ability.
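To make the role of k more concrete, the sketch below shows one way a task loss and a KL consistency term between two forward passes could be combined. The exact formula is not given in this section, so the form `task_loss + k * symmetric_kl` is an assumption, as are the function names and the sample probability vectors; the sketch also omits the adversarial perturbation step itself.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as probability lists."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def symmetric_kl(p, q):
    """Average of the two directed KL terms between two predictions."""
    return 0.5 * (kl_divergence(p, q) + kl_divergence(q, p))


def combined_loss(task_loss, p, q, k):
    """Assumed form: task loss plus k times the KL consistency term."""
    return task_loss + k * symmetric_kl(p, q)


# Hypothetical class probabilities from two forward passes over the same input.
first_pass = [0.7, 0.2, 0.1]
second_pass = [0.5, 0.3, 0.2]
loss_k03 = combined_loss(1.0, first_pass, second_pass, k=0.3)
loss_k05 = combined_loss(1.0, first_pass, second_pass, k=0.5)
```

Under this assumed form, a larger k puts more weight on agreement between the two stochastic passes relative to the task loss, which is one way to read the observation that very large k values make optimization harder.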

5.4. Ablation Study (RQ4)

An ablation study carefully evaluates a framework in the presence and absence of a certain component. The analysis is performed by deleting components of the framework, individually or in groups. Identifying bottlenecks and unnecessary components in this way helps to optimize the model design. The purpose of the ablation study is to demonstrate the importance and efficacy of the contribution made by each module.

To measure the necessity of the title and the content, and the effectiveness of the similarity module, the interaction module, and the aggregation module, we compared five ablation variants of MiBeMC. The detailed results are shown in Table 6. MiBeMC/T, MiBeMC/C, MiBeMC/Ss, and MiBeMC/Hs are variants without the title, the content, the retrieval information, and the historical information, respectively. MiBeMC/G is a variant of MiBeMC without the aggregation module, which instead directly concatenates the intra-module features to evaluate media credibility. Clearly, the absence of any module leads to suboptimal performance. Among the four components (title, content, retrieval information, and historical information), content and historical information contribute the most to the evaluation of media credibility, while retrieval information contributes the least. In summary, every component that is related to the evaluation process is beneficial to the model, and mining the hidden information within it is meaningful.

Table 6.

Performance of variant models on the CLEF-2023 Task 4 dataset.

In addition, the ablation of the aggregation module reduced the performance of MiBeMC, indicating that following the order of the human verification process is beneficial for evaluating media credibility. Therefore, we further explored the effect of the aggregation order, that is, the order of the verification process. As shown in Table 7, the concatenation order that follows humans’ reading, understanding, and factual verification behaviors is superior to the other orders, which supports the rationality and the effectiveness of MiBeMC.

Table 7.

Performance of different aggregation orders on the CLEF-2023 Task 4 dataset.

6. Conclusions

This work is inspired by human users’ factual verification behavior in media credibility evaluation, and it proposes a novel media credibility evaluation method called MiBeMC. Specifically, we refined media credibility assessment into a multi-factor module fusion problem, aiming to extract features within and between modules. Firstly, we designed an intra-module feature extractor to simulate the semantic understanding process for each news element. Then, we constructed an association similarity module to simulate two behaviors: readers recalling the credibility of news they have previously seen from the same media outlet, and readers verifying the title and content by searching for related topics. These behaviors are then integrated into a sequence through the aggregation module in order to mimic the entire human verification process. Finally, a media credibility detector was constructed to predict the credibility of the news outlet. In addition, regularized adversarial training can alleviate redundant information in the dataset, effectively avoiding overfitting that is caused by learning information unrelated to the credibility labels, and thereby enhancing the model’s generalization ability. The experiments demonstrate the effectiveness of MiBeMC in the media credibility evaluation task.

However, our proposed model has some limitations. Firstly, in the data truncation experiments, we observed that data truncation affects model performance, but we have not yet conducted in-depth research on how to accurately control the truncation range in order to maximize its effectiveness. Secondly, the model currently does not support languages other than English, and due to the lack of relevant datasets, we are unable to further explore the role of multimodal data in the media credibility evaluation task. In future work, we will conduct an in-depth analysis of the role of precise data truncation in media credibility assessment tasks. To fill the multimodal data gap in this task, we will also attempt to construct a multimodal media credibility evaluation dataset and further explore the role of media credibility evaluation in the online media environment, in the hope of providing some technical support for the sustainable development of the news outlet ecosystem.

Author Contributions

Conceptualization, W.F. and Y.W.; methodology, W.F.; software, W.F.; validation, W.F.; formal analysis, W.F.; investigation, W.F.; resources, W.F.; data curation, W.F. and H.H.; writing—original draft preparation, W.F.; writing—review and editing, W.F. and Y.W.; visualization, W.F. and H.H.; supervision, Y.W.; project administration, Y.W.; funding acquisition, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China, grant number 2021YFF0901602.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created in this work. The dataset used in this work can be accessed at https://gitlab.com/checkthat_lab/clef2023-checkthat-lab/-/tree/main/task4 (accessed on 2 June 2023).

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).