1. Introduction

As a part of computational linguistics, coreference resolution is still a research challenge as it is not enough to only find the first occurrence of an entity in the overall analysis of a text; the correct identification and assignment of all verbal references to these entities are also necessary. In this work, we decided to combine the issue of coreference resolution with the area of identifying fake news. We focused on entity determination methods and compared whether substituting an entity for the original reference can improve and specify the text representation for classifiers.

Natural language processing requires pre-processing the text into a form that is usable for the analysis and prediction of specific tasks. Coreference resolution (CR) involves searching and replacing the name of the given entity in the text. Every written text contains mentions or references to many entities from the real world, which may not always be labeled with the same words. Very often pronouns or synonyms of nominal phrases are used to avoid the repetition of words in the text. The mentioned method of referencing brings one problem: for each reference, its target entity must also be determined. CR deals with this problem. A coreference occurs when the same referent is referred to in the text under different terms.

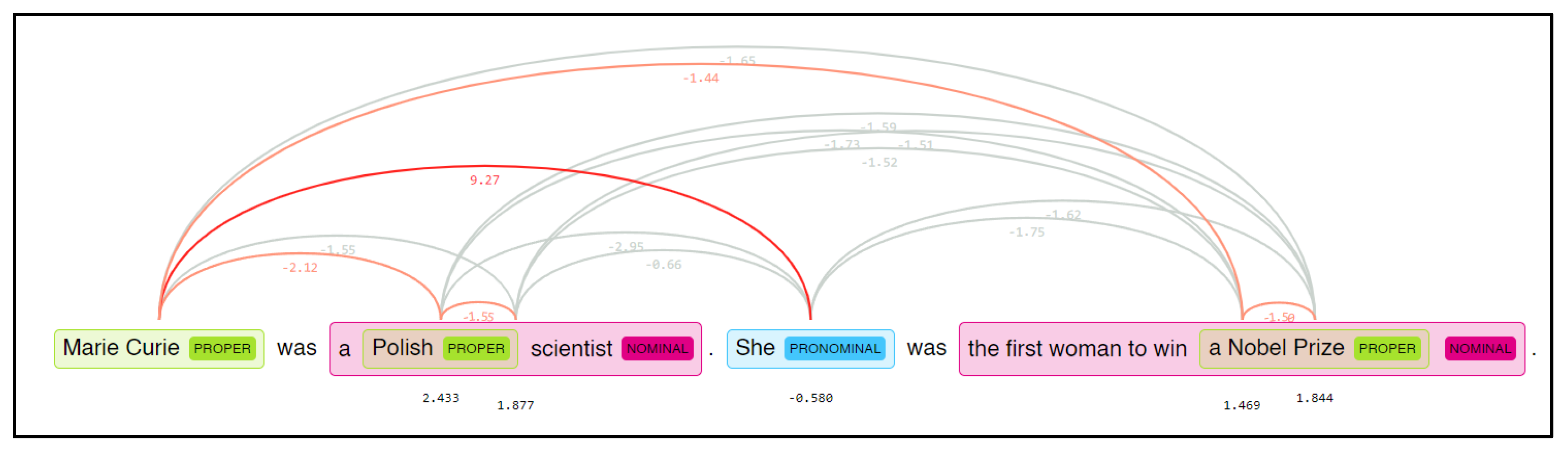

As an example, we can mention the person “Marie Curie”, who can be mentioned later in a text as “Mrs. Curie”, by the pronoun “she”, or even by her initials “MC”. Mentions can occur in the form of a noun phrase, a pronoun, a proper name, etc. Each of these phrases refers to the same person, and the main task of coreferencing is to identify him or her and to find out the relationship between a given group of expressions. Another problem is determining the gender of the referent because it makes it much easier to determine the relationships between individual entities. An illustration of CR is shown in Figure 1, where the mentions that will be replaced by specific entities are marked in blue.

In our article, we focus on coreference resolution, which searches and identifies coreferential relationships between entities and their referring terms in a text. Using coreference resolution, we can add additional information to the text, e.g., replace pronouns with a weak declarative value by the objects they represent. This approach can be used to refine the representation of the text.

The aim of this article is to find out whether pre-processing a text using coreference resolution can improve performance measures in classification tasks. One of the classification tasks is the classification of fake news, which was chosen from our previous work as we have experience with this type of task and, at the same time, we have a validated existing dataset. It is obvious that coreference resolution can be applied in solving other text data classification tasks as well.

The basic goal of our work is to determine the significance of CR identification for identifying fake news messages. We believe that a text after CR identification will result in better outcomes. Our aim is not only to determine if the results will improve but also to quantify the improvement. Additionally, we aim to assess the impact of CR on the results based on two different embedding methods for creating feature vectors.

We used CR as a preliminary preparation method for texts that will be used for the vectorization of documents. Vectors created from documents are often used as input vectors to create training and test sets for classifiers in natural language processing classification tasks.

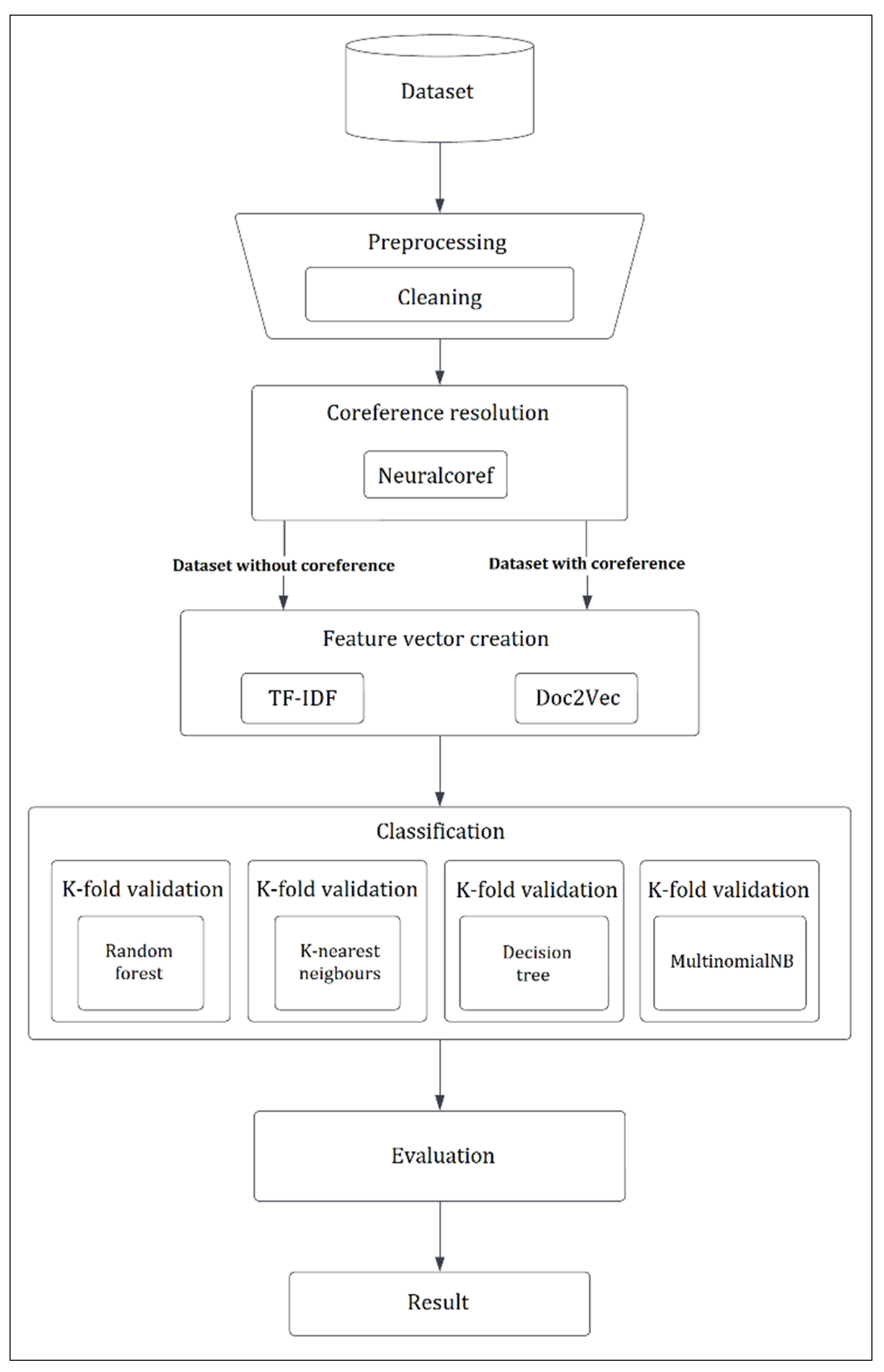

The following methodology (Figure 2) was used to evaluate the contribution of CR to improving classification tasks:

1. Data preparation;

2. The identification of the coreference resolution in the data file;

3. The creation of word vectors and document vectors using the Doc2Vec method and the traditional TF–IDF method. The following four datasets containing word vectors were created:

- (a) Doc2Vec_nocoref: a dataset in its original state without any identified coreference resolution, processed by the Doc2Vec method;

- (b) Doc2Vec_coref: a modified dataset with an identified coreference resolution, processed by the Doc2Vec method;

- (c) TfIdf_nocoref: a dataset in its original state without any identified coreference resolution, processed by the TF–IDF method;

- (d) TfIdf_coref: a modified dataset with an identified coreference resolution, processed by the TF–IDF method.

4. The creation of the text classification models (fake news classification) using pre-processed word vectors as the inputs:

- (a) Decision Tree;

- (b) Random Forest;

- (c) K-Nearest Neighbors;

- (d) MultinomialNB.

5. The evaluation and comparison of the performance measures of the created fake news classification models (accuracy, precision, recall, and F1 score).

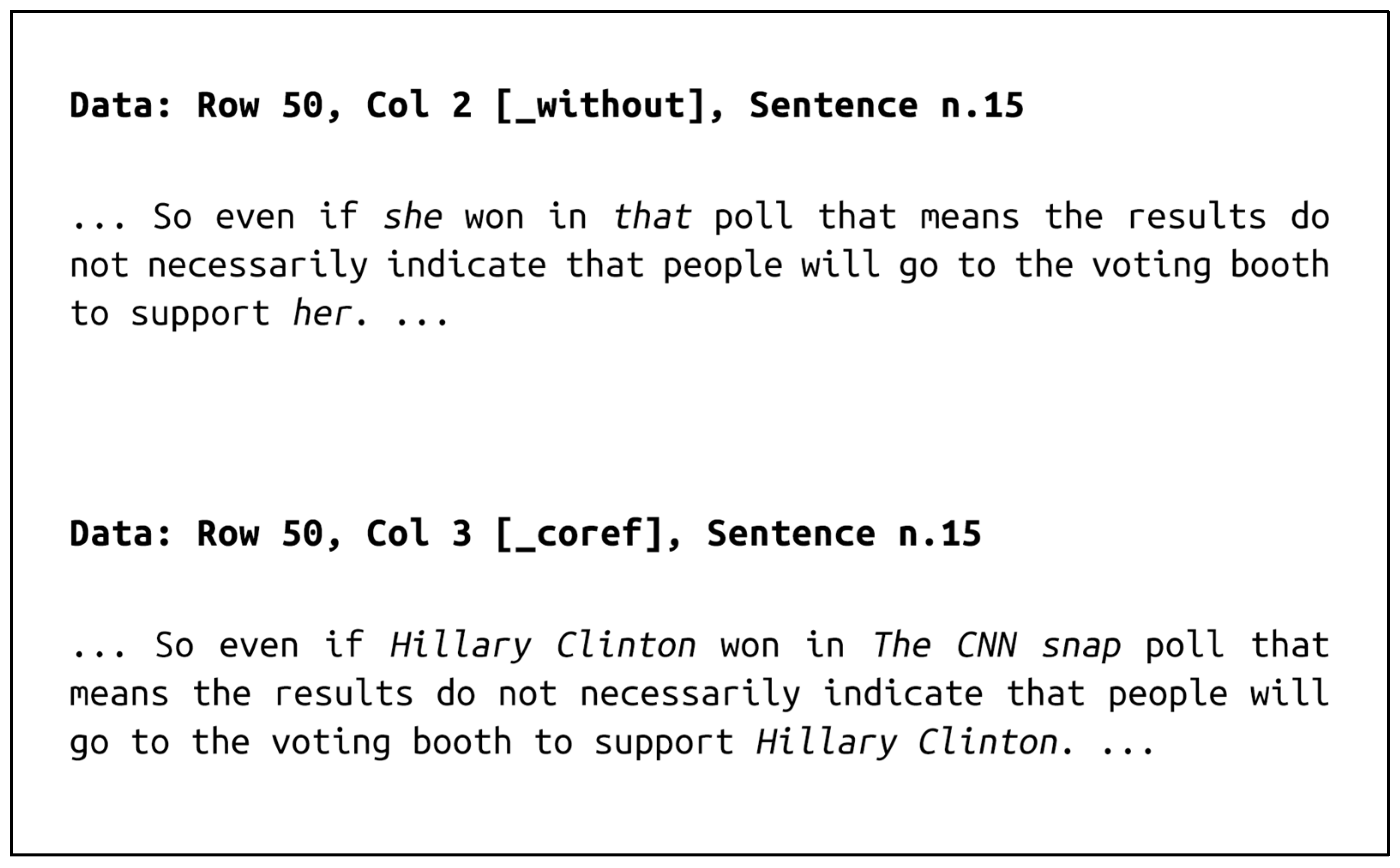

Steps 2 and 3 are the important parts of the mentioned methodology. In step 2, a new text data file named “_coref” is created, where mentions from the original text data file “_without” are identified and replaced (Figure 3). Then, word vectors are created from both the “_without” and “_coref” data files using both the TF–IDF and Doc2Vec methods.
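The replacement performed in step 2 can be sketched in a few lines. This is only a minimal illustration, not the neuralcoref implementation: the cluster format (a representative entity plus the character spans of its mentions), the helper names `span` and `resolve_mentions`, and the example sentence are all assumptions introduced here.

```python
from typing import List, Tuple

# Hypothetical cluster format (an assumption for this sketch):
# (representative entity, [(start, end) character spans of its mentions]).
Cluster = Tuple[str, List[Tuple[int, int]]]


def span(text: str, mention: str) -> Tuple[int, int]:
    """Return the (start, end) span of the first occurrence of a mention."""
    start = text.index(mention)
    return start, start + len(mention)


def resolve_mentions(text: str, clusters: List[Cluster]) -> str:
    """Replace every mention span with the representative entity of its cluster."""
    replacements = [
        (start, end, entity)
        for entity, spans in clusters
        for start, end in spans
    ]
    # Work from right to left so that earlier character offsets stay valid.
    for start, end, entity in sorted(replacements, reverse=True):
        text = text[:start] + entity + text[end:]
    return text


without = "Marie Curie was a physicist. She won a Nobel Prize. Later, Mrs. Curie won another."
clusters = [("Marie Curie", [span(without, "She"), span(without, "Mrs. Curie")])]
coref = resolve_mentions(without, clusters)
print(coref)
```

In this toy case, the “_coref” text repeats the entity name where the “_without” text used a pronoun or a variant of the name, which is what changes the term counts seen by the vectorization step.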

The first phase of the methodology—data preparation—is a traditional stage of processing all NLP tasks. However, some of its parts (e.g., tokenization) are applied when creating word vectors only if the vectors are generated by available libraries.

The actual evaluation of CR involves assessing how well we can classify fake news using models created from pre-prepared datasets (with and without any coreference). In other words, the output evaluation will consist of evaluating the created models for classifying fake news, where news articles from the test set will be classified into two categories: fake and real news.

The paper is organized as follows.

Section 2 provides a summary of the current state in the field of fake news identification studies.

Section 3 describes the fake news dataset used in this research along with relevant pre-processing methods and the model creation.

Section 4 provides a summary of the most important results.

Section 6 of the article contains the discussion and conclusions.

2. Related Work

Coreference resolution is a method that usually supports other NLP techniques such as text summarization [1], question answering [2], or information extraction [3,4]. It can be used in solving several classification tasks such as identifying and classifying fake news or detecting phishing messages [5]. Nadeem et al. [6] presented the FAKTA framework, which integrates various components of a fact checking process, i.e., document retrieval from media sources with various types of reliability, stance detection of documents with respect to given claims, evidence extraction, and linguistic analysis.

Bengtson et al. [7] described a rather simple pair-wise classification model for coreference resolution, which was developed with a well-designed set of features. Their work produced a state-of-the-art system that outperformed systems built with complex models.

Ming [8] investigated the improvement of Chinese CR. The proposed model acquires word and character representations through pre-trained Skip-gram embeddings and pre-trained BERT. Then, it explicitly leverages span-level information by applying bidirectional LSTMs to these representations. The proposed model achieved a 62.95% F1 score, outperforming the baseline methods. The only limitation of the article is the absence of an error analysis.

The BERT model (Bidirectional Encoder Representations from Transformers), which is used by Google’s search engines, was developed by Devlin et al. [9]. Unlike recent language representation models, BERT was designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both the left and right context in all layers. As a result, the pre-trained BERT model can be fine-tuned with just one additional output layer to create state-of-the-art models for a wide range of tasks, such as question answering and language inference [1,2,3], without substantial task-specific architecture modifications. The reported results were strong: the GLUE score was 80.5%, and the MultiNLI accuracy was 86.7%. On the Stanford Question Answering Dataset (SQuAD), the model achieved an F1 score of 93.2% on v1.1 and a Test F1 score of 83.1% on v2.0.

Denis et al. [10] investigated two strategies for improving coreference resolution. The first one involved training separate models that specialized in particular types of mentions (e.g., pronouns versus proper nouns), and the second one used a ranking loss function rather than a classification function. However accurate their models were at picking a correct antecedent for a true anaphor, the best they could achieve in terms of F-scores was 88.1% with MUC, 85.2% with B3, and 79.7% with CEAF.

Ferilli et al. [11] focused on anaphora resolution in English texts. Their approach improves on the traditional algorithm that is considered the standard baseline for comparison in the literature (the Hobbs algorithm). Whilst the most significant contribution is provided by a gender agreement feature, the modification to the general rules alone already yields an improvement, for which reason they propose their algorithm as the new baseline in the literature.

Karthikeyan et al. [12] identified all types of anaphora with a layered, step-by-step approach so that anyone can utilize the anaphora paradigm in their application. Their results presented an improved framework that applied all the necessary rules for distinguishing pronouns.

Veena et al. [13] introduced a concept-based graph model that follows a triplet representation with coreference resolution and extracts the concepts at both the sentence and document level. The extracted concepts are clustered using a modified DBSCAN algorithm that then forms a belief network. Their model had an accuracy of 91%.

Veena et al. [14] proposed a machine learning approach using support vector machines (SVM) towards coreference resolution at the document level. In their research work, 17 well-defined syntactic and semantic features including the 13 baseline features with semantic role labeling (SRL) were used. The use of an SVM classifier led to a better outcome when compared to other machine learning models.

Novák [15] introduced the Treex CR system, which implemented a sequence of evaluation models for individual types of coreference expressions (personal, reflexive, indefinite, interrogative, and possessive pronouns). It used a rich set of features extracted from linguistically pre-processed data. The system seemed to outperform the baseline system in Czech. In English, although it could not outperform the best approaches in the Stanford system, its performance was high enough to be used in future experiments.

Mohan et al. [16] proposed a solution to the problem of ambiguous pronouns in the English language. In their work, they used a dataset called GAP (Webster et al., 2018), which contains 8908 labeled pairs of antecedent naming of a person and an ambiguous pronoun from the Wikipedia database. They trained the model using the BERT technique to obtain contextual embeddings from the text, which they applied to the SVM classifier. Their proposed model demonstrated promising performance; it had an accuracy of 78.35%, a precision of 75.82%, a recall of 69.30%, an F1 score of 71.50%, and a low loss of 0.53.

In this article, we focus on improving the classification of textual data using coreference resolution. This approach is very similar to data augmentation methods. Wei et al. [17] utilized four techniques for expanding the text corpus (synonym replacement, random insertion, random swapping, and random deletion), which are collectively known as easy data augmentation (EDA). Through experiments on five classification tasks, they found an improved performance for convolutional and recurrent neural networks. Models trained using EDA achieved an average accuracy of 88.6% while only using 50% of the available training data, surpassing the accuracy obtained without augmentation. A limitation of this paper is that the performance gains achieved with the proposed data augmentation method (EDA) may be marginal when the dataset is already sufficient. While EDA shows promise for small datasets, it might not yield significant improvements when using pre-trained models like ULMFiT, ELMo, or BERT. Additionally, comparing EDA with other data augmentation methods used in NLP becomes challenging due to variations in the models and datasets used in different studies.

Haralabopoulos et al. [18] used a text data augmentation technique, sentence permutation, to create synthetic data based on an existing labeled dataset. Their permutation augmentation improved the baseline classification accuracy by 4% on average and outperformed all the other augmentations proposed in their work by an average of 0.2%. As a conclusion from the mentioned research works, the solution to the CR issue entails the design of new methods and a search for the most efficient ways of identifying references in a text.

All the research works are summarized in Table 1.

3. Methodology

To verify the suitability of pre-processing text data with coreference resolution for classification tasks, we proceeded according to the methodology outlined in the Introduction and applied it to the selected dataset. In this section, we describe the dataset and the algorithm for coreference resolution in more detail. It was necessary to create several versions of the classifier and verify their success using performance measures. We also describe the methods used to create the word vectors that represent the inputs to the classifiers.

3.1. Dataset

We used the freely available dataset KaiDMML, which was originally created for the needs of the FakeNewsTracker system [

19,

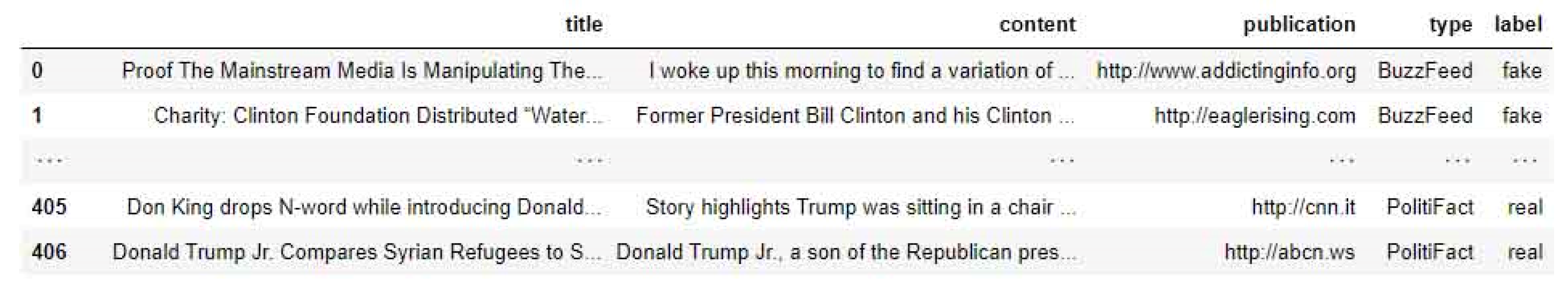

20]. The dataset contains records collected from the PolitiFact and GossipCop projects. On

Figure 4, a sample of the used dataset is presented.

Both projects tried to verify the facts to determine their truth and correctness, mainly from news portals or social networks. The first project focused on the field of politics, and the second one focused on the field of verifying information about famous personalities. The dataset was manually annotated, where each message was marked as real or fake.

Table 2 provides an overview of the basic analysis of the number of words in the dataset.

3.2. Coreference Resolution Algorithm—Neuralcoref

Before we introduce the characteristics of the neuralcoref algorithm, we should note that there are three different CR approaches [19]:

Deterministic: coreference identification based on natural language rules [20,22];

Statistical: coreference identification based on machine learning [19];

Neural: coreference identification based on a neural network [20,22].

We decided to use the open-source library neuralcoref in our work, which is an implementation of the mention-ranking coreference model from [23] and provides very good results in terms of coreference resolution. The current version of the neuralcoref library (as of November 2022) is 4.0. It was necessary to have spaCy version 2.1.0 installed.

The output of the model is a score s(c,m) for each pair, where c is a candidate antecedent and m is a mention. The score, which is produced by a feedforward neural network, represents how compatible the candidate and the mention are for coreference [24].

At the same time, the model extracts different words, e.g., the base form of a mention together with a group of words. Each word is represented by a vector, and each group of words is represented by the average of the vectors of all the words in the group. The model also considers distance and string matching. Heuristic loss functions were also used when training the model [25].
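The averaging of word vectors over a group of words can be sketched as follows. This is a minimal illustration only: the function name `average_vector` and the two-dimensional toy embeddings are assumptions invented for the example and are not taken from neuralcoref.

```python
from typing import Dict, List


def average_vector(words: List[str], embeddings: Dict[str, List[float]]) -> List[float]:
    """Represent a group of words by the average of the vectors of its words."""
    vectors = [embeddings[word] for word in words if word in embeddings]
    if not vectors:
        raise ValueError("none of the words has a known vector")
    dimension = len(vectors[0])
    return [sum(vector[i] for vector in vectors) / len(vectors) for i in range(dimension)]


# Toy 2-dimensional embeddings, invented for illustration only.
toy_embeddings = {"mrs.": [1.0, 0.0], "curie": [0.0, 1.0]}
group_vector = average_vector(["mrs.", "curie"], toy_embeddings)
print(group_vector)  # [0.5, 0.5]
```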

In the context of gender association, the trained word embeddings refer to vectors that capture the relationship between words and their gender connotations. These vectors are generated using algorithms that analyze large datasets, such as the OntoNotes corpus. By utilizing the OntoNotes corpus, the algorithm is able to train the word embeddings to accurately determine the gender of given entities. This includes the ability to assign a coreference even for unknown entities (such as nicknames, names of celebrities, etc.). Overall, the trained word embeddings enable the algorithm to effectively analyze and determine gender associations, providing valuable insights into the feminine and masculine gender connotations of the given entities [26].

3.3. The Techniques Used to Create the Input Vectors

We worked with two versions of the dataset: one with and one without coreference resolution. These two versions were used separately for the next steps of creating the word vector models. We considered using the TF–IDF and Doc2Vec techniques to create the input vector for classification. The vectors were subsequently used as an input for the following classification algorithms: Decision Tree, Random Forest, MultinomialNB, K-Nearest Neighbors, and Logistic Regression. The individual steps of our methodology are depicted in Figure 2.

3.3.1. Term Frequency–Inverse Document Frequency

Nowadays, the classification of text documents is used in various areas of computer science. Algorithms dealing with this task usually need to represent the input text as a vector with a fixed size [27]. The simplest method for creating word vectors from documents is TF (term frequency) [28], which is calculated according to (1):

$$\mathrm{tf}_{i,j} = \frac{n_{i,j}}{\sum_{k} n_{k,j}} \qquad (1)$$

where:

$n_{i,j}$ is the number of occurrences of term $i$ in document $j$;

$\sum_{k} n_{k,j}$ is the number of all terms in document $j$.

However, the above-mentioned method is not reliable, as the words with the highest weights will usually be words with frequent occurrences, e.g., pronouns, conjunctions, prepositions, particles, or articles, unless they have been removed as stop words. At the same time, a document can also contain words that describe the essence of the text much better, and those words may not be highlighted by the TF method.

Therefore, the IDF (inverse document frequency) method (2) is used, where $\mathrm{idf}_{i}$ is determined by a logarithmic calculation. The method involves dividing the number of all documents $|D|$ by the number of documents in which the word $t_{i}$ occurs. Priority is given to words that are specific to a small subset of documents.

$$\mathrm{idf}_{i} = \log \frac{|D|}{|\{d \in D : t_{i} \in d\}|} \qquad (2)$$

If the word does not exist in the corpus, the denominator is adjusted to the form $1 + |\{d \in D : t_{i} \in d\}|$, so that division by zero does not occur. Subsequently, the TF–IDF method, which is the product of the TF statistic and the IDF statistic, has the following form (3):

$$\mathrm{tfidf}_{i,j} = \mathrm{tf}_{i,j} \times \mathrm{idf}_{i} \qquad (3)$$

There are many formulas for calculating the TF–IDF, as there are also other parameters that can affect the vector itself [29]. More advanced keyword extraction methods include the KEA (keyphrase extraction algorithm) [30] or TextRank [31], in which the similarities of individual word subsequences are heuristically evaluated. Another popular algorithm is RAKE (rapid automatic keyword extraction) [32], the main advantage of which is that it can work with an article regardless of the corpus.
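The TF, IDF, and TF–IDF statistics described in this subsection can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the Scikit-learn implementation (which uses a different smoothing and normalization): the natural logarithm, the function names, and the two toy documents are all choices made for this example.

```python
import math
from collections import Counter
from typing import Dict, List


def tf(document: List[str]) -> Dict[str, float]:
    """Term frequency: occurrences of each term divided by the number of terms."""
    counts = Counter(document)
    return {term: count / len(document) for term, count in counts.items()}


def idf(term: str, corpus: List[List[str]], adjust: bool = False) -> float:
    """Inverse document frequency: log(all documents / documents containing the term).

    With adjust=True, 1 is added to the denominator, so a term that is
    missing from the corpus does not cause a division by zero.
    """
    containing = sum(1 for document in corpus if term in document)
    denominator = 1 + containing if adjust else containing
    return math.log(len(corpus) / denominator)


def tf_idf(corpus: List[List[str]]) -> List[Dict[str, float]]:
    """TF-IDF weight of every term in every document (product of TF and IDF)."""
    return [
        {term: weight * idf(term, corpus) for term, weight in tf(document).items()}
        for document in corpus
    ]


# Two toy documents, invented for illustration only.
corpus = [["she", "won", "the", "prize"], ["curie", "won", "the", "prize"]]
weights = tf_idf(corpus)
print(weights[0])
```

Terms that occur in every document (here “won”, “the”, and “prize”) receive a weight of zero, while the pronoun “she” and the name “curie” each occur in a single document and therefore carry all the weight. Replacing the pronoun with the entity it refers to changes exactly these weights.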

3.3.2. Word2Vec

A weak point of the previous approaches is that they neglect the semantics of a text [33]. Word2Vec was introduced to overcome this. The Word2Vec model uses a two-layer neural network and was created in 2013 from the need to capture the semantic similarity of words [34]. A corpus (a set of texts) is needed for the training process itself (learning of the neural network).

The output of a trained Word2Vec model is a large set of word vectors, in which individual elements (words) are represented by their position in a multidimensional space. Logical and mathematical operations can be performed with these vectors, and, thus, they can simulate semantic or lexical relations between words. Formula (4) gives the most common example for trained Word2Vec vectors: the difference between the vectors of the words “father” and “man” is approximately equal to the difference between the vectors of the words “mother” and “woman”:

$$\vec{v}_{\text{father}} - \vec{v}_{\text{man}} \approx \vec{v}_{\text{mother}} - \vec{v}_{\text{woman}} \qquad (4)$$

The mentioned relations are language-independent.

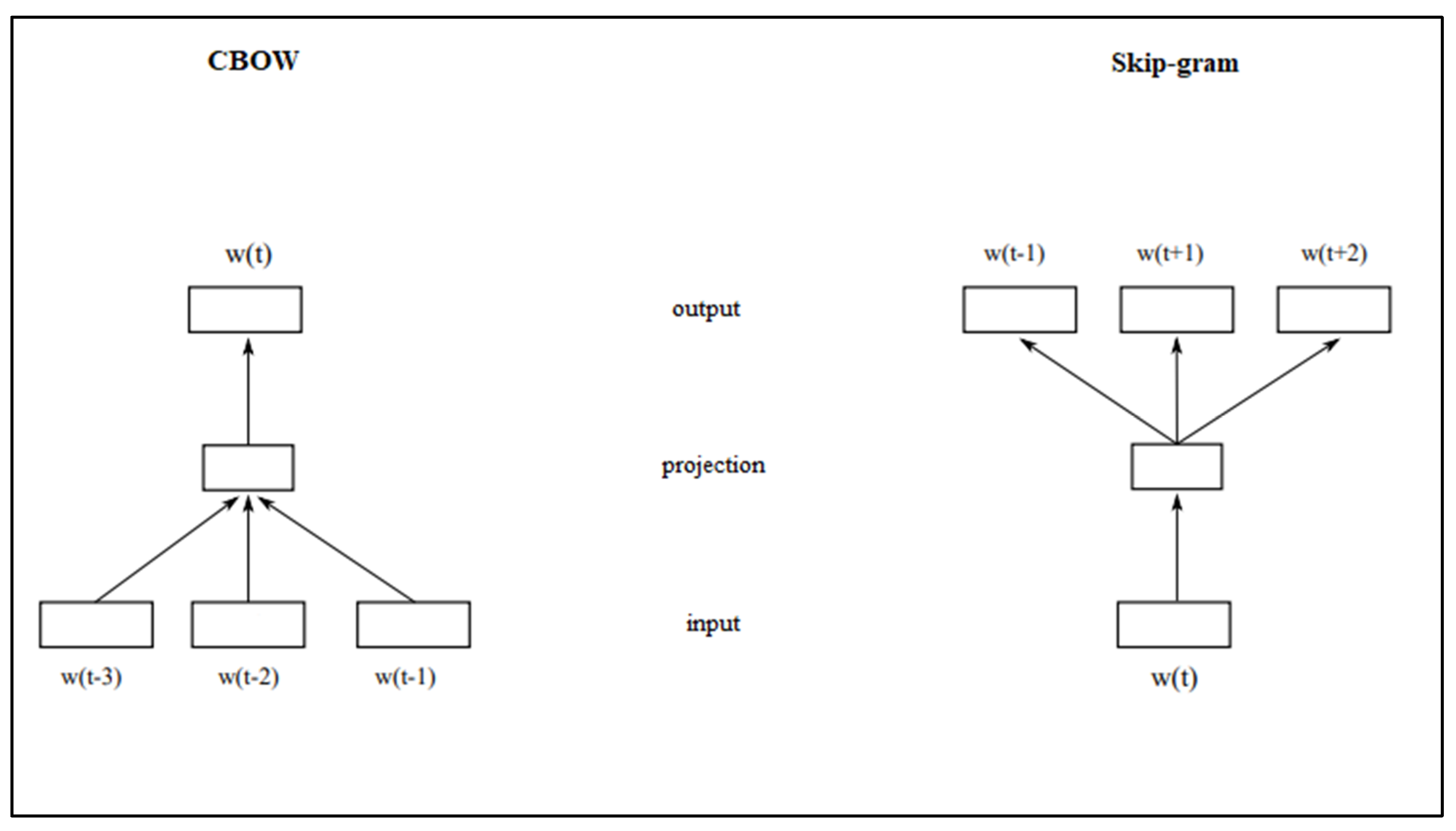

Word2vec can utilize one of two possible model architectures to produce these distributed representations of words:

CBOW (continuous bag of words)—the algorithm predicts the missing word depending on the other words in the document;

Skip-gram—opposite of CBOW. It receives one word for the input to predict the surrounding window of context words.
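The difference between the two architectures can be made concrete by looking at the training examples each one derives from a sentence. The following is a minimal sketch that only builds the (input, output) pairs and does not train a neural network; the function names, the window size of 1, and the toy sentence are assumptions made for this illustration.

```python
from typing import List, Tuple


def cbow_examples(tokens: List[str], window: int = 1) -> List[Tuple[List[str], str]]:
    """CBOW: the surrounding context words are the input, the centre word is the output."""
    examples = []
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]
        examples.append((context, target))
    return examples


def skipgram_examples(tokens: List[str], window: int = 1) -> List[Tuple[str, str]]:
    """Skip-gram: the centre word is the input, each context word is an output."""
    return [
        (target, context_word)
        for context, target in cbow_examples(tokens, window)
        for context_word in context
    ]


tokens = ["she", "won", "the", "prize"]
print(cbow_examples(tokens))
print(skipgram_examples(tokens))
```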

Both algorithms work as a neural network, which is trained by a standard procedure. The architecture is shown in Figure 5. Both algorithms are also implemented in the Deeplearning4j [35] and Gensim [36] tools.

3.3.3. Doc2Vec

The Doc2Vec model works by describing whole sentences using vectors, while corpus words are learned separately. This model is strongly based on the principle of the Word2Vec model. They differ in the case of Doc2Vec, the goal of which is to create a vector representation of the entire document instead of the words [

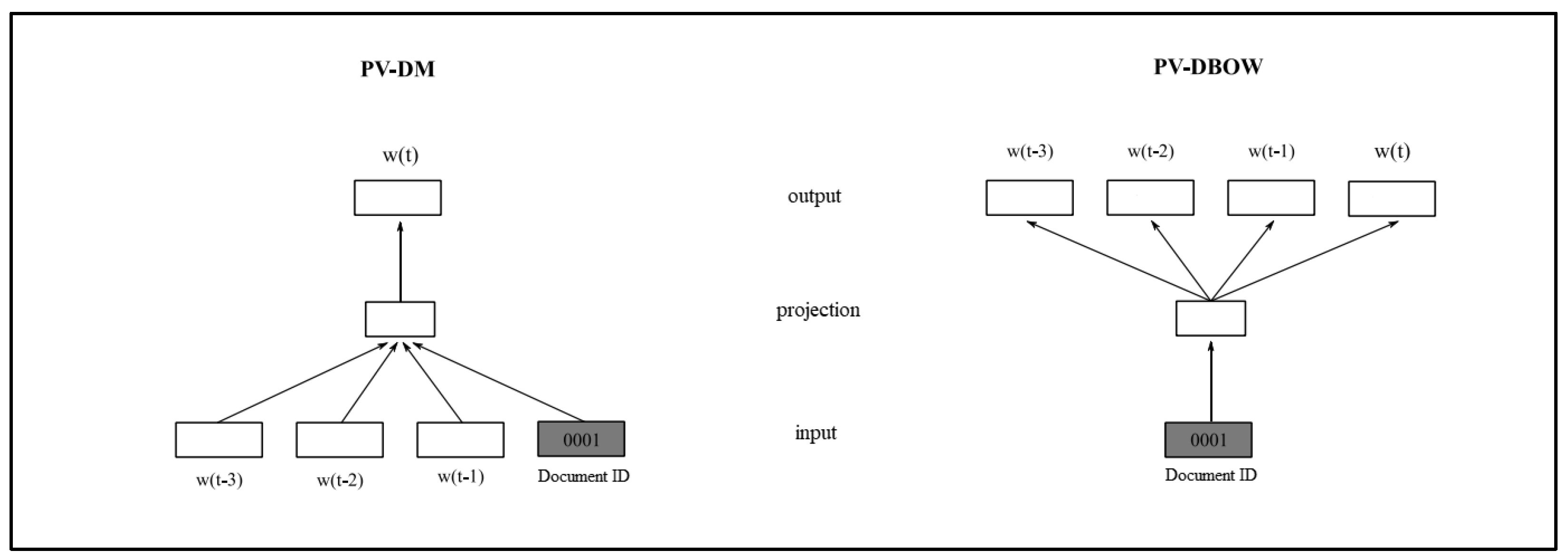

37].

The training of sentence vectors in the Doc2Vec method is based on the word vector methods from Word2Vec. The first of the methods is PV–DM (distributed memory model of paragraph vectors), and it works on the CBOW principle. A document ID is added to the model, which uniquely identifies said document. Each sentence is mapped to a vector of the same size as the individual word vectors. Vectors are also created for the document itself in the same way [38].

Another Doc2Vec method is PV–DBOW (distributed bag of words of paragraph vector), which ignores the context of words in the input. It is less memory-demanding than the PV–DM technique, and it is very similar to the Skip-gram algorithm from Word2Vec [39]. The architecture of both algorithms is shown in Figure 6.

We decided to use the last-mentioned model in our work, as we needed to determine the vectors of the entire document with and without the use of CR.

3.3.4. The Techniques Used to Create Classification Methods

We prepared multiple fake news classifiers to verify the effectiveness of the coreference resolution. These were created using the following classification algorithms:

Decision Tree algorithm;

Random Forest classifier [40];

K-Nearest Neighbors [41];

Multinomial Naive Bayes model [42];

Logistic Regression.

We used K-fold validation to evaluate the created models. The K-fold technique is a popular and easy-to-understand technique, which generally results in a less biased model. The reason for this is that it ensures that every observation from the original dataset has the chance of appearing in the training and test set.

Firstly, we shuffled our dataset so that the order of the inputs and outputs was completely random. We conducted this step to make sure that our inputs were not biased in any way. In the K-fold cross-validation step, the original sample was randomly partitioned into k equally sized subsamples. Of the k subsamples, a single subsample was retained as the validation data for testing the model, and the remaining k − 1 subsamples were used as the training data. The cross-validation process was then repeated k times, with each of the k subsamples used exactly once as the validation data.

The advantage of this method is that all observations were used for both training and validation, and each observation was used for validation exactly once. When evaluating all the models we created, we set k = 10, i.e., the input dataset was randomly divided into 10 parts.
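The partitioning described above can be sketched without any library. This is a minimal illustration and not the Scikit-learn implementation used in the experiments; the function name `k_fold_indices` and the fixed random seed are assumptions, while the sample size of 405 and k = 10 follow the text.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(n_samples: int, k: int = 10, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (training, validation) index lists for shuffled k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)          # random order of the observations
    folds = [indices[i::k] for i in range(k)]     # k (almost) equally sized subsamples
    for i, validation in enumerate(folds):
        # The remaining k - 1 subsamples form the training data.
        training = [index for j, fold in enumerate(folds) if j != i for index in fold]
        yield training, validation


splits = list(k_fold_indices(n_samples=405, k=10))
for training, validation in splits:
    print(len(training), len(validation))
```

Because 405 is not divisible by 10, the folds in this sketch contain either 40 or 41 observations; every observation still appears in exactly one validation fold.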

We also focused on the following four widely used metrics, accuracy, precision, F1 score, and recall, to assess the performance of our models.

Accuracy is a fundamental performance measure used in evaluating machine learning models. It represents the ratio of correctly predicted instances to the total number of instances in the dataset. Mathematically, it is defined as per Formula (5):

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (5)$$

In the binary classification, we used four key metrics: true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). TP represents the number of correctly predicted positive instances, TN represents the number of correctly predicted negative instances, FP refers to the number of incorrectly predicted positive instances, and FN indicates the number of incorrectly predicted negative instances. These metrics helped us to assess the model’s performance and make informed decisions as to how to improve it.

Precision assesses the accuracy of positive predictions made by the model. It represents the proportion of true-positive predictions (correctly predicted positive instances) among all the positive predictions (Formula (6)):

$$\mathrm{Precision} = \frac{TP}{TP + FP} \qquad (6)$$

Precision is particularly useful when the cost of false positives is high, as it helps in minimizing the number of false alarms. However, a high precision score may be accompanied by a low recall score, leading to an increased number of false negatives.

Recall calculates the proportion of true-positive predictions among all the actual positive instances in the dataset (Formula (7)):

$$\mathrm{Recall} = \frac{TP}{TP + FN} \qquad (7)$$

The final measure is the F1 score, which is a metric that combines precision and recall to provide a balanced evaluation of a model’s performance. It is the harmonic mean of precision and recall and is mathematically represented in Formula (8):

$$F_{1} = 2 \times \frac{\mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \qquad (8)$$

The F1 score reaches its highest value at 1 (perfect precision and recall) and its lowest at 0. It is particularly useful when dealing with imbalanced datasets, where accuracy can be misleading. The F1 score considers both false positives and false negatives, making it a suitable measure when the overall model performance needs to be balanced.
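The four measures can be computed directly from the TP, TN, FP, and FN counts. The following is a minimal sketch rather than the Scikit-learn functions used in the experiments; the function name `binary_metrics`, the encoding of fake news as the positive class (1), and the toy labels are assumptions made for this illustration.

```python
from typing import Dict, List


def binary_metrics(y_true: List[int], y_pred: List[int], positive: int = 1) -> Dict[str, float]:
    """Compute accuracy, precision, recall, and F1 score for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    accuracy = (tp + tn) / (tp + tn + fp + fn)
    # Guard against empty denominators when a class is never predicted or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Toy labels, invented for illustration only (1 = fake, 0 = real).
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

In this toy case, TP = 2, TN = 4, FP = 1, and FN = 1, so accuracy is 0.75 while precision, recall, and the F1 score are all 2/3.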

4. Results

We applied the existing coreference resolution method to the selected dataset using the neuralcoref library. In the pre-processing stage of our work, we cleaned the data and removed null values in the form of empty rows. We did not remove stop words or apply lemmatization, since this could have significantly disrupted the identification of pronouns, as the library needs to work with the original text.

We used the Scikit-learn library to create word vectors using TF–IDF. The Gensim library was used for the Doc2Vec method. The investigated classification models were created using the methods of the Scikit-learn library. We used K-fold validation for all the models, and we set the number of splits to k = 10.

Five classification methods (Decision Tree, Random Forest, K-Nearest Neighbors, MultinomialNB, and Logistic Regression) and two word-embedding methods (TF–IDF, Doc2Vec) were selected. The used methods were examined using K-fold cross-validation, where k represented 10 measurements. In this way, 160 classification models were created (data without coreferencing and data with coreferencing (2) × word embedding methods (2) × classification methods (4) × k-fold validation (10)) for the investigated fake news dataset. The quality of the created models was evaluated using evaluation metrics (accuracy, precision, recall, f1 score, precision_fake, recall_fake, precision_real, and recall_real). The individual stages of our proposed method are described in Figure 2.
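The count of 160 models follows from enumerating the factors of the product quoted above. The sketch below only reproduces that arithmetic; the label strings are assumptions, and the four classifier names are the ones listed in the methodology in the Introduction, matching the factor of 4 used in the product.

```python
from itertools import product

# Factors of the experimental grid, as quoted in the text: 2 x 2 x 4 x 10.
text_versions = ["without", "coref"]
vectorizers = ["TfIdf", "Doc2Vec"]
classifiers = ["DecisionTree", "RandomForest", "KNearestNeighbors", "MultinomialNB"]
folds = range(10)

configurations = list(product(text_versions, vectorizers, classifiers, folds))
print(len(configurations))  # 160
```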

The descriptive statistics for the accuracy results are presented in Table 3. The Valid N value represents the four classification methods used for the 10-fold cross-validation. The mean and median showed that better results were observed for the TF–IDF method compared to the Doc2Vec method. On closer inspection, better outcomes (mean and median) were achieved for the models created from the input data after applying coreference resolution. The range of variation for both word-embedding methods (TfIdf_coref and Doc2Vec_coref) also decreased.

Similarly, better results were also observed in the case of coreference resolution for the other performance measures. The results for the F1 score, which is a combination of precision and recall, are presented in Table 4.

The results for accuracy and the F1 score (Table 3 and Table 4) showed that the differences between the models created from the datasets without and with the application of coreference resolution were not striking. Similarly inconclusive results were observed for the other evaluation measures (precision, recall, precision_fake, recall_fake, precision_real, and recall_real).

Therefore, we analyzed the results for the used classification algorithms individually in more detail. The results of the 10-fold cross-validation for each classification technique (Decision Tree, Random Forest, K-Nearest Neighbors, MultinomialNB, and Logistic Regression) depended on the methods of preparing the input vectors for the classification methods. The input vectors were prepared without coreference resolution (parameter _without) and with coreference resolution (parameter _coref). At the same time, two word-embedding methods (TF–IDF and Doc2Vec) were applied for the preparation of the vectors.

The results for accuracy are visualized in the following individual graphs (Figure 7a–d). We compared the combinations of the text preparation methods (_without and _coref) and word-embedding methods (TF–IDF and Doc2Vec).

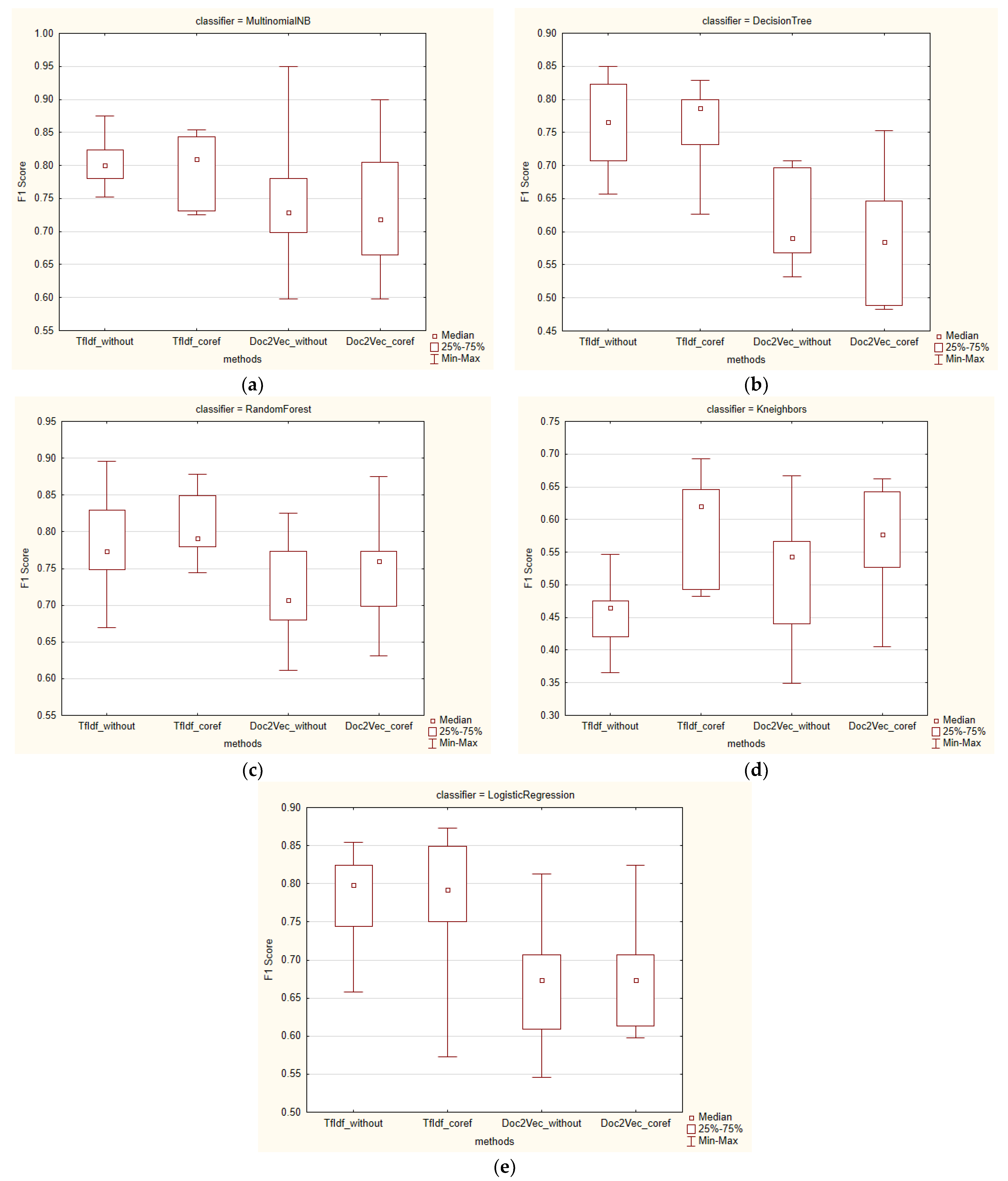

The graphs show that the median was generally higher for the classification methods with the application of coreference resolution. This was observed in all five classifiers, except for TF–IDF for Logistic Regression. Similarly, a higher value of Q3 was observed for most methods, except for Decision Tree and Doc2Vec for Logistic Regression, in favor of the methods with the application of coreference resolution. On the other hand, in the K-Nearest Neighbors and MultinomialNB classifiers, there was a greater degree of variability in the coreference resolution methods.

We also analyzed the results from the point of view of the F1 score (Figure 8a–d), which combines precision and recall. Even in this case, higher median values were observed for all F1 score metrics except for word embedding with Doc2Vec and the MultinomialNB classifier.

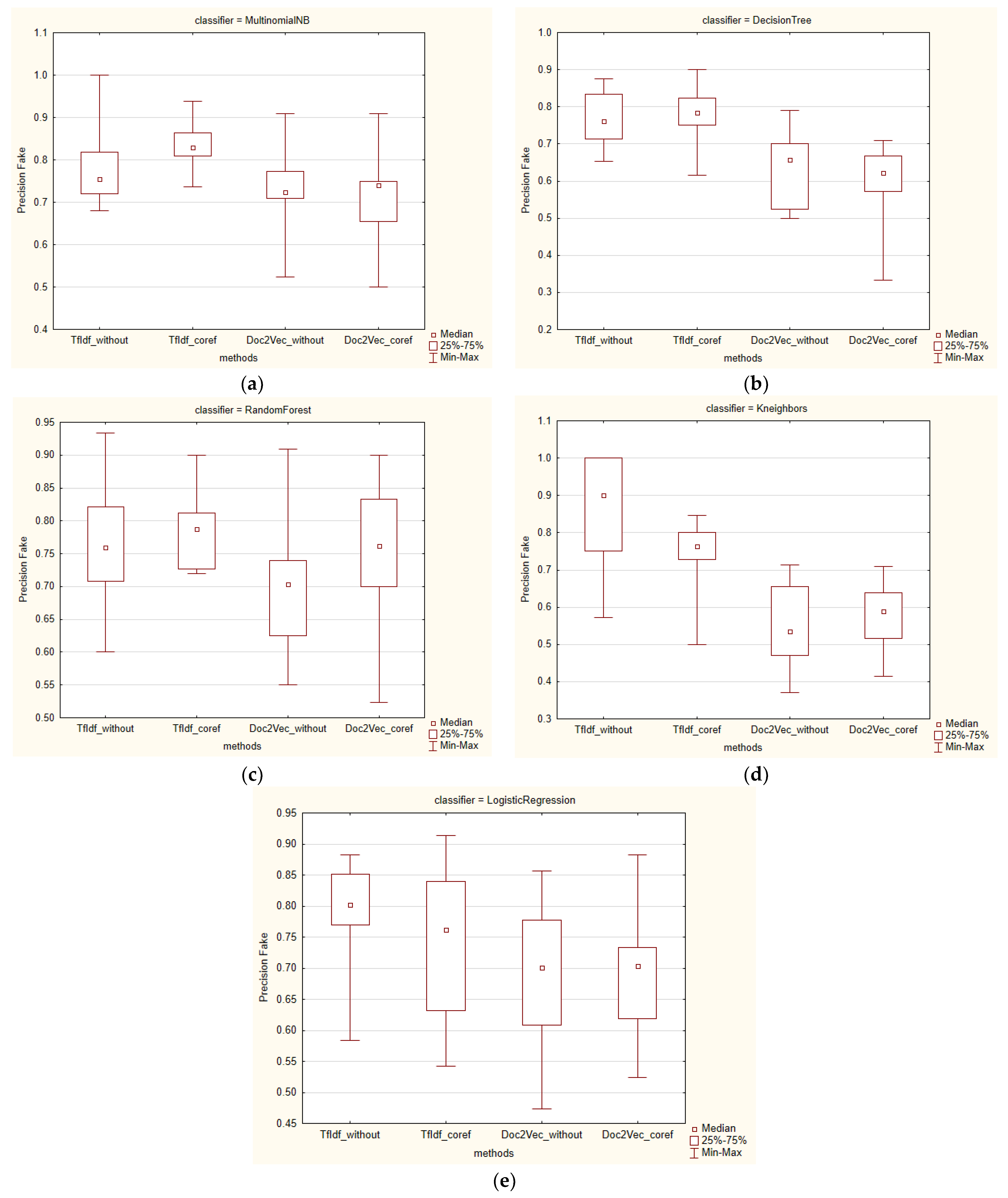

All the methods were applied with the aim of identifying fake news. For this reason, we present the results (Figure 9a–d) for the performance measure precision_fake.

The median of these results was also of interest. Except for TF–IDF with the K-Nearest Neighbors and Logistic Regression methods and Doc2Vec with the Decision Tree method, the median was higher for all the other methods after applying coreference resolution.

Also, the quartile range for the degree of variability was analyzed. An interesting finding was that, except for Doc2Vec for the Random Forest and MultinomialNB methods and TF–IDF for the Logistic Regression method, smaller quartile ranges were found for the methods with coreference resolution.

5. Discussion

We aimed at improving text classification using coreference resolution in the presented work. We used the freely available KaiDMML dataset, which contains 405 news articles, half of which were annotated as fake news and the other half as real news. The advantage of this dataset is its manual annotation. However, it is natural that the observed performance metrics of our models were lower using this dataset than in the case of the datasets that are not manually annotated. The second disadvantage of this dataset is its small size.

We applied CR analysis to the selected dataset and compared the results of the classification of fake news without applying the CR method. The TF–IDF and Doc2Vec methods were used to create the input vectors. We chose Decision Tree, Random Forest, K-Nearest Neighbors, MultinomialNB, and Logistic Regression from the classification methods.

Despite our efforts, no significant differences in the results were identified. Therefore, we only analyzed the results with descriptive statistics, and we did not verify the statistical significance of the differences between the investigated approaches.

This finding is important. The results were improved by applying the CR method. Unfortunately, its impact was not statistically significant. The only difference was observed for the K-Nearest Neighbors classifier. However, this classifier achieved very poor results compared to the other classifiers used. The difference in the results between the methods with CR and those without applying CR was therefore rather due to the poor results of the K-Nearest Neighbors classifier.

The finding that CR led to better classification results but not to statistically significant differences was surprising. In the case of the TF–IDF method in particular, we expected significantly better results for CR. This expectation followed from the TF–IDF method itself: after CR, the values of the vector elements for specific objects (e.g., nouns) are effectively increased, and the values for pronouns or abbreviations (mentions) are decreased. We assumed that by increasing the values of the elements expressing specific objects at the expense of mentions, we would achieve significantly better results.
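The expected effect on TF–IDF weights can be seen in a small worked example. The sketch below uses a simplified TF–IDF (relative term frequency multiplied by a smoothed inverse document frequency), which is an assumption and not necessarily the exact weighting used in our experiments. The function `tfidf` and all sentences are hypothetical.

```python
import math
from collections import Counter

def tfidf(docs):
    """Return one {term: weight} dict per document (tf * smoothed idf)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * (math.log(n_docs / df[term]) + 1.0)
            for term, count in counts.items()
        })
    return weights

# Hypothetical background documents and one article in both variants.
background = ["the election results were announced", "the report was published"]
original = "curie won the prize and she said she was proud"
resolved = "curie won the prize and curie said curie was proud"

w_original = tfidf(background + [original])[-1]
w_resolved = tfidf(background + [resolved])[-1]

print(w_original["curie"], w_original["she"])
print(w_resolved["curie"], w_resolved.get("she", 0.0))
```

After substitution, the weight of the entity term is three times higher in this example, and the pronoun no longer contributes to the vector at all.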

However, it was clear from the results of the descriptive statistics that applying CR contributed to better results, especially when examining the median value for accuracy, the F1 score, and the precision_fake metric. For this reason, we can conclude that the application of CR improved the classification tasks in general.

An interesting and surprising finding was that the TF–IDF method achieved better results than the Doc2Vec method. In our opinion, this is because Doc2Vec needs a robust corpus to create effective vectors, whereas our corpus contained only 405 records.

Although the results were not statistically significant, it can be concluded from the descriptive statistics that the application of CR can contribute to improving the classification of fake news.

The application of coreference resolution (CR) to a dataset can be classified as a data augmentation technique. There are two related research studies in this area. Haralabopoulos et al. [18] used a text data augmentation technique (sentence permutations) to create synthetic data based on an existing labeled dataset. Their method achieved a significant improvement in terms of classification accuracy, averaging around 4.1% across eight different datasets. Additionally, they proposed two other methods for text data expansion: antonym replacement and negation. These methods were tested on three suitable datasets and achieved accuracy improvements of 0.35% (antonyms) and 0.4% (negation) compared to the permutation method they proposed.

Wei et al. [17] used easy data augmentation (EDA), a set of four techniques for expanding a text corpus: synonym replacement, random insertion, swapping, and deletion. Through experiments on five classification tasks, they found an improved performance for convolutional and recurrent neural networks.

In our experiments, the application of CR increased the median accuracy by up to 0.0942 (K-NN/TF–IDF) and the median F1 score by up to 0.1558 (K-NN/TF–IDF). In comparison to the mentioned studies, our improvement may seem relatively small, but it is important to note that our article focused solely on one technique. In the mentioned experiments, the authors worked with a combination of 3–4 techniques, making the results appear comparable. Nevertheless, we believe that the more crucial finding is that the application of CR led to a classification improvement across practically all the classifiers. It is also worth noting that data augmentation in natural language processing usually aims to enlarge the corpus, whereas in our article, we applied coreference resolution directly to the classified data.

6. Conclusions

We focused our work on the design and verification of procedures for the field of natural language processing. Within this area, we chose the issue of coreference identification and a dataset containing fake news. We applied the coreference algorithm to the input data and thus created two sets of texts: one with the application of coreference resolution and one without it. Subsequently, we converted both sets of texts into vectors using the TF–IDF and Doc2Vec methods. Finally, we implemented various machine-learning-based classifiers in our research, from which we selected the Decision Tree, Random Forest, K-Nearest Neighbors, MultinomialNB, and Logistic Regression methods as the most suitable. The classifiers categorized news articles as either fake or real news.

According to the achieved results, the data processing with the application of coreference resolution resulted in an increase in the accuracy of the classification of fake news.

In the case of the accuracy performance measure, the best result was observed for the Random Forest classifier using the TfIdf_coref method (median = 0.8149). For the F1 score, the best result was observed for the MultinomialNB classifier using the TfIdf_coref method (median = 0.8101). When looking at the results in terms of increased accuracy, an improvement was observed in all methods except for the Decision Tree classifier with Doc2Vec, where a decrease in accuracy was observed when comparing the metrics _coref and _without. The most significant increase in accuracy was observed for the K-Nearest Neighbors classifier with the TF–IDF embedding method (0.0942 increase for _coref).

Regarding the results from the perspective of an increased F1 score, an improvement was observed in all the methods except for the Decision Tree and MultinomialNB classifiers with Doc2Vec, where a decrease in the F1 score was observed when comparing the metrics _coref and _without. The most notable increase in the F1 score was observed for the K-Nearest Neighbors classifier and the TF–IDF embedding method (0.1557 increase for _coref).

Coreference resolution represents a single method for dataset preparation in the classification tasks of fake news identification. For this reason, even a slight improvement in the method could cause an important shift in the researched area, because the method would be used as part of other methods for dataset preparation.

In future work, we want to find and use other tools for coreference resolution. In this study, we used the neuralcoref library, a neural network model for coreference resolution. This library has not been updated for a long time, and it works only with spaCy 2.1.0, which has long been superseded by newer versions.

It is clear from the results that the method needs to be verified with several other datasets. We plan to validate this method on other datasets with a different thematic focus. An important part of further work would also be improving the accuracy of the method in various other classification tasks of natural language processing, such as classifications of text type, content, or tone. It would also be a research challenge to validate this method in combination with other word vector preparation methods.