Abstract

Brain–computer interface (BCI) technology enables humans to interact with computers by collecting and decoding electroencephalogram (EEG) signals from the brain. For practical EEG-based BCIs, accurate recognition is crucial. However, existing methods often struggle to balance accuracy and complexity. To address this trade-off, we propose a 1D convolutional neural network with a bidirectional recurrent attention unit network (1DCNN-BiRAU) based on a random segment recombination strategy (segment pool, SegPool). This work makes three main contributions. First, SegPool is proposed to increase training data diversity and reduce the impact of a single splicing method on model performance across different tasks. Second, the model employs multiple 1D CNNs, including local and global models, to extract channel information simply and efficiently. Third, BiRAU is introduced to learn temporal information and identify key features in time-series data, using forward–backward networks and an attention gate in the RAU. The experiments show that our model is effective and robust, achieving accuracies of 99.47% and 91.21% in binary classification at the individual and group levels, respectively, and 90.90% and 92.18% in four-category classification. Our model demonstrates promising results for recognizing human motor imagery and has the potential to be applied in practical scenarios such as brain–computer interfaces and neurological disorder diagnosis.

1. Introduction

Electroencephalogram (EEG) has become a widely used non-invasive method for measuring brain activity in recent years [1,2,3,4]. EEG signals have been used in brain–computer interface (BCI) research to develop innovative systems that enable communication between humans and computers. However, accurately classifying EEG signals is challenging due to their inherent complexity and variability between subjects. Unreliable classification will significantly limit the effectiveness of BCI systems. Therefore, developing robust and accurate methods for EEG signal classification is essential for the continued advancement and successful implementation of BCI systems.

Convolutional neural networks (CNNs) have demonstrated their effectiveness in the classification of motor imagery (MI)-based EEG signals in several studies. Dose et al. [5] reported an accuracy of 65.73% in classifying raw signals from a 4-task MI dataset with varying EEG channel configurations using a CNN. Meanwhile, Tang et al. [6] proposed a CNN and empirical mode decomposition (EMD)-based approach for classifying a small 2-task MI dataset with 16 EEG channels, achieving an average accuracy of 85.83% per participant. In addition, recurrent neural network (RNN) algorithms have gained significant popularity in EEG studies because they can model the temporal relationships in EEG signals. P. Wang et al. [7] utilized a long short-term memory (LSTM) network to process EEG time-slice signals and reported an average accuracy of 77.30%. Luo et al. [4] compared the performance of gated recurrent unit (GRU) and LSTM units in RNNs and reported an accuracy of 82.75%.

Despite the progress achieved by existing methods in EEG classification tasks, several challenges still need to be addressed. Firstly, traditional CNNs may not always be practical for 1D signals, especially when dealing with limited or specialized training data. This can lead to unnecessary training parameters and overfitting of small sample data [8]. Secondly, although RNN models are commonly used in EEG classification, their capabilities are still limited [9,10,11,12]. In some cases, different model structures are simply combined without internal adjustment or optimization of the RNN structure. As a result, these models may fail to fully capture the complex temporal relationships present in EEG signals, and they may not achieve optimal performance in classification tasks. Additionally, evidence suggests that studying segments of EEG signals rather than the whole-time sequence may provide more advantages for differentiation [13].

To overcome these challenges, we propose a 1D CNN with a bidirectional recurrent attention unit network based on a random segment recombination strategy (segment pool, SegPool). The contributions of this paper are summarized as follows. Firstly, SegPool is used to increase training data diversity and reduce the impact of a single splicing method on model results for different tasks. Secondly, multiple 1D CNNs, including local and global models, are utilized to extract channel information with simplicity and compactness. By performing only 1D convolutions, these CNNs require fewer training parameters and allow low-cost hardware implementation. Finally, a bidirectional recurrent attention unit (BiRAU) is proposed to learn temporal information and identify key information in time series. This is accomplished through the forward–backward networks and the attention gate in the RAU.

2. Methods

2.1. Overview

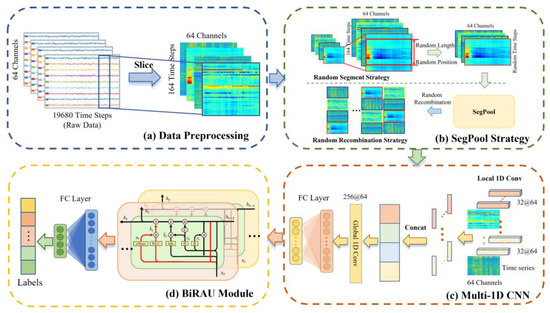

The framework proposed in this paper is illustrated in Figure 1. The whole model can be divided into four parts: (a) Data Preprocessing: extracting raw EEG signals from data files, and filtering and slicing them; (b) SegPool Strategy: the SegPool Strategy utilizes random slicing and recombination strategies to crop and splice EEG signal segments; (c) Multi-1D CNN: in this module, a local 1D CNN and a global 1D CNN are used to extract local and global features from EEG signals, respectively; (d) BiRAU Module: the BiRAU Module contains the bidirectional recurrent attention unit for extracting temporal information, followed by a fully connected layer for prediction.

Figure 1.

The pipeline of the proposed 1DCNN-BiRAU network based on SegPool. (a) represents the preprocessing of raw EEG data, including filtering operations and slicing. (b) represents the strategy of SegPool. (c) represents the local and global 1D CNN module. (d) represents the bidirectional recurrent attention unit.

In the following sections, we introduce each of the above modules in detail.

2.2. Data Preprocessing

In our experiment, we used the EEG Motor Movement/Imagery (M/I) Dataset provided by PhysioNet [14,15]. This dataset includes 1500 EEG recordings from 109 subjects, each undergoing 14 experimental runs: two baseline runs (eyes open/closed) and four M/I tasks, each repeated three times. The tasks involved left/right fist movements (Task1), imagining left/right fist movements (Task2), both fist/foot movements (Task3), and imagining both fist/foot movements (Task4). The EEG signal was sampled at 160 Hz and each task contained 19,680 time points.

To preprocess the data, we first applied a fifth-order Butterworth filter to the extracted raw EEG data to isolate the 6–35 Hz frequency band, which is most relevant for motor imagery tasks [16]. The Butterworth filter is a linear time-invariant system with a very flat passband and a slow roll-off. It is the most widely used filter in EEG signal processing due to its maximally flat frequency response in the passband [17].
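The filtering step can be sketched with SciPy as follows. This is a minimal illustration rather than the exact preprocessing code: the function name `bandpass_eeg`, the zero-phase (forward-backward) application, and the toy test signal are assumptions, and only the order, the 6–35 Hz band, and the 160 Hz sampling rate come from the text.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 160.0          # sampling rate of the dataset (Hz)
BAND = (6.0, 35.0)  # pass band stated in the text (Hz)

def bandpass_eeg(eeg, order=5, band=BAND, fs=FS):
    """Butterworth band-pass along the time axis of a (time, channels) array.

    Assumptions: zero-phase filtering via sosfiltfilt, and SciPy's order
    convention (for a band-pass design, butter(order, ...) yields a filter
    of order 2 * order). The text does not state either choice.
    """
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=0)

# Toy signal: a 10 Hz component (inside the band) plus a 50 Hz component (outside).
t = np.arange(0, 10.0, 1.0 / FS)
in_band = np.sin(2 * np.pi * 10.0 * t)
out_band = np.sin(2 * np.pi * 50.0 * t)
toy = (in_band + out_band)[:, None]      # shape (time, 1 channel)
filtered = bandpass_eeg(toy)[:, 0]       # 50 Hz part is strongly attenuated
```

Second-order sections (`output="sos"`) are used here because they are numerically more stable than transfer-function coefficients for band-pass designs of this order.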

After that, we divided the EEG recordings into small segments (or “clips”) which contained 164 time steps, each corresponding to a single motor imagery task. To increase the diversity of the data, we employed the SegPool strategy, where we randomly combined different clips from different tasks to form a single input sequence for the model. In different tasks, the length of the splicing clips also varied, e.g., two clips were randomly spliced and recombined in the binary classification tasks, while four clips were processed in the same way in the four-category classification tasks.
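As a quick consistency check on these numbers, the arithmetic below relates the sampling rate, the number of time points per task, and the clip length. It assumes non-overlapping clips, which the text does not state explicitly.

```python
FS_HZ = 160               # sampling rate (Hz)
POINTS_PER_TASK = 19_680  # time points per task
CLIP_LEN = 164            # time steps per clip

task_seconds = POINTS_PER_TASK / FS_HZ   # 123.0 s of signal per task
clip_seconds = CLIP_LEN / FS_HZ          # 1.025 s per clip

# Assumption: clips do not overlap, so the task splits evenly into clips.
clips_per_task, leftover = divmod(POINTS_PER_TASK, CLIP_LEN)  # (120, 0)
```

Under that assumption, each task yields 120 clips of roughly one second each, with no samples left over.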

2.3. Model

In this section, we introduce our proposed method in detail, which corresponds to the three modules (b), (c), and (d) in Figure 1. Specifically, the following subsections elaborate on the SegPool Strategy, Multi-1D CNN, and BiRAU Module, respectively.

Random segment recombination strategy. Inspired by the Multi-clip Random Fragment Strategy [18], we proposed a data augmentation technique called SegPool for EEG signal classification. As shown in Figure 1b, the SegPool Strategy randomly slices and concatenates different task segments to create new combinations. The goal of this technique is to increase the diversity of the training data and improve the generalization performance of the model.

SegPool comprises a random segment strategy and a recombination strategy. The random segment strategy is an effective approach to prevent models from overfitting to specific task patterns in EEG signal analysis. This method involves splitting EEG signals into short, fixed-length segments. These segments can be obtained from various tasks in the dataset used, thus increasing the variability of the training data.

The EEG segments obtained through the random segment strategy are pooled together. Then, in the recombination stage, segments from different tasks are randomly combined to form various training examples. This process involves selecting segments and concatenating them randomly to create a new example.

The use of the recombination strategy in the SegPool provides an opportunity to create numerous new training examples with different combinations of task segments. By doing so, we can prevent the model from overfitting to specific task patterns, leading to more accurate EEG signal analysis.
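The two stages of SegPool can be sketched as follows. This is an illustrative reading of the description, not the authors' implementation: the function names, the use of one segment per task in random order, the per-time-step label vector, and the toy pool are all assumptions. The 70–90% segment ratio is the range reported in the parameter settings.

```python
import numpy as np

def random_segment(clip, rng, ratio_range=(0.7, 0.9)):
    """Crop a random contiguous segment covering 70-90% of a (time, channels) clip."""
    clip_len = clip.shape[0]
    seg_len = int(round(clip_len * rng.uniform(*ratio_range)))
    start = int(rng.integers(0, clip_len - seg_len + 1))
    return clip[start:start + seg_len]

def segpool_sample(pool, n_segments, rng):
    """Build one training example from a pool {task_label: (n_clips, time, channels)}.

    Assumption: each example takes one segment from each of n_segments
    distinct tasks, spliced in random order, and keeps a label per time step.
    """
    tasks = rng.permutation(sorted(pool))[:n_segments]
    segments, labels = [], []
    for task in tasks:
        clips = pool[int(task)]
        clip = clips[rng.integers(len(clips))]   # pick a random clip of this task
        seg = random_segment(clip, rng)
        segments.append(seg)
        labels.append(np.full(len(seg), int(task)))
    return np.concatenate(segments, axis=0), np.concatenate(labels)

# Toy pool: 4 tasks, 30 clips each, 164 time steps, 64 channels (placeholder data
# in which every value equals the task label, so the splicing is easy to inspect).
rng = np.random.default_rng(0)
pool = {task: np.full((30, 164, 64), float(task)) for task in range(4)}
sequence, labels = segpool_sample(pool, n_segments=4, rng=rng)
```

With `n_segments=2` the same function produces the two-clip examples used for binary classification, and with `n_segments=4` the four-clip examples used for four-category classification.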

Local and global 1D CNNs. Specifically, as depicted in Figure 1c, the 1D CNN takes a matrix with dimensions M × N as input, where M is the length of the time window and N is the number of EEG channels. Each 1D convolutional layer uses a kernel of variable dimension Q × N, where Q is the temporal window covered by the filter. The mathematical notation of a 1D convolutional layer is as follows:

$$h_r = f\left(\sum_{q=1}^{Q}\sum_{n=1}^{N} w_{q,n}\, x^{(r)}_{q,n} + b\right)$$

where $h_r$ is the output of the unit r of the filter feature map of size R (R = M in the case stride = 1); $x^{(r)}$ is the two-dimensional input portion overlapping with the filter at position r; w is the connection weight of the convolutional filter; b is the bias term; and f is the activation function of the filter.

In this work, we applied multiple layers of 1D CNN with different kernel sizes and numbers of kernels to extract hierarchical features from the EEG recordings. We used both local and global 1D CNNs, where the local CNN operates on a single task segment and the global CNN operates on combinations of task segments. The output of the 1D CNN layers was a set of high-dimensional feature vectors, which were then passed to the BiRAU for further processing.
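The 1D convolutional layer described above can be illustrated for a single filter with plain NumPy. This is a sketch under stated assumptions: zero "same" padding is used so that R = M at stride 1, ReLU stands in for the activation f, and the function name `conv1d_layer` is hypothetical.

```python
import numpy as np

def conv1d_layer(x, w, b, stride=1):
    """Apply one Q x N filter to an M x N input and return the R unit outputs.

    Assumptions: zero 'same' padding along time (so R = M when stride = 1)
    and ReLU as the activation function f.
    """
    M, _ = x.shape
    Q = w.shape[0]
    pad_left = (Q - 1) // 2
    pad_right = (Q - 1) - pad_left
    padded = np.pad(x, ((pad_left, pad_right), (0, 0)))
    # Each unit r sees the Q x N portion of the input overlapping the filter.
    pre_activation = np.array(
        [np.sum(w * padded[r:r + Q]) + b for r in range(0, M, stride)]
    )
    return np.maximum(pre_activation, 0.0)

# Toy input: M = 6 time steps, N = 2 channels, and a Q = 3 filter of ones.
x = np.arange(12, dtype=float).reshape(6, 2)
w = np.ones((3, 2))
out = conv1d_layer(x, w, b=0.0)   # values: 6, 15, 27, 39, 51, 38
```

Because the filter spans all N channels and slides only along time, the parameter count per filter is Q × N + 1, which is what keeps the 1D design compact.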

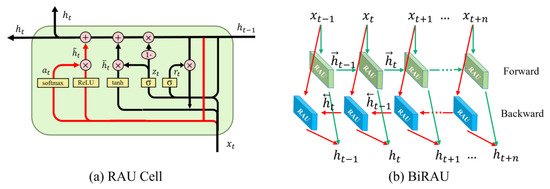

Bidirectional recurrent attention unit. As shown in Figure 1d, we used BiRAU to extract temporal relational features between EEG signals. RAU stands for recurrent attention unit [19], which is a type of RNN that can be used for sequence modeling and prediction tasks. Different from other attention mechanisms [20], an RAU architecture seamlessly integrates the attention mechanism into the cell of a GRU and allows the network to focus on different parts of the input sequence selectively. The architecture of the RAU cell is shown in Figure 2.

Figure 2.

(a) The architecture of the RAU cell. The part with red links is the attention gate. (b) The architecture of the BiRAU design.

The attention weights of the attention gate in the RAU are calculated as follows:

$$a_t = \mathrm{softmax}\left(W_a\,[x_t, h_{t-1}] + b_a\right)$$

where $[\cdot,\cdot]$ denotes concatenation; $W_a$ and $b_a$ are learnable parameters; $x_t$ is the external input vector at time step t; and $h_{t-1}$ is the hidden state of the previous time step. The attention gate assigns a high weight to valuable information and a low weight to unimportant information. It enables the network to identify important feature dimensions for the current and subsequent hidden states by combining the input at the current time step and previous hidden state.

Furthermore, the hidden state with attention can be computed as

$$\hat{h}_t = a_t \odot \mathrm{ReLU}\left(W_h\,[x_t, h_{t-1}] + b_h\right)$$

where $W_h$ and $b_h$ are learnable parameters; $\mathrm{ReLU}$ is the activation function, which is short for rectified linear unit; and $\odot$ denotes the Hadamard product.
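One plausible form of the attention branch can be sketched in NumPy. This is an assumption-laden illustration, not the RAU cell of [19]: the softmax over feature dimensions, the concatenated input, and all parameter names (`W_a`, `b_a`, `W_h`, `b_h`) are hypothetical choices that match the verbal description, and the GRU update and reset gates of the full cell are omitted.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1D vector."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

def attention_gate_step(x_t, h_prev, W_a, b_a, W_h, b_h):
    """Return attention weights and the attended hidden state for one time step.

    Assumption: both branches read the concatenation of the current input
    and the previous hidden state.
    """
    concat = np.concatenate([x_t, h_prev])
    a_t = softmax(W_a @ concat + b_a)                # weights over feature dimensions
    candidate = np.maximum(W_h @ concat + b_h, 0.0)  # ReLU branch
    return a_t, a_t * candidate                      # Hadamard product

# Toy sizes: 8 input features, 4 hidden units, random placeholder parameters.
rng = np.random.default_rng(0)
input_size, hidden_size = 8, 4
W_a = rng.normal(size=(hidden_size, input_size + hidden_size))
W_h = rng.normal(size=(hidden_size, input_size + hidden_size))
b_a = np.zeros(hidden_size)
b_h = np.zeros(hidden_size)
a_t, h_att = attention_gate_step(
    rng.normal(size=input_size), np.zeros(hidden_size), W_a, b_a, W_h, b_h
)
```

The weights in `a_t` are non-negative and sum to one, so feature dimensions with large weights dominate the attended state while the rest are suppressed.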

In this work, we propose a BiRAU structure, shown in Figure 2b, to extract temporal information from the high-dimensional feature vectors produced by the 1D CNNs, which is essential for accurate classification of motor imagery tasks. The BiRAU processes the input sequence in both the forward and backward directions, so its hidden states capture both past and future context of the sequence. The output of the BiRAU can be computed as follows:

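$$h_t = \left[\overrightarrow{h}_t,\ \overleftarrow{h}_t\right]$$

where $h_t$ is the concatenation of the forward state $\overrightarrow{h}_t$ and the backward state $\overleftarrow{h}_t$ of the same size.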
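The bidirectional wrapping itself is independent of the recurrent cell and can be sketched as follows. To keep the example short, a plain tanh recurrent cell stands in for the RAU cell; that substitution, the helper names, and the toy sizes are assumptions made only for illustration.

```python
import numpy as np

def run_direction(xs, step, hidden_size):
    """Run a recurrent step function over xs (time, features) from a zero state."""
    h = np.zeros(hidden_size)
    states = []
    for x_t in xs:
        h = step(x_t, h)
        states.append(h)
    return np.stack(states)

def bidirectional(xs, fwd_step, bwd_step, hidden_size):
    """Concatenate forward and backward hidden states at every time step."""
    forward = run_direction(xs, fwd_step, hidden_size)
    # Process the reversed sequence, then flip back so time indices line up.
    backward = run_direction(xs[::-1], bwd_step, hidden_size)[::-1]
    return np.concatenate([forward, backward], axis=1)

def make_tanh_cell(input_size, hidden_size, rng):
    """Stand-in recurrent cell (NOT an RAU): h = tanh(W [x, h_prev] + b)."""
    W = rng.normal(scale=0.1, size=(hidden_size, input_size + hidden_size))
    b = np.zeros(hidden_size)
    return lambda x_t, h: np.tanh(W @ np.concatenate([x_t, h]) + b)

rng = np.random.default_rng(0)
xs = rng.normal(size=(6, 8))              # 6 time steps, 8 features
fwd_cell = make_tanh_cell(8, 4, rng)
bwd_cell = make_tanh_cell(8, 4, rng)
outputs = bidirectional(xs, fwd_cell, bwd_cell, hidden_size=4)  # shape (6, 8)
```

At each time step the first half of the output summarizes the past and the second half summarizes the future, which is why the output width is twice the hidden size.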

3. Experiments and Results

In this work, binary and four-category classification experiments were designed at the individual and group levels. We randomly selected 10 subjects from PhysioNet, denoted as Sbj1-Sbj10 in the experiments. For each subject, 67% of the signals were used as the training set and the remaining 33% as the testing set.
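A per-subject 67%/33% split can be sketched as below. The random shuffling, the fixed seed, and the function name `split_subject` are assumptions; the text specifies only the proportions.

```python
import numpy as np

def split_subject(samples, labels, train_frac=0.67, seed=0):
    """Shuffle one subject's examples and split them into train and test sets.

    Assumption: the split is random; the text does not say whether it was
    random or chronological.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(samples))
    n_train = int(round(train_frac * len(samples)))
    train_idx, test_idx = order[:n_train], order[n_train:]
    return (samples[train_idx], labels[train_idx]), (samples[test_idx], labels[test_idx])

# Toy data: 100 examples identified by their index, with alternating labels.
samples = np.arange(100)
labels = samples % 2
(train_x, train_y), (test_x, test_y) = split_subject(samples, labels)
```

Splitting each subject separately keeps every subject represented in both sets, which matters for the individual-level experiments.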

3.1. Ablation Experiment

To validate the effectiveness of our proposed method and determine the contribution of each component to the results, we designed four ablation experiments on five subjects: (1) 1DCNN+BiLSTM, (2) 1DCNN+BiRNN, (3) local 1DCNN+BiRAU, and (4) global 1DCNN+BiRAU. In experiments (1) and (2), the 1D CNN comprised both the local 1D CNN and global 1D CNN. We examined the individual contributions of the local 1D CNN, the global 1D CNN, and the BiRAU to the model’s performance. The results of the ablation experiments are presented in Table 1.

Table 1.

The accuracies of the ablation experiments.

Clearly, both the local 1D CNN and global 1D CNN play a role in the feature extraction of EEG information. In particular, after using the SegPool Strategy, the local 1D CNN can focus more on extracting the features of each task itself, while the global 1D CNN helps the model learn the different tasks and the features that distinguish them. Moreover, the BiRAU achieves better classification performance than the BiLSTM and BiRNN.

3.2. Parameter Settings

Parameter tuning significantly affects classification performance. To identify the optimal parameter settings for our proposed model, we conducted parameter tuning experiments using four-category classification tasks on five subjects. The experiments aimed to investigate the impact of several key parameters on the model’s performance, including the number of local 1D convolution layers, the number of global 1D convolution layers, and the numbers of layers and neurons in the BiRAU. We also varied the ratio of random slices according to a previous work [18] and chose a range of 70–90% for the length of the random segments.

Table 2 summarizes the results of the parameter tuning experiments. It could be observed that consistent results were achieved across experiments for different subjects. The optimal parameter settings for all five subjects consisted of 32 local convolution layers and 256 global convolution layers, along with a two-layer BiRAU with 32 neurons, which attained the best average accuracy of 90.25%.

Table 2.

Parameter tuning experiments.

In summary, a 32-layer local 1D CNN could capture the feature information for each task. However, we needed a larger global 1D CNN to identify the differences between tasks. A two-layer BiRAU with 32 neurons could handle the temporal dimension well, as shown by its high correlation coefficient with the EEG signals over time. Notably, the following experiments were performed based on this model’s parameter setting.

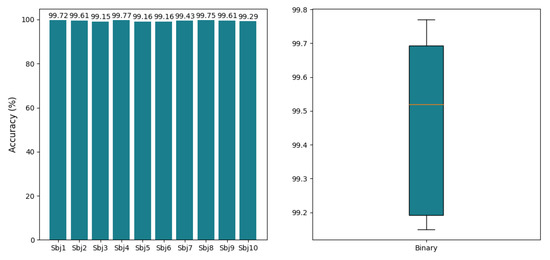

3.3. Classification Performance on Individual Level

To evaluate the effectiveness of our proposed model, we conducted binary classification experiments using the EEG data from ten different subjects. The hyperparameter settings for each subject were kept consistent. The experimental results are presented in Figure 3, which shows that our proposed model achieves good classification performance across all ten subjects. Notably, Sbj4 achieves the highest accuracy of 99.77%, while Sbj3 obtains the lowest accuracy of 99.15%.

Figure 3.

Individual-level performance (accuracy) in binary classification.

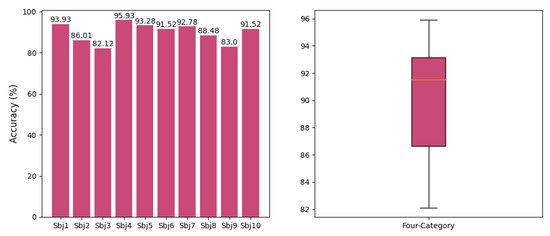

We further evaluated the four-category classification performance using the same experimental settings. The results are shown in Figure 4. It can be seen that the proposed model achieves an average accuracy of 90.90% across all subjects, with the highest accuracy of 95.93% and the lowest accuracy of 82.12% in the four-category classification task.

Figure 4.

Individual-level performance (accuracy) in four-category classification.

Our results demonstrate that the proposed model can effectively differentiate brain states across diverse subjects, even with consistent hyperparameter settings. This suggests that our model has great potential for real-world applications that involve a variety of subjects.

3.4. Classification Performance at Group Level

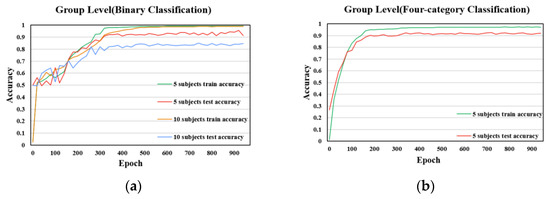

The interpretation of EEG signals is still a challenge due to large inter-subject variability [19,20,21]. To address this challenge, we conducted group-level experiments to further evaluate the classification performance of our 1DCNN-BiRAU model in binary and four-category classification. We selected five subjects and ten subjects for the group-level training, and the experimental results are presented in Figure 5.

Figure 5.

The performance at group level. (a) shows the training and test accuracy of binary classification, and (b) shows the training and test accuracy of four-category classification.

The results show that promising classification performance can be consistently obtained for group-level experiments. The proposed model in this paper can effectively mitigate overfitting while also achieving consistent and stable results. It achieves accuracy of 91.21% (five subjects) and 84.57% (ten subjects) in the binary classification task, and accuracy of 92.18% (five subjects) in the four-category classification task. The findings demonstrate that our proposed model is capable of maintaining good classification performance when applied to a group of subjects. These results also suggest that our model effectively captures inter-subject differences and generalizes well across multiple subjects.

3.5. Comparison with Existing Studies

To assess the effectiveness of our proposed model, we conducted a comparison with the classification results reported in related works. As shown in Table 3, the results demonstrate that the proposed 1DCNN-BiRAU model based on SegPool in this paper achieves impressive results in both the binary and four-category classification tasks at the individual level. The model achieves an average accuracy of 99.47% and 90.90%, respectively, which represents a significant improvement over existing work where the highest performance is 90.08% [22] and 90.00% [23]. These results strongly demonstrate the superior performance of the proposed model in differentiating brain states.

Table 3.

Comparison with existing studies (individual level).

4. Conclusions

In this work, we proposed a novel 1DCNN-BiRAU model based on a random segment recombination strategy, and the results demonstrate its effectiveness in recognizing human motor imagery. Furthermore, given the inter-subject variability, it is imperative to explore approaches to enhance the accuracy and robustness of our model across subjects.

While promising results were obtained with a limited sample of 10 individuals, further testing using a larger and more diverse participant pool is needed. We also conducted four-category classification experiments on groups of 10, 20, and 40 subjects, with our model achieving accuracies of 85.15%, 83.49%, and 79.97%, respectively. Expanding the participant sample size will accentuate individual differences but also augment model training and robustness. In future work, we will thoroughly investigate inter-subject variability, optimize the model architectures, and apply our proposed model to real-world applications.

Author Contributions

Conceptualization, H.H. and C.Y.; methodology, H.H.; software, E.S.; validation, C.Y., H.H. and E.S.; formal analysis, C.Y.; investigation, S.Y.; resources, J.W. (Jiaqi Wang); data curation, Y.K.; writing—original draft preparation, H.H.; writing—review and editing, C.Y.; visualization, J.W. (Jinru Wu); supervision, S.Z.; project administration, S.Z.; funding acquisition, S.Z.; All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the High-Level Researcher Start-Up Projects of Northwestern Polytechnical University (Grant No. 06100-23SH0201228) and the Basic Research Projects of Characteristic Disciplines of Northwestern Polytechnical University (Grant No. G2023WD0146).

Institutional Review Board Statement

Not applicable. This study utilized a publicly available EEG dataset which does not require ethical approval. All data used do not contain personally identifiable information or offensive content.

Informed Consent Statement

Not applicable. This study utilized an open-source EEG dataset that is publicly available, and did not involve direct participation of human subjects.

Data Availability Statement

The data used in this study were obtained from a publicly available dataset, which can be found at the following link: https://archive.physionet.org/pn4/eegmmidb/, accessed on 9 September 2009.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Alotaiby, T.; El-Samie FE, A.; Alshebeili, S.A.; Ahmad, I. A review of channel selection algorithms for EEG signal processing. EURASIP J. Adv. Signal Process. 2015, 2015, 66.

- Xie, X.; Yang, Y. Study on classification algorithm of motor imagination EEG signal. In Proceedings of the 2021 2nd International Conference on Artificial Intelligence and Computer Engineering (ICAICE), Hangzhou, China, 5–7 November 2021; pp. 605–609.

- Roy, Y.; Banville, H.; Albuquerque, I.; Gramfort, A.; Falk, T.H.; Faubert, J. Deep learning-based electroencephalography analysis: A systematic review. J. Neural Eng. 2019, 16, 051001.

- Lotte, F.; Bougrain, L.; Clerc, M. Electroencephalography (EEG)-Based Brain-Computer Interfaces. In Wiley Encyclopedia of Electrical and Electronics Engineering; Wiley: Hoboken, NJ, USA, 2015.

- Dose, H.; Møller, J.S.; Iversen, H.K.; Puthusserypady, S. An end-to-end deep learning approach to MI-EEG signal classification for BCIs. Expert Syst. Appl. 2018, 114, 532–542.

- Tang, X.; Li, W.; Li, X.; Ma, W.; Dang, X. Motor imagery EEG recognition based on conditional optimization empirical mode decomposition and multi-scale convolutional neural network. Expert Syst. Appl. 2020, 149, 113285.

- Wang, P.; Jiang, A.; Liu, X.; Shang, J.; Zhang, L. LSTM-Based EEG Classification in Motor Imagery Tasks. IEEE Trans. Neural Syst. Rehabil. Eng. A Publ. IEEE Eng. Med. Biol. Soc. 2018, 26, 2086–2095.

- Kiranyaz, S.; Avci, O.; Abdeljaber, O.; Ince, T.; Gabbouj, M.; Inman, D.J. 1D convolutional neural networks and applications: A survey. Mech. Syst. Signal Process. 2021, 151, 107398.

- Orgeron, J. EEG Signals Classification Using LSTM-Based Models and Majority Logic. Master’s Thesis, Georgia Southern University, Statesboro, GA, USA, 2022. Available online: https://digitalcommons.georgiasouthern.edu/etd/2391 (accessed on 1 July 2022).

- Petrosian, A.; Prokhorov, D.; Homan, R.; Dasheiff, R.; Wunsch, D. Recurrent neural network based prediction of epileptic seizures in intra- and extracranial EEG. Neurocomputing 2000, 30, 201–218.

- Supakar, R.; Satvaya, P.; Chakrabarti, P. A deep learning based model using RNN-LSTM for the Detection of Schizophrenia from EEG data. Comput. Biol. Med. 2022, 151, 106225.

- Najafi, T.; Jaafar, R.; Remli, R.; Wan Zaidi, W.A. A Classification Model of EEG Signals Based on RNN-LSTM for Diagnosing Focal and Generalized Epilepsy. Sensors 2022, 22, 7269.

- Wang, H.; Zhao, S.; Dong, Q.; Cui, Y.; Chen, Y.; Han, J.; Xie, L.; Liu, T. Recognizing Brain States Using Deep Sparse Recurrent Neural Network. IEEE Trans. Med. Imaging 2019, 38, 1058–1068.

- Schalk, G.; McFarland, D.J.; Hinterberger, T.; Birbaumer, N.; Wolpaw, J.R. BCI2000: A general-purpose brain-computer interface (BCI) system. IEEE Trans. Biomed. Eng. 2004, 51, 1034–1043.

- Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, E215–E220.

- Altaheri, H.; Muhammad, G.; Alsulaiman, M.; Amin, S.U.; Altuwaijri, G.A.; Abdul, W.; Bencherif, M.A.; Faisal, M. Deep learning techniques for classification of electroencephalogram (EEG) motor imagery (MI) signals: A review. Neural Comput. Appl. 2023, 35, 14681–14722.

- Baig, M.Z.; Aslam, N.; Shum, H.P.H. Filtering techniques for channel selection in motor imagery EEG applications: A survey. Artif. Intell. Rev. 2020, 53, 1207–1232.

- Zhang, S.; Shi, E.; Wu, L.; Wang, R.; Yu, S.; Liu, Z.; Xu, S.; Liu, T.; Zhao, S. Differentiating brain states via multi-clip random fragment strategy-based interactive bidirectional recurrent neural network. Neural Netw. 2023, 165, 1035–1049.

- Niu, Z.; Zhong, G.; Yue, G.; Wang, L.-N.; Yu, H.; Ling, X.; Dong, J. Recurrent attention unit: A new gated recurrent unit for long-term memory of important parts in sequential data. Neurocomputing 2023, 517, 1–9.

- Wang, F.; Tax, D. Survey on the attention based RNN model and its applications in computer vision. arXiv 2016, arXiv:1601.06823. Available online: https://www.semanticscholar.org/paper/Survey-on-the-attention-based-RNN-model-and-its-in-Wang-Tax/f660ea723b62f69b9f4c439724a6b73357e1d3c3 (accessed on 25 January 2016).

- Tanaka, H. Group task-related component analysis (gTRCA): A multivariate method for inter-trial reproducibility and inter-subject similarity maximization for EEG data analysis. Sci. Rep. 2020, 10, 84.

- Xiaoling, L. Motor imagery-based EEG signals classification by combining temporal and spatial deep characteristics. Int. J. Intell. Comput. Cybern. 2020, 13, 437–453.

- Khademi, Z.; Ebrahimi, F.; Kordy, H.M. A transfer learning-based CNN and LSTM hybrid deep learning model to classify motor imagery EEG signals. Comput. Biol. Med. 2022, 143, 105288.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).