Domain Knowledge Graph Question Answering Based on Semantic Analysis and Data Augmentation

Abstract

1. Introduction

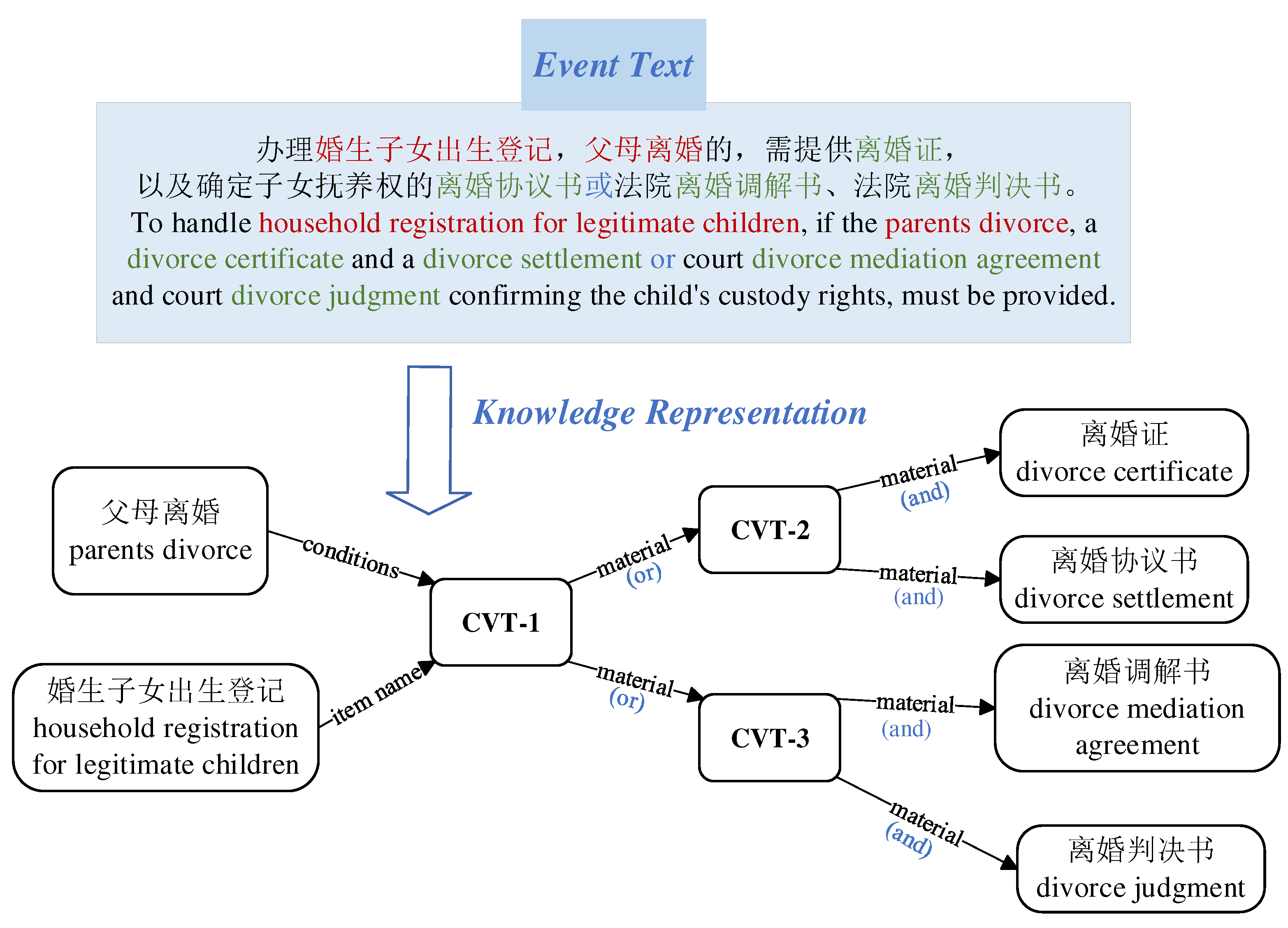

- This paper uses compound value type (CVT) nodes to store household registration events. Because a CVT node aggregates the multiple attributes of an event, it models complex relationships between entity nodes more accurately. As a result, queries with multiple constraints in the knowledge graph (KG) are reduced to simple queries;

- This paper combines KGs with text similarity techniques to improve the accuracy of the QA system. A corpus of query questions is used to train a RoBERTa-BiLSTM-MultiHeadAttention (RBMA) model that classifies query intent. When the intent is clear, the language technology platform (LTP) [7] extracts semantic role phrases from the query, and the answer is then retrieved from the KG. When the intent is ambiguous, text similarity techniques match the input query against the question corpus, and the answer to the most similar question is output;

- This paper uses a large language model (LLM) to augment the training data, which mitigates data imbalance and improves intent classification accuracy. The GPT-3.5-turbo model enlarges the dataset by synonym substitution and random insertion of irrelevant words. Experimental results show that data augmentation substantially improves the performance of the QA system. When trained on the augmented data, the RBMA intent classifier reaches an F1-score of 0.9774 and an accuracy of 0.93, compared with 0.8969 and 0.87 without augmentation.
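The CVT idea in the first contribution can be illustrated with a minimal in-memory sketch. It assumes that each variant of an event (one event under one constraint condition) becomes a single CVT node bundling that variant's attributes. Under that assumption, a question with two constraints resolves to one node lookup instead of a join over several separate triples. The class, function, attribute names and example values are hypothetical and do not reproduce the paper's graph schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CVTNode:
    """Hypothetical CVT node: one node per (event, condition) variant,
    bundling attributes that would otherwise sit in separate triples."""
    event: str       # household registration event name
    condition: str   # constraint under which this variant applies
    attributes: dict[str, str] = field(default_factory=dict)


def lookup(nodes: list[CVTNode], event: str, condition: str, attribute: str) -> str | None:
    """Resolve a multi-constraint question: event and condition select one
    CVT node, and the requested attribute on that node is the answer."""
    for node in nodes:
        if node.event == event and node.condition == condition:
            return node.attributes.get(attribute)
    return None


# Placeholder data for illustration only.
graph = [
    CVTNode("household transfer", "condition A", {"required materials": "materials list A"}),
    CVTNode("household transfer", "condition B", {"required materials": "materials list B"}),
]

print(lookup(graph, "household transfer", "condition B", "required materials"))
# prints: materials list B
```

Without the CVT node, the same question would need to intersect a "condition" triple and a "required materials" triple that share no direct link. Grouping them under one node is what turns the multi-constraint query into a single match.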

2. Related Works

3. Construction of the Household Registration Domain Knowledge Graph

3.1. Data Acquisition

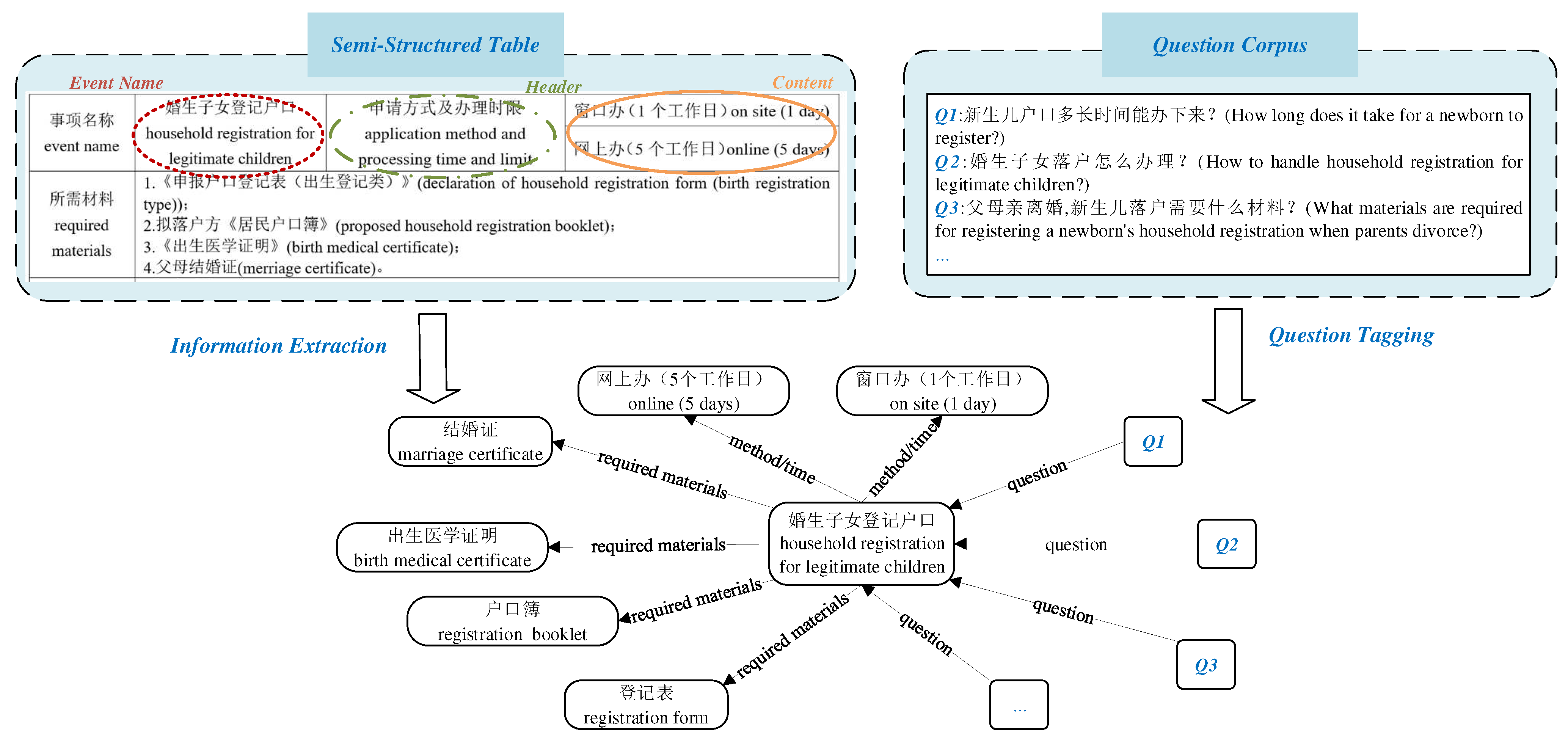

3.2. Information Extraction

- (1) The Guidelines are semi-structured tables whose theme tags and headers can be extracted directly as relationships and attributes. The name of each household registration business event is extracted as the head entity, and the specific contents of the tables are extracted as tail entities, forming the basic event triplets of the household registration business;

- (2) Supplementary explanations of household registration events under different constraint conditions are attached as tail entities to the head entity (the event name), forming the condition triplets of the household registration events;

- (3) The questions in the question corpus were manually labeled and linked to the entities from steps (1) and (2), forming the question triplets of the household registration events.
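The three extraction steps can be sketched as small functions that each emit (head, relation, tail) triplets. This assumes each Guidelines table has already been parsed into a mapping from header to cell content. The relation labels "condition" and "asks_about" are hypothetical placeholders, as are the function names and example strings.

```python
from __future__ import annotations

Triplet = tuple[str, str, str]


def event_triplets(event: str, table: dict[str, str]) -> list[Triplet]:
    """Step (1): event name as head entity, table header as relation,
    cell content as tail entity."""
    return [(event, header, content) for header, content in table.items()]


def condition_triplets(event: str, notes: list[str]) -> list[Triplet]:
    """Step (2): each supplementary explanation becomes a tail entity of the
    event-name head entity ('condition' is a hypothetical relation label)."""
    return [(event, "condition", note) for note in notes]


def question_triplets(labels: list[tuple[str, str]]) -> list[Triplet]:
    """Step (3): each manually labeled (question, entity) pair is linked with
    a hypothetical 'asks_about' relation to an entity from steps (1)-(2)."""
    return [(question, "asks_about", entity) for question, entity in labels]


# Placeholder input standing in for one parsed Guidelines table.
triplets = (
    event_triplets("household transfer", {"processing location": "location text",
                                          "processing time limit": "time limit text"})
    + condition_triplets("household transfer", ["supplementary note text"])
    + question_triplets([("Where do I apply for a household transfer?", "household transfer")])
)
for t in triplets:
    print(t)
```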

3.3. Knowledge Representation

4. Question Answering System

4.1. System Structure

4.2. Semantic Parsing

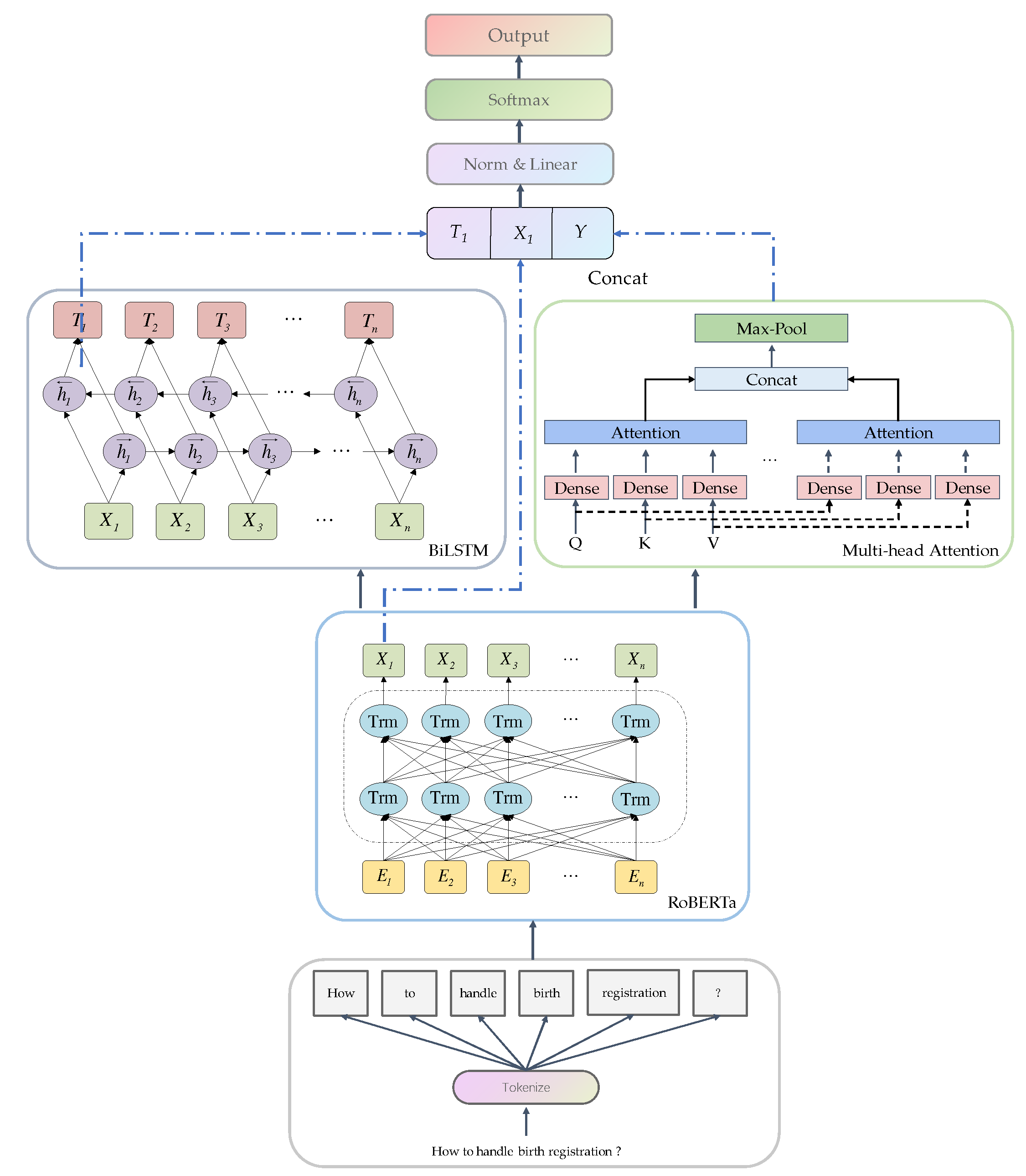

4.2.1. Question Intention Classification

- (1) RoBERTa layer

- (2) BiLSTM layer

- (3) Multi-head attention layer

- (4) Linear layer
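For reference, the four layers listed above correspond to the following standard formulations. This is a sketch in our own notation. The BiLSTM output concatenates forward and backward hidden states; only one layer is shown, and a second layer takes $H$ as its input. The attention layer follows the multi-head attention of Vaswani et al. The mean pooling before the linear layer is an assumption, and the RBMA model's exact configuration may differ. Here $d = 768$ matches the embedding dimension in the hyperparameter table, and $C = 7$ is the number of intent classes.

$$
\begin{aligned}
E &= \mathrm{RoBERTa}(w_1,\dots,w_n) \in \mathbb{R}^{n \times d} \\
h_t &= [\overrightarrow{h}_t ; \overleftarrow{h}_t], \quad \overrightarrow{h}_t = \overrightarrow{\mathrm{LSTM}}(e_t, \overrightarrow{h}_{t-1}), \quad \overleftarrow{h}_t = \overleftarrow{\mathrm{LSTM}}(e_t, \overleftarrow{h}_{t+1}) \\
\mathrm{head}_i &= \mathrm{softmax}\!\left(\frac{(H W_i^{Q})(H W_i^{K})^{\top}}{\sqrt{d_k}}\right) H W_i^{V}, \qquad i = 1,\dots,m \\
A &= \mathrm{Concat}(\mathrm{head}_1,\dots,\mathrm{head}_m)\, W^{O} \\
\hat{y} &= \mathrm{softmax}\!\left(W \, \frac{1}{n}\sum_{t=1}^{n} a_t + b\right) \in \mathbb{R}^{C}
\end{aligned}
$$

In these equations, $e_t$ and $a_t$ are the $t$-th rows of $E$ and $A$, and $H$ stacks the BiLSTM outputs $h_t$ as rows.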

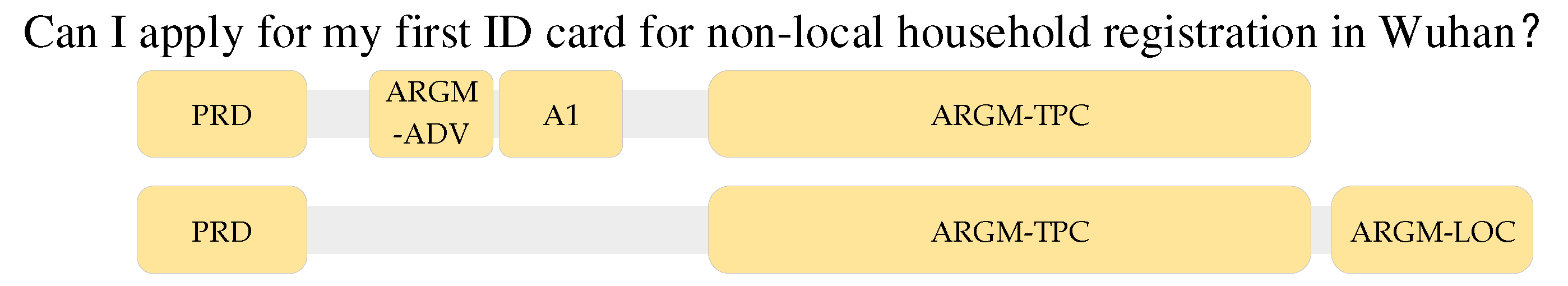

4.2.2. Event Matching

- (1) Semantic role analysis

- (2) Text similarity matching

- First, the phrases in the phrase set obtained from semantic role analysis are reassembled and concatenated into a single sentence that carries the extracted semantic role features;

- Next, all CVT nodes representing household registration events are retrieved from the KG, and their description texts are collected to form a candidate set;

- Finally, similarity matching is performed between the reassembled sentence and each description text in the candidate set to identify the household registration item that the question refers to and its CVT node in the KG.
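The three steps can be sketched in Python under several assumptions:

- The semantic role output is given as (role, phrase) pairs using the labels from the LTP role table. This is not LTP's actual return format.
- The set of retained roles is hypothetical.
- Cosine similarity over character bigrams stands in for a trained sentence-similarity model.

Character bigrams need no word segmentation, so the same code also applies to Chinese questions.

```python
from __future__ import annotations

import math
from collections import Counter

# Hypothetical selection of semantic-role labels to keep when reassembling.
KEPT_ROLES = {"A0", "A1", "A2", "PRD", "LOC", "CND", "TPC"}


def reassemble(roles: list[tuple[str, str]]) -> str:
    """Step 1: concatenate the phrases with kept roles, in original order."""
    return " ".join(phrase for role, phrase in roles if role in KEPT_ROLES)


def char_bigrams(text: str) -> Counter:
    """Character bigram counts; no word segmentation needed."""
    text = text.lower()
    return Counter(text[i:i + 2] for i in range(len(text) - 1))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def match_event(sentence: str, candidates: dict[str, str]) -> tuple[str, float]:
    """Step 3: score each description text in the candidate set (step 2,
    given as node id -> text) and return the best CVT node id and score."""
    query = char_bigrams(sentence)
    scores = {node_id: cosine(query, char_bigrams(text))
              for node_id, text in candidates.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Hypothetical semantic-role output and candidate set.
srl = [("A0", "I"), ("ADV", "urgently"), ("PRD", "transfer"),
       ("A1", "my household registration"), ("LOC", "to Wuhan")]
sentence = reassemble(srl)  # "I transfer my household registration to Wuhan"
candidates = {
    "cvt:transfer-in": "transfer household registration into Wuhan",
    "cvt:newborn": "register a newborn child",
}
print(match_event(sentence, candidates))
```

Dropping roles such as ADV removes filler ("urgently") before matching. This is the intuition behind comparing reassembled role phrases rather than the raw question.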

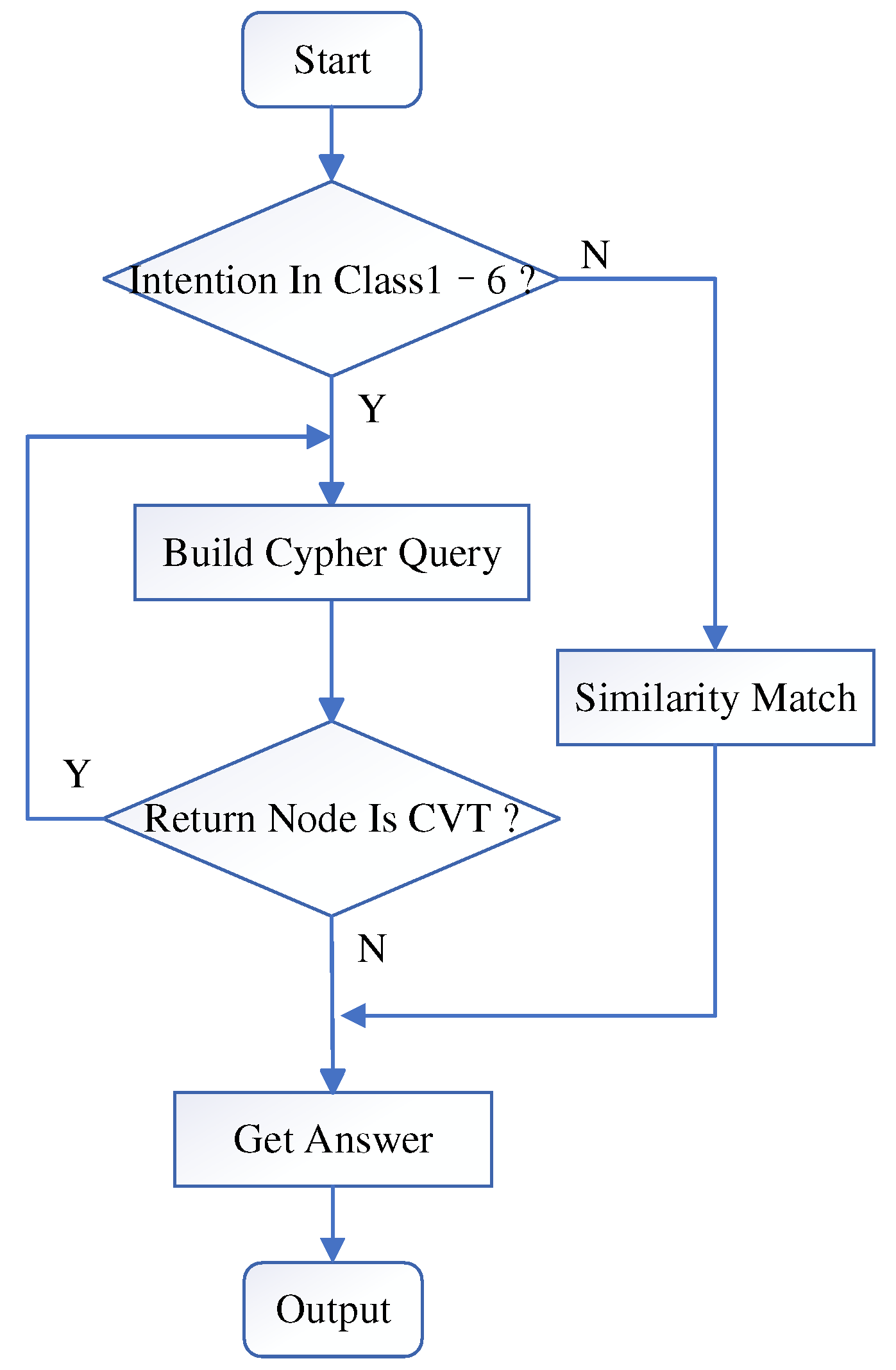

4.3. Answer Retrieval

- (1)

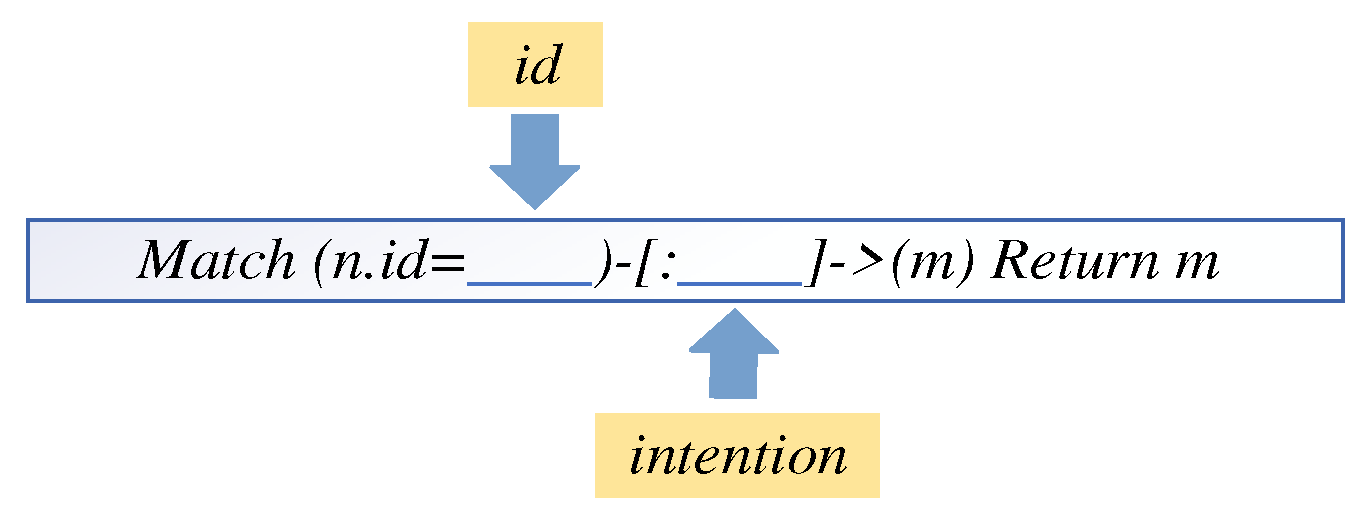

- The first method applies to belonging to class 1 to class 6, which fills in predesigned Cypher query templates with and of the event node to construct querying statements. The Cypher query template is shown in Figure 8. If the query returns an answer node, the node content will be output as an answer. If a CVT node is returned, the node will be re-inserted into the Cypher query template and the query will continue until an answer node is returned, and use the text content stored in the answer node as the output;

- (2) The second method is used when  is class 7, meaning that the question is unrelated to household registration processing. In this case, the CoSENT model retrieves the most similar question from the question corpus, which is then used to generate the answer.
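The two retrieval methods can be sketched in Python. This is a minimal sketch under several assumptions:

- A dictionary of (node, relation) to node edges stands in for the graph database.
- The Cypher template and the relation label for each intent class are hypothetical; the actual template is the one in Figure 8.
- Class 6 is left out because the relation it queries is not specified here.
- `difflib.SequenceMatcher` replaces the CoSENT model for the class-7 corpus lookup.

```python
from __future__ import annotations

import difflib

# Hypothetical template; a real system should pass the node name as a Cypher
# query parameter rather than formatting it into the string.
CYPHER_TEMPLATE = "MATCH (n {{name: '{node}'}})-[:`{relation}`]->(t) RETURN t"

# Hypothetical relation labels for intent classes 1-5 (class 6 not covered).
CLASS_RELATION = {
    1: "application method and time limit",
    2: "processing location",
    3: "application conditions",
    4: "required materials",
    5: "processing procedures",
}


def answer_from_kg(edges, cvt_nodes, event_node, intent, max_hops=5):
    """Method (1): query along the relation for this intent; while the result
    is a CVT node, re-insert it into the template and query again."""
    relation = CLASS_RELATION[intent]
    node, issued = event_node, []
    for _ in range(max_hops):
        issued.append(CYPHER_TEMPLATE.format(node=node, relation=relation))
        node = edges.get((node, relation))  # in-memory stand-in for running the query
        if node is None or node not in cvt_nodes:
            return node, issued  # answer node, or None if nothing matched
    return None, issued


def answer_from_corpus(question, corpus):
    """Method (2): return the stored answer of the most similar corpus question
    (difflib ratio used here as a lightweight stand-in for CoSENT)."""
    best = max(corpus, key=lambda q: difflib.SequenceMatcher(None, question, q).ratio())
    return corpus[best]


def answer(question, intent, event_node, edges, cvt_nodes, corpus):
    if intent in CLASS_RELATION:
        return answer_from_kg(edges, cvt_nodes, event_node, intent)[0]
    if intent == 7:
        return answer_from_corpus(question, corpus)
    raise NotImplementedError("class 6 is not covered by this sketch")


# Placeholder graph: the event points to a CVT node, which points to the answer.
edges = {
    ("household transfer", "required materials"): "cvt:transfer-materials",
    ("cvt:transfer-materials", "required materials"): "materials list text",
}
cvt_nodes = {"cvt:transfer-materials"}
corpus = {"What is the weather like today?": "weather answer text",
          "Who are you?": "self-introduction text"}

print(answer("", 4, "household transfer", edges, cvt_nodes, corpus))
print(answer("what's the weather today", 7, None, edges, cvt_nodes, corpus))
```

The `max_hops` bound is a defensive choice so that a cycle of CVT nodes cannot make the loop run forever.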

5. Experiment and Analysis

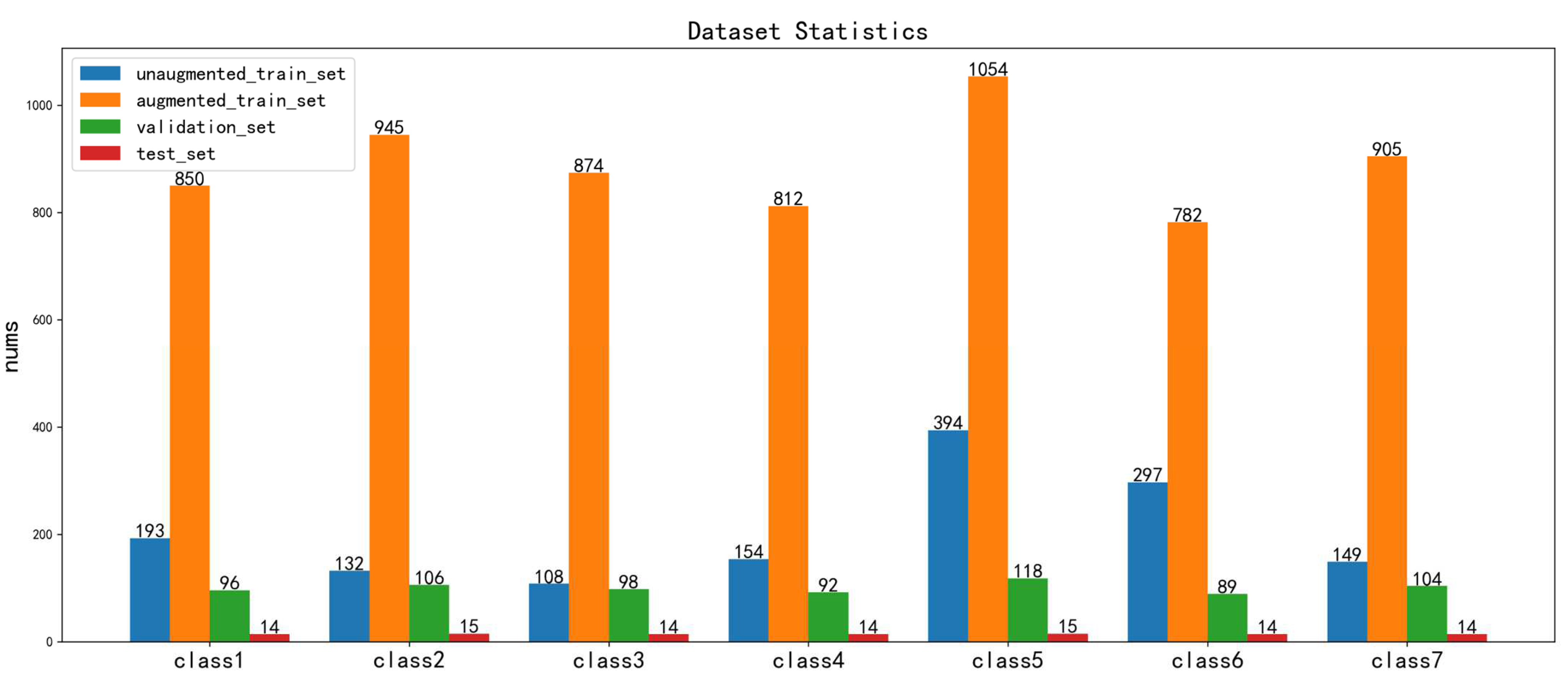

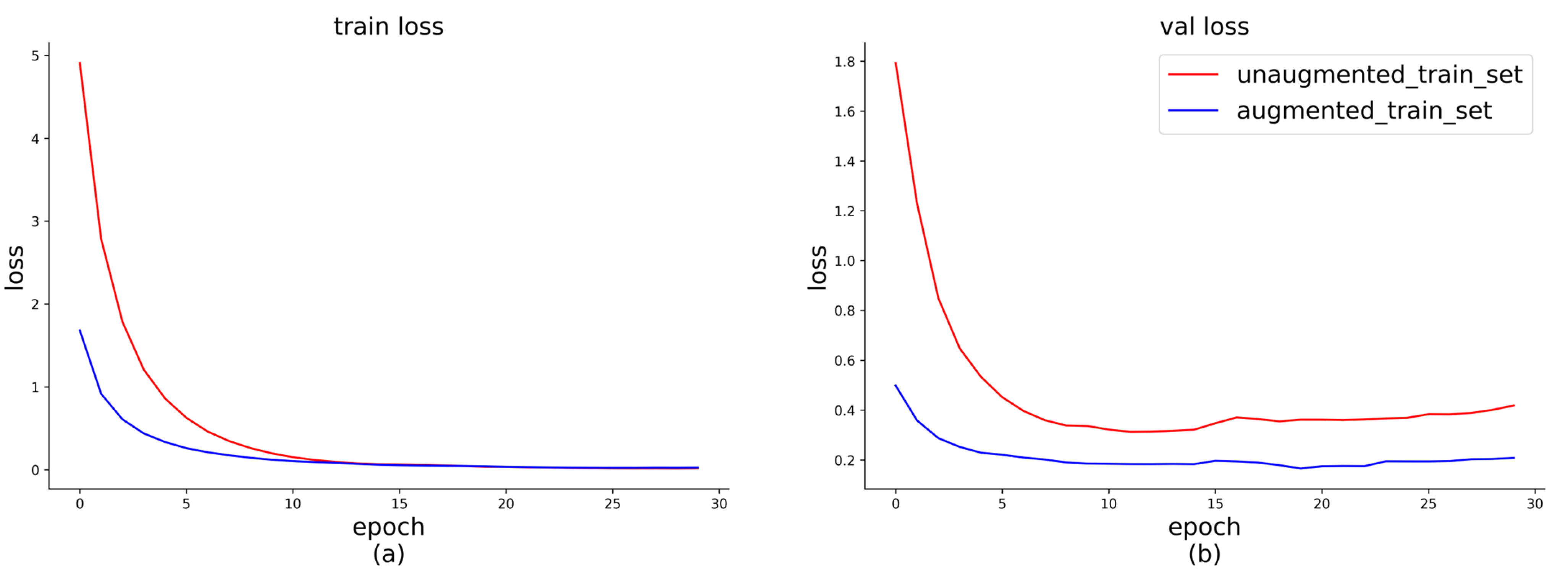

5.1. Data Augmentation

5.2. Question Intention Classification Experiment

5.2.1. Comparison Models

- DPCNN [58]: A deep pyramid convolutional neural network for text classification that captures long-distance dependencies in text;

- TextRCNN [59]: A model that uses a bidirectional recurrent structure to capture contextual information and then applies max-pooling to extract the most important features for text classification;

- BERT: A model that uses BERT to encode the semantics of a sentence into a sentence embedding for text classification;

- BERT-BiLSTM-MultiHeadAttention: A model that uses BERT to obtain sentence embeddings, a BiLSTM to extract contextual information, and multi-head attention (MHA) to attend to multiple aspects of the sentence, classifying the text based on the combined information;

- RoBERTa: A model that uses a pretrained RoBERTa model to obtain semantic sentence embeddings for text classification;

- RoBERTa-BiLSTM: A model that combines RoBERTa's semantic encoding with the contextual information extracted by a BiLSTM for text classification;

- RoBERTa-MultiHeadAttention: A model that applies MHA to RoBERTa's semantic embeddings to attend to multiple aspects of the sentence, classifying the text based on the combined information.

5.2.2. Dataset Configuration

- : 1427 genuine questions collected and labeled from the question corpus;

- : 6222 questions, randomly selected as 90% of the 7055 questions obtained through data augmentation;

- : the remaining 10% of the 7055 augmented questions, a total of 833 questions;

- : 100 genuine questions randomly selected from the , which were manually rewritten to create an additional 100 questions as the test set.
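The split of the 7055 augmented questions into the second and third sets above can be sketched as a seeded random shuffle. The two parts are assumed here to be the training and validation sets. The function name and seed are hypothetical, and the counts are the ones listed above.

```python
import random


def split_augmented(questions, n_train, seed=0):
    """Shuffle a copy of the questions with a fixed seed and split it into
    the first n_train items (training) and the remainder (validation)."""
    shuffled = list(questions)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


augmented = [f"question {i}" for i in range(7055)]  # placeholder questions
train, val = split_augmented(augmented, n_train=6222)
print(len(train), len(val))  # prints: 6222 833
```

Using a dedicated `random.Random(seed)` instance keeps the split reproducible without touching the global random state.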

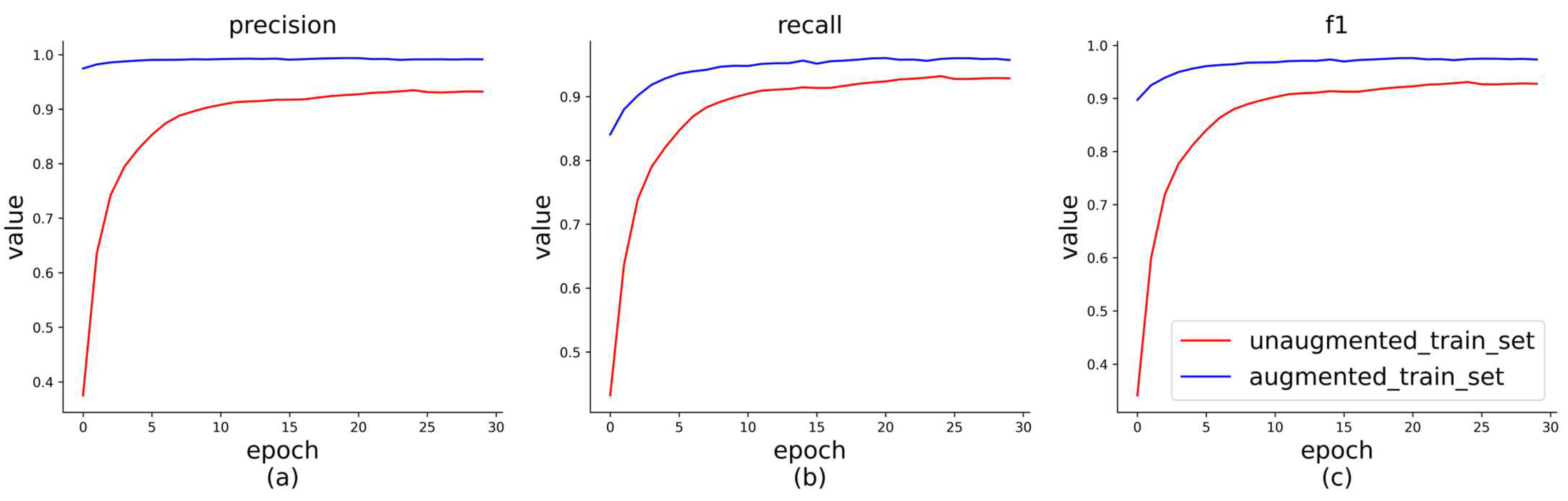

5.2.3. Experimental Results and Analysis

- (1) Model comparison experiment

- (2) Impact of text data augmentation on the model

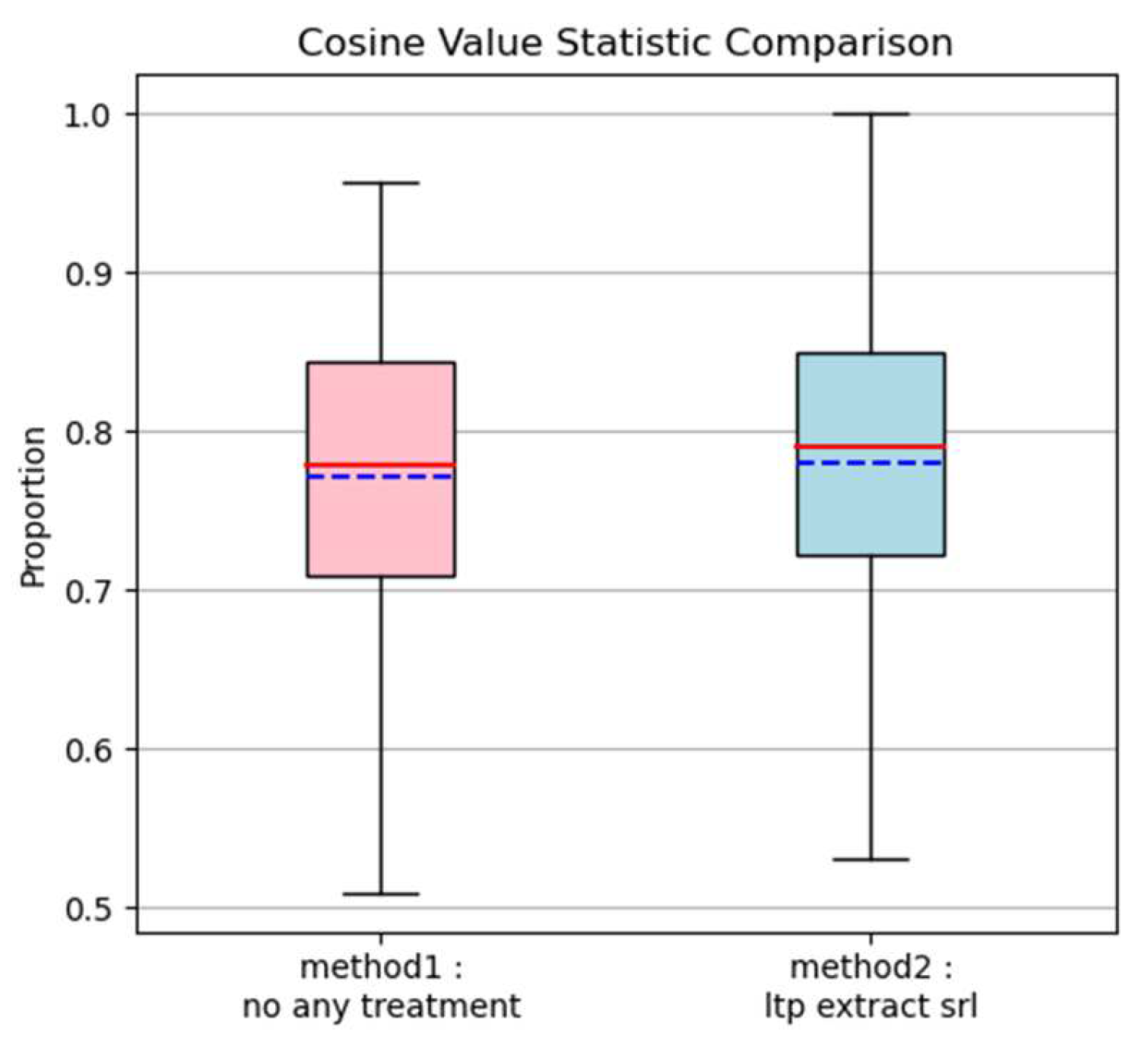

5.3. Event Entity Matching Experiment

5.3.1. Experimental Method Comparison

- (1) Text similarity is computed directly between the raw, unprocessed question text and the corresponding event entity, and the accuracy and similarity values are recorded.

- (2) LTP is used to perform syntactic analysis of the question and extract semantic role phrases. The phrases are reassembled into a sentence, text similarity is then computed against the corresponding event entities, and the accuracy and similarity values are recorded.

5.3.2. Experimental Results and Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Wu, W.; Deng, Y.; Liang, Y.; Lei, K. Answer Category-Aware Answer Selection for Question Answering. IEEE Access 2020, 9, 126357–126365.

- Jurafsky, D.; Martin, J.H. Speech and Language Processing; Pearson: London, UK, 2014; Volume 3.

- OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Zeng, A.; Liu, X.; Du, Z.; Wang, Z.; Lai, H.; Ding, M.; Yang, Z.; Xu, Y.; Zheng, W.; Xia, X.; et al. GLM-130B: An open bilingual pre-trained model. arXiv 2022, arXiv:2210.02414.

- Chapman, D. Geographies of self and other: Mapping Japan through the koseki. Asia Pac. J. 2011, 9, 1–10.

- Cullen, M.J. The Making of the Civil Registration Act of 1836. J. Ecclesiastical Hist. 1974, 25, 39–59.

- Che, W.; Feng, Y.; Qin, L.; Liu, T. N-LTP: An Open-source Neural Language Technology Platform for Chinese. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP 2021): System Demonstrations, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 42–49.

- Bordes, A.; Usunier, N.; Chopra, S.; Weston, J. Large-scale simple question answering with memory networks. arXiv 2015, arXiv:1506.02075.

- Bao, J.; Duan, N.; Yan, Z.; Zhou, M.; Zhao, T. Constraint-based question answering with knowledge graph. In Proceedings of the COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, Osaka, Japan, 11–16 December 2016; pp. 2503–2514.

- Unger, C.; Bühmann, L.; Lehmann, J.; Ngomo, A.-C.N.; Gerber, D.; Cimiano, P. Template-Based Question Answering over RDF Data. In Proceedings of the 21st International Conference on World Wide Web, WWW’12, Lyon, France, 16–20 April 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 639–648.

- Zheng, W.; Zou, L.; Lian, X.; Yu, J.X.; Song, S.; Zhao, D. How to Build Templates for RDF Question/Answering: An Uncertain Graph Similarity Join Approach. In Proceedings of the 2015 ACM SIGMOD International Conference on Management of Data, SIGMOD’15, Melbourne, Australia, 31 May–4 June 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 1809–1824.

- Cui, W.; Xiao, Y.; Wang, H.; Song, Y.; Hwang, S.; Wang, W. KBQA: Learning question answering over QA corpora and knowledge bases. arXiv 2019, arXiv:1903.02419.

- Bast, H.; Haussmann, E. More accurate question answering on Freebase. In Proceedings of the 24th ACM International on Conference on Information and Knowledge Management, Melbourne, Australia, 18–23 October 2015; pp. 1431–1440.

- Abujabal, A.; Yahya, M.; Riedewald, M.; Weikum, G. Automated template generation for question answering over knowledge graphs. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 April 2017; pp. 1191–1200.

- Liu, Z.; Li, J.; Shen, Z.; Huang, G.; Yan, S.; Zhang, C. Learning efficient convolutional networks through network slimming. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2736–2744.

- Chen, Y.; Li, H.; Qi, G.; Wu, T.; Wang, T. Outlining and Filling: Hierarchical Query Graph Generation for Answering Complex Questions Over Knowledge Graphs. IEEE Trans. Knowl. Data Eng. 2022, 35, 8343–8357.

- Xu, K.; Wu, L.; Wang, Z.; Yu, M.; Chen, L.; Sheinin, V. Exploiting rich syntactic information for semantic parsing with graph-to-sequence model. arXiv 2018, arXiv:1808.07624.

- Green, B.; Wolf, A.; Chomsky, C.; Laughery, K. BASEBALL: An Automatic Question Answerer. In Readings in Natural Language Processing; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1986; pp. 545–549.

- Woods, W.A. Lunar rocks in natural English: Explorations in natural language question answering. In Linguistic Structures Processing; Zampolli, A., Ed.; Fundamental Studies in Computer Science, Volume 5; North-Holland: Amsterdam, The Netherlands, 1977; pp. 521–569.

- Vrandečić, D.; Krötzsch, M. Wikidata: A free collaborative knowledgebase. Commun. ACM 2014, 57, 78–85.

- Lehmann, J.; Isele, R.; Jakob, M.; Jentzsch, A.; Kontokostas, D.; Mendes, P.N.; Hellmann, S.; Morsey, M.; van Kleef, P.; Auer, S.; et al. DBpedia–A large-scale, multilingual knowledge base extracted from Wikipedia. Semantic Web 2015, 6, 167–195.

- Bollacker, K.; Evans, C.; Paritosh, P.; Sturge, T.; Taylor, J. Freebase: A collaboratively created graph database for structuring human knowledge. In Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data, Vancouver, BC, Canada, 9–12 June 2008; pp. 1247–1250.

- Lukovnikov, D.; Fischer, A.; Lehmann, J.; Auer, S. Neural network-based question answering over knowledge graphs on word and character level. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 April 2017; pp. 1211–1220.

- Deng, Y.; Zhang, W.; Xu, W.; Shen, Y.; Lam, W. Nonfactoid Question Answering as Query-Focused Summarization with Graph-Enhanced Multihop Inference. IEEE Trans. Neural Netw. Learn. Syst. 2023, early access.

- Shen, Y.; Ding, N.; Zheng, H.-T.; Li, Y.; Yang, M. Modeling relation paths for knowledge graph completion. IEEE Trans. Knowl. Data Eng. 2020, 33, 3607–3617.

- Wang, Q.; Mao, Z.; Wang, B.; Guo, L. Knowledge graph embedding: A survey of approaches and applications. IEEE Trans. Knowl. Data Eng. 2017, 29, 2724–2743.

- Abu-Salih, B. Domain-specific knowledge graphs: A survey. J. Netw. Comput. Appl. 2021, 185, 103076.

- Ji, S.; Pan, S.; Cambria, E.; Marttinen, P.; Yu, P.S. A survey on knowledge graphs: Representation, acquisition, and applications. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 494–514.

- Jiang, Z.; Chi, C.; Zhan, Y. Research on Medical Question Answering System Based on Knowledge Graph. IEEE Access 2021, 9, 21094–21101.

- Du, Z.Y.; Yang, Y.; He, L. Question answering system of electric business field based on Chinese knowledge map. Comput. Appl. Softw. 2017, 34, 153–159.

- Aghaei, S.; Raad, E.; Fensel, A. Question answering over knowledge graphs: A case study in tourism. IEEE Access 2022, 10, 69788–69801.

- Liu, Q.; Li, Y.; Duan, H.; Liu, Y.; Qin, Z. Knowledge graph construction techniques. J. Comput. Res. Dev. 2016, 53, 582–600.

- Wuhan City Household Registration Business Processing Guidelines. Available online: http://www.wuhan.gov.cn/gfxwj/sbmgfxwj/sgaj_79493/202301/t20230104_2124417.shtml (accessed on 9 June 2023).

- Speer, R.; Chin, J.; Havasi, C. ConceptNet 5.5: An open multilingual graph of general knowledge. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Hubei Government Service Network. Available online: http://zwfw.hubei.gov.cn/ (accessed on 9 June 2023).

- Zhou, W.; Liu, J.; Lei, J.; Yu, L.; Hwang, J.-N. GMNet: Graded-feature multilabel-learning network for RGB-thermal urban scene semantic segmentation. IEEE Trans. Image Process. 2021, 30, 7790–7802.

- Yang, S.; Li, Q.; Li, W.; Li, X.; Liu, A.-A. Dual-level representation enhancement on characteristic and context for image-text retrieval. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 8037–8050.

- Li, L.; Wang, P.; Zheng, X.; Xie, Q.; Tao, X.; Velásquez, J.D. Dual-interactive fusion for code-mixed deep representation learning in tag recommendation. Inf. Fusion 2023, 99, 101862.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Lu, S.; Liu, M.; Yin, L.; Yin, Z.; Liu, X.; Zheng, W. The multi-modal fusion in visual question answering: A review of attention mechanisms. PeerJ Comput. Sci. 2023, 9, e1400.

- Lu, S.; Ding, Y.; Yin, Z.; Liu, M.; Liu, X.; Zheng, W.; Yin, L. Improved Blending Attention Mechanism in Visual Question Answering. Comput. Syst. Sci. Eng. 2023, 47, 1149–1161.

- Chen, J.; Wang, Q.; Cheng, H.H.; Peng, W.; Xu, W. A Review of Vision-Based Traffic Semantic Understanding in ITSs. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19954–19979.

- Appendix—LTP4 4.1.4 Documents. Available online: https://ltp.readthedocs.io/zh_CN/latest/appendix.html (accessed on 20 June 2023).

- Xiong, Z.; Zeng, M.; Zhang, X.; Zhu, S.; Xu, F.; Zhao, X.; Wu, Y.; Li, X. Social Similarity Routing Algorithm based on Socially Aware Networks in the Big Data Environment. J. Signal Process. Syst. 2022, 94, 1253–1267.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence embeddings using siamese BERT-networks. arXiv 2019, arXiv:1908.10084.

- CoSENT: A More Effective Sentence Vector Scheme than Sentence-BERT-Scientific Spaces. Available online: https://spaces.ac.cn/archives/8847 (accessed on 10 June 2023).

- Liu, X.; Shi, T.; Zhou, G.; Liu, M.; Yin, Z.; Yin, L.; Zheng, W. Emotion classification for short texts: An improved multi-label method. Humanit. Soc. Sci. Commun. 2023, 10, 1–9.

- Cheng, L.; Yin, F.; Theodoridis, S.; Chatzis, S.; Chang, T.-H. Rethinking Bayesian learning for data analysis: The art of prior and inference in sparsity-aware modeling. IEEE Signal Process. Mag. 2022, 39, 18–52.

- Zhang, Y.; Shao, Z.; Zhang, J.; Wu, B.; Zhou, L. The effect of image enhancement on influencer’s product recommendation effectiveness: The roles of perceived influencer authenticity and post type. J. Res. Interact. Mark. 2023.

- Wei, J.; Zou, K. EDA: Easy data augmentation techniques for boosting performance on text classification tasks. arXiv 2019, arXiv:1901.11196.

- Anaby-Tavor, A.; Carmeli, B.; Goldbraich, E.; Kantor, A.; Kour, G.; Shlomov, S.; Tepper, N.; Zwerdling, N. Do not have enough data? Deep learning to the rescue! In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 7383–7390.

- Petroni, F.; Rocktäschel, T.; Riedel, S.; Lewis, P.; Bakhtin, A.; Wu, Y.; Miller, A. Language models as knowledge bases? arXiv 2019, arXiv:1909.01066.

- Li, X.L.; Liang, P. Prefix-tuning: Optimizing continuous prompts for generation. arXiv 2021, arXiv:2101.00190.

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing. ACM Comput. Surv. 2023, 55, 195:1–195:35.

- Nigh, M. ChatGPT3 Prompt Engineering. 24 June 2023. Available online: https://github.com/mattnigh/ChatGPT3-Free-Prompt-List (accessed on 25 June 2023).

- Johnson, R.; Zhang, T. Deep pyramid convolutional neural networks for text categorization. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 562–570.

- Lai, S.; Xu, L.; Liu, K.; Zhao, J. Recurrent convolutional neural networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015.

| Data Sources | Entity Count | Entity Category Count | Relationship Count | Relationship Category Count |
|---|---|---|---|---|
| Guidelines | 622 | 8 | 1512 | 9 |
| Question corpus | 1770 | 12 | 2564 | 13 |
| Total | 2392 | 20 | 4067 | 22 |

| Category | Definition |
|---|---|
| Class 1 | Application method and processing time limit |
| Class 2 | Processing location |
| Class 3 | Application conditions |
| Class 4 | Required materials |
| Class 5 | Processing procedures |
| Class 6 | Intention unclear |
| Class 7 | Not related to household registration |

| Label | Definition | Label | Definition |
|---|---|---|---|
| A0 | causers or experiencers | EXT | extent |
| A1 | patient | FRQ | frequency |
| A2 | semantic role 2 | LOC | locative |
| A3 | semantic role 3 | MNR | manner |
| ADV | adverbial | PRP | purpose or reason |
| BNF | beneficiary | QTY | quantity |
| CND | condition | TMP | temporal |
| CRD | coordinated arguments | TPC | topic |
| DGR | degree | PRD | predicate |
| DIR | direction | PSR | possessor |
| DIS | discourse marker | PSE | possessee |

| Step | Interpretation | Prompt |
|---|---|---|
| Capacity and Role | What role (or roles) should ChatGPT act as? | As a user who is going to handle household registration business |
| Insight | Provide the behind-the-scenes insight, background, and context for your request. | You have questions about the handling information of some household registration matters |
| Statement | What you are asking ChatGPT to do. | Please rewrite the given question, provide a similar question |
| Personality | The style, personality, or manner in which you want ChatGPT to respond. | Ensure that the original meaning of the sentence is preserved. The rewriting method includes synonym substitution and random insertion of irrelevant words |
| Experiment | Ask ChatGPT to provide multiple examples. | The sentences that need to be rewritten are: |
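The five components can be assembled into one request message, as sketched below. The prompt text is copied verbatim from the table. Two formatting choices are assumptions: the parts are joined with newlines, and the question is appended after the "Experiment" line. The call to GPT-3.5-turbo itself is omitted, and the names `PROMPT_PARTS` and `build_prompt` are hypothetical.

```python
# The five components from the prompt table, in order (prompt text verbatim).
PROMPT_PARTS = [
    ("Capacity and Role", "As a user who is going to handle household registration business"),
    ("Insight", "You have questions about the handling information of some household registration matters"),
    ("Statement", "Please rewrite the given question, provide a similar question"),
    ("Personality", "Ensure that the original meaning of the sentence is preserved. "
                    "The rewriting method includes synonym substitution and random insertion of irrelevant words"),
    ("Experiment", "The sentences that need to be rewritten are:"),
]


def build_prompt(question: str) -> str:
    """Join the five components, one per line, and append the question to rewrite."""
    return "\n".join(text for _, text in PROMPT_PARTS) + "\n" + question


print(build_prompt("How do I apply for a household transfer?"))
```

Keeping the components as an ordered list of (step, text) pairs makes it easy to vary one step, such as the rewriting methods in "Personality", without touching the others.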

| Parameter | Value |
|---|---|
| Embedding dimension | 768 |
| BiLSTM layers | 2 |
| Batch size | 64 |
| Epochs | 30 |
| Learning rate | 5 × 10⁻⁴ |
| Dropout | 0.2 |

| Model | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|
| DPCNN | 0.9456 | 0.761 | 0.8236 | 0.73 |
| TextRCNN | 0.951 | 0.8218 | 0.8817 | 0.78 |
| BERT | 0.978 | 0.8762 | 0.9215 | 0.85 |
| BERT-BiLSTM-MultiHeadAttention | 0.9846 | 0.9374 | 0.9591 | 0.9 |
| RoBERTa | 0.9805 | 0.9004 | 0.9371 | 0.87 |
| RoBERTa-BiLSTM | 0.9836 | 0.9459 | 0.9628 | 0.91 |
| RoBERTa-MultiHeadAttention | 0.9856 | 0.9374 | 0.9595 | 0.89 |
| RoBERTa-BiLSTM-MultiHeadAttention (trained on unaugmented data) | 0.8999 | 0.8965 | 0.8969 | 0.87 |
| RoBERTa-BiLSTM-MultiHeadAttention (trained on augmented data) | 0.9949 | 0.9615 | 0.9774 | 0.93 |
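The table does not specify how precision, recall and F1 were averaged across the seven intent classes. A common choice is macro-averaging, sketched below in plain Python with hypothetical function names and toy labels. A macro-averaged F1 is the mean of the per-class F1 scores. This generally differs from the harmonic mean of macro precision and macro recall.

```python
def per_class_prf(y_true, y_pred, labels):
    """Precision, recall and F1 for each class label (one-vs-rest counts)."""
    scores = {}
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[c] = (precision, recall, f1)
    return scores


def macro_average(scores):
    """Unweighted mean of precision, recall and F1 over classes."""
    n = len(scores)
    return tuple(sum(s[i] for s in scores.values()) / n for i in range(3))


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy intent labels, not data from the experiments.
y_true = [1, 1, 2, 2, 7]
y_pred = [1, 2, 2, 2, 7]
scores = per_class_prf(y_true, y_pred, labels=[1, 2, 7])
print(macro_average(scores), accuracy(y_true, y_pred))
```

Macro-averaging weights every intent class equally regardless of its size. This matters here because the augmentation was introduced to address class imbalance.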

| Class | Training Data | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Class 1 | unaugmented | 0.9516 | 0.9043 | 0.9271 |
| | augmented | 0.9974 | 0.9893 | 0.9813 |
| Class 2 | unaugmented | 0.7953 | 0.8761 | 0.8327 |
| | augmented | 0.9902 | 0.9576 | 0.9271 |
| Class 3 | unaugmented | 0.8735 | 0.9858 | 0.9244 |
| | augmented | 0.9911 | 0.9904 | 0.9897 |
| Class 4 | unaugmented | 0.9439 | 0.9293 | 0.9354 |
| | augmented | 0.9986 | 0.9973 | 0.9961 |
| Class 5 | unaugmented | 0.954 | 0.9537 | 0.9537 |
| | augmented | 0.9981 | 0.9952 | 0.9924 |
| Class 6 | unaugmented | 0.8738 | 0.8953 | 0.8843 |
| | augmented | 0.9921 | 0.9837 | 0.9754 |
| Class 7 | unaugmented | 0.9089 | 0.8372 | 0.8709 |
| | augmented | 0.9965 | 0.989 | 0.9816 |
| Average | unaugmented | 0.8998 | 0.8965 | 0.8969 |
| | augmented | 0.9949 | 0.9615 | 0.9774 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, S.; Zhang, H.; Zhang, W. Domain Knowledge Graph Question Answering Based on Semantic Analysis and Data Augmentation. Appl. Sci. 2023, 13, 8838. https://doi.org/10.3390/app13158838


Chicago/Turabian Style: Hu, Shulin, Huajun Zhang, and Wanying Zhang. 2023. "Domain Knowledge Graph Question Answering Based on Semantic Analysis and Data Augmentation" Applied Sciences 13, no. 15: 8838. https://doi.org/10.3390/app13158838
