Research on a Coal Seam Gas Content Prediction Method Based on an Improved Extreme Learning Machine

Abstract

1. Introduction

2. Development of Prediction Models for Coal Seam Gas Content

2.1. Theoretical Analysis and Performance Evaluation of the GASA Optimization Algorithm

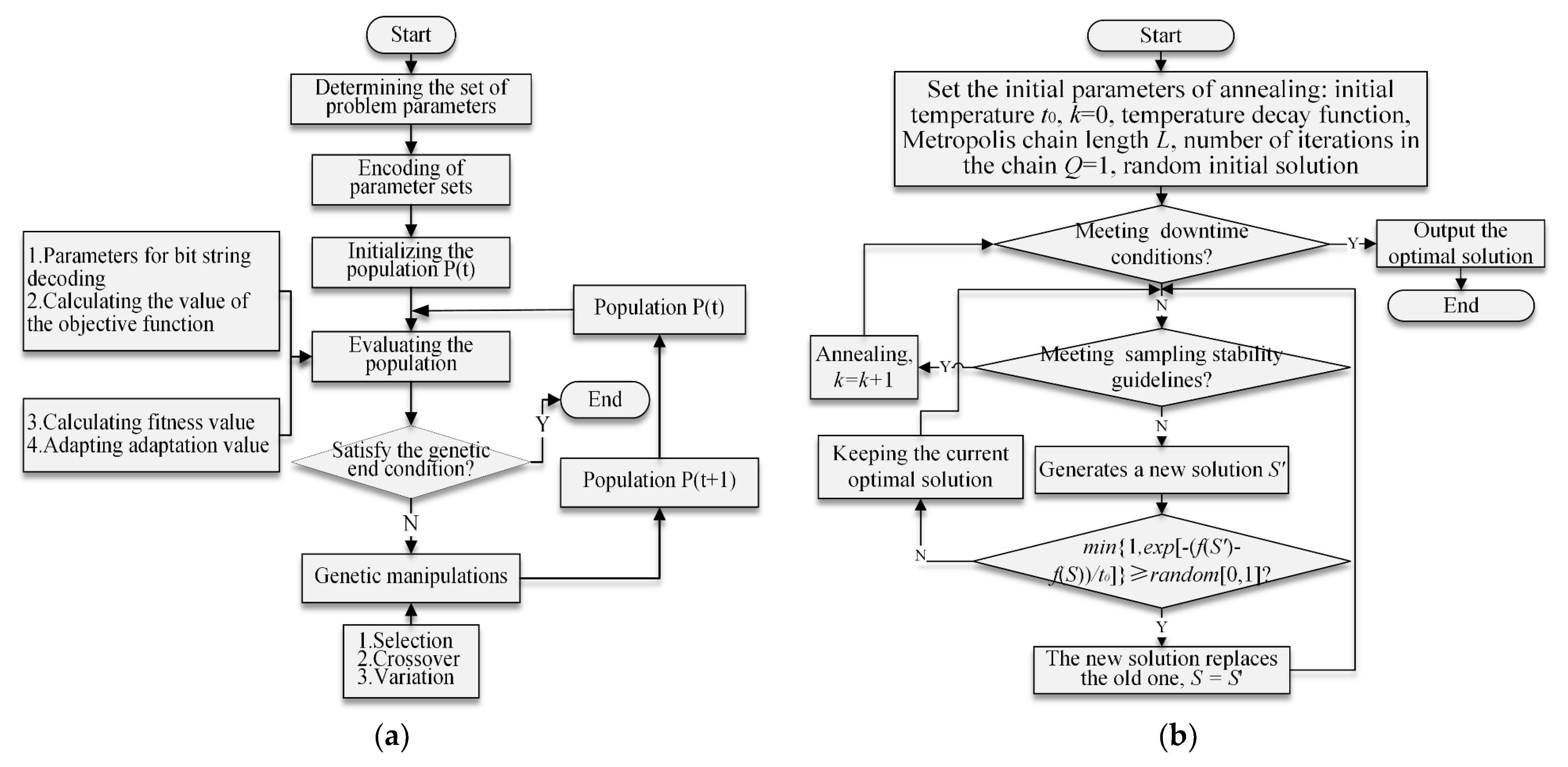

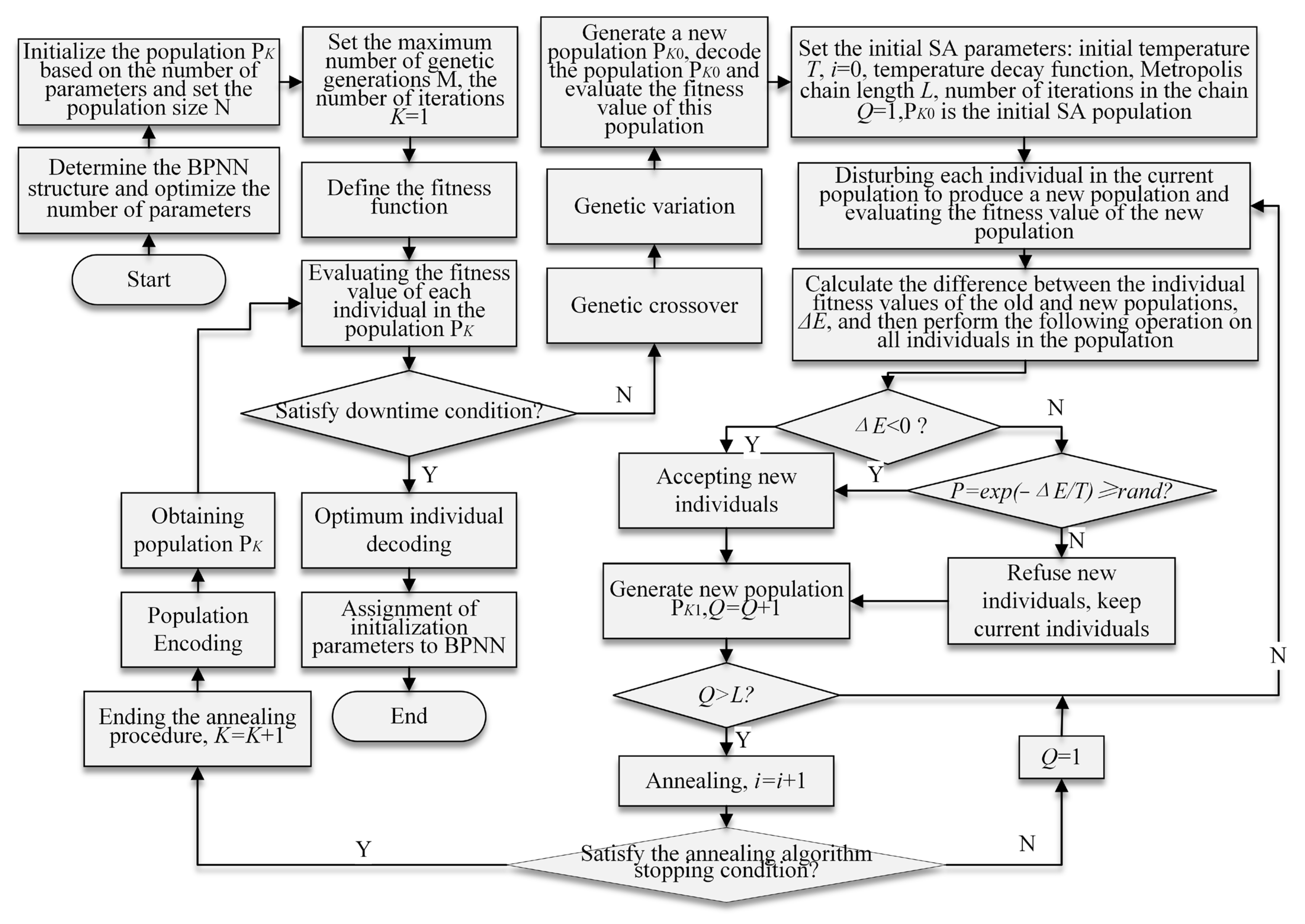

2.1.1. Theoretical Analysis of the GASA Optimization Algorithm

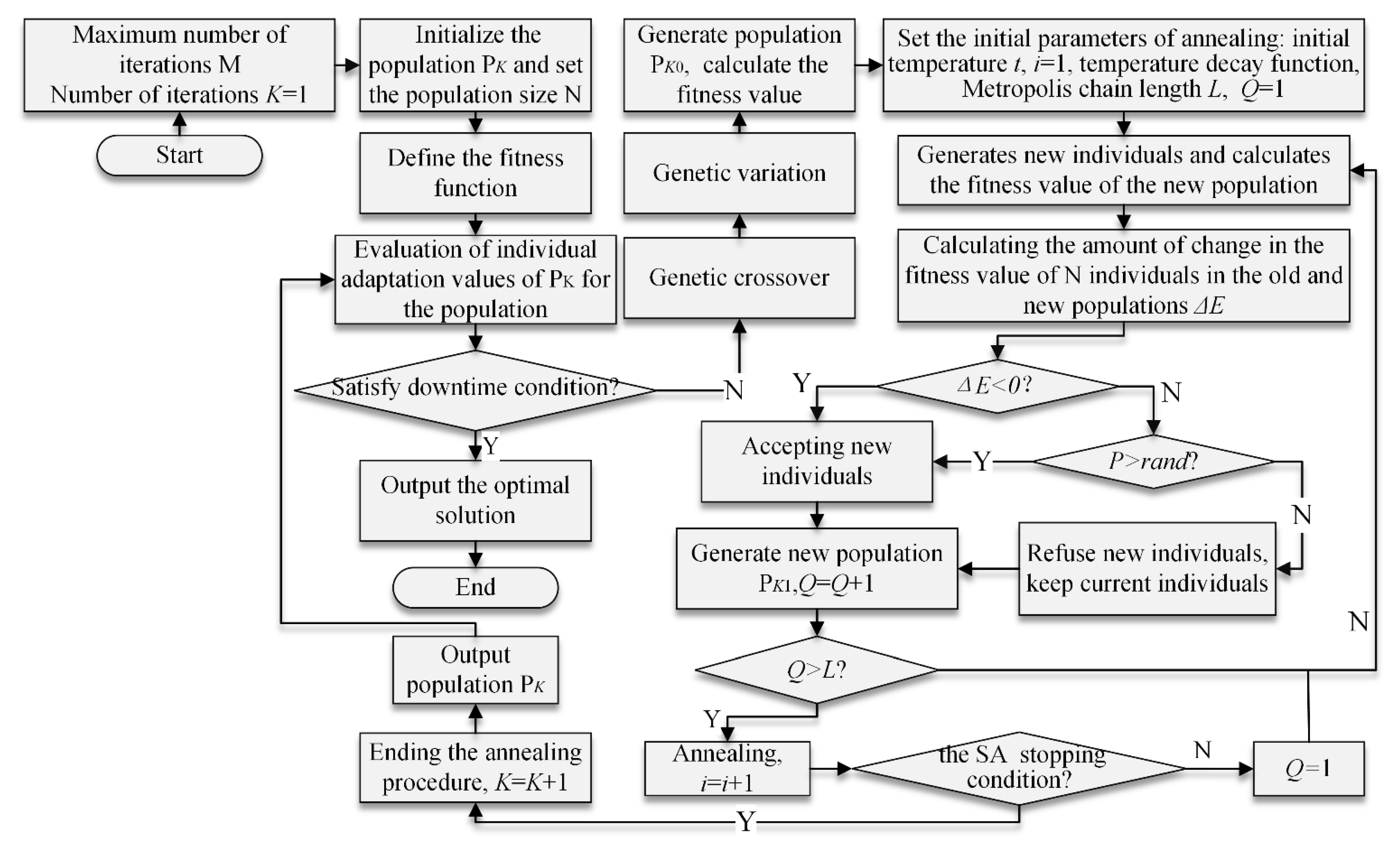

- (1) GA initialization: Set the population size N and initialize the population PK. Set the maximum number of generations M and set the genetic iteration counter K to 1.

- (2) Define the fitness function.

- (3) Evaluate the fitness of PK and check whether the GA stopping criterion has been met. If it has, output the best solution. If not, proceed with steps (4) to (9).

- (4) Apply genetic crossover and mutation to PK to generate a new population, PK0, and evaluate the fitness of PK0.

- (5) SA initialization: Take the population PK0 as the initial solution of SA, set i = 0, set the initial temperature T = Ti (sufficiently high), determine the length L of the Metropolis chain at each temperature T, and set the in-chain iteration counter Q = 1.

- (6) At the current temperature T, repeat steps (7) to (9) for Q = 1, 2, 3, …, L.

- (7) Randomly perturb every member of the current population to generate a new population, and evaluate the fitness of the new population.

- (8) For each member, compute the difference in fitness between the new population and the current population, and accept or reject the new solution according to the Metropolis criterion.

- (9) Increment Q by 1 and check whether Q > L. If so, increment i by 1 and lower the temperature with the annealing function so that T = Ti+1, where Ti+1 < Ti. Then check whether the annealing stopping criterion has been met. If it has, increment K by 1, output the optimized population PK, and return to step (3). If it has not, set Q to 1 and return to step (7). If Q > L does not hold, return to step (7). The annealing stopping criterion is typically a stopping temperature.

- (10) In summary, once the genetic operations on PK have produced PK0, PK0 serves as the initial solution for the SA algorithm, which produces PK+1. If PK+1 does not meet the GA stopping criterion, it becomes the starting population for the next GA iteration.
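
The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it minimizes the objective directly instead of maximizing a fitness value, and the function name `gasa_minimize`, the Gaussian perturbation with width `step`, the geometric cooling factor, and all default parameter values are hypothetical choices made so that the example runs quickly. Rastrigin's function is used because the paper tests GASA on it.

```python
import math
import random


def rastrigin(x):
    """Rastrigin's function; its global minimum is 0 at the origin."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def gasa_minimize(f, dim, bounds=(-5.12, 5.12), pop_size=20, generations=30,
                  p_cross=0.7, p_mut=0.05, t0=100.0, cooling=0.9, t_stop=1.0,
                  chain_len=5, step=0.5, seed=0):
    """Hybrid GA + SA minimizer (assumed defaults; pop_size must be even)."""
    rng = random.Random(seed)
    lo, hi = bounds

    def clip(v):
        return min(hi, max(lo, v))

    # Step (1): random initial population P_K.
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    best = min(pop, key=f)
    best_f = f(best)

    for _ in range(generations):  # step (3): stop at the maximum generation
        # Step (4): arithmetic crossover and uniform mutation give P_K0.
        rng.shuffle(pop)
        offspring = []
        for a, b in zip(pop[0::2], pop[1::2]):
            if rng.random() < p_cross:
                w = rng.random()
                a, b = ([w * p + (1 - w) * q for p, q in zip(a, b)],
                        [w * q + (1 - w) * p for p, q in zip(a, b)])
            offspring += [a, b]
        offspring = [[rng.uniform(lo, hi) if rng.random() < p_mut else g
                      for g in ind] for ind in offspring]

        # Steps (5)-(9): anneal every individual of P_K0.
        annealed = []
        for cur in offspring:
            cur_f = f(cur)
            if cur_f < best_f:
                best, best_f = cur, cur_f
            temp = t0
            while temp > t_stop:  # stopping temperature
                for _ in range(chain_len):  # Metropolis chain of length L
                    cand = [clip(g + rng.gauss(0.0, step)) for g in cur]
                    cand_f = f(cand)
                    delta = cand_f - cur_f
                    # Metropolis criterion: always accept improvements,
                    # accept worse moves with probability exp(-delta / T).
                    if delta < 0 or rng.random() < math.exp(-delta / temp):
                        cur, cur_f = cand, cand_f
                        if cur_f < best_f:
                            best, best_f = cur, cur_f
                temp *= cooling  # geometric cooling, so T_{i+1} < T_i
            annealed.append(cur)

        # Step (10): the annealed population becomes P_{K+1} for the GA.
        pop = annealed

    return best, best_f


best_x, best_value = gasa_minimize(rastrigin, dim=2)
print(best_x, best_value)
```

The sketch keeps the best point seen so far, which the step list does not mention explicitly; it is a common safeguard because the Metropolis rule can accept worse solutions at high temperature.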

2.1.2. Performance Testing of the GASA Algorithm

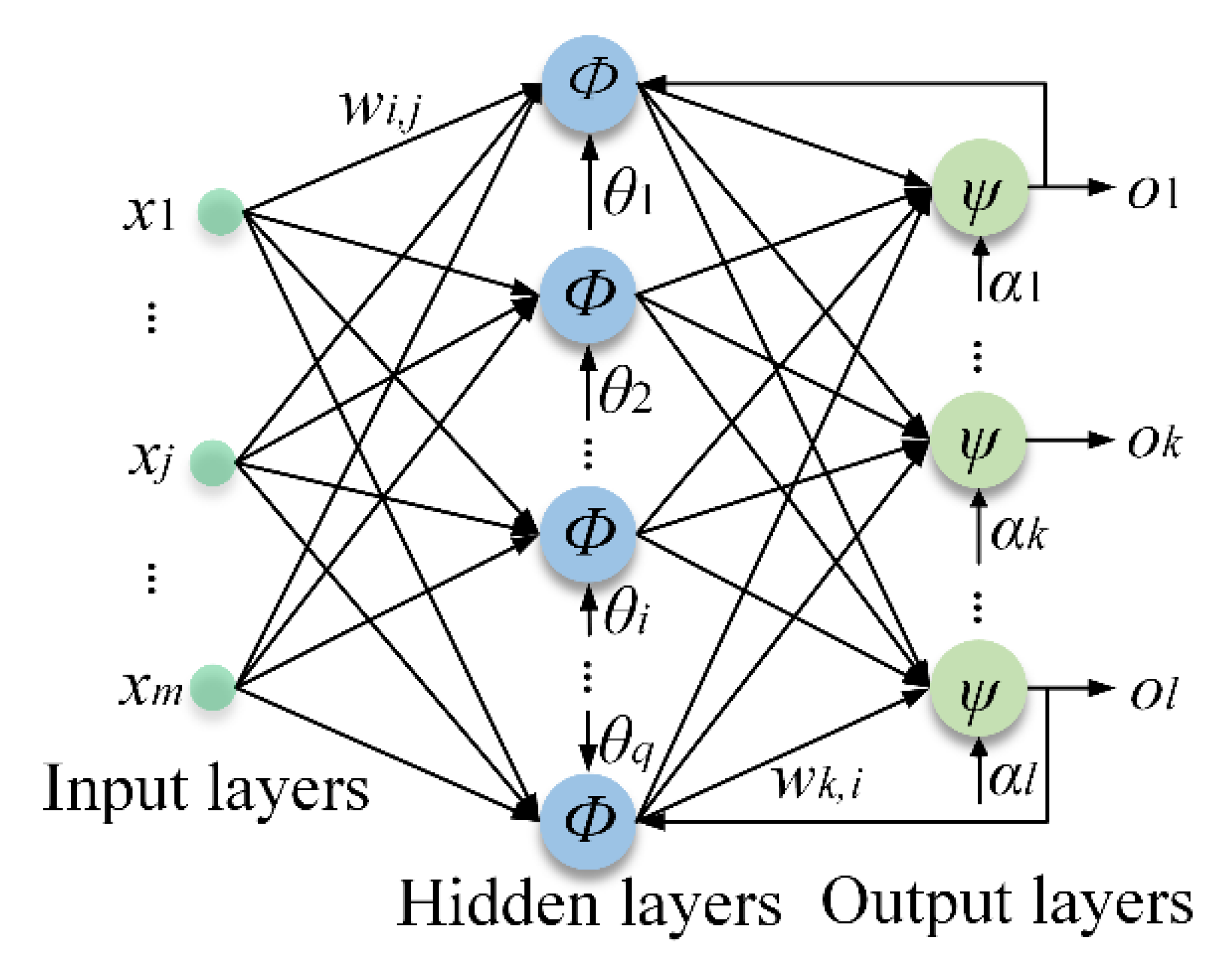

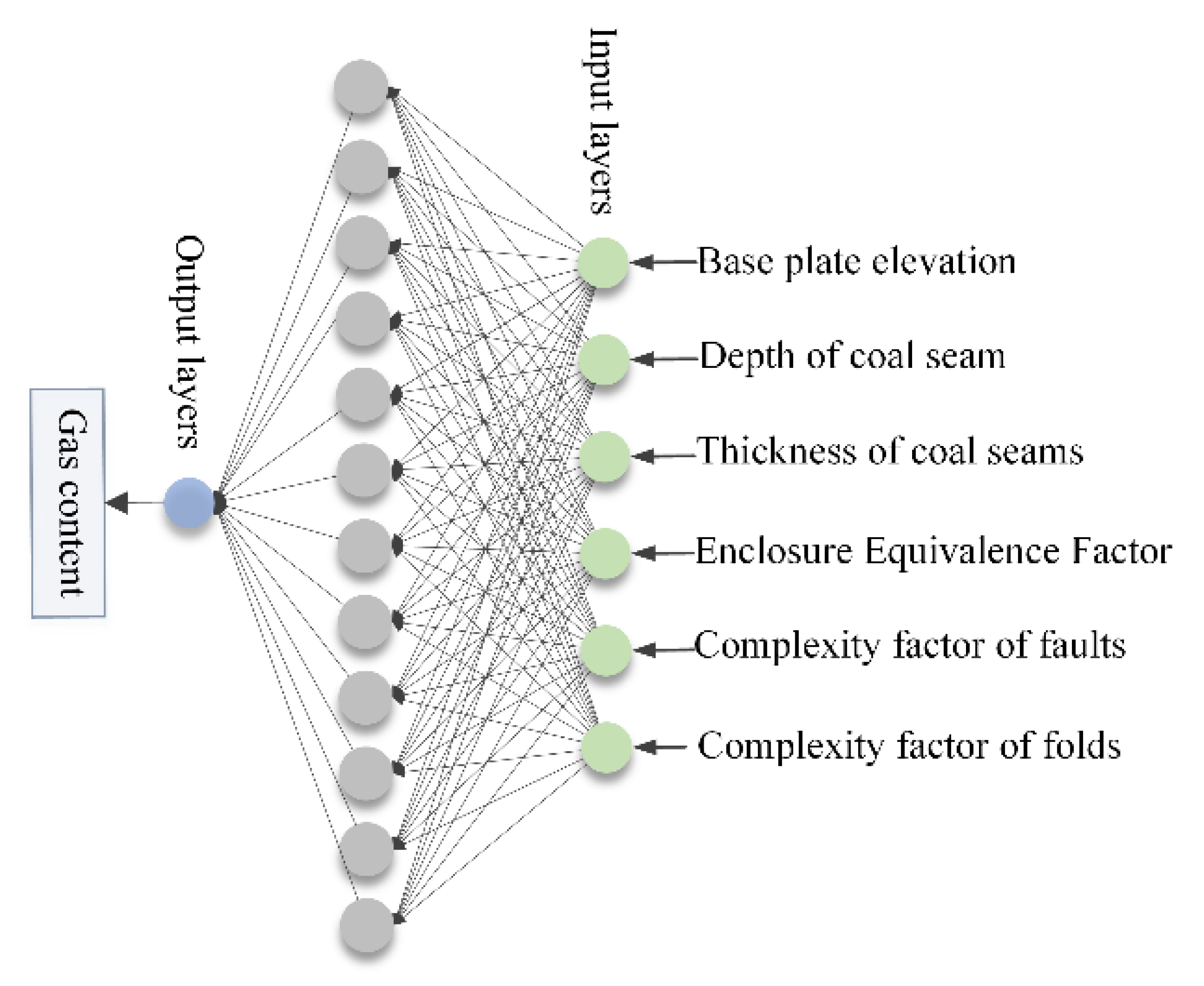

2.2. Development of a Gas Content Prediction Model Based on the GASA-BPNN Algorithm

- (1) To apply the prediction model, the data are first normalized to the range [0, 1]. The dataset is then divided by stratified sampling into 10 mutually exclusive subsets. In each round, one subset is used as the test set and the remaining subsets as the training set, giving 10 rounds of training and testing. Figure 8 illustrates the schematic diagram of the sample division process.

- (2) GA population initialization: The 97 parameters are encoded using floating-point encoding. Each individual in the population represents one set of weights and thresholds. The initial population, PK, consists of 50 randomly generated individuals.

- (3) GA parameter settings: The maximum number of genetic iterations is set to 800, K is initialized to 1, and the GA stops when the maximum number of iterations is reached. The crossover probability is set to 0.7, and the mutation probability is set to 0.005.

- (4) The fitness function is defined from the MSE of the prediction, such that a smaller MSE yields a higher fitness value for the individual.

- (5) The fitness of the initial population PK is evaluated, and the individuals are ranked by their fitness values.

- (6) Stopping criterion for GASA: Check whether the maximum number of genetic iterations has been reached. If so, terminate the optimization and proceed to steps (7)–(11). Otherwise, increment K by 1 and proceed to step (12).

- (7) BPNN initialization: The solution obtained from step (6) is assigned to the BPNN. The maximum number of training iterations is set to H = 800, and the iteration counter is initialized to S = 0.

- (8) The input data are propagated forward through the layers of the BPNN. The input to the fth neuron in the hidden layer is computed using Equation (3), and the input to the jth output node is computed using Equation (4).

- (9) The BPNN weights and thresholds are updated by backpropagating the MSE to each node, and the parameters are adjusted using the error-adaptive learning rate gradient descent method described in Equation (5). If the error approaches the target value with little fluctuation, the training direction is correct and the learning rate can be increased. If the error increases beyond the allowed range, the training direction is incorrect and the learning rate should be reduced.

- (10) BPNN stopping criterion: Check whether the termination criterion, defined as reaching the minimum error, has been met. If it has, the network completes its learning phase, the model is established, and the procedure advances to step (11). Otherwise, check whether H equals S. If it does, return to step (7). If it does not, increase S by one and return to step (8).

- (11) Model evaluation: The model is tested on the test set. The evaluation metrics are the MSE, the number of iterations, and the relative prediction error.

- (12) GA selection: The roulette selection method screens individuals according to their fitness values. For an individual xu with fitness value fu, the probability of xu being selected is $p_u = f_u / \sum_{v} f_v$, where the sum runs over all individuals in the population.

- (13) GA crossover: Two individuals are randomly selected from the population PK, and two new individuals are generated through the arithmetic crossover operation given in Equation (6).

- (14) GA mutation: An individual genotype X = x1x2…xb…xs is randomly selected, and the mutation is performed at the mutation point xb using Equation (7).

- (15) Evaluate the fitness of PK0: Calculate the fitness value of PK0 and designate it as the initial population of the annealing algorithm.

- (16) SA parameter initialization: All individuals produced by the genetic operations undergo annealing. The initial temperature T is set to 100 °C, the cooling factor is set to 0.98, the in-chain iteration counter is initialized to Q = 1, and the Markov chain length is L = 60.

- (17) A new solution is produced by perturbing each member of the population. The difference between the fitness values of each member in the old and new populations is computed, and the Metropolis sampling criterion determines whether the new solution is adopted.

- (18) Increment Q by 1 and check whether Q is still within the current Markov chain. If it is, proceed to step (17). If it is not, decrease the temperature and check whether the SA stopping condition is met. If the stopping condition is met, the SA algorithm ends and the procedure returns to step (4). Otherwise, set Q = 1 and proceed to step (17).
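
The genetic operators in steps (4) and (12)–(14) can be illustrated with a short sketch. Equations (6) and (7) are not reproduced in this text, so the blend form of the arithmetic crossover, the uniform resampling mutation, the fitness mapping 1 / (1 + MSE), and the gene bounds of [-1, 1] are all assumptions chosen for illustration; the function names are hypothetical. Only the population size (50), the number of encoded parameters (97), and the crossover and mutation probabilities (0.7 and 0.005) come from the steps above.

```python
import random


def fitness_from_mse(mse):
    """Map an MSE to a fitness that grows as the MSE shrinks (assumed form)."""
    return 1.0 / (1.0 + mse)


def roulette_select(population, fitnesses, rng):
    """Pick one individual with probability f_u / sum of all fitness values."""
    pick = rng.random() * sum(fitnesses)
    running = 0.0
    for individual, fit in zip(population, fitnesses):
        running += fit
        if running > pick:
            return individual
    return population[-1]  # guard against floating-point round-off


def arithmetic_crossover(x1, x2, alpha):
    """Blend two real-coded parents gene by gene (assumed form of Eq. (6))."""
    c1 = [alpha * a + (1 - alpha) * b for a, b in zip(x1, x2)]
    c2 = [alpha * b + (1 - alpha) * a for a, b in zip(x1, x2)]
    return c1, c2


def mutate(x, p_mut, low, high, rng):
    """Resample each gene uniformly with probability p_mut (assumed operator)."""
    return [rng.uniform(low, high) if rng.random() < p_mut else g for g in x]


# Usage: 50 individuals, each encoding 97 weights and thresholds.
rng = random.Random(42)
population = [[rng.uniform(-1.0, 1.0) for _ in range(97)] for _ in range(50)]
mses = [rng.uniform(0.1, 2.0) for _ in population]  # placeholder MSEs, not real results
fitnesses = [fitness_from_mse(m) for m in mses]

parent_a = roulette_select(population, fitnesses, rng)
parent_b = roulette_select(population, fitnesses, rng)
if rng.random() < 0.7:  # crossover probability
    child_a, child_b = arithmetic_crossover(parent_a, parent_b, rng.random())
else:
    child_a, child_b = parent_a[:], parent_b[:]
child_a = mutate(child_a, 0.005, -1.0, 1.0, rng)  # mutation probability
child_b = mutate(child_b, 0.005, -1.0, 1.0, rng)
```

In a full pipeline the placeholder MSEs would be replaced by the prediction error of a BPNN initialized with each individual's weights and thresholds.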

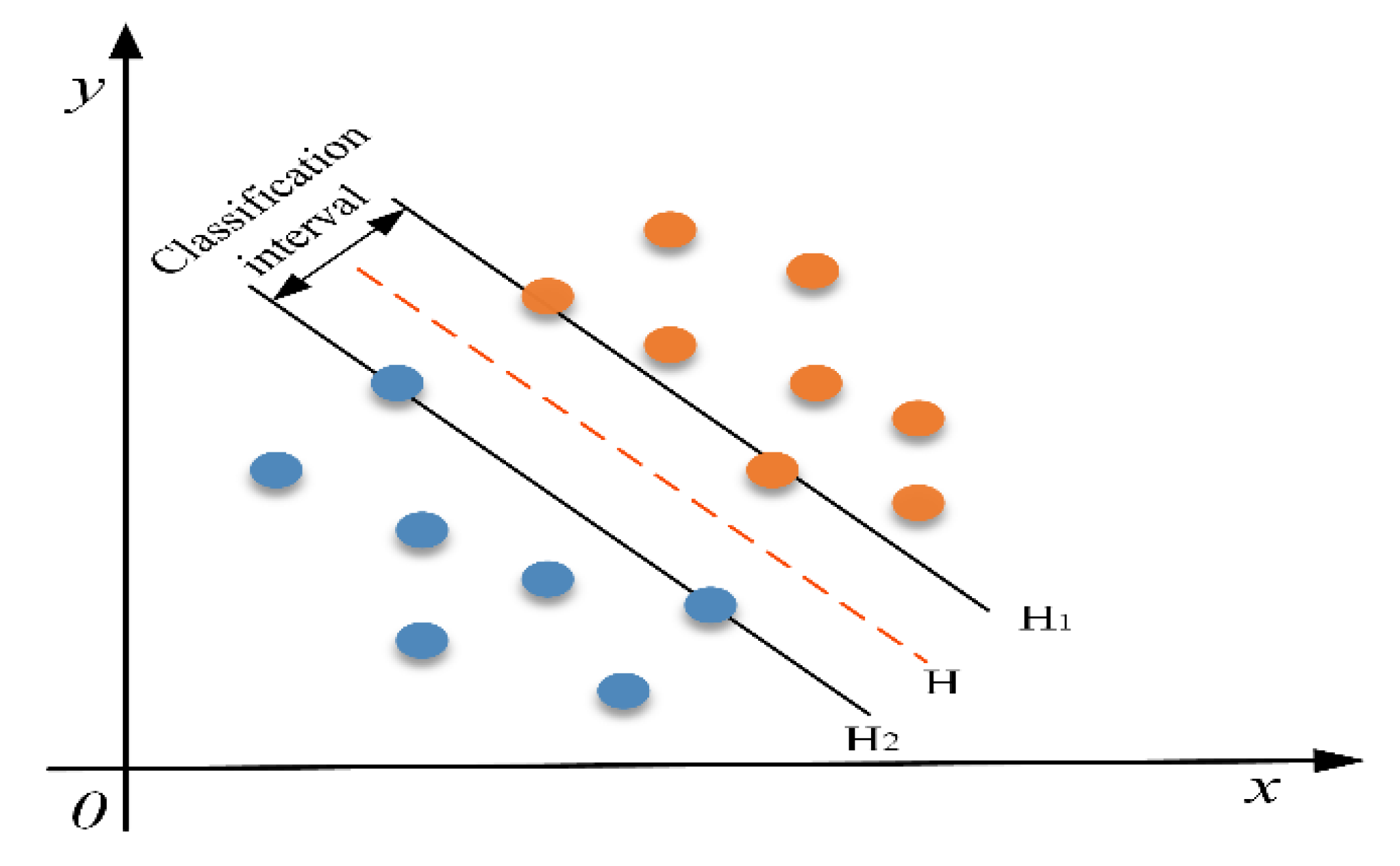

2.3. Establishment of a Gas Content Prediction Model Based on the GASA-SVM Algorithm

- (1) The 10-fold cross-validation method is used to split the data into test and training sets.

- (2) GA initialization: The kernel function parameter σ and the penalty factor c are encoded using floating-point encoding. A population of 50 individuals is randomly initialized, and the GA parameters, such as the maximum generation and the crossover probability, are set.

- (3) Fitness evaluation function: The MSE between the predicted gas content and the actual output is used as the fitness evaluation function, to be minimized. The randomly initialized individuals from step (2) are evaluated, and the genetic stopping condition is checked. If it is met, the optimal individual is output, decoded, and assigned to the SVM. This completes the GASA optimization of the SVM, which is then retrained on the sample data to establish the model. If the genetic stopping condition is not met, genetic crossover and mutation are performed, and the resulting individuals are used as the initial population of the SA algorithm.

- (4) SA algorithm initialization: The individuals that did not meet the genetic stopping condition in step (3) are used as the initial individuals in the SA algorithm, and each individual undergoes simulated annealing. The initial parameters of SA, such as the initial temperature, the temperature decay function, and the Metropolis chain length, are set. In effect, an SA run is attached to each individual in the GA population.

- (5) Population perturbation: Each individual in the population is perturbed to generate a new population, whose fitness is evaluated with the fitness evaluation function from step (3). The difference in fitness between the new and old populations is calculated, and the Metropolis sampling criterion determines whether the old solution is replaced by the new solution.

- (6) Check whether the SA stopping condition is met. If it is, end the annealing and use the resulting population as the next-generation population of the GA in step (3). If it is not, return to step (5).
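
The fitness evaluation in steps (1) and (3) amounts to a cross-validated MSE. The sketch below shows one way to compute it, assuming a plain shuffled split instead of the stratified sampling mentioned earlier in the paper. The names `min_max_normalize`, `k_fold_indices`, `cv_mse`, and the `fit_predict` callable are hypothetical. In a GASA-SVM setting, `fit_predict` would train an SVM with the decoded (σ, c) pair; here a mean predictor stands in so that the example has no external dependencies. The input values are the X0 and X1 columns of the 20-sample table given later in this document.

```python
import random


def min_max_normalize(column):
    """Scale a list of numbers to the range [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]


def k_fold_indices(n, k=10, seed=0):
    """Shuffle 0..n-1 and deal the indices into k disjoint folds (needs n >= k)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cv_mse(fit_predict, xs, ys, k=10, seed=0):
    """Mean test MSE over k folds; a lower value means a fitter individual."""
    fold_mses = []
    for test_idx in k_fold_indices(len(xs), k, seed):
        held_out = set(test_idx)
        train = [i for i in range(len(xs)) if i not in held_out]
        preds = fit_predict([xs[i] for i in train], [ys[i] for i in train],
                            [xs[i] for i in test_idx])
        errors = [(p - ys[i]) ** 2 for p, i in zip(preds, test_idx)]
        fold_mses.append(sum(errors) / len(errors))
    return sum(fold_mses) / len(fold_mses)


def mean_predictor(train_x, train_y, test_x):
    """Stand-in model: always predicts the mean of the training targets."""
    m = sum(train_y) / len(train_y)
    return [m for _ in test_x]


# X0 (gas content) and X1 columns of the 20-sample table in this document.
x0 = [17.01, 25.32, 25.63, 15.27, 18.99, 12.53, 22.03, 21.46, 24.46, 24.03,
      31.01, 19.17, 27.63, 20.41, 9.67, 18.86, 16.71, 12.74, 27.86, 13.38]
x1 = [407.12, 350.41, 327.86, 438.26, 363.62, 400.92, 524.6, 517.3, 437.4, 444,
      305.42, 326.7, 530, 501.7, 284, 438, 400.92, 400.92, 309.48, 474.73]

features = [[v] for v in min_max_normalize(x1)]
print("baseline 10-fold MSE:", cv_mse(mean_predictor, features, x0))
```

Any candidate model can be compared against this baseline by passing a different `fit_predict` callable with the same signature.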

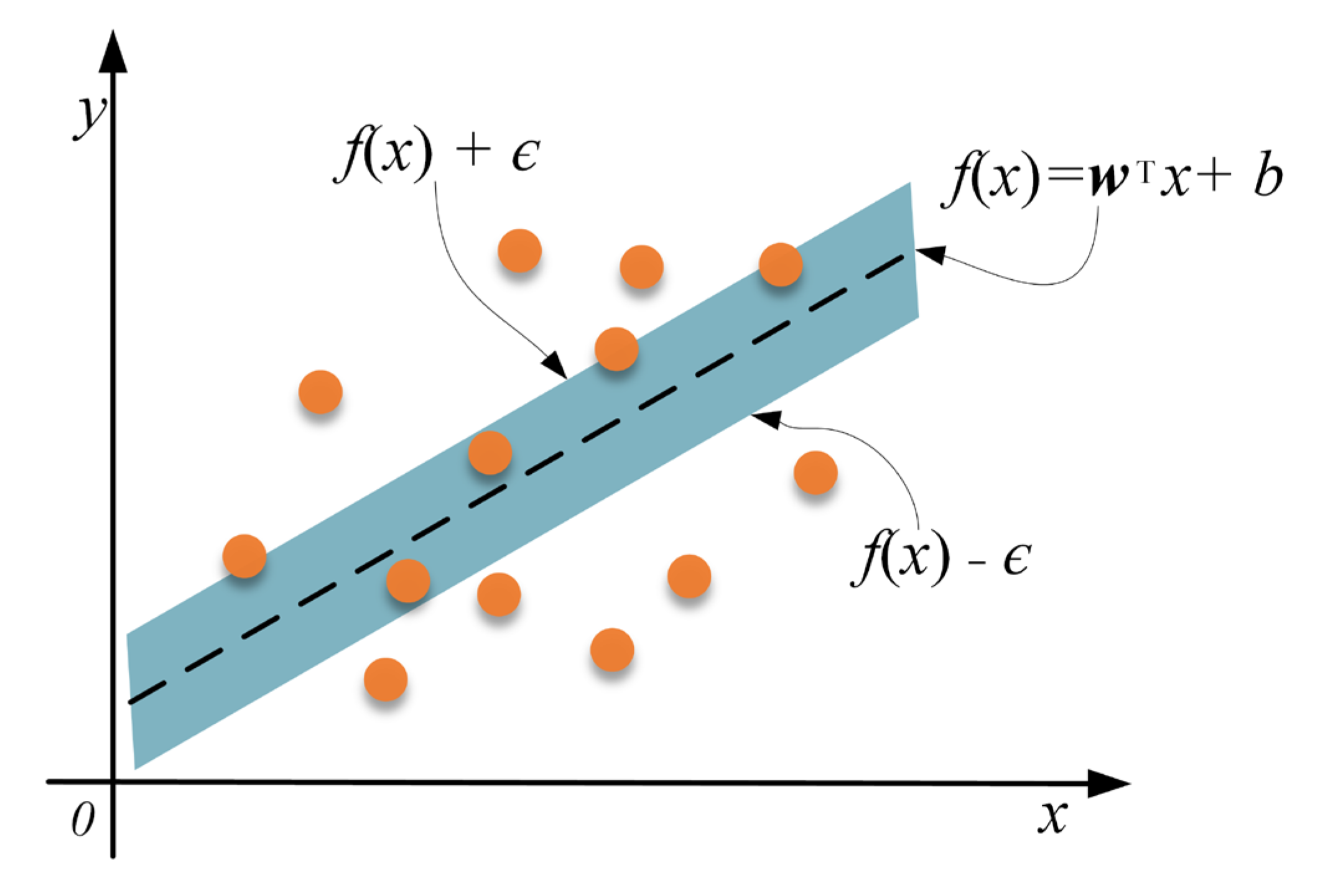

2.4. Development of a Gas Content Prediction Model Based on the GASA-KELM Algorithm

- (1) Normalize the dataset and split it into training and testing sets using 10-fold cross-validation.

- (2) Initialize the GA by encoding the kernel function parameters and the penalty factor c of the KELM, randomly initializing the population, and setting the population size and GA parameters.

- (3) Define the fitness evaluation function as the MSE between the predicted and actual values of the model, and evaluate the randomly initialized individuals from step (2). Check whether the genetic stopping condition is met. If so, output the optimal individual, decode it, and assign it to the KELM, then complete the model by retraining on the sample data. The training process and model performance evaluation are discussed in the following section. If the genetic stopping condition is not met, perform the genetic operations and use the resulting individuals as the initial population for SA.

- (4) Initialize the SA algorithm, using the individuals from step (3) that did not meet the genetic stopping condition as its initial population. Each individual undergoes simulated annealing. Set the initial parameters for SA, such as the initial temperature, the temperature decay function, and the Metropolis chain length. In effect, an SA run is attached to each individual in the GA population.

- (5) Update the population using the neighborhood function to generate a new population. Evaluate its fitness with the evaluation function from step (3), calculate the difference in fitness between the new and old populations, and decide whether to replace the old solution based on the Metropolis criterion.

- (6) Check whether the annealing stopping condition is met. If it is, complete the annealing operation and use the resulting population as the next-generation population of the GA in step (3). If it is not, return to step (5).
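
For orientation, the model that GASA tunes in these steps can be sketched as follows. This is the commonly used closed-form KELM regression, in which the output weights are β = (K + I / c)⁻¹ y for a kernel matrix K; the paper's own equations are not reproduced in this text, so the Gaussian kernel form exp(−‖u − v‖² / (2σ²)), the helper names, and the toy data are assumptions for illustration only.

```python
import math


def rbf(u, v, sigma):
    """Gaussian kernel with width sigma (assumed kernel form)."""
    sq = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-sq / (2.0 * sigma ** 2))


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def kelm_fit(xs, ys, sigma, c):
    """Return the output weights beta = (K + I / c)^-1 y."""
    n = len(xs)
    k = [[rbf(xs[i], xs[j], sigma) + (1.0 / c if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    return solve(k, ys)


def kelm_predict(xs_train, beta, sigma, x):
    """Predict one sample as the kernel-weighted sum over training points."""
    return sum(b * rbf(xt, x, sigma) for xt, b in zip(xs_train, beta))


# Toy data (hypothetical): three one-dimensional samples.
train_x = [[0.0], [0.5], [1.0]]
train_y = [1.0, 2.0, 0.5]
sigma, c = 0.3, 1e6  # the two quantities a GASA individual would encode
beta = kelm_fit(train_x, train_y, sigma, c)
print([kelm_predict(train_x, beta, sigma, x) for x in train_x])
```

Because training reduces to one linear solve, each fitness evaluation is cheap, and only the kernel parameter and the penalty factor c need to be searched by the optimizer.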

3. Field Application of Gas Content Prediction Models

3.1. Overview of the Jiulishan Mine in Jiaozuo

3.2. Establishment of the Parameter System for the Gas Content Prediction Model

3.2.1. Establishing a Dataset for Gas Content Prediction

3.2.2. Primary Controlling Factors of Gas Content Based on Grey Correlation Analysis

- (1) Set the reference sequence and the comparison sequences. The reference sequence is the gas content (X0), and the comparison sequences are the eight factors influencing coal seam gas content.

- (2) Preprocess the data: normalize the data according to Formula (8).

- (3) Calculate the correlation coefficients according to Formula (9).

- (4) Compute the correlation degrees according to Formula (10).

- (5) Rank the correlation degrees and determine the input parameters.
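
The five steps can be sketched as below. Formulas (8)–(10) are not reproduced in this text, so the sketch follows the common textbook form of grey relational analysis: min-max normalization, the coefficient (Δmin + ρΔmax) / (Δ + ρΔmax) with a distinguishing coefficient ρ = 0.5, and the mean coefficient as the correlation degree. These choices, the function names, and the toy sequences are assumptions and may differ from the paper's exact formulas.

```python
def min_max(seq):
    """Normalize a sequence to [0, 1] (assumed form of the normalization step)."""
    lo, hi = min(seq), max(seq)
    if hi == lo:
        return [0.0 for _ in seq]
    return [(v - lo) / (hi - lo) for v in seq]


def grey_relational_degrees(reference, factors, rho=0.5):
    """Return one correlation degree per comparison sequence."""
    x0 = min_max(reference)
    normalized = [min_max(f) for f in factors]
    # Absolute differences between the reference and each comparison sequence.
    deltas = [[abs(a - b) for a, b in zip(x0, xi)] for xi in normalized]
    d_min = min(min(row) for row in deltas)
    d_max = max(max(row) for row in deltas)
    if d_max == 0.0:  # every sequence coincides with the reference
        return [1.0 for _ in factors]
    degrees = []
    for row in deltas:
        coeffs = [(d_min + rho * d_max) / (d + rho * d_max) for d in row]
        degrees.append(sum(coeffs) / len(coeffs))
    return degrees


# Toy sequences (hypothetical): the first factor tracks the reference exactly,
# the second moves in the opposite direction.
reference = [1.0, 2.0, 3.0, 4.0]
factors = {"A": [2.0, 4.0, 6.0, 8.0], "B": [4.0, 3.0, 2.0, 1.0]}

degrees = grey_relational_degrees(reference, list(factors.values()))
ranking = sorted(zip(factors, degrees), key=lambda item: item[1], reverse=True)
print(ranking)
```

Factors at the top of the ranking would be retained as model inputs, which corresponds to step (5).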

3.3. Parameter Optimization and Testing of the Model

3.4. Application of the Model in Engineering and Evaluation of Its Predictive Performance

4. Conclusions

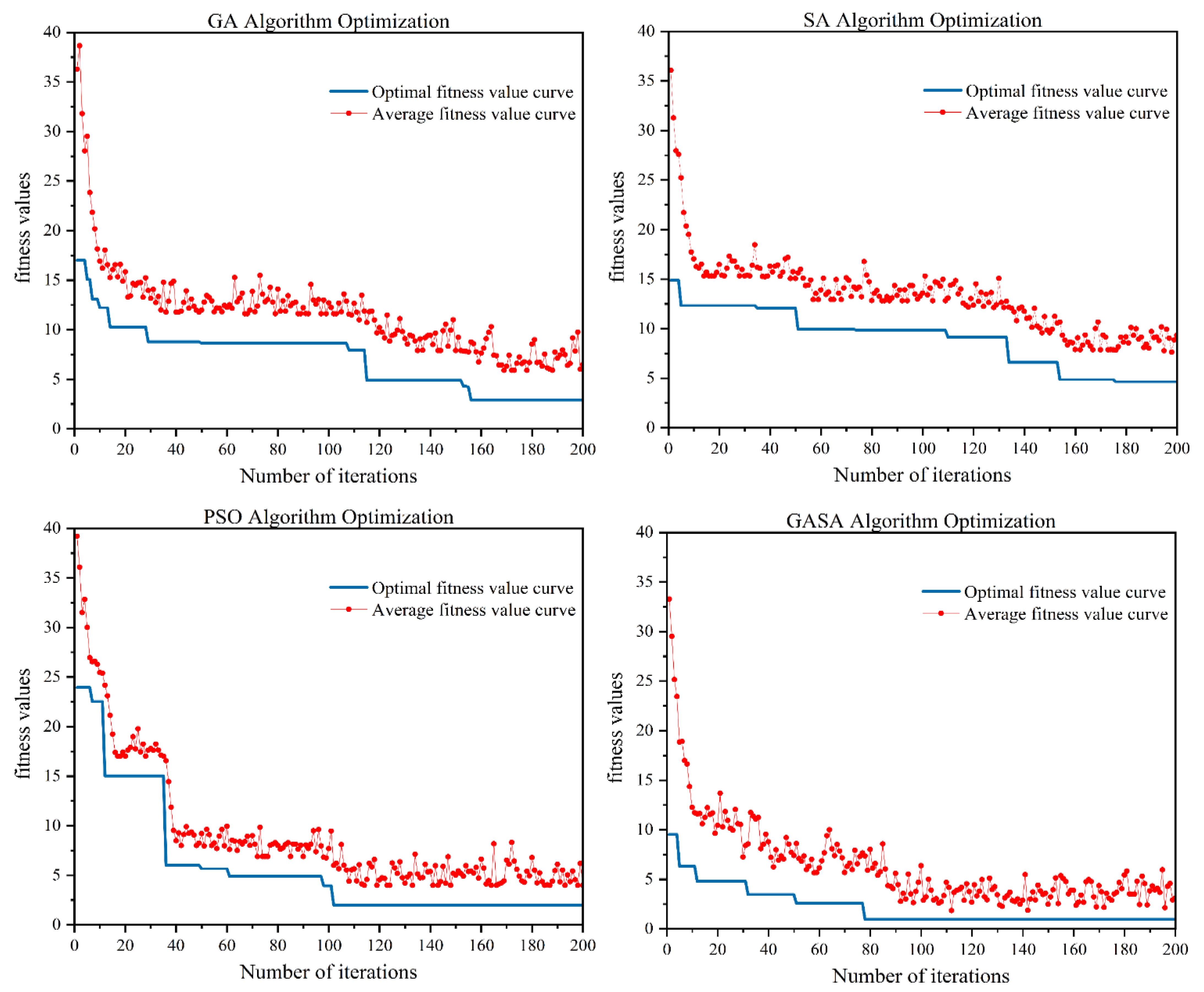

- Rastrigin’s function was optimized 20 times with each of the PSO, GA, SA, and GASA algorithms under identical conditions. The four algorithms completed the iterative optimization at the 102nd, 156th, 176th, and 78th iterations, respectively. Their average optimization values were 9.2472 × 10⁻⁴, 7.9003 × 10⁻³, 9.1873 × 10⁻², and 5.6935 × 10⁻⁴, with respective variances of 3.1547, 3.7519, 7.6823, and 2.0524. Judged by the average result over the 20 runs, the variance of the results, and the average number of iterations, the GASA designed in this paper optimizes complex functions more effectively and searches globally more stably than the PSO, GA, and SA algorithms. It also converges faster and more accurately on complex functions than the single algorithms and is less prone to becoming trapped in local optima.

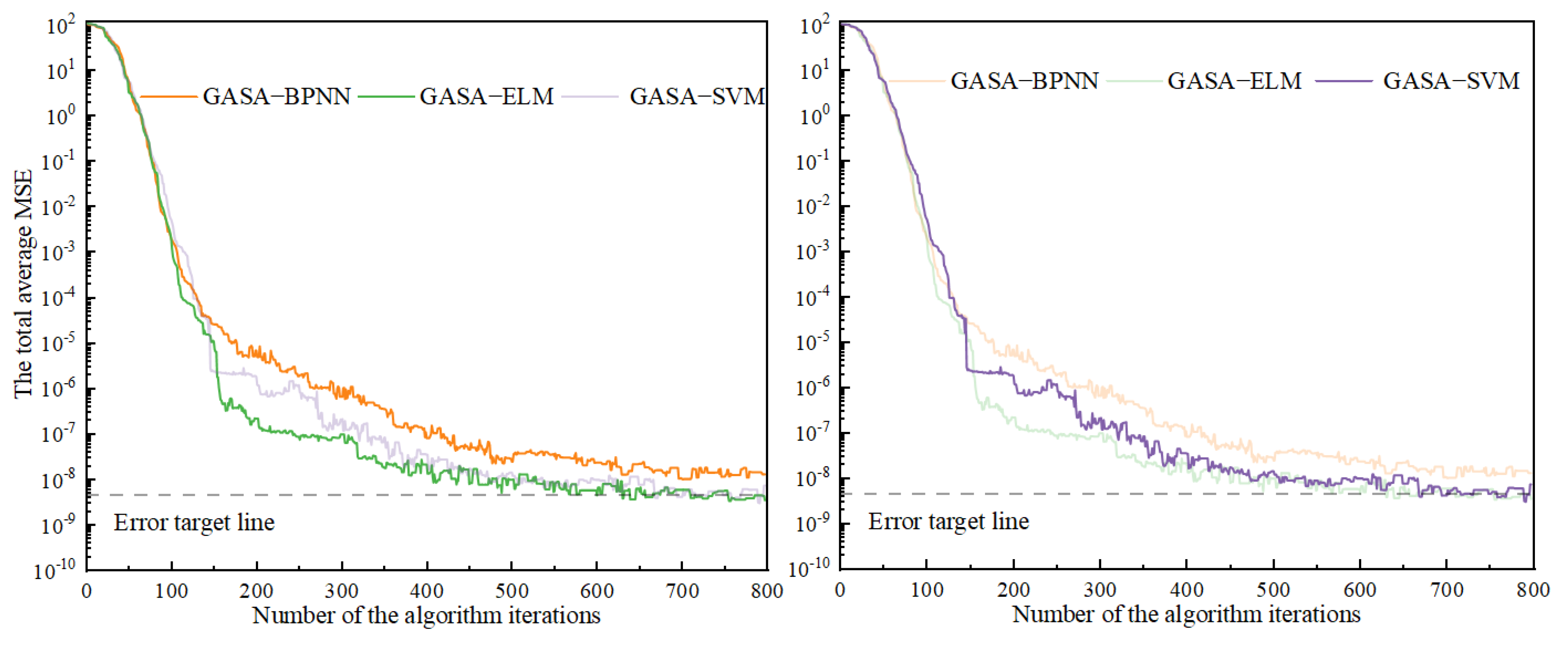

- In the process of constructing the GASA-BPNN prediction model, the GASA failed to meet the target requirements within 800 iterations. Conversely, during the construction of the GASA-SVM and GASA-KELM gas content prediction models, the GASA was able to discover the optimal initial parameters during the 673rd and 487th iterations, respectively. This disparity can be attributed to the fact that the number of parameters to be optimized in BPNN is significantly greater than in SVM and KELM. As a result, the optimization process for the GASA-SVM and GASA-KELM models was much faster and produced higher-quality results than the BPNN model.

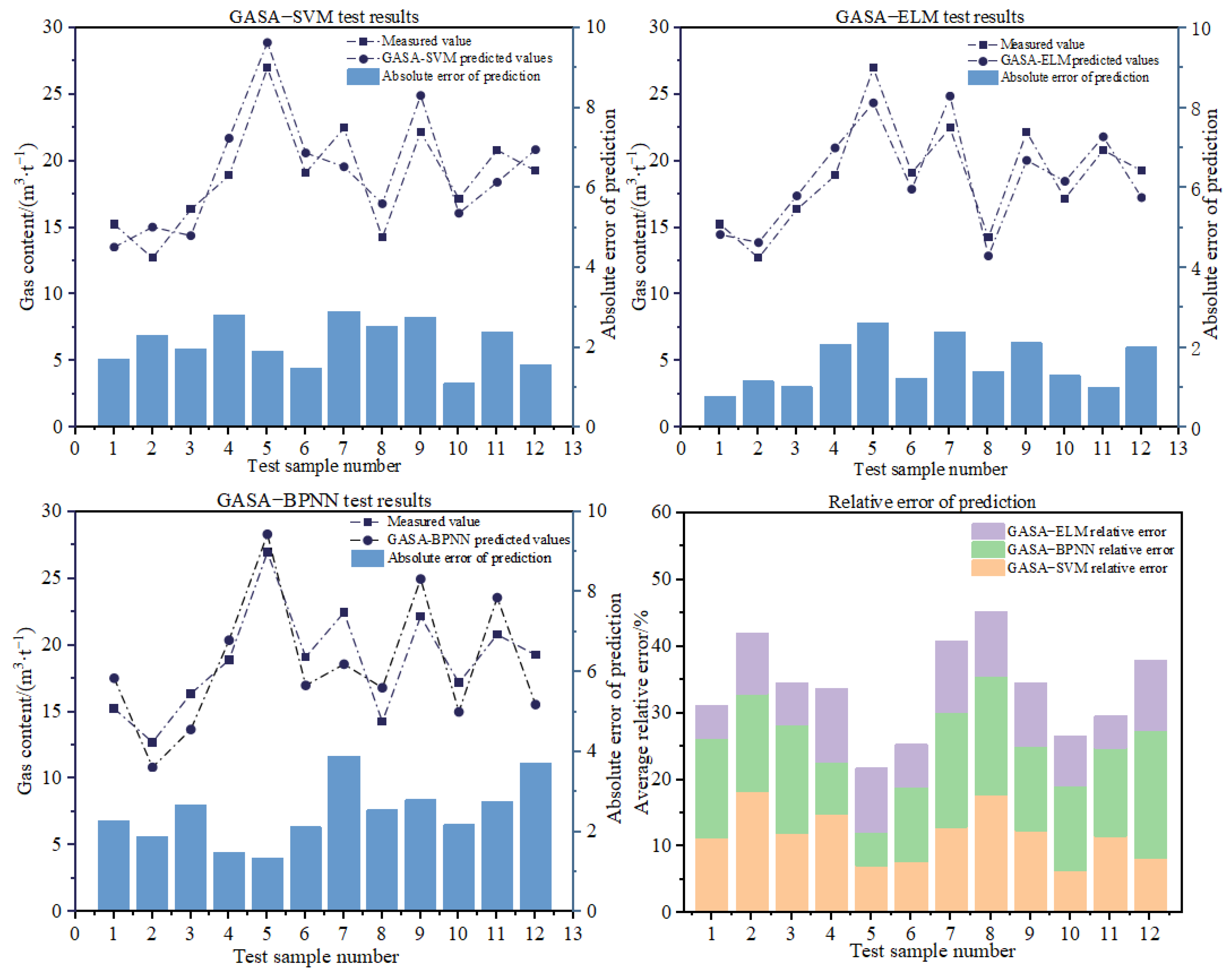

- During 10-fold cross-validation, the GASA-BPNN, GASA-SVM and GASA-KELM models yielded average relative errors of 15.74%, 13.85%, and 9.87%, respectively. The corresponding variances of the 10 cross-validation results were 3.99, 2.76 and 2.05. Notably, in comparison with the GASA-SVM and GASA-BPNN models, the GASA-KELM model displayed superior accuracy and stability in predicting gas content. Subsequently, the GASA-KELM model was tested on twelve additional samples, which further revealed the model’s exceptional performance in terms of prediction accuracy and generalization ability to new sample data.

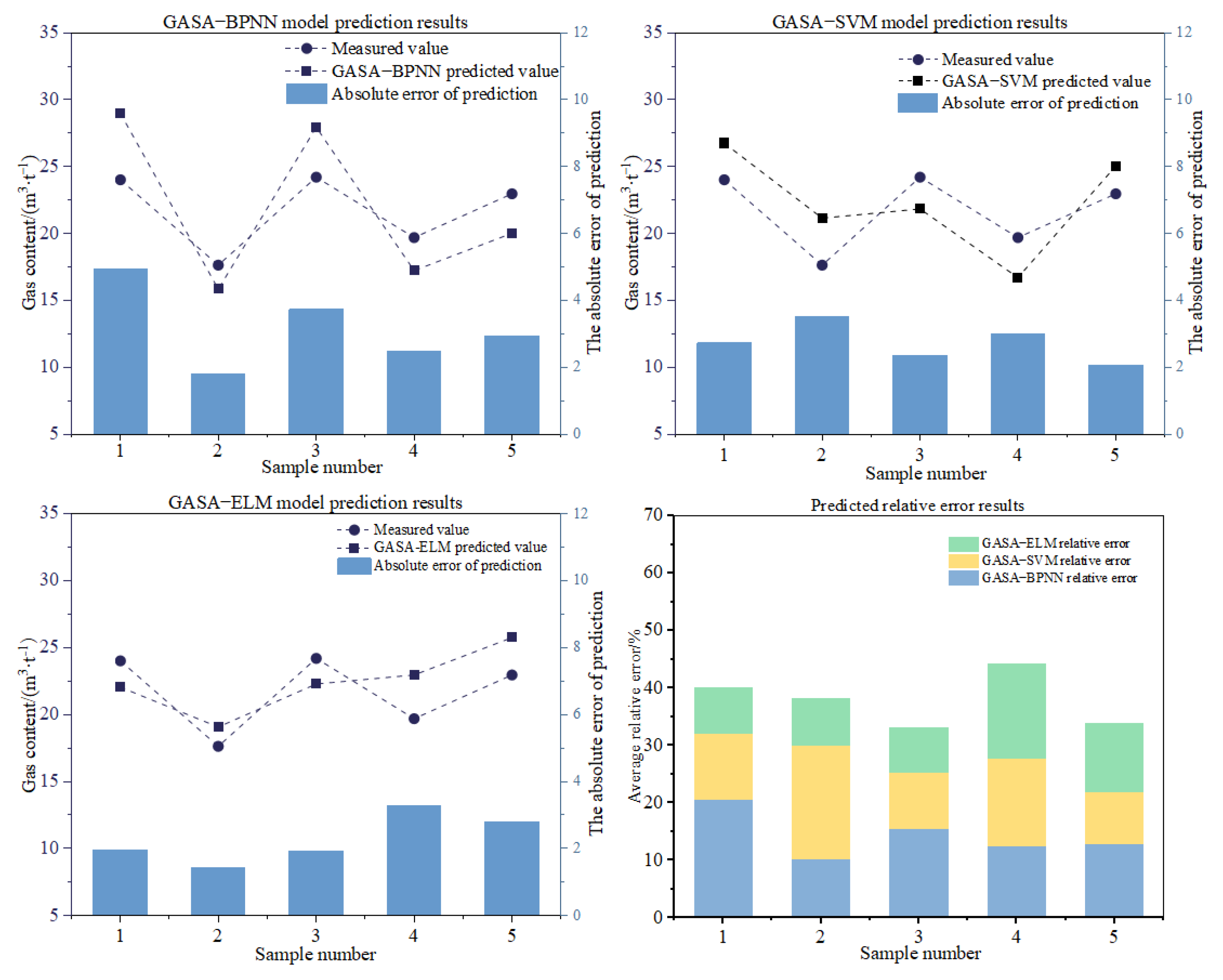

- When applied to the gas content prediction case of the Jiulishan Mine’s 15-mining area, the developed GASA-KELM model shows clear advantages over the GASA-BPNN and GASA-SVM models in prediction accuracy, prediction stability, and generalization ability. These advantages are essential for the accurate prediction of gas content and for formulating effective regional gas management strategies.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Algorithm | Test Function | Average Result | Variance of Results |
|---|---|---|---|
| PSO | Rastrigin’s function | 9.2472 × 10⁻⁴ | 3.1547 |
| GA | Rastrigin’s function | 7.9003 × 10⁻³ | 3.7519 |
| SA | Rastrigin’s function | 9.1873 × 10⁻² | 7.6823 |
| GASA | Rastrigin’s function | 5.6935 × 10⁻⁴ | 2.0524 |

| W | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|
| Relative Error/% | 27.9 | 30.84 | 21.29 | 19.73 | 24.18 | 19.62 | 16.35 | 21.87 | 15.22 |

| Sample Number | X0/(m³·t⁻¹) | X1/(m) | X2/(m) | X3 | X4/(m) | X5 | X6 | X7 | X8/(m) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 17.01 | 407.12 | 6.44 | 0.0198 | 254.09 | 0.4185 | 0.0008 | 0.0005 | −324.81 |
| 2 | 25.32 | 350.41 | 6.08 | 0.0191 | 209.54 | 0.9000 | 0.1416 | 0.1812 | −265.47 |
| 3 | 25.63 | 327.86 | 5.74 | 0.0354 | 191.74 | 0.4508 | 0.3062 | 0.0001 | −243.64 |
| 4 | 15.27 | 438.26 | 4.84 | 0.0213 | 241.67 | 0.4000 | 0.0016 | 0.0001 | −353.7 |
| 5 | 18.99 | 363.62 | 5.79 | 0.0440 | 219.25 | 0.5663 | 0.1003 | 0.0004 | −279.48 |
| 6 | 12.53 | 400.92 | 3.61 | 0.0193 | 256.23 | 0.7740 | 0.0264 | 0.0048 | −315.06 |
| 7 | 22.03 | 524.6 | 5.35 | 0.0122 | 267.32 | 0.5109 | 0.3255 | 0.0025 | −437.3 |
| 8 | 21.46 | 517.3 | 4.36 | 0.0171 | 114.52 | 0.3855 | 0.0853 | 0.0115 | −430 |
| 9 | 24.46 | 437.4 | 4.81 | 0.0224 | 121.89 | 0.2370 | 0.1189 | 0.0137 | −350.7 |
| 10 | 24.03 | 444 | 7.07 | 0.0165 | 188.67 | 0.6074 | 0.0352 | 0.0084 | −359.82 |
| 11 | 31.01 | 305.42 | 5.39 | 0.0403 | 207.99 | 0.3394 | 0.1738 | 0.0002 | −217.27 |
| 12 | 19.17 | 326.7 | 5.43 | 0.0546 | 241.06 | 0.5873 | 0.0032 | 0.0117 | −242.16 |
| 13 | 27.63 | 530 | 10.59 | 0.0412 | 334.41 | 0.4105 | 0.0421 | 0.0004 | −440.9 |
| 14 | 20.41 | 501.7 | 5.34 | 0.0159 | 128.22 | 0.3534 | 0.0266 | 0.0010 | −414.5 |
| 15 | 9.67 | 284 | 5.62 | 0.0166 | 144.48 | 0.3299 | 0.0338 | 0.0012 | −195.1 |
| 16 | 18.86 | 438 | 5.07 | 0.0154 | 95.09 | 0.4837 | 0.0984 | 0.0029 | −350.4 |
| 17 | 16.71 | 400.92 | 3.61 | 0.0278 | 256.23 | 0.7740 | 0.0264 | 0.0048 | −317.1 |
| 18 | 12.74 | 400.92 | 3.61 | 0.0315 | 256.23 | 0.7740 | 0.0009 | 0.0001 | −308.68 |
| 19 | 27.86 | 309.48 | 5.80 | 0.0302 | 174.28 | 0.3158 | 0.1480 | 0.0008 | −220.93 |
| 20 | 13.38 | 474.73 | 2.66 | 0.0222 | 306.27 | 0.3718 | 0.0015 | 0.0005 | −383.68 |

| Influencing Factor | X1 | X2 | X3 | X4 | X5 | X6 | X7 | X8 |
|---|---|---|---|---|---|---|---|---|
| Correlation degree | 0.6698 | 0.5623 | 0.2908 | 0.3176 | 0.5024 | 0.6117 | 0.5102 | 0.5785 |

| Model | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | A10 | Average/% | Variance |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BPNN | 19.46 | 12.59 | 16.6 | 7.53 | 15.32 | 17.9 | 17.82 | 12.06 | 20.89 | 17.21 | 15.738 | 3.99 |
| SVM | 13.81 | 15.3 | 8.74 | 15.11 | 18.29 | 13.76 | 10.03 | 15.58 | 14.71 | 13.15 | 13.848 | 2.76 |
| KELM | 12.46 | 7.92 | 10.07 | 8.14 | 9.24 | 11.44 | 13.78 | 9.16 | 8.35 | 8.09 | 9.865 | 2.05 |
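
The summary columns of the table above can be recomputed from the ten fold-wise relative errors with a few lines of Python. This is only an arithmetic check on the tabulated values, using the standard library's `statistics` module. In this recomputation the averages agree with the table, and the column labelled "Variance" agrees to two decimals with the sample standard deviation (`statistics.stdev`) rather than with the variance itself, which may be worth keeping in mind when comparing the reported dispersion figures.

```python
from statistics import mean, stdev

# Fold-wise relative errors (%) for folds A1-A10, copied from the table above.
relative_errors = {
    "BPNN": [19.46, 12.59, 16.6, 7.53, 15.32, 17.9, 17.82, 12.06, 20.89, 17.21],
    "SVM": [13.81, 15.3, 8.74, 15.11, 18.29, 13.76, 10.03, 15.58, 14.71, 13.15],
    "KELM": [12.46, 7.92, 10.07, 8.14, 9.24, 11.44, 13.78, 9.16, 8.35, 8.09],
}

for model, errs in relative_errors.items():
    # mean() gives the "Average/%" column; stdev() is the sample standard deviation.
    print(f"{model}: mean = {mean(errs):.3f} %, sample std = {stdev(errs):.2f}")
```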

| Sample Number | X0/(m³·t⁻¹) | X1/(m) | X2/(m) | X3 | X4/(m) | X5 | X6 | X7 | X8/(m) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 24.03 | 449 | 4.75 | 0.0107 | 199.44 | 0.3299 | 0.1264 | 0.0027 | −364 |
| 2 | 17.65 | 402 | 5.48 | 0.0285 | 183.86 | 0.3810 | 0.0298 | 0.0199 | −308.6 |
| 3 | 24.22 | 305.1 | 5.39 | 0.0291 | 135.74 | 0.4302 | 0.0895 | 0.0615 | −218.9 |
| 4 | 19.71 | 481 | 6.24 | 0.0166 | 118.10 | 0.5123 | 0.0135 | 0.0047 | −394.2 |
| 5 | 22.98 | 387.8 | 5.55 | 0.0243 | 98.24 | 0.5808 | 0.0494 | 0.0012 | −295.1 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tian, S.; Ma, L.; Li, H.; Tian, F.; Mao, J. Research on a Coal Seam Gas Content Prediction Method Based on an Improved Extreme Learning Machine. Appl. Sci. 2023, 13, 8753. https://doi.org/10.3390/app13158753
