1. Introduction

The importance of sustainability in agriculture has grown in recent years because food production must address environmental, social, and economic challenges, which has prioritized the development and implementation of sustainable agricultural practices and approaches. Agricultural sustainability is now one of the most significant factors contributing to environmental change worldwide [1]. In addition, rapidly increasing agricultural or food production to meet growing demand is difficult. Various factors contribute to this challenge, including outdated agricultural practices, inadequate storage facilities, unstable marketplaces, and political instability. As the world's population continues to rise, experts in food and agriculture predict that agricultural production will need to increase by 70% by 2050 to feed the growing population sustainably [2].

A country's social and economic prosperity is strongly tied to a sustainable agricultural base. Sustainable practices aim to improve agricultural productivity while reducing harmful environmental impacts through innovative technologies in sustainable Agriculture Supply Chains (ASCs) [3,4,5]. ASC technologies are a promising answer to the challenges faced by agriculture; in particular, data-driven methods are a promising solution to global agricultural problems. Collecting and analyzing farm data supports informed decisions about agricultural practices, an approach that has been shown to increase crop yield, decrease costs, and promote sustainability [6].

The smart farm, also called digital agriculture, is essential to the new agricultural revolution towards green practices, with science and technology at the center of its operation. Smart farming technologies are crucial for supplying organic agricultural products, which are now in higher demand. These technologies can help control and reduce the use of chemicals, antibiotics, and synthetic fertilizers, which benefits the health of consumers and farmers [7,8]. Current smart farms are largely based on the greenhouse environment [9]. Greenhouses are systems that protect crops from factors that can damage them. They consist of a closed structure with a cover of translucent material and aim to maintain an independent climate inside, improving growth conditions to increase the quality and quantity of products. These systems allow production in a given location regardless of its agroclimatic conditions [10].

Greenhouse systems must be designed according to the environmental conditions of the place where they will be installed. Control of the microclimate is necessary for optimal plant development, since it accounts for 90% of the crop production yield; the equipment, shape, and elements of the greenhouse depend on how much the outdoor climate differs from the plant's requirements [11]. The effectiveness of a greenhouse control system depends on how well the variables that affect the climate are described. Such a system can support the design and practical operation of the agricultural process at all stages, and it comprises emerging digital technologies such as remote sensing, wireless sensor networks, Cloud Computing (CC), the Internet of Things (IoT), image processing, and Artificial Intelligence (AI) [12,13]. Sensors and actuators are used to regulate farming processes, while wireless sensor networks are used to monitor the farm. Farmers can use wireless cameras and sensors to collect data remotely through videos and pictures. With IoT and CC technology, farmers can also monitor the condition of their agricultural land from their smartphones anywhere in the world, which can help reduce crop production costs and increase productivity [14].

AI methods address the challenges posed by the resulting big data [15]. Machine learning (ML), a subset of AI, is widely used to identify hidden patterns in data. ML can find structure in data whose patterns are not known in advance and guide researchers toward their expected goals. The application of ML algorithms in smart farms and greenhouses has attracted the interest of researchers. Traditional methods cannot analyze large and unstructured data, nor can they identify and predict the most influential factors, especially regarding supply chain performance. Therefore, researchers have replaced traditional analytical methods with machine learning techniques. ML offers advantages such as big data analysis and the ability to solve nonlinear problems through data-driven models [16,17].

The data behind a data-driven model are usually a time series with a daily cyclical pattern [18]. That is, for most applications, data profiles such as electricity charging, panel voltage, temperature, and wind turbine generation repeat their pattern every 24 h. However, a drawback of time series analysis is that it can only be applied when a unique period exists in the time series with an adaptive law [19]. Specific forecasting algorithms can mitigate these drawbacks, such as Autoregressive (AR), Exponential Smoothing (ES), and Autoregressive Integrated Moving Average (ARIMA) models, Extreme Gradient Boosting (XGBoost), and Long Short-Term Memory (LSTM). Traditional machine learning methods include Support Vector Machine (SVM), random forest, Back Propagation (BP) neural network, and Linear Regression (LR), whereas deep learning methods include Recurrent Neural Network (RNN) and Convolutional Neural Networks (CNN) [20].
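To make the daily-cycle idea concrete, the sketch below builds a seasonal naive baseline, which simply predicts each value from the value observed one day earlier. It assumes 5-min sampling (as in the dataset described in Section 3.2), so one period is 288 samples, and it uses a synthetic sinusoidal temperature signal rather than the greenhouse data. It is a reference baseline only, not one of the models evaluated in this paper.

```python
import numpy as np

SAMPLES_PER_DAY = 24 * 60 // 5   # 5-min sampling gives 288 samples per 24 h

def seasonal_naive_forecast(series: np.ndarray, period: int = SAMPLES_PER_DAY) -> np.ndarray:
    """Forecast series[t] as series[t - period]; aligned with series[period:]."""
    return series[:-period]

# Synthetic week of temperature with a daily cycle plus noise (illustrative only)
rng = np.random.default_rng(0)
t = np.arange(7 * SAMPLES_PER_DAY)
temp = 22 + 8 * np.sin(2 * np.pi * t / SAMPLES_PER_DAY) + rng.normal(0, 0.5, t.size)

forecast = seasonal_naive_forecast(temp)
actual = temp[SAMPLES_PER_DAY:]
mae = np.mean(np.abs(actual - forecast))
print(f"samples per day = {SAMPLES_PER_DAY}, seasonal naive MAE = {mae:.2f}")
```

If the signal truly repeats every 24 h, the remaining error of this baseline is driven only by the non-periodic part (here, the noise), which is why any proposed model should beat it to be useful.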

Climate forecasting in greenhouses to predict microclimate conditions has become highly relevant because numerous sensors and systems now allow accurate measurements and evaluations of the microclimate within seconds [21]. While different techniques have been developed to model temperature behavior inside greenhouses, they often rely only on variable monitoring instead of forecasts. As a result, automatic control to maintain optimal microclimate conditions during the different crop stages is limited to corrective rather than preventive mitigation actions, which may only partially satisfy the needs of greenhouse growers. However, AI-based algorithms have been developed to act preventively by adjusting heating/cooling systems, ventilation, and carbon dioxide fertilization through installed actuators to ensure optimal growth, maintenance, and pest control of greenhouse crops [22].

Adding a preventive model to the automatic control integrated into greenhouse systems would make it more feasible to maintain the optimal temperature for each crop. Extreme temperatures can cause various crop problems by altering the crops' morphology and physiological processes, for example affecting floral formation and causing leaf burn, poor fruit quality, excess transpiration, and a shortened crop life. Therefore, the best possible control of the greenhouse microclimate is crucial to prevent the development of pathogens and damage to growing crops [23].

This paper proposes an approach that applies supervised learning algorithms, namely LR and SVM models, to weather data from a controlled greenhouse environment, as the literature shows their wide use in time series applications. We aim to obtain a model capable of capturing the structure of the data to make a precise temperature forecast inside the greenhouse. These models require less computational power than more complex models such as deep neural networks, which makes them suitable for this application and for applications that require a fast response.

The contributions of this paper can be summarized as follows: we use supervised learning algorithms to study weather data from a controlled greenhouse environment, and we aim to develop a model that accurately predicts the temperature inside the greenhouse by identifying trends in the data across different variables. This model will enable greenhouse control systems to perform precise actions through the installed actuators to maintain optimal microclimate conditions for the crop with the minimum possible energy consumption. This can help mitigate crop disease, poor fruit quality, and other problems that may arise from an unattended microclimate change inside the greenhouse.

The paper is organized as follows:

Section 2 presents existing works on greenhouses focused on smart farming.

Section 3 describes the proposed workflow based on the Team Data Science Process (TDSP) methodology and the implementation details for forecasting in the greenhouse.

Section 4 shows the obtained results.

Section 5 presents the discussion.

Section 6 closes with the conclusions.

2. Related Work

This literature review examines current farming systems and how they can be improved by combining data from various sources. It provides valuable insights into the latest developments in agriculture technology, focusing on ML applications in greenhouse farming.

Research projects have tested temperature prediction in greenhouses by collecting data on variables such as temperature, humidity, and carbon dioxide levels. In one study, a system collected 62 days of information from a multi-span greenhouse, and a Multilayer Perceptron Neural Network (MLP-NN) model was developed to model the internal temperature and relative humidity [24]. Another study tested an optimal ventilation control system to regulate the temperature of a single-span greenhouse; the researchers created a prediction model using Artificial Neural Networks (ANN) and a dataset collected over two months [25]. Another work used the same method to improve the accuracy of prediction algorithms under dynamic conditions, but with fifteen days of data [26]. In another study, the authors tested different models (ANN, Nonlinear Autoregressive Exogenous (NARX), and RNN-LSTM) to determine which was most effective in predicting changes in variables that directly affect greenhouse crop growth, using data collected over a year [27]. A further model, based on a Bidirectional self-attentive Encoder–Decoder framework (BEDA) and LSTM, was built with 172 days of data to predict indoor environmental factors from noisy IoT-based sensors [28]. The methods for collecting greenhouse data vary among these studies, with some using sensors and others meteorological stations, and the frequency of data collection depends on the specific application.

Various investigations use multiple variables, including radiation, pressure, and wind direction and speed, to predict soil temperature and water content in greenhouses. For instance, one study analyzed data collected over four months and used random forest and the inferring connections of networks to predict soil temperature and volumetric water content [29]. Another study used a Reversible Automatic Selection Normalization (RASN) network with six months of data to evaluate the prediction model using different variables [30]. ANNs were also used to forecast internal temperature using around two months of information [31]. Refs. [32,33,34] use SVM to predict certain variables in greenhouses. Other studies used algorithms such as XGBoost [35] or LSTM [36] to analyze meteorological factors affecting crop evapotranspiration. One work predicted greenhouse aerial environments using a BiLSTM model with a two-year dataset [37]. In another approach, the spatio-temporal kriging method was used to estimate the temperature in greenhouses using three months of data [38].

Sometimes, data are not acquired directly for an investigation; instead, datasets are gathered from other projects or repositories to make AI predictions. Some approaches add feature functions to time series and apply techniques such as LR, SVR, RMM, or LSTM for predictive analysis [39,40]. Additionally, certain studies employ methods such as a Bayesian optimization-based multi-head attention encoder to accurately forecast changes in climate time series [41].

Each study evaluates its model's performance using metrics such as the coefficient of determination (R²), Mean Square Error (MSE), Root Mean Square Error (RMSE), Mean Absolute Error (MAE), and Mean Absolute Percentage Error (MAPE). The percent Standard Error of Prediction (%SEP), Symmetric Mean Absolute Percentage Error (SMAPE), and Pearson Correlation Coefficient (R) are also commonly used.

3. Materials and Methods

Data science develops actionable insights from data by encompassing the entire life cycle of requirements, data collection, preparation, analysis, visualization, management, and preservation of large datasets. This broad view embraces the notion that data science is more than just analytics; it integrates other disciplines, including computer science, statistics, information management, and big data engineering [42].

Most data science research has focused only on technical capabilities, and the resulting lack of attention to management has been a significant challenge for projects. Therefore, it is essential to follow an appropriate methodology when developing a data science project. One available option is the agile methodology, which applies a set of techniques in short work cycles to increase the efficiency of project development [42].

This project follows the Team Data Science Process (TDSP), an agile and iterative data science methodology for efficiently delivering predictive analytics solutions and intelligent applications. TDSP provides a lifecycle to structure the development of data science projects. The lifecycle describes the steps that successful projects follow, as shown in Figure 1 [43]:

Business understanding: frame business problems, define objectives, and identify data sources.

Data acquisition and understanding: ingest data and check the data structure.

Modeling: feature engineering, model training, and evaluation.

Deployment: deploy the model.

3.1. Business Knowledge

The TDSP methodology-based data science lifecycle begins with understanding the business objectives and identifying the problems that can be solved through data and machine learning models. This also involves deciding on how these models will be implemented in the environment. In our analysis, we considered factors such as the greenhouse’s location, crops, sensor positions, crop seasons, best practices, and the potential benefits of having an internal temperature forecast system to address greenhouses’ challenges, specifically those that affect the crops’ morphology and physiological processes.

3.2. Data Acquisition and Understanding

As part of the TDSP methodology, the next step involves acquiring data, which entails gathering information from the greenhouse and analyzing it. In this stage, a weather station collected data from a greenhouse with a curved roof. This type of greenhouse is for traditional use, with no climate control and natural ventilation (see Figure 2). The greenhouse has an area of 165 m² and a width of 6 m. It is located in South Mezquitera, Juchipila, Zacatecas, Mexico (21° N, 103° W).

Nine sensors, part of the Davis Vantage Pro 2 central weather system, are installed inside and outside the greenhouse. Seven sensors outside the greenhouse measure temperature, humidity, solar radiation, barometric pressure, rainfall, wind speed, and wind direction. Inside the greenhouse, there are sensors for humidity and temperature. Together, these sensors form a single integrated monitoring system for the greenhouse.

Temperature sensor: the Davis Vantage Pro 2 central weather system contains an SHT11, a digital, low-power, fully calibrated humidity and temperature sensor integrated circuit. The sensor uses CMOSens technology, which provides high reliability and long-term stability. Each sensor's output signal passes through a 14-bit analog-to-digital converter coupled to a serial interface circuit. The sensor's operating temperature range extends up to 123 °C, and the humidity sensor has a typical accuracy of ±3%.

Data were collected from 12 July 2020, to 24 June 2021, with sampling at 5-min intervals. Information was not collected during two periods: 16 December 2020–3 January 2021, and 7 March 2021–21 March 2021, due to maintenance at the weather station and changes in the polyethylene plastic of the greenhouse, respectively. A total of 85,989 samples were obtained to train and test the prediction models.

Figure 3 shows the data collected corresponding to the greenhouse internal temperature.

During data collection, tomatoes were grown from July 2019 to January 2020, and bell peppers from February 2020 until the end of the data.

Table 1 shows the main variables obtained from the data collected.

3.3. Modeling

In the TDSP methodology, modeling is a crucial step that involves creating data features from raw data to prepare for model training. Additionally, it is necessary to compare the success metrics of different models to determine which one accurately answers the question at hand.

3.3.1. Feature Engineering

In feature engineering, it is vital to balance including informative variables and avoiding unrelated ones. Including informative variables can improve the outcome, while unrelated variables can add unnecessary noise to the model. Exploring the data is essential in selecting the appropriate models to forecast the internal temperature in the greenhouse.

Data exploration revealed that each season exhibits a distinct trend and number of samples in its variables, as shown in Table 2, which displays the greenhouse's internal temperature variations. This analysis resembles the one presented by Castañeda-Miranda et al. [44], who divided the data into seasons of the year to avoid underfitting during model training due to the varied temperature trends throughout the year.

Splitting the data by seasons allows for better analysis and modeling of internal temperature [45]. When forecasting the internal temperature using all the data, it is hard to capture the complete trend and its correlation with other variables. Therefore, it is important to understand the data's origin to create viable model candidates for accurate forecasting.

During the seasons when it is hotter, such as summer and spring, there is a stronger relationship between solar radiation and internal temperature. The correlation coefficients for these seasons are 0.75 and 0.66, respectively. In contrast, during the cooler seasons of winter and autumn, the correlation coefficients are lower at 0.24 and 0.28, respectively.
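A minimal pandas sketch of the season split and the per-season correlation analysis follows. The season boundaries are an assumption (month-based Northern Hemisphere seasons); the paper does not state the exact dates it used. The column names `solar_radiation` and `internal_temp` are hypothetical, and the data are synthetic daily values over the collection window rather than the 5-min station records.

```python
import numpy as np
import pandas as pd

# Assumption: month-based Northern Hemisphere seasons (not stated in the paper)
MONTH_TO_SEASON = {12: "winter", 1: "winter", 2: "winter",
                   3: "spring", 4: "spring", 5: "spring",
                   6: "summer", 7: "summer", 8: "summer",
                   9: "autumn", 10: "autumn", 11: "autumn"}

def add_season(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df with a 'season' column derived from its DatetimeIndex."""
    out = df.copy()
    out["season"] = [MONTH_TO_SEASON[m] for m in out.index.month]
    return out

def seasonal_correlation(df: pd.DataFrame, a: str, b: str) -> pd.Series:
    """Pearson correlation between columns a and b within each season."""
    return pd.Series({season: group[a].corr(group[b])
                      for season, group in df.groupby("season")})

# Synthetic daily data over the collection window (hypothetical column names)
idx = pd.date_range("2020-07-12", "2021-06-24", freq="D")
rng = np.random.default_rng(1)
radiation = rng.uniform(0, 1000, idx.size)
df = pd.DataFrame({"solar_radiation": radiation,
                   "internal_temp": 15 + 0.01 * radiation + rng.normal(0, 2, idx.size)},
                  index=idx)
df = add_season(df)
r = seasonal_correlation(df, "solar_radiation", "internal_temp")
print(r.round(2))
```

Each seasonal subset can then be passed to the modeling step separately, which is the split used for all models in this study.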

The internal dew point shows high correlation values in autumn, spring, and summer, whereas there is no significant correlation in winter.

The external temperature is significantly correlated with both the external and internal humidity. This is noteworthy for the initial selection of predictors in the models, because correlations between independent variables indicate multicollinearity.
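Multicollinearity of this kind is commonly quantified with the variance inflation factor, $\mathrm{VIF}_j = 1/(1 - R_j^2)$, where $R_j^2$ comes from regressing predictor j on the remaining predictors. The paper does not report VIFs; the NumPy sketch below is only an illustration on synthetic data in which an external-humidity stand-in is built to depend on external temperature. Values well above 10 are often read as a sign of strong collinearity.

```python
import numpy as np

def variance_inflation_factors(X: np.ndarray) -> np.ndarray:
    """VIF_j = 1 / (1 - R_j^2), regressing column j on the other columns plus an intercept."""
    n, p = X.shape
    vifs = np.empty(p)
    for j in range(p):
        target = X[:, j]
        A = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(A, target, rcond=None)
        resid = target - A @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        vifs[j] = 1.0 / (1.0 - r2)
    return vifs

# Synthetic predictors: humidity is built to depend on temperature, radiation is independent
rng = np.random.default_rng(2)
ext_temp = rng.normal(25, 5, 500)
ext_hum = 90 - 2 * ext_temp + rng.normal(0, 2, 500)
radiation = rng.uniform(0, 1000, 500)
X = np.column_stack([ext_temp, ext_hum, radiation])
vif = variance_inflation_factors(X)
print(dict(zip(["ext_temp", "ext_hum", "radiation"], np.round(vif, 1))))
```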

3.3.2. Model Training

This analysis used various techniques to predict and estimate the internal temperature of the controlled greenhouse. These techniques included LR, regression with Partial Least Squares (PLS), and SVM.

Linear Regression

LR is one of the most widely used techniques because it helps in understanding the data and can represent complex phenomena clearly and straightforwardly [46]. The LR model has two main structures: Simple Linear Regression (SLR) and Multiple Linear Regression (MLR).

The structure of SLR is expressed as Equation (1):

$$Y = \beta_0 + \beta_1 X + \varepsilon \qquad (1)$$

where Y is the dependent variable, called the response or output variable, and X is the independent variable. The variables $\beta_0$ and $\beta_1$ are the regression coefficients, or parameters, of the model and correspond to the intercept and slope, respectively. The variable $\varepsilon$ represents the error in predicting the response variable due to the stochastic relationship between Y and X [47].

The MLR takes k variables as predictors for the model, as expressed in Equation (2):

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_k X_k + \varepsilon \qquad (2)$$

Equation (2) includes the intercept, like the simple model. However, the variables $\beta_1, \beta_2, \ldots, \beta_k$ no longer represent the slope of a line. Instead, in this k-dimensional space, they represent the slopes of the hyperplane formed by the predictors [47].

Each coefficient $\beta$ is a parameter that needs to be estimated from the data collected in the sample. In the case of LR, the Ordinary Least Squares (OLS) method is used [48]. Over n observations, the OLS criterion for SLR can be expressed as Equation (3):

$$S(\beta_0, \beta_1) = \sum_{i=1}^{n} \left( y_i - \beta_0 - \beta_1 x_i \right)^2 \qquad (3)$$

Equation (3) aims to determine the regression coefficients $\hat{\beta}_0$ and $\hat{\beta}_1$ that minimize S. The same criterion can also be applied to the MLR to evaluate the contribution of each variable to the model [48].
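The OLS criterion has the closed-form solution $\hat{\beta} = (A^{\top} A)^{-1} A^{\top} y$, where A is the design matrix with a leading column of ones. The sketch below, on synthetic data, solves it with `numpy.linalg.lstsq` (numerically more stable than forming the inverse) and checks the result against scikit-learn's `LinearRegression`; it illustrates the estimator, not the paper's fitted model.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data generated from a known MLR model with k = 3 predictors
rng = np.random.default_rng(3)
n, k = 200, 3
X = rng.normal(size=(n, k))
true_beta = np.array([1.5, -2.0, 0.5])
y = 4.0 + X @ true_beta + rng.normal(0, 0.1, n)

# OLS: minimize S = sum_i (y_i - beta_0 - sum_j beta_j x_ij)^2 over the design [1, X]
A = np.column_stack([np.ones(n), X])
beta_hat, *_ = np.linalg.lstsq(A, y, rcond=None)

model = LinearRegression().fit(X, y)
print("intercept:", round(beta_hat[0], 3), "vs", round(model.intercept_, 3))
print("slopes:   ", np.round(beta_hat[1:], 3), "vs", np.round(model.coef_, 3))
```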

Partial Least Squares

The PLS analysis technique is used to relate input and output variables. It was initially designed to deal with multiple regression problems with few observations, many missing values, and multicollinearity. The main objective of PLS is to predict the dependent variable from the independent variables and to determine the structure between the two. This regression method allows the identification of underlying factors, which are linear combinations of the independent variables that best explain the dependent variable [49].

PLS models the correlation between the X and Y variables by using a specific part of the correlation or covariance matrix. It measures the covariance between multiple variables and creates a new set of variables through optimized linear combinations, aiming to achieve maximum covariance with the fewest dimensions. This reduces the number of input variables by using the covariance of the input data and the information in the dependent variable [50].

The method uses the matrix of variables X and linear combinations of its columns together with the variable Y. Assuming that X is an n × p matrix and Y is an n × q matrix, the technique successively extracts factors from both X and Y such that the covariance between the extracted factors is maximal. The technique can also work with multiple response variables, but for this particular model, we assume only one response variable: Y is n × 1, and X is n × p.

This method seeks a linear decomposition of X and Y that satisfies Equation (4):

$$X = T P^{\top} + E, \qquad Y = U Q^{\top} + F \qquad (4)$$

where T and U are the score matrices of X and Y, P and Q are the corresponding loading matrices, and E and F are the residual matrices.

The linear decomposition is obtained by an iterative algorithm that extracts the score vectors $t$ and $u$ (the columns of T and U) so that the covariance between them is maximal. These scores are extracted successively, and their number (r) depends on the rank of X and Y.

Each $t$ is a linear combination of X. Specifically, the first score is $t = Xw$, where $w$ is the eigenvector corresponding to the largest eigenvalue of $X^{\top} Y Y^{\top} X$. Similarly, the first $u$ is $u = Yc$, where $c$ is the eigenvector corresponding to the largest eigenvalue of $Y^{\top} X X^{\top} Y$. Here, $X^{\top} Y$ represents the covariance between X and Y (for column-centered data, up to a factor of $1/(n-1)$).

Once the first factor is extracted, X and Y are deflated as $X \leftarrow X - t p^{\top}$ and $Y \leftarrow Y - t q^{\top}$, where $p = X^{\top} t / (t^{\top} t)$ and $q = Y^{\top} t / (t^{\top} t)$ are the loadings. This process is repeated until all possible latent factors t and u are obtained and X is reduced to a null matrix. The number of factors obtained depends on the rank of X, typically ranging from 3 to 7 factors containing 99% of the variance.

PLS regression enables the creation of a model with several dependent and independent variables while accounting for multicollinearity. The model generates new uncorrelated factors, which yields a robust set even with missing or noisy data. Including the output variable when creating the X factors makes the prediction more precise, although the number of factors must still be chosen carefully to avoid overfitting [50].

Support Vector Machine

The SVM algorithm was first designed for classification, detecting similarities by creating a decision boundary defined by support vectors. Because training an SVM is a convex optimization problem, it has a unique global optimum and provides consistent, well-balanced results. This approach seeks an optimal hyperplane that divides observations into classes based on patterns in their information.

SVM has advanced significantly through convex optimization, statistical learning theory, and kernel functions. Building the hyperplane that separates the data requires evaluating dot products between pairs of training data vectors. In a Hilbert space, the dot product has a kernel representation, so its evaluation does not depend directly on the dimension of that space.

There are two main types of SVM: Support Vector Classification (SVC) and Support Vector Regression (SVR). In this analysis, we use the SVR method [51,52,53].

SVR transforms the original data vector x into a higher-dimensional feature space F using a nonlinear transformation $\phi$. Linear regression is then applied in that space, as shown in Equation (8):

$$f(x) = \langle w, \phi(x) \rangle + b \qquad (8)$$

where b is the threshold value. The resulting linear regression in the high-dimensional feature space corresponds to a nonlinear regression in the low-dimensional input space, without explicitly computing the dot product $\langle w, \phi(x) \rangle$ in the high-dimensional space. Since $\phi$ is a fixed map, w can be obtained from the data by minimizing the sum of the empirical risk $R_{\mathrm{emp}}[f]$ and a complexity term $\lVert w \rVert^2$ that enforces flatness in the feature space. This becomes a constrained optimization problem, which can be solved using Lagrange multipliers, as indicated in Equation (9):

$$R_{\mathrm{reg}}[f] = R_{\mathrm{emp}}[f] + \lambda \lVert w \rVert^2 = \sum_{i=1}^{l} C\big(f(x_i) - y_i\big) + \lambda \lVert w \rVert^2 \qquad (9)$$

where l is the number of examples, $\lambda$ is a regularization constant, and C is a cost function. The cost function C is expressed in Equations (10)–(12).

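- (1) Linear ε-insensitive cost function ($L_\varepsilon$):

$$C(e) = \max\big(0, \lvert e \rvert - \varepsilon\big) \qquad (10)$$

- (2) Quadratic cost function:

$$C(e) = e^{2} \qquad (11)$$

- (3) Huber cost function:

$$C(e) = \begin{cases} \tfrac{1}{2} e^{2}, & \lvert e \rvert \le \mu \\ \mu \lvert e \rvert - \tfrac{1}{2} \mu^{2}, & \lvert e \rvert > \mu \end{cases} \qquad (12)$$

where $e = f(x_i) - y_i$ is the prediction error, $\varepsilon$ is the width of the insensitive zone, and $\mu$ is the Huber threshold.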

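$R_{\mathrm{reg}}$ in Equation (9) must be minimized, and its minimizer can be written in terms of the data points as Equation (13):

$$w = \sum_{i=1}^{l} \left( \alpha_i - \alpha_i^{*} \right) \phi(x_i) \qquad (13)$$

where $\alpha_i$ and $\alpha_i^{*}$ are the solution that minimizes $R_{\mathrm{reg}}$. In the optimization problem for $\alpha_i$ and $\alpha_i^{*}$, the data points $x_i$ with non-zero values of $\alpha_i$ or $\alpha_i^{*}$ are called support vectors. Substituting Equation (13) into Equation (8), the regression function can be rewritten as Equation (14):

$$f(x) = \sum_{i=1}^{l} \left( \alpha_i - \alpha_i^{*} \right) k(x_i, x) + b \qquad (14)$$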

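where $k(x_i, x)$ is called the kernel function; it is a symmetric function that satisfies the Mercer conditions and represents a dot product in a feature space. To obtain the value of b, a point on the margin can be substituted into Equation (14). Taking the average over all points on the margin is typically recommended, as shown in Equation (15):

$$b = \frac{1}{\lvert S \rvert} \sum_{i \in S} \left( y_i - \sum_{j=1}^{l} \left( \alpha_j - \alpha_j^{*} \right) k(x_j, x_i) - \delta_i \right) \qquad (15)$$

where S is the set of points on the margin and $\delta_i$ is the prediction error at $x_i$: for the linear ε-insensitive cost function, $\delta_i = \varepsilon \, \operatorname{sign}(\alpha_i - \alpha_i^{*})$, with a corresponding expression for the Huber cost function.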
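Equation (14) can be verified with scikit-learn's `SVR`, which implements the ε-insensitive formulation: its `dual_coef_` attribute holds $\alpha_i - \alpha_i^{*}$ for the support vectors, and `intercept_` holds b. The synthetic data and the values of `C`, `epsilon`, and `gamma` below are arbitrary choices for illustration. Note that scikit-learn's `C` is a penalty weight on the errors (its objective corresponds to $\lambda = 1/(2C)$ in Equation (9)) and is not the cost function C.

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel
from sklearn.svm import SVR

rng = np.random.default_rng(5)
X = rng.uniform(-3, 3, size=(120, 2))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] + rng.normal(0, 0.1, 120)

gamma = 0.5   # fixed numeric gamma so the kernel can be recomputed exactly
svr = SVR(kernel="rbf", C=10.0, epsilon=0.1, gamma=gamma).fit(X, y)

# Equation (14): f(x) = sum_i (alpha_i - alpha_i^*) k(x_i, x) + b, summed over support vectors
X_new = rng.uniform(-3, 3, size=(5, 2))
K = rbf_kernel(svr.support_vectors_, X_new, gamma=gamma)   # shape (n_SV, 5)
f_manual = (svr.dual_coef_ @ K + svr.intercept_).ravel()    # dual_coef_ has shape (1, n_SV)

print("support vectors:", len(svr.support_), "of", len(X))
print("matches predict():", np.allclose(f_manual, svr.predict(X_new)))
```

Only the support vectors enter the sum, so points that fall inside the ε-tube do not affect the prediction.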

The kernel strategy converts the input data into the form required for processing, enabling the SVM algorithm to map data from a lower to a higher dimension. The kernel function, defined by a dot product in the Hilbert space, is expressed in Equation (16):

$$k(x, x') = \langle \phi(x), \phi(x') \rangle \qquad (16)$$

In Equation (16), the kernel is equivalent to mapping the data into a feature space V, allowing the SVM algorithm to solve problems where the data are not linearly separable. The quadratic programming problem required to find the optimal hyperplane is guaranteed to be convex when the kernel function satisfies the Mercer conditions; thus, the kernel must satisfy Equation (17):

$$\iint k(x, x') \, g(x) \, g(x') \, dx \, dx' \ge 0 \qquad (17)$$

for every square-integrable function g.

If k satisfies Equation (17), then the matrix M, where

$$M_{ij} = k(x_i, x_j) \qquad (18)$$

is:

- (1) Symmetric ($M = M^{\top}$), and

- (2) Positive Semi-Definite (PSD).

A matrix M is positive semi-definite if $v^{\top} M v \ge 0$ for every real vector v; in other words, all its eigenvalues are non-negative.
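The Mercer condition can be checked empirically on a finite sample: the Gram matrix of a valid kernel must be symmetric with non-negative eigenvalues. The sketch below does this for an RBF kernel (via scikit-learn's `rbf_kernel`) with an arbitrary `gamma`, allowing a small tolerance for floating-point round-off, and contrasts it with the negative squared Euclidean distance, which does not satisfy the condition.

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel

rng = np.random.default_rng(6)
X = rng.normal(size=(50, 3))

# Gram matrix M_ij = k(x_i, x_j) for an RBF kernel
M = rbf_kernel(X, X, gamma=0.7)
is_symmetric = np.allclose(M, M.T)
is_psd = np.linalg.eigvalsh(M).min() >= -1e-10   # tolerance for round-off

# Counter-example: negative squared Euclidean distance is not a Mercer kernel
D = -np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
d_min_eig = np.linalg.eigvalsh(D).min()

print("RBF Gram symmetric:", is_symmetric, " PSD:", is_psd)
print("smallest eigenvalue of -||x - x'||^2 matrix:", round(d_min_eig, 3))
```

The counter-example must fail: its diagonal is zero, so its eigenvalues sum to zero, and a non-zero symmetric matrix with zero trace always has a negative eigenvalue.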

Choosing the appropriate kernel is vital and non-trivial for non-linear data, because the kernel determines the model's effectiveness. The kernel therefore becomes one more parameter to consider in the convex optimization problem. An optimal kernel function can be chosen from a fixed set of kernels in a statistically rigorous way using cross-validation.

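Some commonly used kernel functions are shown in Equations (19)–(22).

- (1) Linear kernel:

$$k(x, x') = \langle x, x' \rangle \qquad (19)$$

- (2) Polynomial kernel:

$$k(x, x') = \left( \gamma \langle x, x' \rangle + r \right)^{d} \qquad (20)$$

- (3) Radial Basis Function (RBF) kernel:

$$k(x, x') = \exp\left( -\gamma \lVert x - x' \rVert^{2} \right) \qquad (21)$$

- (4) Sigmoid kernel:

$$k(x, x') = \tanh\left( \gamma \langle x, x' \rangle + r \right) \qquad (22)$$

where $\gamma > 0$, r, and d are kernel hyperparameters.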
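Assuming the four kernels are the linear, polynomial, RBF, and sigmoid kernels that are commonly listed together (as in scikit-learn), they can be written directly and compared with scikit-learn's pairwise kernel functions, whose `gamma`, `coef0`, and `degree` arguments correspond to γ, r, and d. The parameter values below are arbitrary. Keep in mind that the sigmoid kernel satisfies the Mercer conditions only for some parameter values.

```python
import numpy as np
from sklearn.metrics.pairwise import (linear_kernel, polynomial_kernel,
                                      rbf_kernel, sigmoid_kernel)

GAMMA, R, D = 0.5, 1.0, 3   # arbitrary kernel hyperparameters (gamma, r, d)

def k_linear(x, z):
    return x @ z

def k_poly(x, z, gamma=GAMMA, r=R, d=D):
    return (gamma * (x @ z) + r) ** d

def k_rbf(x, z, gamma=GAMMA):
    return np.exp(-gamma * np.sum((x - z) ** 2))

def k_sigmoid(x, z, gamma=GAMMA, r=R):
    return np.tanh(gamma * (x @ z) + r)

rng = np.random.default_rng(7)
x, z = rng.normal(size=3), rng.normal(size=3)
X, Z = x[None, :], z[None, :]   # scikit-learn expects 2-D arrays

checks = {
    "linear": np.isclose(k_linear(x, z), linear_kernel(X, Z)[0, 0]),
    "poly": np.isclose(k_poly(x, z), polynomial_kernel(X, Z, degree=D, gamma=GAMMA, coef0=R)[0, 0]),
    "rbf": np.isclose(k_rbf(x, z), rbf_kernel(X, Z, gamma=GAMMA)[0, 0]),
    "sigmoid": np.isclose(k_sigmoid(x, z), sigmoid_kernel(X, Z, gamma=GAMMA, coef0=R)[0, 0]),
}
print(checks)
```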

Hyperparameters

Adjusting the hyperparameters is crucial for creating an accurate prediction model; if they are not adjusted correctly, the model can produce sub-optimal results with larger errors. Hyperparameters can be selected by using the default values provided by the software or by configuring them manually. Additionally, data-dependent hyperparameter optimization strategies can be employed. These are second-level (outer-loop) optimization procedures that minimize the expected error of the model by searching over candidate hyperparameter configurations. Grid search and random search are examples of such strategies, in which a list or distribution of candidate parameters is specified. Bayesian optimization is a more complex, iterative strategy [54].

Bayesian optimization was used to obtain the best set of hyperparameters for the model, since it can minimize non-convex objective functions that other methods handle poorly. Random search and grid search were used initially during data analysis; for the forecast, however, Bayesian optimization was used to tune the best candidate because of its reported superiority over those methods [55].

As in other types of optimization, the Bayesian method seeks the minimum of a function $f(x)$ on some bounded set X, taken to be a subset of $\mathbb{R}^{D}$. Unlike other methods, Bayesian optimization builds a probabilistic model of $f(x)$ and uses it to decide where in X to evaluate the function next, while accounting for uncertainty. As a Bayesian method, it uses all available information from previous evaluations to find the minima of non-convex functions with relatively few evaluations. However, this comes at the cost of additional computation to determine the next point to evaluate [55].
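The loop described above can be sketched from scratch. This is a minimal illustration, not the tool used in the study: it assumes a Gaussian-process surrogate (scikit-learn's `GaussianProcessRegressor` with a Matérn kernel), the expected-improvement acquisition function, a grid of candidates over a bounded one-dimensional set, and a toy objective standing in for a model's validation error.

```python
import numpy as np
from scipy.stats import norm
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern

def objective(x):
    """Toy stand-in for a validation error as a function of one hyperparameter."""
    return (x - 2.0) ** 2 + 0.3 * np.sin(5.0 * x)

def expected_improvement(mu, sigma, best, xi=0.01):
    """Expected improvement for minimization under a Gaussian predictive N(mu, sigma^2)."""
    sigma = np.maximum(sigma, 1e-12)        # avoid division by zero at observed points
    improvement = best - mu - xi
    z = improvement / sigma
    return improvement * norm.cdf(z) + sigma * norm.pdf(z)

rng = np.random.default_rng(8)
candidates = np.linspace(0.0, 5.0, 501).reshape(-1, 1)   # bounded search set X
x_obs = rng.uniform(0.0, 5.0, size=(3, 1))               # a few initial random evaluations
y_obs = objective(x_obs).ravel()

gp = GaussianProcessRegressor(kernel=Matern(nu=2.5), alpha=1e-6,
                              normalize_y=True, random_state=0)
for _ in range(20):
    gp.fit(x_obs, y_obs)                                  # probabilistic model of f
    mu, sigma = gp.predict(candidates, return_std=True)
    ei = expected_improvement(mu, sigma, y_obs.min())
    x_next = candidates[np.argmax(ei)].reshape(1, 1)      # most promising next point
    x_obs = np.vstack([x_obs, x_next])
    y_obs = np.append(y_obs, objective(x_next).ravel())

best_x = x_obs[np.argmin(y_obs), 0]
print(f"best x = {best_x:.2f}, f = {y_obs.min():.3f}, evaluations = {len(y_obs)}")
```

In practice, the objective would be the cross-validated error of a model evaluated at a hyperparameter setting, and X would span the hyperparameters being tuned (for SVR, for example, the penalty and kernel parameters).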

3.3.3. Model Evaluation

Algorithms that predict continuous values rest on a theoretical basis that covers several aspects of the possible connections between the dependent and independent data. It is crucial to select the right metric to evaluate the model, as it helps to explain the relationship and the primary objective of the phenomenon.

In this study, four metrics are used to evaluate the greenhouse internal temperature forecast: R², RMSE, MAE, and MAPE [56]:

$$R^{2} = 1 - \frac{SS_{\mathrm{res}}}{SS_{\mathrm{tot}}} = 1 - \frac{\sum_{i=1}^{n} e_i^{2}}{\sum_{i=1}^{n} \left( y_i - \bar{y} \right)^{2}} \qquad (23)$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} e_i^{2}} \qquad (24)$$

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \lvert e_i \rvert \qquad (25)$$

$$\mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left\lvert \frac{e_i}{y_i} \right\rvert \qquad (26)$$

where n is the number of observations, $e_i = y_i - \hat{y}_i$ is the error between the actual value $y_i$ and the forecasted value $\hat{y}_i$, and $\bar{y}$ is the mean of the actual values.

R² quantifies the proportion of the variance in the dependent variable that is explained by the independent variables, as expressed in Equation (23), where $SS_{\mathrm{res}}$ is the residual sum of squares and $SS_{\mathrm{tot}}$ is the total sum of squares [56].

The RMSE is the square root of the MSE, which expresses the error in the same units as the measured variable. The MSE evaluates how well a model fits the data by measuring the average squared error. Because errors are squared before averaging, the RMSE gives more weight to large errors, so an incorrect prediction has a greater impact on the overall error. This is expressed in Equation (24).

MAE evaluates the average absolute distance between the predictions and the actual points. Because it weights all errors linearly, MAE does not heavily penalize outliers and provides a general performance measure in the same units as the data. This is expressed in Equation (25).

MAPE is used when relative errors matter more than absolute values. This metric is biased toward low forecasts, so it is not suitable for evaluating tasks where errors of large magnitude are expected. This is expressed in Equation (26).
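The four metrics translate directly into NumPy. The sketch below implements them with $e_i = y_i - \hat{y}_i$ on a small set of illustrative temperatures (not data from the study) and checks them against `sklearn.metrics`; scikit-learn's `mean_absolute_percentage_error` returns a fraction, so it is multiplied by 100. For these values, MAE = 0.9 and R² = 0.9375.

```python
import numpy as np
from sklearn.metrics import (mean_absolute_error, mean_absolute_percentage_error,
                             mean_squared_error, r2_score)

def forecast_metrics(y_true, y_pred):
    """R^2, RMSE, MAE, and MAPE (in %) computed from the errors e_i = y_i - yhat_i."""
    e = y_true - y_pred
    ss_res = np.sum(e ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "RMSE": np.sqrt(np.mean(e ** 2)),
        "MAE": np.mean(np.abs(e)),
        "MAPE": 100.0 * np.mean(np.abs(e / y_true)),      # undefined if any y_true == 0
    }

y_true = np.array([20.0, 22.0, 25.0, 30.0, 28.0])   # illustrative temperatures (deg C)
y_pred = np.array([21.0, 21.5, 24.0, 31.0, 27.0])
m = forecast_metrics(y_true, y_pred)
print({k: round(float(v), 4) for k, v in m.items()})

# Cross-check with scikit-learn (its MAPE is a fraction, not a percentage)
assert np.isclose(m["R2"], r2_score(y_true, y_pred))
assert np.isclose(m["RMSE"], np.sqrt(mean_squared_error(y_true, y_pred)))
assert np.isclose(m["MAE"], mean_absolute_error(y_true, y_pred))
assert np.isclose(m["MAPE"], 100.0 * mean_absolute_percentage_error(y_true, y_pred))
```

Because temperatures in °C can be zero or close to zero, MAPE can become undefined or very large for cold readings, which is worth keeping in mind when comparing it across seasons.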

3.4. Development

The development stage executes the previous steps of the TDSP methodology. Once the algorithms and evaluation metrics were defined, the model experiments were carried out. This study used the following models to forecast the internal temperature:

MLR model using OLS.

Multiple regression model using PLS: In the PLS analysis, the elbow method was used to determine the best number of components using the MSE metric.

SVR model using polynomial kernel.

SVR model using RBF kernel: polynomial and RBF kernels were chosen because their non-linear mapping provides an analysis that differs from the MLR model.

For all models, the data set was divided by season and included five independent variables: external temperature, external humidity, internal humidity, internal dew point, and solar radiation. The dependent variable is the internal temperature. The data were split into 80% for training and 20% for testing. In addition, k-fold cross-validation with k = 10, the value that worked best for our models, was used to assess model performance; it also helped detect overfitting, which indicates that a model does not generalize patterns and similarities to new input data.
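The evaluation protocol described above (a separate data set per season, an 80/20 hold-out split, and 10-fold cross-validation) might look like the following sketch. The column names, season labels, and synthetic data are hypothetical, and shuffled folds are an assumption; for strongly autocorrelated hourly records, scikit-learn's `TimeSeriesSplit` is a more conservative choice.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import KFold, cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# Hypothetical column names for the five predictors and the target
FEATURES = ["ext_temp", "ext_hum", "int_hum", "int_dew_point", "solar_rad"]
TARGET = "int_temp"

# Synthetic records standing in for the weather-station export
rng = np.random.default_rng(42)
n = 400
df = pd.DataFrame(rng.normal(size=(n, len(FEATURES))), columns=FEATURES)
df[TARGET] = 22 + 1.2 * df["ext_temp"] + 0.8 * df["solar_rad"] + rng.normal(scale=0.3, size=n)
df["season"] = rng.choice(["winter", "spring", "summer", "autumn"], size=n)


def evaluate_season(data, model, k=10, seed=0):
    """80/20 hold-out split, then k-fold CV on the training part (MAE in target units)."""
    X, y = data[FEATURES].to_numpy(), data[TARGET].to_numpy()
    X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=seed)
    folds = KFold(n_splits=k, shuffle=True, random_state=seed)
    cv_mae = -cross_val_score(model, X_tr, y_tr, cv=folds,
                              scoring="neg_mean_absolute_error").mean()
    model.fit(X_tr, y_tr)
    train_mae = np.mean(np.abs(y_tr - model.predict(X_tr)))
    test_mae = np.mean(np.abs(y_te - model.predict(X_te)))
    return train_mae, cv_mae, test_mae


results = {}
for season, group in df.groupby("season"):
    model = make_pipeline(StandardScaler(), SVR(kernel="rbf"))
    results[season] = evaluate_season(group, model)
    train_mae, cv_mae, test_mae = results[season]
    print(f"{season}: train {train_mae:.3f}, CV {cv_mae:.3f}, test {test_mae:.3f}")
```

A training error much lower than the cross-validation error is the usual sign of the overfitting mentioned above.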

We used the Visual Studio Code editor (version 1.80.1) and the Python programming language (version 3.9.5) to run the data-modeling algorithms. The data were extracted from the central meteorological system and imported with Pandas for manipulation and analysis. NumPy was used for vectorized mathematical operations to improve computational performance. We also used the Matplotlib and Seaborn libraries to plot the time series and scikit-learn (Sklearn) to implement the algorithms for forecasting the internal temperature.

5. Discussion

The combination of supervised learning, big data technologies, and high-performance computing has opened up new possibilities for deciphering, measuring, and comprehending data-heavy operations in agricultural environments.

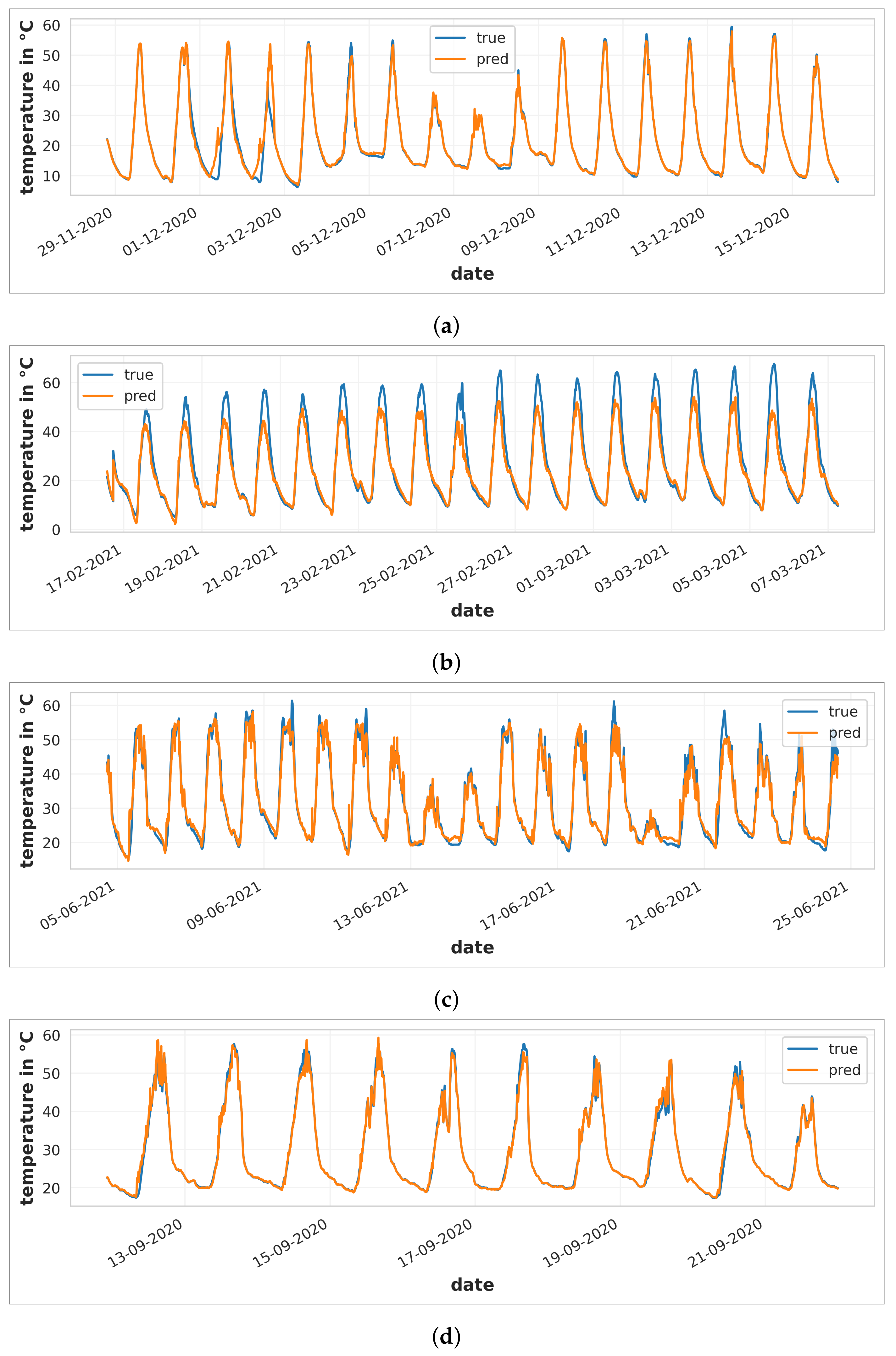

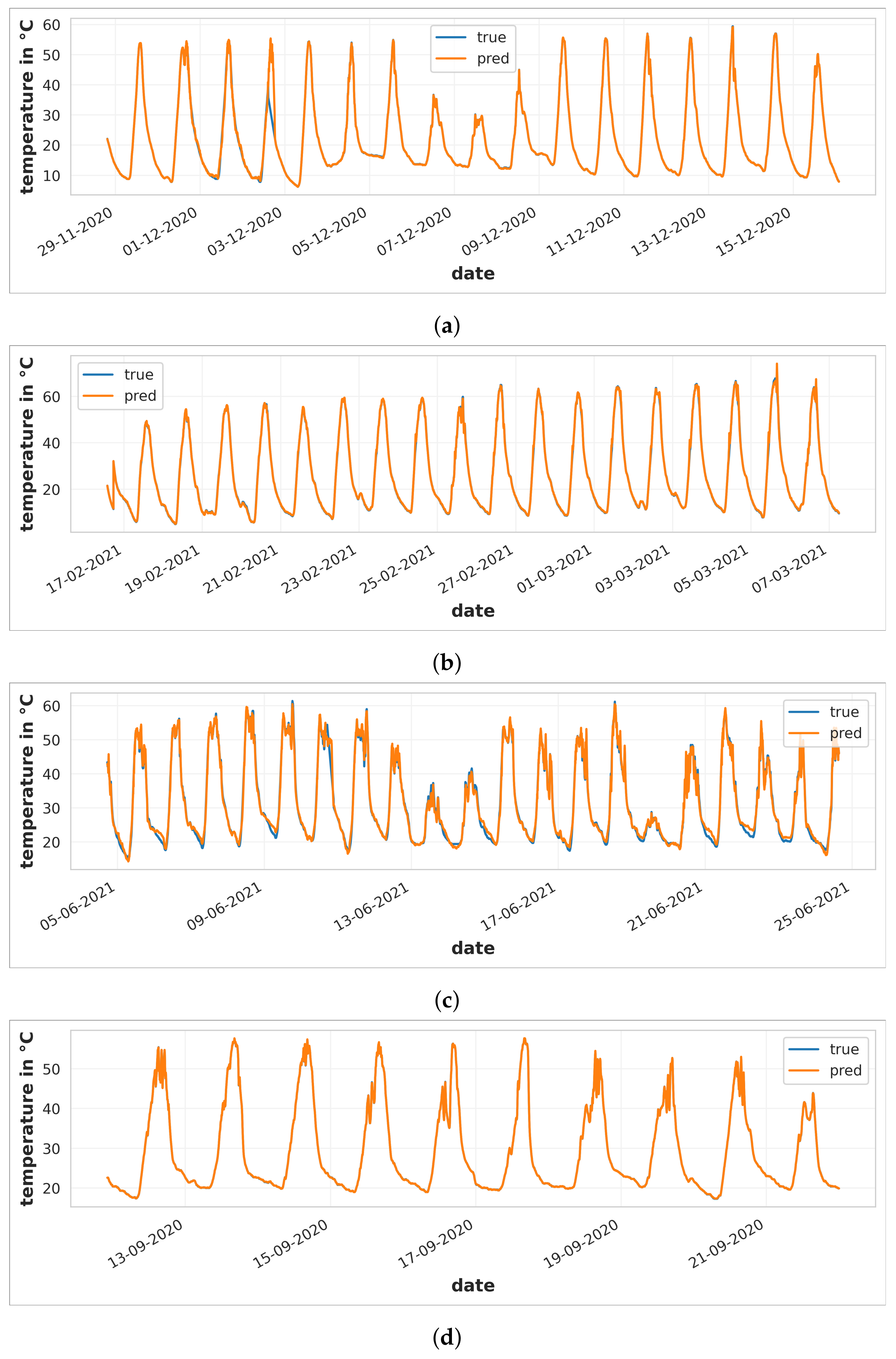

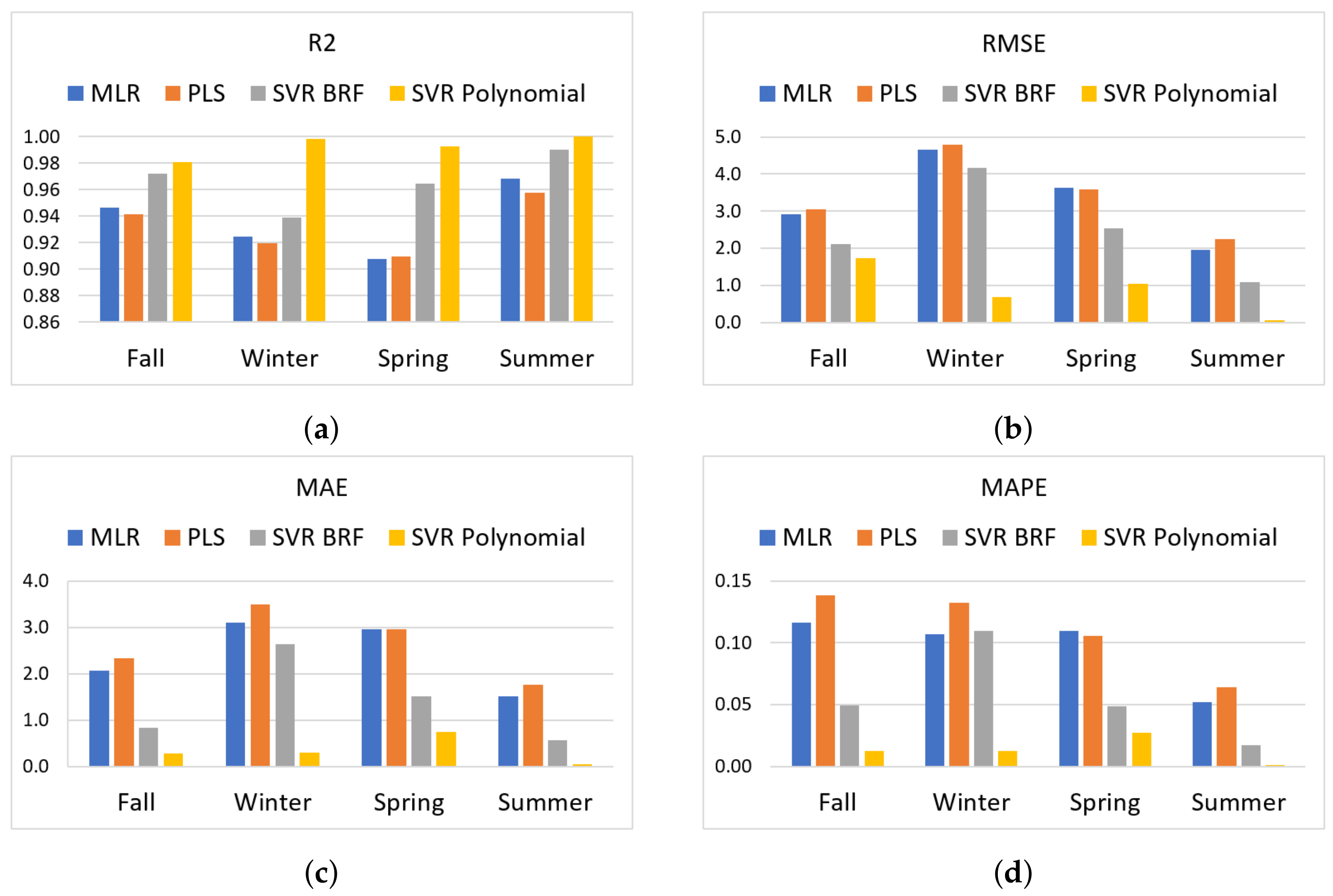

This study analyzed different supervised learning techniques, specifically LR and SVR, to predict the internal temperature of a greenhouse. The main objective was to identify the best model based on an acceptable temperature range with a 2 °C hysteresis. The MAE metric was used to measure the accuracy of the models as the average distance between the predicted values and the actual temperature points.

The results show that the MAE for both LR models exceeded 2 °C, with the multiple regression algorithm using PLS performing worst in winter, with an MAE of 3.49 °C. In contrast, both SVR models remained below 2 °C, except for SVR RBF in winter, which had an MAE of 2.64 °C. Both the LR and SVR RBF models struggled to adapt to temperature peaks, resulting in higher MAE values in winter. The SVR Polynomial model, on the other hand, performed best in all seasons, with the lowest MAE values. It showed great adaptability over time and the ability to model high-dimensional, complex data, making it an excellent model for greenhouse temperature forecasting, especially during summer, with an MAE of 0.04 °C.
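The acceptance criterion used in this comparison can be written as a simple check. The sketch below uses only the three MAE values quoted in the paragraph above and treats an MAE exactly equal to the 2 °C band as acceptable, which is an assumption; `within_band` is a hypothetical helper.

```python
# MAE values (degrees Celsius) quoted in the discussion above; other cases omitted
REPORTED_MAE = {
    ("Multiple regression (PLS)", "winter"): 3.49,
    ("SVR RBF", "winter"): 2.64,
    ("SVR polynomial", "summer"): 0.04,
}
BAND_C = 2.0  # acceptable error band: the 2 °C hysteresis


def within_band(mae, band=BAND_C):
    """Accept a model/season pair when its MAE does not exceed the band (assumed inclusive)."""
    return mae <= band


for (model, season), mae in REPORTED_MAE.items():
    verdict = "accepted" if within_band(mae) else "rejected"
    print(f"{model}, {season}: MAE {mae:.2f} °C -> {verdict}")
```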

Based on our analysis, the SVR model with the polynomial kernel had the highest forecast accuracy during summer among all the algorithms tested. This model's performance is comparable to that of existing models, as shown in Table 7. Some studies reported inferior results, such as Zou et al. [32], using SVM, and Thangavel et al. [34], using SVR with fuzzy logic. Ge et al. [35] compared various models, and their SVR model had lower precision. Fan et al. [33] obtained similar results using SVR RBF but evaluated the error only with the MSE metric; hence, more information is needed to confirm that model's accuracy.

6. Conclusions

The smart farm is an integral part of the new agricultural revolution towards environmentally friendly practices, with science and technology at its center. Greenhouses are used to optimize crop growth by monitoring variables such as temperature, humidity, ventilation, solar radiation, and wind speed. However, modeling the internal environment of a greenhouse is challenging because of its dynamic nature and its dependence on external conditions. Many existing methods for tracking temperature changes within greenhouses focus on monitoring current conditions rather than forecasting future ones. As a result, greenhouse managers are limited to corrective rather than preventive measures, which may only partially meet their needs. Forecasts from AI algorithms are increasingly being used to analyze greenhouse behavior and to take preventive actions by adjusting heating, ventilation, and fertilization systems, helping to ensure the best possible growth, upkeep, and management of crops within the greenhouse.

This research focused on predicting the temperature inside a greenhouse. Over almost a year, the Davis Vantage Pro 2 central meteorological system gathered data on internal factors (such as temperature, humidity, and dew point) and external factors (such as temperature, humidity, and solar radiation). Several experiments were conducted to predict the temperature using four models, each adapted to a specific season of the year: MLR using OLS, multiple regression using PLS, and SVR with RBF and polynomial kernels. The LR models were used to analyze the correlation between the input variables and the internal temperature of the greenhouse; however, these models can have difficulty adjusting to temperature spikes. The more complex SVR models adapt better to different factors, especially under extreme temperature conditions. The SVR polynomial model had the highest forecast accuracy of all the algorithms.

The greenhouse control system will utilize these models to give accurate instructions to the different actuators and anticipate upcoming needs, thereby maintaining the optimal internal conditions for the crops.