The methodology used in this paper was divided into two parts. The first part focused on the use of advanced statistical models, while the second part provides the comparison and validation of such results using physical-based models.

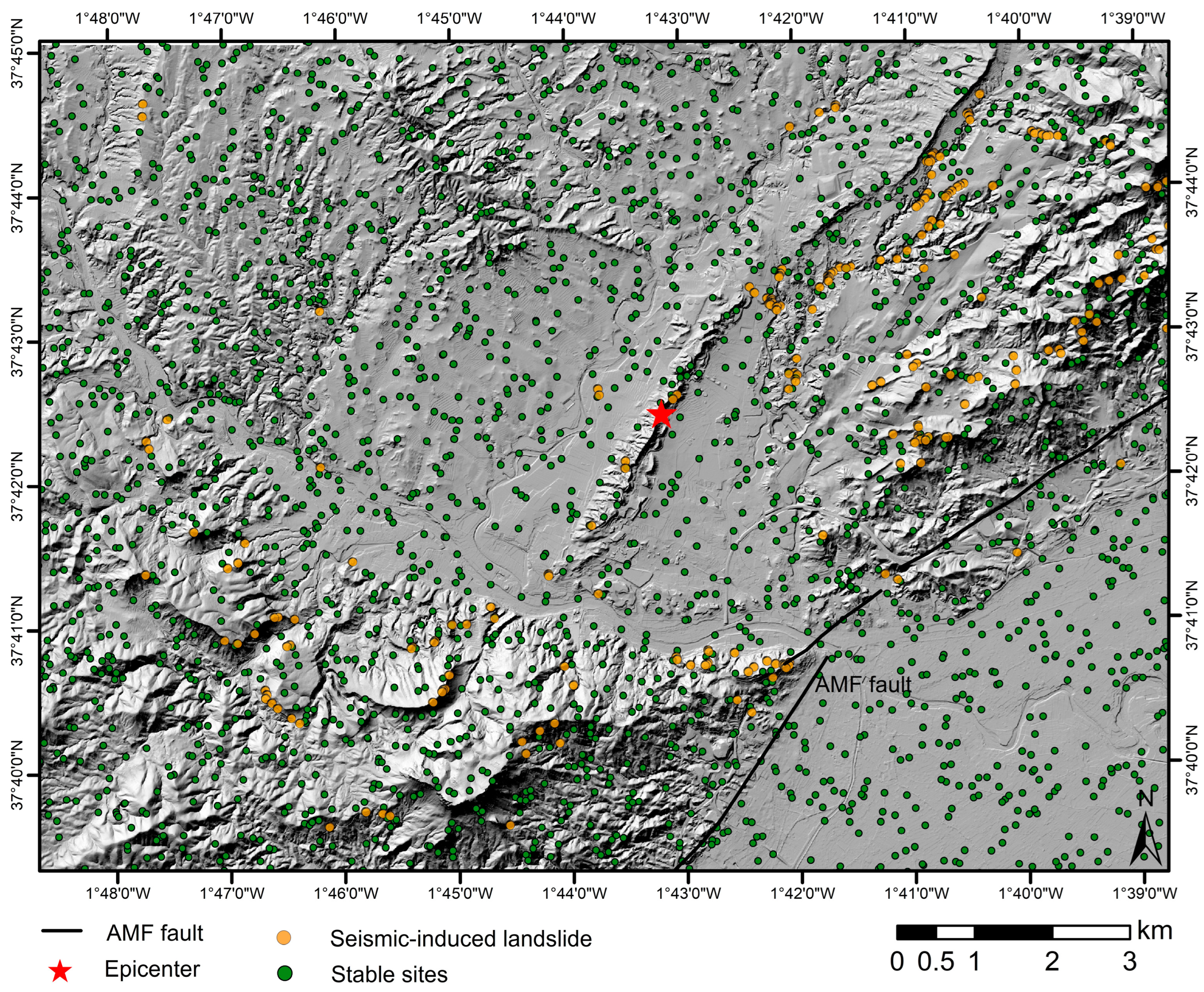

In the first part, after data collection, a selection of relevant variables for landslide control was conducted, considering parameters associated with seismic events, instability location, and terrain characteristics. Data were split into training and testing sets, and additional variables were created to balance the samples and ensure the representativeness of the features in both sets. Various feature selection algorithms were used, machine learning models were applied, and their results were analyzed.

3.1. Landslide Control Parameters

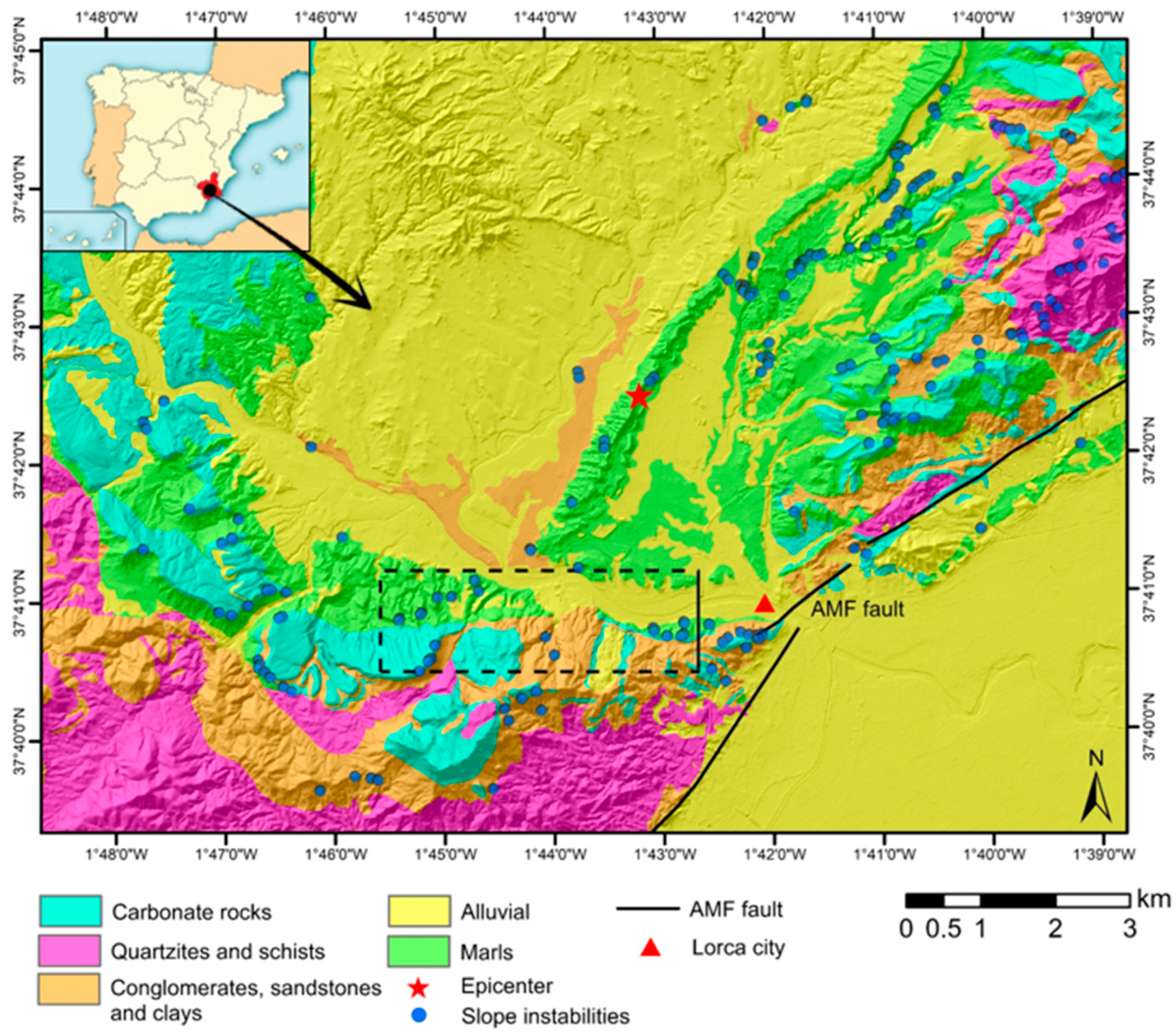

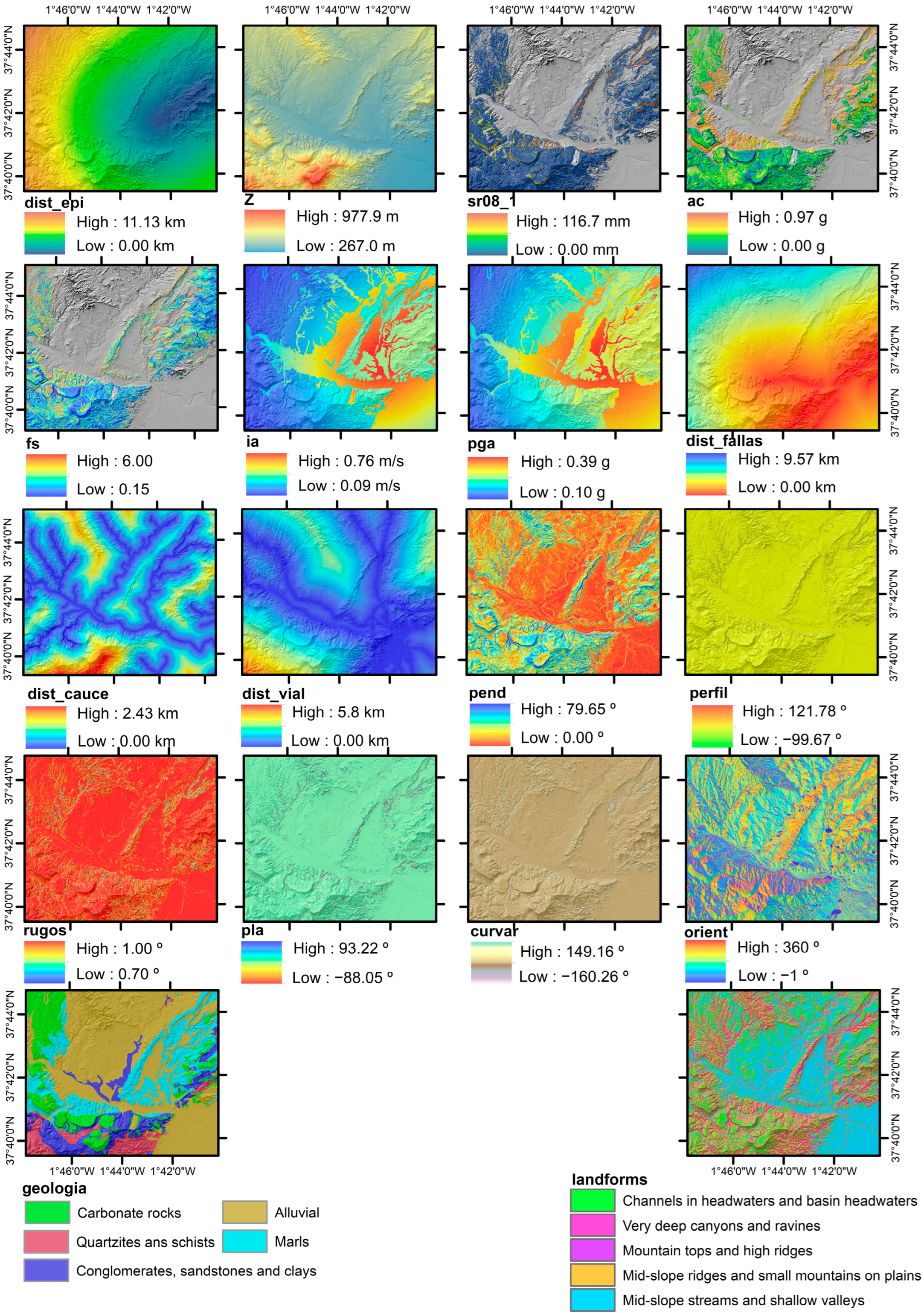

In this study, the existing landslide inventories were merged. This resulted in a total of 257 slope instabilities with different volumes, ranging from one to hundreds of cubic meters. Each mapped slope instability was used to obtain relevant information for landslide control. The parameters used to control the landslide occurrence are shown in

Table 1 and

Figure 2. They are grouped into eighteen factors, which provide information about the terrain, location, and seismic parameters that contributed to the landslide triggering.

Factors can be classified into two main categories: categorical and numerical variables. Categorical variables are used to represent different categories or groups of values, whereas numerical variables are used to quantitatively measure a property, either in a discrete or continuous form. Categorical variables represent qualitative information, such as lithology or terrain morphology, whereas numerical variables provide quantitative information, such as slope or roughness.

It should be noted that some dummy variables were included as categorical variables. These variables are represented by binary numeric values, typically 0 and 1, and are used to encode categorical features in machine learning models. By converting a categorical variable into multiple dummy variables, a numerical representation that indicates the presence or absence of a specific category is created. Dummy variables are particularly useful in classification and regression algorithms where categorical features can have a significant impact on data prediction and analysis.

3.1.1. Parameters Related to the Terrain

Lithological units significantly influence the location of landslide phenomena [

31], and lithological characterization is one of the most relevant aspects in compiling an inventory of terrain-associated control parameters. The lithological units used in the study were obtained from the geological mapping carried out by the Spanish Geological Survey [

32].

Other parameters in the control of landslide triggering include geomorphological factors, such as terrain morphology, slope, curvature, and roughness (

Table 1).

Information about the overall curvature of the terrain in a specific direction is obtained using three measures of curvature as follows: total, perpendicular, and transverse concavity/convexity. The total concavity/convexity helps identify areas prone to landslides. Perpendicular concavity/convexity describes the curvature in relation to a vertical line, helping to understand how local terrain features influence slope stability. Transverse concavity/convexity refers to the lateral curvature, allowing the evaluation of how terrain geometry affects the propagation and direction of landslides.

Roughness in landslide analysis refers to variations in the slope. It provides key information about local topographic characteristics and how changes in the slope can affect slope stability and its propagation. Areas with higher roughness tend to have irregularities in the terrain, which increases the likelihood of landslides. Moreover, roughness can influence the velocity and direction of failures.

It is well known that all of these terrain factors significantly influence the distribution, typology, and mechanisms of slope movements [

33]. Therefore, they were incorporated into the actual study using several parameters derived from the combined use of the ArcGIS software with the digital elevation model from the Spanish Geographic Institute [

34].

3.1.2. Parameters Associated with the Slope Instability Location

Some control parameters are related to the distances of the landslides to the rivers or the nearest roads [

35,

36]. These parameters provide information about the position of the instability with respect to the environment in which it is located. The distance to the nearest active fault, as well as the distance to the epicenter, are variables that are usually included in landslide susceptibility studies [

37,

38]. They provide information on the proximity of the slope area to the earthquake location. These parameters can also influence the size and extent of landslides: the closer the area is to the epicenter and the faults, the more likely it is that larger and more extensive landslides can occur. Another parameter with information about the location widely used is the elevation above sea level [

35,

36,

39]. All of these factors were obtained using ArcGIS, based on cartographic databases from the Spanish Geographic Institute [

34].

3.1.3. Parameters Related to the Seismic Event

These control parameters provide relevant information about the properties of the seismic event related to the landslide occurrence, such as the peak ground acceleration (PGA) and Arias intensity (I

A). Other parameters that characterize landslide occurrence include the safety factor, critical acceleration, and Newmark displacement. The safety factor (FS) is a parameter used to evaluate the stability of slopes and determine if they are unstable (FS < 1) or not (FS > 1). In this study, FS was calculated using Jibson’s proposal [

40], considering the friction angle, cohesion, slope, unit weight, and depth of the sliding block. Critical acceleration is a parameter used in seismic stability analyses and represents the minimum seismic acceleration required to overcome the maximum strength of the slope material and trigger a landslide. The Newmark displacement (D

N) quantifies the expected displacement of a slope during a seismic event, considering different seismic parameters (PGA or I

A) and the distance to the seismic source. The susceptibility to seismically induced landslides is based on the computed Newmark displacement. According to the criteria set by the authors in [

41], areas can be divided into non-susceptible (D

N < 1 cm), low susceptibility (D

N = 1–5 cm), moderate susceptibility (D

N = 5–15 cm), and high susceptibility (D

N > 15 cm). All these seismic parameters related to the 2011 Lorca seismic event correspond to those obtained by [

27].

3.3. Selection of Predictors

Once the training dataset was prepared, relevant variables were selected, i.e., those that have a true relationship with the output to be predicted. The use of too many variables would add noise to the models. Among the different existing variable selection methods, the wrapper methods were used. These methods search for a subset within the dataset that determines the optimal combination of variables, which allows for the best possible performance in predicting the target variable [

42].

This present study incorporates variable selection algorithms based on the use of random forests, bootstrap averaging, decision trees, and gradient boosting. In particular, the following methods were used: (i) stepwise forward and backward selection, (ii) Boruta selection model, (iii) recursive feature elimination, (iv) simulated annealing, and (v) genetic algorithms.

Machine learning methods based on decision trees were first described in [

43]. These methods learn from the number of times an event occurs compared to another, without considering the order of occurrence of these events. The results are shown in the form of a probability tree. These algorithms are widely used in machine learning techniques, particularly in variable pre-selection, as they have low predictive potential but a high capacity for searching for rules and interactions between variables, particularly categorical variables (since they trace nonlinear relationships very well). One advantage of these pre-selection methods is that they do not assume any theory about the data and properly and efficiently handle the missing data [

44]. In addition, decision trees are the basis of other algorithms that have predictive capability, such as bagging, random forest, and gradient boosting.

The precursor of the technique called bagging [

45] uses the advantages of decision trees to avoid their predictive instability, thus creating a variable selection technique called bootstrap averaging. It is based on generating multiple versions of a predictor to obtain an aggregated version, which is used to predict the variable and is the starting point in the next step. It obtains a decrease in variance based on the average of previous estimates.

Gradient boosting, proposed by the authors in [

46], is based on updating the decrease direction given by the negative gradient of the error function. These algorithms are extremely versatile and are easy to implement and monitor. They have a high predictive capacity and are robust against irrelevant variables, but they are not suitable for problems with low complexity [

44].

Among the variable selection algorithms used in this study, stepwise selection methods are based on variable selection using stepwise regression. This method is a hybrid between forward and backward stepwise selections [

47]. Forward stepwise selection starts with a model that does not contain any variable, known as the null model. Then, it begins to add significant variables in terms of

p-value or lower values in the RSS (residual sum of squares) model sequentially until a specified stopping rule is reached or until all considered variables are included in the model. The stopping rule is usually defined based on a certain

p-value threshold, and if the threshold is exceeded, the variable is added to the model. The

p-value sensitivity threshold can be defined either fixedly or using AIC (Akaike information criterion) or BIC (Bayesian information criterion). The problem with defining the threshold following these criteria is that the threshold changes for each variable. Backward stepwise selection begins with a full model of variables, which are removed one by one based on a stopping criterion [

48]. This selection method allows variables to be selected following a simple and easily interpretable statistical model. However, variable selection is unstable, with a small sample size compared to the number of variables to be studied, as many combinations of variables can fit the data similarly. Additionally, this method does not consider the possible causal relationships between the variables.

The Boruta variable selection model was proposed in [

49], which works as an algorithm surrounding Random Forest. This algorithm begins by adding randomness to the dataset. This creates shadow variables from the study variables, which are copied and permuted with each other. Afterwards, a random forest classifier acts on the dataset, where the relative importance of each of the variables is calculated. To evaluate this importance, the decrease in the average accuracy value (mean decrease accuracy) of each feature is used. A greater number of average accuracy values indicates more importance in the model. In each of the iterations, a feature is checked and compared to the importance of its shadow variables. If it is lower than its shadow counterparts, the feature is removed because it is considered to have little importance. This process ends when all the features are confirmed or rejected, or a limit of random forest execution is reached. This variable selection method is relevant because it selects variables that are important to the target variable.

The recursive feature elimination method proposed in [

50] is a backward feature selection method, which begins by building a model on the entire set of predictors and calculating the importance order of each predictor. The lowest-importance variables are eliminated, and the model is rebuilt by calculating the importance order of the remaining variables. Backward selection is frequently used with random forest models, as it tends not to exclude variables from the prediction equation and forces the trees to contain suboptimal divisions of the predictors using a random sample of predictors.

Simulated annealing is a method that begins by randomly selecting a subset of entities and defining the operator with a maximum number of iterations. Afterwards, this method builds a model and calculates its predictive performance. Once calculated, it proceeds to include or exclude a small number of features randomly (from one to five) to recalculate the performance and check if it improves or not with the new modification. In the case of worsening, the solution can even be accepted, according to [

51], although the acceptance probability decreases as the iteration of the algorithm increases.

The genetic algorithm proposed in [

52] is inspired by natural selection and biological evolution processes. Its main objective is to find the optimal set of predictor variables that maximizes model performance. It begins with an initial population of solutions, where each solution represents a set of variables. The performance of each solution is then evaluated using a fitness function that measures the quality of the selected variables. Solutions with higher fitness are more likely to be selected for the next generation. After the selection, genetic operators such as reproduction, crossover, and mutation are applied to generate a new population of solutions. Reproduction involves copying the selected solutions directly into the next generation. Crossover combines the characteristics of two solutions by exchanging parts of their chromosomes. Mutation introduces random changes into a solution to explore new possibilities. This process of selection, reproduction, crossover, and mutation is repeated for several generations until a convergence criterion is reached (e.g., a maximum number of iterations or insignificant improvement in the fitness of the solutions). The final solution obtained represents the optimal set of predictor variables for a given problem.

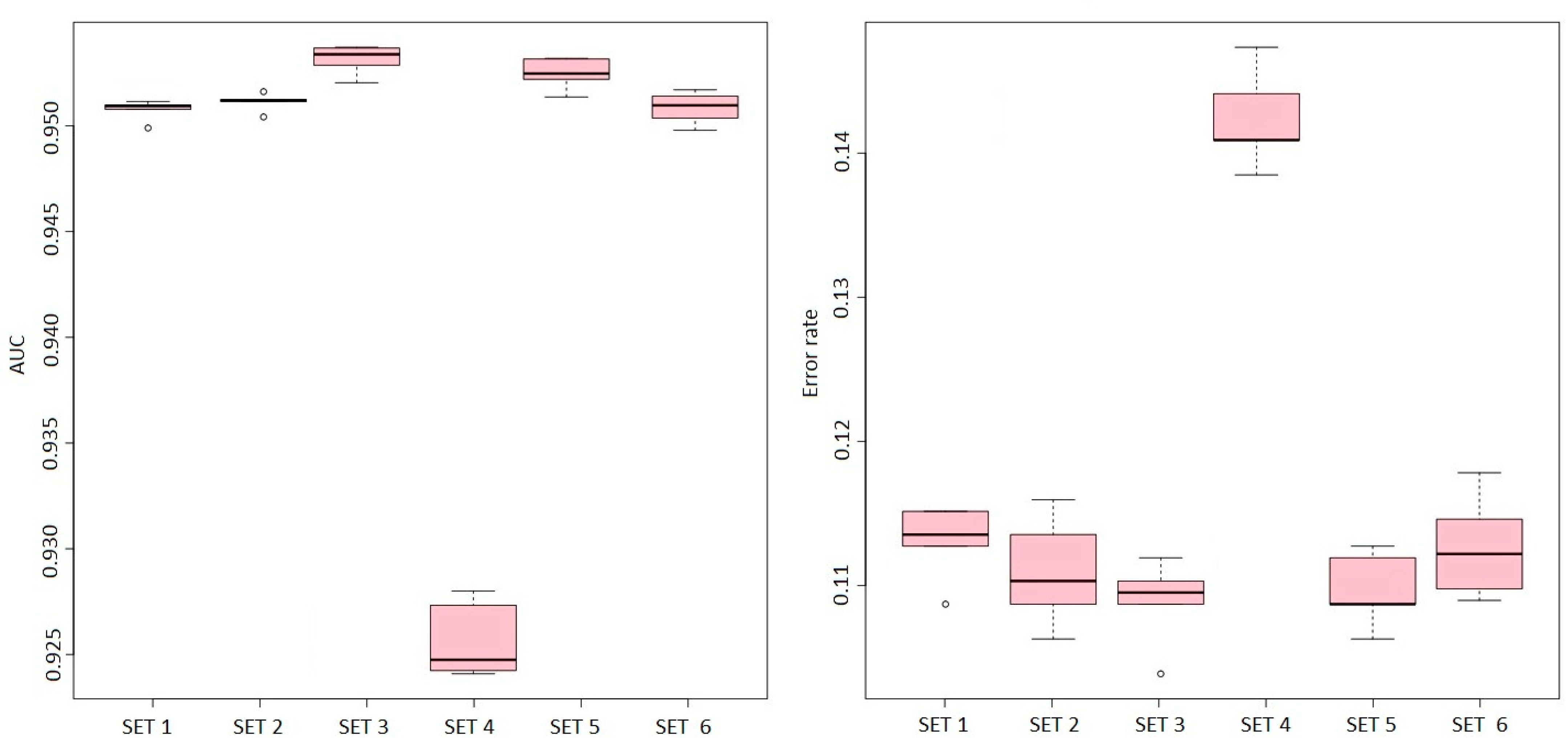

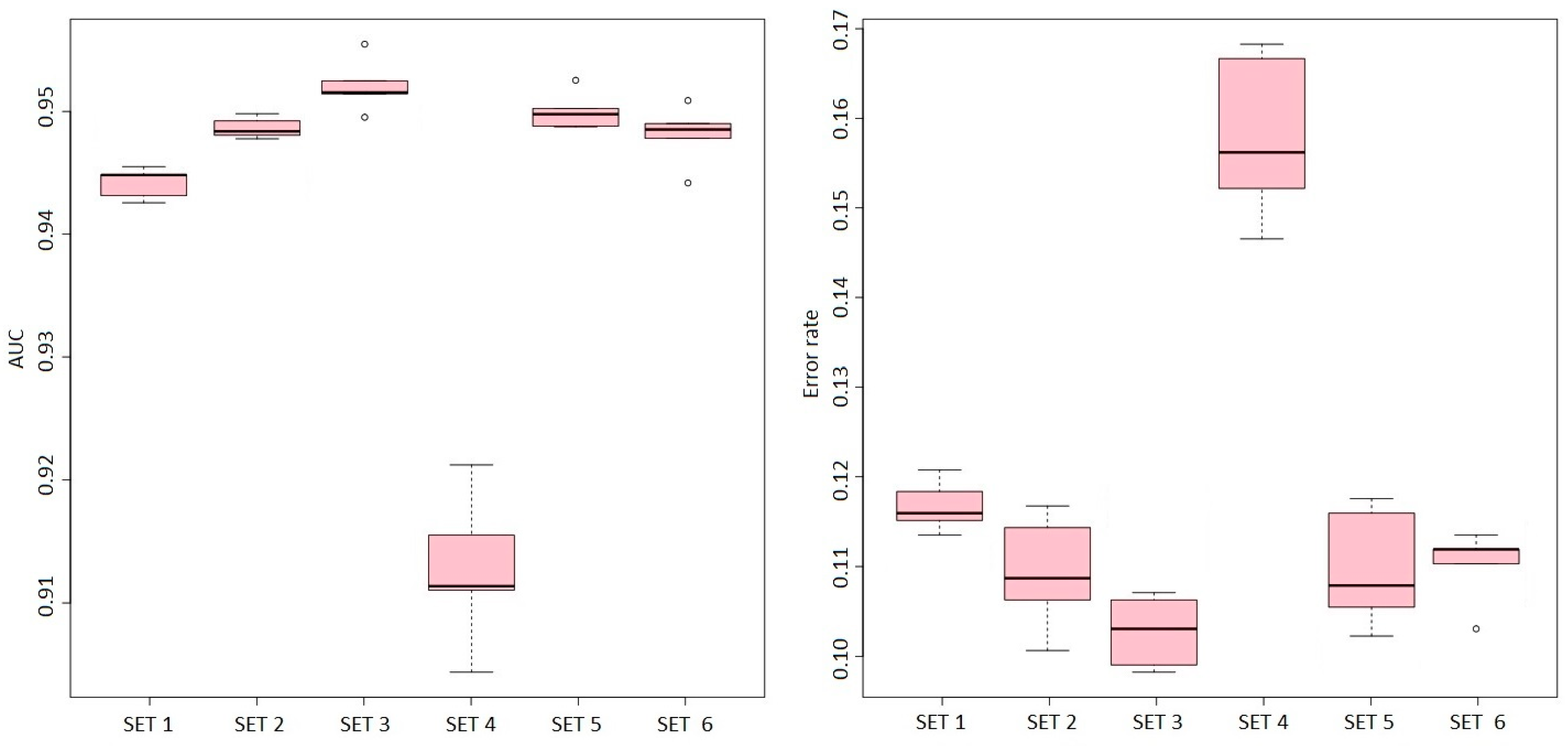

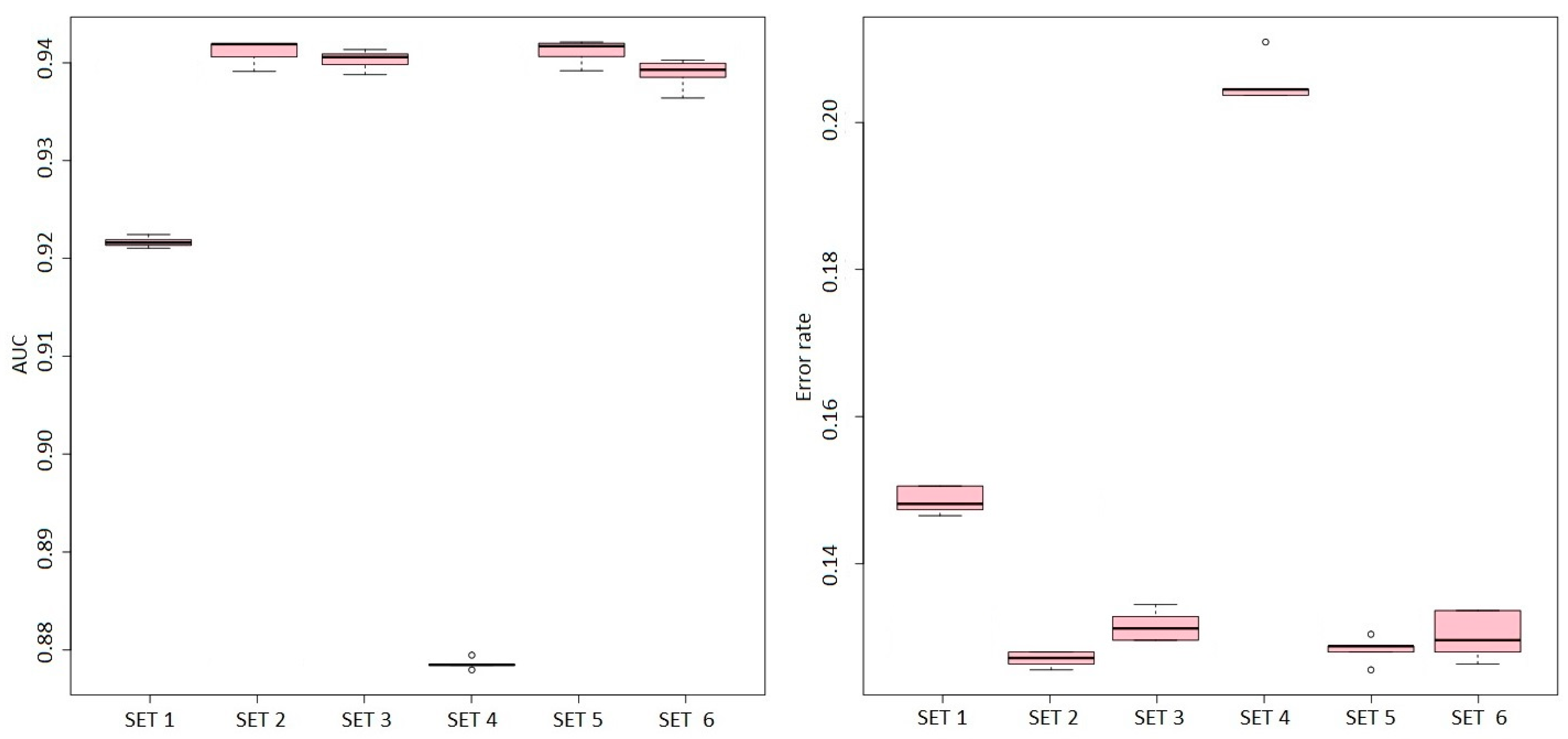

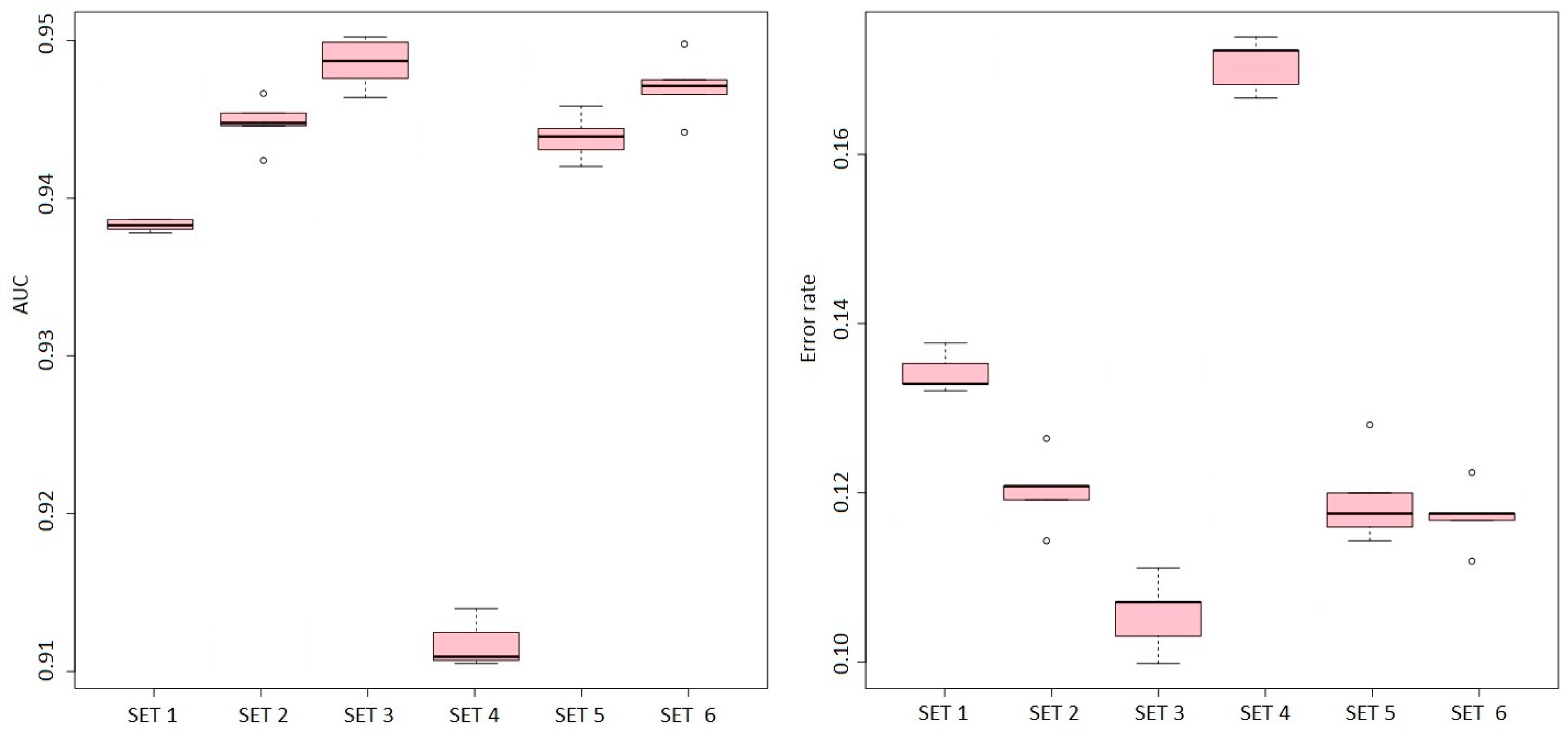

3.5. Model Evaluation

The evaluation of the models entailed two differentiated phases. The first phase consisted of an evaluation of the statistical results by machine learning models using a subset of 1864 test slides, which comprised 30% of the total dataset. To evaluate the performance of the developed classification models, different metrics were employed as follows: the confusion matrix, classification error rate, area under the curve, and combined performance.

The confusion matrix shows the number of times the model correctly or incorrectly predicted each of the classes in the classification problem. It has four main elements: true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN).

The classification error rate or classification error (error) is a metric used to evaluate the performance of a classification model. It is defined as the proportion of incorrect predictions made by the model relative to the total number of predictions made. A high classification error rate indicates that the model struggles to correctly classify the samples into their respective classes. This suggests that the model may not be suitable for a given dataset. The error rate can be calculated using Equation (1):

The area under the curve (AUC) is a metric used to evaluate the performance of binary classification models. AUC refers to the area under the receiver operating characteristic (ROC) curve, which is a graphical representation of the relationship between the true positive rate (TPR, Equation (2)) and the false positive rate (FPR, Equation (3)) across different decision thresholds.

The AUC ranges from 0 to 1: a value of 1 indicates the perfect ability of the model to distinguish between classes; a value of 0.5 indicates random performance. The AUC provides a balanced assessment of the model’s performance by considering its ability to distinguish between both classes.

The combined performance (PC) combines two model evaluation metrics: the classification error rate and AUC. This can be calculated using Equation (4):

where

corresponds to the average error rate of all classes, and

refers to the average area under the ROC curve for all obtained classes. This approach provides an overall evaluation of the model’s performance that considers both its ability to distinguish between classes and its accuracy in classifying each class. By combining these two metrics, a more comprehensive assessment of performance can be obtained.

It should be noted that in the definition of PC, both the error and AUC evaluate binary classification models, but they are not necessarily equally important. The error is a more critical measure for evaluating the model; it indicates how well the model is classifying observations into the correct categories. The AUC measures the model’s ability to distinguish between the two classes, but it does not necessarily indicate how well observations are classified into the correct categories. In this study, both measures were considered equally significant, so equal weights were assigned.

To improve the generalization ability of the models and select the best parameters, repeated cross-validation was applied. This was performed using the ‘trainControl’ function implemented in Caret as a common training control parameter. The purpose of this cross-validation was to repeat the process a total of five times for each model to obtain a more accurate estimate of the model performance. A total of 10 folds were specified to be used in cross-validation for each repetition of the model. At the same time, a hyperparameter grid was implemented for each model during the cross-validation training phase. This was possible by using Caret’s ‘expand.grid’ function, which created all possible combinations of the selected parameter values.

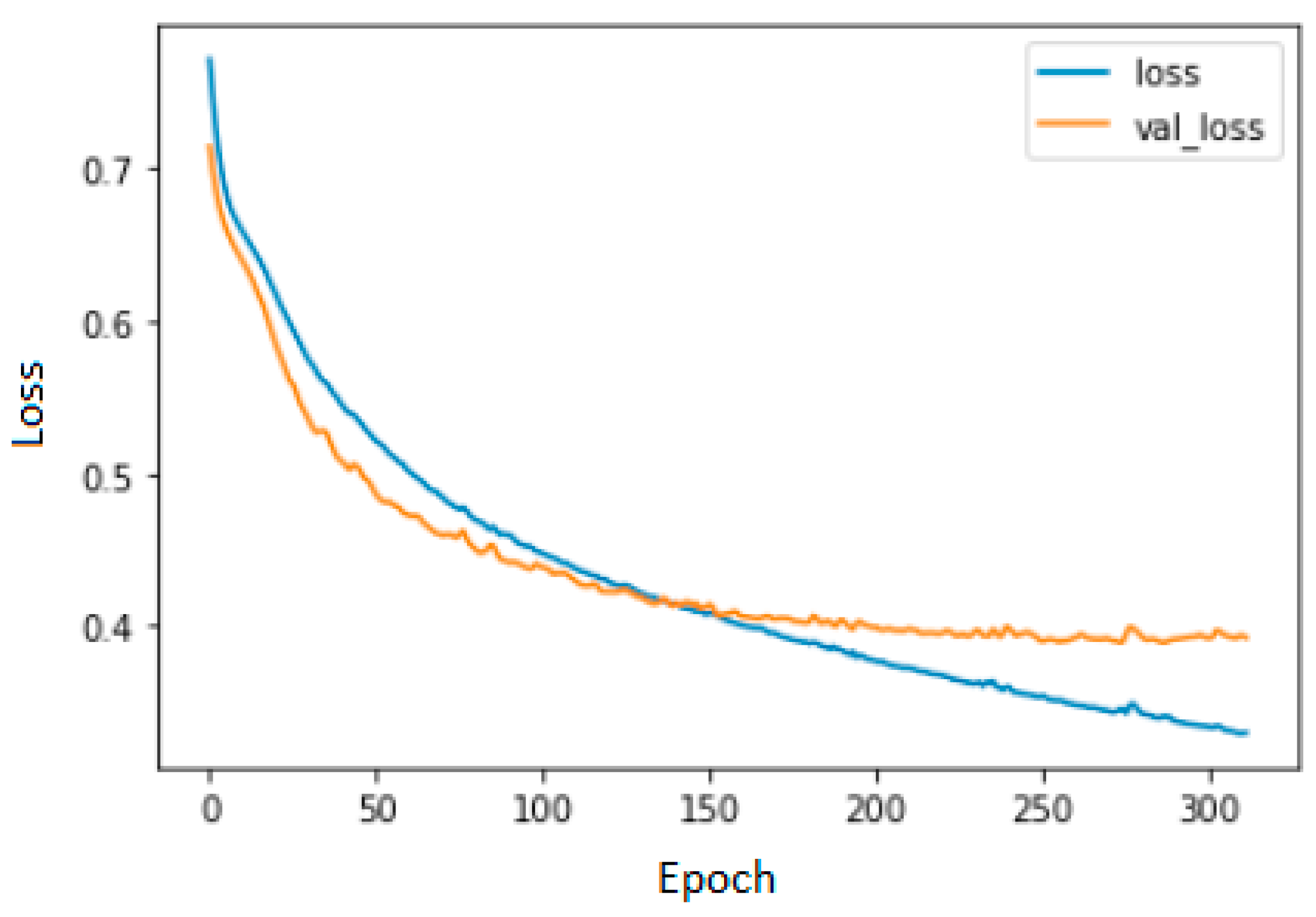

In the case of LG models, no hyperparameter grid was used. In such cases, only repeated cross-validation was implemented in the training phases of each of the six different LG models, using the number of epochs defined in the general cross-validation function as the stopping criterion. Each model was trained and validated 50 times (10 times in each repetition of cross-validation) during the entire training and validation process.

For the RF models, a different hyperparameter grid was implemented for each model, along with repeated cross-validation. The parameter adjusted was ‘mtry’, i.e., the number of variables selected for each partition of each tree in the forest. The maximum value set for each model corresponded to the integer nearest to the square root of the number of variables considered. The minimum value used was 1 and the rate of variation between the maximum and minimum values was also 1. To develop each of the models, a depth of 300 trees was established for each RF, with a minimum of ten observations per tree leaf with replacement.

In the case of the ANN models, a network architecture was implemented using the average of the created models (avNNet) with a maximum number of 145 iterations. This architecture is not a specific or optimal architecture for the case study, but its success primarily depends on the number of analyzed parameters [

67]. In each of the ANN, a different hyperparameter grid was implemented, as well as repeated cross-validation. The adjusted hyperparameters corresponded to the ‘size’ parameter or neurons in the hidden layer of the network. The maximum value set in each model for this parameter corresponded to the maximum number of variables in each of the sets. The minimum value was taken as 1. The rate of variation between the maximum and minimum used was also 1. In addition, the hyperparameter ‘decay’ or L2 penalty rate was also applied to the weights of the network. The explored penalty values were 0.1, 0.01, and 0.001. To avoid increasing the model’s bias, the use of replacement sampling was disabled in each model.

The models created using SVM with a linear kernel (SVML) were constructed by employing a different hyperparameter grid in addition to repeated cross-validation. The hyperparameter corresponded to the regularization parameter ‘C’, which controlled the trade-off between classification accuracy and model complexity. This parameter was tuned in two rounds. In the first round, different standard values of C (0.01, 0.05, 0.1, 0.2, 0.5, 1, 2, 5, and 10) were tested to select a range of values, to be later expanded in a second round, and to select the regularization value that resulted in a balance between the margin of error and higher model accuracy.

In the case of SVM with a radial basis function kernel (SVMR), they were also created with a different hyperparameter grid in each model, in addition to repeated cross-validation. The hyperparameters to be adjusted were two: the regularization hyperparameter of SVMR and the ‘sigma’ hyperparameter or radial kernel bandwidth in the model. These two hyperparameters were adjusted in two rounds, where the kernel bandwidth hyperparameter was tested with different standard values (sigma: 0.0001, 0.005, 0.01, 0.05, 0.1, 1, 10, and 100).

To complete this phase of validation, the models that use the dataset provided by the principal component analysis were also developed in the same way as their counterparts. Principal component analysis (PCA) is a statistical method aimed at reducing the dimensionality of data. Its objective is to obtain a new set of uncorrelated variables called “principal components”, which capture the most information in terms of the variance of the original data. This technique is widely used in various fields [

68] to perform tasks such as dimensionality reduction, data visualization, pattern detection, and exploration of the underlying structure in a set of variables.