Maximizing Test Coverage for Security Threats Using Optimal Test Data Generation

Abstract

1. Introduction

Research Significance

- Firstly, it supports the design of consistent acceptance test cases for security threats (authentication and authorization) from structured misuse case descriptions, enabling early-stage mitigation of those threats. This addresses the inconsistency in acceptance test case design that arises from its reliance on human judgment.

- Secondly, it compares two state-of-the-art approaches to maximizing test coverage through optimal test data generation from structured misuse case descriptions. Our results show that modified condition/decision coverage (MC/DC) maximizes test coverage through optimal test data generation with the minimum number of test conditions, in contrast to decision coverage (D/C).

2. Literature Review

Research Question

- RQ1: Which of decision coverage and MC/DC maximizes test coverage for security threats in the structured misuse case description?

- Hypothesis 1: Decision coverage maximizes test coverage for security threats in the structured misuse case description.

- Hypothesis 2: MC/DC maximizes test coverage for security threats in the structured misuse case description.

3. Research Methodology

4. Experiment Design

- Identify security authentication and authorization threats.

- Design the structured misuse case description.

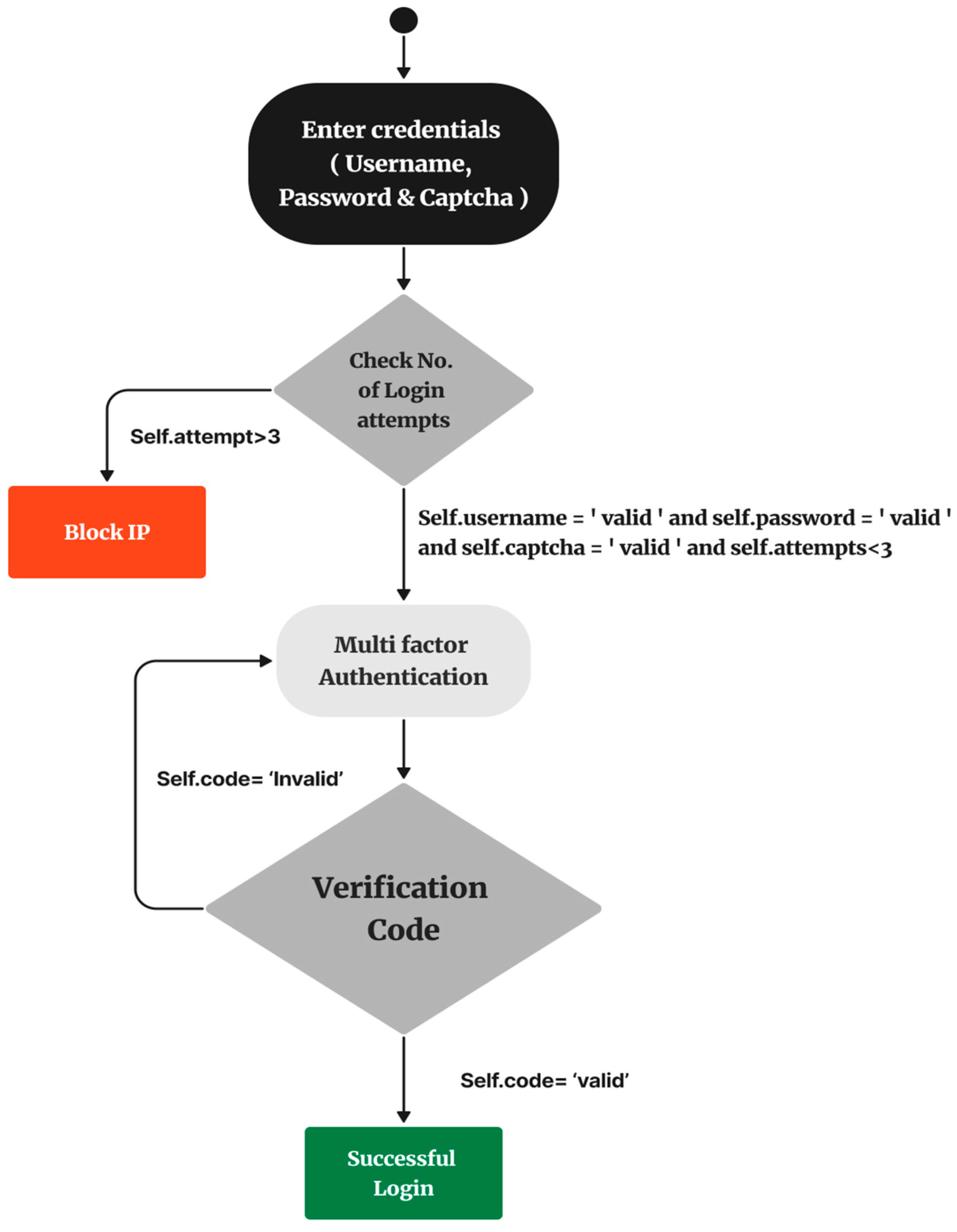

- Draw mal-activity from the structured misuse case description.

- Specify constraints in the mal-activity diagram using OCL.

- Transform constraints into Boolean expression.

- Transform Boolean expression into Truth Table Expression.

- Generate possible test data:

- 7.1

- through MC/DC (modified condition decision coverage).

- 7.2

- through decision coverage (D/C).

- Compare and find optimal test data generated through MC/DC and D/C

- Design test cases for generated optimal test data.
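
The generation and comparison steps above can be illustrated with a minimal Python sketch. It assumes the decision ((a ∧ b) ∧ (c ∧ d)) used in this article's truth table; the function names `decision`, `decision_covered`, and `independently_covered` are hypothetical and are not taken from the authors' tooling. For a candidate test set, the sketch checks whether both decision outcomes occur (the decision coverage requirement) and which conditions are shown to independently change the outcome (the MC/DC requirement).

```python
from itertools import combinations

def decision(a, b, c, d):
    # Decision assumed from the truth-table header: ((a AND b) AND (c AND d)).
    return (a and b) and (c and d)

def decision_covered(tests):
    """True if the decision evaluates to both True and False across the tests."""
    return {decision(*t) for t in tests} == {True, False}

def independently_covered(tests):
    """Indices (0=a .. 3=d) of conditions for which some pair of tests differs
    only in that condition and yields different decision outcomes."""
    covered = set()
    for t1, t2 in combinations(tests, 2):
        diff = [i for i in range(4) if t1[i] != t2[i]]
        if len(diff) == 1 and decision(*t1) != decision(*t2):
            covered.add(diff[0])
    return covered

T, F = True, False
# One possible decision-coverage choice: truth-table rows 1 and 16.
dc_tests = [(F, F, F, F), (T, T, T, T)]
# Truth-table rows 8, 12, 14, 15 and 16.
mcdc_tests = [(F, T, T, T), (T, F, T, T), (T, T, F, T), (T, T, T, F), (T, T, T, T)]

print(decision_covered(dc_tests), independently_covered(dc_tests))
print(decision_covered(mcdc_tests), independently_covered(mcdc_tests))
```

Under these assumptions, both sets exercise both decision outcomes, but only the five-row set demonstrates the independent effect of every condition.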

4.1. Test Combinations Identified by MC/DC:

4.2. Acceptance Test Case Design

5. Results and Discussion

Threats to Validity

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Elicitation of Security Requirement | Goal | Sub-Goal |
|---|---|---|
| The application should authenticate the user using a valid username and password. | Security | Authentication |
| Authorization codes should be set up and modified only by the System Administrator. | Security | Authorization |

| Field | Value |
|---|---|
| ID* | SMC-SA-001 |
| Goal* | Security |
| Sub Goal* | Authentication |
| Misuse Case Name* | Steal Login Details IMPLEMENTS steal sensitive data |
| Associated Misusers* | Information Thief |
| Author Name | ABC |
| Date | dd/mm/yy |
| Description* | The misuser gains access through automated attacks, such as credential stuffing and brute force, to log in to the system and perform illegal activities with the user data. |
| Preconditions* | The login page is accessible to the Information Thief. |
| Trigger* | The Information Thief clicks the login button. |
| Basic Flow* | BF-1. The Information Thief uses an automated attack tool to generate many combinations of usernames and passwords. BF-2. The login details, i.e., username, password, and Captcha, are matched with the login details of the system. BF-3. On successful login, a verification code is sent to the user's email ID/SMS for multifactor authentication. BF-4. If the user receives the verification code and verifies the login attempt, the Information Thief is redirected to the user's personal and sensitive data pages. |
| Alternate Flow* | BF-2. In case of non-authentic/invalid login details, i.e., username, password, and Captcha, or if the number of login attempts from the same IP is greater than three, the IP is blocked. BF-4. If the multifactor authentication verification code is not received through email/SMS, repeat BF-3. |
| Assumption | The system has login forms feeding input into database queries. |
| Threatens Use Case* | User Login |
| Business Rules | The Hospital system shall be available to its end-users over the internet. |
| Stakeholder & Threats | Hospital O/I Maintenance Department, O/I User Department, Store Keeper, Dispenser. If deleted, data loss reveals sensitive information to damage the business and reputation of the hospital. |
| Threatens Use Case Mitigation Note | If a user from the same IP address makes three failed login attempts, block the IP. Also, apply Captcha and multifactor authentication to avoid attacks. |

All fields marked with * are mandatory for the Structured Misuse Case Description.

| Condition | Constraint |
|---|---|
| a | self.username = ‘valid’ |
| b | self.password = ‘valid’ |
| c | self.captcha = ‘valid’ |
| d | self.attempts < 3 |
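
As a rough illustration of how these four constraints map onto Boolean conditions, the sketch below models a login attempt as a small record and evaluates a, b, c, and d on it. The `LoginAttempt` class, its field names, and the `login_allowed` helper are hypothetical names introduced only for this example; the string literals mirror the ‘valid’ values used in the constraints, and the conjunction is the one shown in the truth-table header.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LoginAttempt:
    # Hypothetical record; the string fields hold 'valid' or 'invalid',
    # mirroring the literals in the constraints.
    username: str
    password: str
    captcha: str
    attempts: int

def conditions(x: LoginAttempt):
    """Return the atomic conditions (a, b, c, d) for one login attempt."""
    a = x.username == "valid"
    b = x.password == "valid"
    c = x.captcha == "valid"
    d = x.attempts < 3
    return a, b, c, d

def login_allowed(x: LoginAttempt) -> bool:
    """Evaluate the decision ((a AND b) AND (c AND d))."""
    a, b, c, d = conditions(x)
    return (a and b) and (c and d)

print(login_allowed(LoginAttempt("valid", "valid", "valid", 1)))
print(login_allowed(LoginAttempt("valid", "invalid", "valid", 1)))
```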

| No. | a | b | c | d | ((a ∧ b) ∧ (c ∧ d)) |
|---|---|---|---|---|---|
| 1 | F | F | F | F | F |
| 2 | F | F | F | T | F |
| 3 | F | F | T | F | F |
| 4 | F | F | T | T | F |
| 5 | F | T | F | F | F |
| 6 | F | T | F | T | F |
| 7 | F | T | T | F | F |
| 8 | F | T | T | T | F |
| 9 | T | F | F | F | F |
| 10 | T | F | F | T | F |
| 11 | T | F | T | F | F |
| 12 | T | F | T | T | F |
| 13 | T | T | F | F | F |
| 14 | T | T | F | T | F |
| 15 | T | T | T | F | F |
| 16 | T | T | T | T | T |
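
A truth table of this shape can be reproduced mechanically. The following sketch is one possible way to do it in Python, assuming the same row order as above (row 1 is all false, row 16 is all true); the helper name `truth_table` is hypothetical.

```python
from itertools import product

def truth_table():
    """Enumerate all 16 combinations of (a, b, c, d) with the decision outcome.

    product([False, True], repeat=4) varies the last condition fastest,
    so row 1 is F F F F and row 16 is T T T T.
    """
    rows = []
    for no, (a, b, c, d) in enumerate(product([False, True], repeat=4), start=1):
        outcome = (a and b) and (c and d)
        rows.append((no, a, b, c, d, outcome))
    return rows

def fmt(value):
    """Render a Boolean as T or F."""
    return "T" if value else "F"

for no, *values in truth_table():
    print(no, *(fmt(v) for v in values))
```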


| Constraint No. | Test Scenario | Constraint |
|---|---|---|
| 8 | Unsuccessful login due to invalid username | ((self.username = ‘invalid’ ∧ self.password = ‘valid’) ∧ (self.captcha = ‘valid’ ∧ self.attempts < 3)) |
| 12 | Unsuccessful login due to invalid password | ((self.username = ‘valid’ ∧ self.password = ‘invalid’) ∧ (self.captcha = ‘valid’ ∧ self.attempts < 3)) |
| 14 | Unsuccessful login due to invalid Captcha | ((self.username = ‘valid’ ∧ self.password = ‘valid’) ∧ (self.captcha = ‘invalid’ ∧ self.attempts < 3)) |
| 15 | Unsuccessful login due to more than three login attempts | ((self.username = ‘valid’ ∧ self.password = ‘valid’) ∧ (self.captcha = ‘valid’ ∧ self.attempts > 3)) |
| 16 | Successful login | ((self.username = ‘valid’ ∧ self.password = ‘valid’) ∧ (self.captcha = ‘valid’ ∧ self.attempts < 3)) |
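
The constraint numbers in this table correspond to truth-table rows. As a hedged sketch of why these particular rows suffice, the code below searches the truth table for independence pairs in the unique-cause sense of MC/DC: two rows that differ in exactly one condition and produce different decision outcomes. The function names are hypothetical, and this is not necessarily the procedure the authors followed.

```python
from itertools import product

def decision(a, b, c, d):
    # Decision assumed from the truth table: ((a AND b) AND (c AND d)).
    return (a and b) and (c and d)

def independence_pairs():
    """Map each condition name to the (row_false, row_true) pairs in which
    flipping only that condition changes the decision outcome."""
    combos = list(product([False, True], repeat=4))
    row_no = {combo: i for i, combo in enumerate(combos, start=1)}
    pairs = {}
    for idx, name in enumerate("abcd"):
        for combo in combos:
            if not combo[idx]:
                continue  # start from rows where the condition is True
            flipped = combo[:idx] + (False,) + combo[idx + 1:]
            if decision(*combo) != decision(*flipped):
                pairs.setdefault(name, []).append((row_no[flipped], row_no[combo]))
    return pairs

def mcdc_rows():
    """Collect the rows used by the first independence pair of each condition."""
    rows = set()
    for pair_list in independence_pairs().values():
        rows.update(pair_list[0])
    return sorted(rows)

print(independence_pairs())
print(mcdc_rows())  # [8, 12, 14, 15, 16]
```

For a pure conjunction of four conditions, each condition has exactly one such pair, and every pair shares row 16, which is why five rows (n + 1 for n conditions) are enough.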

| TC # | Scenario | ECP | Input: Username | Input: Password | Input: Captcha | Input: Attempts | Expected Output |
|---|---|---|---|---|---|---|---|
| TC-08 | Invalid Username Unsuccessful login | Username: Invalid Class: {0–9, @#$%^&*()[]{}} | Admin123 | Admin@98ml | As12 | 1 | Unsuccessful Login due to the wrong username |
| | | Password: Valid Class: {A–Z, a–z, 0–9, !@#$%^&*();:[]{}} | | admin_#@!11 | | | |
| | | Captcha: Valid Class: {A–Z},{a–z},{0–9} | | | AB23C | | |
| | | Login Attempts: Valid Class: {0 < attempt ≤ 3} | | | | 2 | |
| TC-SA-12 | Unsuccessful Login due to invalid Password | Username: Valid Class: {A–Z, a–z} | user | | | | Unsuccessful Login due to the wrong Password |
| | | Password: Invalid Class: {A–Z},{a–z} | | 1234 | | | |
| | | Captcha: Valid Class: {A–Z},{a–z},{0–9} | | | XYZ88 | | |
| | | Login Attempts: Valid Class: {0 < attempt ≤ 3} | | | | 3 | |
| TC-SA-14 | Unsuccessful Login due to invalid Captcha | Username: Valid Class: {A–Z, a–z} | ABC | | | | Unsuccessful Login due to invalid Captcha |
| | | Password: Valid Class: {A–Z, a–z, 0–9, !@#$%^&*();:[]{}} | | Admin&12345 | | | |
| | | Captcha: Invalid Class: {!@#$%^&*();:[]{}} | | | ZX&12 | | |
| | | Login Attempts: Valid Class: {0 < attempt ≤ 3} | | | | 1 | |
| TC-SA-15 | Unsuccessful Login due to more than three login attempts | Username: Valid Class: {A–Z, a–z} | User | | | | Unsuccessful Login due to more than three login attempts |
| | | Password: Valid Class: {A–Z, a–z, 0–9, !@#$%^&*();:[]{}} | | Admin&123 | | | |
| | | Captcha: Valid Class: {A–Z},{a–z},{0–9} | | | ZXC12 | | |
| | | Login Attempts: Invalid Class: {attempt > 3} | | | | 4 | |
| TC-SA-16 | Successful Login | Username: Valid Class: {A–Z, a–z} | User | | | | Successfully logged in |
| | | Password: Valid Class: {A–Z, a–z, 0–9, !@#$%^&*();:[]{}} | | Admin&123 | | | |
| | | Captcha: Valid Class: {A–Z},{a–z},{0–9} | | | ZXC12 | | |
| | | Login Attempts: Valid Class: {0 < attempt ≤ 3} | | | | 1 | |
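
The equivalence class partitions (ECP) in this table can be read as membership checks on each input. The sketch below is an illustrative interpretation only: it assumes that a valid username consists solely of letters, that a valid password uses only the listed characters, that a valid Captcha is alphanumeric, and that the valid attempts class is {0 < attempt ≤ 3} as stated in the table. The names `classify` and `expected_outcome` are hypothetical.

```python
import re

# Character classes assumed from the ECP column of the test-case table.
USERNAME_VALID = re.compile(r"[A-Za-z]+")
PASSWORD_VALID = re.compile(r"[A-Za-z0-9!@#$%^&*();:\[\]{}]+")
CAPTCHA_VALID = re.compile(r"[A-Za-z0-9]+")

def classify(username, password, captcha, attempts):
    """Report, per input, whether it falls into the valid equivalence class."""
    return {
        "username": bool(USERNAME_VALID.fullmatch(username)),
        "password": bool(PASSWORD_VALID.fullmatch(password)),
        "captcha": bool(CAPTCHA_VALID.fullmatch(captcha)),
        "attempts": 0 < attempts <= 3,
    }

def expected_outcome(classes):
    """Login is expected to succeed only if every input is in its valid class."""
    return "Successfully logged in" if all(classes.values()) else "Unsuccessful Login"

# Input values taken from the test-case table.
print(expected_outcome(classify("User", "Admin&123", "ZXC12", 1)))
print(expected_outcome(classify("User", "Admin&123", "ZXC12", 4)))
print(expected_outcome(classify("ABC", "Admin&12345", "ZX&12", 1)))
```

A real acceptance test would drive the system under test with these inputs rather than re-deriving the outcome from the classes; the sketch only shows how the partitions select representative values.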

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hussain, T.; Faiz, R.B.; Aljaidi, M.; Khattak, A.; Samara, G.; Alsarhan, A.; Alazaidah, R. Maximizing Test Coverage for Security Threats Using Optimal Test Data Generation. Appl. Sci. 2023, 13, 8252. https://doi.org/10.3390/app13148252