Efficient Tree Policy with Attention-Based State Representation for Interactive Recommendation

Abstract

1. Introduction

- An attention-based state representation model for interactive recommender systems (IRS) is proposed that can effectively capture the user dynamics even when the sequential data are long and noisy.

- An efficient tree-structured policy is devised that improves the learning and decision efficiency of the Tree-structured Policy Gradient Recommendation (TPGR) framework through reinforcement learning.

- The proposed model is evaluated against state-of-the-art methods, and the results demonstrate that the proposed method is effective and efficient on three benchmark datasets.

2. Literature Review

2.1. State Representation Modeling for a Deep-Reinforcement-Learning-Based Interactive Recommendation

2.2. Dealing with Large Discrete Action Space in Reinforcement Learning

3. Method

3.1. Problem Statement

- State S: A state is defined as the historical interactions between the user and the recommender agent.

- Action A: An action consists of the items suggested by the recommender agent.

- Transition probability function P: The transition function determines the new state after the recommender agent suggests item a in state s; it models the dynamics of user preference.

- Reward function R: The reward function gives the immediate reward that the recommender agent receives from the user's feedback on the recommended items a in state s.

- Discount factor γ: The discount factor determines the present value of long-term rewards.
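To make the role of the discount factor concrete, the following minimal sketch computes the discounted return of one episode, using the standard definition from reinforcement learning (the sum of rewards weighted by increasing powers of the discount factor). The function name `discounted_return` and the example rewards are illustrative assumptions, not taken from the paper.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Return sum_t gamma**t * rewards[t] for one episode."""
    total = 0.0
    # Accumulate backwards so each step needs one multiply and one add.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# Hypothetical episode: the reward is the user's rating on a hit, 0 on a miss.
episode_rewards = [0.0, 4.0, 0.0, 5.0]
print(discounted_return(episode_rewards, gamma=0.9))
```

With a discount factor close to 1 the agent values late rewards almost as much as immediate ones; with a discount factor of 0 only the first reward counts.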

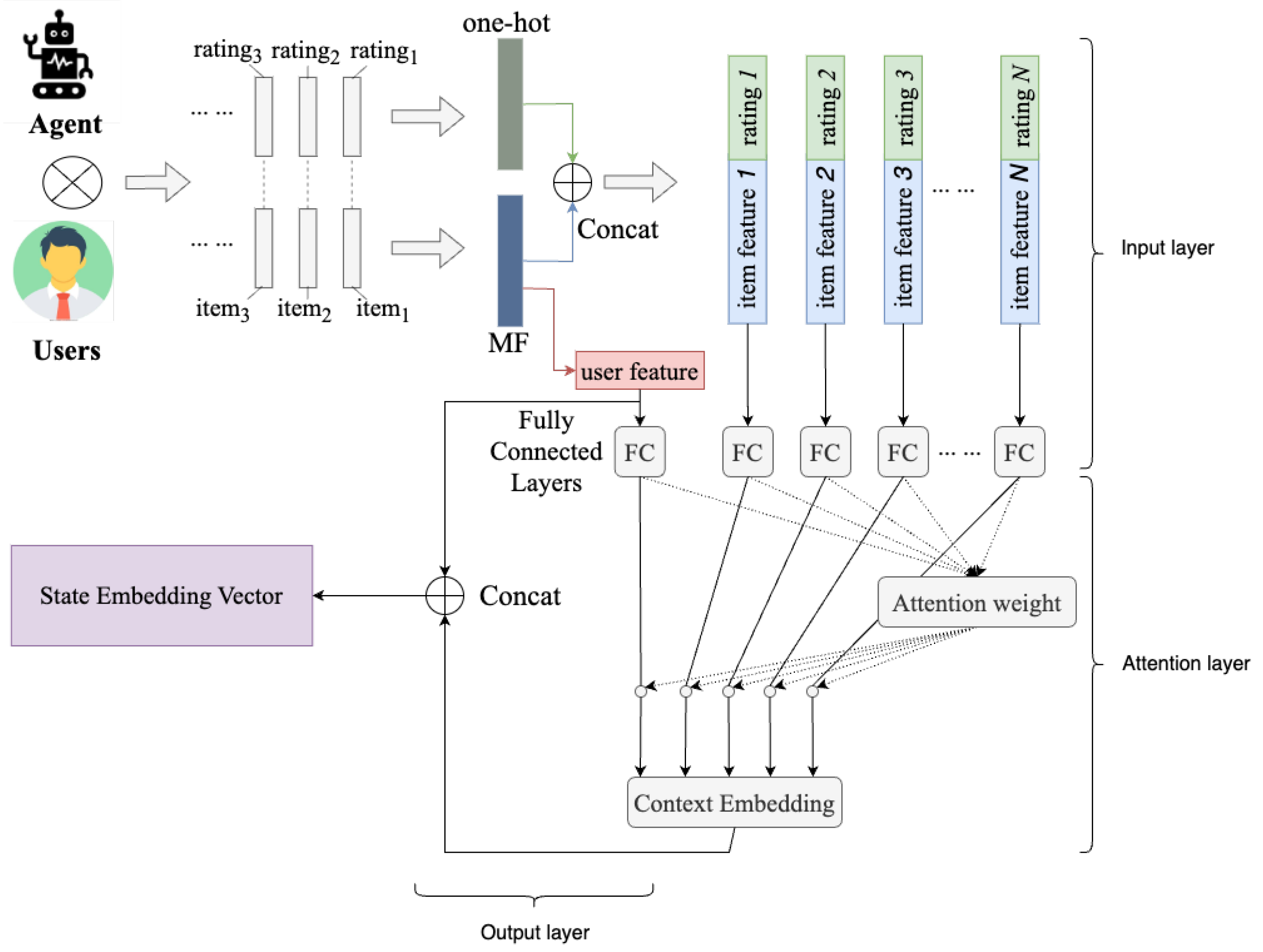

3.2. Attention-Based State Representation Model

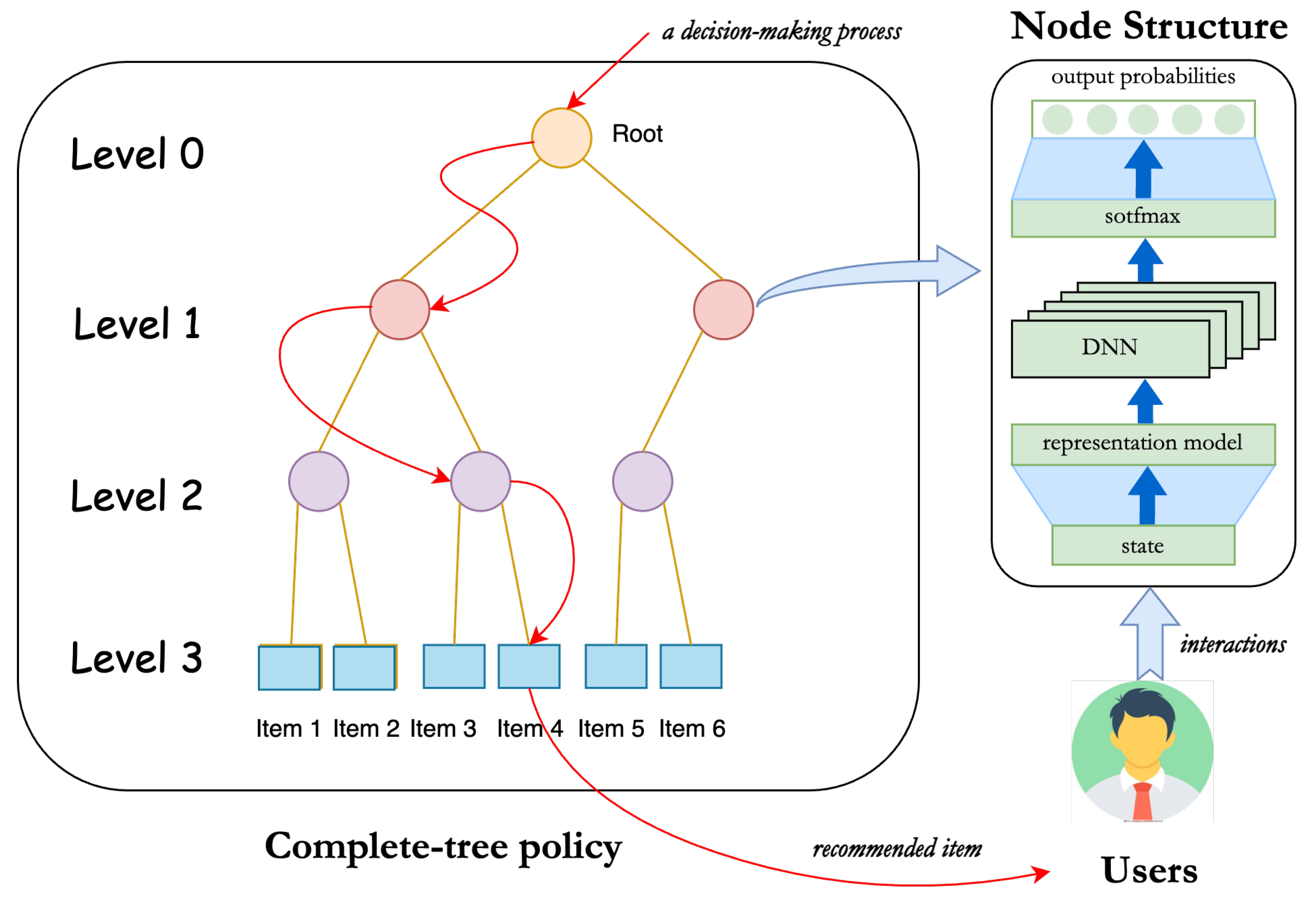

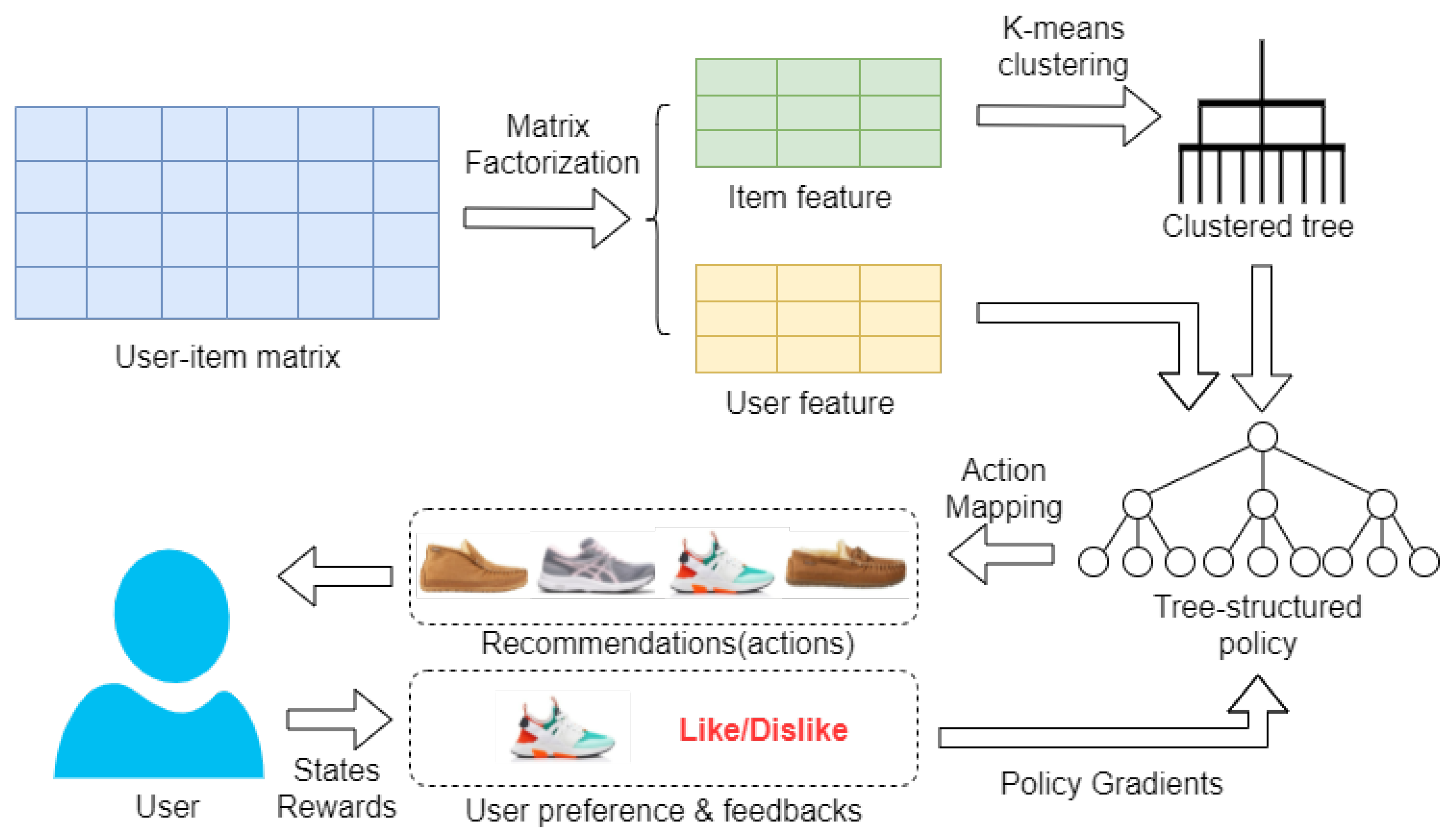

3.3. Efficient Tree-Structured Policy

- All-shared: The parameters of the state representation model are shared across all the tree nodes.

- Layer-shared: The parameters of the state representation model are shared within each layer; that is, all nodes in the same layer of the tree use the same state representation model.
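The difference between the two strategies can be illustrated by counting how many distinct state representation models a tree needs. The sketch below is only an illustration under assumed names (`assign_models`, the `(layer, position)` node key, and the model identifiers are hypothetical); it is not the authors' implementation.

```python
from typing import Dict, Tuple

Node = Tuple[int, int]  # (layer index, position within the layer)


def assign_models(depth: int, branching: int, strategy: str) -> Dict[Node, str]:
    """Map every non-leaf node of a complete tree to a state-model identifier."""
    assignment: Dict[Node, str] = {}
    for layer in range(depth):
        for pos in range(branching ** layer):
            if strategy == "all-shared":
                # One model for the whole tree.
                assignment[(layer, pos)] = "state_model"
            elif strategy == "layer-shared":
                # One model per layer, reused by every node in that layer.
                assignment[(layer, pos)] = f"state_model_layer{layer}"
            else:
                raise ValueError(f"unknown strategy: {strategy}")
    return assignment


def count_models(assignment: Dict[Node, str]) -> int:
    """Number of distinct state representation models in use."""
    return len(set(assignment.values()))


for name in ("all-shared", "layer-shared"):
    print(name, count_models(assign_models(depth=3, branching=4, strategy=name)))
```

In this sketch the all-shared strategy always needs one model, whereas the layer-shared strategy needs one model per tree layer.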

Algorithm 1. Decision making based on the complete tree policy
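As a rough illustration of decision making with a tree-structured policy, the sketch below walks a complete tree from the root to a leaf, sampling one child per layer, and maps the reached leaf to an item index. It is a generic sketch and not a reproduction of Algorithm 1: the heap-style node numbering, the `policy` callable, and the function name `choose_item` are all assumptions made for the example.

```python
import random
from typing import Callable, List, Sequence

# A policy maps (node index, state vector) to child probabilities (hypothetical interface).
Policy = Callable[[int, Sequence[float]], List[float]]


def choose_item(state: Sequence[float], policy: Policy, branching: int,
                depth: int, rng: random.Random) -> int:
    """Sample a root-to-leaf path in a complete tree and return the item index."""
    node = 0  # root; children of node n are branching*n + 1 ... branching*n + branching
    for _ in range(depth):
        probs = policy(node, state)
        child = rng.choices(range(branching), weights=probs, k=1)[0]
        node = branching * node + 1 + child
    # Leaves are numbered after all internal nodes (assumes branching >= 2).
    num_internal = (branching ** depth - 1) // (branching - 1)
    return node - num_internal


def uniform_policy(node: int, state: Sequence[float]) -> List[float]:
    """Placeholder policy that ignores the state and weights all children equally."""
    return [1.0 / 3] * 3


item = choose_item([0.0], uniform_policy, branching=3, depth=2, rng=random.Random(0))
print(item)  # an index in range(9)
```

Selecting one item this way takes one policy evaluation per layer, rather than one score per item in the catalogue.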

3.4. Learning Process

Algorithm 2. K-means clustering algorithm for building the complete tree policy
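The idea of building an item tree by repeated clustering can be sketched as follows. This is a simplified illustration and not Algorithm 2 itself: it uses plain Lloyd's k-means followed by a greedy capacity-limited assignment so that clusters stay near-equal in size, and the names `kmeans`, `balanced_split`, and `build_tree` are hypothetical. A truly complete tree is only obtained when the number of items is a power of the branching factor.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def _dist2(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Vector], k: int, iters: int = 20) -> List[List[float]]:
    """Plain Lloyd's k-means; centroids start from evenly spaced input points."""
    step = len(points) / k
    centroids = [list(points[int(i * step)]) for i in range(k)]
    for _ in range(iters):
        groups: List[List[Vector]] = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: _dist2(p, centroids[j]))
            groups[nearest].append(p)
        # Keep the old centroid when a cluster ends up empty.
        centroids = [[sum(c) / len(g) for c in zip(*g)] if g else old
                     for g, old in zip(groups, centroids)]
    return centroids


def balanced_split(ids: List[int], vectors: Dict[int, Vector], k: int) -> List[List[int]]:
    """Split ids into k groups of near-equal size, closest pairs first."""
    centroids = kmeans([vectors[i] for i in ids], k)
    capacity = math.ceil(len(ids) / k)
    groups: List[List[int]] = [[] for _ in range(k)]
    pairs = sorted((_dist2(vectors[i], c), i, j)
                   for i in ids for j, c in enumerate(centroids))
    placed = set()
    for _, i, j in pairs:
        if i not in placed and len(groups[j]) < capacity:
            groups[j].append(i)
            placed.add(i)
    return groups


def build_tree(ids: List[int], vectors: Dict[int, Vector], k: int):
    """Return nested lists; innermost lists hold at most k item ids."""
    if len(ids) <= k:
        return list(ids)
    return [build_tree(g, vectors, k) for g in balanced_split(ids, vectors, k) if g]


# Hypothetical one-dimensional item embeddings.
vectors = {i: [float(i)] for i in range(8)}
print(build_tree(list(vectors), vectors, k=2))
```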

Algorithm 3. Learning the complete tree policy
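A tree-structured policy can be trained with a policy-gradient update along the sampled root-to-leaf path. The sketch below shows a REINFORCE-style update (Williams, 1992) for a heavily simplified case in which each tree node holds a table of logits over its children and ignores the state. This simplification, along with the names `path_log_prob` and `reinforce_update`, is an assumption for illustration and is not the procedure of Algorithm 3.

```python
import math
from typing import Dict, List, Tuple

Path = List[Tuple[int, int]]  # (node index, chosen child) for each layer


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def path_log_prob(logits: Dict[int, List[float]], path: Path) -> float:
    """Log-probability of an item is the sum of log-probabilities along its path."""
    return sum(math.log(softmax(logits[node])[child]) for node, child in path)


def reinforce_update(logits: Dict[int, List[float]], path: Path,
                     ret: float, lr: float) -> None:
    """In-place gradient ascent on ret * log pi(path)."""
    for node, child in path:
        probs = softmax(logits[node])
        for j, p in enumerate(probs):
            # d log softmax(child) / d logit_j = 1[j == child] - p_j
            grad = (1.0 if j == child else 0.0) - p
            logits[node][j] += lr * ret * grad


# Binary tree of depth 2: root 0 with children 1 and 2 (hypothetical layout).
logits = {0: [0.0, 0.0], 1: [0.0, 0.0], 2: [0.0, 0.0]}
path = [(0, 1), (2, 0)]
before = path_log_prob(logits, path)
reinforce_update(logits, path, ret=1.0, lr=0.5)
print(before, path_log_prob(logits, path))
```

A positive return raises the probability of the sampled path, and nodes that are not on the path are left untouched.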

4. Results and Discussion

- RQ1: How does the proposed method perform compared with the state-of-the-art interactive recommendation methods?

- RQ2: Does our method improve learning efficiency?

- RQ3: Does the proposed state representation method improve the performance over the state-of-the-art methods?

- RQ4: How do the different parameter-sharing strategies affect the performance of our model?

4.1. Experimental Setting

4.1.1. Datasets

4.1.2. Evaluation Metrics

- Average Reward: Since IRS aims to maximize the total reward of the episode, the average reward is a straightforward performance measurement. We adopt the reward over the top-K suggested items. If the top-K suggested items contain the item that the user selects, the reward is set to the rating given by the user. Otherwise, the reward over the top-K suggested items is set to 0.

- Hit Ratio HR@K: HR@K measures the fraction of items that the user favors in the recommendation list. A hit is counted when the item that the user selects and favors is in the top-K suggested items.

- Mean Reciprocal Rank MRR@K: MRR@K measures the average reciprocal rank of the first relevant item in the top-K recommendation list.
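The three metrics can be sketched in code using their standard definitions. The sketch assumes one target item per recommendation step (the item the user selects and favors) and averages per-step values; the function names and the example data are hypothetical, and any rating threshold that defines "favors" is left outside the sketch.

```python
from typing import Hashable, List, Sequence


def reward_at_k(ranked: Sequence[Hashable], target: Hashable, rating: float, k: int) -> float:
    """User's rating if the target item is in the top-k list, otherwise 0."""
    return rating if target in ranked[:k] else 0.0


def hit_at_k(ranked: Sequence[Hashable], target: Hashable, k: int) -> float:
    """1 if the target item is in the top-k list, otherwise 0."""
    return 1.0 if target in ranked[:k] else 0.0


def reciprocal_rank_at_k(ranked: Sequence[Hashable], target: Hashable, k: int) -> float:
    """1 / rank of the target item within the top-k list, or 0 if it is absent."""
    top = list(ranked[:k])
    return 1.0 / (top.index(target) + 1) if target in top else 0.0


def mean(values: List[float]) -> float:
    return sum(values) / len(values) if values else 0.0


# Hypothetical evaluation steps: (ranked recommendation list, target item, rating).
steps = [
    (["a", "b", "c"], "b", 4.0),  # hit at rank 2
    (["d", "e", "f"], "z", 5.0),  # miss
]
k = 3
print("Reward@K", mean([reward_at_k(r, t, y, k) for r, t, y in steps]))
print("HR@K", mean([hit_at_k(r, t, k) for r, t, _ in steps]))
print("MRR@K", mean([reciprocal_rank_at_k(r, t, k) for r, t, _ in steps]))
```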

4.1.3. Compared Methods

- Popularity: Ranks the top-K most frequent items according to their popularity, measured by the number of ratings; a simple but widely adopted baseline method.

- SVD: Suggests recommendations based on singular value decomposition (SVD). For the IRS setting, the model is retrained after each user interaction and recommends the items with the highest predicted ratings.

- DDPG-KNN: A DDPG-based method that maps the discrete action space to a continuous one, then selects the K nearest items in the continuous space and picks the one with the maximum Q-value given by the critic network [23]. In our experiment, K is set to 1 and 0.1N.

- DQN-R: A DQN-based method that adopts a refined DQN to evaluate the Q-values of the items and chooses the item with the max Q-value [24].

- TPGR: Adopts a tree-structured policy and uses the policy gradient to optimize the tree-structured policy [5]. This is the state-of-the-art IRS approach and is similar to our proposed method.
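The selection step of the DDPG-KNN baseline described above can be sketched as a nearest-neighbour lookup followed by a critic-based choice. In the sketch, the actor's continuous output (`proto_action`), the item embeddings, and the critic (`q_value`) are hypothetical stand-ins; real implementations typically use neural networks and an approximate nearest-neighbour index.

```python
from typing import Callable, Dict, Sequence


def _dist2(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_select(proto_action: Sequence[float],
               item_embeddings: Dict[int, Sequence[float]],
               q_value: Callable[[int], float],
               k: int) -> int:
    """Pick the item with the highest Q-value among the k items nearest to proto_action."""
    nearest = sorted(item_embeddings,
                     key=lambda i: _dist2(item_embeddings[i], proto_action))[:k]
    return max(nearest, key=q_value)


# Hypothetical embeddings and critic scores.
embeddings = {0: [0.0, 0.0], 1: [1.0, 0.0], 2: [5.0, 5.0]}
scores = {0: 0.1, 1: 0.9, 2: 2.0}
proto = [0.1, 0.0]
for k in (1, 2, 3):
    print(k, knn_select(proto, embeddings, scores.get, k))
```

With K = 1 the critic has no influence and the nearest item is always returned; a larger K lets the critic overrule the actor at the cost of evaluating more candidates per decision.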

4.2. Performance Evaluation (RQ1)

4.3. Efficiency Evaluation (RQ2)

4.4. Influence of the Attention-Based State Representation (RQ3)

- Caser [51]: A popular CNN-based model for sequential recommendation by embedding the sequence with multiple convolutional filters to capture the user dynamics.

- ATEM [26]: An attention-based model for sequential recommendation. Compared to the proposed state representation model, ATEM ignores the user feature and feedback.

- TPGR’s state representation model [5]: An RNN-based state representation model that encodes the state with the final output of the RNN.

- DRR-att [4]: An attention-based state representation model that uses an attention mechanism and average pooling to obtain the user’s feature. Compared to DRR-att, our method introduces the user’s feedback and preprocesses the user and item features with a fully connected layer.
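To illustrate how attention differs from plain average pooling when summarizing an interaction history, the sketch below pools a list of item embeddings with dot-product attention against a query vector. It is a generic illustration only: the choice of dot-product scoring, the use of a user vector as the query, and the function names are assumptions, and the sketch omits the user feedback and fully connected preprocessing mentioned above.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(query: Sequence[float],
                   history: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Weight each history item by softmax(query . item) and sum the items."""
    scores = [sum(q * x for q, x in zip(query, item)) for item in history]
    weights = softmax(scores)
    dim = len(history[0])
    state = [sum(w * item[d] for w, item in zip(weights, history)) for d in range(dim)]
    return state, weights


def average_pool(history: Sequence[Sequence[float]]) -> List[float]:
    """Unweighted mean of the history items."""
    n = len(history)
    return [sum(col) / n for col in zip(*history)]


# Hypothetical two-dimensional item embeddings and user query.
history = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
state, weights = attention_pool([2.0, 0.0], history)
print(weights, state, average_pool(history))
```

When every item receives the same score, the attention weights are uniform and the result coincides with average pooling; otherwise items that match the query contribute more to the state.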

4.5. Effect of Different Parameter Sharing Strategies (RQ4)

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Experiment Details

References

- Zou, L.; Xia, L.; Gu, Y.; Zhao, X.; Liu, W.; Huang, J.X.; Yin, D. Neural Interactive Collaborative Filtering. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 25–30 July 2020; pp. 749–758.

- Zhao, X.; Zhang, W.; Wang, J. Interactive collaborative filtering. In Proceedings of the 22nd ACM International Conference on Information and Knowledge Management, San Francisco, CA, USA, 27 October–1 November 2013; pp. 1411–1420.

- Zhang, Q.; Cao, L.; Shi, C.; Niu, Z. Neural Time-Aware Sequential Recommendation by Jointly Modeling Preference Dynamics and Explicit Feature Couplings. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 5125–5137.

- Liu, F.; Tang, R.; Li, X.; Zhang, W.; Ye, Y.; Chen, H.; Guo, H.; Zhang, Y.; He, X. State representation modeling for deep reinforcement learning based recommendation. Knowl. Based Syst. 2020, 205, 106170.

- Chen, H.; Dai, X.; Cai, H.; Zhang, W.; Wang, X.; Tang, R.; Zhang, Y.; Yu, Y. Large-scale Interactive Recommendation with Tree-structured Policy Gradient. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 3312–3320.

- Zhao, X.; Zhang, L.; Ding, Z.; Xia, L.; Tang, J.; Yin, D. Recommendations with negative feedback via pairwise deep reinforcement learning. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 1040–1048.

- Wang, S.; Hu, L.; Wang, Y.; Sheng, Q.Z.; Orgun, M.; Cao, L. Modeling multi-purpose sessions for next-item recommendations via mixture-channel purpose routing networks. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; AAAI Press: Palo Alto, CA, USA, 2019; pp. 3771–3777.

- Wang, S.; Pasi, G.; Hu, L.; Cao, L. The era of intelligent recommendation: Editorial on intelligent recommendation with advanced AI and learning. IEEE Intell. Syst. 2020, 35, 3–6.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018.

- Yang, L.; Zheng, Q.; Pan, G. Sample complexity of policy gradient finding second-order stationary points. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 10630–10638.

- Yang, L.; Zheng, G.; Zhang, Y.; Zheng, Q.; Li, P.; Pan, G. On convergence of gradient expected Sarsa(λ). In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 10621–10629.

- Shi, J.C.; Yu, Y.; Da, Q.; Chen, S.Y.; Zeng, A.X. Virtual-taobao: Virtualizing real-world online retail environment for reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 4902–4909.

- Zou, L.; Xia, L.; Ding, Z.; Song, J.; Liu, W.; Yin, D. Reinforcement learning to optimize long-term user engagement in recommender systems. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2810–2818.

- Zhou, S.; Dai, X.; Chen, H.; Zhang, W.; Ren, K.; Tang, R.; He, X.; Yu, Y. Interactive recommender system via knowledge graph-enhanced reinforcement learning. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 25–30 July 2020; pp. 179–188.

- Cai, X.; Han, J.; Li, W.; Zhang, R.; Pan, S.; Yang, L. A Three-Layered Mutually Reinforced Model for Personalized Citation Recommendation. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 6026–6037.

- Zhao, X.; Xia, L.; Zhang, L.; Ding, Z.; Yin, D.; Tang, J. Deep reinforcement learning for page-wise recommendations. In Proceedings of the 12th ACM Conference on Recommender Systems, Vancouver, BC, USA, 2 October 2018; pp. 95–103.

- Afsar, M.M.; Crump, T.; Far, B. Reinforcement learning based recommender systems: A survey. ACM Comput. Surv. 2022, 55, 1–38.

- Wang, S.; Hu, L.; Cao, L.; Huang, X.; Lian, D.; Liu, W. Attention-based transactional context embedding for next-item recommendation. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence (AAAI 2018), New Orleans, LA, USA, 2–7 February 2018; pp. 2532–2539.

- Wang, S.; Cao, L.; Hu, L.; Berkovsky, S.; Huang, X.; Xiao, L.; Lu, W. Hierarchical attentive transaction embedding with intra- and inter-transaction dependencies for next-item recommendation. IEEE Intell. Syst. 2021, 36, 56–64.

- Wang, N.; Wang, S.; Wang, Y.; Sheng, Q.Z.; Orgun, M. Modelling local and global dependencies for next-item recommendations. In Proceedings of the International Conference on Web Information Systems Engineering, Amsterdam, The Netherlands, 20–24 October 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 285–300.

- Song, W.; Wang, S.; Wang, Y.; Wang, S. Next-item recommendations in short sessions. In Proceedings of the 15th ACM Conference on Recommender Systems, Amsterdam, The Netherlands, 27 September–1 October 2021; pp. 282–291.

- Zhang, J.; Hao, B.; Chen, B.; Li, C.; Chen, H.; Sun, J. Hierarchical reinforcement learning for course recommendation in MOOCs. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 435–442.

- Dulac-Arnold, G.; Evans, R.; van Hasselt, H.; Sunehag, P.; Lillicrap, T.; Hunt, J.; Mann, T.; Weber, T.; Degris, T.; Coppin, B. Deep reinforcement learning in large discrete action spaces. arXiv 2015, arXiv:1512.07679.

- Zheng, G.; Zhang, F.; Zheng, Z.; Xiang, Y.; Yuan, N.J.; Xie, X.; Li, Z. DRN: A deep reinforcement learning framework for news recommendation. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018; pp. 167–176.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Wang, S.; Hu, L.; Wang, Y.; Cao, L.; Sheng, Q.Z.; Orgun, M. Sequential recommender systems: Challenges, progress and prospects. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI 2019), Macao, China, 10–16 August 2019; pp. 6332–6338.

- Chen, X.; Li, S.; Li, H.; Jiang, S.; Qi, Y.; Song, L. Generative adversarial user model for reinforcement learning based recommendation system. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 1052–1061.

- Zou, L.; Xia, L.; Du, P.; Zhang, Z.; Bai, T.; Liu, W.; Nie, J.Y.; Yin, D. Pseudo Dyna-Q: A reinforcement learning framework for interactive recommendation. In Proceedings of the 13th International Conference on Web Search and Data Mining, Houston, TX, USA, 3–7 February 2020; pp. 816–824.

- Lei, T.; Zhang, Y.; Artzi, Y. Training RNNs as fast as CNNs. In Proceedings of the ICLR 2018, Vancouver, BC, Canada, 30 April–3 May 2018.

- Gao, R.; Xia, H.; Li, J.; Liu, D.; Chen, S.; Chun, G. DRCGR: Deep reinforcement learning framework incorporating CNN and GAN-based for interactive recommendation. In Proceedings of the 2019 IEEE International Conference on Data Mining (ICDM), Beijing, China, 8–11 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1048–1053.

- Sun, F.; Liu, J.; Wu, J.; Pei, C.; Lin, X.; Ou, W.; Jiang, P. BERT4Rec: Sequential Recommendation with Bidirectional Encoder Representations from Transformer. In Proceedings of the CIKM ’19: 28th ACM International Conference on Information and Knowledge Management, New York, NY, USA, 3–7 November 2019; pp. 1441–1450.

- Wang, S.; Cao, L.; Wang, Y.; Sheng, Q.Z.; Orgun, M.A.; Lian, D. A survey on session-based recommender systems. ACM Comput. Surv. (CSUR) 2021, 54, 1–38.

- Liu, H.; Cai, K.; Li, P.; Qian, C.; Zhao, P.; Wu, X. REDRL: A review-enhanced deep reinforcement learning model for interactive recommendation. Expert Syst. Appl. 2022, 213, 118926.

- Wang, S.; Hu, L.; Wang, Y.; He, X.; Sheng, Q.Z.; Orgun, M.A.; Cao, L.; Ricci, F.; Yu, P.S. Graph learning based recommender systems: A review. In Proceedings of the 30th International Joint Conference on Artificial Intelligence, Virtual, 19–26 August 2021; AAAI Press: Palo Alto, CA, USA, 2021; pp. 4644–4652.

- Wang, X.; Xu, Y.; He, X.; Cao, Y.; Wang, M.; Chua, T.S. Reinforced negative sampling over knowledge graph for recommendation. In Proceedings of the Web Conference 2020, Taipei, Taiwan, 20–24 April 2020; pp. 99–109.

- Wang, P.; Fan, Y.; Xia, L.; Zhao, W.X.; Niu, S.; Huang, J. KERL: A knowledge-guided reinforcement learning model for sequential recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 25–30 July 2020; pp. 209–218.

- Chen, X.; Huang, C.; Yao, L.; Wang, X.; Zhang, W. Knowledge-guided deep reinforcement learning for interactive recommendation. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–8.

- Xian, Y.; Fu, Z.; Muthukrishnan, S.; De Melo, G.; Zhang, Y. Reinforcement knowledge graph reasoning for explainable recommendation. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 285–294.

- Sallans, B.; Hinton, G.E. Reinforcement learning with factored states and actions. J. Mach. Learn. Res. 2004, 5, 1063–1088.

- Pazis, J.; Parr, R. Generalized value functions for large action sets. In Proceedings of the ICML, Washington, DC, USA, 28 June–2 July 2011.

- Bellemare, M.G.; Naddaf, Y.; Veness, J.; Bowling, M. The arcade learning environment: An evaluation platform for general agents. J. Artif. Intell. Res. 2013, 47, 253–279.

- Chandak, Y.; Theocharous, G.; Kostas, J.; Jordan, S.; Thomas, P. Learning action representations for reinforcement learning. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 941–950.

- Fu, M.; Agrawal, A.; Irissappane, A.A.; Zhang, J.; Huang, L.; Qu, H. Deep reinforcement learning framework for category-based item recommendation. IEEE Trans. Cybern. 2021, 52, 12028–12041.

- Mnih, A.; Hinton, G.E. A scalable hierarchical distributed language model. Adv. Neural Inf. Process. Syst. 2008, 21, 1081–1088.

- Funk, S. Netflix Update: Try This at Home. Available online: http://sifter.org/simon/journal/20061211.html (accessed on 9 June 2023).

- Williams, R.J. Simple statistical gradient-following algorithms for connectionist reinforcement learning. Mach. Learn. 1992, 8, 229–256.

- He, R.; McAuley, J. Ups and downs: Modeling the visual evolution of fashion trends with one-class collaborative filtering. In Proceedings of the 25th International Conference on World Wide Web, Montreal, QC, Canada, 11–15 April 2016; pp. 507–517.

- McAuley, J.; Targett, C.; Shi, Q.; Van Den Hengel, A. Image-based recommendations on styles and substitutes. In Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval, Santiago, Chile, 9–13 August 2015; pp. 43–52.

- Wang, S.; Xu, X.; Zhang, X.; Wang, Y.; Song, W. Veracity-aware and event-driven personalized news recommendation for fake news mitigation. In Proceedings of the ACM Web Conference 2022, Lyon, France, 25–29 April 2022; pp. 3673–3684.

- Wang, S.; Zhang, X.; Wang, Y.; Liu, H.; Ricci, F. Trustworthy recommender systems. arXiv 2022, arXiv:2208.06265.

- Tang, J.; Wang, K. Personalized top-n sequential recommendation via convolutional sequence embedding. In Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining, Marina Del Rey, CA, USA, 5–9 February 2018; pp. 565–573.

- Muja, M.; Lowe, D. Fast Library for Approximate Nearest Neighbors (FLANN). Available online: git://github.com/mariusmuja/flann.git or http://www.cs.ubc.ca/research/flann (accessed on 9 June 2023).

| Dataset | Instant Video | Baby | Musical Instruments |
|---|---|---|---|
| Number of Users | 122,609 | 175,826 | 98,959 |
| Number of Items | 8,229 | 8,256 | 17,380 |
| Number of Ratings | 145,983 | 228,861 | 125,044 |
| Number of Users in training set | 98,084 | 140,660 | 79,167 |
| Number of Users in testing set | 24,522 | 35,166 | 19,792 |

| Dataset | Metric | Popularity | SVD | DDPG-KNN (k = 1) | DDPG-KNN (k = 0.1N) | DQN-R | TPGR | ATRec | Improv. |
|---|---|---|---|---|---|---|---|---|---|
| Instant Video | Reward@10 | 0.00004 | 0.00041 | 0.00264 | 0.01619 | 0.05082 | 0.11041 | 0.15478 | 40.2% |
| | HR@10 | 0.00004 | 0.00119 | 0.00336 | 0.01807 | 0.05376 | 0.11782 | 0.17416 | 57.7% |
| | MRR@10 | 0.00001 | 0.00041 | 0.00165 | 0.00679 | 0.02117 | 0.04569 | 0.08836 | 93.4% |
| | Reward@30 | 0.00012 | 0.003 | 0.00567 | 0.04598 | 0.11228 | 0.16860 | 0.24399 | 44.4% |
| | HR@30 | 0.00016 | 0.00507 | 0.00713 | 0.05137 | 0.12150 | 0.18169 | 0.28041 | 54.3% |
| | MRR@30 | 0.00002 | 0.00075 | 0.00190 | 0.00884 | 0.02497 | 0.05023 | 0.09431 | 87.8% |
| Musical Instruments | Reward@10 | 0.00008 | 0.00056 | 0 | 0.00004 | 0.03542 | 0.05270 | 0.05575 | 4.8% |
| | HR@10 | 0.00010 | 0.00054 | 0 | 0.00005 | 0.03965 | 0.05998 | 0.06669 | 11.1% |
| | MRR@10 | 0.00003 | 0.00011 | 0 | 0.00002 | 0.00506 | 0.03754 | 0.02262 | −39.7% |
| | Reward@30 | 0.00013 | 0.00332 | 0.00048 | 0.00004 | 0.06618 | 0.07350 | 0.09943 | 35.3% |
| | HR@30 | 0.00015 | 0.00471 | 0.00060 | 0.00005 | 0.07196 | 0.08056 | 0.12180 | 51.1% |
| | MRR@30 | 0.00004 | 0.00034 | 0.00004 | 0.00002 | 0.00747 | 0.03859 | 0.02590 | −32.9% |
| Baby | Reward@10 | 0 | 0.00373 | 0.00019 | 0.00208 | 0.04811 | 0.06859 | 0.06842 | −0.00% |
| | HR@10 | 0 | 0.00054 | 0.00019 | 0.00216 | 0.05405 | 0.07514 | 0.08587 | 14.3% |
| | MRR@10 | 0 | 0.00086 | 0.00003 | 0.00057 | 0.01791 | 0.03523 | 0.03628 | 3.0% |
| | Reward@30 | 0.00001 | 0.00965 | 0.00019 | 0.00370 | 0.09720 | 0.12384 | 0.12885 | 4.0% |
| | HR@30 | 0.00003 | 0.01871 | 0.00019 | 0.00381 | 0.11064 | 0.13759 | 0.16719 | 21.5% |
| | MRR@30 | 0 | 0.00220 | 0.00003 | 0.00066 | 0.02142 | 0.03973 | 0.04311 | 9.3% |

| Method | Instant Video | Musical Instruments | Baby |
|---|---|---|---|
| DDPG-KNN (k = 1) | 0.56430 | 0.23432 | 1.42474 |
| DDPG-KNN (k = 0.1N) | 0.88891 | 1.15585 | 1.72415 |
| DQN-R | 0.64808 | 5.64286 | 1.75619 |
| TPGR | 0.07419 | 0.07971 | 0.06205 |
| ATRec | 0.05439 | 0.06201 | 0.04382 |
| Improv. | 36.4% | 28.5% | 41.6% |

| Method | Average Decision Time per Item (Instant Video) | Average Decision Time per Item (Musical Instruments) | Average Decision Time per Item (Baby) | Average Decision Time for Top-K Items (Instant Video) | Average Decision Time for Top-K Items (Musical Instruments) | Average Decision Time for Top-K Items (Baby) |
|---|---|---|---|---|---|---|
| TPGR | 0.00073 | 0.00072 | 0.00071 | 6.03045 | 12.66505 | 5.91112 |
| ATRec | 0.00939 | 0.01135 | 0.000814 | 0.00939 | 0.01135 | 0.000814 |

| Dataset | Metric | All-Shared | Layer-Shared |
|---|---|---|---|
| Instant Video | Reward@10 | 0.15478 | 0.11970 |
| | HR@10 | 0.15151 | 0.17416 |
| | MRR@10 | 0.08836 | 0.05442 |
| | Reward@30 | 0.24399 | 0.22471 |
| | HR@30 | 0.28041 | 0.25890 |
| | MRR@30 | 0.09431 | 0.06133 |
| | Average learning time per step | 0.05439 | 0.07010 |
| | Average decision time per step | 0.00939 | 0.01216 |
| Musical Instruments | Reward@10 | 0.05500 | 0.05191 |
| | HR@10 | 0.06776 | 0.06305 |
| | MRR@10 | 0.02333 | 0.02208 |
| | Reward@30 | 0.09998 | 0.10186 |
| | HR@30 | 0.12205 | 0.12486 |
| | MRR@30 | 0.02647 | 0.02556 |
| | Average learning time per step | 0.06201 | 0.08463 |
| | Average decision time per step | 0.01135 | 0.01593 |
| Baby | Reward@10 | 0.07272 | 0.06842 |
| | HR@10 | 0.08944 | 0.08587 |
| | MRR@10 | 0.03883 | 0.03628 |
| | Reward@30 | 0.12885 | 0.13162 |
| | HR@30 | 0.16719 | 0.17207 |
| | MRR@30 | 0.04311 | 0.04106 |
| | Average learning time per step | 0.04382 | 0.05972 |
| | Average decision time per step | 0.00814 | 0.01149 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shi, L.; Zhang, Q.; Wang, S.; Zhang, Z.; Zhou, B.; Wu, M.; Li, S. Efficient Tree Policy with Attention-Based State Representation for Interactive Recommendation. Appl. Sci. 2023, 13, 7726. https://doi.org/10.3390/app13137726
