Detection of Elbow OCD in the Ultrasound Image by Artificial Intelligence Using YOLOv8

Abstract

1. Introduction

2. Materials and Methods

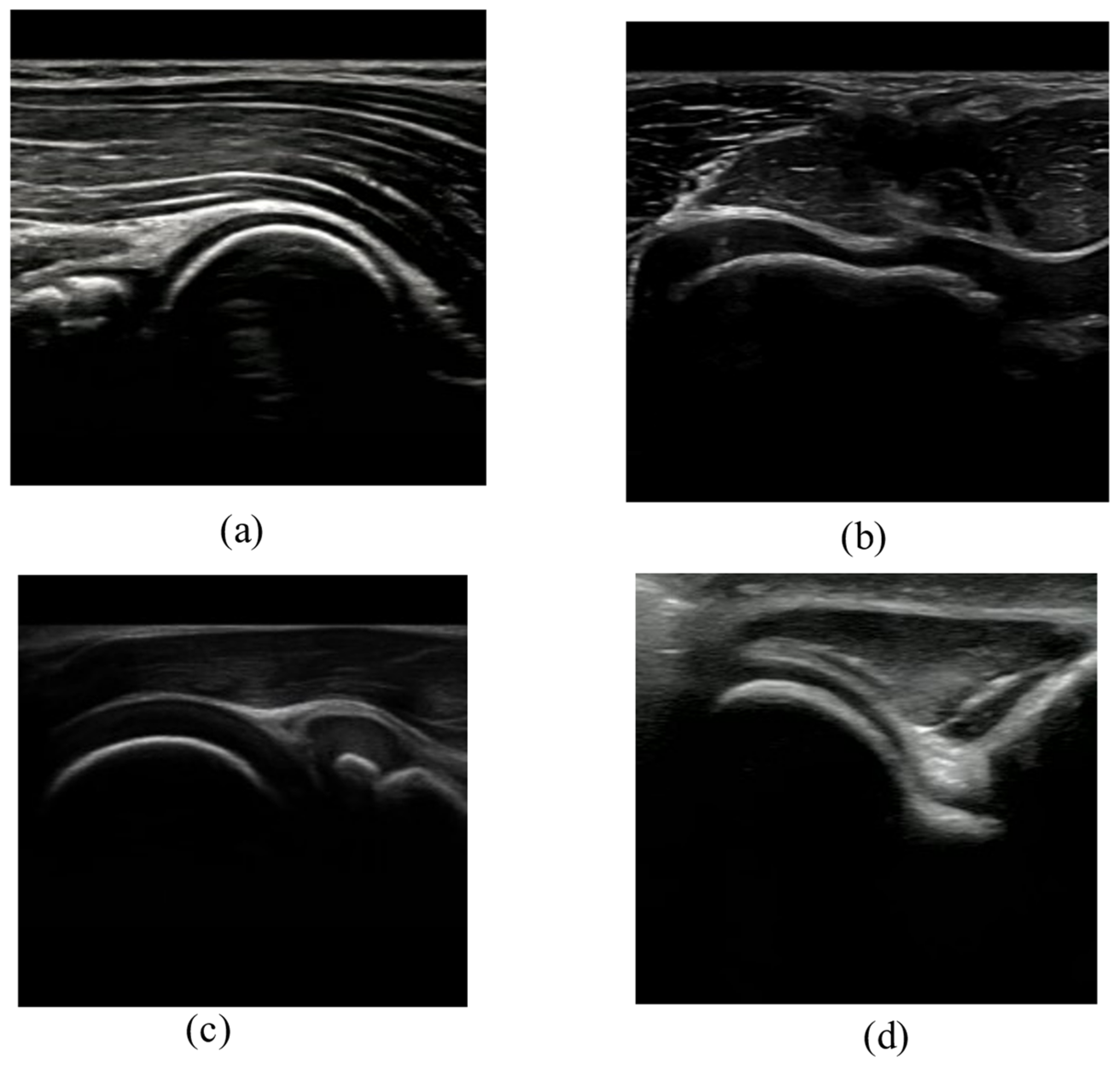

2.1. Data Collection

2.2. Model Training

2.2.1. Image Classification Task

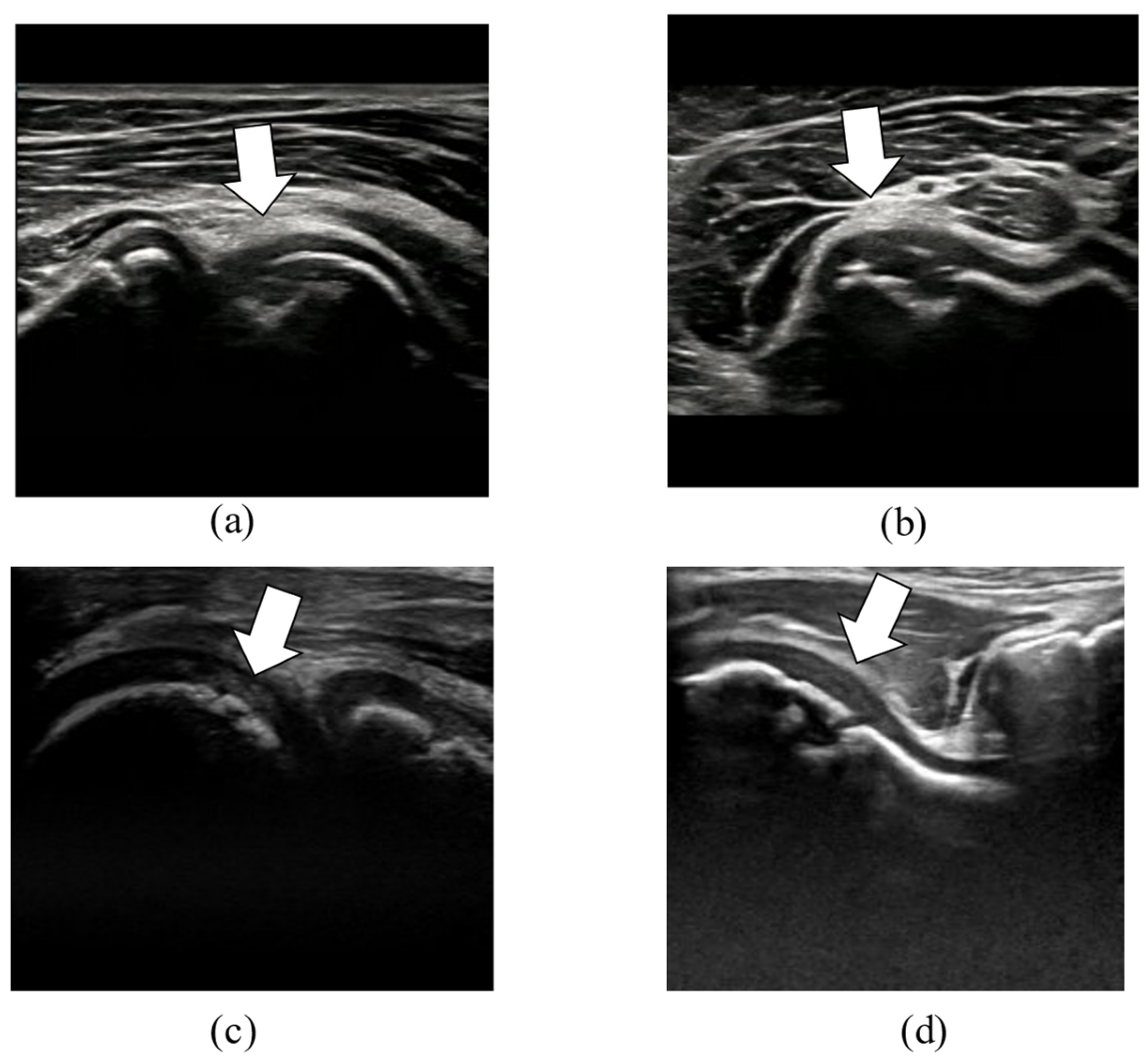

2.2.2. Object Detection Task

3. Results

3.1. Image Classification

3.2. Object Detection

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| View | Normal | OCD | Total |
|---|---|---|---|
| Anterior long | 439 | 270 | 709 |
| Anterior short | 278 | 256 | 534 |
| Posterior long | 425 | 362 | 787 |
| Posterior short | 200 | 200 | 400 |
| Total | 1342 | 1088 | 2430 |

| True Label / Predicted Label | Normal | OCD |
|---|---|---|
| Normal | 477 | 0 |
| OCD | 1 | 393 |
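The summary metrics implied by the Normal/OCD confusion matrix above can be recomputed directly from its four counts. The following is a minimal sketch, not the authors' evaluation code; the function name `binary_metrics` is hypothetical, and treating OCD as the positive class is an assumption of this example.

```python
def binary_metrics(tn, fp, fn, tp):
    """Return summary metrics for a 2x2 confusion matrix.

    Assumption of this sketch: OCD is the positive class, Normal the negative class.
    """
    total = tn + fp + fn + tp
    return {
        "n": total,
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),  # recall for OCD
        "specificity": tn / (tn + fp),  # recall for Normal
        "precision": tp / (tp + fp),    # positive predictive value for OCD
    }


# Counts read from the table: rows are true labels, columns are predicted labels.
metrics = binary_metrics(tn=477, fp=0, fn=1, tp=393)

for name, value in metrics.items():
    print(f"{name}: {value}" if name == "n" else f"{name}: {value:.4f}")
```

With these counts the only error is one OCD image predicted as Normal, so specificity and precision are 1.0, sensitivity is 393/394, and accuracy is 870/871.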

| True Label / Predicted Label | AL | AS | PL | PS |
|---|---|---|---|---|
| AL | 137 | 0 | 6 | 0 |
| AS | 0 | 109 | 0 | 0 |
| PL | 0 | 0 | 158 | 0 |
| PS | 0 | 0 | 0 | 80 |
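Per-class precision and recall for the four-class confusion matrix above can be derived from its row and column sums. The sketch below is illustrative only; the helper `per_class_metrics` is hypothetical, and reading AL, AS, PL and PS as the anterior long, anterior short, posterior long and posterior short views is an assumption based on the dataset table.

```python
# Rows are true labels, columns are predicted labels, in the order given by `labels`.
# Assumed meaning of the abbreviations: anterior/posterior, long/short view.
labels = ["AL", "AS", "PL", "PS"]
cm = [
    [137, 0, 6, 0],
    [0, 109, 0, 0],
    [0, 0, 158, 0],
    [0, 0, 0, 80],
]


def per_class_metrics(cm, labels):
    """Return overall accuracy and per-class precision/recall for a square matrix."""
    n = len(labels)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    per_class = {}
    for i, name in enumerate(labels):
        row_sum = sum(cm[i])                      # images whose true label is this class
        col_sum = sum(cm[r][i] for r in range(n))  # images predicted as this class
        per_class[name] = {
            "recall": cm[i][i] / row_sum if row_sum else 0.0,
            "precision": cm[i][i] / col_sum if col_sum else 0.0,
        }
    return correct / total, per_class


accuracy, per_class = per_class_metrics(cm, labels)
print(f"accuracy: {accuracy:.4f}")
for name, m in per_class.items():
    print(f"{name}: precision={m['precision']:.4f} recall={m['recall']:.4f}")
```

The only off-diagonal entry is the six AL images predicted as PL, which lowers AL recall to 137/143 and PL precision to 158/164; all other per-class values are 1.0.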

| Model | Parameters (M) | mAP(50) | mAP(50–95) | Speed (ms/pic) | FLOPs (G) |
|---|---|---|---|---|---|
| YOLOv8n | 3.0 | 0.994 | 0.787 | 2.9 | 8.2 |
| YOLOv8m | 25.8 | 0.995 | 0.782 | 13.7 | 79.1 |
| YOLOv5n | 1.8 | 0.988 | 0.666 | 8.2 | 4.1 |
| YOLOv5m | 20.8 | 0.993 | 0.714 | 12.4 | 47.9 |
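The per-image inference times in the model comparison above can be restated as throughput, which is often easier to relate to real-time ultrasound use. This is a small arithmetic sketch, assuming the speed column is the mean time per image in milliseconds; the names `speed_ms` and `images_per_second` are hypothetical, and the resulting figures would only apply to the hardware used for the reported measurements.

```python
# Per-image inference time in milliseconds, copied from the speed column of the table.
speed_ms = {
    "YOLOv8n": 2.9,
    "YOLOv8m": 13.7,
    "YOLOv5n": 8.2,
    "YOLOv5m": 12.4,
}


def images_per_second(ms_per_image):
    """Convert a per-image latency in milliseconds to images per second."""
    return 1000.0 / ms_per_image


throughput = {model: images_per_second(ms) for model, ms in speed_ms.items()}
fastest = max(throughput, key=throughput.get)

for model, fps in throughput.items():
    print(f"{model}: {fps:.1f} images/s")
print(f"fastest: {fastest}")
```

Under that assumption, YOLOv8n has the lowest latency in the table and therefore the highest throughput of the four models.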

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Inui, A.; Mifune, Y.; Nishimoto, H.; Mukohara, S.; Fukuda, S.; Kato, T.; Furukawa, T.; Tanaka, S.; Kusunose, M.; Takigami, S.; Ehara, Y.; Kuroda, R. Detection of Elbow OCD in the Ultrasound Image by Artificial Intelligence Using YOLOv8. Appl. Sci. 2023, 13, 7623. https://doi.org/10.3390/app13137623
