From Scores to Predictions in Multi-Label Classification: Neural Thresholding Strategies

Abstract

1. Introduction

- We designed novel neural network-based thresholding models, called ThresNets. Experiments on synthetic and real datasets show that, in terms of classification metrics, they compare favorably with traditional thresholding methods, and they achieve this with space complexity that is linear in the number of labels. One of the proposed architectural variants can be initialized with threshold values obtained from traditional methods, which allows knowledge transfer between the two approaches. The method does not depend on the source of the label scores: it gave positive results on scores from both tested third-party scorers. A hybrid approach that combines neural and traditional thresholding strategies achieves the best macro-averaged F-measure on the tested real datasets.

- We draw a connection between classic thresholding techniques and neural network models by showing that our method can be seen as a neural implementation and a generalized version of traditional per-label optimized thresholding.

- This generalized thresholding method bases the final prediction for a given label not only on the score for that label but also on all of the other scores in the score vector. This gives it a previously unavailable ability to recover the classification system from mis-scoring situations. This ability is showcased on concrete examples from real datasets. It was also measured empirically on artificial multi-label score datasets created with a simple generator coupled with a controlled score-corruption module.
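The relationship between per-label thresholds and a threshold that depends on the whole score vector can be illustrated with a minimal sketch. This is not the authors' ThresNet architecture: the class `ContextualThreshold`, its low-rank layout (`w_in`, `w_out`, `hidden_dim`), and the hand-set weights in the demo are hypothetical and chosen only to show three properties named in the list above: reduction to classic per-label thresholding, initialization from existing thresholds, and a parameter count that grows linearly with the number of labels.

```python
import numpy as np


def per_label_threshold(scores, thresholds):
    """Classic per-label rule: label j is predicted iff scores[j] >= thresholds[j]."""
    return scores >= thresholds


class ContextualThreshold:
    """Hypothetical generalization (an assumption, not the paper's model).

    Each label's threshold is a base value plus a correction computed from
    the whole score vector through a small bottleneck of size hidden_dim.
    """

    def __init__(self, base_thresholds, hidden_dim=2, seed=0):
        rng = np.random.default_rng(seed)
        n_labels = base_thresholds.shape[0]
        # Base thresholds can come from any traditional method.
        self.base = base_thresholds.astype(float).copy()
        self.w_in = rng.normal(scale=0.1, size=(n_labels, hidden_dim))
        # Zero output weights: at initialization the model equals the classic rule.
        self.w_out = np.zeros((hidden_dim, n_labels))

    def predict(self, scores):
        hidden = np.maximum(scores @ self.w_in, 0.0)  # ReLU bottleneck
        thresholds = self.base + hidden @ self.w_out  # score-dependent thresholds
        return scores >= thresholds

    def n_parameters(self):
        # n_labels * (1 + 2 * hidden_dim): linear in the number of labels.
        return self.base.size + self.w_in.size + self.w_out.size


scores = np.array([[0.9, 0.2, 0.0],
                   [0.1, 0.6, 0.3]])
base = np.array([0.5, 0.5, 0.5])

model = ContextualThreshold(base, hidden_dim=2)
classic = per_label_threshold(scores, base)
at_init = model.predict(scores)  # identical to the classic rule

# Hand-set weights (illustration only): a high score on label 0 lowers the
# threshold of label 2, so label 2 can be predicted even with a zero score.
model.w_in[:] = 0.0
model.w_in[0, 0] = 1.0
model.w_out[0, 2] = -1.0
recovered = model.predict(scores)
print(classic[0], recovered[0])
```

In the first example row, the classic rule rejects the third label because its score is zero, while the contextual rule accepts it because the first label scored highly. In a trained model such dependencies would be learned from data rather than set by hand.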

2. Problem Statement

2.1. Large-Scale Multi-Label Classification

2.2. Two Phases of Multi-Label Classifiers

- : scoring phase

- : thresholding phase

2.3. Thresholding Optimization

2.4. Evaluation Metrics

3. Related Works

3.1. Traditional Thresholding Methods

3.1.1. S-Cut

3.1.2. CS-Cut

3.1.3. R-Cut

3.1.4. DS-Cut

3.1.5. P-Cut

3.1.6. Scaled Versions

3.2. Newer Approaches to Obtaining Multi-Label Predictions

4. Neural Network Models for Thresholding Prediction Scores

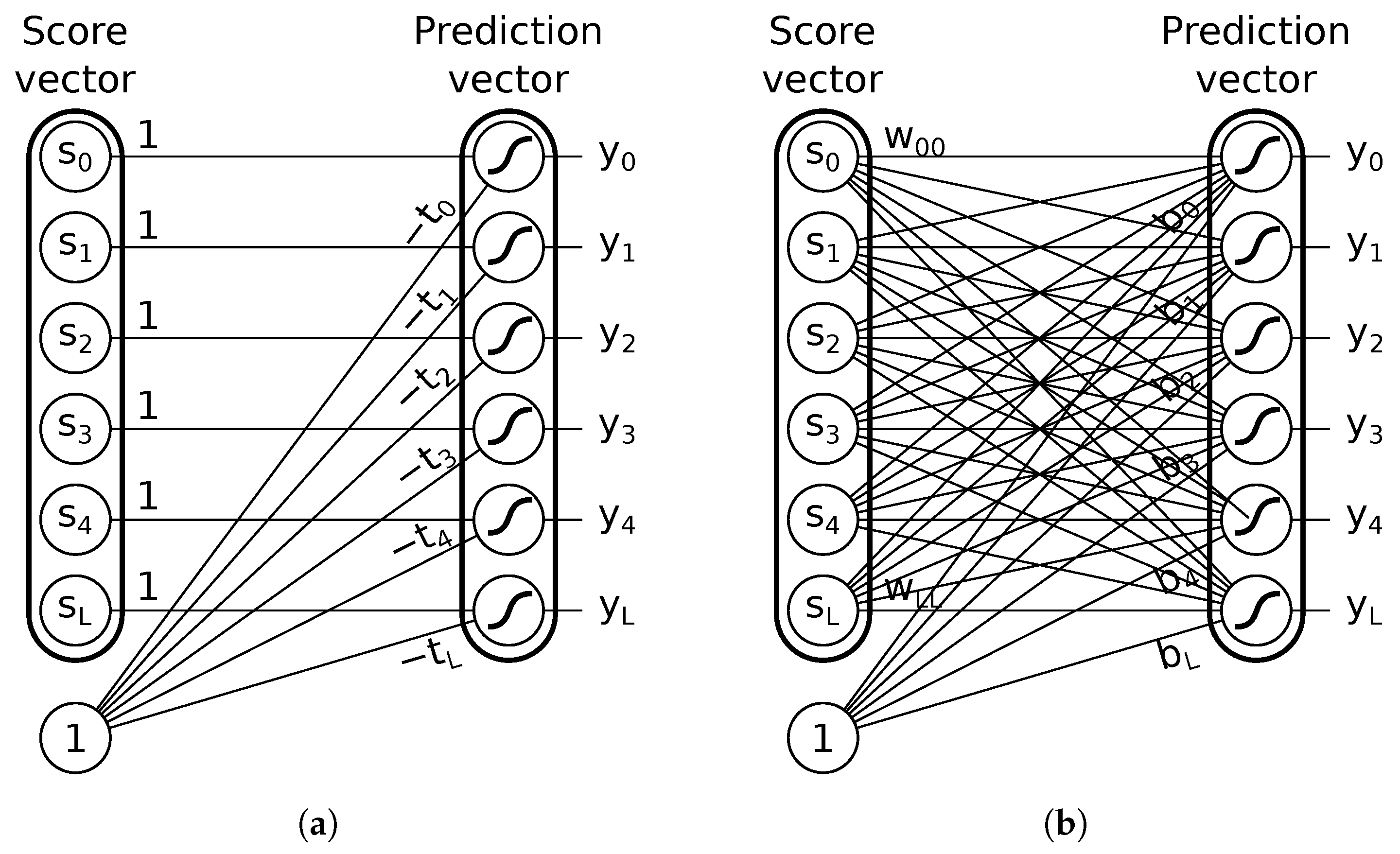

4.1. From Classic Strategies to Neural Networks

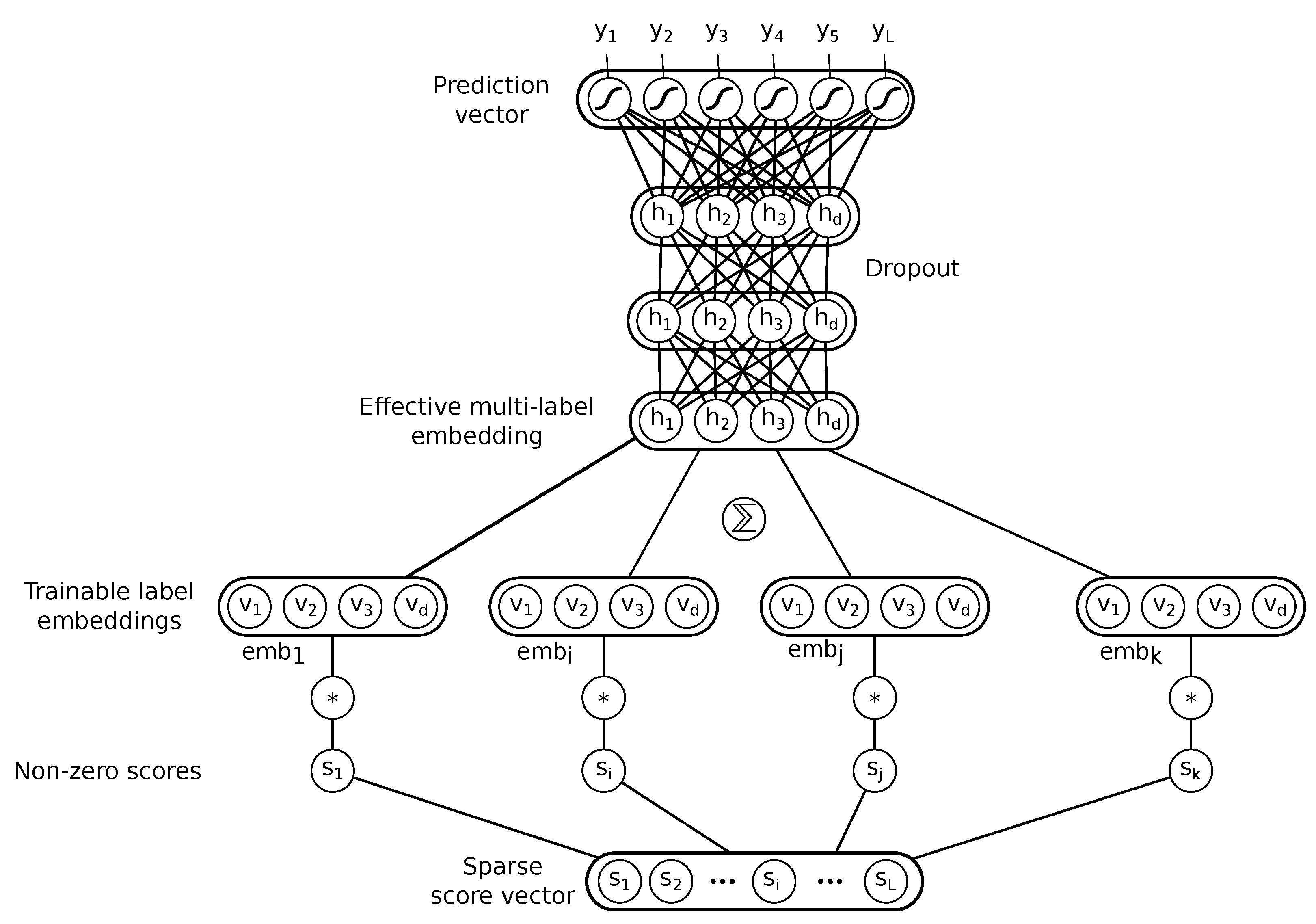

4.2. ThresNet—Neural Thresholding Model with Label Embeddings

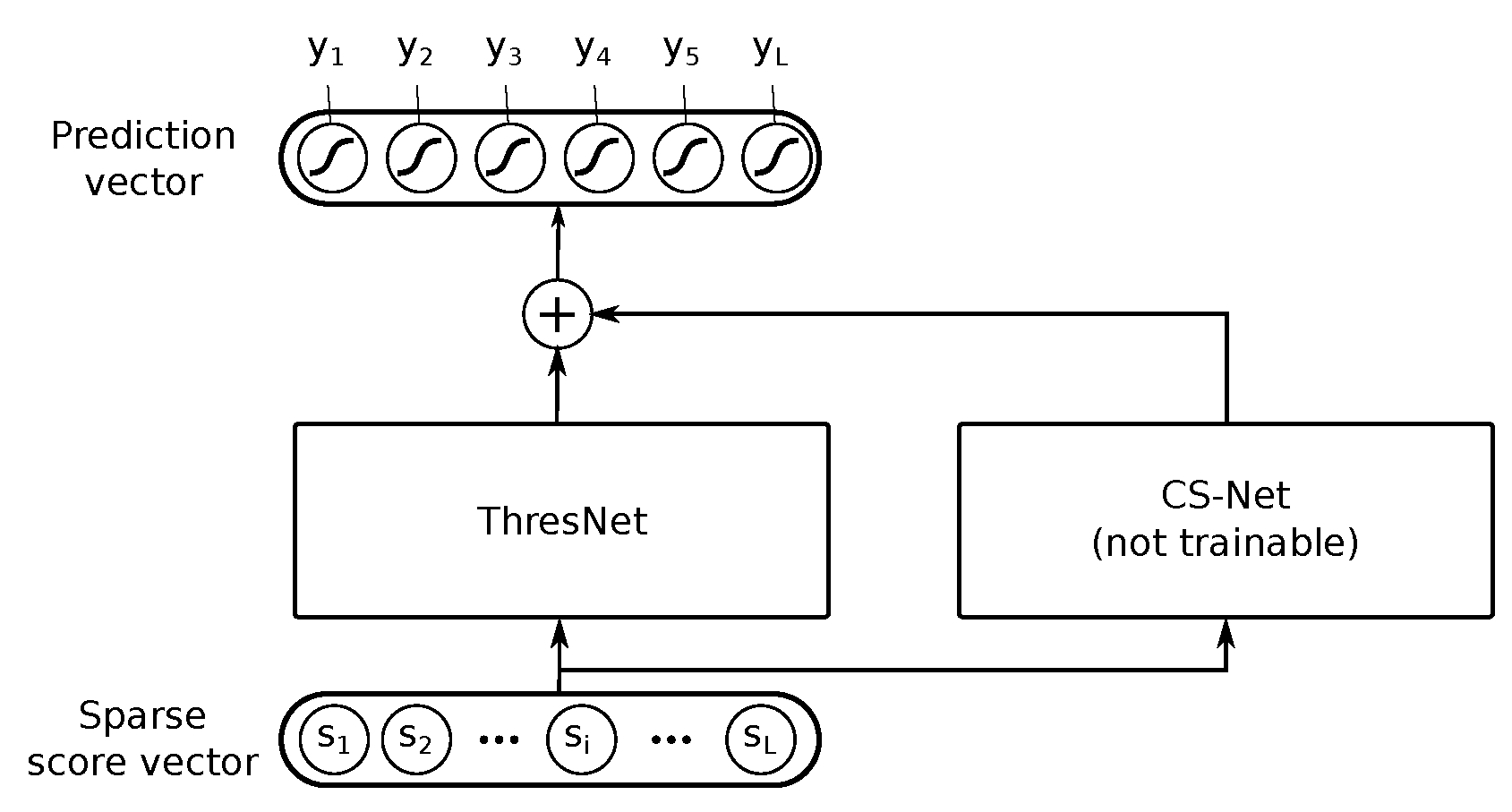

4.3. ThresNetCSS—A Residual Variant

4.4. Network Training

5. Experiments and Results

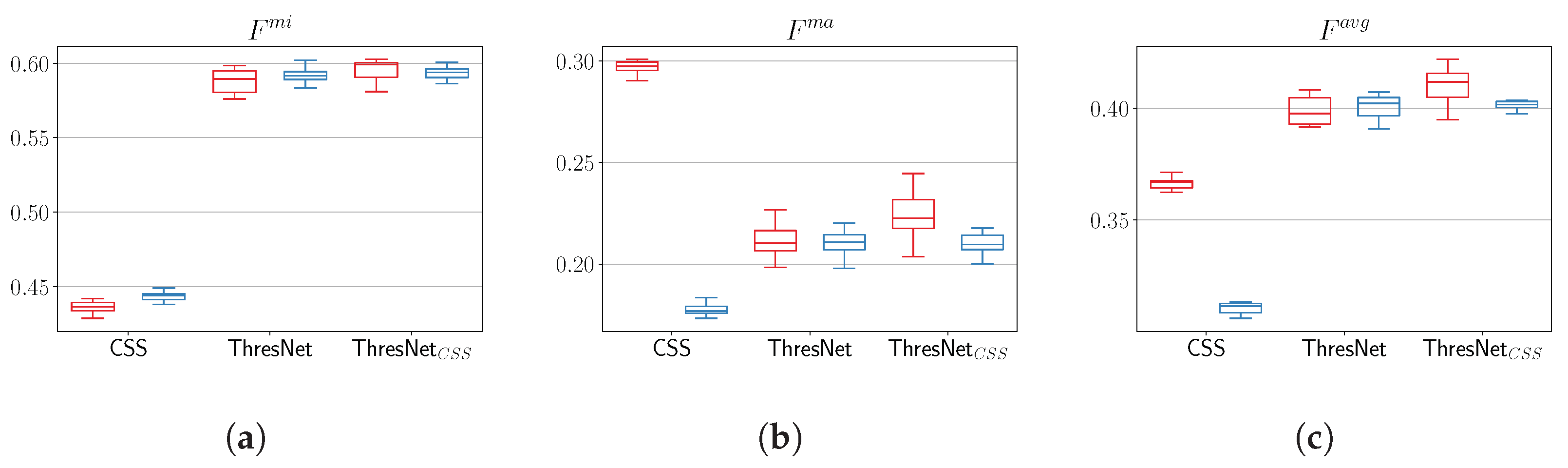

5.1. Synthetic Scores

5.1.1. Score Generator

5.1.2. Results

5.2. Real Datasets

5.2.1. Datasets

5.2.2. Methods

5.2.3. Quantitative Results

5.2.4. Qualitative Results

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| k-NN | k-Nearest Neighbors |
| EURLex4K | Eurlex dataset |
| FNR | False Negative Ratio |
| FPR | False Positive Ratio |
| LEML | Low-rank Empirical risk minimization for Multi-Label Learning |
| MLC | Multi-Label Classification |
| SimpleWiki2K | Simple English Wikipedia Dataset |
| XMLC | eXtreme Multi-Label Classification |


| Dataset | Feature Dims | Label Dims | Training Examples | Testing Examples | Avg. Examples per Label | Avg. Labels per Example |
|---|---|---|---|---|---|---|
| SimpleWiki2K | 97,179 | 1849 | 60,744 | 6761 | 52.60 | 1.60 |
| EURLex4K | 5000 | 3956 | 15,539 | 3809 | 20.86 | 5.31 |

| Thresholding Method | k-NN Fmi | k-NN Fma | k-NN Favg | LEML Fmi | LEML Fma | LEML Favg |
|---|---|---|---|---|---|---|
| CSS (single) | 0.434 | 0.299 | 0.367 | 0.429 | 0.168 | 0.299 |
| ThresNet (single) | 0.556 | 0.222 | 0.389 | 0.602 | 0.169 | 0.386 |
| ThresNetCSS (single) | 0.592 | 0.235 | 0.413 | 0.603 | 0.174 | 0.389 |
| CSS+ThresNet (single) | 0.444 | 0.297 | 0.371 | 0.470 | 0.189 | 0.329 |
| CSS+ThresNetCSS (single) | 0.449 | 0.304 | 0.377 | 0.472 | 0.193 | 0.332 |
| CSS (ensemble) | 0.442 | 0.308 | 0.375 | 0.434 | 0.177 | 0.306 |
| ThresNet (ensemble) | 0.602 | 0.216 | 0.409 | 0.622 | 0.165 | 0.394 |
| ThresNetCSS (ensemble) | 0.608 | 0.236 | 0.422 | 0.622 | 0.169 | 0.396 |
| CSS+ThresNet (ensemble) | 0.459 | 0.314 | 0.387 | 0.500 | 0.210 | 0.355 |
| CSS+ThresNetCSS (ensemble) | 0.467 | 0.320 | 0.393 | 0.502 | 0.213 | 0.358 |
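The Favg column in this table appears to be the arithmetic mean of Fmi and Fma. That reading is an inference from the reported numbers, not a definition stated here, so the following sketch only checks it on a few rows copied from the k-NN columns. The helper name `mean_f` is hypothetical.

```python
# Rows copied from the k-NN columns of the table: (method, Fmi, Fma, Favg).
rows = [
    ("CSS (single)", 0.434, 0.299, 0.367),
    ("ThresNetCSS (single)", 0.592, 0.235, 0.413),
    ("CSS (ensemble)", 0.442, 0.308, 0.375),
    ("CSS+ThresNetCSS (ensemble)", 0.467, 0.320, 0.393),
]


def mean_f(f_micro, f_macro):
    """Arithmetic mean of the micro- and macro-averaged F-measures (assumed definition)."""
    return (f_micro + f_macro) / 2.0


# Largest disagreement between the recomputed mean and the reported Favg.
# The inputs are rounded to three decimals, so gaps up to about 0.001 are expected.
max_gap = max(abs(mean_f(fmi, fma) - favg) for _, fmi, fma, favg in rows)
print(f"largest gap between recomputed and reported Favg: {max_gap:.4f}")
```

Under this reading, Favg rewards methods that balance micro- and macro-averaged quality, which would explain why a method with the best Fmi does not necessarily have the best Fma.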

| Thresholding Method | k-NN Fmi | k-NN Fma | k-NN Favg | LEML Fmi | LEML Fma | LEML Favg |
|---|---|---|---|---|---|---|
| CSS (single) | 0.344 | 0.211 | 0.278 | 0.425 | 0.166 | 0.295 |
| ThresNet (single) | 0.433 | 0.137 | 0.285 | 0.469 | 0.141 | 0.305 |
| ThresNetCSS (single) | 0.441 | 0.182 | 0.311 | 0.467 | 0.142 | 0.305 |
| CSS+ThresNet (single) | 0.331 | 0.206 | 0.268 | 0.446 | 0.167 | 0.307 |
| CSS+ThresNetCSS (single) | 0.339 | 0.210 | 0.274 | 0.447 | 0.169 | 0.308 |
| CSS (ensemble) | 0.356 | 0.219 | 0.287 | 0.428 | 0.166 | 0.297 |
| ThresNet (ensemble) | 0.464 | 0.144 | 0.304 | 0.467 | 0.130 | 0.299 |
| ThresNetCSS (ensemble) | 0.480 | 0.152 | 0.316 | 0.479 | 0.139 | 0.309 |
| CSS+ThresNet (ensemble) | 0.354 | 0.217 | 0.285 | 0.445 | 0.171 | 0.308 |
| CSS+ThresNetCSS (ensemble) | 0.353 | 0.215 | 0.284 | 0.450 | 0.173 | 0.311 |

| Label Name | k-NN Score | Scaled Score | CSS Prediction | ThresNetCSS Prediction |
|---|---|---|---|---|
| Living people | 3.778 | 1.0 | FP | TN |
| Sportspeople stubs | 3.778 | 1.0 | FP | TN |
| Footballers from Tokyo | 0.153 | 0.041 | TN | TN |
| Japan stubs | 0.124 | 0.033 | TN | TN |
| North Korean footballers | 0.099 | 0.026 | TN | TN |
| Cities in Japan | 0.076 | 0.02 | TN | TN |
| Lists of Japanese football players | 0 | 0 | FN | TP |
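The scaled scores in this table are consistent with dividing each k-NN score by the largest score in the same score vector. This is an inference from the numbers, not a stated definition, and the function name `scale_by_max` is hypothetical. A minimal check on the values copied from the table:

```python
def scale_by_max(scores):
    """Divide every score by the largest score in the vector (assumed scaling rule)."""
    top = max(scores)
    if top <= 0:
        # Nothing to scale by; return zeros rather than dividing by zero.
        return [0.0 for _ in scores]
    return [s / top for s in scores]


# k-NN scores and reported scaled scores, copied row by row from the table.
knn_scores = [3.778, 3.778, 0.153, 0.124, 0.099, 0.076, 0.0]
reported = [1.0, 1.0, 0.041, 0.033, 0.026, 0.02, 0.0]

scaled = scale_by_max(knn_scores)

# The table values are rounded, so agreement is only expected to about 0.001.
largest_gap = max(abs(a - b) for a, b in zip(scaled, reported))
print(f"largest gap between recomputed and reported scaled scores: {largest_gap:.4f}")
```

Under this scaling, the two top-scored labels both map to 1.0, so a rule that looks at each scaled score in isolation cannot tell them apart from a confident true positive, while the last label with a score of 0 can only be recovered by a rule that also looks at the rest of the score vector.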

| Label Name | k-NN Score | Scaled Score | CSS Prediction | ThresNetCSS Prediction |
|---|---|---|---|---|
| Living people | 4.79 | 1.0 | FP | TN |
| Sportspeople stubs | 4.79 | 1.0 | FP | TN |
| Sports stubs | 2.961 | 0.618 | FN | TP |
| Uruguayan footballers | 0.17 | 0.035 | TN | TN |
| Croatian footballers | 0.158 | 0.033 | TN | TN |
| Geography stubs | 0.067 | 0.014 | TN | TN |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Draszawka, K.; Szymański, J. From Scores to Predictions in Multi-Label Classification: Neural Thresholding Strategies. Appl. Sci. 2023, 13, 7591. https://doi.org/10.3390/app13137591
