Calibration and Multiple Reliability Assessments of a Scrum Machine Instrumented to Measure Force

Abstract

Featured Application

1. Introduction

- Present the basic assembly of our instrumented scrum machine to facilitate replication by other researchers and coaches;

- Present our calibration procedures for replication;

- Present the reliability of our machine, as no novel instrument can be deemed worthwhile without at least basic reliability.

2. Materials and Methods and Results

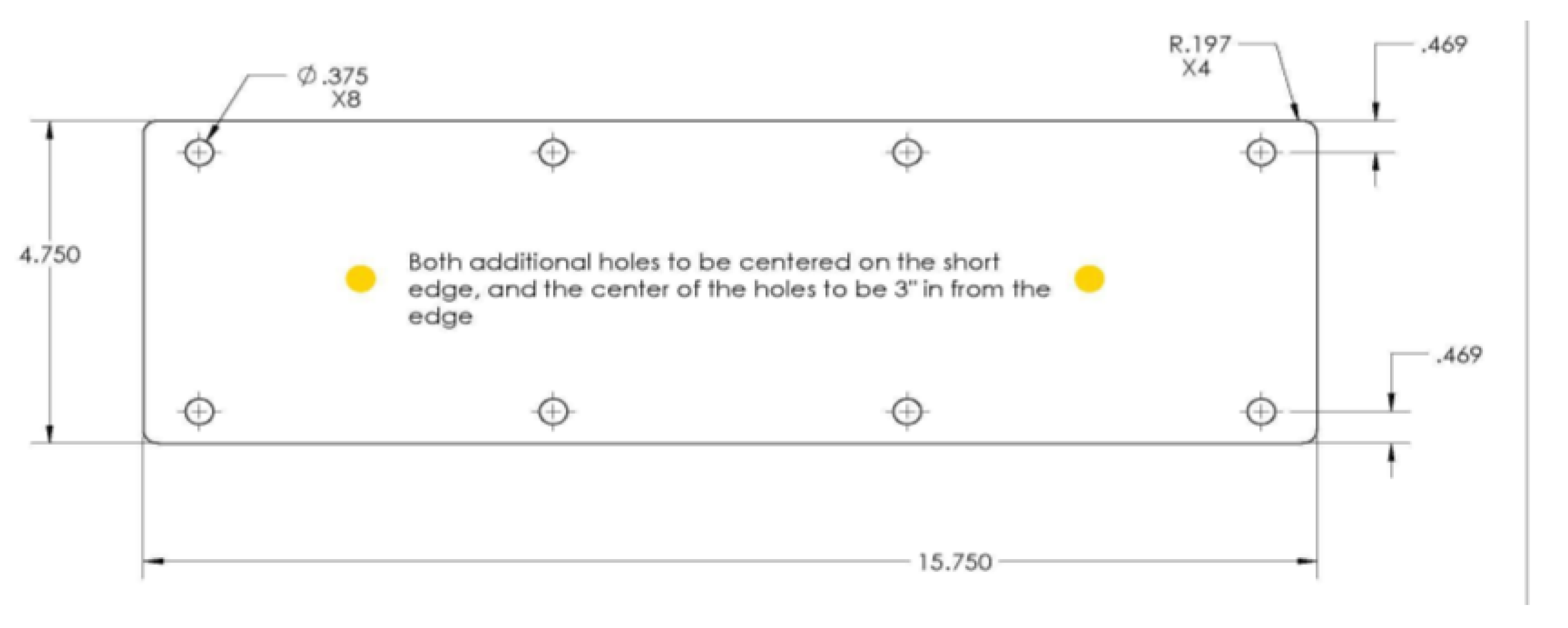

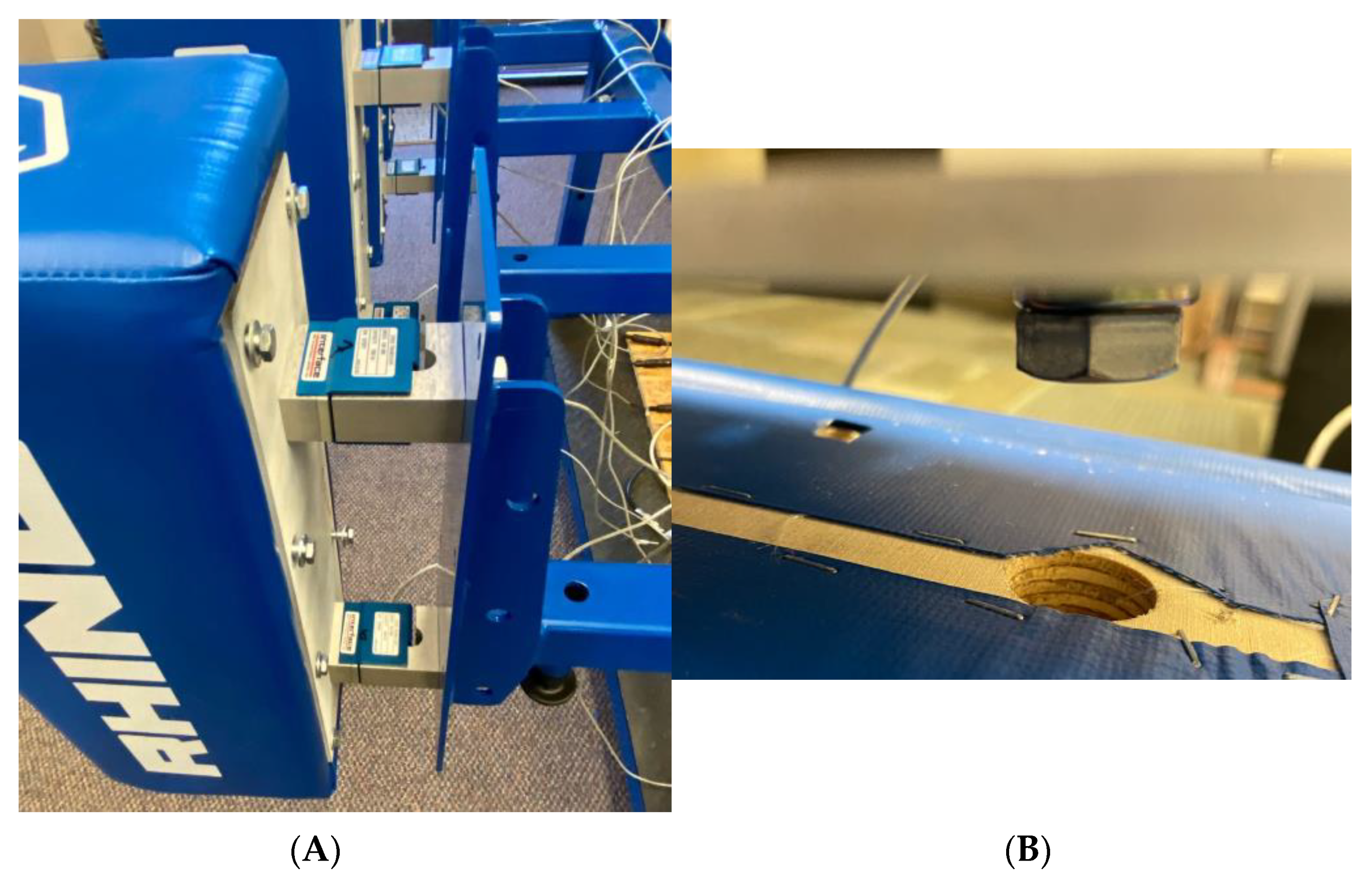

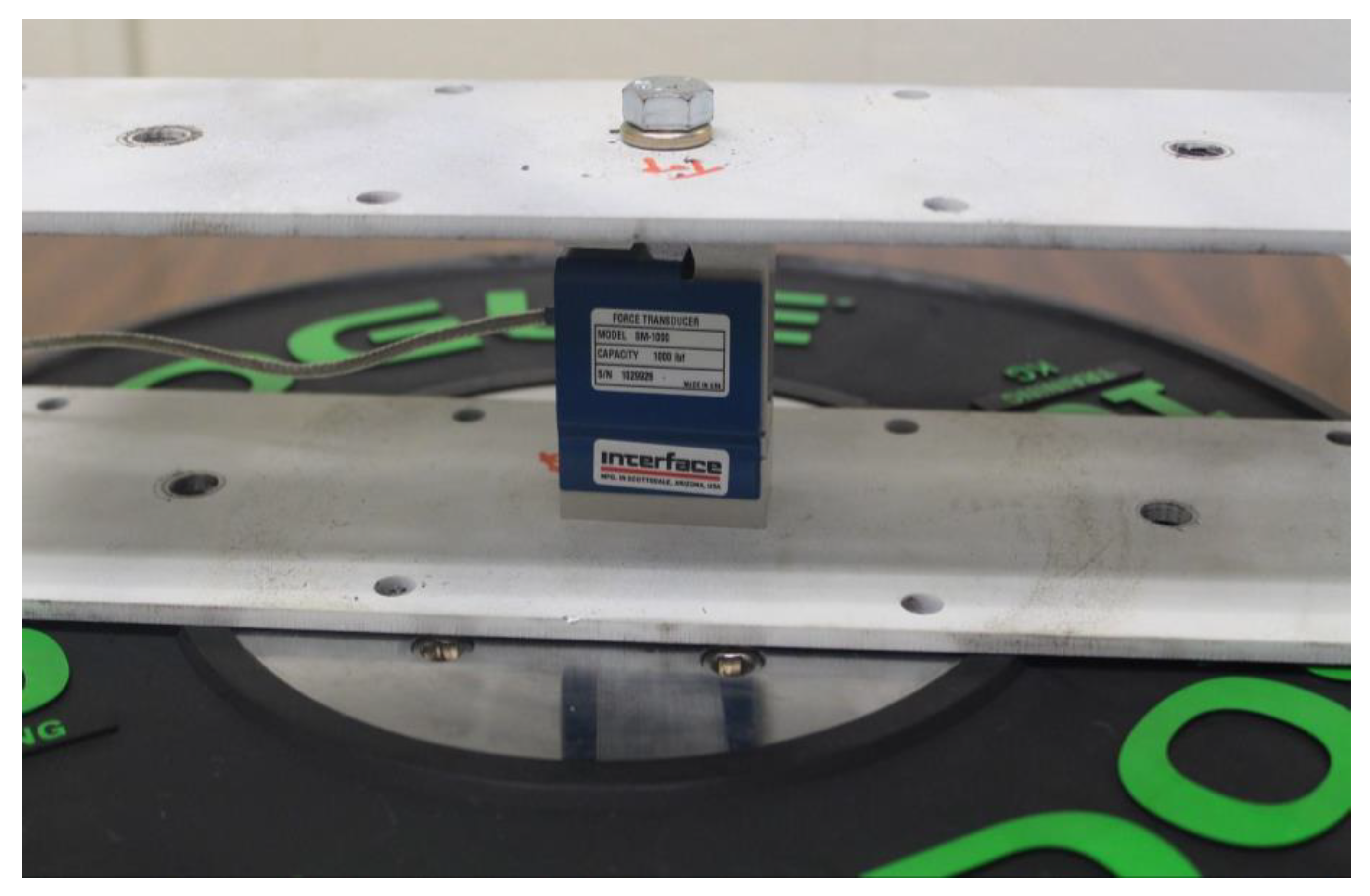

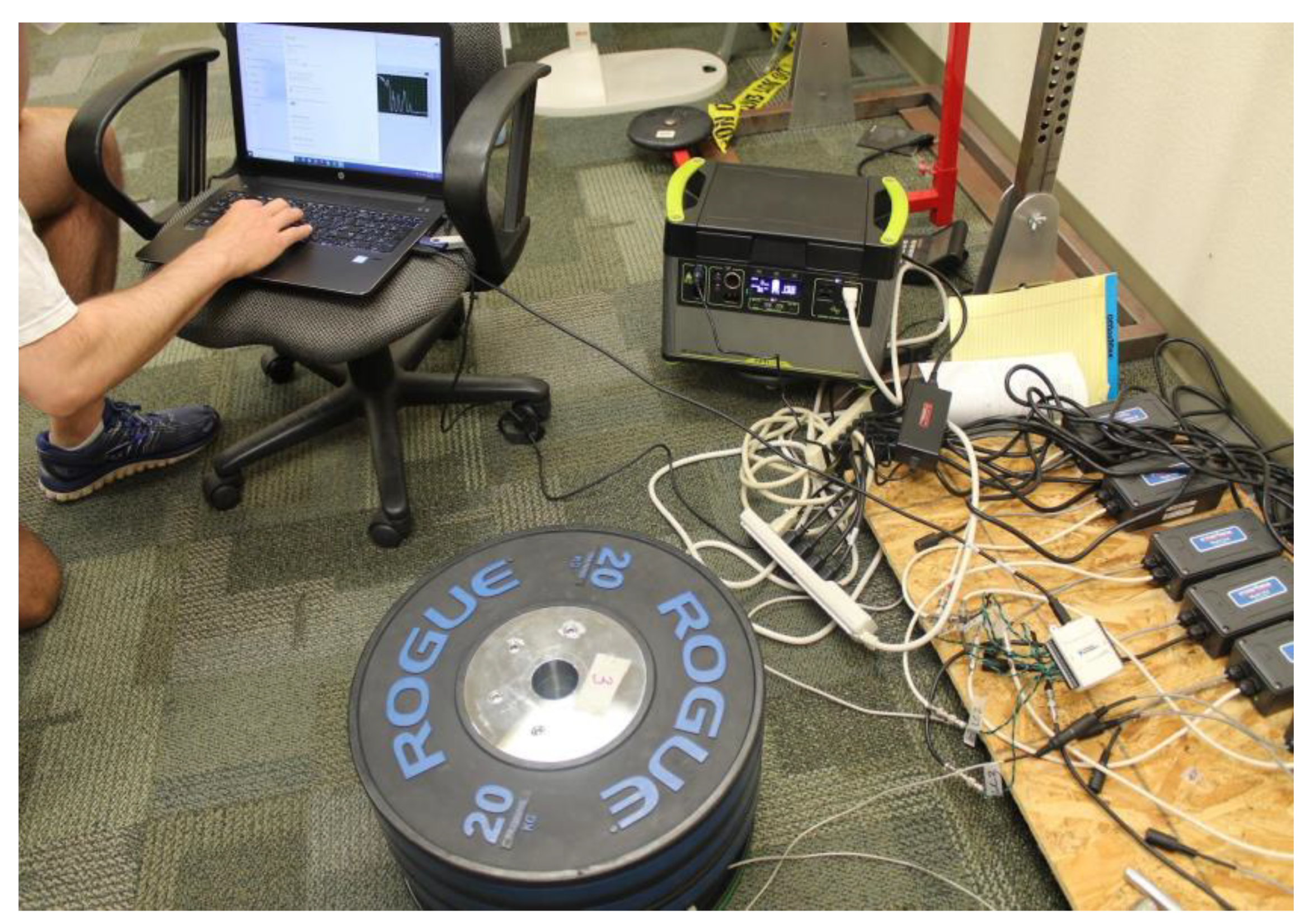

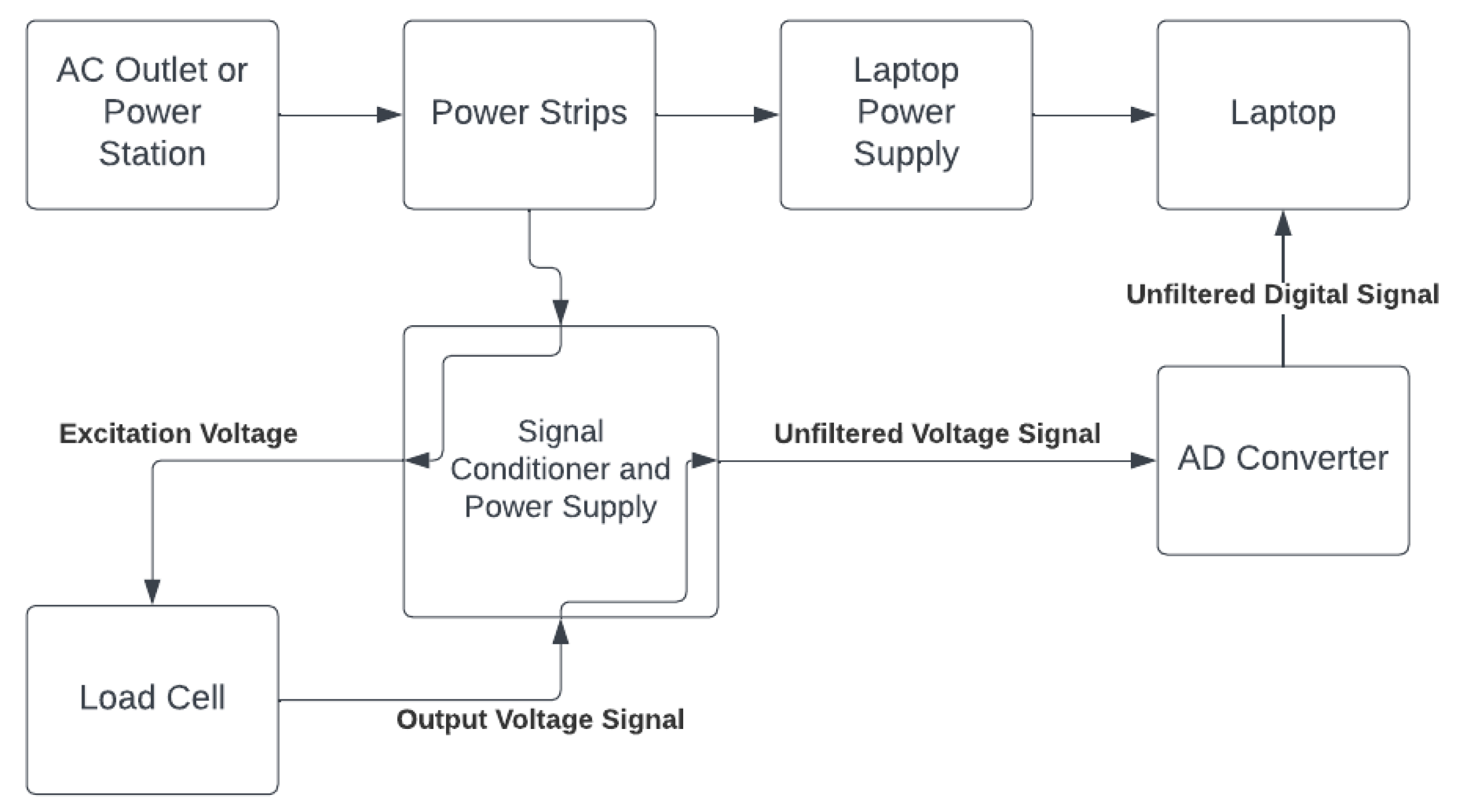

2.1. Instrumenting the Scrum Machine

2.2. Deadweight Calibrations

2.2.1. General Calibration Procedures

2.2.2. Phase 1: Effect of Power Source on Load–Voltage Relationship

2.2.3. Phase 2: Effect of Extended Warm-Up Time on Load–Voltage Relationship

2.2.4. Phase 3: Day-to-Day Reliability

2.3. Phase 4: Live Trials

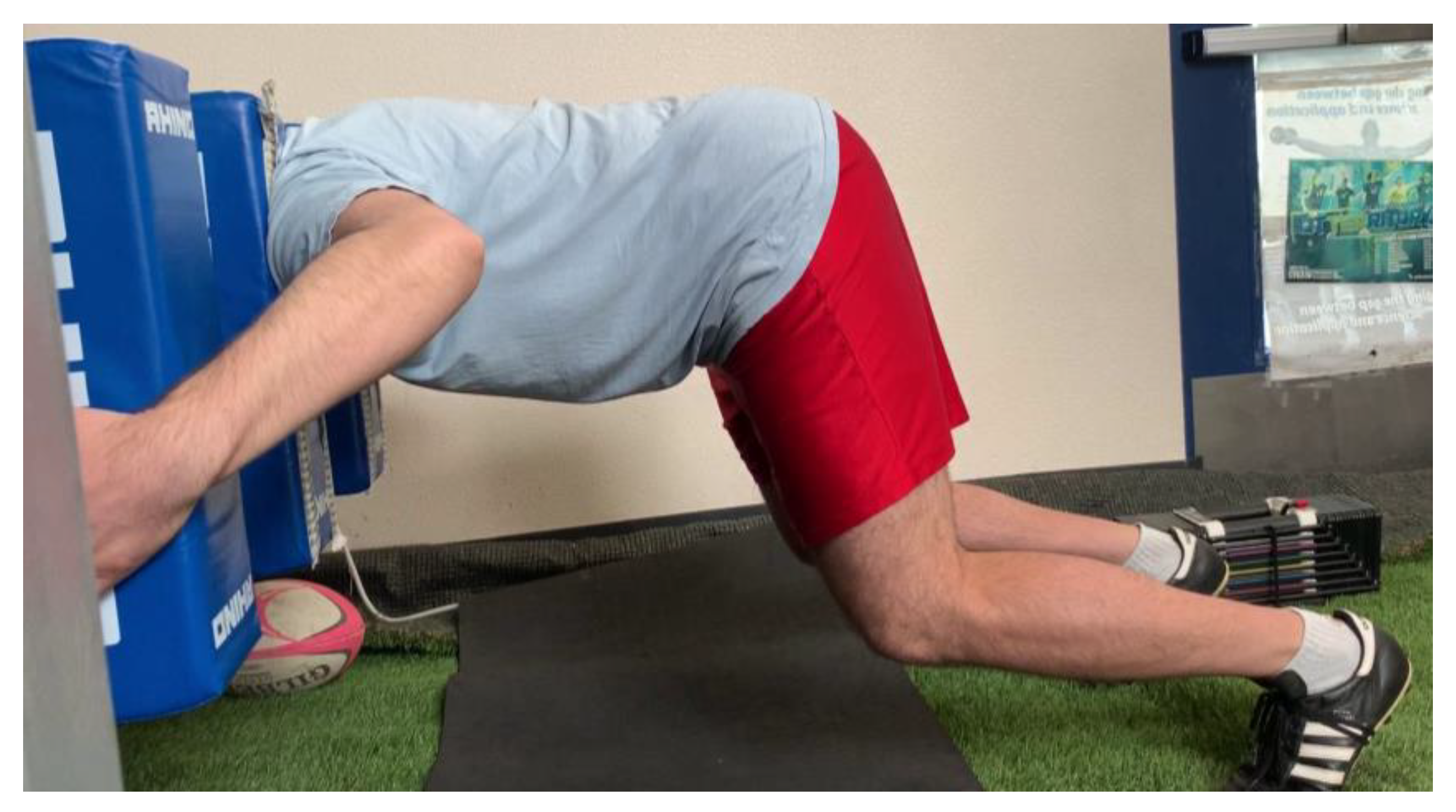

2.3.1. Individual Scrum Test Procedures

2.3.2. Data Processing and Statistical Analysis

2.3.3. Human Trial Results

3. Discussion

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Comparison | Mean of Difference (Volts) | SD of Difference (Volts) | 95% CI for Mean Difference (Volts) | 95% LOA for Differences (Volts) |
|---|---|---|---|---|
| Day 1 vs. day 0 | −0.002 | 0.010 | (−0.004, 0.000) | (−0.022, 0.017) |
| Day 7 vs. day 0 | −0.001 | 0.003 | (−0.002, −0.001) | (−0.006, 0.004) |
| Day 180 vs. day 0 | −0.001 | 0.004 | (−0.002, 0.000) | (−0.009, 0.008) |
| Day 7 vs. day 1 | 0.001 | 0.008 | (−0.001, 0.003) | (−0.015, 0.016) |
| Day 180 vs. day 1 | 0.001 | 0.007 | (−0.000, 0.003) | (−0.012, 0.015) |
| Day 180 vs. day 7 | 0.001 | 0.003 | (−0.000, 0.001) | (−0.005, 0.006) |
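The columns of the day-to-day comparison table follow the usual Bland–Altman layout: a mean difference, the standard deviation of the differences, a confidence interval for the mean, and limits of agreement (LOA). The sketch below shows one way such summaries could be computed in Python. It is not the authors' analysis code. The function names and the sample voltages are hypothetical, and the confidence interval uses a normal approximation (z = 1.96) rather than a t critical value, which is an assumption made to keep the example dependency-free.

```python
import math
import statistics


def limits_of_agreement(mean_diff, sd_diff, z=1.96):
    """95% limits of agreement: mean difference +/- z * SD of the differences."""
    return (mean_diff - z * sd_diff, mean_diff + z * sd_diff)


def bland_altman(day_a, day_b, z=1.96):
    """Summarise paired readings (e.g., volts at matched loads on two days).

    Assumption: the CI for the mean uses a normal approximation (z),
    not a t critical value, so it is only a large-sample sketch.
    """
    diffs = [b - a for a, b in zip(day_a, day_b)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    half_ci = z * sd_diff / math.sqrt(n)
    return {
        "mean_diff": mean_diff,
        "sd_diff": sd_diff,
        "ci": (mean_diff - half_ci, mean_diff + half_ci),
        "loa": limits_of_agreement(mean_diff, sd_diff, z),
    }


# Hypothetical voltages at four matched loads on two days (not study data).
day0 = [1.000, 2.000, 3.000, 4.000]
day1 = [1.010, 1.990, 3.020, 3.980]
summary = bland_altman(day0, day1)

# Recomputing the LOA from the rounded "Day 1 vs. day 0" summary values.
loa_day1_vs_day0 = limits_of_agreement(-0.002, 0.010)
```

Applied to the rounded Day 1 vs. day 0 values (mean −0.002, SD 0.010), the LOA formula gives roughly (−0.022, 0.018), within 0.001 V of the tabulated (−0.022, 0.017). The small gap is plausibly due to rounding of the published summary values.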

| Scale | Measure | Trial 2–1 | Trial 3–2 | Trial 4–3 | Trial 5–4 | Average across Trials |
|---|---|---|---|---|---|---|
| Raw values | Change in mean (volts) (90% CI) | 0.01 (−0.09 to 0.12) | 0.10 (−0.01 to 0.21) | 0.04 (−0.10 to 0.17) | 0.18 (0.05 to 0.32) | NA |
| | Typical error (volts) (90% CI) | 0.14 (0.10 to 0.22) | 0.17 (0.13 to 0.25) | 0.20 (0.15 to 0.30) | 0.20 (0.15 to 0.30) | 0.18 (0.15 to 0.22) |
| | ICC (90% CI) | 0.96 (0.89 to 0.98) | 0.94 (0.86 to 0.98) | 0.93 (0.83 to 0.97) | 0.95 (0.87 to 0.98) | 0.95 (0.90 to 0.98) |
| Log transformed | Change in mean (%) (90% CI) | 0.2 (−5.2 to 6.0) | 4.1 (−0.9 to 9.4) | 1.9 (−4.0 to 8.1) | 6.7 (0.6 to 13.2) | NA |
| | Typical error as CV (%) (90% CI) | 7.5 (5.5 to 12.2) | 7.6 (5.8 to 11.6) | 9.3 (7.0 to 14.1) | 8.8 (6.6 to 13.6) | 8.4 (7.0 to 10.5) |
| | ICC (90% CI) | 0.95 (0.87 to 0.98) | 0.95 (0.88 to 0.98) | 0.93 (0.84 to 0.97) | 0.95 (0.87 to 0.98) | 0.95 (0.90 to 0.98) |

| Scale | Measure | Trial 2–1 | Trial 3–2 | Trial 4–3 | Trial 5–4 | Average across Trials |
|---|---|---|---|---|---|---|
| Raw values | Change in mean (volts) (90% CI) | 0.0 (−0.08 to 0.07) | 0.05 (−0.06 to 0.15) | 0.07 (−0.04 to 0.19) | 0.22 (0.12 to 0.32) | NA |
| | Typical error (volts) (90% CI) | 0.10 (0.07 to 0.16) | 0.16 (0.12 to 0.23) | 0.18 (0.13 to 0.26) | 0.14 (0.11 to 0.22) | 0.15 (0.13 to 0.19) |
| | ICC (90% CI) | 0.97 (0.92 to 0.99) | 0.92 (0.81 to 0.97) | 0.91 (0.79 to 0.96) | 0.96 (0.89 to 0.98) | 0.94 (0.89 to 0.98) |
| Log transformed | Change in mean (%) (90% CI) | −0.3 (−5.4 to 5.0) | 2.8 (−2.3 to 8.2) | 3.6 (−2.1 to 9.7) | 10.6 (6.0 to 15.4) | NA |
| | Typical error as CV (%) (90% CI) | 7.0 (5.2 to 11.4) | 7.9 (6.0 to 12.0) | 8.9 (6.7 to 13.5) | 6.2 (4.7 to 9.6) | 7.6 (6.4 to 9.5) |
| | ICC (90% CI) | 0.96 (0.88 to 0.99) | 0.94 (0.86 to 0.98) | 0.93 (0.82 to 0.97) | 0.97 (0.92 to 0.99) | 0.95 (0.91 to 0.98) |
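The trial-to-trial tables report change in mean and typical error on the raw scale, and their percentage equivalents after log transformation. The following is a minimal sketch of how these quantities are commonly defined for one pair of consecutive trials, assuming the conventional formulas: typical error is the SD of the difference scores divided by √2, and the percentage values are back-transformed from natural-log differences. It does not reproduce the authors' workflow. The function name and sample values are hypothetical, and the ICC and the confidence intervals are deliberately omitted.

```python
import math
import statistics


def pairwise_reliability(trial_a, trial_b):
    """Change in mean and typical error for two consecutive trials.

    Assumes paired, strictly positive measurements (one pair per participant).
    """
    diffs = [b - a for a, b in zip(trial_a, trial_b)]
    # Differences of natural logs express change as a ratio between trials.
    log_diffs = [math.log(b) - math.log(a) for a, b in zip(trial_a, trial_b)]
    return {
        # Raw scale (same units as the input, e.g., volts).
        "change_in_mean": statistics.mean(diffs),
        "typical_error": statistics.stdev(diffs) / math.sqrt(2),
        # Log scale, back-transformed to percentages.
        "change_in_mean_pct": 100 * (math.exp(statistics.mean(log_diffs)) - 1),
        "typical_error_cv_pct": 100
        * (math.exp(statistics.stdev(log_diffs) / math.sqrt(2)) - 1),
    }


# Hypothetical peak voltages for four participants (not study data).
trial_1 = [2.0, 2.5, 3.0, 3.5]
trial_2 = [2.2, 2.4, 3.3, 3.4]
result = pairwise_reliability(trial_1, trial_2)

# Sanity case: a uniform 10% increase should give ~10% change and ~0% CV.
uniform = pairwise_reliability([1.0, 2.0, 3.0], [1.1, 2.2, 3.3])
```

Working on the log scale makes the error proportional to the size of the measurement, which is why the percentage rows can be compared across participants who produce very different absolute values.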

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Martin, E.A.; Kim, S.B.; Beckham, G.K. Calibration and Multiple Reliability Assessments of a Scrum Machine Instrumented to Measure Force. Appl. Sci. 2023, 13, 7581. https://doi.org/10.3390/app13137581