Abstract

Arabic handwritten-text recognition applies an OCR technique and then a text-correction technique to extract the text within an image correctly. Deep learning is the current paradigm in OCR techniques. However, no study has investigated or critically analyzed the recent deep-learning techniques used for Arabic handwritten OCR and text correction during the period 2020–2023. This analysis fills this noticeable gap in the literature, uncovering recent developments and their limitations for researchers, practitioners, and interested readers. The results reveal that, compared with Transformer- and GAN-based architectures, CNN-LSTM-CTC is the most suitable architecture for OCR because it is less complex and can hold long textual dependencies. For OCR text correction, applying DL models to datasets augmented with generated errors improved accuracy in many works. In conclusion, there is potential to apply several further text-embedding models to correct the text produced by Arabic OCR, and there is a significant gap in studies investigating this problem. In addition, there is a need for more high-quality and domain-specific Arabic handwritten OCR datasets. Moreover, we recommend practical development in the space of future trends in Arabic OCR applications, derived from the current limitations of Arabic OCR works and from applications in other languages; this space offers a plethora of possibilities that had not been effectively researched at the time of writing.

1. Introduction

Without automation, storing or editing handwritten Arabic text requires manual transcription, which can take significant time. Fortunately, optical character recognition can be applied in this case. Optical character recognition (OCR) is a technique used to read and recognize the text present in an image and convert it into a textual format. Once the text is extracted and digitized, it can be stored, retrieved, searched, and edited [1,2,3,4,5]. OCR is also referred to as “text recognition”. Text recognition has many benefits and applications, especially in automating pipelines in libraries and healthcare institutions, overcoming learning difficulties, and transportation through automatic license-plate recognition, all of which reduce human intervention and effort [6,7,8,9,10,11].

The text to be recognized can be one of two types: handwritten or printed. Handwritten-text recognition (HTR) can itself be divided into two types: online and offline. Offline handwritten-text recognition is the recognition of text that is neither produced by computer devices nor produced at the time of recognition; rather, such text is usually found in still documents, records, and images. Online text recognition, on the other hand, focuses on text produced with computer-based devices, such as digital pens and tablets, rather than text extracted from documents [12,13,14,15,16].

Arabic, the language of the Quran, is acknowledged as the lingua franca in 25 countries and is considered the sixth most commonly spoken native language in the world. It is used by more than one billion Muslims around the globe and is spoken by more than 274 million people [17]. The morphological, lexical, and semantic richness of its script makes it one of the most computationally challenging languages [18]. Specifically, Arabic is written from right to left and consists of 28 letters, each of which can take multiple forms depending on its position in the word: isolated, initial, medial, or final. Moreover, some characters can be turned into other characters simply by adding dots. Additionally, some characters have loops, while others have similar skeletons. Furthermore, Arabic script is connected, meaning that connections are formed between most adjacent letters within a word. In addition, diacritics can appear in Arabic text in multiple forms, and different diacritics can change the meaning of a word. The fonts are richly diverse, as they originate from different eras and geographies. All these characteristics make recognition tasks difficult [19,20,21]. However, this challenge can also be viewed as an opportunity.

Typically, an OCR system or engine performs the following steps: (1) image acquisition, in which the image is collected; (2) pre-processing, which prepares the acquired image through operations such as binarization and thinning; (3) segmentation, which can be performed line-wise, word-wise, or character-wise; (4) feature extraction, in which the important visual features are extracted to support the subsequent step; (5) classification, in which recognition is performed by models that use the previously extracted features; and (6) post-processing, which further corrects the produced text [22,23].

Deep-learning techniques have achieved notable success in the fields of natural language processing and computer vision [24,25,26]. These techniques train different kinds of deep neural networks on a dataset, automatically extract the features, perform the classification, and produce its output. A common approach in Arabic HTR is to train or fine-tune an architecture based on CNN-LSTM-CTC. Convolutional neural networks (CNNs) are useful for extracting visual features [27]; long short-term memory (LSTM) is a type of recurrent neural network (RNN) used to model long-range dependencies along the text sequence [28]; and connectionist temporal classification (CTC) handles the mismatch between the lengths of the input and output sequences [29]. After training, the text is extracted from the test set; however, the text may still need some correction.
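To make the hand-off between the three components concrete, the sketch below tracks sequence lengths through a hypothetical stack of layers: each column of the final CNN feature map becomes one LSTM time step, and CTC only requires that the number of time steps be large enough for the label (one frame per character, plus one blank between identical neighbors). The 256-pixel line width and the layer configuration are illustrative assumptions, not taken from any surveyed model.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length along one axis of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def time_steps(width: int, layers: list[tuple[int, int, int]]) -> int:
    """Width of the final CNN feature map; each column becomes one LSTM time step."""
    for kernel, stride, padding in layers:
        width = conv_out(width, kernel, stride, padding)
    return width


def min_ctc_frames(label: str) -> int:
    """Fewest frames CTC needs: one per character, plus a blank between identical neighbors."""
    repeats = sum(1 for a, b in zip(label, label[1:]) if a == b)
    return len(label) + repeats


# Hypothetical stack: 3x3 convolutions with padding 1 keep the width,
# and each 2x2 max-pooling with stride 2 halves it.
LAYERS = [(3, 1, 1), (2, 2, 0)] * 3

T = time_steps(256, LAYERS)  # 256 -> 128 -> 64 -> 32 columns
print(T, min_ctc_frames("Rayyan"), T >= min_ctc_frames("Rayyan"))
```

If T fell below the minimum for a label, no valid alignment would exist and the CTC loss for that sample would be infinite.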

Since the output of the previous step may need correction, another class of deep-learning techniques can be applied, as explored in several previous experiments. These techniques still train deep neural network models on datasets, but they also use parts of the Transformer architecture, as well as language models [30] that are trained on voluminous amounts of data and can generate text as output. Examples include T5 [31], the GPT-3.5 series [32], which forms the basis of ChatGPT, and BERT and its many variants [33]. Models such as BERT can be used to predict masked words. Finally, performance is evaluated by computing the character-error rate [34] and the word-error rate [35] between the ground-truth text and the predicted text.

In summary, because research on Arabic handwritten-text recognition still lacks works surveying the problem in its recent form, this paper investigates and analyzes the deep-learning techniques used to perform OCR and text correction on Arabic handwritten text. The novelty of this work lies in the fact that no previous work has specifically undertaken a critical analysis of deep-learning-based Arabic handwritten-text recognition in recent years (2020–2023).

1.1. Significance: Why and How Is This Work Different?

The main significance of this paper can be summarized in one sentence: There are no other studies in the literature from 2020–2023 that critically analyze both deep-learning OCR and text-correction techniques.

The greatest difference between this work and others is that it is the only study that combines all of the following factors: (1) the analyzed works are recent (2020–2023), whereas many works in the literature include old studies and are not up to date; (2) although older paradigms have been used to tackle OCR problems, deep learning is the current trend, yet it has not been investigated rigorously in the literature, as previous research did not focus exclusively on deep learning; (3) similarly, deep-learning techniques for Arabic text correction are not well investigated or reviewed in the literature, to the point of neglect, even though new works continue to appear; (4) a curated list of approaches to enhancing OCR models for Arabic is derived from the challenges identified in the analysis and from potential applications for which OCR solutions can be developed.

Our work, despite the complexity of the topic, is presented in a language that is scientifically correct and does not lose the reader’s interest. It is therefore suitable for all the following: (1) advanced researchers in OCR and deep learning who want to know the specific direction of the field, the challenges, and the problems that need to be researched further; (2) deep-learning and OCR practitioners who wish to know exactly which models to use and those that other practitioners have developed, in addition to possible applications for which solutions may be implemented; and (3) interested readers who are curious about the uses of deep learning in Arabic OCR and want to learn about the topic in a friendly way.

1.2. Motivation and Why This Is an Important and Interesting Work

Pragmatically and concisely, the motivation of this work is to fill the noticeable gap in the literature and meet the obvious need for a work that critically analyzes recent OCR and text-correction works that apply deep-learning techniques. Specifically, this analysis helps to identify recent trends and challenges and provides strong recommendations for researchers and practitioners, along with potential future applications.

The use of OCR is of great importance as it allows users to extract text from images, which supports the digitization process by saving resources, such as time and money. Arabic OCR is still not sufficiently mature in comparison to other languages. This study critically analyzes and presents current trends, challenges, and future trends in deep-learning OCR and text correction in Arabic.

Researchers, practitioners of OCR and deep learning, and interested readers can gain enormous benefits from this analysis. Researchers can learn what the current challenges are and the areas of focus that will be required in future work. Practitioners can determine the current trends in models for different uses and which potential applications need to be developed. In addition, practitioners from multiple sectors, such as government, healthcare, and the legal sector, can benefit from this analysis by discovering the latest models and their potential uses. Readers interested in Arabic may find the introduction and formal background sufficient to introduce themselves to this field. Moreover, they can be introduced to Arabic OCR holistically in a way that further increases their interest. Furthermore, researchers from other fields, such as calligraphy, paleontology, and paleography, can cooperate with deep-learning researchers and determine the current status of the field and its future directions.

This work can be used by multiple audiences to discover both the general progress of the field and specific developments within it, according to their needs. The findings of this review provide clear remarks and guidance on the current challenges in models, datasets, and future trends and extensions.

1.3. Contribution

This paper is for researchers, practitioners, and readers interested in researching the utilization of deep-learning techniques in Arabic OCR and text correction, as well as those who want to rapidly learn from the recent works in this area and gain a holistic understanding of the topic.

This paper’s contributions include the following:

- For the first time, a thorough review of 83 papers is presented, mainly from 2020 to 2023, to build a holistic review of Arabic OCR using deep learning and Arabic text correction. Moreover, this review elucidates the challenges to overcome for Arabic OCR. In addition, metrics for evaluating the accuracy of OCR systems are presented. Overall, this work presents the challenges, techniques, recent work analysis, and future directions for the use of deep learning for Arabic OCR and text correction.

- Unprecedented in scope, a critical analysis and deep discussion are presented on 25 recent works, from 2020 to 2023, that apply deep learning for Arabic OCR and Arabic text correction. The findings determine the current research frontier, trends, and limitations. To the best of our knowledge, no previous work has filled this gap for this time frame.

- Current challenges and future trends are extrapolated from the gaps that need to be filled in the works analyzed in this study and the possibilities of leveraging applications in other OCR systems for use in Arabic OCR, including datasets, models, and applications.

1.4. Survey Methodology

1.4.1. Scope and Overview

This paper focuses on critically reviewing research articles, in the form of journals, conferences, or theses, on the application of deep-learning techniques for Arabic handwritten OCR and text correction from 2020 to 2023. It does not include in its analysis works that do not apply deep-learning techniques or works that do not consider the Arabic language. The research questions are the initial points of departure for the investigation. The keywords were searched in the sources, and the studies were screened and selected according to the inclusion–exclusion criteria.

1.4.2. Research Questions

- RQ0: What is OCR? What is deep learning? What are the steps and challenges in Arabic OCR? How is OCR accuracy evaluated?

- RQ1: What are the deep-learning techniques used in Arabic OCR during the period 2020–2023? Which are the notable works in the literature?

- RQ2: What are the deep-learning techniques used in Arabic text correction for the period 2020–2023? Which are the notable works in the literature?

- RQ3: What were the limitations and challenges involved in the use of deep-learning techniques in Arabic OCR and text correction for the period 2020–2023?

- RQ4: What are the findings, recommendations, and future trends for deep-learning techniques to be used in Arabic OCR and text correction?

1.4.3. Sources

Online databases and search engines for scientific publications were used to search for the papers considered. These databases were Google Scholar, Semantic Scholar, ArXiv, MDPI, Springer, IEEE, ACM, and Elsevier.

1.4.4. Keywords

The keywords used to collect the papers were combinations of the following: “Arabic OCR”, “Arabic Deep Learning”, “Arabic Text Correction”, “Arabic Error Correction”, “Arabic Diacritization”, “Arabic Large Language Models”, “Arabic OCR Transformers”, “Arabic OCR GANs”, “Arabic OCR LSTM”, “Arabic OCR RNN”, “Arabic OCR CNN”, “Arabic OCR CTC”, “Arabic Handwritten Text Recognition”, “Deep Learning Arabic Handwritten Text Recognition”.

1.4.5. Inclusion–Exclusion

- The work must be written in English;

- The work must be published as a research article, whether in journals or conferences, or as a thesis. Preprints are included;

- In the analysis part, the work must apply deep-learning techniques;

- In the analysis part, the work must include Arabic OCR or text correction;

- The work should not be a review;

- The work must be from the period from 2020 to 2023;

- The work must not be duplicated.

1.4.6. Screening and Selection

The initial number of screened or collected papers was n = 370, which was then, according to a critical evaluation, narrowed down to the mentioned scope, resulting in a total of n = 83 papers and, specifically, n = 25 papers for a critical analysis of recent work on Arabic OCR and text correction.

1.5. Structure

This work is organized as follows. Section 2 discusses the key steps in performing OCR. Section 3 describes the challenges in Arabic OCR. Section 4 illustrates the deep-learning techniques used as components of Arabic OCR and text correction. Section 5 presents the metrics used to evaluate OCR accuracy. Section 6 and Section 7 are the core of the paper, in which recent works are critically analyzed and discussed. First, OCR deep-learning works are critically analyzed; these works are classified into three types: CNN-LSTM-CTC-based works, Transformer-based works, and GAN-based works. Second, post-OCR text-correction works are thoroughly analyzed. Section 7 discusses the analyzed works in more depth, highlighting the findings of our analysis. In Section 8, challenges and future trends are extrapolated from two sources: the gaps in recent works and the possibilities of applying findings from other areas to the Arabic domain. Finally, Section 9 recapitulates the whole paper and its findings in a conclusion that can serve as a door-opener for other researchers and readers.

2. OCR Steps [22,23,24,25,26,27,28,29,30,31,32,33,34,35,36]

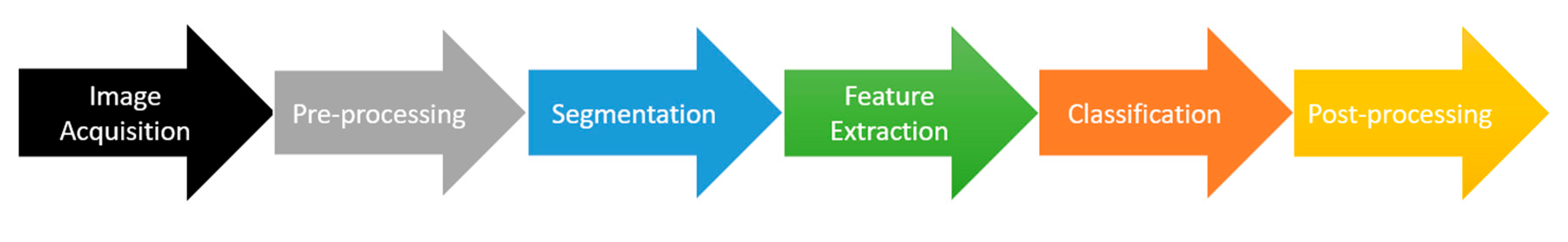

Generally, the major steps in OCR are as follows, as depicted in Figure 1.

Figure 1.

Key OCR steps.

- Image acquisition: Obtain the photograph that is captured from an exterior origin through a tool such as a camera or a scanner;

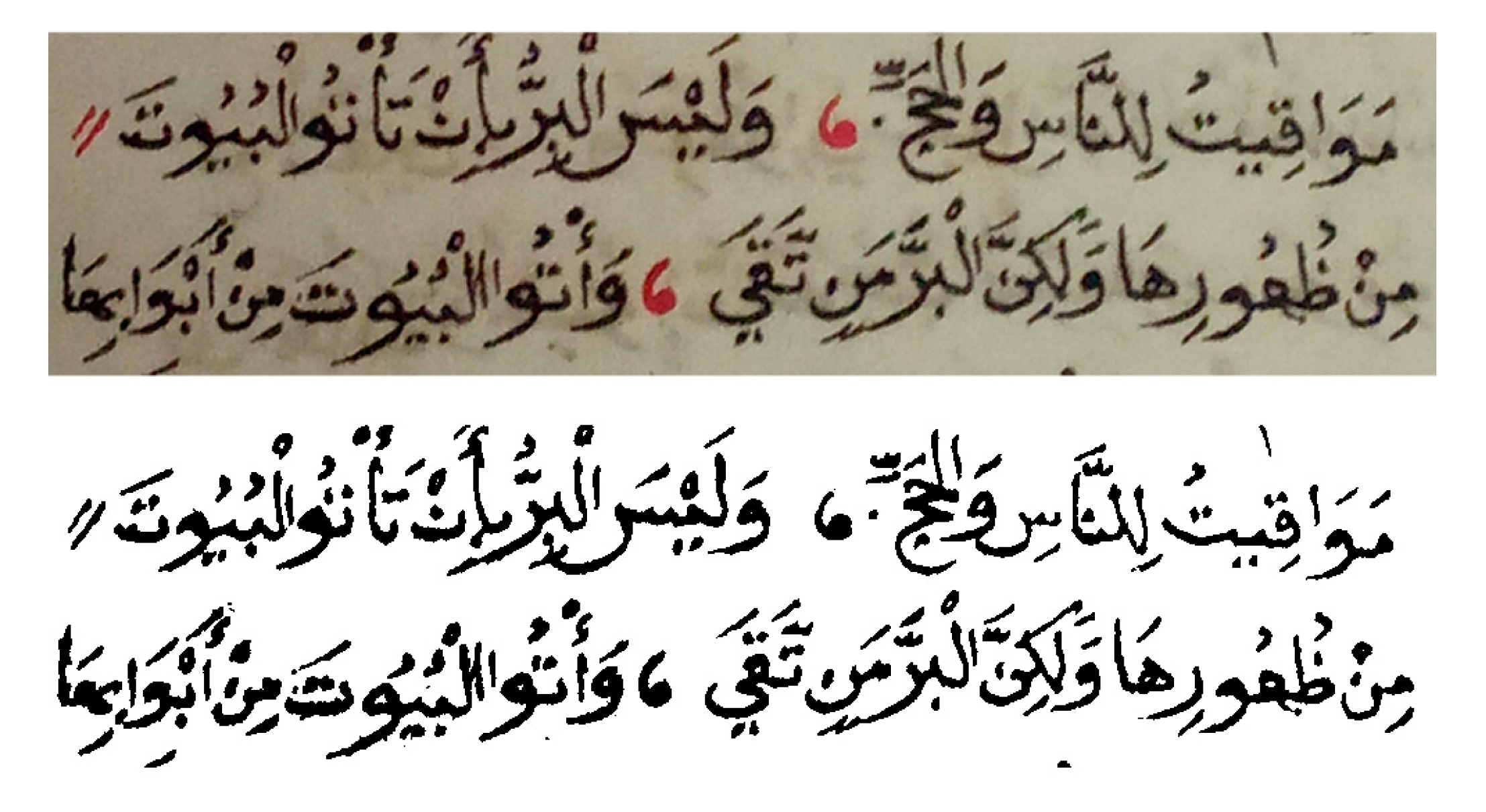

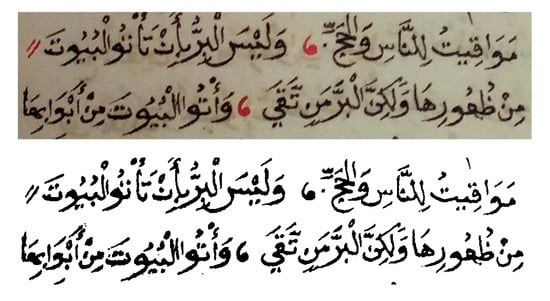

- Pre-processing: Techniques applied to the acquired images to enhance their quality, for example, noise removal, skew correction, thinning, and binarization (see Figure 2). In addition, when characters are represented as graphs, lossy filtering is sometimes performed as a pre-processing step: non-isomorphic pairs are discarded based on simple graph properties, such as the number of edges and the degree distribution, before finer-grained filtering with deep neural networks;

Figure 2. Binarization exemplified.

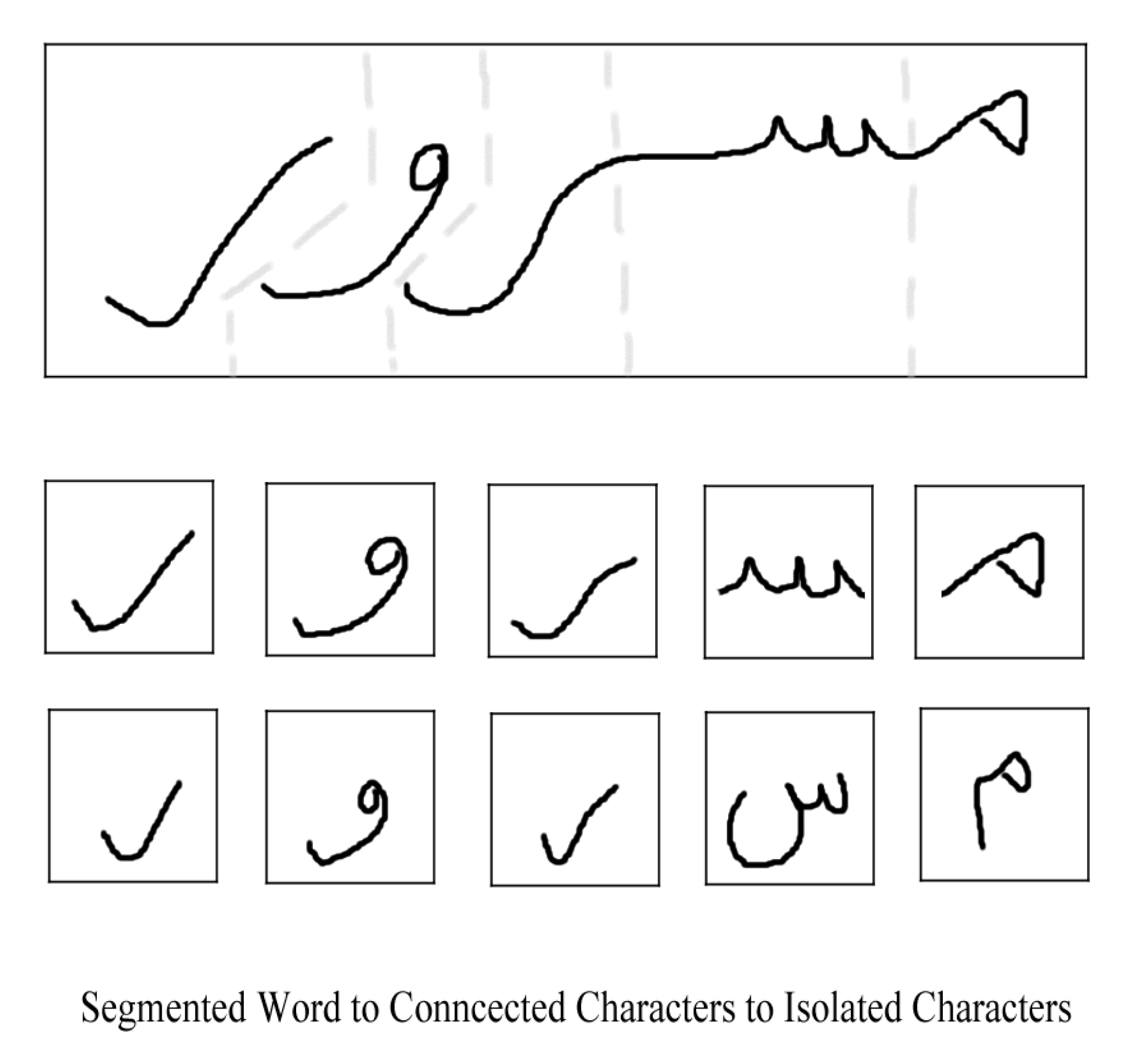

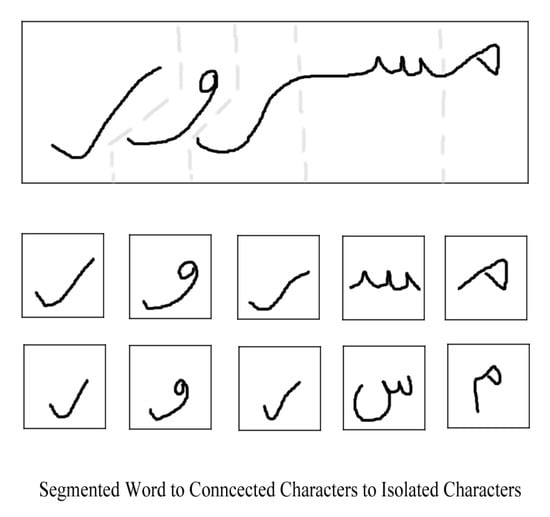

- Segmentation: The splitting of the image into the lines, words, or characters that constitute it. Basic techniques include connected-component analysis and projection profiles; histograms of ink pixels along rows or columns can also be used to guide segmentation. An example is shown in Figure 3. In addition, thinning and segmentation may be performed after making the character rotation-invariant;

Figure 3. Different levels of segmentation from words to isolated characters.

- Feature extraction: The extraction of features that help in learning the representation. The features can be geometrical, such as points and loops, or statistical, such as moments. After extraction, the most representative and non-redundant features should be selected;

- Classification: Used to predict the mapping between the character and its class. Different classification techniques can be used and, recently, deep-learning-based techniques have used models that are based on deep neural networks;

- Post-processing: Further correction of the OCR output to reduce the number of errors. Different techniques can be used for post-processing: some rely on a dictionary to check spelling, while others rely on probabilistic models, such as Markov chains and N-grams. When a word is misrecognized, a dictionary lookup can return several candidate alternatives. To determine which candidate is most likely correct, the sentence-level context of the text needs to be explored, which requires NLP techniques and semantic tagging. Currently, text-embedding models and techniques, such as BERT and its variants, can be used for this step.
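The pre-processing, segmentation, and post-processing steps above can be sketched in pure Python. The sketch assumes a grayscale image stored as a list of rows of 0–255 integers; Otsu's global thresholding stands in for binarization, a horizontal projection profile for line segmentation, and the standard-library `difflib.get_close_matches` for a dictionary-based spelling check. These are common textbook choices rather than the methods of any particular surveyed system, and the three-word lexicon is a made-up example.

```python
import difflib


def otsu_threshold(pixels: list[int]) -> int:
    """Otsu's method: pick the gray level (0-255) that maximizes between-class variance."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    weight_bg = sum_bg = 0
    for t in range(256):
        weight_bg += hist[t]            # pixels with level <= t
        if weight_bg == 0:
            continue
        weight_fg = total - weight_bg   # pixels with level > t
        if weight_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        between_var = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def binarize(image: list[list[int]]) -> list[list[int]]:
    """Map dark ink to 1 and light paper to 0 using a single global threshold."""
    t = otsu_threshold([p for row in image for p in row])
    return [[1 if p <= t else 0 for p in row] for row in image]


def segment_lines(binary: list[list[int]]) -> list[tuple[int, int]]:
    """Horizontal projection profile: runs of rows containing ink become text lines."""
    lines, start = [], None
    for y, row in enumerate(binary):
        has_ink = sum(row) > 0
        if has_ink and start is None:
            start = y
        elif not has_ink and start is not None:
            lines.append((start, y))    # half-open row range [start, y)
            start = None
    if start is not None:
        lines.append((start, len(binary)))
    return lines


def correct_word(word: str, lexicon: list[str]) -> str:
    """Replace an out-of-lexicon word with its closest lexicon entry, if one is close enough."""
    if word in lexicon:
        return word
    matches = difflib.get_close_matches(word, lexicon, n=1, cutoff=0.6)
    return matches[0] if matches else word


# Tiny synthetic grayscale image: two "text lines" (rows 1 and 3) on white paper.
IMAGE = [
    [250, 250, 250, 250],
    [20, 250, 30, 250],
    [250, 250, 250, 250],
    [250, 15, 250, 250],
]
print(segment_lines(binarize(IMAGE)))
print(correct_word("كتاث", ["كتاب", "مكتبة", "كاتب"]))
```

A real post-processor would rank candidates using sentence-level context, as described above, rather than string similarity alone.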

3. Arabic OCR Challenges

3.1. OCR Challenges [37,38,39]

Many challenges can occur in the OCR in images from which text is extracted, such as the following:

- Scene complexity: The segregation of text from non-text can be difficult in cases in which the rate of noise is high or when there are strokes similar to text, but which are part of the background and are not texts themselves;

- Conditions of uneven lighting: Due to the presence of shadows, pictures taken of any document can have the problem of uneven light distribution, in which part of the image has more light while the other is quite dark. Thus, scanned images are preferred, since they eliminate such unevenness;

- Skewness (rotation): The text lines are skewed and deviate from the standard horizontal orientation. This can be a consequence of taking pictures manually;

- Blurring and degradation: The image may be of low quality. One remedy for this problem is to sharpen the characters;

- Aspect ratios: Different aspect ratios can require different search procedures to detect the text;

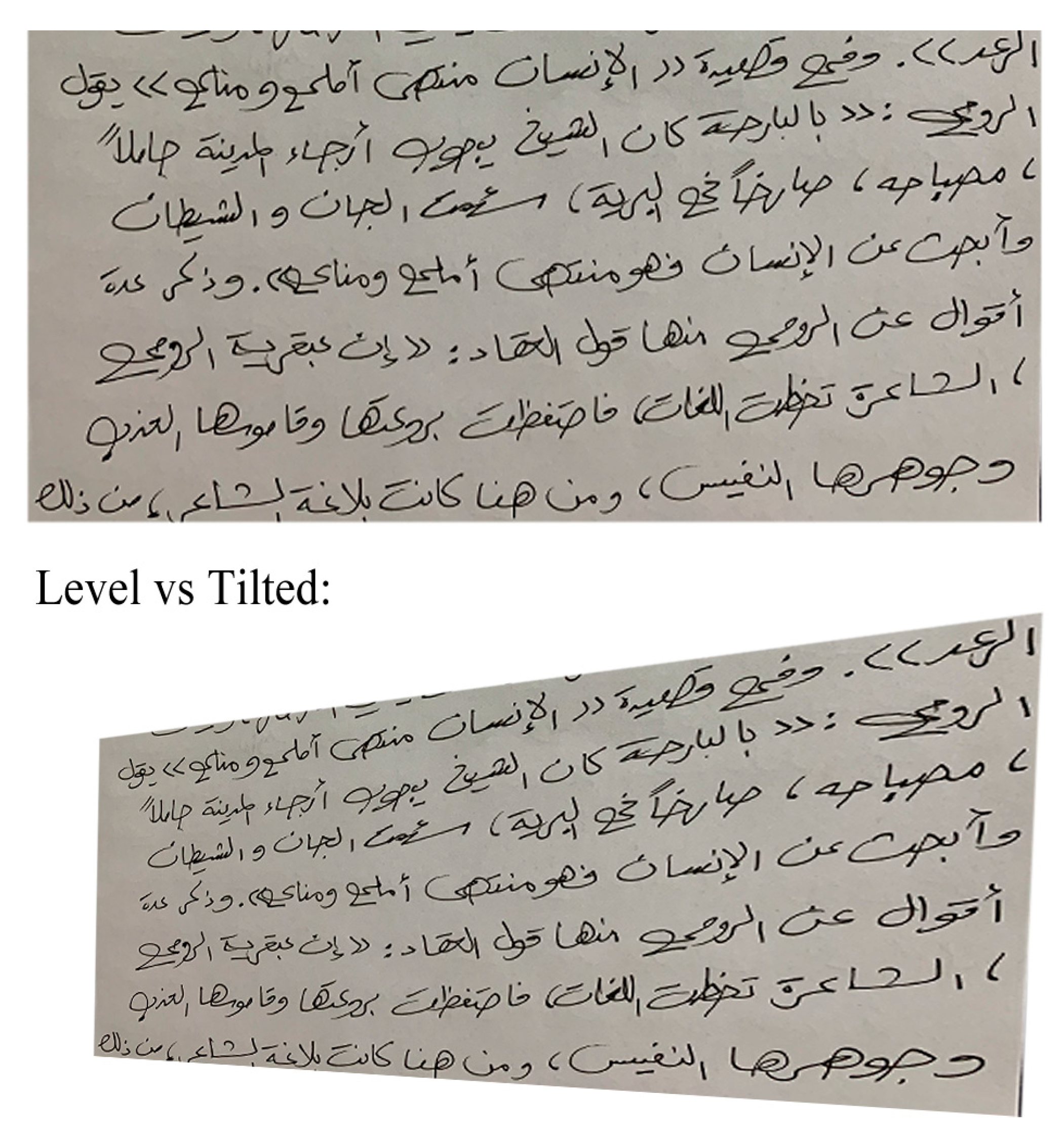

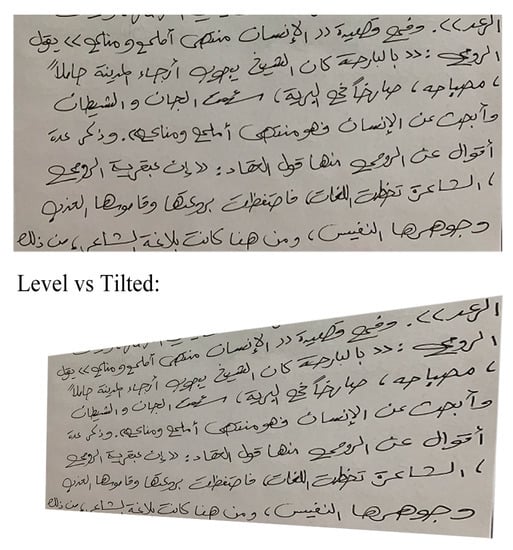

- Tilting (perspective distortion): The page itself may not appear in a correct, parallel perspective. Photographs of text taken by non-experts without a scanner can introduce such non-parallelism, making the task difficult. A simple solution is to use a scanner or to photograph each page with the camera held parallel to it. An example is shown in Figure 4;

Figure 4. Example of tilting.

- Warping: This problem is related to the text itself. Even when the page is perfectly parallel to the camera when the picture is taken, the text may have been written along warped lines, although this is not usually the case in writing;

- Fonts: Printed fonts and styles vary from those that are handwritten. The same writer can write the same passage in the same space in a different way each time. On the other hand, printed text is easier because the differences tend to be less frequent from one print to another;

- Multilingual and multi-font environments: Different languages feature different complexities. Some languages use cursive scripts with connected characters, as in the Arabic language. Moreover, recent individual pages can contain multiple languages, while old writings can have multiple fonts within the same page if they are written by different writers.

3.2. Arabic OCR Challenges [19,20,21]

- Arabic is written from right to left, whereas most models were developed for left-to-right scripts;

- Arabic consists of a minimum of 28 characters, and each character has a minimum of two and up to four shapes, depending on the position. Fortunately, no upper- or lower-case characters are used in Arabic;

- The position determines the shape of a character. Therefore, a character can have different shapes depending on its position: alone, at the start, in the middle, or at the end. For example, the letter Meem has different shapes according to its position;

- Ijam: Dots can be written above or below characters, and they differentiate between one character and another, such as Baa and Tha;

- Tashkeel: Marks such as Tanween and Harakat can change the meaning of a word. Examples applied to the letter Raa are illustrated in Table 1;

Table 1. Different types of Tashkeel.

- Hamza appears in various locations, such as above or below Alif, above Waw, after or above Yaa, and in the middle or at the end of a word;

- Loops, such as Saad and Taa, and Meem and Ain, where the difference between characters is difficult to distinguish due to the similarity of the skeleton;

- Some characters, such as Raa, Dal, and Waw, do not connect to the following character, so they split a word into separate sub-words. Figure 5 presents an example with different kinds of separation;

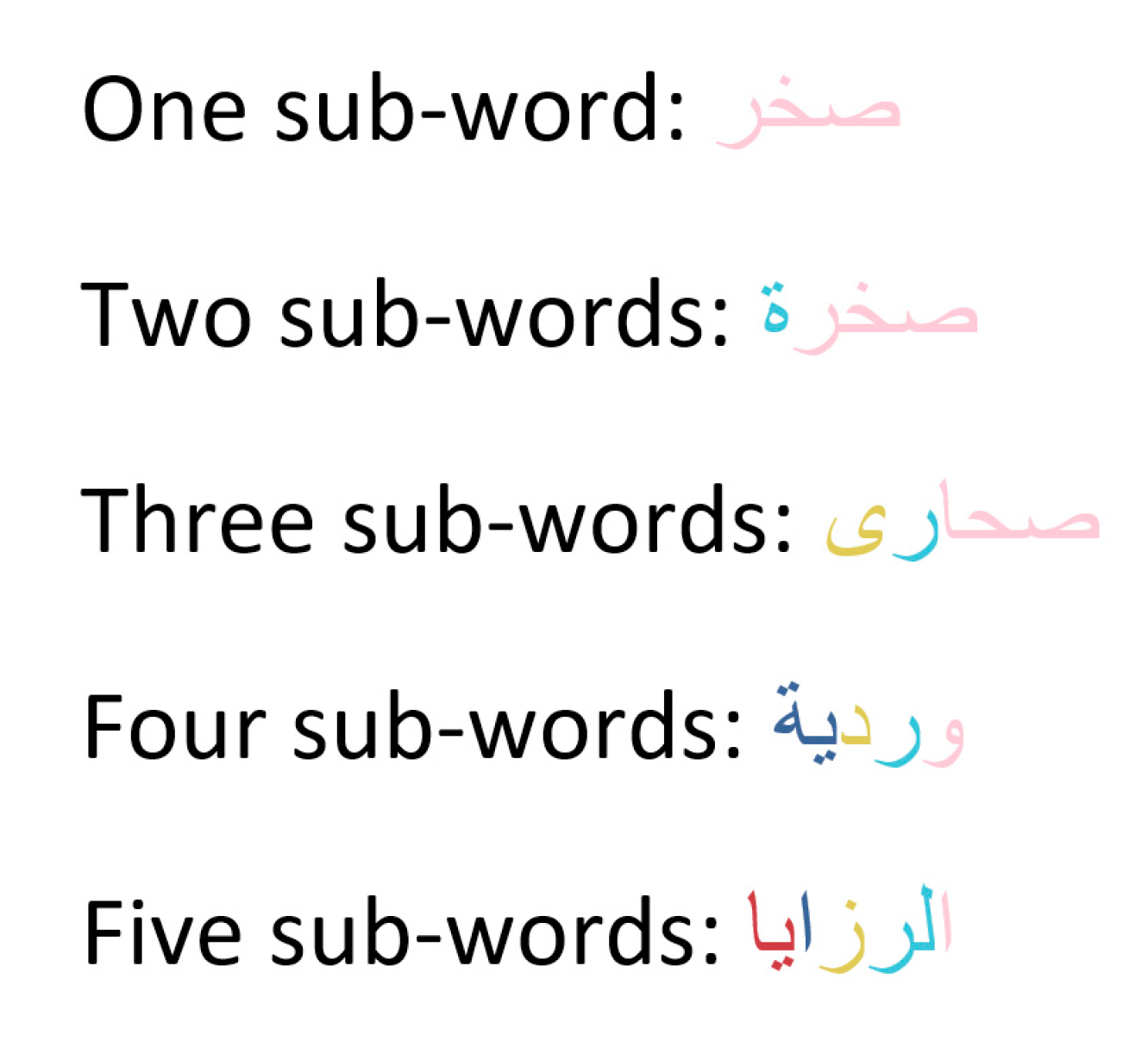

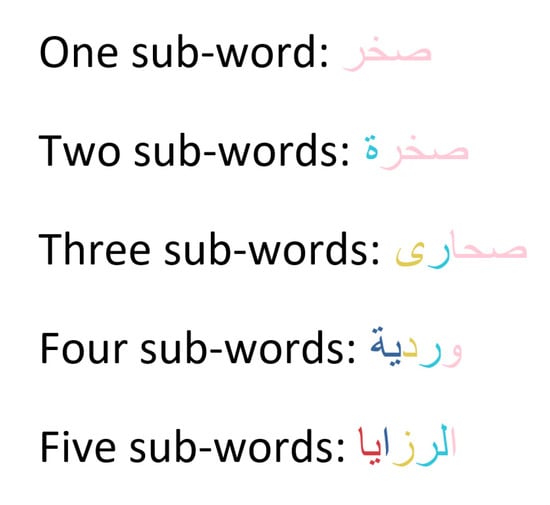

Figure 5. Elucidation of how sub-words are formed in Arabic.

- The total number of character shapes is at least 81. Some letters share the same shape but differ in terms of dots;

- Without dots, there are at least 52 letter shapes;

- The structural characteristics of the characters, given their high similarity in shape, can be perplexing. Moreover, a slight modification can turn one character into another;

- Arabic is written cursively; therefore, connections are formed between adjacent characters within a word;

- Other diacritics, such as Shadda, and punctuation marks, such as full stops, can be confused with the dots used in Arabic letters;

- Most models suffer from overfitting because generalization is not sufficiently addressed, partly because of the lack of annotated datasets and partly because complex models are trained on small or unbalanced datasets;

- The available datasets have the issue of distorted and unclear samples;

- The majority of datasets are imbalanced, meaning that samples are not evenly distributed across classes, which can cause models to overfit to the over-represented samples;

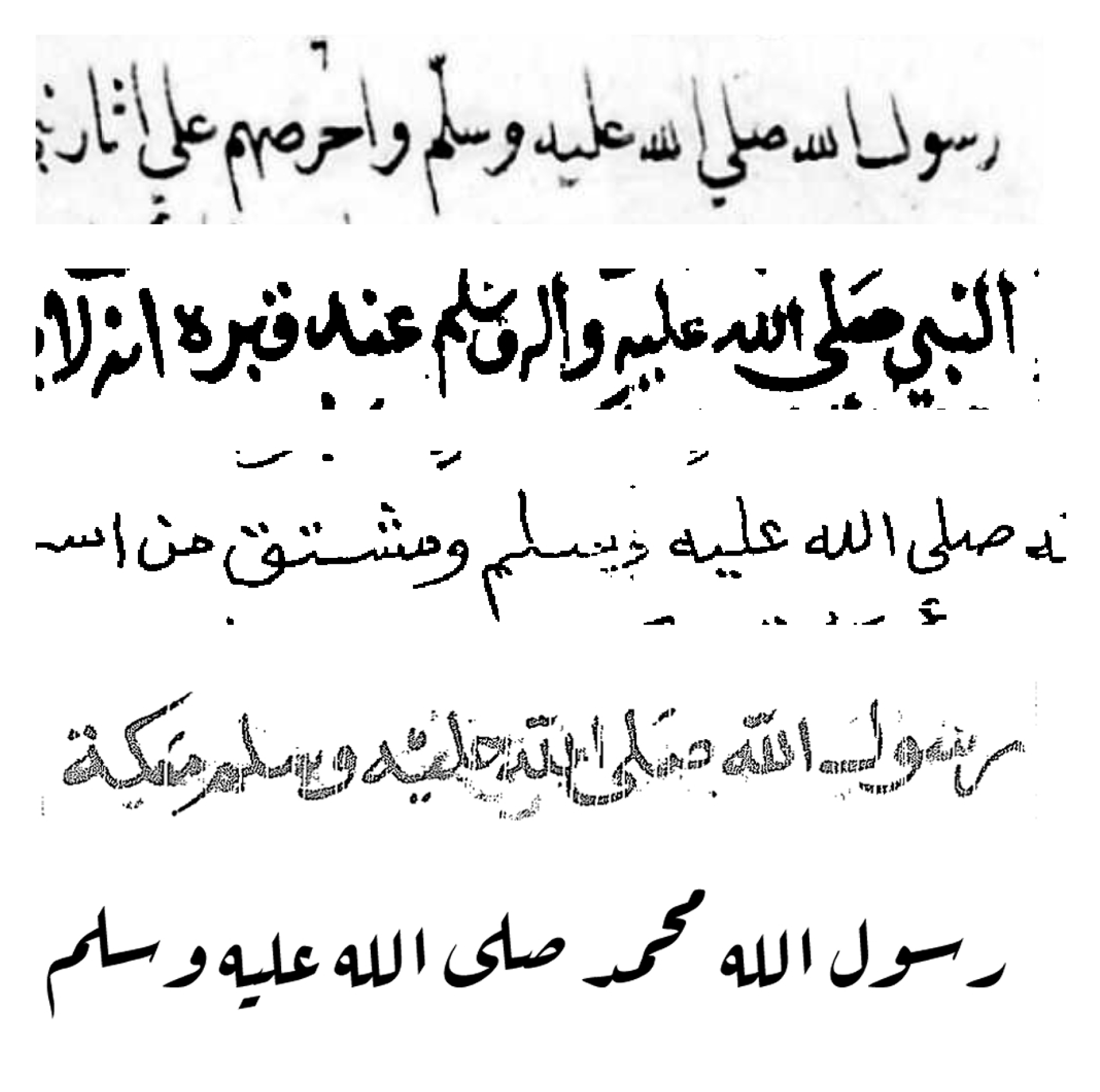

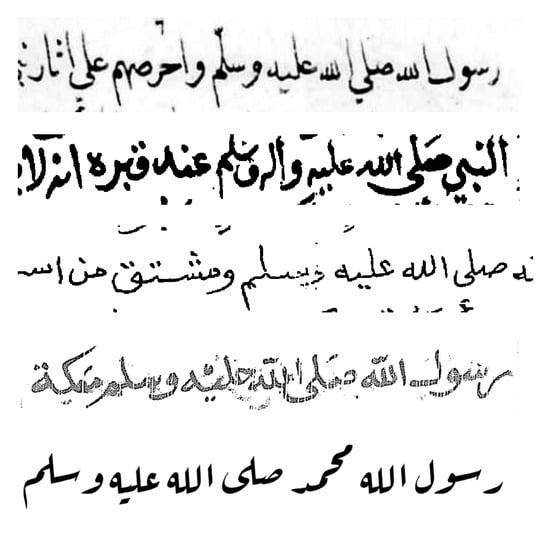

- The writing style can vary from one author to another. Figure 6 shows different types of writing style.

Figure 6. Richness and variation in Arabic fonts.

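Some of these properties can be inspected directly through Unicode. The sketch below uses Python's standard `unicodedata` module to look up the positional presentation forms that Unicode encodes for a letter (Meem has four, whereas Raa, which does not connect to the following letter, has only isolated and final forms) and to strip Tashkeel, a common normalization step before comparing OCR output with ground truth. Note that presentation forms are compatibility characters: Arabic text is normally stored as base letters and shaped at display time, so this only illustrates the shape inventory.

```python
import unicodedata


def positional_forms(letter: str) -> dict[str, str]:
    """Unicode presentation forms of an Arabic letter, keyed by position."""
    forms = {}
    for position in ("ISOLATED", "INITIAL", "MEDIAL", "FINAL"):
        try:
            forms[position] = unicodedata.lookup(f"ARABIC LETTER {letter} {position} FORM")
        except KeyError:
            pass  # Unicode encodes no such positional form for this letter
    return forms


def strip_tashkeel(text: str) -> str:
    """Remove every non-spacing mark (category Mn), which covers Harakat, Tanween, and Shadda."""
    return "".join(ch for ch in text if unicodedata.category(ch) != "Mn")


print(sorted(positional_forms("MEEM")))
print(sorted(positional_forms("REH")))
print(strip_tashkeel("رَ رِ رُ رٌ"))
```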

4. Deep-Learning Techniques

Deep-learning techniques have shown their effectiveness in a multitude of real-life applications, such as power systems [40], autonomous vehicles [41], and neurobiology [42]. Multiple works have experimented with deep-learning techniques to recognize Arabic handwritten text. The majority of these techniques are based on encoder–decoder architectures involving different combinations of convolutional neural networks (CNN), recurrent neural networks (RNN), long short-term memory (LSTM), and connectionist temporal classification (CTC). Other works employed Transformer architectures, and some utilized generative adversarial networks (GANs).

4.1. Convolutional Neural Network (CNN) [43]

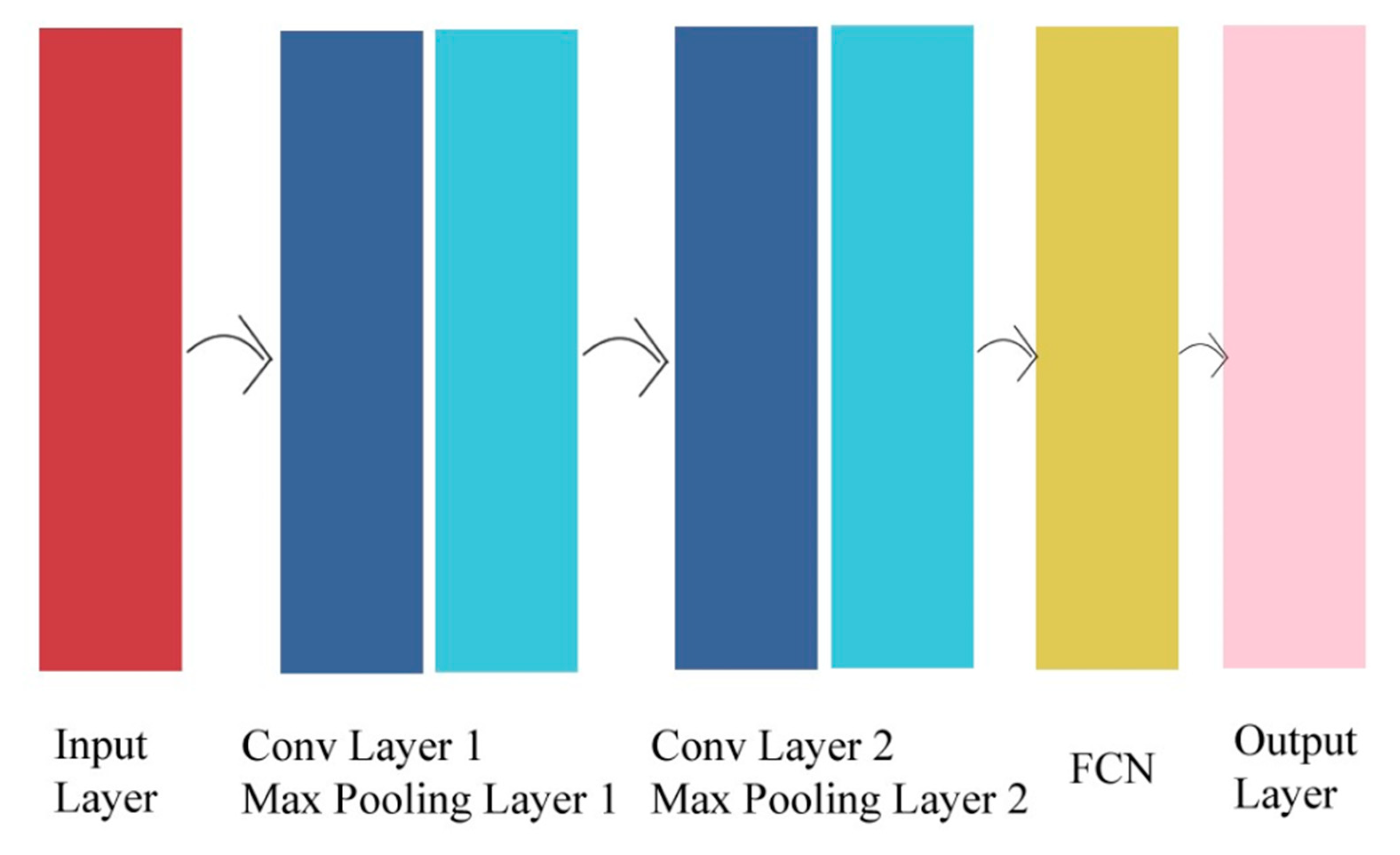

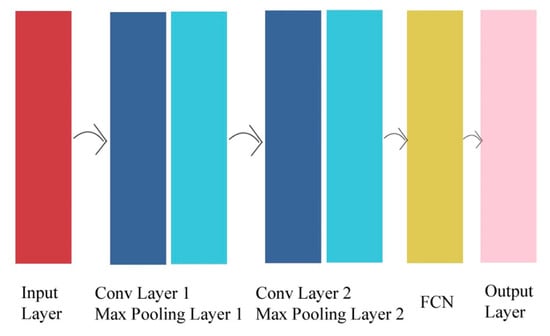

The CNN solves the problem of the excessive number of parameters required by fully connected networks (FCNs). Moreover, it preserves the spatial context, so the output of a convolutional layer retains the shape of an image. Most importantly, it possesses translation equivariance; that is, translating the input image and then applying the convolutional layer gives (apart from border effects) the same result as applying the layer and then translating its output; together with pooling, this makes the predicted class largely insensitive to small shifts of the input. A CNN stacks convolutional and pooling layers, whose behavior is controlled by padding and stride, followed by an FCN that performs the classification. The convolutional layer is the core of the CNN, as it reduces model capacity compared to the FCN. In addition, it is efficient at detecting spatial patterns. It mainly applies cross-correlation with a kernel that has learnable parameters. Padding adds zeros around the rows and/or columns of the input to preserve or increase the size of the output. The stride skips rows and/or columns to reduce dimensionality and computation. Pooling acts as a voting or averaging mechanism (for example, max or average pooling) that makes the representation smaller and more manageable. It is important to note that padding, stride, and the number of channels are hyperparameters rather than learnable parameters. An example of a CNN is shown in Figure 7.
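The operations described above can be sketched in pure Python for a single-channel image. The sketch shows that padding and stride only change the output size, following floor((n + 2p - k) / s) + 1 along each axis, that only the kernel values would be learned, and that shifting the input shifts the output (translation equivariance). The 4×4 image and the hand-picked edge kernel are illustrative assumptions; in a trained CNN the kernel values are learned.

```python
def cross_correlate(image, kernel, stride=1, padding=0):
    """2D cross-correlation (what deep-learning 'convolution' layers compute) with zero padding."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    zero_row = [0] * (w + 2 * padding)
    padded = ([zero_row] * padding
              + [[0] * padding + row + [0] * padding for row in image]
              + [zero_row] * padding)
    out_h = (h + 2 * padding - kh) // stride + 1
    out_w = (w + 2 * padding - kw) // stride + 1
    return [
        [
            sum(kernel[i][j] * padded[y * stride + i][x * stride + j]
                for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [
        [max(fmap[y + i][x + j] for i in range(size) for j in range(size))
         for x in range(0, len(fmap[0]) - size + 1, size)]
        for y in range(0, len(fmap) - size + 1, size)
    ]


# A vertical stroke in column 1 of a 4x4 binary image, and the same stroke shifted right.
stroke = [[0, 1, 0, 0] for _ in range(4)]
shifted = [[0, 0, 1, 0] for _ in range(4)]
edge = [[-1, 1], [-1, 1]]  # hand-picked left-to-right edge detector (learned in practice)

print(cross_correlate(stroke, edge)[0])               # response to the stroke
print(cross_correlate(shifted, edge)[0])              # same response, shifted one column
print(len(cross_correlate(stroke, edge, padding=1)))  # padding 1 gives a 5x5 output
print(cross_correlate(stroke, edge, stride=2))        # stride 2 gives a 2x2 output
print(max_pool(cross_correlate(stroke, edge)))
```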

Figure 7.

Simplified CNN with two Conv layers.

4.2. Long Short-Term Memory (LSTM) [44]

Long short-term memory is a type of RNN that overcomes the RNN's difficulty in remembering very long chains of dependencies. It adds a memory cell regulated by an input gate, an output gate, and a forget gate. However, although LSTM mitigates the vanishing-gradient problem, it can still suffer from exploding gradients, because gradients can grow exponentially with the number of time steps through which they are back-propagated. Moreover, LSTMs cannot be parallelized across time steps, so they can take a long time to train, and they still cannot remember very long chains of dependencies, even though they are better than plain RNNs in that respect.
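The gating described above can be sketched as a single LSTM step with a hidden size of one, so that every quantity is a plain number. The weights are hypothetical and chosen by hand: with the forget gate nearly open and the input gate nearly closed, the additive update `c = f * c_prev + i * g` carries the cell state across many steps almost unchanged, which is the mechanism that lets LSTMs retain longer dependencies than plain RNNs.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One step of a scalar LSTM cell.

    params maps each gate name to (w_x, w_h, bias); the values used below are hypothetical.
    """
    def preact(gate):
        w_x, w_h, b = params[gate]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(preact("input"))        # how much new content to write
    f = sigmoid(preact("forget"))       # how much of the old cell state to keep
    o = sigmoid(preact("output"))       # how much of the cell state to expose
    g = math.tanh(preact("candidate"))  # candidate content
    c = f * c_prev + i * g              # additive memory-cell update
    h = o * math.tanh(c)                # new hidden state
    return h, c


PARAMS = {
    "input": (1.0, 0.0, -5.0),   # input gate almost closed
    "forget": (0.0, 0.0, 5.0),   # forget gate almost fully open
    "output": (0.0, 0.0, 5.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = 0.0, 1.0                  # start with a "remembered" value in the cell
for x in [0.0] * 10:
    h, c = lstm_step(x, h, c, PARAMS)
print(round(c, 4))               # still close to 1 after ten steps
```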

4.3. Connectionist Temporal Classification (CTC) [44]

Connectionist temporal classification is an approach to labeling unsegmented sequence data, which is a problem for RNN and encoder–decoder models. It is used in automatic speech recognition and handwritten-text-recognition tasks. It allows RNNs to be trained directly for sequence labeling without manually segmenting the data; therefore, it removes the need for an alignment between the input and the output. Given an input of arbitrary length, the network outputs one symbol per time step, drawn from the character set plus a special blank (epsilon); this output is then mapped to a word by merging repeated characters and removing the blanks. The CTC loss maximizes the conditional probability of the target, obtained by marginalizing (summing) over all valid alignments, where the probability of each alignment is the product of its per-step probabilities. To elucidate this point, consider the target Rayyan, of length 6, perceived by the RNN in eight steps, each producing a distribution over the set {R, a, y, n, epsilon}. The probabilities of different alignments can then be computed, such as [R, a, y, epsilon, y, a, n, n] and [R, a, y, y, a, n, epsilon, n], which yield the outputs Rayyan and Rayann, respectively; the blank between the two y characters is what prevents them from being merged. Next, by marginalizing over the alignments, a distribution over the outputs is obtained, and the Rayyan sequence has the highest probability.
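The collapsing rule and the marginalization over alignments can be sketched in pure Python. `collapse` implements the many-to-one mapping (merge repeats, then drop blanks), and `ctc_probability` sums over all valid alignments with the standard CTC forward (dynamic-programming) recursion instead of enumerating them; the brute-force version is included only to cross-check it on tiny inputs. The symbol `ε` for the blank and the probability table are illustrative assumptions.

```python
from itertools import groupby, product

BLANK = "ε"


def collapse(path):
    """CTC mapping: merge consecutive repeats, then remove blanks."""
    return "".join(sym for sym, _ in groupby(path) if sym != BLANK)


def ctc_probability(probs, label, alphabet):
    """P(label | input), summed over all alignments with the CTC forward algorithm.

    probs[t][k] is the network's probability for alphabet[k] at time step t.
    """
    idx = {sym: k for k, sym in enumerate(alphabet)}
    ext = [BLANK]
    for ch in label:
        ext += [ch, BLANK]                # blank-interleaved label: ε l1 ε l2 ... ε
    n = len(ext)
    alpha = [0.0] * n
    alpha[0] = probs[0][idx[BLANK]]       # start with a blank ...
    if n > 1:
        alpha[1] = probs[0][idx[ext[1]]]  # ... or with the first character
    for t in range(1, len(probs)):
        new = [0.0] * n
        for s in range(n):
            total = alpha[s] + (alpha[s - 1] if s >= 1 else 0.0)
            # Skipping the blank is allowed only between two different characters.
            if s >= 2 and ext[s] != BLANK and ext[s] != ext[s - 2]:
                total += alpha[s - 2]
            new[s] = total * probs[t][idx[ext[s]]]
        alpha = new
    return alpha[-1] + (alpha[-2] if n > 1 else 0.0)


def ctc_probability_bruteforce(probs, label, alphabet):
    """Same quantity by enumerating every path (feasible only for tiny inputs)."""
    total = 0.0
    for path in product(range(len(alphabet)), repeat=len(probs)):
        if collapse([alphabet[k] for k in path]) == label:
            p = 1.0
            for t, k in enumerate(path):
                p *= probs[t][k]
            total += p
    return total


print(collapse("Rayεyann"), collapse("Rayyanεn"))  # the two alignments from the text

ALPHABET = ["a", "b", BLANK]
PROBS = [[0.6, 0.1, 0.3], [0.2, 0.2, 0.6], [0.5, 0.3, 0.2], [0.1, 0.7, 0.2]]
for label in ["ab", "aa", "a"]:
    print(label, ctc_probability(PROBS, label, ALPHABET),
          ctc_probability_bruteforce(PROBS, label, ALPHABET))
```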

4.4. Transformer [45]

Transformer addresses the problem of gradient explosion/vanishing in different types of RNN. It accomplishes this by using an encoder–decoder architecture in which an attention mechanism, rather than recurrence, encodes each position. It differs from earlier encoder–decoder architectures in the following elements: positional encoding and Transformer blocks, which consist of multi-head attention, a position-wise feed-forward network, and add-and-norm layers. Multi-head attention allows the model to attend to different positions, rather than being dominated by a few tokens, and provides multiple representation subspaces. Each head has its own learned query, key, and value projections, and the heads run in parallel, with no sequential dependence between them. Previously, encoder–decoder architectures could focus on the most important parts of the input by adding an attention layer, with key–value pairs from the encoder and a query from the decoder, whose output was passed to the decoder's recurrent layer. In the Transformer, attention is instead an essential part of both the encoder and the decoder and appears three times: one multi-head attention in each encoder block (self-attention) and two in each decoder block (masked self-attention and encoder–decoder attention), each concatenating the outputs of its heads. Add and norm makes training easier by adding the input and output of the multi-head attention or position-wise feed-forward layer in a residual connection and then applying layer normalization, which normalizes across the features of each position rather than across the batch. The position-wise feed-forward network consists of two fully connected layers with a ReLU activation in between, applied identically and independently to every position (equivalent to two convolutions with a kernel size of 1); it transforms the representation of each position. Positional encoding (not to be confused with the position-wise feed-forward network) adds positional information to the input embeddings using sine functions for the even dimensions and cosine functions for the odd dimensions. This is necessary because sequential order is not retained by the multi-head attention layer or the position-wise feed-forward network, since they process positions independently and in parallel.
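Two of the ingredients described above can be sketched in pure Python: the sinusoidal positional encoding (sine on even dimensions, cosine on odd dimensions, with the base 10000 used in the original Transformer paper) and single-head scaled dot-product attention, softmax(QKᵀ/√d_k)V. The sketch omits the learned projections, multiple heads, and masking, and the small Q, K, and V matrices are arbitrary examples. The final comparison illustrates why positional encoding is needed: without it, reordering the key–value pairs leaves the attention output unchanged, so attention alone cannot see word order.

```python
import math


def positional_encoding(num_positions: int, d_model: int) -> list[list[float]]:
    """PE[pos][2k] = sin(pos / 10000^(2k/d)), PE[pos][2k+1] = cos(pos / 10000^(2k/d))."""
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            angle = pos / 10000 ** (2 * (i // 2) / d_model)
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)                          # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Single-head scaled dot-product attention without masking."""
    d_k = len(K[0])
    outputs = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return outputs


Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(positional_encoding(2, 4))
print(attention(Q, K, V))
print(attention(Q, K[::-1], V[::-1]))  # same output: the order of key-value pairs is invisible
```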

4.5. Generative Adversarial Networks (GANs) [46]

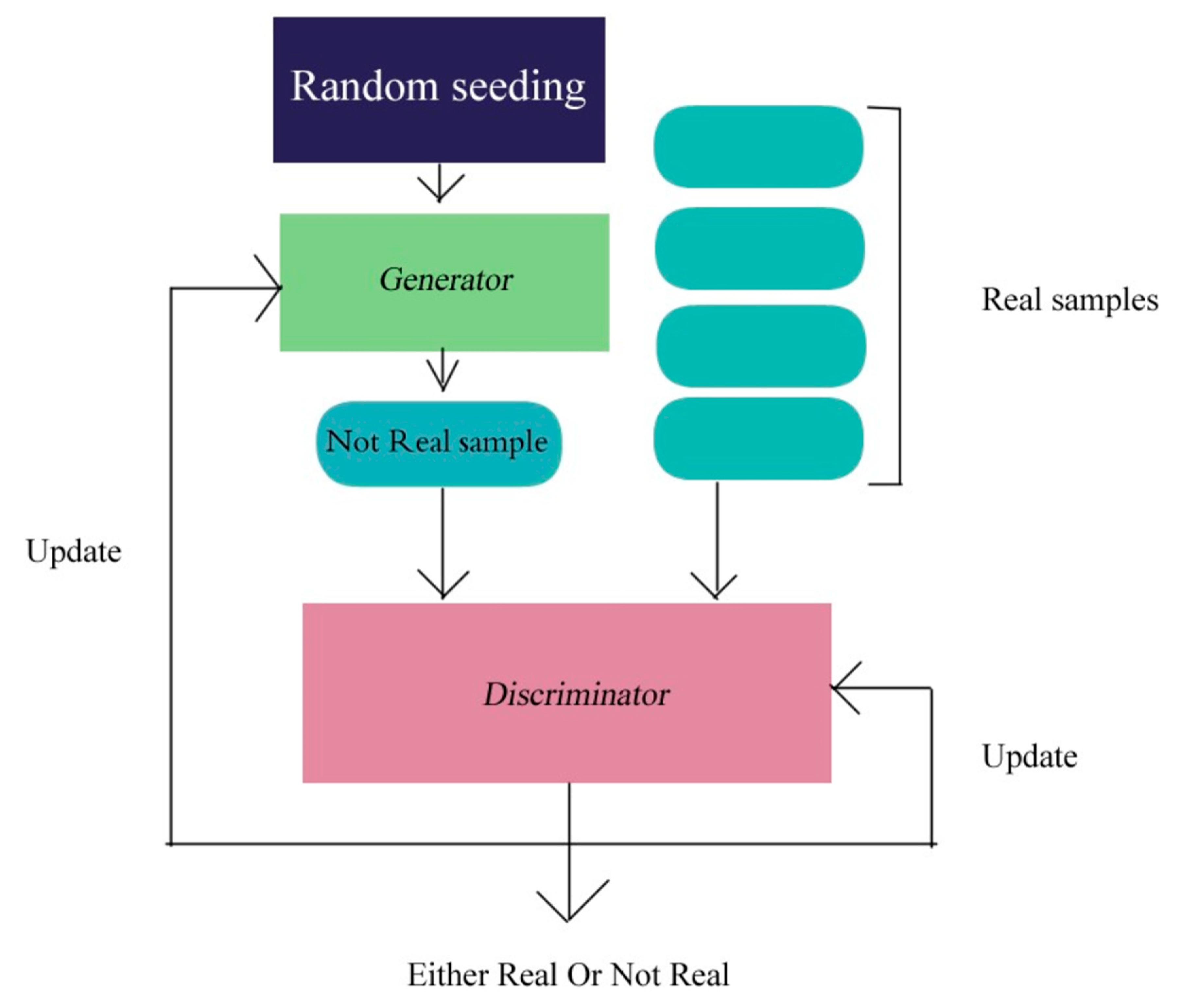

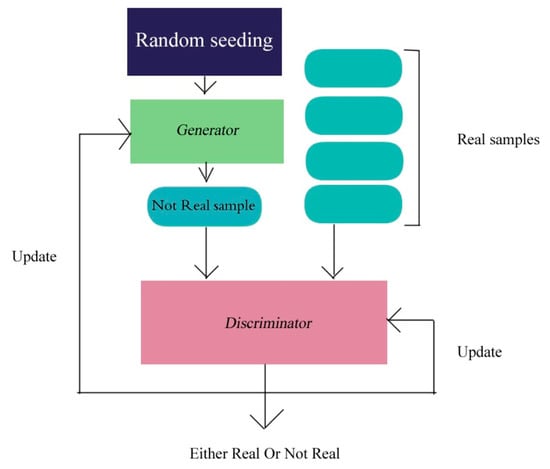

GANs consist of two parts: the generator and the discriminator. The generator and the discriminator compete against each other, which is why they are called adversarial. The generator tries to produce examples that look as real as the ground-truth examples, whereas the discriminator tries to distinguish whether an image is real or fake; in other words, it determines whether the image belongs to the ground-truth dataset or was produced by the generator. The generator is fed a random input vector and generates an example from it. The discriminator takes both generated and real examples as inputs and classifies each as real or generated. The loss is computed from this classification, and both the generator and discriminator models are updated. GANs are usually used to augment data by generating samples that resemble the real dataset, which can help to prevent overfitting and reduce generalization errors in deep-learning models. Both parts of a GAN can be built from CNNs. An example of a GAN is shown in Figure 8. Green indicates the generator and dark pink indicates the discriminator. Dark blue shows the random seed, while the variations in turquoise show the confusion between the real and fake samples.
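The way the loss is computed from the discriminator's classification can be sketched with the standard binary cross-entropy formulation. The discriminator outputs below are made-up probabilities rather than outputs of real networks, and the generator uses the common non-saturating objective, in which it is rewarded when the discriminator labels its samples as real. When the discriminator is completely confused and outputs 0.5 for every sample, its loss equals 2 ln 2 (about 1.386).

```python
import math

EPS = 1e-12  # guards against log(0)


def bce(prob: float, target: int) -> float:
    """Binary cross-entropy of one predicted probability against a 0/1 target."""
    return -(target * math.log(prob + EPS) + (1 - target) * math.log(1 - prob + EPS))


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """D should output 1 for real samples and 0 for generated ones."""
    real = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real + fake


def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: G wants D to output 1 for its samples."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)


# Hypothetical state where D easily separates real samples from generated ones.
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]), generator_loss([0.1, 0.05]))
# Equilibrium: D cannot tell the samples apart and outputs 0.5 everywhere.
print(discriminator_loss([0.5], [0.5]), generator_loss([0.5]))
```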

Figure 8.

The general framework of GANs.

5. Evaluation Metrics

The character-error rate [34] and word-error rate [35] are the standard metrics for evaluating the accuracy of OCR systems. Both are based on the Levenshtein distance, which is the minimum number of edits needed to make two strings identical, counted either in characters or in words. It considers three types of error: (1) insertion errors, i.e., characters or words mistakenly added; (2) deletion errors, i.e., characters or words mistakenly omitted; and (3) substitution errors, i.e., characters or words replaced by incorrect ones.

The character-error rate can be computed as CER = (S + D + I)/N, which is equivalent to (S + D + I)/(S + D + C), where I is the number of insertions, D is the number of deletions, S is the number of substitutions, C is the number of correct characters, and N is the number of characters in the reference (N = S + D + C).

The word-error rate can be computed as WER = (S + D + I)/N, which is equivalent to (S + D + I)/(S + D + C), where I is the number of insertions, D is the number of deletions, S is the number of substitutions, C is the number of correct words, and N is the number of words in the reference (N = S + D + C).
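Both metrics can be computed with a single dynamic-programming implementation of the Levenshtein distance, applied either to character sequences (CER) or to lists of words (WER). The following is a minimal sketch with function names of our own; it divides by the reference length N = S + D + C, as in the formulas above.

```python
def levenshtein(ref, hyp):
    """Minimum number of substitutions, deletions, and insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # deletion: reference symbol missing from hyp
                curr[j - 1] + 1,         # insertion: extra symbol in hyp
                prev[j - 1] + (r != h),  # substitution (free when the symbols match)
            ))
        prev = curr
    return prev[-1]

def cer(reference, hypothesis):
    """Character-error rate: edit distance over reference characters."""
    return levenshtein(reference, hypothesis) / len(reference)

def wer(reference, hypothesis):
    """Word-error rate: edit distance over reference words (whitespace-split)."""
    ref_words = reference.split()
    return levenshtein(ref_words, hypothesis.split()) / len(ref_words)
```

For example, `wer("ذهب الولد إلى المدرسة", "ذهب الولد الى المدرسة")` returns 0.25: one substituted word (إلى recognized as الى) out of four reference words.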

6. Analysis of Recent Works

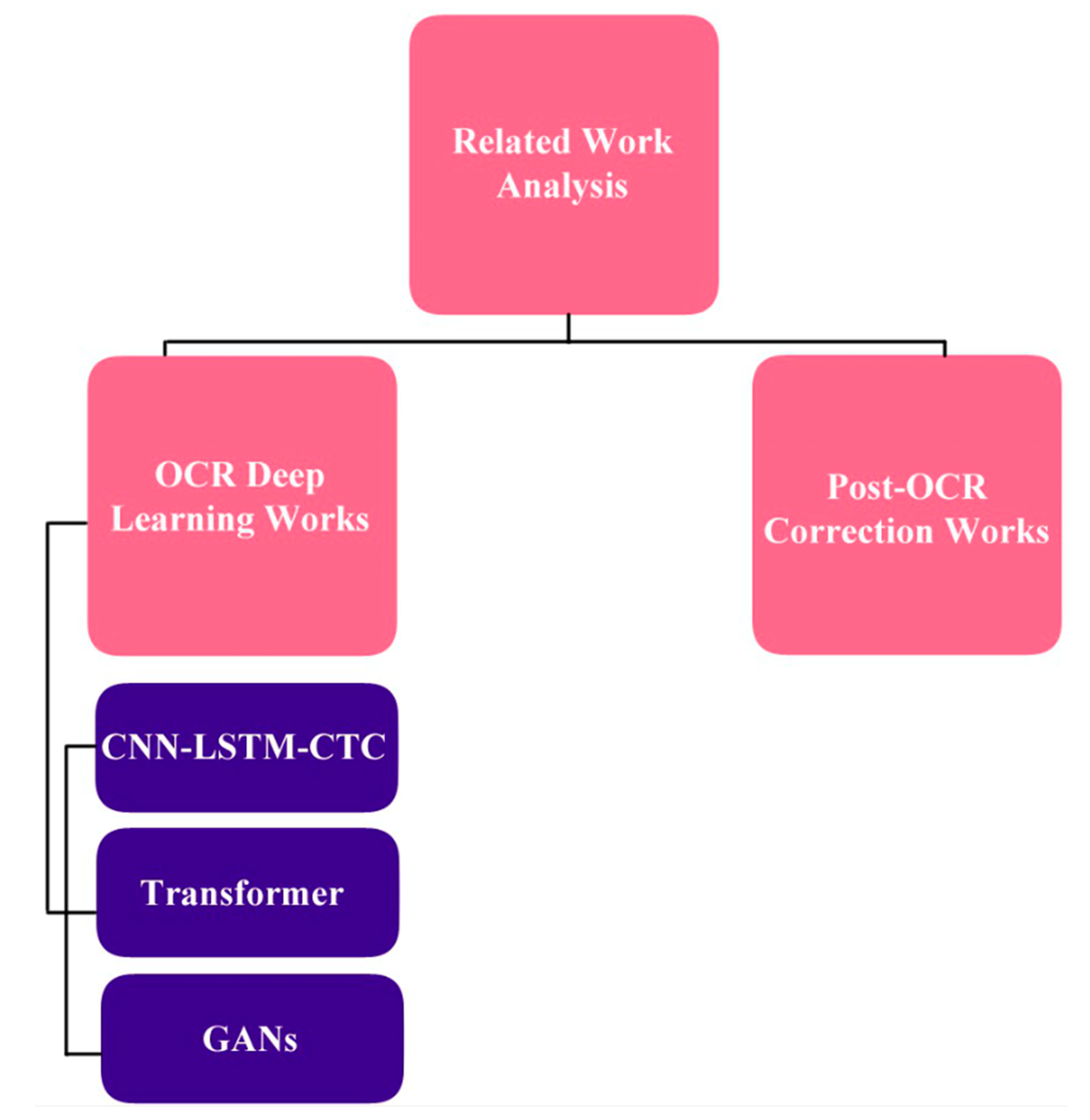

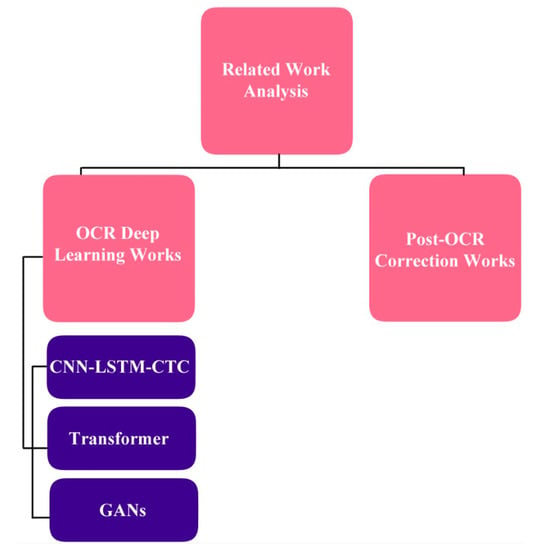

Figure 9 summarizes the taxonomy of the relevant works. In this hierarchical diagram, pink marks the main categories and purple the sub-categories.

Figure 9.

Taxonomy of the analyzed works.

6.1. OCR Deep-Learning Works

6.1.1. CNN-LSTM-CTC-Based Works [47,48,49,50,51,52,53]

Noubigh [47] presented a transfer-learning technique based on the CNN-BLSTM-CTC architecture. The model was first trained on 25,888 images of printed text in four fonts (Tahoma, Thuluth, Andalus, and Naskh) from the P-KHATT dataset and validated on 5656 images, so that its parameters were learned from printed text. Next, the model was retrained on two Arabic handwriting datasets, KHATT (9494 samples) and AHTID (2699 samples). Testing on KHATT yielded a CER of 2.74% and a WER of 17.84%, and testing on AHTID yielded a CER of 2.03% and a WER of 15.85%.

Boualam et al. [48] used five CNN layers followed by two RNN layers and then a CTC layer, which computes the loss between the ground-truth text and the network's per-frame predictions. The text is decoded from the images by taking the most probable character at each time step, merging consecutive duplicate characters, and removing blanks. They used the IFN/ENIT dataset, specifically 946 names out of 32,492 handwritten names of Tunisian villages written by more than 1000 writers. To augment the dataset, they applied rotation, upward shifting, and shearing to generate 15 examples for each image, ending up with 487,350 training samples, or roughly 3,876,648 characters. The resulting WER and CER were 8.21% and 2.10%, respectively, for the best train–test split of 80–20.
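The greedy (best-path) decoding step can be sketched in a few lines. This is a minimal illustration under our own naming, assuming the network outputs one probability vector per time step and that index 0 is the CTC blank; it is not the authors' code. Repeats are merged before blanks are removed, so a blank between two identical labels preserves a genuinely doubled character.

```python
def ctc_greedy_decode(frame_probs, alphabet, blank=0):
    """Best-path CTC decoding: argmax per frame, merge repeats, then drop blanks."""
    best_path = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    decoded = []
    previous = None
    for label in best_path:
        # Emit a label only when it differs from the previous frame and is not blank.
        if label != previous and label != blank:
            decoded.append(alphabet[label])
        previous = label
    return "".join(decoded)
```

For instance, with the hypothetical alphabet `["-", "ب", "ا"]`, frames whose argmax sequence is ب, ب, blank, ب, ا, ا decode to "ببا".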

Shtaiwi et al. [49] proposed a model based on CRNN-BiLSTM to recognize Arabic handwritten text. The model was trained on the MADCAT dataset. It performs text detection and segmentation simultaneously, and it achieved high accuracy in parsing a complete page, segmenting it into lines, and then predicting the written text. A large-scale dataset of Arabic handwritten documents that is challenging for OCR was used to evaluate the model. The character-error rate was 3.96%.

Alzrrog et al. [50] created a custom dataset of handwritten Arabic words consisting of weekday names, called the Arabic Handwritten Weekdays Dataset (AHWD). It was written by 1000 people and contains 21,357 words uniformly distributed across the seven days. The researchers then used a deep CNN (DCNN) for training and testing on this dataset, aiming for a model that also generalizes to new datasets. Their approach starts by training DCNNs with different structures on a balanced, randomly selected dataset. To improve the results and avoid overfitting, dropout, image regularization, and a suitable learning rate were used. Lastly, blind testing was performed on a hidden test set. The results were promising: the model achieved 0.9939 accuracy and a 0.0461 error rate on the AHWD dataset, and 0.9971 accuracy and a 0.0171 error rate on the IFN/ENIT dataset.

Alkhawaldeh et al. [51] proposed a model to recognize Arabic handwritten digits by employing pre-training with a CNN-LSTM architecture. The CNN part (VGG-16 or LeNet) extracts representative visual features, while the LSTM captures long-term dependencies between the features extracted in the previous step. The AHDBase and MAHDBase datasets were used for training and testing, augmented with transformations such as shifting, shearing, and zooming. For training, 6K images were used, and 1K images were used for testing. To evaluate the classification model, the training and validation loss, accuracy, recall, and precision were reported. On AHDBase, LeNet + LSTM scored 99% recall and precision, and VGG-16 + LSTM reached 98.92% accuracy. On MAHDBase, both LeNet + LSTM and VGG-16 + LSTM scored 99% recall and precision, and LeNet + LSTM reached 98.47% accuracy.

Fasha et al. [52] proposed a CNN-BiLSTM model with CTC loss to recognize printed Arabic text without character segmentation. Five CNN layers act as feature extractors, while two BiLSTM layers capture long-term dependencies. The researchers created a generated dataset in 18 different fonts, with text drawn from over two million words of the Arabic Wikipedia dump. When tested on the same fonts, the model achieved a 98.76% character-recognition rate (CRR) and a 90.22% word-recognition rate (WRR). The authors conducted a thorough study, evaluating the model on different types of test set by varying the fonts and dataset sizes and by adding noise.

Notably, when the model was tested on unseen data, it achieved a CRR of 85.15% and a WRR of 23.7%, and on noisy images, it achieved a CRR of 77.29% and a WRR of 14.18%.

Dölek et al. [53] proposed a model for Ottoman OCR, since Arabic OCR does not perform well on Ottoman texts. They focused on the Naskh font and created a dataset from printed Ottoman documents. Their model consists of CNN layers, followed by LSTM layers and, finally, a CTC layer. They compared their model on a test set of 21 pages against ABBYY FineReader, Google OCR, Tesseract Arabic, and Tesseract Persian. The resulting character-recognition rate was 97.37% for joined text, 96.12% for normalized text, and 88.86% for raw text.

Khaled [54] proposed an encoder–decoder convolutional recurrent neural network trained with the connectionist temporal classification (CTC) loss function. Five public datasets were used to train the network: Alexu, SRU-PHN, AHDB, ADAB, and IFN/ENIT, with a total of 63,403 words. The beam-search decoder with data augmentation achieved the best result, with a 97.38% word-recognition rate and a 99.06% character-recognition rate on the IFN/ENIT dataset. Without augmentation, the model scored a lower word-recognition rate of 88.93% on the same set, but it achieved better results, 90.65% CRR and 70% WRR, on a new test set created with 100 unseen Arabic words and images.
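Unlike best-path decoding, beam search keeps several candidate prefixes and sums the probabilities of all alignments that collapse to the same text. The following is a minimal sketch of the standard CTC prefix beam search without a language model; the exact decoder settings of Khaled [54] are not described here, so the beam width, the blank index 0, and the function name are illustrative assumptions.

```python
from collections import defaultdict

def ctc_prefix_beam_search(frame_probs, alphabet, beam_width=3, blank=0):
    """Return (text, probability) of the most probable collapsed label sequence."""
    # Each prefix tracks [P(ending in blank), P(ending in a non-blank label)].
    beams = {(): [1.0, 0.0]}
    for frame in frame_probs:
        next_beams = defaultdict(lambda: [0.0, 0.0])
        for prefix, (p_blank, p_label) in beams.items():
            for k, p in enumerate(frame):
                if k == blank:
                    # A blank never changes the prefix.
                    next_beams[prefix][0] += (p_blank + p_label) * p
                elif prefix and k == prefix[-1]:
                    # A repeat with no blank in between collapses into the prefix...
                    next_beams[prefix][1] += p_label * p
                    # ...but after a blank it starts a new, doubled character.
                    next_beams[prefix + (k,)][1] += p_blank * p
                else:
                    next_beams[prefix + (k,)][1] += (p_blank + p_label) * p
        # Keep only the `beam_width` most probable prefixes.
        ranked = sorted(next_beams.items(), key=lambda kv: sum(kv[1]), reverse=True)
        beams = dict(ranked[:beam_width])
    best_prefix, (p_blank, p_label) = max(beams.items(), key=lambda kv: sum(kv[1]))
    return "".join(alphabet[k] for k in best_prefix), p_blank + p_label
```

On a two-frame input in which each frame assigns 0.6 to the blank and 0.4 to a letter, best-path decoding returns the empty string (path probability 0.36), whereas this search returns the letter, whose three alignments sum to 0.64.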

6.1.2. Transformer-Based Works [55,56]

Mustafa et al. [55] built a custom dataset generated using 13 different online fonts with various styles, sizes, and noise levels; it includes more than 270 million words. In addition, the KHATT dataset, which features Arabic handwritten text written by 1000 participants, was used to train the model. The model was designed for Arabic handwritten-text recognition and consists of two parts: a CNN and a Transformer. ResNet-101, with 101 layers, serves as the feature extractor in the CNN part. The Transformer part is initialized with four encoders and decoders, attention heads, and 256 hidden dimensions. Trained on all 12 fonts, with diacritics, and with both long sequences (more than five words) and short sequences (five or fewer words), the model achieved a CER of 7.27%, a WER of 8.29%, and a sentence-error rate (SER) of 10%.

Momeni et al. [56] compared two Transformer types for offline Arabic handwritten-text recognition: the Transformer Transducer, which is used in speech-recognition tasks, and the Transformer with cross-attention. The training data were a synthetic dataset of 500K printed Arabic images with their ground truth, processed with image-processing techniques such as erosion, compression, shearing, distortion, and rotation. For testing, the KHATT dataset, written by 1000 writers, was used; in particular, 6742 text lines from its unique-text portion. The Data-efficient Image Transformer (DeiT-base) and asafaya-BERT-base-arabic were the pre-trained models used to initialize the model: the former for visual-feature extraction, and the latter, a BERT model, for predicting the text. Twelve attention heads were used in the encoder and eight in the decoder. The Transformer Transducer achieved 19.76% CER, and the Transformer with cross-attention achieved 18.45% CER.

6.1.3. GANs-Based Works [57,58,59,60]

Alwaqfi et al. [57] proposed a model to recognize Arabic handwritten characters using a generative adversarial network (GAN) followed by a CNN. The GAN has two components: a 10-layer CNN generator, which produces new images that resemble real images of Arabic characters and words and attempts to fool the discriminator; and a nine-layer discriminator, which tries to distinguish real images from generated ones. Sparse categorical cross-entropy was used as the loss. Real data are fed to the discriminator, and the discriminator's output is fed back to the generator to guide the generation of new images. The dataset used was the Arabic Handwritten Characters Dataset (AHCD), which has 16,800 characters written by 60 writers. A third model, a CNN, was then trained on the generated dataset together with the original dataset. On the test set, the model achieved an accuracy of 99.78% when the GAN was used, compared with 96.28% without it.

Eltay et al. [58] developed a GAN model based on ScrabbleGAN to address the imbalance in character frequency in Arabic handwritten-text recognition. Their system consists of an adaptive data-augmentation algorithm that evens out the character distribution, followed by a GAN that generates synthesized words, followed by 200 blocks of BiLSTM that recognize the input words. The adaptive data-augmentation mechanism cuts back overused characters, such as Alif, and balances characters with fewer samples by generating more versions of them. In the GAN, the generator and discriminator work together: one generates fake data that look as real as possible, while the other tries to detect whether the data are fake or real. Two metrics were used to evaluate the GAN: Fréchet inception distance (FID) and geometry score (GS). FID measures the distance between the feature-vector distributions of real and generated images; the lower, the better. GS measures the quality of the generated samples by detecting mode collapse, which occurs when the generator cannot produce samples as diverse as the real-world data distribution and instead generates very similar or even identical samples. The IFN/ENIT and AHDB datasets were used to generate the images. For AHDB, the researchers achieved an FID of 27.70 and a GS of 0.0114; for IFN/ENIT, an FID of 38.79 and a GS of 0.01887. The recognizer was evaluated with the word-recognition rate (WRR). Using the generated text only, the researchers achieved 85.16% WRR for AHDB and an average of 61.09% WRR on set (e) of IFN/ENIT.
Using the generated text in addition to the original text, the results were better, with 99.30% WRR for AHDB and an average of 95.87% WRR on set (e) of IFN/ENIT.
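FID fits a Gaussian to the feature vectors (in practice, Inception-network activations) of real and of generated images and computes FID = ||μ_r − μ_g||² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^(1/2)). The following minimal sketch makes the simplifying assumption of diagonal covariance matrices, so the matrix square root reduces to element-wise square roots; a real evaluation uses full covariances, and the feature statistics are assumed to be already computed.

```python
import math

def fid_diagonal(mu_real, var_real, mu_gen, var_gen):
    """FID between two Gaussians, ASSUMING diagonal covariances.

    With diagonal covariances the trace term Tr(S_r + S_g - 2 (S_r S_g)^(1/2))
    reduces to sum_i (sqrt(var_r_i) - sqrt(var_g_i))^2.
    """
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_real, mu_gen))
    cov_term = sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(var_real, var_gen))
    return mean_term + cov_term
```

Identical statistics give an FID of 0, and the score grows as the means or spreads of the two feature distributions drift apart, which is why lower values indicate more realistic generated images.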

Mustapha et al. [59] proposed a conditional deep convolutional generative adversarial network (CDCGAN) to generate isolated handwritten Arabic characters in a guided manner. Scored with the mean multiscale structural similarity (MS-SSIM), the generated samples reached 0.635, compared with 0.614 for the real samples. The dataset used was AHCD, which contains 16,800 isolated handwritten Arabic characters in 28 classes, one per character. To assess the usefulness of the generated dataset for machine-learning models, a CNN model (LeNet5) was trained and tested on it, showing a gap of 10% between the accuracy obtained with the generated set and with the real set, which achieved 94.99% on the Arabic set. Moreover, an accuracy of 95.08% was achieved on an augmented set containing elements from both sets. In addition to the experiment on isolated Arabic characters, English handwritten characters were generated from the EMNIST dataset, achieving an MS-SSIM of 0.4658 compared with 0.4610 for the real set, and the LeNet5 classifier achieved accuracies of 99.91% on the real set and 94.30% on the generated set.

Jemni et al. [60] presented a GAN-based model to enhance the binarization of degraded handwritten documents, a step performed before OCR, with the goal of improving OCR accuracy. The binarization model consists of a generator and a discriminator. The generator employs U-Net, an encoder–decoder network with a downsampling encoding path, an upsampling decoding path, and skip connections, to recover clean images from degraded ones. The discriminator is built with a CNN architecture to distinguish the generated binarized image from the ground truth for a given degraded image. The OCR model adopts a CNN-RNN architecture followed by a CTC layer, where the RNN consists of two BiGRU layers. KHATT was used for Arabic and IAM for English. The generated degraded sets were used to test the OCR models. In particular, two OCR models were created: S1, which uses the ground truth to train the recognizer, and S2, which uses the generated images. For Arabic binarization, the peak signal-to-noise ratio (PSNR) of S1 was 15.45, the second best of all the baseline models (behind the cGAN baseline at 15.52), with S2 producing 15.44. The best Arabic OCR accuracy, 24.33% CER and 47.67% WER, was achieved by S1, while S2 achieved 25.31% CER and 48.48% WER, the results closest to those of S1. Similarly, for English, S1 achieved the best results among all the baseline models, scoring 15.97 PSNR, 21.98% CER, and 49.74% WER. Further experiments examined the effect of fine-tuning on DIBCO competition datasets and showed that fine-tuning on datasets from similar distributions led to better PSNR, which reached 21.85 when fine-tuning on a mixture of the H-DIBCO 2016, DIBCO, and Palm-Leaf datasets, which are datasets of degraded English handwritten documents.

6.2. Post-OCR Correction Works [61,62,63,64,65,66,67,68,69,70,71]

Because Arabic lacked a large dataset of spelling errors, Aichaoui et al. [61] created one, called SPIRAL, for training deep-learning models that correct spelling errors. They collected textual data from various newspaper sites, such as Okaz, and from open Arabic corpora, such as Maktabah Shamlah. They then normalized the text by removing punctuation, duplicate words, and numbers, and generated eight kinds of error. The total number of words was 248,441,892. In total, 80% of the resulting set was used to fine-tune the pre-trained AraBART model and 20% was used for testing. The highest recall values were 0.863 for space issues and 0.856 for Tachkil (diacritization) errors, while the lowest were 0.337 for deletions and 0.644 for keyboard errors.

Alkhatib et al. [62] proposed a framework to detect and correct spelling and grammatical errors at the word level using AraVec word embeddings, a BiLSTM, an intra-sentence attention mechanism, and a polynomial classifier. The manually revised corpus contains 15 million erroneous Arabic words, along with their annotation and validation. Although 150 error types were derived, including NER, syntax, and morphological errors, the classifier only performed binary classification (erroneous or correct), as the error types are not provided in unsupervised learning.

Solyman et al. [63] proposed a complex model to correct different types of spelling, syntax, and grammatical errors. The model is a seq2seq model built on a multi-head attention Transformer. Seq2seq models suffer from exposure bias: during training, they are conditioned on the ground-truth previous target words, whereas during inference these are replaced by words generated by the model itself. A noising model was applied to construct a synthetic dataset of 272,352,599 training pairs of target sentences and sources injected with back-translation, spelling, and normalization errors. To aggregate information dynamically across layers, a capsule network with expectation-maximization (EM) routing was devised. Lastly, Kullback–Leibler divergence was applied as a bidirectional regularization term during training to improve the agreement between left-to-right (L2R) and right-to-left (R2L) predictions, ultimately mitigating exposure bias. The best model, which employed right-to-left decoding, EM routing, bidirectional agreement, and L2R re-ranking, achieved F1 scores of 71.82 and 74.18 on the QALB-2014 and QALB-2015 datasets, respectively, demonstrating its ability to detect and correct Arabic errors.
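The agreement term can be illustrated with the Kullback–Leibler divergence between the token distributions predicted by the two decoding directions. The following is a minimal sketch of a symmetric KL penalty averaged over target positions; it assumes the R2L distributions have already been re-ordered to align with the L2R positions, and the function `bidirectional_agreement` is hypothetical, not the exact regularizer of [63].

```python
import math

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same vocabulary."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)

def bidirectional_agreement(l2r_probs, r2l_probs):
    """Hypothetical regularizer: symmetric KL between aligned L2R and R2L
    predictions, averaged over target positions."""
    total = 0.0
    for p, q in zip(l2r_probs, r2l_probs):
        total += kl_divergence(p, q) + kl_divergence(q, p)
    return total / len(l2r_probs)
```

The penalty is zero when both directions predict the same distribution at every position, so adding it to the training loss pushes the two decoders toward consistent outputs.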

Abandah et al. [64] addressed soft Arabic spelling errors, which usually occur because some Arabic letters have orthographic variants that are easily confused. A BiLSTM network was proposed to correct such errors at the character level, with the problem formulated as a one-to-one sequence transcription. Stochastic error injection and transformed input are used to avoid a costly encoder–decoder model. For training and validation, either classical Arabic (Tashkeela, 2312K words) or Modern Standard Arabic (ATB3, 305K words) was used. The best model corrects 96.4% of the injected errors at an injection rate of 40% and achieves a 1.28% character-error rate on a real test set.
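Stochastic error injection of this kind can be sketched as replacing confusable letters at a given rate while keeping the output aligned one-to-one with the input, which is what makes a character-level BiLSTM (rather than an encoder–decoder) sufficient. The confusion sets below (hamza forms of alif, taa marbuta and haa, alif maqsura and yaa) and the function name are illustrative assumptions, not the exact scheme of [64].

```python
import random

# Illustrative (assumed) confusion sets of orthographically similar letters.
CONFUSIONS = {
    "ا": ["أ", "إ", "آ"],
    "أ": ["ا", "إ"],
    "إ": ["ا", "أ"],
    "آ": ["ا"],
    "ة": ["ه"],
    "ه": ["ة"],
    "ى": ["ي"],
    "ي": ["ى"],
}

def inject_soft_errors(text, rate, rng):
    """Replace each confusable letter with a variant with probability `rate`.
    The output has the same length as the input (one-to-one alignment)."""
    out = []
    for ch in text:
        if ch in CONFUSIONS and rng.random() < rate:
            out.append(rng.choice(CONFUSIONS[ch]))
        else:
            out.append(ch)
    return "".join(out)
```

For example, `inject_soft_errors("مدرسة", 1.0, random.Random(0))` returns "مدرسه", since taa marbuta has a single variant in these assumed sets; the clean input then serves as the training target for the corrupted sequence.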

Solyman et al. [65] presented an unsupervised approach that uses a confusion function to enlarge the training set with artificially generated data. A fine-tuned convolutional seq2seq model comprising nine encoder–decoder layers with an attention mechanism was developed. To enhance performance, feature extraction and classification were combined into one task, and stacking layers was considered an efficient way of capturing long-term dependencies within the local context. The synthetic data comprised 18,061,610 words from QALB and Alwatan articles. The data were preprocessed using pre-trained FastText embeddings, with the byte-pair encoding (BPE) algorithm applied to infrequent words, which yielded a notable improvement of 32.38%. The model achieved an F1 score of 70.91, a recall of 63.59, and a precision of 80.23 on the QALB test set, a performance comparable to those of other state-of-the-art (SOTA) models.
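BPE builds a subword vocabulary by repeatedly merging the most frequent pair of adjacent symbols, so that infrequent words can be represented as sequences of known subwords. The following is a minimal sketch of the merge-learning step in the style of Sennrich et al.; it is not the exact tokenizer configuration of [65], the word counts in the example are hypothetical, and ties are broken deterministically by symbol order.

```python
from collections import Counter

def learn_bpe(word_freqs, num_merges):
    """Learn BPE merge operations from a {word: frequency} dictionary."""
    # Each word starts as a sequence of characters plus an end-of-word marker.
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=lambda p: (pair_counts[p], p))
        merges.append(best)
        # Replace every occurrence of the best pair with the merged symbol.
        new_vocab = {}
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] = new_vocab.get(tuple(merged), 0) + freq
        vocab = new_vocab
    return merges
```

For example, `learn_bpe({"abab": 3, "ab": 2}, 2)` returns `[("a", "b"), ("ab", "</w>")]`: the pair "a b" occurs eight times in total, and after it is merged, "ab" followed by the end-of-word marker is the most frequent pair.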

Zribi [66] proposed a model that combines existing embedding techniques (SkipGram, FastText, and BERT) to detect and correct Arabic semantic errors, i.e., words that are incompatible with a sentence's contextual meaning. The model was tested on the ANC-KACST set, and the best model averaged FastText and BERT, achieving a recall of 88.9 and an F1 score of 91.8.

Almajdoubah [67] addressed both the diacritization of Arabic text and the correction of spelling mistakes, treating them as seq2seq transcription problems and investigating encoder–decoder LSTM and Transformer models. ATB3 (305K words) and Tashkeela (2312K words) were used for training and testing, with approximately 15% of each held out for testing. The highest accuracy, 99.78%, was achieved by an encoder consisting of embedding and LSTM layers and a decoder consisting of embedding and LSTM layers with Luong attention and Softmax activation at the dense output layer. It was concluded that training with diacritized target output corrects text with a high level of accuracy.

Irani et al. [68] noted that Arabic sentences embedded in Persian text complicate spelling-correction tasks. They therefore used a conditional random field (CRF) RNN network trained on 151,000 sentences containing artificial errors created by deletion, substitution, and insertion. Features were extracted from the dataset and fed to the network to produce predictions. The model achieved an accuracy of 83.36% when a single error was introduced into a sentence.
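Generating such training pairs can be sketched as applying one random edit to a clean sentence. The following minimal sketch assumes one error per sentence, matching the single-error setting above, and a caller-supplied alphabet; the function `inject_edit_error` and its signature are hypothetical, and the feature extraction and CRF-RNN of [68] are not shown.

```python
import random

def inject_edit_error(sentence, rng, alphabet, kind=None):
    """Apply one deletion, substitution, or insertion at a random character position.
    Returns the corrupted sentence and the kind of error applied."""
    kind = kind or rng.choice(["deletion", "substitution", "insertion"])
    chars = list(sentence)
    pos = rng.randrange(len(chars))
    if kind == "deletion":
        del chars[pos]
    elif kind == "substitution":
        # Pick a letter different from the original one.
        chars[pos] = rng.choice([c for c in alphabet if c != chars[pos]])
    elif kind == "insertion":
        chars.insert(pos, rng.choice(alphabet))
    else:
        raise ValueError(f"unknown error kind: {kind}")
    return "".join(chars), kind
```

Each corrupted sentence is paired with its clean original, and the returned error kind can also serve as a label if the error types are to be tracked.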

Abbad et al. [69] proposed an approach to diacritizing Arabic letters using four BiLSTMs. The diacritics were grouped into four categories: vowels, Sukoon, Shadda, and double-case endings (Tanween). The data came from the Tashkeela set, from which the authors used ten files of 10K lines each for training and one file for testing. The data were separated into two parts: (1) input vectors containing the indexes of letters; and (2) output vectors containing the indexes of the diacritics according to their four groups. The input and output vectors were then concatenated, and a sliding window with a size of 21 and a step of 7 was applied to produce continuous, fixed-size data. These embeddings were fed to the corresponding BiLSTM layers during training, with Sigmoid activation for binary cases and Softmax for multi-class cases. When all letters were included, the best model achieved a diacritization-error rate of 3% and a WER of 8.99%.
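The windowing step can be sketched as follows. This minimal sketch assumes that the sequence of letter indexes is right-padded with a hypothetical padding index 0 so that no letters at the end are dropped; how the original work handled the tail is not specified, and the function name is our own.

```python
def sliding_windows(seq, size=21, step=7, pad=0):
    """Split a sequence into overlapping fixed-size windows (size 21, step 7),
    right-padding with `pad` (an assumed padding index) so the tail is kept."""
    seq = list(seq)
    if len(seq) <= size:
        return [seq + [pad] * (size - len(seq))]
    # Pad so that the last window ends exactly at the end of the padded sequence.
    extra = (-(len(seq) - size)) % step
    seq = seq + [pad] * extra
    return [seq[i:i + size] for i in range(0, len(seq) - size + 1, step)]
```

With a step of 7 and a size of 21, consecutive windows overlap by 14 positions, so most letters are seen in three different contexts during training.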

Abandah et al. [70] created a model to diacritize Arabic poetry, whose rich compositions make it difficult to diacritize. Transfer learning was leveraged to reuse features of pre-trained models. Sixteen diacritic classes were formed, and four BiLSTM layers were used, followed by a Softmax activation. Dataset scarcity was addressed by careful training on subsets of APCD2. The best model consisted of two parts: (1) a pre-trained classification model for meter prediction; and (2) four BiLSTM layers that take the outputs of the corresponding transfer-learning layers together with the outputs of the lower layers. Training had three phases: first, the first stack of layers was frozen and the second stack was trained on DS1; second, the whole model was retrained on DS1; and third, the whole model was retrained on DS2, which was smaller than DS1 but had a higher diacritics ratio. The diacritization-error rate decreased from 6.08% to 3.54%, a relative improvement of 42%.

Almanaseer et al. [71] trained a deep belief network (DBN) to classify each input letter separately into its corresponding diacritized version, using 12 classes. Three layers of restricted Boltzmann machines (RBMs) were used, Borderline-SMOTE was applied to over-sample and balance the classes, and ReLU activation was employed. Tashkeela and LDC ATB3 were used for training and testing. At best, the model achieved a 2.21% DER and a 6.73% WER on the ATB3 dataset. On the Tashkeela set, it achieved 1.79%, an improvement of 14%.

To summarize, we critically reviewed recent works on both (1) OCR models and (2) text-correction models. Each type of model has gaps, and some offer unique insights that can be adopted to develop further models. CNN-LSTM-CTC proved effective when limited data were available, and deep-learning techniques for text correction proved effective in multiple works. In the following section, we discuss each work in more depth, highlighting its crucial points, and finally present our findings for each technique, for both OCR and text-correction works.

7. Discussion

7.1. OCR Deep-Learning Works

7.1.1. CNN-LSTM-CTC-Based Works [47,48,49,50,51,52,53]

Noubigh [47] tested the model on a small AHTID test set of only 901 samples. Testing on more datasets would produce a more realistic evaluation of the model.

Boualam et al. [48] tested the model on a set split from the same augmented set used for training. Such high accuracy is therefore unsurprising, but it may indicate an overfitted model, since generalization was never actually tested. The authors could use the unused parts of the IFN/ENIT dataset to test their model much more robustly. Moreover, they reported the complement of the WER, 91.79%, as the WER instead of 8.21%, which may lead to confusion.

Shtaiwi et al. [49] used a complex architecture that has the drawback of requiring significant time to train due to the additional layers of BiLSTM.

Alzrrog et al. [50] reported accuracy derived from the confusion matrix, which is not an appropriate indicator of an OCR model's true performance. The appropriate measures are the character-error rate or word-error rate, which the authors did not report. Moreover, such high test accuracy can indicate that the test set is nearly identical to the training set and not sufficiently challenging for the model. It was also not demonstrated that the results generalize, since the authors did not specify which part of the IFN/ENIT dataset was used in the blind testing.

Alkhawaldeh et al. [51] achieved 98% accuracy, which is unsurprising. Splitting the dataset into 6000 instances for training and only 1000 for testing gives a ratio of roughly 86% to 14%, which resulted in high accuracy in both the training and testing sets. Because testing was performed on only a small part of the dataset, this may also indicate overfitting. In addition, the size of the validation set was not reported; it was only mentioned that it was a small subset of the training set. A better judgment of the model's accuracy could have been made if a larger portion had been assigned to the testing set and if the validation part had been properly specified. Furthermore, the real indicator of an OCR model's accuracy is not accuracy itself but the character- or word-error rate; accuracy is justifiable only when the model classifies a single character at a time. For example, if a sequence of digits were fed to this model, the accuracy metric would not reflect the model's real performance.

Fasha et al. [52] achieved a word-recognition rate that differed drastically from the character-recognition rate (e.g., 23.7% WRR versus 85.15% CRR on unseen data). This gap remains an open issue that could be addressed by post-processing techniques.
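Part of this gap follows from compounding: a word is recognized correctly only if every one of its characters is. As an idealized estimate, assuming independent character errors and a fixed word length (both simplifying assumptions), WRR ≈ CRR^L for words of L characters. The function name below is our own.

```python
def estimated_wrr(crr, word_length):
    """Idealized WRR if each character is recognized independently with probability crr."""
    return crr ** word_length

# CRR values reported by Fasha et al. [52]; the five-letter word length is an assumption.
for crr in (0.9876, 0.8515, 0.7729):
    print(f"CRR {crr:.2%} -> estimated WRR for 5-letter words: {estimated_wrr(crr, 5):.1%}")
```

With CRRs of 98.76% and 85.15%, this estimate gives roughly 94% and 45% for five-letter words; the reported WRRs of 90.22% and 23.7% are lower still, so even modest per-character error rates translate into large word-level losses.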

With their best model, Dölek et al. [53] achieved a word-recognition rate of 58% for normalized text. This highlights the common issue of low WRR and suggests the need for extra steps to post-process the resulting text. In addition, although they evaluated the test set on several platforms, more variation in the test set itself would have allowed a more detailed empirical analysis.

Khaled [54] used only 5% of the merged training set for validation and 95% for training; such a small validation set may have allowed overfitting to go undetected.

It can be concluded from the works critiqued above that:

- CNN-LSTM-CTC works are often tested on test sets that are small relative to the training sets or not sufficiently challenging, so their high accuracies could be a sign of overfitting. This can be fixed by using an appropriate proportion between the training and testing splits. It also indicates the need for more challenging datasets;

- CNN-LSTM-CTC-based works can take a long time to train, especially if BiLSTM is used. Moreover, they achieve low word-recognition rates in challenging settings;

- CNN-LSTM-CTC is currently the de facto standard architecture because it handles spatial relations, long textual dependencies, and text alignment.

7.1.2. Transformer-Based Works [55,56]

Mustafa et al. [55] were limited by a shortage of resources and computational power: their experiments used a subset of 15,000 single-line text images instead of the whole dataset of 30.5 million single-line text images. With such resources, they might have achieved better results. In addition, varying the numbers of parameters, encoders, and decoders could enhance the model. Lastly, the model has high time complexity, as the self-attention in the multi-head attention layers of the encoder and the decoder scales quadratically with sequence length.

Momeni et al. [56] proposed models that have high time complexity and require a long time to train: the Transformer Transducer has 144.7 million parameters, while the Transformer with cross-attention has 153.1 million. Moreover, a high value of K in the beam search can increase the CER. Latency is also high: the Transformer with cross-attention has a latency of 223.7 ms when K = 5. In addition, since the model is initialized with DeiT-base and Arabic BERT, any weights without a counterpart in these pre-trained models are initialized randomly. First, DeiT takes a 384 × 384 RGB image as input, whereas their encoder expects variable-length grayscale images. Second, Arabic BERT lacks the encoder–decoder attention layers of the attention-based decoder in Momeni et al.'s model. Third, the Transducer decoder has an extra joint network. These differences can be seen as problems or as opportunities to initialize such parameters with techniques other than random initialization to obtain a better CER.

From the works discussed above, the following conclusions can be drawn:

- Transformer-based works are limited by computational resources and take significantly longer to train. Moreover, they require more annotated training data than other architectures;

- Transformer architecture has the potential to provide robust results. Nevertheless, the beam search introduces latency at inference;

- Transformer-based works have a higher number of parameters than other architectures;

- Transformer-based approaches would become preferable if more pre-trained models with compatible weights were developed, avoiding the random initialization of weights that is suggested to be responsible for lowering the recognition rate.

7.1.3. GANs-Based Works [57,58,59,60]

The work of Y. Alwaqfi et al. [57], despite its excellent idea, leaves many aspects unclear. For example, it is not clear whether the reported results are based on character recognition or word recognition; it would be better to report CER or WER, which represent an OCR model's accuracy better than accuracy alone. If the results are character-based, other metrics, such as recall, precision, and F-1 score, would give a better understanding of them. It is also not clear whether the classes were balanced after being augmented with the generated images, and high accuracy alone is not sufficient to determine this. In addition, neither the number of images after GAN augmentation nor the number of parameters was reported. Since the part used for testing was only a subset of that used for training, high accuracy was expected; nevertheless, the training–testing split was not reported. Overall, further investigation is required to determine whether these points are signs of overfitting or of a model that genuinely generalizes well.

Eltay et al. [58] omitted details of the adaptive augmentation technique, such as the algorithm itself and the basis or formula that determines how many characters are cut off and scaled within a certain range; after augmentation, most characters reach 100K occurrences in the IFN/ENIT dataset but only approximately 43K in the AHDB dataset. Moreover, it is not clear whether the test splits (d, e, and f) were made purely at random or according to shared features, and further clarification is needed as to why set d performed better than set e, and set e better than set f. Some sets may contain noticeably more characters than others, which would explain the differences in accuracy. In addition, when sets d and e were combined for training, the test on set f showed the lowest performance, which may indicate that set f was the most challenging. However, the authors neither discussed this point nor trained the model on set f and tested it on combinations of sets d and e.

The approach of Mustapha et al. [59] has some limitations. First, Arabic letters are in most cases connected rather than isolated, and these connected cases were excluded. Second, ensuring the quality of the generated samples of letters containing dots remains a challenge that has not yet been met. Third, the dataset used for generating the samples was not balanced, nor was the split between training and testing that led to the high accuracy reasonable. Fourth, since a classification model is being assessed, accuracy alone may misrepresent the model's performance; therefore, other metrics, such as recall, precision, and F1 score, must be used.
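Why accuracy can misrepresent a classifier on an unbalanced dataset is easy to show with macro-averaged metrics, which weight every class equally. The sketch below uses a hypothetical two-class letter set (`alef`, `beh`) and an illustrative helper `macro_metrics`; scikit-learn's `precision_recall_fscore_support(..., average="macro")` computes the same macro averages.

```python
from collections import Counter
from typing import Dict, Sequence


def macro_metrics(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1 (0 when undefined)."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class p was wrong
            fn[t] += 1  # true class t was missed
    precisions, recalls, f1s = [], [], []
    for c in labels:
        prec = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        rec = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(2 * prec * rec / (prec + rec) if prec + rec else 0.0)
    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "macro_precision": sum(precisions) / n,
        "macro_recall": sum(recalls) / n,
        "macro_f1": sum(f1s) / n,
    }


# Nine "alef" samples and one "beh"; the model always predicts "alef".
y_true = ["alef"] * 9 + ["beh"]
y_pred = ["alef"] * 10
print(macro_metrics(y_true, y_pred))  # accuracy 0.9, but macro recall only 0.5
```

The model never recognizes the minority letter, yet accuracy stays at 90%; macro recall and F1 expose the failure.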

Jemni et al. [60] applied remarkable effort and empirical analysis to a challenging task. Nevertheless, their approach has some limitations. The first is the complexity of using several combinations of deep-learning models at each step. The second is that the accuracy of the Arabic recognizer, despite all these complex models, is still very close to that of the nearest baseline model, which suggests that more attention should be paid to the OCR and post-processing steps rather than focusing solely on binarization as a pre-processing step. The third is that the quality of images generated by generative adversarial networks is better assessed using the Fréchet inception distance (FID), which was not used. Moreover, the training and testing splits were ambiguous; therefore, even the accuracy, which is currently not particularly high, could reflect overfitting rather than generalization. Lastly, the amount of data used for fine-tuning on the mixture of sets is not reported. Nevertheless, Jemni et al.'s work is still notable, as it showed generally good performance on both Arabic and English degraded sets.
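For reference, FID fits a Gaussian to Inception-v3 feature vectors of the real and the generated images and measures the distance between the two Gaussians; lower values indicate generated images whose feature statistics are closer to the real ones. With feature means $\mu_r, \mu_g$ and covariance matrices $\Sigma_r, \Sigma_g$ for the real and generated sets, it is defined as:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```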

From the works analyzed above, the following conclusions can be drawn:

- GAN-based works are sensitive to whether datasets are balanced, because GANs are usually used to augment data that are then classified by a separate classifier. GANs are a way to work around the limitations of Arabic datasets; however, the overall performance then depends on the second, classification model. In essence, this adds layers of complexity to the model and does not guarantee high accuracy on challenging sets;

- GANs struggle to generate letters with dots, as the dots can be treated as noise;

- The accuracy of GAN-based works is mostly reported inappropriately.

Overall, the analysis, performance, and drawbacks of deep-learning OCR works are summarized in Table 2. We can conclude from the table and the discussion above that:

Table 2.

A holistic summary of all the analyzed deep-learning OCR works.

- CNN-LSTM-CTC is the currently preferred architecture for Arabic OCR, because this combination handles spatial relations, long text dependencies, and their alignments, and it does not require as much data as Transformer and GANs;

- Transformer is the most promising architecture for future works when more annotated and domain-specific data are made available;

- GANs are usually used to augment datasets, but they are sensitive to the dataset's class balance. In addition, GANs must be combined with a classifier, which can improve accuracy on the one hand but increases the model's complexity on the other;

- There is a crucial need for more public handwritten Arabic OCR datasets in terms of quantity, quality, scope, and font diversity in order for OCR research to progress.
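The alignment role of CTC in the CNN-LSTM-CTC combination can be seen in its simplest decoding rule, greedy (best-path) decoding: take the most probable symbol for each frame, collapse consecutive repeats, and remove the blank symbol. A blank between two identical labels preserves genuinely doubled characters. This is a minimal sketch with a hypothetical three-symbol alphabet and hand-written frame probabilities; real systems often use beam-search decoding instead.

```python
from typing import Sequence


def ctc_greedy_decode(frame_probs: Sequence[Sequence[float]],
                      alphabet: Sequence[str], blank: int = 0) -> str:
    """Best-path CTC decoding: argmax per frame, collapse repeats, drop blanks."""
    best_path = [max(range(len(p)), key=lambda i: p[i]) for p in frame_probs]
    out = []
    prev = None
    for idx in best_path:
        # Emit a symbol only when it differs from the previous frame and is not blank.
        if idx != prev and idx != blank:
            out.append(alphabet[idx])
        prev = idx
    return "".join(out)


# Hypothetical per-frame probabilities over ["-" (blank), "a", "b"].
frames = [
    [0.1, 0.8, 0.1],   # a
    [0.2, 0.7, 0.1],   # a (repeat, collapsed)
    [0.9, 0.05, 0.05], # blank separates the two a's
    [0.1, 0.8, 0.1],   # a
    [0.1, 0.1, 0.8],   # b
    [0.2, 0.1, 0.7],   # b (repeat, collapsed)
    [0.6, 0.2, 0.2],   # blank
]
print(ctc_greedy_decode(frames, ["-", "a", "b"]))  # → aab
```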

7.2. Post-OCR-Correction Works [61,62,63,64,65,66,67,68,69,70,71]

One significant limitation of the work of Aichaoui et al. [61] is that the corpus has a clearly uneven distribution across error types and categories; consequently, results are poorer for low-frequency errors. For example, deletion and keyboard errors, which obtained the worst recall (0.337 and 0.644, respectively), were among the rarest in the dataset, comprising only 0.67% and 6.26% of it, respectively. A remedy is to add more data to the scarcest categories and remove data from the dominant ones until they are balanced; this would reduce the imbalance and could therefore improve the overall performance. Another limitation is that the portion of the dataset used for testing is not specified; if the test set has the same proportions as the training set, the dominant error categories can inflate the test results. Therefore, the test set should contain a uniform number of examples per error category.
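The suggested remedy can be sketched as per-category resampling to a common target size: dominant categories are randomly undersampled, and scarce ones are oversampled. This is an illustrative sketch, not Aichaoui et al.'s pipeline; `rebalance` is a hypothetical helper, and random duplication stands in for genuine augmentation (generating new erroneous sentences), which would usually be preferable. The same grouping can be used to draw a test set with a uniform number of examples per error category.

```python
import random
from collections import Counter, defaultdict
from typing import List, Tuple

Sample = Tuple[str, str]  # (text, error category)


def rebalance(samples: List[Sample], target: int, seed: int = 0) -> List[Sample]:
    """Resample every error category to exactly `target` examples."""
    rng = random.Random(seed)
    by_cat = defaultdict(list)
    for sample in samples:
        by_cat[sample[1]].append(sample)
    balanced: List[Sample] = []
    for items in by_cat.values():
        if len(items) >= target:
            balanced.extend(rng.sample(items, target))  # undersample without replacement
        else:
            balanced.extend(items)  # keep every scarce example...
            balanced.extend(rng.choices(items, k=target - len(items)))  # ...then duplicate at random
    rng.shuffle(balanced)
    return balanced


# Hypothetical corpus: 50 space errors, only 3 deletion errors.
corpus = [(f"s{i}", "space") for i in range(50)] + [(f"d{i}", "deletion") for i in range(3)]
print(Counter(cat for _, cat in rebalance(corpus, target=10)))  # 10 of each category
```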

Alkhatib et al. [62] presented work that was limited to experiments with word-form, verb-tense, and noun-number errors. Many error types were neglected, such as semantic errors, style problems, sentence-level problems, and incorrect word ordering. The model achieved an F-measure of 93.89% in comparison with other systems, and the intra-attention mechanism improved recall by 4.28, although it failed in some cases; future work could therefore address other error types and improve the intra-sentence attention.

Solyman et al. [63] proposed a model that still struggles with challenging examples; for instance, it can fail to restore missing words. Furthermore, it is still weak at correcting dialect words and makes errors when correcting punctuation. Further efforts involving different architectures and a greater focus on dialect-word mistakes would be beneficial. Moreover, the computation and training times are still noticeably high.

Abandah et al. [64] used a test set that was excessively small compared with the training sets, as it consisted of 2443 words, which is 0.10% of the Tashkeela set’s word count.

Solyman et al. [65] tested their model on only a very small portion (0.55%) of the total data. Furthermore, the model could not correct dialectal and punctuation errors.

Zribi [66] could not make a fair comparison with other models, as the test set was different, albeit derived from the same dataset.

Almajdoubah [67] did not report F1 or recall. In addition, the model takes an excessive amount of time to train (11 h), while Transformer achieves comparable results in less time (3 h).

Irani et al. [68] presented a model whose performance dropped drastically, to 60.26%, when tested on a complex set in which four errors were injected per sentence.
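Test sets of this kind, and the generated-error training data used in many of the reviewed works, are typically built by injecting synthetic errors into clean sentences. The sketch below shows one simple way to do this with random character-level insertions, deletions, substitutions, and adjacent swaps. It is an illustrative assumption, not Irani et al.'s procedure; `inject_errors` and the letter set `ARABIC_LETTERS` are hypothetical. Because edits can overlap or cancel, the output may contain fewer visible errors than requested.

```python
import random
from typing import Optional

# Hypothetical alphabet for inserted and substituted characters (28 basic letters).
ARABIC_LETTERS = "ابتثجحخدذرزسشصضطظعغفقكلمنهوي"


def inject_errors(sentence: str, n_errors: int, seed: Optional[int] = None,
                  alphabet: str = ARABIC_LETTERS) -> str:
    """Apply n_errors random character-level edits to a clean sentence."""
    rng = random.Random(seed)
    chars = list(sentence)
    for _ in range(n_errors):
        ops = ["insert"]
        if chars:
            ops += ["delete", "substitute"]
        if len(chars) >= 2:
            ops.append("swap")
        op = rng.choice(ops)
        if op == "insert":
            chars.insert(rng.randrange(len(chars) + 1), rng.choice(alphabet))
        elif op == "delete":
            del chars[rng.randrange(len(chars))]
        elif op == "substitute":
            i = rng.randrange(len(chars))
            # Pick a replacement that differs from the current character.
            chars[i] = rng.choice([c for c in alphabet if c != chars[i]])
        else:  # swap two adjacent characters
            i = rng.randrange(len(chars) - 1)
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


clean = "ذهب الولد إلى المدرسة"
print(inject_errors(clean, n_errors=4, seed=7))
```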

Abbad et al. [69] proposed an approach that struggles to correct the Shadda diacritic, which should be accompanied by another diacritic; the model sometimes predicts it alone because the training set contains the same problem.
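Since the problem originates in the training data, one way to audit a diacritized corpus is to flag letters that carry a Shadda (U+0651) but no vowel or tanween mark (U+064B to U+0650). This is a minimal sketch, not Abbad et al.'s method; `find_bare_shadda` is a hypothetical helper, and treating only these six marks as valid companions of Shadda is an assumption.

```python
from typing import List

SHADDA = "\u0651"
# Tanween (U+064B to U+064D) and short vowels (U+064E to U+0650), assumed to be valid companions.
VOWEL_MARKS = {"\u064B", "\u064C", "\u064D", "\u064E", "\u064F", "\u0650"}
DIACRITICS = VOWEL_MARKS | {SHADDA, "\u0652"}  # plus Sukun


def find_bare_shadda(text: str) -> List[int]:
    """Return indices of base letters that carry Shadda without a vowel or tanween mark."""
    flagged = []
    i = 0
    while i < len(text):
        base = i
        j = i + 1
        marks = set()
        # Gather the combining diacritics that follow this base letter.
        while j < len(text) and text[j] in DIACRITICS:
            marks.add(text[j])
            j += 1
        if SHADDA in marks and not (marks & VOWEL_MARKS):
            flagged.append(base)
        i = j
    return flagged


good = "\u0645\u064F\u062F\u064E\u0631\u0651\u0650\u0633"  # raa with Shadda + Kasra
bad = "\u0645\u064F\u062F\u064E\u0631\u0651\u0633"          # raa with Shadda alone
print(find_bare_shadda(good), find_bare_shadda(bad))  # → [] [4]
```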

Abandah et al. [70] ignored the letters with diacritics of a ratio less than half; therefore, it is not guaranteed that their model can perform well when tested in such scenarios.

Almanaseer et al. [71] used a DBN that yields poor results when the batch size is small, and training with such batches takes longer.

The analysis, performance, and drawbacks of deep-learning text-correction works are summarized in Table 3. The conclusions drawn from the works on text correction discussed and analyzed above are as follows:

Table 3.

A holistic summary of all analyzed text-correction works.

- Uneven distributions across error types and limited coverage of error types are common issues in text-correction datasets;

- Text-correction models suffer when faced with challenging examples, such as the presence of dialect words and the correction of punctuation errors;

- The problem of ineffective model testing found in OCR works also extends to text-correction works;

- More complex models, such as DBNs, take longer to train;

- There is a need for more text-correction datasets that are context-aware;

- Embedding techniques such as FastText and BERT have proven effective for text correction; however, this approach is still in its infancy in Arabic and has the potential to be explored further.

7.3. Robust OCR and Text-Correction Techniques

Some OCR works are robust against difficult but limited conditions, such as degraded paper; however, no work is robust against all conditions, including a wide range of text types and font variations. Moreover, even for difficult input conditions, there is still room and a need for development. The most robust OCR works identified in the analysis are described below.

Mustafa et al. [55] presented a CNN-Transformer trained on a custom dataset and KHATT, achieving 7.27 CER and 8.29 WER. The architecture consists of a 101-layer ResNet101 and four Transformer encoders and decoders, trained on word sequences of multiple lengths. The main drawback is that this complex model requires substantial computational resources and millions of annotated training examples, which are scarce in Arabic.

Noubigh [47] deployed a CNN-BLSTM-CTC architecture. The model was trained on 25,888 images of four printed fonts from P-KHATT and then re-trained on KHATT and AHTID. The authors reported 2.03 CER and 15.85 WER. However, since the test was conducted on only 901 samples from the AHTID dataset, on which the model was trained, it is uncertain how well the results generalize. Nevertheless, the reported accuracy suggests that the model may be more robust than others when tested on other data.

Boualam et al. [48] utilized a CNN-RNN-CTC model trained on the IFN/ENIT dataset and reported 2.10 CER and 8.21 WER. Five CNN layers and two RNN layers were trained on 487,350 samples after augmentation. However, generalization to other data was not tested in this work. Nevertheless, the reported accuracy might suggest better results than other models when tested on new data.

Khaled [54] used a CNN-RNN-CTC model trained on several datasets: Alexu, SRU-PHN, AHDB, ADAB, and IFN/ENIT. A beam-search decoder combined with data augmentation improved the results, using 63,403 words. The authors reported 0.94 CER and 2.62 WER, the best results of all the works analyzed from a purely numerical perspective. However, only 5% of the training dataset was used to validate the model. Given the amount of training data and the high results, this model may be the most robust under real-world testing, provided the input conditions are similar.

Similarly, no universal model can handle all conditions in the text-correction step. Nevertheless, many models are being developed to deal with difficult and complex texts, including the challenges of dialects and diacritization. The analysis revealed that much development is still needed in this step. The current robust works on text correction are described below.

Aichaoui et al. [61] combined AraBART with the SPIRAL dataset, which contains eight kinds of error, for training and testing. The best reported recall, 0.863, was for space errors. However, the distribution across error categories was noticeably uneven, which may explain why the results were not better. Nevertheless, this high result suggests that robust results are possible if the model is trained on more data, and it shows the potential of applying more text embeddings.

Alkhatib et al. [62] designed a model using AraVec, Bi-LSTM, an attention mechanism, and a polynomial classifier for 150 error types. The datasets used were Arabic word lists for spell checking, Arabic Corpora, KALIMAT, and EASC. The F-measure was high, at 93.89%. However, semantic errors, style problems, and sentence-level problems were neglected. In light of the amount of data and error types, this work's high results suggest that more robust results can be achieved by including more training data and error types.