Anomaly Detection through Grouping of SMD Machine Sounds Using Hierarchical Clustering

Abstract

1. Introduction

- Continuity: The SMD assembly machine assembles products continuously along the manufacturing line, so a breakdown of the line causes substantial losses.

- Product variety: The product type varies depending on which sensor is assembled, and the number of product types increases linearly over time.

2. Related Work

2.1. Autoencoder-Based Anomaly Detection

2.2. Clustering-Based Anomaly Detection

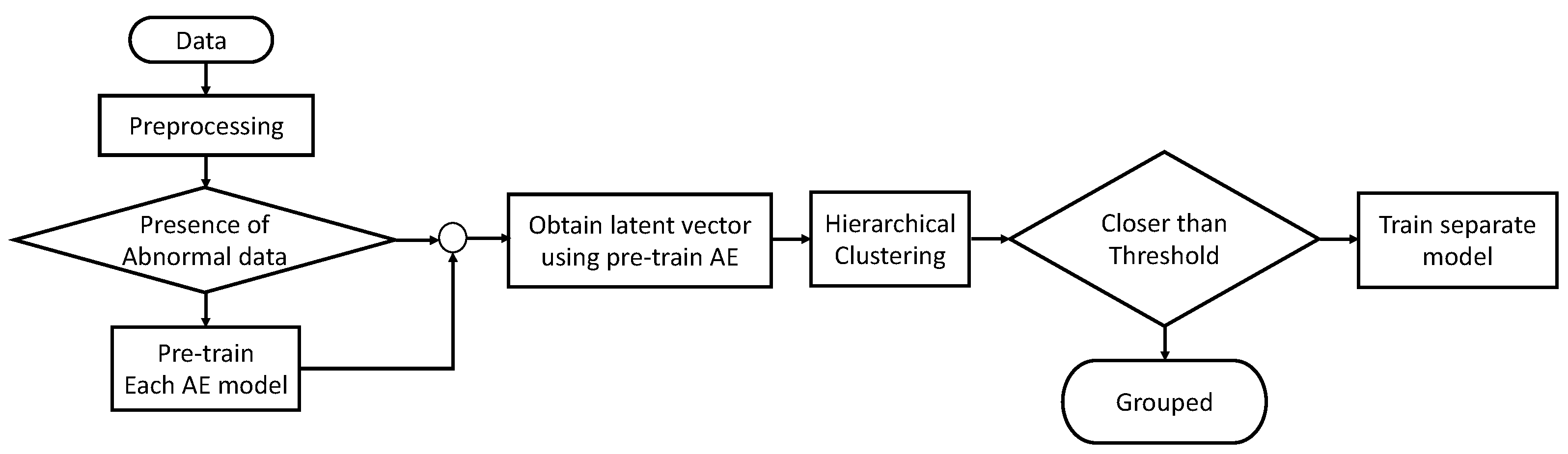

3. Proposed Method

3.1. Pre-Training the Autoencoder Model

| Algorithm 1: Pre-training the autoencoder model with normal data |
|---|
| Input: Normal dataset of the product, consisting of the product data samples |
| Output: Encoder of the autoencoder |
| repeat |
| calculate the loss by Equations (9), (10), and (17) from the set of input data samples and the output sequence data |
| update the model parameters using the gradients of the loss |
| until the number of epochs given in the experiment is reached |
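The loop in Algorithm 1 can be illustrated with a deliberately small sketch. Everything below is an assumption made for illustration: a toy linear autoencoder with a one-dimensional latent code stands in for the paper's model, plain mean squared reconstruction error stands in for the loss of Equations (9), (10), and (17), and the names `reconstruct`, `mse_loss`, `pretrain`, and `normal_data` are hypothetical.

```python
def reconstruct(x, w_enc, w_dec):
    """Encode x to a scalar latent code z, then decode it back."""
    z = sum(w * xi for w, xi in zip(w_enc, x))   # encoder (assumed linear)
    return z, [w * z for w in w_dec]             # decoder (assumed linear)


def mse_loss(data, w_enc, w_dec):
    """Mean squared reconstruction error (a stand-in for the paper's loss)."""
    total = 0.0
    for x in data:
        _, x_hat = reconstruct(x, w_enc, w_dec)
        total += sum((h - xi) ** 2 for h, xi in zip(x_hat, x))
    return total / len(data)


def pretrain(data, epochs=200, lr=0.05):
    """Repeat: compute the loss gradients, update the parameters, until `epochs`."""
    dim = len(data[0])
    w_enc = [0.1] * dim
    w_dec = [0.1] * dim
    for _ in range(epochs):
        g_enc = [0.0] * dim
        g_dec = [0.0] * dim
        for x in data:
            z, x_hat = reconstruct(x, w_enc, w_dec)
            err = [h - xi for h, xi in zip(x_hat, x)]
            # Gradient of the squared error with respect to the latent code z.
            dz = 2.0 * sum(e * w for e, w in zip(err, w_dec))
            for k in range(dim):
                g_dec[k] += 2.0 * err[k] * z / len(data)
                g_enc[k] += dz * x[k] / len(data)
        # Plain gradient descent step on both parameter sets.
        w_enc = [w - lr * g for w, g in zip(w_enc, g_enc)]
        w_dec = [w - lr * g for w, g in zip(w_dec, g_dec)]
    return w_enc, w_dec


# Toy "normal" data lying on a line, so a 1-D latent code can represent it.
normal_data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
initial_loss = mse_loss(normal_data, [0.1, 0.1], [0.1, 0.1])
encoder, decoder = pretrain(normal_data)
final_loss = mse_loss(normal_data, encoder, decoder)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.6f}")
```

Only the encoder weights would be kept after pre-training, matching the output stated in Algorithm 1; the decoder exists only to drive the reconstruction loss.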

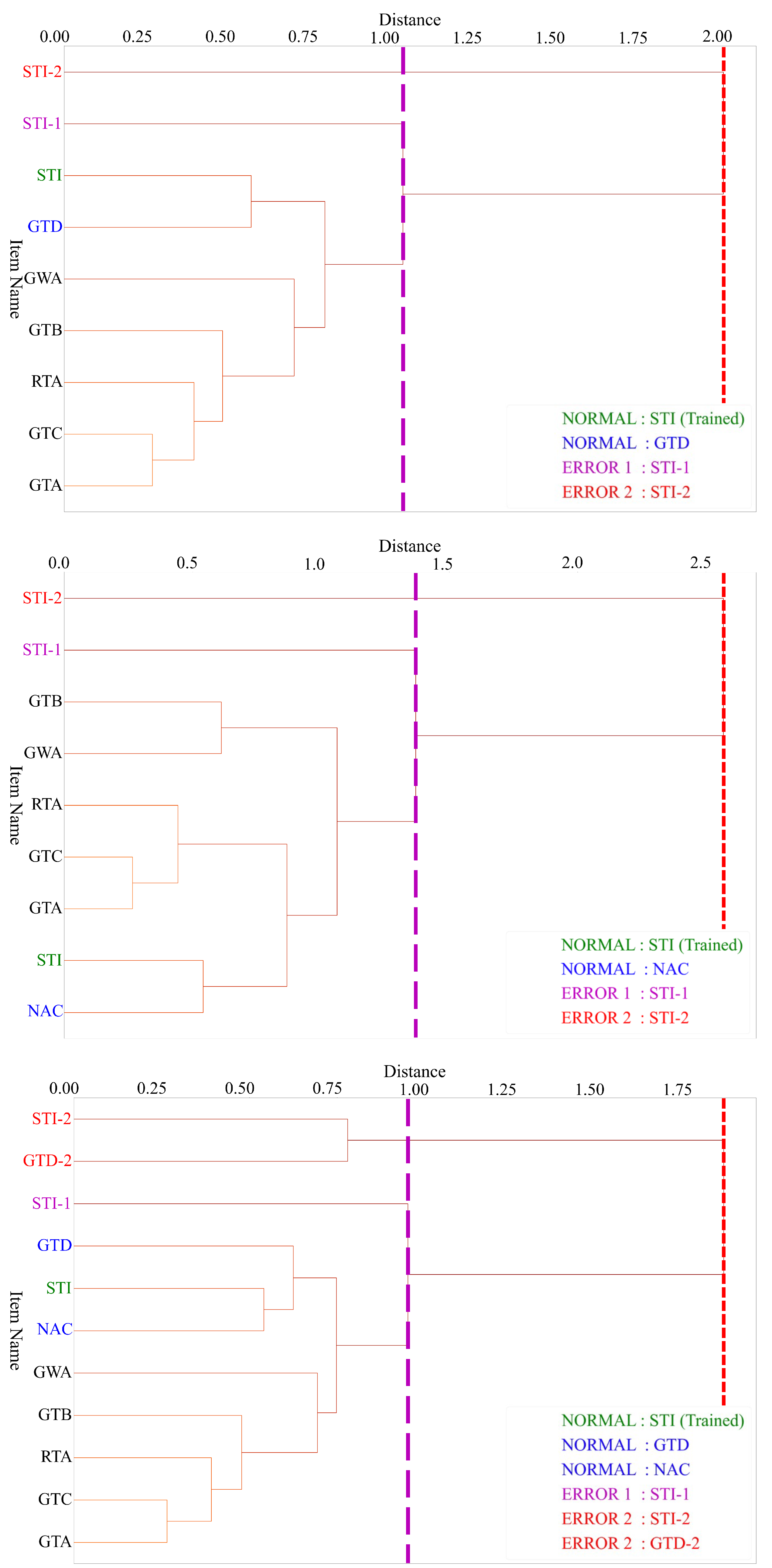

3.2. Hierarchical Clustering

- Centroid: The distance between clusters $C_i$ and $C_j$ is the distance between their center points: $D(C_i, C_j) = d(\mu_i, \mu_j)$, where $\mu_i$ and $\mu_j$ are the center points of the two clusters $C_i$ and $C_j$, respectively, and $d(\cdot, \cdot)$ is the distance between two points. The center point of a cluster is the average of all data contained in the cluster: $\mu_i = \frac{1}{|C_i|} \sum_{x \in C_i} x$, where the symbol $|\cdot|$ refers to the number of elements in a cluster.

- Single: For all combinations of data $x \in C_i$ and data $y \in C_j$, we measure the distance and take the smallest value: $D(C_i, C_j) = \min_{x \in C_i,\, y \in C_j} d(x, y)$.

- Complete: For all combinations of data $x \in C_i$ and $y \in C_j$, we measure the distance and take the largest value: $D(C_i, C_j) = \max_{x \in C_i,\, y \in C_j} d(x, y)$.

- Average: For all combinations of data $x \in C_i$ and $y \in C_j$, we measure the distance and take the average: $D(C_i, C_j) = \frac{1}{|C_i|\,|C_j|} \sum_{x \in C_i} \sum_{y \in C_j} d(x, y)$.

- Median: This method is a variation of the centroid linkage method. As in the centroid method, the distance between clusters is the distance between their center points. If clusters $C_i$ and $C_j$ combine to form cluster $C_k = C_i \cup C_j$, the center point of $C_k$ is not newly calculated from its data; instead, the average of the center points of the two original clusters is used: $\mu_k = \frac{1}{2}(\mu_i + \mu_j)$. Therefore, the calculation is faster than obtaining the center point by averaging all the data in the cluster.
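The five linkage criteria above can be compared on a small example. The sketch below assumes Euclidean distance between points (the text does not fix the metric), and the function names and the two toy clusters `a` and `b` are illustrative only.

```python
import math
from itertools import product


def center(cluster):
    """Center point: the average of all data in the cluster."""
    n = len(cluster)
    return tuple(sum(coords) / n for coords in zip(*cluster))


def single_linkage(a, b):
    """Smallest pairwise distance between the two clusters."""
    return min(math.dist(x, y) for x, y in product(a, b))


def complete_linkage(a, b):
    """Largest pairwise distance between the two clusters."""
    return max(math.dist(x, y) for x, y in product(a, b))


def average_linkage(a, b):
    """Average over all pairwise distances between the two clusters."""
    return sum(math.dist(x, y) for x, y in product(a, b)) / (len(a) * len(b))


def centroid_linkage(a, b):
    """Distance between the two center points."""
    return math.dist(center(a), center(b))


def median_center(center_a, center_b):
    """Median linkage: the merged center is the midpoint of the old centers."""
    return tuple((p + q) / 2 for p, q in zip(center_a, center_b))


# Two toy clusters of different sizes on the x-axis.
a = [(0.0, 0.0), (2.0, 0.0)]
b = [(5.0, 0.0), (9.0, 0.0), (10.0, 0.0)]

print(single_linkage(a, b))    # 3.0  (from (2, 0) to (5, 0))
print(complete_linkage(a, b))  # 10.0 (from (0, 0) to (10, 0))
print(average_linkage(a, b))   # 7.0
print(centroid_linkage(a, b))  # 7.0  (centers (1, 0) and (8, 0))
print(median_center(center(a), center(b)))  # (4.5, 0.0)
print(center(a + b))           # (5.2, 0.0)
```

Because the clusters have different sizes, the median center (4.5, 0.0) differs from the true center of the merged cluster (5.2, 0.0); this is the accuracy that median linkage trades for not re-averaging all data after each merge.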

4. Experiment

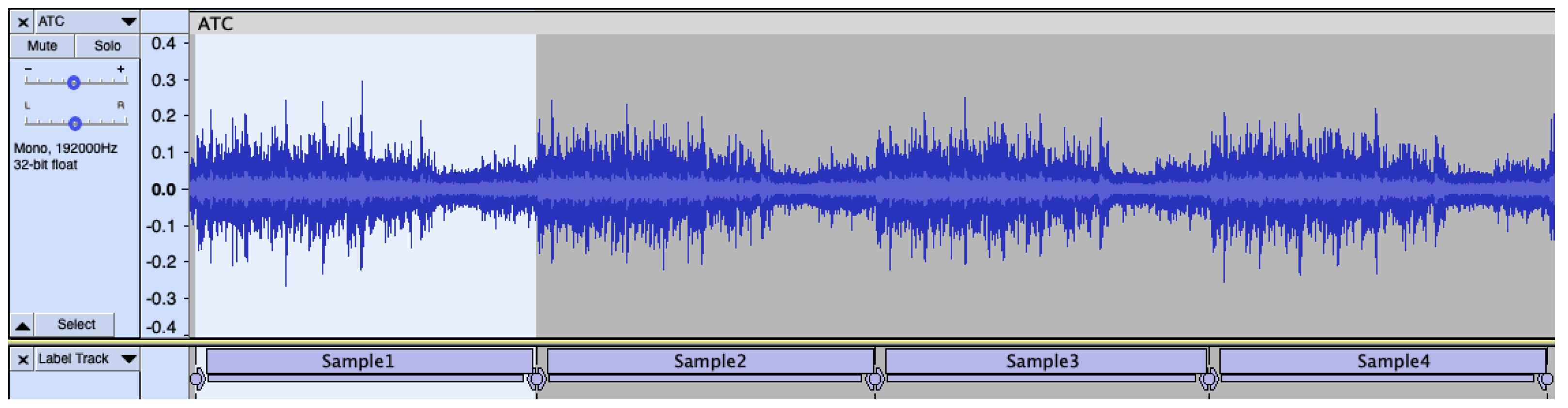

4.1. Dataset

4.1.1. Data Preprocessing

4.2. Experimental Process

- Step 1: Data preprocessing.

- Step 2: Train the autoencoder model.

- Step 3: Hierarchical clustering.

- Step 4: Verification of newly collected data.
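Step 3 can be sketched as a naive agglomerative loop that repeatedly merges the two closest clusters. This is an illustration under stated assumptions, not the experiment's implementation: the points stand in for latent vectors produced by the encoder, average linkage with Euclidean distance is chosen arbitrarily, and stopping at a fixed `num_groups` is a hypothetical stopping rule.

```python
import math


def average_linkage(a, b):
    """Average pairwise Euclidean distance between two clusters."""
    return sum(math.dist(x, y) for x in a for y in b) / (len(a) * len(b))


def agglomerate(points, num_groups, linkage=average_linkage):
    """Merge the closest pair of clusters until num_groups clusters remain."""
    clusters = [[p] for p in points]  # start with one cluster per point
    while len(clusters) > num_groups:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                dist = linkage(clusters[i], clusters[j])
                if best is None or dist < best[0]:
                    best = (dist, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]  # merge cluster j into cluster i
        del clusters[j]
    return clusters


# Toy 2-D "latent vectors" forming two well-separated groups.
latent = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (5.0, 5.0), (5.2, 4.9)]
groups = agglomerate(latent, num_groups=2)
for g in groups:
    print(sorted(g))
```

Swapping the `linkage` argument for another criterion changes which clusters are merged first without touching the loop itself.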

4.3. Experiment Result

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Appendix A


| Name | Abbr. | Time (s) | Amt. | Name | Abbr. | Time (s) | Amt. |
|---|---|---|---|---|---|---|---|
| AT2−AB | ATA | 40.3 ± 2.1 | 100 | NA−9473 | NAB | 13.1 ± 0 | 53 |
| AT2−IN88 | ATB | 8.3 ± 0 | 63 | RT−12DF | RTA | 36.6 ± 0.9 | 29 |
| C−SERIES | CSA | 12. ± 0.8 | 41 | ST−2524 | STA | 20.3 ± 0.7 | 46 |
| CLR−085 | CLA | 34 ± 3.5 | 51 | ST−2624 | STB | 19.5 ± 0.8 | 43 |
| CT−C112B | CTA | 45.4 ± 3.6 | 22 | ST−2744 | STC | 17.4 ± 0.6 | 76 |
| CT−C112T | CTB | 40.1 ± 0.9 | 24 | ST−3424 | STD | 25.9 ± 3.5 | 81 |
| CT−C121B | CTC | 33.9 ± 7.7 | 18 | ST−3214 | STE | 26.8 ± 1.5 | 108 |
| CT−C134B | CTD | 44.4 ± 14.4 | 33 | ST−3214−1 | STE−1 | 27 ± 1.2 | 100 |
| CT−C134T | CTE | 34.5 ± 2.9 | 44 | ST−3214−2 | STE−2 | 27.5 ± 0.9 | 100 |
| GT−3214 | GTA | 28.3 ± 2.6 | 33 | ST−3428 | STF | 30.3 ± 2.7 | 89 |
| GT−5112 | GTB | 25.6 ± 1.7 | 51 | ST−3624 | STG | 28.5 ± 3.6 | 77 |
| GW−9XXX | GWA | 20.8 ± 2.1 | 93 | ST−3704 | STH | 27.9 ± 1.5 | 64 |
| GT−4118 | GTC | 35.9 ± 4.1 | 43 | ST−3708 | STI | 40 ± 2.9 | 64 |
| GT−4118−1 | GTC−1 | 33.9 ± 3.3 | 14 | ST−3708−1 | STI−1 | 31 ± 2.1 | 35 |
| GT−4118−2 | GTC−2 | 28.4 ± 2.7 | 100 | ST−3708−2 | STI−2 | 36 ± 7.4 | 82 |
| HV−M1230C | HVA | 49.8 ± 2.6 | 39 | ST−3804 | STJ | 25.2 ± 1.3 | 83 |
| MG−A121H | MGA | 30.1 ± 2 | 68 | ST−7111 | STK | 8.7 ± 0.9 | 30 |
| NA−9289 | NAA | 10.5 ± 0.1 | 55 | TSIO−2002 | TSA | 19.8 ± 0.7 | 65 |

| Name | Abbr. | Time (s) | Amt. | Name | Abbr. | Time (s) | Amt. |
|---|---|---|---|---|---|---|---|
| AT2−IO | ATC | 29.6 ± 1.0 | 10 | GT−2328−1 | GTG−1 | 21.5 ± 1.5 | 54 |
| AT2−IO−1 | ATC−1 | 34.3 ± 0.8 | 26 | GT−2744 | GTH | 17.8 ± 1.7 | 12 |
| AT2−IO−2 | ATC−2 | 30.9 ± 1.6 | 35 | GT−5102 | GTI | 24.8 ± 0.7 | 16 |
| AT2−89 | ATD | 26.7 ± 1.4 | 55 | NA−9111 | NAC | 29.8 ± 0.5 | 20 |
| AT2−89−1 | ATD−1 | 26.7 ± 0.2 | 38 | ST−2748 | STL | 19.3 ± 0.7 | 63 |
| GT−1238 | GTD | 23.5 ± 0.9 | 61 | ST−3114 | STM | 24.1 ± 2.8 | 15 |
| GT−1238−2 | GTD−2 | 28.1 ± 2.1 | 6 | ST−3118 | STN | 31.7 ± 0.9 | 67 |
| GT−225F | GTE | 31.2 ± 0.6 | 72 | ST−3234 | STO | 27.3 ± 1.8 | 32 |
| GT−226F | GTF | 30.8 ± 0.7 | 23 | ST−3544 | STP | 28.7 ± 0.9 | 19 |
| GT−2328 | GTG | 21.7 ± 0.4 | 64 | ST−4212 | STQ | 17.7 ± 0.9 | 35 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Song, Y.J.; Nam, K.H.; Yun, I.D. Anomaly Detection through Grouping of SMD Machine Sounds Using Hierarchical Clustering. Appl. Sci. 2023, 13, 7569. https://doi.org/10.3390/app13137569
