Abstract

Singular value decomposition (SVD) is a fundamental technique widely used in various applications, such as recommendation systems and principal component analysis. In recent years, the need for privacy-preserving computations has been increasing constantly, which concerns SVD as well. Federated SVD has emerged as a promising approach that enables collaborative SVD computation without sharing raw data. However, existing federated approaches still need improvements regarding privacy guarantees and utility preservation. This paper moves a step further in these directions: we propose two enhanced federated SVD schemes focusing on utility and privacy, respectively. Using a recommendation system use-case with real-world data, we demonstrate that our schemes outperform the state-of-the-art federated SVD solution. Our utility-enhanced scheme (utilizing secure aggregation) improves the final utility and the convergence speed by more than 2.5 times compared with the existing state-of-the-art approach. In contrast, our privacy-enhancing scheme (utilizing differential privacy) provides more robust privacy protection while improving the same aspects by more than 25%.

1. Introduction

Advances in networking and hardware technology have led to the rapid proliferation of the Internet of Things (IoT) and decentralized applications. These advancements, including fog computing and edge computing technologies, enable data processing and analysis to be performed at node devices, avoiding the need for data aggregation. This naturally brings benefits such as efficiency and privacy, but on the other hand, it forces data analysis tasks to be carried out in a distributed manner. Federated learning (FL) has emerged as a promising solution in this context, allowing multiple parties to collaboratively train models without sharing raw data. Instead, only intermediate results are exchanged with an aggregator server, ensuring privacy preservation and decentralized data analysis [1].

With respect to machine learning tasks, research has shown that sensitive information can be leaked from the models [2,3,4,5]. For example, in [3], Shokri et al. demonstrated membership inference attacks against machine learning tasks. In such an attack, an attacker can determine whether a data sample has been used in the model training. This violates privacy if the data sample is sensitive. Despite its privacy-friendly design, FL suffers from similar privacy issues, as demonstrated by Nasr, Shokri and Houmansadr [5]. This makes it necessary to incorporate additional privacy protection mechanisms into FL and to make it rigorously privacy-preserving.

To mitigate information leakages, FL can be aided with other privacy-enhancing technologies, such as secure aggregation (SA) [6] and differential privacy (DP) [7]. SA hides the individual contributions from the aggregator server in each intermediate step in a way that does not affect the trained model’s utility. In other words, the standalone updates are masked such that the masks cancel out during aggregation; therefore, the aggregated results remain intact. The masks could be seen as temporary noise; hence, the privacy protection does not extend to the aggregated data. In contrast, DP adds persistent noise to the model, i.e., it provides broader privacy protection but with an inevitable utility loss (due to the permanent noise). We differentiate between two DP settings depending on where the noise is injected. In local DP (LDP), the participants add noise to their updates, while in central DP (CDP), the server applies noise to the aggregate result. A comparison of LDP, CDP and SA is summarized in Table 1. While there are many privacy protection mechanisms, incorporating them into FL is not a trivial task and remains an open challenge [1].

Table 1.

Comparing secure aggregation with local and central differential privacy.

Among many data analysis methods, this paper focuses on singular value decomposition (SVD). Plainly, SVD factorizes a matrix into three new matrices. Originating from linear algebra, SVD has several interesting properties and conveys crucial insights about the underlying matrix. Hence, SVD has essential applications in data science, such as in recommendation systems [8,9], principal component analysis [10], latent semantic analysis [11], noise filtering [12,13], dimension reduction [14], clustering [15], matrix completion [16], etc. Existing federated SVD solutions fall into two categories: SVD over horizontally and vertically partitioned datasets [17]. In real-world applications, the former is much more common [18,19]; therefore, in this paper, we choose the horizontal setting and focus on the privacy protection challenges.

1.1. Related Work

The concept of privacy-preserving federated SVD has been studied in several works, which are briefly summarized below.

In the literature, many anonymization techniques have been proposed to enable privacy protection in federated machine learning and other tasks. Ref. [20] proposed substitute vectors and a length-based frequent pattern tree (LFP-tree) to achieve data anonymization. It focuses on what data can be published and how they can be published without associating subjects or identities. With the concept of data anonymization in mind, Ref. [21] proposed a strategy that decreases the correlation between data and the identities; however, this affects the utility of the data. Ref. [22] focused on high-dimensional datasets, which are divided into different subsets; each subset is then generalized with a novel heuristic method based on local re-coding. While these works contain interesting techniques, they do not directly offer a solution for privacy-preserving federated SVD. A more detailed analysis can be found in [1].

Technically speaking, the algorithms utilized to compute SVD are mostly iterative, such as the power iteration method [23]. Recently, these algorithms were adapted to a distributed setting to solve large-scale problems [24,25]. While these works tackle important issues and advance the field, they all disregard privacy issues: we are only aware of two federated SVD solutions in the literature explicitly providing a privacy analysis [18,19]. Hartebrodt et al. [19] proposed a federated SVD algorithm with a star-like architecture for high-dimensional data such that the aggregator cannot access the complete eigenvector matrix of SVD results. Instead, each node device has access, but only to its shared part of the eigenvector matrix. In addition to the lack of a rigorous privacy analysis, its aim is different from most other federated SVD solutions where the aim is to jointly compute a global feature space. In contrast, Guo et al. [18] proposed a federated SVD algorithm based on the distributed power method, where both the server and all the participants learn the entire eigenvector matrix. Their solution incorporated additional privacy-preserving features, such as participant and aggregator server noise injection, but without a rigorous privacy analysis. We improve upon this solution by pointing out an error in its privacy analysis and by providing a tighter privacy protection with less utilized noise. Overall, the existing works do not provide a privacy-preserving federated SVD solution with a rigorous analysis in our setting.

1.2. Contribution and Organization

This work focuses on a setting similar to Guo et al. [18], i.e., when the server and all the participants are expected to learn the final eigenvector matrix. As our main contribution, we improve the FedPower algorithm [18] from two perspectives, i.e., both from the privacy and utility points of view. Our detailed contributions are summarized below.

- Firstly, we point out several inefficiencies and shortcomings of FedPower, such as the avoidable double noise injection steps and the unclear and confusing privacy guarantee.

- Secondly, we propose a utility enhanced solution, where the added noise is reduced due to the introduction of SA.

- Thirdly, we propose a privacy enhanced solution, which (in contrast to FedPower) satisfies DP.

- Finally, we empirically validate our proposed algorithms by measuring the privacy-utility trade-off using a real-world recommendation system use-case.

The rest of the paper is organized as follows. In Section 2, we list the fundamental definitions of the relevant techniques used throughout the paper. In Section 3, we recap the scheme proposed by Guo et al. [18], while in Section 4 and Section 5, we propose two improved schemes focusing on utility and privacy, respectively. In Section 6, we empirically compare the proposed schemes with the original work. Finally, in Section 7, we conclude the paper.

2. Preliminary

2.1. Singular Value Decomposition

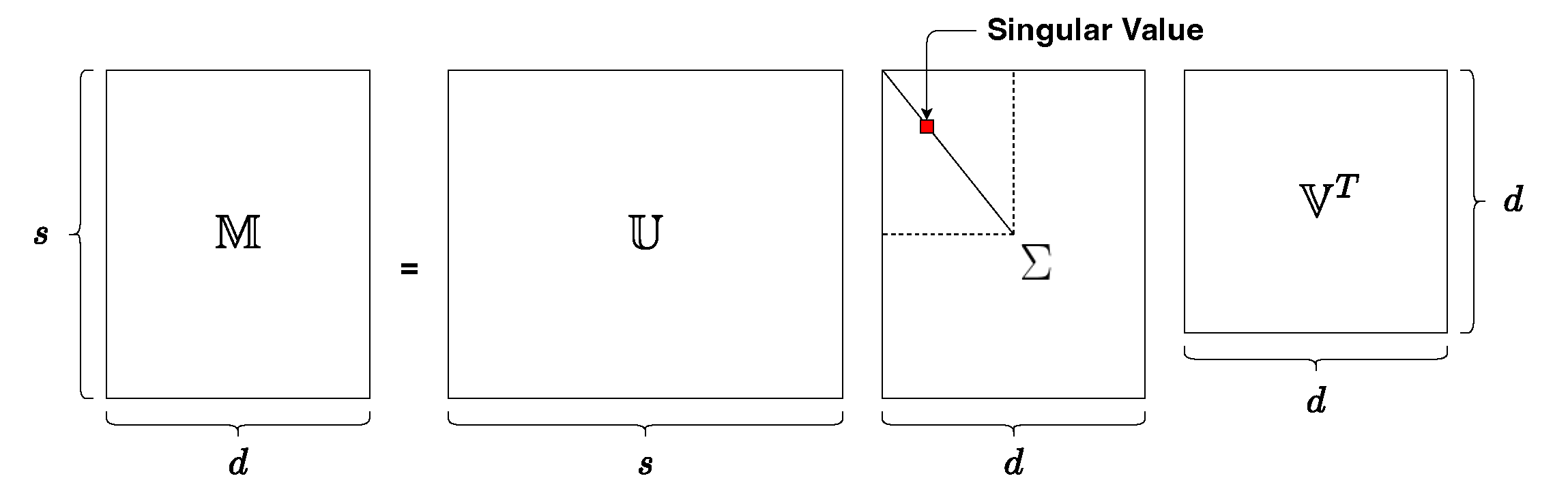

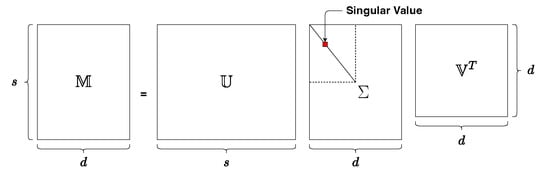

Let be a matrix with assumption of . As shown in Figure 1, the full SVD of is a factorization of the form , where T means conjugate transpose. The left-singular vectors are , the right-singular vectors are , and the diagonal matrix with the singular values in decreasing order in its diagonal is . The partial or truncated SVD [26,27] is used to find the top k singular vectors , and singular values .

Figure 1.

Singular value decomposition.
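The relation between the full and the truncated SVD can be checked numerically. The following is a minimal sketch using NumPy; the matrix `M`, its size, and the value of `k` are hypothetical choices made only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
M = rng.standard_normal((8, 5))   # hypothetical data matrix with more rows than columns
k = 2                             # number of singular triplets to keep

# Full SVD: U is 8x8, Vt is 5x5, and sigma holds the singular values
# in decreasing order.
U, sigma, Vt = np.linalg.svd(M, full_matrices=True)

# Truncated SVD: keep only the top-k singular vectors and singular values.
U_k, sigma_k, Vt_k = U[:, :k], sigma[:k], Vt[:k, :]
M_k = U_k @ np.diag(sigma_k) @ Vt_k   # rank-k approximation of M

# The Frobenius error of the approximation is determined by the
# discarded singular values.
err = np.linalg.norm(M - M_k)
```

The rank-k matrix `M_k` is the best rank-k approximation of `M` in the Frobenius norm, which is why the top singular vectors are the quantity of interest in applications such as recommendation systems.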

If , then the Power Method [23] could be used to compute the top k right singular vector of and the top k eigenvectors of . It works by iterating and , where both and are matrices and is the orthogonalization of the columns with QR factorization.

Moreover, if is the composition of n matrices, then computation of the Power Method can be distributed. So, if with , where and , then Equation (1) holds. Thereby, can be written as , which indicates that the Power Method can be processed in parallel by each data holder [18,28].
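The distributed form of the Power Method can be illustrated with a small sketch. It assumes that the data matrix is split into row blocks and that every data holder multiplies the current iterate by its own Gram matrix; the function name `power_method`, the synthetic data, and the number of iterations are illustrative assumptions rather than the implementation used in this paper.

```python
import numpy as np

def power_method(apply_gram, d, k, iters=50, seed=0):
    """Block power iteration; apply_gram(Z) must return (M^T M) @ Z."""
    rng = np.random.default_rng(seed)
    Z, _ = np.linalg.qr(rng.standard_normal((d, k)))  # random orthonormal start
    for _ in range(iters):
        Y = apply_gram(Z)         # multiply by the Gram matrix
        Z, _ = np.linalg.qr(Y)    # orthogonalize the columns with QR
    return Z

# Hypothetical data with a clear gap after the top two singular values.
rng = np.random.default_rng(1)
left, _ = np.linalg.qr(rng.standard_normal((120, 6)))
right, _ = np.linalg.qr(rng.standard_normal((6, 6)))
M = left @ np.diag([10.0, 8.0, 1.0, 0.5, 0.3, 0.1]) @ right.T
blocks = np.array_split(M, 4)     # row blocks held by four data holders

# Centralized: one party holds M. Distributed: each holder contributes
# B^T (B Z) for its own block B and the contributions are summed.
Z_central = power_method(lambda Z: M.T @ (M @ Z), d=6, k=2)
Z_distributed = power_method(lambda Z: sum(B.T @ (B @ Z) for B in blocks), d=6, k=2)
```

Both runs span the same subspace because the Gram matrix of the stacked data is the sum of the Gram matrices of the blocks, so only the per-block products need to be exchanged.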

2.2. Secure Aggregation

In simple terms, with SA, each node device locally masks its original data in a particular way before sharing them with the server, so that the masks cancel out when the masked data are aggregated on the server. Consequently, the server obtains the aggregate but does not learn the original unmasked intermediate result of any individual node device. In the FL literature, many solutions use the SA protocol of Bonawitz et al. [29]. We recap this protocol in Appendix A and use it in Section 4 to benchmark our enhanced SVD solution.

2.3. Differential Privacy

Besides SA, DP is also extensively utilized in the FL literature. DP, introduced by Dwork et al. [30], ensures that the addition, removal, or modification of a single data point does not substantially affect the outcome of the data-based analysis. One of the core strengths of DP comes from two of its properties, composition and post-processing, which we also utilize in this paper. The former ensures that the combined output of two DP mechanisms still satisfies DP but with a parameter change. The latter ensures that a transformation of the results of a DP mechanism does not affect the corresponding privacy guarantees. Typically, DP is enforced by injecting calibrated noise (e.g., Laplacian or Gaussian) into the computation.

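Definition 1

(ε-Differential Privacy). A randomized mechanism $\mathcal{A}$ with domain $\mathcal{D}$ and range $\mathcal{R}$ satisfies ε-differential privacy if for any two adjacent inputs $x, x' \in \mathcal{D}$ and for any subset of outputs $S \subseteq \mathcal{R}$ it holds that

$$\Pr[\mathcal{A}(x) \in S] \le e^{\varepsilon} \cdot \Pr[\mathcal{A}(x') \in S]. \tag{2}$$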

The variable ε is called the privacy budget, which measures the privacy loss. It captures the trade-off between privacy and utility: the lower its value, the more noise is required to satisfy Equation (2), resulting in higher utility loss. Another widely used DP notion is approximate DP, where a small additive term δ is added to the right side of Equation (2). Typically, we are interested in values of δ that are smaller than the inverse of the database size. Although DP has been adopted in many domains [7] such as recommendation systems [31], we are not aware of any work besides [18] which adopts DP for SVD computation. Thus, as we later show a flaw in that work, we are the first to provide a distributed SVD computation with DP guarantees.

3. The FedPower Algorithm

Following Guo et al. [18], we assume there are n node devices, and each device i holds an independent dataset, an -by-d matrix . Each row represents a record item, while the columns of each matrix correspond to the same feature space. Moreover, denotes the composition of matrices such that , with . The solution proposed by Guo et al. [18] is presented in Algorithm 1 with the following parameters.

- T: the number of local computations performed by each node device.

- : the rounds where the node devices and the server communicate, i.e., .

- : the privacy budget.

- : the variance of noises added by the clients and the server, respectively:

| Algorithm 1 Full participation protocol of FedPower by Guo et al. [18]. |

|

In the proposed solution, each node device holds its raw data and processes the SVD locally, while its eigenvectors are aggregated on the server by the orthogonal Procrustes transformation (OPT) mechanism. The basic idea behind OPT is to find an orthogonal transformation matrix that maps one set of vectors onto another while preserving their relative characteristics. The aggregation result is then sent back to the node devices for further iterations. More details (e.g., the computation of ) are given in [18].
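To give an intuition for the alignment step, the sketch below solves the orthogonal Procrustes problem in closed form with NumPy. It is only an illustration of the general technique: the helper `procrustes_rotation` and the toy bases are hypothetical, and the exact way FedPower computes and applies the transformation is described in [18].

```python
import numpy as np

def procrustes_rotation(A, B):
    """Orthogonal matrix D minimizing ||A @ D - B||_F, via the SVD of A^T B."""
    U, _, Vt = np.linalg.svd(A.T @ B)
    return U @ Vt

rng = np.random.default_rng(0)
reference, _ = np.linalg.qr(rng.standard_normal((6, 2)))  # hypothetical reference basis
rotation, _ = np.linalg.qr(rng.standard_normal((2, 2)))   # arbitrary 2x2 orthogonal matrix
local = reference @ rotation     # same subspace, expressed in a different basis

D = procrustes_rotation(local, reference)
aligned = local @ D              # local basis mapped back onto the reference
```

Two bases of the same subspace can differ by an arbitrary orthogonal transformation, so averaging them directly could cancel useful signal; aligning each local result to a common reference first avoids this.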

4. Enhancing the Utility of FedPower

Adversary Model. Throughout this paper, we consider a semi-honest setup, i.e., where the clients and the server are honest but curious. This means that they follow the protocol truthfully, but in the meantime, they try to learn as much as possible about the dataset of other participants. We also assume that the server and the clients cannot collude, so the server cannot control node devices.

Utility Analysis of FedPower. Unsurprisingly, adding Gaussian noise twice (i.e., the local and the central noise in Steps 6 and 8 in Algorithm 1) severely affects the accuracy of the final result. A straightforward way to increase the utility is to eliminate some of this noise. As highlighted in Table 1, the local noise protects the individual clients from the server. Moreover, it also protects the aggregate from other clients and from external attackers. On the other hand, the central noise merely covers the aggregate. Hence, if the protection level against the server is sufficient against other clients and external attackers, the central noise becomes obsolete.

Moreover, all the locally added noise accumulates during aggregation, which also negatively affects the utility of the final result. Loosely speaking, as shown in Table 1, CDP combined with SA could provide the same protection as LDP. Consequently, by utilizing cryptographic techniques with a single local noise, we can hide the individual updates and protect the aggregate as well.

Utility Enhanced FedPower. We improve on FedPower [18] from two aspects: (1) we apply an SA protocol to hide the individual intermediate results of the node devices from the server, and (2) we use a secure multi-party computation (SMPC) protocol to enforce the CDP in an oblivious manner to the server. In SMPC, multiple parties can jointly compute a function over their private inputs without revealing those inputs to each other or to the server. More details of this topic can be found in the book [32]. We supplement the assumptions and the setup of Guo et al. [18] with a homomorphic encryption key pair generated by the server. The server holds the private key and shares the public key with all node devices. The remaining part of our solution is shown in Algorithm 2. To ease understanding, the pseudo code is simplified. The actual implementation is more optimized, e.g., the encrypted results are aggregated before decryption in Step 11, and in Step 7, the ciphertexts are re-randomized rather than generated from scratch. We describe all these tricks in Section 6.

By performing SA in Step 7, the server obtains the aggregated result with Gaussian noises from all node devices. With the simple SMPC procedure (Steps 8–12), the server receives all Gaussian noises apart from that of one randomly selected node device j (whose identity is hidden from the node devices). Then, in Step 13, it removes them from the output of the SA protocol. Compared with FedPower [18], our intermediate aggregation result only contains a single instance of Gaussian noise from the randomly chosen node device instead of n. Consequently, via SA and SMPC, the proposed utility-enhancing protocol reduces the locally added noise n-fold and completely eliminates the central noise.
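The arithmetic behind this noise removal can be simulated in the clear. The sketch below omits all cryptography: SA is replaced by a plain sum, and the 0/1 selector values that the real protocol would handle under encryption are plain integers. The variable names, sizes, and the selector mechanics are assumptions made for illustration, inferred from the description above.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, k, sigma = 5, 4, 2, 1.0    # hypothetical sizes and noise scale

updates = [rng.standard_normal((d, k)) for _ in range(n)]         # local results
noises = [rng.normal(0.0, sigma, size=(d, k)) for _ in range(n)]  # local Gaussian noise

# Secure aggregation (simulated): the server only sees the sum of noisy updates.
sa_output = sum(u + g for u, g in zip(updates, noises))

# The server secretly picks the index j whose noise stays in the result.
j = int(rng.integers(n))
selector = [0 if i == j else 1 for i in range(n)]   # sent encrypted in the real protocol

# Every node returns selector_i * noise_i; summing gives all noise except that of j.
removable = sum(b * g for b, g in zip(selector, noises))

result = sa_output - removable   # clean aggregate plus the noise of node j only
```

Because a node cannot tell whether its selector was 0 or 1 once the value is encrypted, it does not learn whether its own noise is the one retained.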

Computational Complexity. Regarding computational complexity, we compare the proposed scheme with the original solution in Table 2. The major difference is that we have integrated SA to facilitate our new privacy protection strategy. Let and be the asymptotic computational complexities of SA on each node device and server side, respectively.

Table 2.

Complexity comparison between FedPower [18] and Algorithm 2.

Although we have added more operations, as seen in Table 2, we have distributed some computations to individual node devices. Most importantly, we no longer add secondary server-side Gaussian noise to the final aggregation result and only retain the Gaussian noise from one node device.

Analysis. As we mentioned in our adversarial model, the semi-honest server cannot collude with any of the node devices, which are also semi-honest. Thus, the server cannot eliminate the remaining noise from the final result. As for the node devices, since no one except the server is aware of the random index in Step 8, a node device learns, apart from its own data, only the aggregation result with the added noise, even if the retained noise comes from itself.

| Algorithm 2 Utility-enhanced FedPower. |

|

Compared with the original solution by Guo et al. [18], we have improved the utility of the aggregation result by keeping the added noise from one single node device. As a side effect, the complexity has grown due to the SA protocol. This is a trade-off between result accuracy and solution efficiency.

5. Differentially Private Federated SVD Solution

Privacy Analysis of FedPower. Algorithm 1 injects noise both at the local (Step 6) and the global (Step 8) levels. Consequently, the claimed privacy protection of Algorithm 1 is -DP, which originates from -LDP and -CDP [18]. Firstly, as we highlighted in Table 1, LDP and CDP provide different privacy protections; hence, merely combining them is inappropriate, so the claim must be more precise. Instead, Algorithm 1 seems to provide -DP for the clients from the server and stronger protection (due to the additional central noise) from other clients and external attackers.

Yet, this is still not entirely sound, as not all computations were included in the sensitivity calculation; hence, the noise scaling is incorrect. Indeed, the authors only considered the sensitivity of the multiplication with in Step 3 when determining the variance of the Gaussian noise in Step 6; however, the noise is only added after the multiplication with in Step 5. Thus, the sensitivity of the orthogonalization is discarded.

Privacy-Enhanced FedPower. We improve on FedPower [18] from two aspects: (1) we incorporate clipping in the protocol to bound the sensitivity of the local operations performed by the clients and (2) we use SA with DP to obtain a strong privacy guarantee. For this reason, similar to FedPower [18], we assume that for all i the elements of are bounded with . In Algorithm 1, the computations the nodes undertake (besides noise injection at Step 6) are in Steps 3, 5 and 12, where the last two could be either discarded for the sensitivity computation or completely removed, as explained below.

- Step 12: Orthogonalization is intricate, so its sensitivity is not necessarily tractable. To tackle this, we propose applying the noise before the orthogonalization, in which case the orthogonalization does not affect the privacy guarantee, as it counts as post-processing.

- Step 5: We remove this client-side operation from our privacy-enhanced solution, as it is not essential; only the convergence speed would be affected slightly.

The FedPower protocol with enhanced privacy is presented in Algorithm 3, where besides the orthogonalization, clipping is also performed with . The only client operation which must be considered for the sensitivity computation (i.e., before noise injection) is Step 3. We calculate its sensitivity in Theorem 1.

Theorem 1.

If we assume for all , then the sensitivity (calculated via the Euclidean distance) of the client-side operations (i.e., Step 3 in Algorithm 3) is bounded by .

Proof.

To make the proof easier to follow, we remove the subscript round counter from the notation. Let us define and such that they are equal except at position . Multiplying these with from the left results in and , respectively, which are the same except in row i:

Hence, the Euclidean distance of and boils down to this row i:

As a direct corollary of , we know that each of the r squared elements is bounded by . Therefore, . □

It is known that adding Gaussian noise with (where s is the sensitivity) results in -DP. As a corollary, we can state in Theorem 2 that a single round in Algorithm 3 is differentially private. An even tighter result was presented in [33]; we leave the exploration of this as future work. The best practice is to set as the inverse of the size of the underlying dataset, so there is a direct connection between the variance and the privacy parameter .

Theorem 2.

If , then Algorithm 3 provides -DP, where

Proof.

This can be verified by combining the provided formula with the appropriate sensitivity. □

| Algorithm 3 Privacy-enhanced FedPower. |

|

One can easily extend this result for with the composition property of DP: Algorithm 3 satisfies -DP. Besides this basic loose composition, one can obtain better results by utilizing more involved composition theorems such as in [34]. We leave this for future work.
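The relation between the noise scale and the privacy parameters can be made concrete with a short sketch. It assumes the classical calibration of the Gaussian mechanism, σ = s·sqrt(2 ln(1.25/δ))/ε (valid for 0 < ε < 1), together with basic sequential composition; the constants in the formulas intended above may differ, and all function names and numeric values are hypothetical.

```python
import math

def gaussian_sigma(sensitivity, epsilon, delta):
    """Noise standard deviation under the classical Gaussian-mechanism bound."""
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon

def epsilon_from_sigma(sensitivity, sigma, delta):
    """Inverse direction: privacy budget implied by a fixed noise scale."""
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / sigma

def basic_composition(epsilon, delta, rounds):
    """Basic composition: the parameters add up over the rounds."""
    return rounds * epsilon, rounds * delta

# Hypothetical single-round parameters.
s, eps, delta = 1.0, 0.5, 1e-5
sigma = gaussian_sigma(s, eps, delta)
eps_total, delta_total = basic_composition(eps, delta, rounds=10)
```

The linear growth under basic composition is loose, which is why the advanced composition results mentioned above can yield a smaller overall budget for the same noise.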

Analysis. Similarly to Section 4, we protect the individual intermediate results with SA. On the other hand, it is equivalent to generate n Gaussian noise samples with variance σ² and select one, or to generate n Gaussian noise samples with variance σ²/n and sum them all up. Consequently, instead of relying on an SMPC protocol to eliminate most of the local noise, we could merely scale it down. Combining SA with such a downsized local noise is, in fact, a common practice in FL: this is what distributed differential privacy (DDP) [35] does, i.e., DDP combined with SA provides LDP but with n times smaller noise, where n is the number of participants.
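The equivalence of the two noise-generation strategies follows from the fact that the variances of independent Gaussian variables add up. A quick empirical check is sketched below; the number of participants, the noise scale `sigma`, and the number of trials are hypothetical values.

```python
import numpy as np

rng = np.random.default_rng(0)
n, sigma, trials = 100, 2.0, 20_000

# Strategy A: each participant draws noise with standard deviation sigma
# and a single draw is kept.
single = rng.normal(0.0, sigma, size=trials)

# Strategy B: each participant draws noise with standard deviation
# sigma / sqrt(n) (variance sigma^2 / n) and all n draws are summed.
summed = rng.normal(0.0, sigma / np.sqrt(n), size=(trials, n)).sum(axis=1)

var_single, var_summed = single.var(), summed.var()
```

Both empirical variances concentrate around `sigma ** 2`, so the aggregate carries the same amount of noise in either case, while strategy B needs no extra protocol to remove noise afterwards.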

6. Empirical Comparison

In order to compare our proposed schemes with FedPower, we implemented the schemes in Python [36]. As we only encrypt 0 and 1 in Section 4, we optimize the performance and take advantage of the utilized Paillier cryptosystem. More specifically, we re-randomize the corresponding ciphertexts to obtain new ciphertexts. In addition, we also exploit the homomorphic property, and instead of decrypting each value ( times), we first calculate the product of all the ciphertexts (element-wise matrix multiplication) and then perform the decryption on a single matrix. In this way, we obtain the sum of all Gaussian noises more efficiently. The decryption result is the sum of noise which will be canceled in Algorithm 2. Furthermore, we prepare the , and all keys of SA offline for each node device i.
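The two optimizations (re-randomizing ciphertexts of 0 and 1, and aggregating ciphertexts before a single decryption) can be illustrated with a toy Paillier implementation. This is a sketch under strong assumptions: the key is built from two small Mersenne primes and offers no security, the fixed-point factor `SCALE`, the noise values, and the selector mechanics are hypothetical, and it is not the code used in the experiments.

```python
import math
import secrets

# Toy key from two Mersenne primes; far too small for real use.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)   # lcm(P - 1, Q - 1)
MU = pow(LAM, -1, N)                                # valid for generator g = N + 1

def random_unit():
    """Random element of Z_N^* used as encryption randomness."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            return r

def encrypt(m):
    body = (1 + (m % N) * N) % N2          # (N + 1)^m mod N^2
    return body * pow(random_unit(), N, N2) % N2

def decrypt(c):
    return (pow(c, LAM, N2) - 1) // N * MU % N

def rerandomize(c):
    """Fresh-looking ciphertext of the same plaintext, without a new encryption."""
    return c * pow(random_unit(), N, N2) % N2

def add(c1, c2):
    return c1 * c2 % N2                    # plaintexts are added

def scalar_mul(c, k):
    return pow(c, k % N, N2)               # plaintext is multiplied by k

SCALE = 10**6                              # fixed-point encoding of real noise values
noises = [0.731, -1.204, 0.058, 2.417]     # hypothetical local noise values
j = 2                                      # index kept secret by the server

enc_zero, enc_one = encrypt(0), encrypt(1)
selectors = [rerandomize(enc_zero if i == j else enc_one) for i in range(len(noises))]
replies = [scalar_mul(c, round(g * SCALE)) for c, g in zip(selectors, noises)]

total = replies[0]
for c in replies[1:]:
    total = add(total, c)                  # aggregate first ...
plain = decrypt(total)                     # ... then decrypt once
if plain > N // 2:
    plain -= N                             # map back to a signed value
removable = plain / SCALE                  # sum of all noise except that of node j
```

Re-randomization costs one modular exponentiation, the same order as a fresh encryption in this toy version; practical implementations can precompute the random factors offline, which is where the saving comes from.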

Metric. We use the Euclidean distance to measure the similarity of two matrices and , i.e., . Let denote the true eigenspace computed without any noise, let denote the eigenspace generated with Algorithm 1, let denote the eigenspace generated with Algorithm 2, and let denote the eigenspace generated with Algorithm 3.

Setup. For our experiments, we used the well-known NETFLIX rating dataset [37], and we pre-processed it similarly to [38] (instead of 10, we removed users and movies with fewer than 50 ratings). It consists of ratings corresponding to movies from users. We split them horizontally into 100 random blocks to simulate node devices. Moreover, we set the security parameter to 128; thus, we adopt 3072 bits for N in the Paillier cryptosystem (this is equivalent to RSA-3072, which provides a 128-bit security level [39]). The number of iteration rank and top eigenvectors is set to , and we keep the same synchronous trigger as [18]. To compare FedPower with our enhanced solutions, we set the noise size for these algorithms as . Moreover, for Algorithm 3 we bounded with and with for all possible i and t. Using Theorem 2, we can calculate that a single round corresponds to privacy budget with .

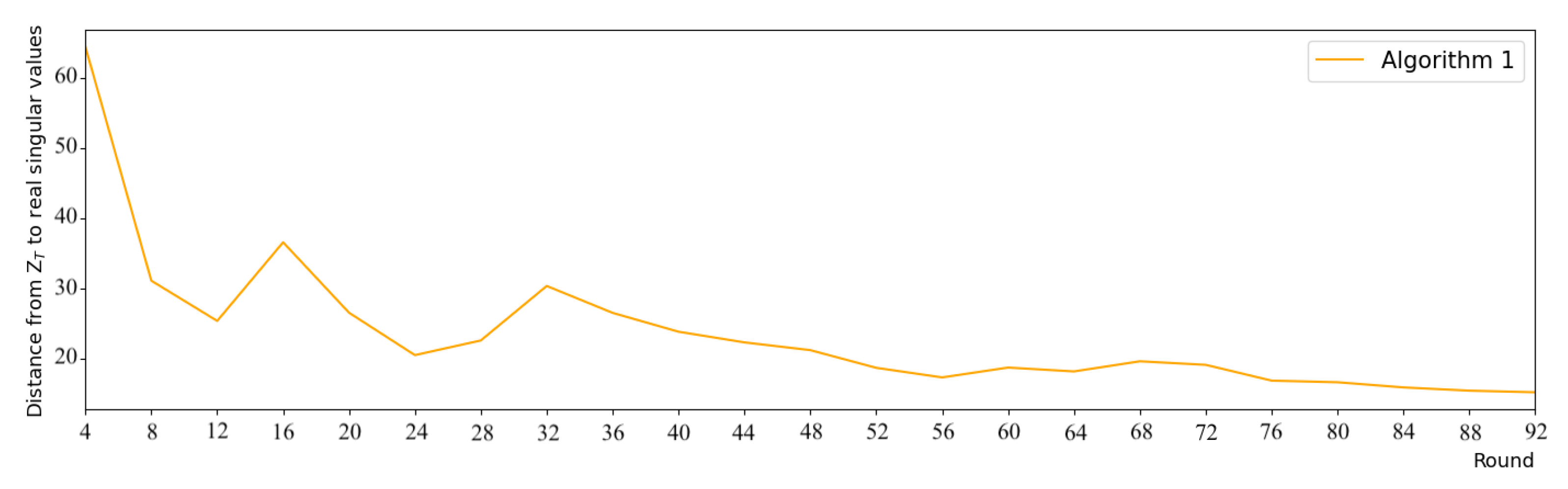

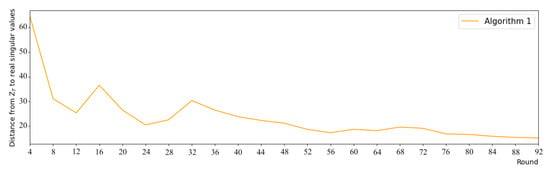

In order to determine the number of global rounds T, we set up a small experiment. We built a data matrix of size filled with integers in , and randomly divided it among 100 node devices (each has at least 10 rows). We executed Algorithm 1 for 200 rounds and compared the distance between the aggregation result and the real singular values of . From the result in Figure 2, we can see that convergence occurs around round 92, since the subsequent results vary only slightly (). Thus, we set for our experiments.

Figure 2.

Experiment to determine T (with Algorithm 1).

The experiments were run in a Docker container on a machine with a 40-core Intel(R) Xeon(R) Silver 4210 CPU @ 2.20GHz and 755 GB of RAM. We ran each experiment 10 times and report the average execution time.

Results. Firstly, we compare the efficiency of our enhanced schemes and the original algorithm. The computation times are presented in Table 3. Compared with FedPower, the overall computation burden of the devices increased by a factor of for the utility-enhanced solution in Section 4 and only for the privacy-enhanced solution in Section 5. Concerning the server, the increase is and , respectively.

Table 3.

Runtime comparison of Algorithms 1–3 in milliseconds.

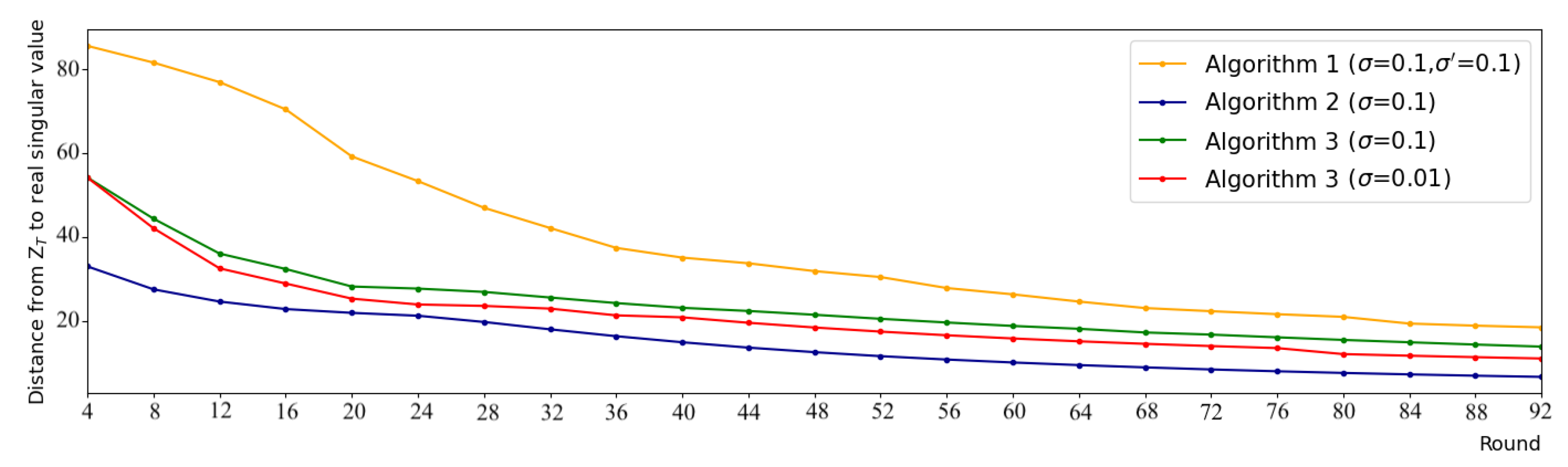

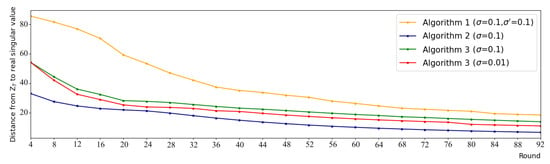

The rise in computational demand comes with benefits. Concerning Algorithm 2, significant progress is achieved in the utility while it offers a privacy guarantee similar to that of FedPower. Concerning Algorithm 3, the privacy guarantee is more robust, as it provides a formal DDP protection (while FedPower fails to satisfy DP). Moreover, it obtains a higher utility, which could make this solution preferable despite its computational overhead. We compare the distance between the results of each algorithm and the real eigenvalues, as shown in Figure 3, and the utility is improved (i.e., the distances are lower) with both Algorithms 2 and 3.

Figure 3.

Comparison of Eigenspaces calculated using Algorithms 1–3 with different settings.

Our utility-enhanced solution significantly outperforms FedPower: after 92 rounds, the obtained error of our scheme is almost three times () smaller than that for FedPower. The final error of Algorithm 2 is , while this value for Algorithm 1 is . Note that this level of accuracy (∼18.5) was obtained using our method in the 32nd round, i.e., almost three times () faster. Hence, the superior convergence speed can compensate for most of the computational increase caused by SA and SMPC.

Let us shift our attention to our privacy-enhanced solution. In that case, we can see that besides more robust privacy protection, our solution offers better utility: Algorithms 1 and 3 obtain and RMSE values, respectively, i.e., we acquired a 24% error reduction. Our method (with actual DP guarantees) achieved the same level of accuracy () after only 65 rounds, which is a 29% convergence speed increase.

We also compare our two proposed schemes such that the size of the accumulated noise is equal. Besides the nature of noise injection (many small vs. one large), the only factor that differentiates the results is the clipping bounds. As expected, the error is larger with clipping, i.e., compared with . Concerning the convergence speed, the utility-enhanced solution is faster, reaching similar accuracy () in round 54. Note though that this result still vastly outperforms FedPower: the accuracy and the convergence speed are increased by 40% and 43%, respectively.

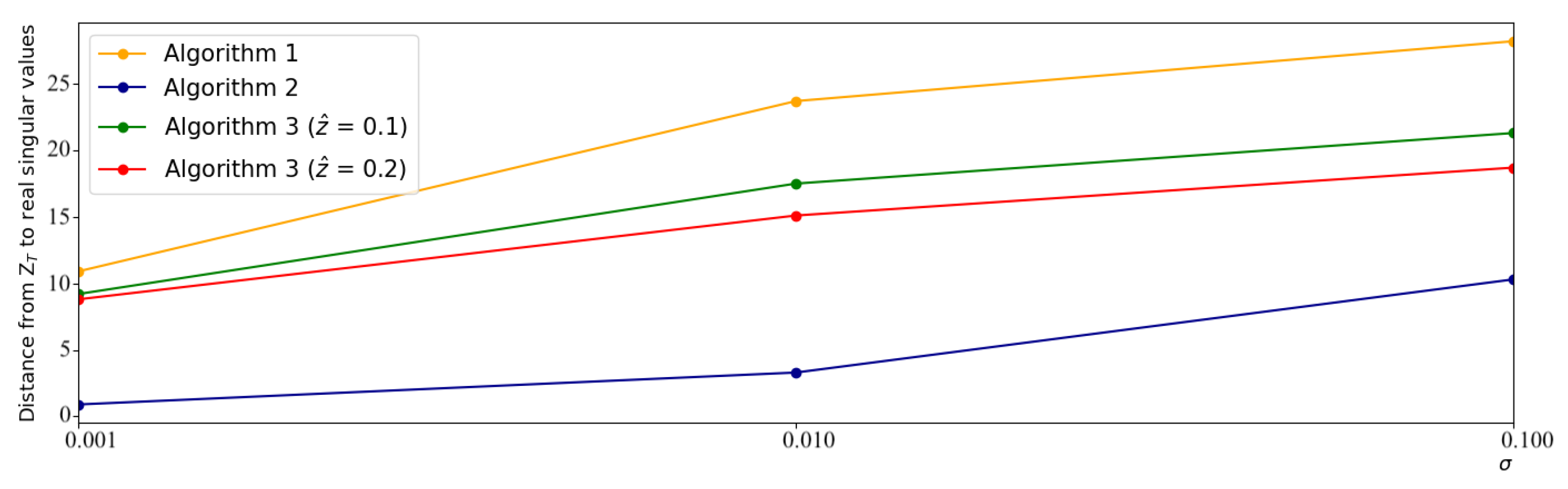

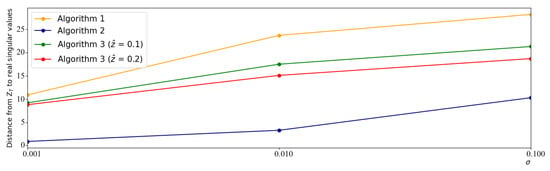

Finally, we study the effect of different levels of privacy protection on the accuracy of each algorithm. As we noticed in Figure 3, after the 60th round, the error ratios of the algorithms are reasonably stable, so for this experiment, we set . Since the clipping rate and the noise variance both contributed to the privacy parameter (as seen in Theorem 2), we varied each independently. Our results are presented in Figure 4. It is visible that the previously seen trends hold with other levels of privacy protection, making our proposed schemes favorable for a wide range of settings.

Figure 4.

The effect of various privacy parameters on the accuracy for Algorithms 1–3.

7. Conclusions

Motivated by Guo et al.’s distributed privacy-preserving SVD algorithm based on the federated power method [18], we have proposed two enhanced federated SVD schemes, focusing on utility and privacy, respectively. Both use secure aggregation to reduce the added noise, which reverts to the initial design intent and interest. Yet, the added cryptographic operations trade efficiency for superior performance ( better results) while providing either similar or superior privacy guarantees. Our work leaves several future research topics. One is to further investigate the computational complexity, particularly of the secure aggregation, to achieve more efficient solutions. Another is to investigate the scalability of the proposed solutions, regarding larger datasets and different datasets in applications other than recommendation systems. In addition, scalability also concerns the number of node devices. Yet another topic is to look further into the security assumptions. For example, the security assumptions can be weakened so that the server is allowed to collude with one or more node devices.

Author Contributions

Conceptualization, Q.T.; methodology, Q.T., B.L. and B.P.; software, B.L.; validation, Q.T., B.L. and B.P.; formal analysis, Q.T., B.L. and B.P.; investigation, Q.T., B.L. and B.P.; resources, Q.T.; data curation, B.L.; writing—original draft preparation, Q.T. and B.L.; writing—review and editing, Q.T., B.L. and B.P.; visualization, Q.T., B.L. and B.P.; supervision, Q.T.; project administration, Q.T.; funding acquisition, Q.T., B.L. and B.P. All authors have read and agreed to the published version of the manuscript.

Funding

Bowen Liu and Qiang Tang are supported by the 5G-INSIGHT bi-lateral project (ANR-20-CE25-0015-16) funded by the Luxembourg National Research Fund (FNR) and by the French National Research Agency (ANR). Balázs Pejó is supported by Project no. 138903, which has been implemented with the support provided by the Ministry of Innovation and Technology from the NRDI Fund and financed under the FK_21 funding scheme.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Practical Secure Aggregation

The practical secure aggregation by Bonawitz et al. [29] is summarized below. First and foremost, the following parameters are generated during the setup phase and sent to relevant node devices.

- Pseudorandom Generator (PRG) [40,41]: $\mathrm{PRG}$ takes a fixed-length seed as the input and outputs a vector with entries in $\mathbb{Z}_R$, where $R$ is a prefixed value.

- Secret Sharing [42]: $\mathrm{SS.share}$ takes a secret $s$, a set $\mathcal{U}$ of user IDs (e.g., integers), and a threshold $t \le |\mathcal{U}|$ as the input and outputs a set of shares $\{(u, s_u)\}_{u \in \mathcal{U}}$, where the share $s_u$ is associated with the user $u$; the reconstruction algorithm $\mathrm{SS.recon}$ takes the threshold $t$ and the shares corresponding to a user subset $\mathcal{V} \subseteq \mathcal{U}$ such that $|\mathcal{V}| \ge t$ as the input, and outputs a field element $s$.

- Key Agreement [43]: $\mathrm{KA.param}$ takes a security parameter $k$ and returns some public parameters $pp$; $\mathrm{KA.gen}$ generates a secret/public key pair; $\mathrm{KA.agree}$ allows a user $u$ to combine its private key with the public key of another user $v$ into a private shared key between them.

- Authenticated Encryption [44]: $\mathrm{AE.enc}$ and $\mathrm{AE.dec}$ are algorithms for encrypting a plaintext and for decrypting a ciphertext, respectively, under a symmetric key shared between the two parties (obtained here via key agreement).

- Signature Scheme [45]: $\mathrm{SIG.gen}$ takes a security parameter $k$ and outputs a secret/public key pair; $\mathrm{SIG.sign}$ signs a message with a secret key and returns the relevant signature; and $\mathrm{SIG.ver}$ verifies the signature of the relevant message with the public key and returns a boolean bit indicating whether the signature is valid.

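- Number of node devices $m$.

- Security parameter $k$.

- Public parameters of key agreement $pp \leftarrow \mathrm{KA.param}(k)$.

- Threshold value $t$, where $t \le m$ and $m$ is the number of node devices.

- Input space: vectors with entries in $\mathbb{Z}_R$.

- Secret sharing field $\mathbb{F}$.

- Signature key pairs $(d_u^{SK}, d_u^{PK})$ of each node device $u$, where $u \in \{1, \dots, m\}$.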
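To make the Secret Sharing primitive listed above more concrete, the following is a minimal Python sketch of Shamir's t-out-of-n scheme [42] over a prime field. It is an illustration under stated assumptions, not the implementation used in the paper: the function names `share` and `reconstruct`, the field modulus `PRIME`, and the example values are all hypothetical.

```python
import secrets

# Hypothetical field modulus for illustration (2**127 - 1 is a Mersenne prime).
PRIME = 2**127 - 1


def share(secret, t, user_ids, prime=PRIME):
    """Split `secret` into t-out-of-len(user_ids) shares, one per user ID."""
    if not 1 <= t <= len(user_ids):
        raise ValueError("threshold must satisfy 1 <= t <= number of users")
    if any(uid % prime == 0 for uid in user_ids):
        raise ValueError("user IDs must be non-zero field elements")
    # Random polynomial of degree t - 1 whose constant term is the secret.
    coeffs = [secret % prime] + [secrets.randbelow(prime) for _ in range(t - 1)]
    shares = {}
    for uid in user_ids:
        y = 0
        for c in reversed(coeffs):  # Horner evaluation at x = uid
            y = (y * uid + c) % prime
        shares[uid] = y
    return shares


def reconstruct(shares, t, prime=PRIME):
    """Recover the secret from at least t shares (Lagrange interpolation at x = 0)."""
    if len(shares) < t:
        raise ValueError("not enough shares to reconstruct")
    points = list(shares.items())[:t]
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % prime
                den = (den * (xi - xj)) % prime
        # pow(den, -1, prime) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, prime)) % prime
    return secret


# Usage: 3-out-of-5 sharing; any three shares recover the secret.
all_shares = share(123456789, t=3, user_ids=[1, 2, 3, 4, 5])
subset = {uid: all_shares[uid] for uid in (2, 4, 5)}
recovered = reconstruct(subset, t=3)
```

Fewer than `t` shares reveal nothing about the secret, which is why the protocol can tolerate dropouts as long as at least `t` node devices respond.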

The complete execution of the protocol between node devices and the server is provided in the following.

- Round 0 (AdvertiseKeys):

  - 0.1. Each node device $u$ generates two secret/public key pairs, $(c_u^{SK}, c_u^{PK})$ for encryption and $(s_u^{SK}, s_u^{PK})$ for sharing.

  - 0.2. Each node device $u$ signs $c_u^{PK}$ and $s_u^{PK}$ into $\sigma_u$.

  - 0.3. The two public keys and the signature $\sigma_u$ are sent to the server.

  - 0.4. If the server receives at least $t$ messages from individual node devices (denote this set of node devices by $\mathcal{U}_1$), it broadcasts $\{(v, c_v^{PK}, s_v^{PK}, \sigma_v)\}_{v \in \mathcal{U}_1}$ to all node devices in $\mathcal{U}_1$.

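- Round 1 (ShareKeys):

  - 1.1. Once a node device $u$ in $\mathcal{U}_1$ receives the messages from the server, it verifies that all signatures are valid with $\mathrm{SIG.ver}(d_v^{PK}, c_v^{PK} \| s_v^{PK}, \sigma_v) = 1$, where $v \in \mathcal{U}_1$.

  - 1.2. The node device $u$ samples a random element $b_u \in \mathbb{F}$ as a seed for a PRG.

  - 1.3. The node device $u$ generates $t$-out-of-$|\mathcal{U}_1|$ shares of both $s_u^{SK}$ and $b_u$, denoted $s_{u,v}^{SK}$ and $b_{u,v}$ for $v \in \mathcal{U}_1$.

  - 1.4. For each node device $v \in \mathcal{U}_1 \setminus \{u\}$, $u$ computes $e_{u,v} \leftarrow \mathrm{AE.enc}(\mathrm{KA.agree}(c_u^{SK}, c_v^{PK}), u \| v \| s_{u,v}^{SK} \| b_{u,v})$ and sends them to the server.

  - 1.5. If the server receives at least $t$ messages from individual node devices (denote this set of node devices by $\mathcal{U}_2$), it sends to each node device $u \in \mathcal{U}_2$ all ciphertexts encrypted for it, $\{e_{v,u}\}_{v \in \mathcal{U}_2}$.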

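- Round 2 (MaskedInputCollection):

  - 2.1. For the node device $u \in \mathcal{U}_2$, once the ciphertexts are received, it computes $s_{u,v} \leftarrow \mathrm{KA.agree}(s_u^{SK}, s_v^{PK})$, where $v \in \mathcal{U}_2 \setminus \{u\}$.

  - 2.2. $s_{u,v}$ is expanded using PRG into a random vector $p_{u,v} = \Delta_{u,v} \cdot \mathrm{PRG}(s_{u,v})$, where $\Delta_{u,v} = 1$ when $u > v$ and $\Delta_{u,v} = -1$ when $u < v$; moreover, define $p_{u,u} = 0$.

  - 2.3. The node device $u$ computes its own private mask vector $p_u = \mathrm{PRG}(b_u)$ and masks its input vector $x_u$ into $y_u \leftarrow x_u + p_u + \sum_{v \in \mathcal{U}_2} p_{u,v}$ (mod $R$); then, $y_u$ is sent to the server.

  - 2.4. If the server receives at least $t$ messages (denote this set of node devices by $\mathcal{U}_3$), it shares the node device set $\mathcal{U}_3$ with all node devices in $\mathcal{U}_3$.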

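- Round 3 (ConsistencyCheck):

  - 3.1. Once the node device $u \in \mathcal{U}_3$ receives the message, it returns the signature $\sigma'_u \leftarrow \mathrm{SIG.sign}(d_u^{SK}, \mathcal{U}_3)$.

  - 3.2. If the server receives at least $t$ messages (denote this set of node devices by $\mathcal{U}_4$), it shares the set $\{(v, \sigma'_v)\}_{v \in \mathcal{U}_4}$ with all node devices in $\mathcal{U}_4$.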

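- Round 4 (Unmasking):

  - 4.1. Each node device $u$ verifies $\mathrm{SIG.ver}(d_v^{PK}, \mathcal{U}_3, \sigma'_v) = 1$ for all $v \in \mathcal{U}_4$.

  - 4.2. For each node device $v \in \mathcal{U}_2 \setminus \{u\}$, $u$ decrypts the ciphertext $v' \| u' \| s_{v,u}^{SK} \| b_{v,u} \leftarrow \mathrm{AE.dec}(\mathrm{KA.agree}(c_u^{SK}, c_v^{PK}), e_{v,u})$ (received in the MaskedInputCollection round) and asserts that $u = u' \wedge v = v'$.

  - 4.3. Each node device $u$ sends the shares $s_{v,u}^{SK}$ for node devices $v \in \mathcal{U}_2 \setminus \mathcal{U}_3$ and $b_{v,u}$ for node devices $v \in \mathcal{U}_3$ to the server.

  - 4.4. If the server receives at least $t$ messages (denote this set of node devices by $\mathcal{U}_5$), it reconstructs, for each node device $u \in \mathcal{U}_2 \setminus \mathcal{U}_3$, $s_u^{SK} \leftarrow \mathrm{SS.recon}(\{s_{u,v}^{SK}\}_{v \in \mathcal{U}_5}, t)$ and recomputes $p_{v,u}$ using PRG for all $v \in \mathcal{U}_3$.

  - 4.5. The server also reconstructs, for all node devices $u \in \mathcal{U}_3$, $b_u \leftarrow \mathrm{SS.recon}(\{b_{u,v}\}_{v \in \mathcal{U}_5}, t)$ and recomputes $p_u$ using the PRG.

  - 4.6. Finally, the server outputs $z = \sum_{u \in \mathcal{U}_3} x_u = \sum_{u \in \mathcal{U}_3} y_u - \sum_{u \in \mathcal{U}_3} p_u + \sum_{u \in \mathcal{U}_3, v \in \mathcal{U}_2 \setminus \mathcal{U}_3} p_{v,u}$ (mod $R$).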
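The masking in Step 2.3 and the unmasking in Step 4.6 can be sketched in a few lines of Python. This is a simplified illustration, assuming that no node device drops out, so only the private masks have to be removed and the pairwise masks cancel on their own. It is not the paper's implementation: the modulus `R`, the vector length `DIM`, the SHAKE-based toy `prg`, and the helper names are hypothetical, and the seeds are sampled directly instead of being derived via key agreement and secret sharing.

```python
import hashlib
import secrets

R = 2**16  # hypothetical modulus of the input space
DIM = 4    # hypothetical vector length


def prg(seed, dim=DIM):
    """Toy PRG: expand an integer seed into `dim` values in [0, R) with SHAKE-256."""
    stream = hashlib.shake_256(str(seed).encode()).digest(2 * dim)
    return [int.from_bytes(stream[2 * i:2 * i + 2], "big") % R for i in range(dim)]


def mask_input(u, x_u, b_u, pair_seeds, users):
    """Step 2.3: y_u = x_u + PRG(b_u) + sum over v of p_{u,v} (mod R)."""
    y = [(x + p) % R for x, p in zip(x_u, prg(b_u))]
    for v in users:
        if v == u:
            continue  # p_{u,u} = 0
        sign = 1 if u > v else -1  # p_{u,v} = -p_{v,u}
        mask = prg(pair_seeds[frozenset((u, v))])
        y = [(yi + sign * mi) % R for yi, mi in zip(y, mask)]
    return y


def unmask_sum(masked, self_seeds):
    """Step 4.6 without dropouts: sum the y_u and subtract every private mask p_u."""
    total = [0] * DIM
    for u, y_u in masked.items():
        p_u = prg(self_seeds[u])
        total = [(acc + y - p) % R for acc, y, p in zip(total, y_u, p_u)]
    return total


users = [1, 2, 3]
inputs = {1: [1, 2, 3, 4], 2: [10, 20, 30, 40], 3: [100, 200, 300, 400]}
# Stand-ins for b_u and for the pairwise keys s_{u,v} agreed between devices.
self_seeds = {u: secrets.randbits(128) for u in users}
pair_seeds = {frozenset((u, v)): secrets.randbits(128)
              for u in users for v in users if u < v}

masked = {u: mask_input(u, inputs[u], self_seeds[u], pair_seeds, users) for u in users}
aggregate = unmask_sum(masked, self_seeds)
expected = [sum(column) % R for column in zip(*inputs.values())]
assert aggregate == expected
```

Each individual `masked[u]` looks random to the server, yet the sum is exact because every pairwise mask is added by one device and subtracted by the other. When devices drop out after Round 1, the server additionally has to reconstruct their sharing keys from the collected shares to remove the pairwise masks that no longer cancel.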

We summarize the asymptotic computational complexity of each node device and the server in Table A1. For simplicity of description, we assume that all $m$ devices participate in every round of the protocol, that is, no device drops out. Since some operations can be considered as offline pre-configuration, we focus on online operations starting from masking messages in Step 2.3.

Table A1. Asymptotic computational complexity of online operations.

| Vector Add | |||||||

|---|---|---|---|---|---|---|---|

| Node | 1 | 1 | |||||

| Server | m | m |

References

1. Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Cummings, R.; et al. Advances and Open Problems in Federated Learning. Found. Trends Mach. Learn. 2021, 14, 1–210.

2. Fredrikson, M.; Jha, S.; Ristenpart, T. Model inversion attacks that exploit confidence information and basic countermeasures. In Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security, Denver, CO, USA, 12–16 October 2015.

3. Shokri, R.; Stronati, M.; Song, C.; Shmatikov, V. Membership inference attacks against machine learning models. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–24 May 2017.

4. Zhu, L.; Liu, Z.; Han, S. Deep leakage from gradients. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

5. Nasr, M.; Shokri, R.; Houmansadr, A. Comprehensive Privacy Analysis of Deep Learning: Passive and Active White-box Inference Attacks against Centralized and Federated Learning. In Proceedings of the 2019 IEEE Symposium on Security and Privacy, SP 2019, San Francisco, CA, USA, 19–23 May 2019; pp. 739–753.

6. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

7. Pejó, B.; Desfontaines, D. Guide to Differential Privacy Modifications: A Taxonomy of Variants and Extensions; Springer Nature: Berlin/Heidelberg, Germany, 2022.

8. Polat, H.; Du, W. SVD-based collaborative filtering with privacy. In Proceedings of the 2005 ACM Symposium on Applied Computing, Santa Fe, NM, USA, 13–17 March 2005; pp. 791–795.

9. Zhang, S.; Wang, W.; Ford, J.; Makedon, F.; Pearlman, J. Using singular value decomposition approximation for collaborative filtering. In Proceedings of the Seventh IEEE International Conference on E-Commerce Technology (CEC’05), Munich, Germany, 19–22 July 2005; pp. 257–264.

10. Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

11. Dumais, S.T. Latent semantic analysis. Annu. Rev. Inf. Sci. Technol. 2004, 38, 188–230.

12. Guo, Q.; Zhang, C.; Zhang, Y.; Liu, H. An efficient SVD-based method for image denoising. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 868–880.

13. Rajwade, A.; Rangarajan, A.; Banerjee, A. Image denoising using the higher order singular value decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 849–862.

14. Ravi Kanth, K.; Agrawal, D.; Singh, A. Dimensionality reduction for similarity searching in dynamic databases. ACM SIGMOD Rec. 1998, 27, 166–176.

15. Von Luxburg, U. A tutorial on spectral clustering. Stat. Comput. 2007, 17, 395–416.

16. Candès, E.J.; Recht, B. Exact matrix completion via convex optimization. Found. Comput. Math. 2009, 9, 717–772.

17. Yakut, I.; Polat, H. Privacy-preserving SVD-based collaborative filtering on partitioned data. Int. J. Inf. Technol. Decis. Mak. 2010, 9, 473–502.

18. Guo, X.; Li, X.; Chang, X.; Wang, S.; Zhang, Z. Privacy-preserving distributed SVD via federated power. arXiv 2021, arXiv:2103.00704.

19. Hartebrodt, A.; Röttger, R.; Blumenthal, D.B. Federated singular value decomposition for high dimensional data. arXiv 2022, arXiv:2205.12109.

20. Eom, C.S.H.; Lee, C.C.; Lee, W.; Leung, C.K. Effective privacy preserving data publishing by vectorization. Inf. Sci. 2020, 527, 311–328.

21. Caruccio, L.; Desiato, D.; Polese, G.; Tortora, G.; Zannone, N. A decision-support framework for data anonymization with application to machine learning processes. Inf. Sci. 2022, 613, 1–32.

22. Wang, R.; Zhu, Y.; Chang, C.C.; Peng, Q. Privacy-preserving high-dimensional data publishing for classification. Comput. Secur. 2020, 93, 101785.

23. Golub, G.H.; Van Loan, C.F. Matrix Computations; JHU Press: Baltimore, MD, USA, 2013.

24. Fan, J.; Wang, D.; Wang, K.; Zhu, Z. Distributed estimation of principal eigenspaces. Ann. Stat. 2019, 47, 3009.

25. Chen, X.; Lee, J.D.; Li, H.; Yang, Y. Distributed estimation for principal component analysis: An enlarged eigenspace analysis. J. Am. Stat. Assoc. 2022, 117, 1775–1786.

26. Eckart, C.; Young, G. The approximation of one matrix by another of lower rank. Psychometrika 1936, 1, 211–218.

27. Stewart, G.W. On the early history of the singular value decomposition. SIAM Rev. 1993, 35, 551–566.

28. Arbenz, P.; Kressner, D.; Zürich, D. Lecture notes on solving large scale eigenvalue problems. D-MATH ETH Zur. 2012, 2, 3.

29. Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for privacy-preserving machine learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1175–1191.

30. Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating noise to sensitivity in private data analysis. In Theory of Cryptography Conference, Proceedings of the Third Theory of Cryptography Conference, TCC 2006, New York, NY, USA, 4–7 March 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 265–284.

31. Guerraoui, R.; Kermarrec, A.M.; Patra, R.; Taziki, M. D2P: Distance-based differential privacy in recommenders. Proc. VLDB Endow. 2015, 8, 862–873.

32. Cramer, R.; Damgård, I.B.; Nielsen, J.B. Secure Multiparty Computation and Secret Sharing; Cambridge University Press: Cambridge, UK, 2015.

33. Balle, B.; Wang, Y.X. Improving the gaussian mechanism for differential privacy: Analytical calibration and optimal denoising. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 394–403.

34. Kairouz, P.; Oh, S.; Viswanath, P. The composition theorem for differential privacy. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 1376–1385.

35. Truex, S.; Baracaldo, N.; Anwar, A.; Steinke, T.; Ludwig, H.; Zhang, R.; Zhou, Y. A hybrid approach to privacy-preserving federated learning. In Proceedings of the 12th ACM Workshop on Artificial Intelligence and Security, London, UK, 15 November 2019; pp. 1–11.

36. Liu, B. Implementation Source Code. Available online: https://github.com/MoienBowen/Privacy-preserving-Federated-Singular-Value-Decomposition (accessed on 10 April 2023).

37. Bennett, J.; Lanning, S. The netflix prize. In Proceedings of the KDD Cup and Workshop; Association for Computing Machinery: New York, NY, USA, 2007; p. 35.

38. Pejo, B.; Tang, Q.; Biczok, G. Together or alone: The price of privacy in collaborative learning. Proc. Priv. Enhancing Technol. 2019, 2019, 47–65.

39. Barker, E. Recommendation for Key Management: Part 1—General; Technical Report NIST Special Publication (SP) 800-57, Rev. 5; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2020.

40. Blum, M.; Micali, S. How to generate cryptographically strong sequences of pseudorandom bits. SIAM J. Comput. 1984, 13, 850–864.

41. Yao, A.C. Theory and application of trapdoor functions. In Proceedings of the 23rd Annual Symposium on Foundations of Computer Science (SFCS 1982), Chicago, IL, USA, 3–5 November 1982; pp. 80–91.

42. Shamir, A. How to share a secret. Commun. ACM 1979, 22, 612–613.

43. Diffie, W.; Hellman, M. New directions in cryptography. IEEE Trans. Inf. Theory 1976, 22, 644–654.

44. McGrew, D.; Viega, J. The Galois/counter mode of operation (GCM). Submiss. NIST Modes Oper. Process 2004, 20, 0278–0070.

45. Bellare, M.; Neven, G. Transitive signatures: New schemes and proofs. IEEE Trans. Inf. Theory 2005, 51, 2133–2151.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).