A Review of Posture Detection Methods for Pigs Using Deep Learning

Abstract

1. Introduction

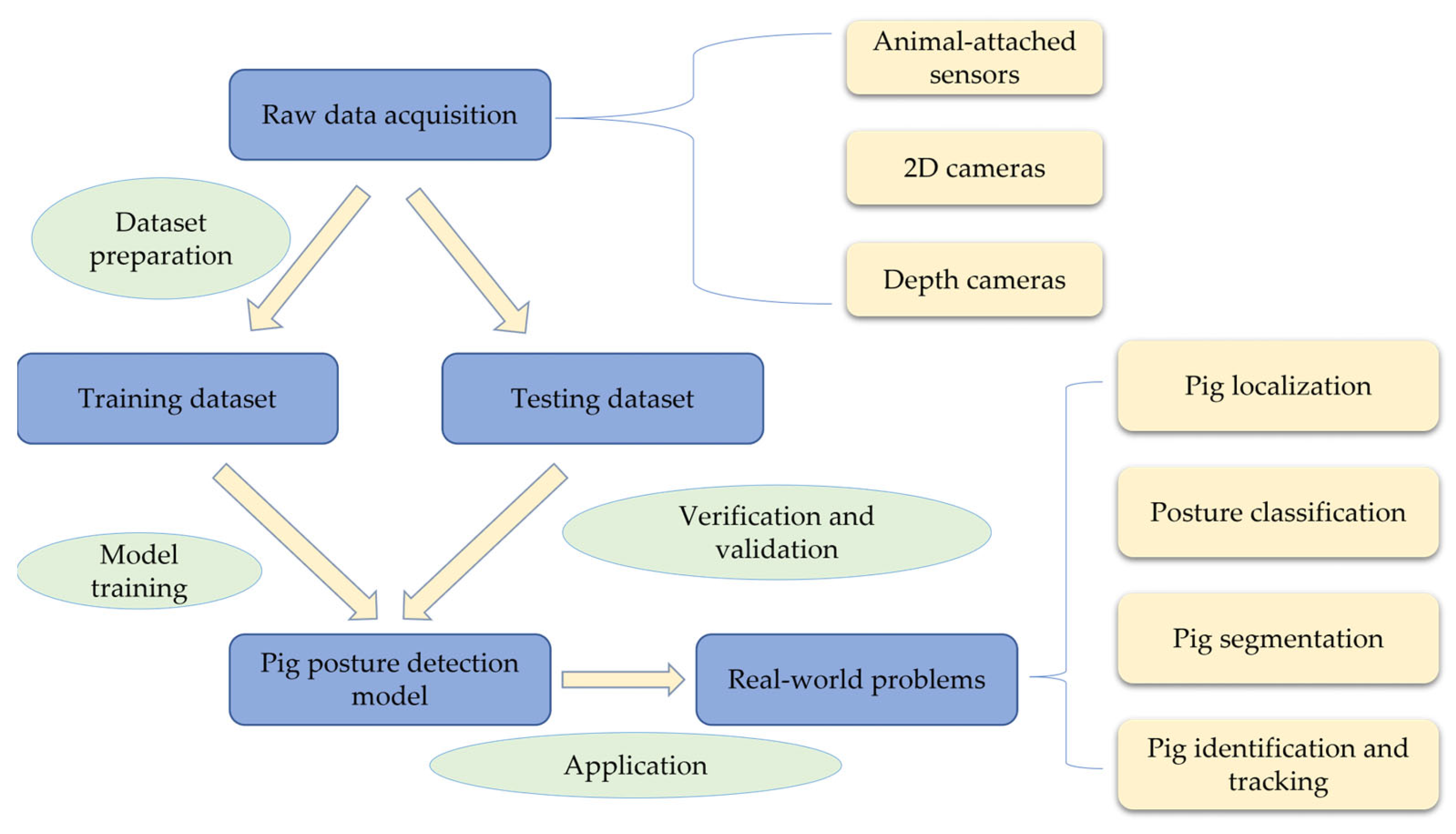

2. Data Acquisition and Sub-Tasks for Pig Posture Detection

2.1. Data Acquisition for Pig Posture Detection

2.1.1. Non-Image Data from Animal-Attached Sensors

2.1.2. Two-Dimensional Images from Optical Cameras

2.1.3. Depth Images from Depth Cameras

2.2. Sub-Tasks for Image-Based Pig Posture Detection

2.2.1. Pig Localization

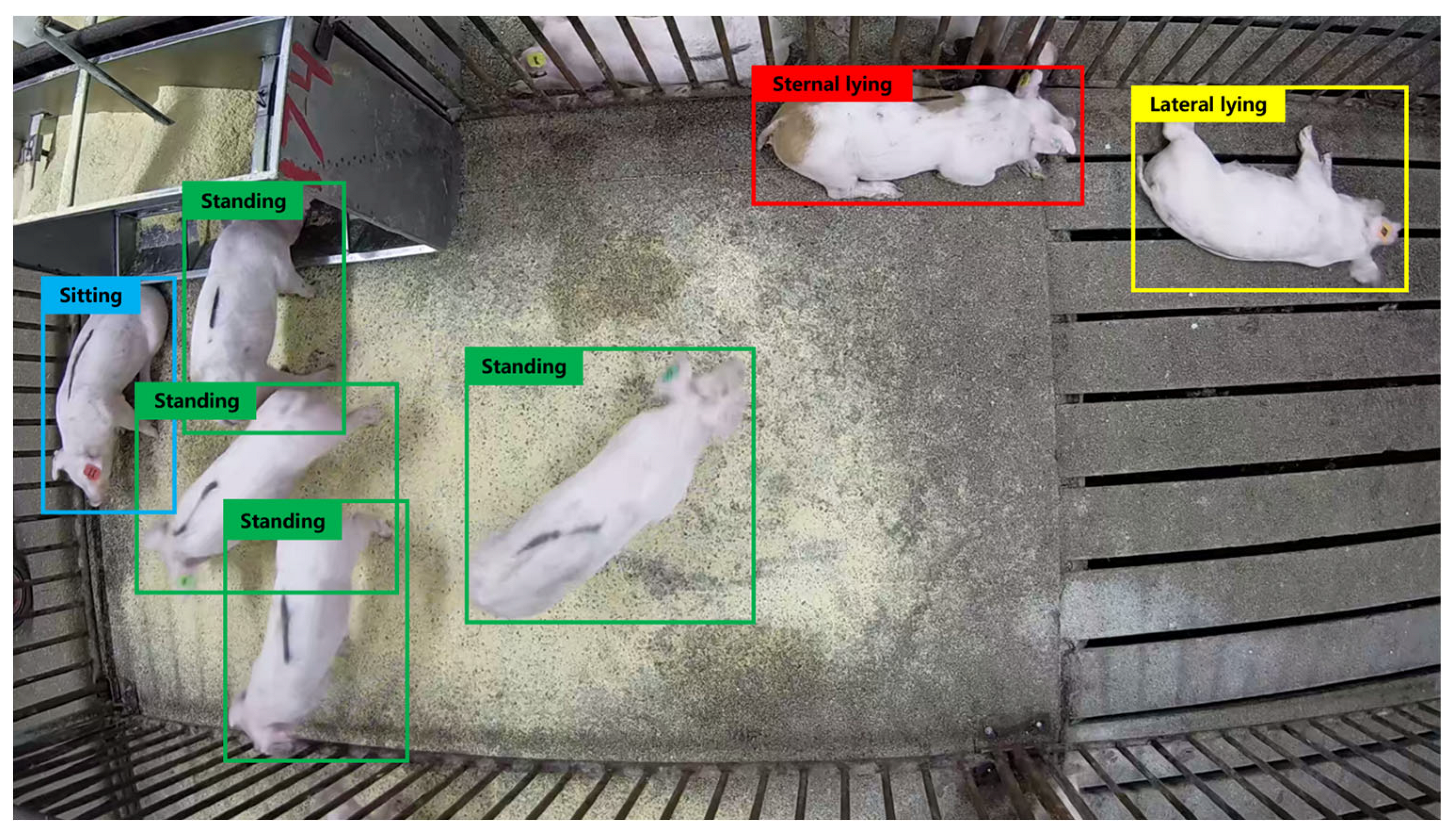

2.2.2. Posture Classification

2.2.3. Pig Segmentation

2.2.4. Pig Identification and Tracking

3. Application of Deep Learning Methods to Pig Posture Detection

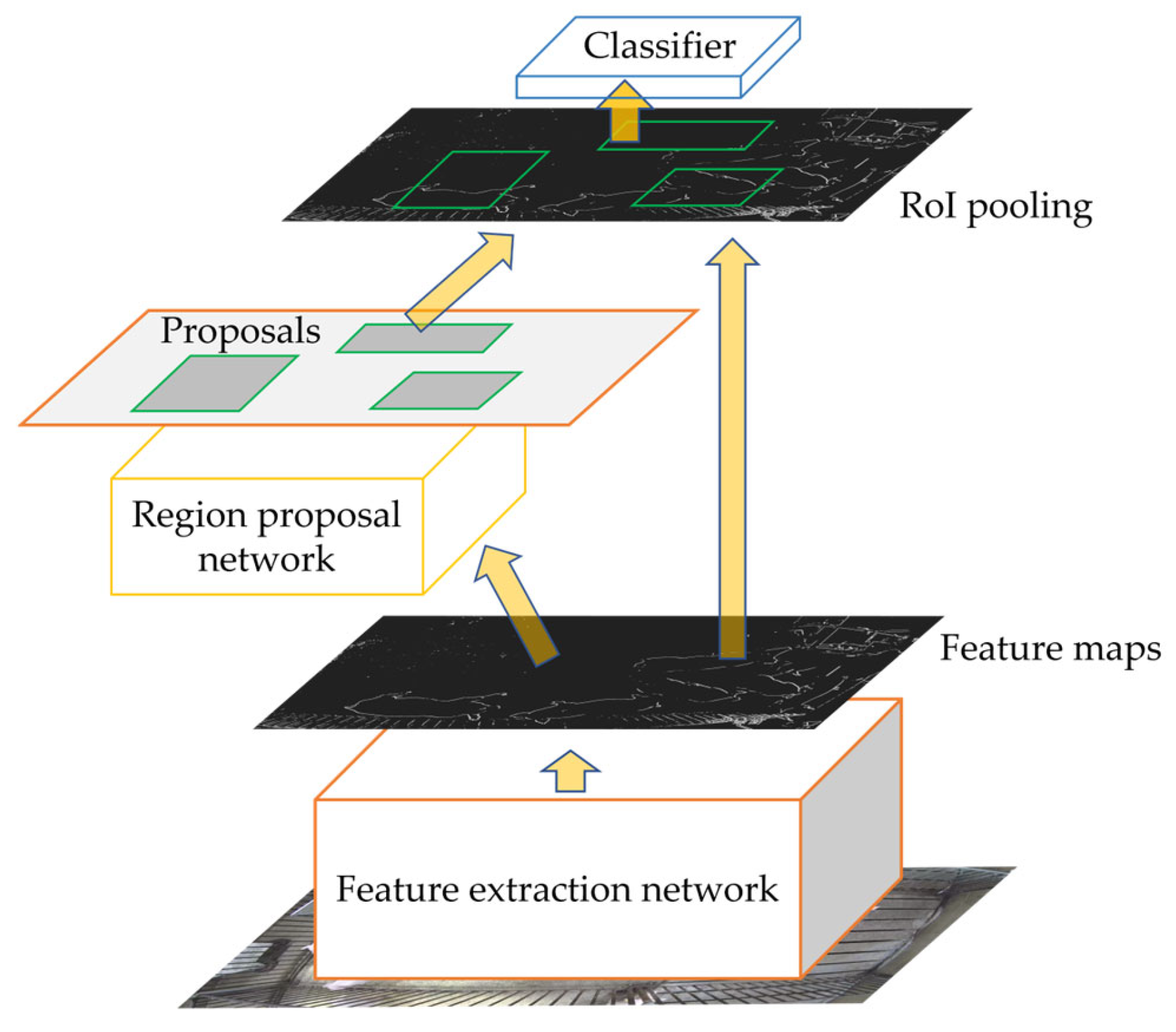

3.1. Two-Stage Detection Models

3.2. One-Stage Detection Models

3.3. Other Deep Learning Detection Methods

4. Discussion

4.1. Limitations of Current Methods and Viable Solutions

4.2. Outlook of Pig Posture Detection Methods with Deep Learning

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Iglesias, P.M.; Camerlink, I. Tail posture and motion in relation to natural behaviour in juvenile and adult pigs. Animal 2022, 16, 100489.

2. Matthews, S.G.; Miller, A.L.; Clapp, J.; Plotz, T.; Kyriazakis, I. Early detection of health and welfare compromises through automated detection of behavioural changes in pigs. Vet. J. 2016, 217, 43–51.

3. Tallet, C.; Sénèque, E.; Mégnin, C.; Morisset, S.; Val-Laillet, D.; Meunier-Salaün, M.-C.; Fureix, C.; Hausberger, M. Assessing walking posture with geometric morphometrics: Effects of rearing environment in pigs. Appl. Anim. Behav. Sci. 2016, 174, 32–41.

4. Camerlink, I.; Ursinus, W.W. Tail postures and tail motion in pigs: A review. Appl. Anim. Behav. Sci. 2020, 230, 105079.

5. Huynh, T.T.T.; Aarnink, A.J.A.; Gerrits, W.J.J.; Heetkamp, M.J.H.; Canh, T.T.; Spoolder, H.A.M.; Kemp, B.; Verstegen, M.W.A. Thermal behaviour of growing pigs in response to high temperature and humidity. Appl. Anim. Behav. Sci. 2005, 91, 1–16.

6. Nasirahmadi, A.; Richter, U.; Hensel, O.; Edwards, S.; Sturm, B. Using machine vision for investigation of changes in pig group lying patterns. Comput. Electron. Agric. 2015, 119, 184–190.

7. Nasirahmadi, A.; Sturm, B.; Olsson, A.-C.; Jeppsson, K.-H.; Müller, S.; Edwards, S.; Hensel, O. Automatic scoring of lateral and sternal lying posture in grouped pigs using image processing and Support Vector Machine. Comput. Electron. Agric. 2019, 156, 475–481.

8. Sadeghi, E.; Kappers, C.; Chiumento, A.; Derks, M.; Havinga, P. Improving piglets health and well-being: A review of piglets health indicators and related sensing technologies. Smart Agric. Technol. 2023, 5, 100246.

9. Kim, T.; Kim, Y.; Kim, S.; Ko, J. Estimation of Number of Pigs Taking in Feed Using Posture Filtration. Sensors 2022, 23, 238.

10. Ling, Y.; Jimin, Z.; Caixing, L.; Xuhong, T.; Sumin, Z. Point cloud-based pig body size measurement featured by standard and non-standard postures. Comput. Electron. Agric. 2022, 199, 107135.

11. Fernandes, A.F.A.; Dorea, J.R.R.; Fitzgerald, R.; Herring, W.; Rosa, G.J.M. A novel automated system to acquire biometric and morphological measurements and predict body weight of pigs via 3D computer vision. J. Anim. Sci. 2019, 97, 496–508.

12. Wang, Y.; Sun, G.; Seng, X.; Zheng, H.; Zhang, H.; Liu, T. Deep learning method for rapidly estimating pig body size. Anim. Prod. Sci. 2023.

13. Zonderland, J.J.; van Riel, J.W.; Bracke, M.B.M.; Kemp, B.; den Hartog, L.A.; Spoolder, H.A.M. Tail posture predicts tail damage among weaned piglets. Appl. Anim. Behav. Sci. 2009, 121, 165–170.

14. Main, D.; Clegg, J.; Spatz, A.; Green, L. Repeatability of a lameness scoring system for finishing pigs. Vet. Rec. 2000, 147, 574–576.

15. Krugmann, K.L.; Mieloch, F.J.; Krieter, J.; Czycholl, I. Can Tail and Ear Postures Be Suitable to Capture the Affective State of Growing Pigs? J. Appl. Anim. Welf. Sci. 2021, 24, 411–423.

16. Bao, J.; Xie, Q. Artificial intelligence in animal farming: A systematic literature review. J. Clean. Prod. 2022, 331, 129956.

17. Idoje, G.; Dagiuklas, T.; Iqbal, M. Survey for smart farming technologies: Challenges and issues. Comput. Electr. Eng. 2021, 92, 107104.

18. Racewicz, P.; Ludwiczak, A.; Skrzypczak, E.; Skladanowska-Baryza, J.; Biesiada, H.; Nowak, T.; Nowaczewski, S.; Zaborowicz, M.; Stanisz, M.; Slosarz, P. Welfare Health and Productivity in Commercial Pig Herds. Animals 2021, 11, 1176.

19. Larsen, M.L.V.; Wang, M.; Norton, T. Information Technologies for Welfare Monitoring in Pigs and Their Relation to Welfare Quality®. Sustainability 2021, 13, 692.

20. Nasirahmadi, A.; Sturm, B.; Edwards, S.; Jeppsson, K.H.; Olsson, A.C.; Muller, S.; Hensel, O. Deep Learning and Machine Vision Approaches for Posture Detection of Individual Pigs. Sensors 2019, 19, 3738.

21. Zhang, Z.; Zhang, H.; He, Y.; Liu, T.; Caputo, D. A Review in the Automatic Detection of Pigs Behavior with Sensors. J. Sens. 2022, 2022, 4519539.

22. Maselyne, J.; Adriaens, I.; Huybrechts, T.; De Ketelaere, B.; Millet, S.; Vangeyte, J.; Van Nuffel, A.; Saeys, W. Measuring the drinking behaviour of individual pigs housed in group using radio frequency identification (RFID). Animal 2016, 10, 1557–1566.

23. Cornou, C.; Lundbye-Christensen, S.; Kristensen, A.R. Modelling and monitoring sows’ activity types in farrowing house using acceleration data. Comput. Electron. Agric. 2011, 76, 316–324.

24. Thompson, R.; Matheson, S.M.; Plotz, T.; Edwards, S.A.; Kyriazakis, I. Porcine lie detectors: Automatic quantification of posture state and transitions in sows using inertial sensors. Comput. Electron. Agric. 2016, 127, 521–530.

25. Escalante, H.J.; Rodriguez, S.V.; Cordero, J.; Kristensen, A.R.; Cornou, C. Sow-activity classification from acceleration patterns: A machine learning approach. Comput. Electron. Agric. 2013, 93, 17–26.

26. Yuan, F.; Zhang, H.; Liu, T. Stress-Free Detection Technologies for Pig Growth Based on Welfare Farming: A Review. Appl. Eng. Agric. 2020, 36, 357–373.

27. Brünger, J.; Traulsen, I.; Koch, R. Model-based detection of pigs in images under sub-optimal conditions. Comput. Electron. Agric. 2018, 152, 59–63.

28. Alameer, A.; Kyriazakis, I.; Bacardit, J. Automated recognition of postures and drinking behaviour for the detection of compromised health in pigs. Sci. Rep. 2020, 10, 13665.

29. Zheng, C.; Zhu, X.; Yang, X.; Wang, L.; Tu, S.; Xue, Y. Automatic recognition of lactating sow postures from depth images by deep learning detector. Comput. Electron. Agric. 2018, 147, 51–63.

30. Lee, J.; Jin, L.; Park, D.; Chung, Y. Automatic Recognition of Aggressive Behavior in Pigs Using a Kinect Depth Sensor. Sensors 2016, 16, 631.

31. Stavrakakis, S.; Li, W.; Guy, J.H.; Morgan, G.; Ushaw, G.; Johnson, G.R.; Edwards, S.A. Validity of the Microsoft Kinect sensor for assessment of normal walking patterns in pigs. Comput. Electron. Agric. 2015, 117, 1–7.

32. Lao, F.; Brown-Brandl, T.; Stinn, J.P.; Liu, K.; Teng, G.; Xin, H. Automatic recognition of lactating sow behaviors through depth image processing. Comput. Electron. Agric. 2016, 125, 56–62.

33. D’Eath, R.B.; Foister, S.; Jack, M.; Bowers, N.; Zhu, Q.; Barclay, D.; Baxter, E.M. Changes in tail posture detected by a 3D machine vision system are associated with injury from damaging behaviours and ill health on commercial pig farms. PLoS ONE 2021, 16, e0258895.

34. Kim, J.; Chung, Y.; Choi, Y.; Sa, J.; Kim, H.; Chung, Y.; Park, D.; Kim, H. Depth-Based Detection of Standing-Pigs in Moving Noise Environments. Sensors 2017, 17, 2757.

35. Xu, J.; Zhou, S.; Xu, A.; Ye, J.; Zhao, A. Automatic scoring of postures in grouped pigs using depth image and CNN-SVM. Comput. Electron. Agric. 2022, 194, 106746.

36. Zhao, Z.-Q.; Zheng, P.; Xu, S.-t.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

37. Brunger, J.; Gentz, M.; Traulsen, I.; Koch, R. Panoptic Segmentation of Individual Pigs for Posture Recognition. Sensors 2020, 20, 3710.

38. Sivamani, S.; Choi, S.H.; Lee, D.H.; Park, J.; Chon, S. Automatic posture detection of pigs on real-time using YOLO framework. Int. J. Res. Trends Innov. 2020, 5, 81–88.

39. Wang, X.; Wang, W.; Lu, J.; Wang, H. HRST: An Improved HRNet for Detecting Joint Points of Pigs. Sensors 2022, 22, 7215.

40. Psota, E.T.; Mittek, M.; Pérez, L.C.; Schmidt, T.; Mote, B. Multi-pig part detection and association with a fully-convolutional network. Sensors 2019, 19, 852.

41. Zhu, W.; Zhu, Y.; Li, X.; Yuan, D. The posture recognition of pigs based on Zernike moments and support vector machines. In Proceedings of the 2015 10th International Conference on Intelligent Systems and Knowledge Engineering (ISKE), Taipei, Taiwan, 24–27 November 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 480–484.

42. Shao, H.; Pu, J.; Mu, J. Pig-Posture Recognition Based on Computer Vision: Dataset and Exploration. Animals 2021, 11, 1295.

43. Cao, X.-B.; Qiao, H.; Keane, J. A low-cost pedestrian-detection system with a single optical camera. IEEE Trans. Intell. Transp. Syst. 2008, 9, 58–67.

44. Sermanet, P.; Eigen, D.; Zhang, X.; Mathieu, M.; Fergus, R.; LeCun, Y. OverFeat: Integrated recognition, localization and detection using convolutional networks. arXiv 2013, arXiv:1312.6229.

45. Sun, L.; Liu, Y.; Chen, S.; Luo, B.; Li, Y.; Liu, C. Pig Detection Algorithm Based on Sliding Windows and PCA Convolution. IEEE Access 2019, 7, 44229–44238.

46. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

47. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28.

48. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

49. Gu, C.; Lim, J.J.; Arbeláez, P.; Malik, J. Recognition using regions. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 1030–1037.

50. Uijlings, J.R.; Van De Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

51. Riekert, M.; Klein, A.; Adrion, F.; Hoffmann, C.; Gallmann, E. Automatically detecting pig position and posture by 2D camera imaging and deep learning. Comput. Electron. Agric. 2020, 174, 105391.

52. Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6569–6578.

53. Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

54. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

55. Luo, Y.; Zeng, Z.; Lu, H.; Lv, E. Posture detection of individual pigs based on lightweight convolution neural networks and efficient channel-wise attention. Sensors 2021, 21, 8369.

56. Ji, H.; Yu, J.; Lao, F.; Zhuang, Y.; Wen, Y.; Teng, G. Automatic Position Detection and Posture Recognition of Grouped Pigs Based on Deep Learning. Agriculture 2022, 12, 1314.

57. Huang, L.; Xu, L.; Wang, Y.; Peng, Y.; Zou, Z.; Huang, P. Efficient Detection Method of Pig-Posture Behavior Based on Multiple Attention Mechanism. Comput. Intell. Neurosci. 2022, 2022, 1759542.

58. Guo, Y.; Lian, X.; Yan, P. Diurnal rhythms, locations and behavioural sequences associated with eliminative behaviours in fattening pigs. Appl. Anim. Behav. Sci. 2015, 168, 18–23.

59. Zhou, J.J.; Zhu, W.X. Gesture recognition of pigs based on wavelet moment and probabilistic neural network. In Applied Mechanics and Materials; Trans Tech Publications Ltd.: Zurich, Switzerland, 2014; pp. 3691–3694.

60. Kim, Y.J.; Park, D.-H.; Park, H.; Kim, S.-H. Pig datasets of livestock for deep learning to detect posture using surveillance camera. In Proceedings of the 2020 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 21–23 October 2020; pp. 1196–1198.

61. Zhang, Y.; Cai, J.; Xiao, D.; Li, Z.; Xiong, B. Real-time sow behavior detection based on deep learning. Comput. Electron. Agric. 2019, 163, 104884.

62. Tu, S.; Liu, H.; Li, J.; Huang, J.; Li, B.; Pang, J.; Xue, Y. Instance segmentation based on mask scoring R-CNN for group-housed pigs. In Proceedings of the 2020 International Conference on Computer Engineering and Application (ICCEA), Guangzhou, China, 27–29 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 458–462.

63. Yao, R.; Lin, G.; Xia, S.; Zhao, J.; Zhou, Y. Video object segmentation and tracking: A survey. ACM Trans. Intell. Syst. Technol. (TIST) 2020, 11, 1–47.

64. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

65. Cowton, J.; Kyriazakis, I.; Bacardit, J. Automated Individual Pig Localisation, Tracking and Behaviour Metric Extraction Using Deep Learning. IEEE Access 2019, 7, 108049–108060.

66. Larsen, M.L.V.; Andersen, H.M.-L.; Pedersen, L.J. Can tail damage outbreaks in the pig be predicted by behavioural change? Vet. J. 2016, 209, 50–56.

67. Hansen, M.F.; Smith, M.L.; Smith, L.N.; Salter, M.G.; Baxter, E.M.; Farish, M.; Grieve, B. Towards on-farm pig face recognition using convolutional neural networks. Comput. Ind. 2018, 98, 145–152.

68. Ma, C.; Deng, M.; Yin, Y. Pig face recognition based on improved YOLOv4 lightweight neural network. Inf. Process. Agric. 2023.

69. Luo, W.; Xing, J.; Milan, A.; Zhang, X.; Liu, W.; Kim, T.-K. Multiple object tracking: A literature review. Artif. Intell. 2021, 293, 103448.

70. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

71. Zhang, L.; Gray, H.; Ye, X.; Collins, L.; Allinson, N. Automatic individual pig detection and tracking in pig farms. Sensors 2019, 19, 1188.

72. Yang, Q.; Xiao, D. A review of video-based pig behavior recognition. Appl. Anim. Behav. Sci. 2020, 233, 105146.

73. Du, L.; Zhang, R.; Wang, X. Overview of two-stage object detection algorithms. J. Phys. Conf. Ser. 2020, 1544, 012033.

74. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 248–255.

75. Riekert, M.; Opderbeck, S.; Wild, A.; Gallmann, E. Model selection for 24/7 pig position and posture detection by 2D camera imaging and deep learning. Comput. Electron. Agric. 2021, 187, 106213.

76. Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region-based fully convolutional networks. Adv. Neural Inf. Process. Syst. 2016, 29, 379–387.

77. Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

78. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

79. Zhu, X.; Chen, C.; Zheng, B.; Yang, X.; Gan, H.; Zheng, C.; Yang, A.; Mao, L.; Xue, Y. Automatic recognition of lactating sow postures by refined two-stream RGB-D faster R-CNN. Biosyst. Eng. 2020, 189, 116–132.

80. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

81. Jocher, G.; Stoken, A.; Borovec, J.; Chaurasia, A.; Changyu, L.; Laughing, A.; Hogan, A.; Hajek, J.; Diaconu, L.; Marc, Y. ultralytics/yolov5: v5.0 - YOLOv5-P6 1280 models, AWS, Supervise.ly and YouTube integrations. Zenodo 2021, 11. Available online: https://github.com/ultralytics/yolov5 (accessed on 1 February 2023).

82. Witte, J.-H.; Marx Gómez, J. Introducing a new Workflow for Pig Posture Classification based on a combination of YOLO and EfficientNet. In Proceedings of the 55th Hawaii International Conference on System Sciences, Maui, HI, USA, 4–7 January 2022.

83. Ocepek, M.; Žnidar, A.; Lavrič, M.; Škorjanc, D.; Andersen, I.L. DigiPig: First developments of an automated monitoring system for body, head and tail detection in intensive pig farming. Agriculture 2021, 12, 2.

84. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

85. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

86. Gan, H.; Ou, M.; Huang, E.; Xu, C.; Li, S.; Li, J.; Liu, K.; Xue, Y. Automated detection and analysis of social behaviors among preweaning piglets using key point-based spatial and temporal features. Comput. Electron. Agric. 2021, 188, 106357.

87. Taherkhani, A.; Cosma, G.; McGinnity, T.M. AdaBoost-CNN: An adaptive boosting algorithm for convolutional neural networks to classify multi-class imbalanced datasets using transfer learning. Neurocomputing 2020, 404, 351–366.

88. Kumar, R.; Kumar, S. Multi-view Multi-modal Approach Based on 5S-CNN and BiLSTM Using Skeleton, Depth and RGB Data for Human Activity Recognition. Wirel. Pers. Commun. 2023, 130, 1141–1159.

89. Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-object tracking by associating every detection box. In Proceedings of the Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1–21.

90. Mattina, M.; Benzinou, A.; Nasreddine, K.; Richard, F. An efficient anchor-free method for pig detection. IET Image Process. 2022, 17, 613–626.

91. Liu, D.; Oczak, M.; Maschat, K.; Baumgartner, J.; Pletzer, B.; He, D.; Norton, T. A computer vision-based method for spatial-temporal action recognition of tail-biting behaviour in group-housed pigs. Biosyst. Eng. 2020, 195, 27–41.

92. Islam, M.M.; Nooruddin, S.; Karray, F.; Muhammad, G. Human activity recognition using tools of convolutional neural networks: A state of the art review, data sets, challenges, and future prospects. Comput. Biol. Med. 2022, 149, 106060.

93. Zhang, K.; Li, D.; Huang, J.; Chen, Y. Automated video behavior recognition of pigs using two-stream convolutional networks. Sensors 2020, 20, 1085.

94. Aziz, R.M.; Desai, N.P.; Baluch, M.F. Computer vision model with novel cuckoo search based deep learning approach for classification of fish image. Multimed. Tools Appl. 2023, 82, 3677–3696.

| Posture Type | Description | Reference |
|---|---|---|
| Standing | Upright body position on extended legs with only the hooves in contact with the floor | [29] |
| Ventral recumbency | Lying on the abdomen/sternum with the front legs folded under the body and the hind legs visible (right or left side); udder partially obscured | [29] |
| Sternal recumbency / lying on belly / sternal lying / lying on the stomach / prone | Lying on the abdomen/sternum with both front and hind legs folded under the body; udder totally obscured | [7,29,55,56,57] |
| Lateral recumbency / lying on side / lateral lying / sidling | Lying on either side (right or left) with all four legs visible; udder totally visible | [7,29,55,57] |
| Sitting | Partly erect on stretched front legs with the caudal end of the body in contact with the floor | [29] |
| Mounting | Standing on the floor on stretched hind legs with the front legs in contact with the body of another pig | [55] |
| Exploring | The snout approaches or noses a part of the pen for more than 2 s; distinguished by the object explored, such as a wall (including a fence door) or the floor | [42,58] |

| Posture Classification | Data Source | Detection Model | Evaluation Metric | Results | Advantage | Reference |
|---|---|---|---|---|---|---|
| Lying and not lying | 2D RGB image | Faster R-CNN + NASNet | AP (IoU = 0.5) for localization; mAP | 87.4%; 80.2% | Considers dataset diversity and focuses on generalization with limited training data and different application settings | [51] |
| Lying and not lying | 2D RGB image | Faster R-CNN + NASNet | mAP@0.5 (day); mAP@0.5 (night); mAP; precision of posture classification | 84%; 58%; 89.9%; 93% | Enables continuous 24/7 posture detection; identifies an optimal deep learning model after testing over 150 model configurations | [75] |
| Standing, lying on side, and lying on belly | 2D RGB image | R-FCN + ResNet-101 | mAP | >93% | High flexibility and robustness | [20] |
| Standing, sitting, sternal recumbency, ventral recumbency, and lateral recumbency | Depth image | Faster R-CNN | mAP | 87.1% | Reaches real-time detection speed; summarizes how pig position and posture change over time | [29] |
| Standing, sitting, sternal recumbency, ventral recumbency, and lateral recumbency | 2D RGB image + depth image | Refined two-stream RGB-D Faster R-CNN | mAP | 95.47% | Improves posture recognition accuracy through feature-level fusion of RGB-D data | [79] |
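
The two-stage detectors in the table above (Faster R-CNN and R-FCN) first run a region proposal network (RPN) over a dense grid of reference boxes, or anchors, and only then classify the most promising proposals. The sketch below shows how such an anchor grid can be generated. It assumes the default settings reported for Faster R-CNN [47] (anchor sizes of 128, 256, and 512 px, three aspect ratios, and a backbone stride of 16 as with VGG-16); the function name `generate_anchors`, the height-to-width ratio convention, and the cell-centre convention are illustrative choices, not taken from any of the reviewed pig studies.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in input-image pixels


def generate_anchors(feat_h: int, feat_w: int, stride: int = 16,
                     sizes: Sequence[float] = (128, 256, 512),
                     ratios: Sequence[float] = (0.5, 1.0, 2.0)) -> List[Box]:
    """Hypothetical helper: len(sizes) * len(ratios) anchors per feature-map cell."""
    anchors: List[Box] = []
    for row in range(feat_h):
        for col in range(feat_w):
            # Centre of this feature-map cell, projected back onto the image.
            cx, cy = (col + 0.5) * stride, (row + 0.5) * stride
            for size in sizes:
                for ratio in ratios:
                    # ratio = height / width; the anchor area stays size ** 2.
                    w = size / math.sqrt(ratio)
                    h = size * math.sqrt(ratio)
                    anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors
```

For a 640 × 480 frame, a stride-16 feature map has 30 × 40 cells, so `generate_anchors(30, 40)` yields 30 × 40 × 9 = 10,800 anchors. In Faster R-CNN the RPN scores each anchor as object or background and regresses box offsets; the top-scoring proposals then pass to the second stage, which assigns the class label (a posture, when each posture is treated as a separate detection class).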

| Posture Classification | Data Source | Detection Model | Evaluation Metric | Results | Advantage | Reference |
|---|---|---|---|---|---|---|
| Nine posture and behavior types | 2D RGB image | YOLOv2 | Accuracy | 97% | Contributes a high-quality dataset for building deep learning models; designs a livestock safety surveillance system | [60] |
| Standing, lateral lying, sternal lying, and sitting | 2D RGB image | YOLOv2 + ResNet-50 | mAP | 98.88% | Enables robust and accurate monitoring of individual pigs; generates a profile of each pig | [28] |
| Sitting, lying, standing, multi, part-of, and other | 2D RGB image | Tiny YOLOv3 | mAP | 95.9% | High accuracy with fewer computational resources | [38] |
| Standing, lying on the stomach, lying on the side, and exploring | 2D RGB image | YOLOv5 + DeepLabv3+ + ResNet | Classification accuracy; segmentation accuracy | 92.26%; 92.45% | Proposes a joint training method that combines pig posture detection and semantic segmentation | [42] |
| Standing, lying, and sitting | 2D RGB image | Improved YOLOX | AP@0.5 for localization; AP@0.5–0.95 for localization; mAP@0.5; mAP@0.5–0.95 | 99.5%; 91%; 95.7%; 87.2% | Focuses on sitting detection; addresses the class imbalance caused by scarce sitting samples | [56] |
| Standing, sitting, prone, and sidling | 2D RGB image | High-effect YOLO | mAP | 97.43% | Optimizes the YOLOv3 model; constructs a multiple attention mechanism | [57] |
| Standing, lying on the belly, lying on the side, sitting, and mounting | 2D RGB image | Light-SPD-YOLO | mAP | 92.04% | High speed and accuracy with few model parameters and low computational complexity | [55] |
| Lying and not lying | 2D RGB image | YOLOv5 + EfficientNet | AP (IoU = 0.5) for localization; mAP; precision of posture classification | 99.4%; 89.9%; 93% | Significantly improves accuracy by treating pig posture classification as a two-step classification process | [82] |
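
Metrics such as AP@0.5, AP@0.5–0.95, mAP@0.5, and mAP@0.5–0.95 in the tables above all come from the same procedure: predictions are ranked by confidence, each is matched to a ground-truth box when their intersection over union (IoU) reaches a threshold, and the area under the resulting precision–recall curve is the average precision (AP). AP@0.5 uses a single threshold of 0.5, AP@0.5–0.95 averages AP over the thresholds 0.50, 0.55, …, 0.95, and mAP averages AP over classes (here, postures). The following is a minimal single-image sketch, assuming greedy matching and PASCAL VOC-style all-point interpolation; the official COCO evaluator uses 101-point interpolation and accumulates over a whole dataset, so its numbers can differ slightly. All function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[float, Box]            # (confidence score, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: Sequence[Detection], gts: Sequence[Box], thr: float) -> float:
    """AP for one class at one IoU threshold (greedy matching, all-point interpolation)."""
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    hits: List[bool] = []
    # Visit predictions from most to least confident.
    for _, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not matched[j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True  # each ground-truth box can be matched only once
        hits.append(best_j >= 0)

    # Precision and recall after each ranked prediction.
    precision, recall, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precision.append(tp / k)
        recall.append(tp / len(gts))
    # Make precision non-increasing, then sum precision over each recall step.
    for k in range(len(precision) - 2, -1, -1):
        precision[k] = max(precision[k], precision[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += p * (r - prev_recall)
        prev_recall = r
    return ap


def ap_50_95(dets: Sequence[Detection], gts: Sequence[Box]) -> float:
    """AP averaged over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [t / 100 for t in range(50, 100, 5)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)


def mean_ap(per_class: Dict[str, Tuple[Sequence[Detection], Sequence[Box]]],
            thr: float = 0.5) -> float:
    """mAP: the mean of per-class (e.g. per-posture) AP values at one threshold."""
    return sum(average_precision(d, g, thr) for d, g in per_class.values()) / len(per_class)
```

With one ground-truth pig and one prediction whose IoU with it is 0.68, the prediction is a true positive only at thresholds 0.50 to 0.65, so AP@0.5 is 1.0 while AP@0.5–0.95 is 0.4. This stricter averaging is why the AP@0.5–0.95 and mAP@0.5–0.95 values reported for [56] are lower than their @0.5 counterparts.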

| Limitation | Proposed Solutions |
|---|---|
| Lack of standardized, comprehensive databases | Collaborative database construction and open-source data initiatives |
| Class imbalance | Data augmentation, transfer learning, and ensemble models |
| Drawbacks of single-view camera systems | Depth cameras, or multi-modal models compatible with different views |
| Difficulty of identifying and tracking multiple pigs | Combination with multi-object tracking (MOT) techniques |
| Neglect of temporal information | Video-based detection and spatial-temporal models |
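
The last two rows of the table above pair multi-pig tracking with MOT and the neglect of temporal information with video-based models. As a minimal illustration of how the two can work together, the sketch below links per-frame detections into tracks by greedy IoU matching, then smooths each track's posture label with a majority vote over a sliding window, so that a single misclassified frame does not register as a posture change. The greedy tracker is a deliberately simple stand-in, far less robust than dedicated MOT methods such as ByteTrack [89]: it drops a track as soon as the pig is missed in one frame and has no motion model. The class name `PostureTracker`, the IoU threshold of 0.3, and the five-frame window are illustrative assumptions.

```python
from collections import Counter, deque
from typing import Deque, Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class PostureTracker:
    """Hypothetical greedy IoU tracker with majority-vote posture smoothing."""

    def __init__(self, iou_thr: float = 0.3, window: int = 5) -> None:
        self.iou_thr = iou_thr
        self.window = window
        self.next_id = 0
        self.tracks: Dict[int, Box] = {}          # last box of each live track
        self.history: Dict[int, Deque[str]] = {}  # recent posture labels per track

    def update(self, detections: List[Tuple[Box, str]]) -> Dict[int, Tuple[Box, str]]:
        """Associate one frame of (box, posture) detections with existing tracks."""
        # Score every track-detection pair and match greedily, highest IoU first.
        pairs = sorted(
            ((iou(t_box, d_box), tid, di)
             for tid, t_box in self.tracks.items()
             for di, (d_box, _) in enumerate(detections)),
            reverse=True,
        )
        assigned: Dict[int, int] = {}  # detection index -> track id
        used_tracks = set()
        for overlap, tid, di in pairs:
            if overlap < self.iou_thr:
                break  # all remaining pairs overlap even less
            if tid in used_tracks or di in assigned:
                continue
            assigned[di] = tid
            used_tracks.add(tid)

        live: Dict[int, Box] = {}
        result: Dict[int, Tuple[Box, str]] = {}
        for di, (box, posture) in enumerate(detections):
            tid = assigned.get(di)
            if tid is None:  # unmatched detection: start a new track
                tid = self.next_id
                self.next_id += 1
                self.history[tid] = deque(maxlen=self.window)
            live[tid] = box
            self.history[tid].append(posture)
            # Majority vote over the window; ties go to the earliest label in it.
            smoothed = Counter(self.history[tid]).most_common(1)[0][0]
            result[tid] = (box, smoothed)

        # Tracks with no matching detection in this frame are dropped.
        for tid in set(self.history) - set(live):
            del self.history[tid]
        self.tracks = live
        return result
```

Each call to `update` takes one frame's detections and returns a dictionary from track ID to the current box and smoothed posture. Because `Counter.most_common` orders equal counts by first appearance, ties are resolved in favour of the label that entered the window earliest, which biases the output toward the previous posture until the new one clearly dominates.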

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Z.; Lu, J.; Wang, H. A Review of Posture Detection Methods for Pigs Using Deep Learning. Appl. Sci. 2023, 13, 6997. https://doi.org/10.3390/app13126997
