STA-GCN: Spatial-Temporal Self-Attention Graph Convolutional Networks for Traffic-Flow Prediction

Abstract

1. Introduction

2. Literature Review

3. Dataset and Methodology

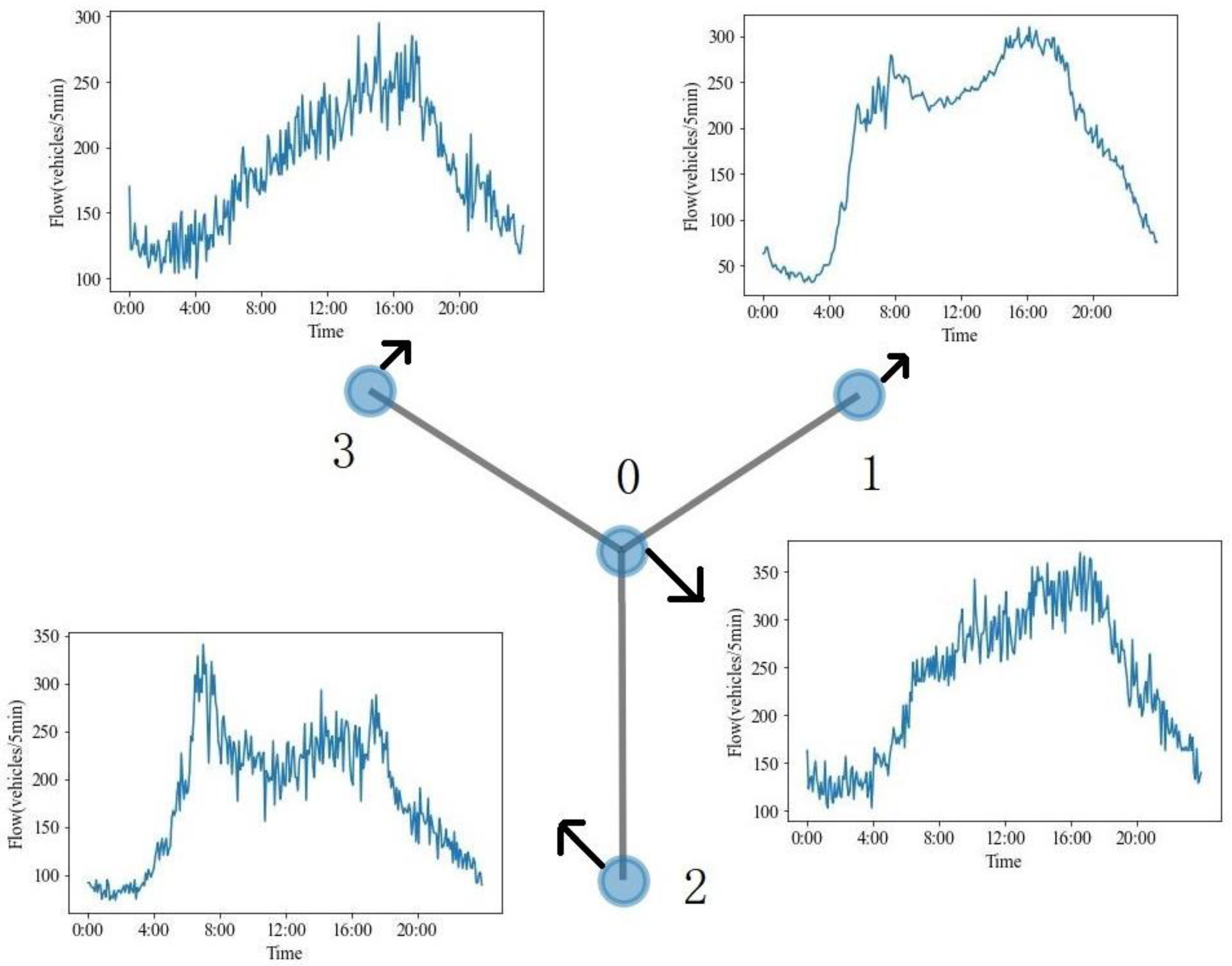

3.1. Dataset

3.2. Symbols and Feature Encoding

3.3. Spatial–Temporal Self-Attention Graph Convolutional Networks (STA-GCN)

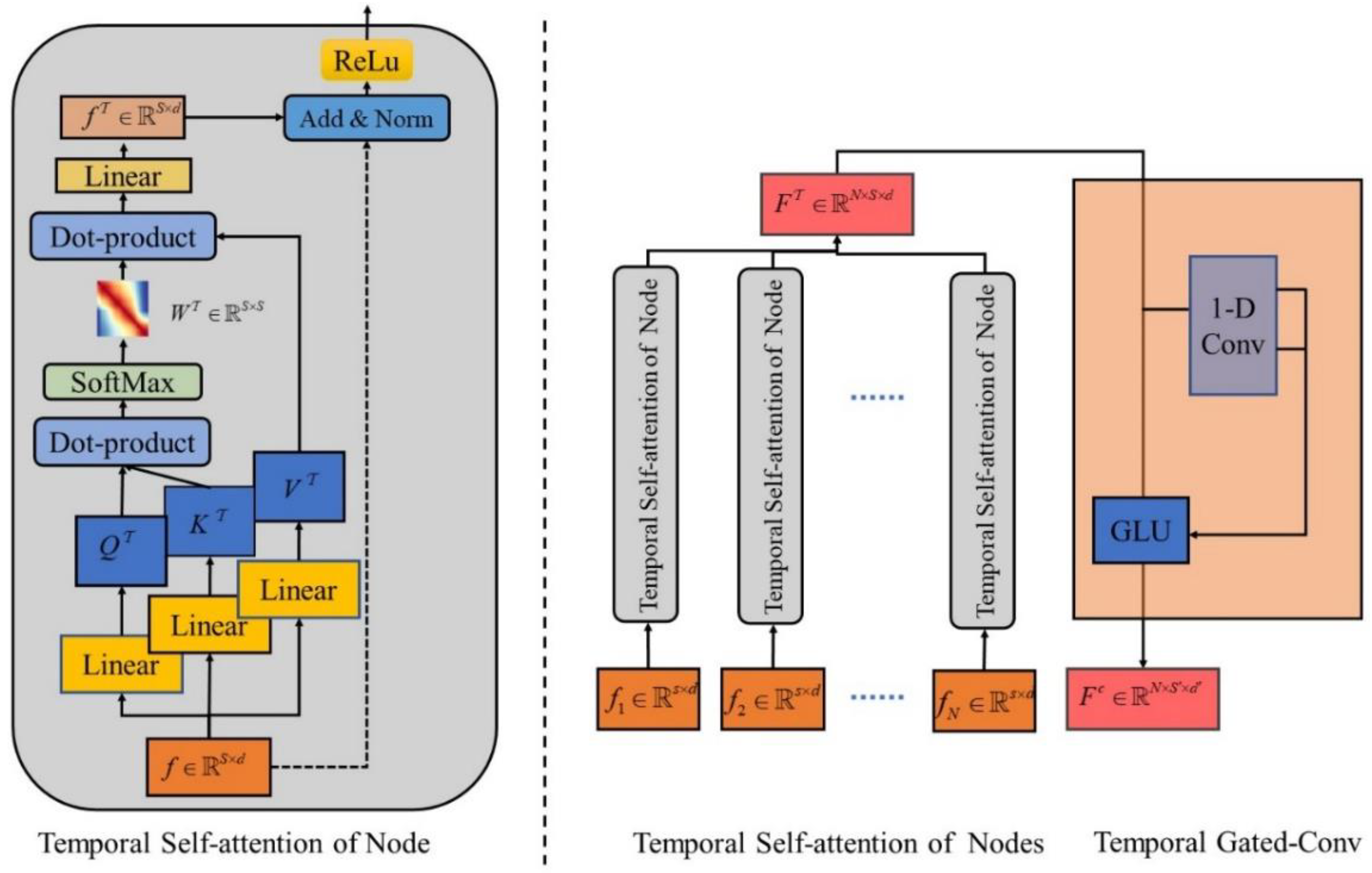

3.3.1. Temporal Self-Attention

3.3.2. Temporal Gated Convolution

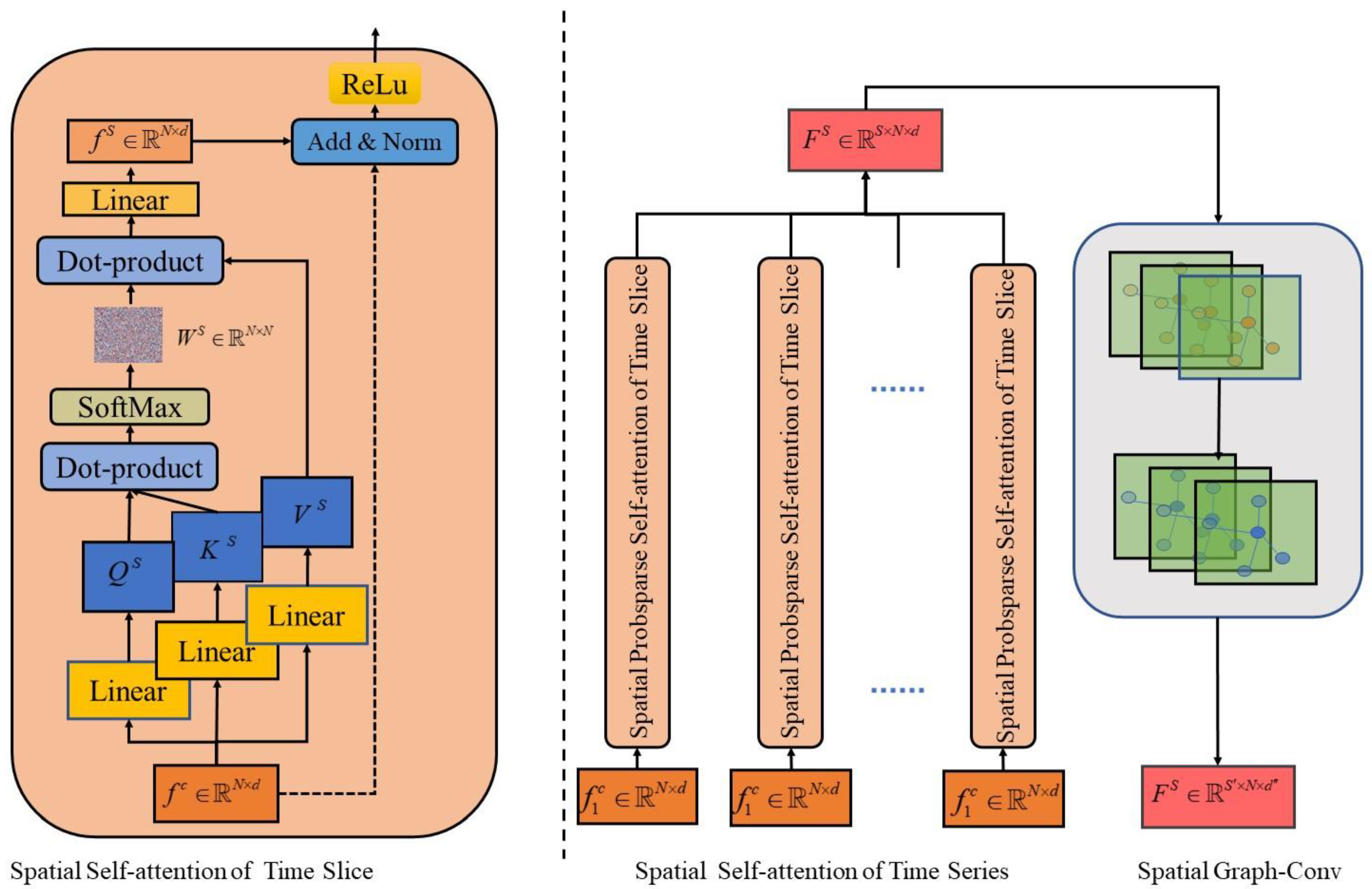

3.3.3. Spatial Self-Attention

3.3.4. Spatial Graph Convolution

4. Results and Discussion

4.1. Data Pre-Processing

4.2. Parameter Setting

4.3. Baseline Models

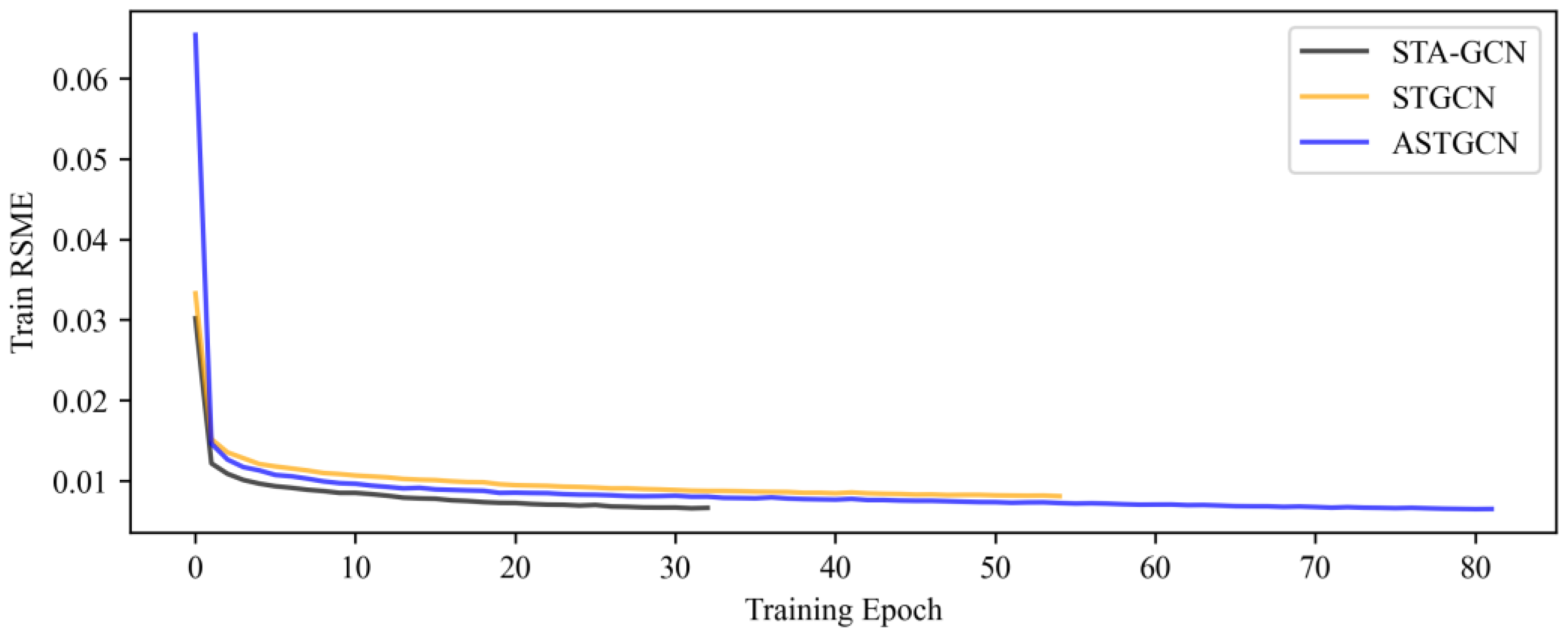

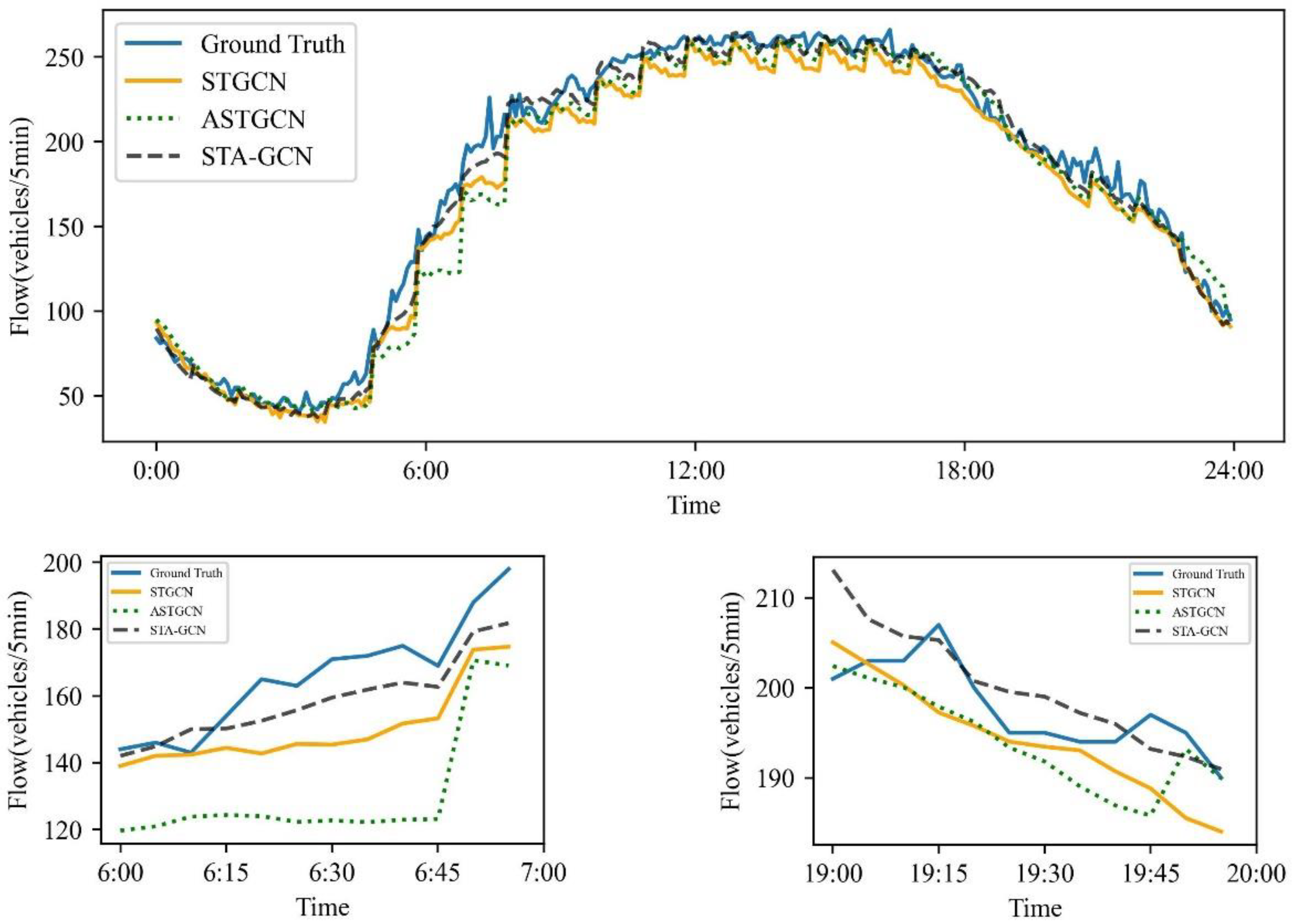

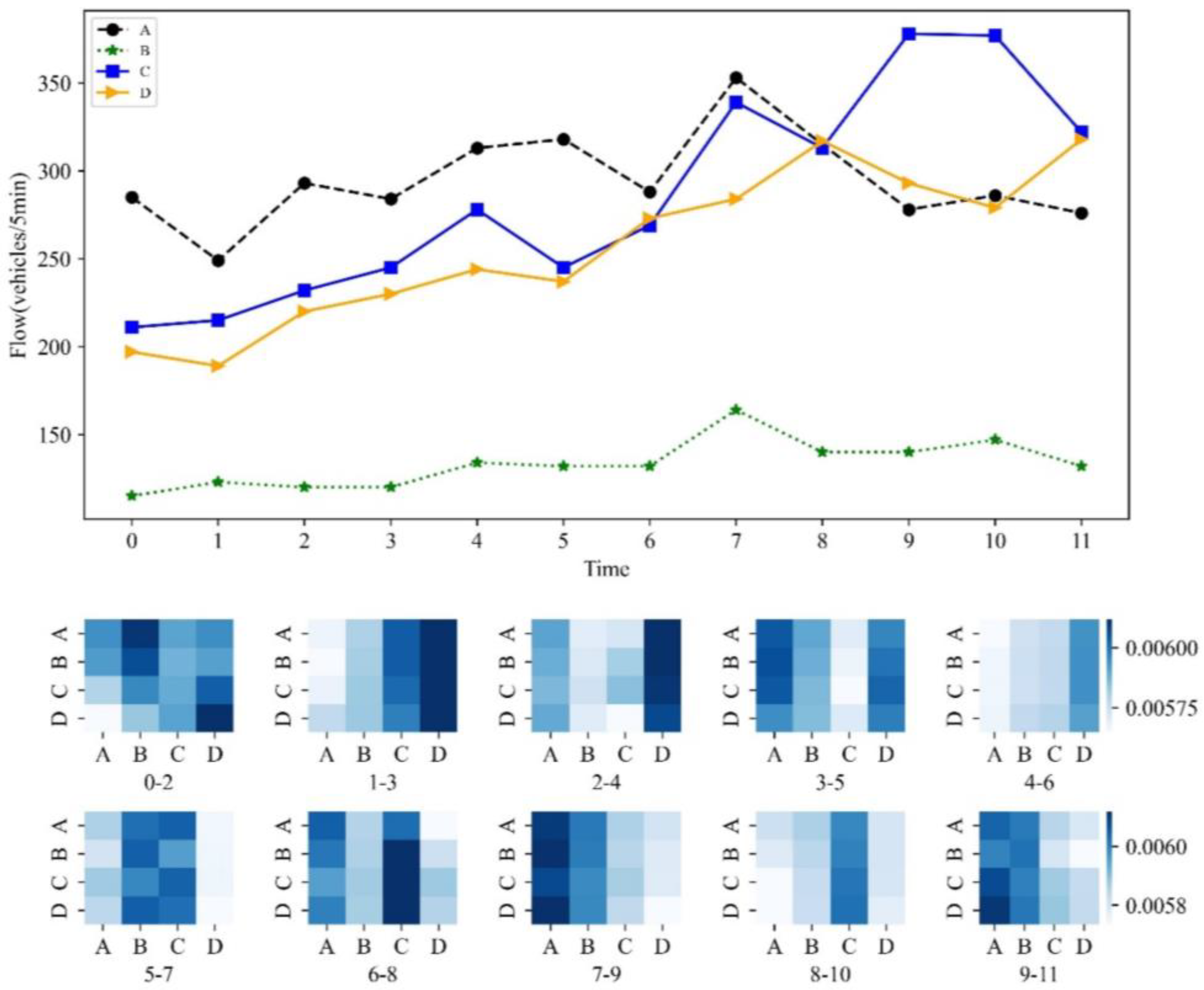

4.4. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

| Notation | Description |
|---|---|
| | Number of traffic nodes on the road network |
| | Historical sequence length |
| | Future sequence length |
| | The dimensionality of the traffic-node attributes mapped by the input layer |
| | The dimensionality of the node attributes after passing through a temporal gated convolutional layer |
| | The dimensionality of the node attributes after passing through a graph convolutional layer |
| | The spatial–temporal information of the input |
| | The information from the self-attention layer of an input time for a single transportation node |
| | Self-attention matrix for time |
| | The information from a single traffic node after passing through self-attention for time |
| | The information from all traffic nodes after passing through self-attention for time |
| | The information after passing through a temporal gated convolutional layer |
| | The information of the input space attention for a single time slice |
| | The information after passing through a spatial attention layer for a single time slice |
| | Information from the spatial self-attention layer across all time series |
| | Information from the spatial graph convolutional layer across all time series |
| | The final output of spatial–temporal prediction information |

| Dataset | T | Metric | ARIMA | SVR | FNN | GRU | FC-LSTM | STGCN | ASTGCN | STA-GCN |
|---|---|---|---|---|---|---|---|---|---|---|
| PeMSD04 | 15 | MAE | 25.52 | 25.34 | 25.02 | 24.85 | 24.32 | 22.31 | 21.02 | 19.02 |
| PeMSD04 | 15 | RMSE | 33.21 | 32.02 | 31.89 | 30.24 | 30.08 | 35.92 | 32.98 | 29.79 |
| PeMSD04 | 15 | MAPE | 18.25% | 18.02% | 17.85% | 17.23% | 16.85% | 17.05% | 15.21% | 12.55% |
| PeMSD04 | 30 | MAE | 31.75 | 30.23 | 29.52 | 29.20 | 28.78 | 24.02 | 21.87 | 18.05 |
| PeMSD04 | 30 | RMSE | 40.26 | 38.67 | 37.52 | 37.21 | 36.84 | 38.94 | 34.12 | 30.54 |
| PeMSD04 | 30 | MAPE | 23.56% | 21.23% | 20.32% | 19.85% | 18.02% | 16.83% | 15.24% | 12.51% |
| PeMSD04 | 60 | MAE | 35.65 | 32.35 | 31.25 | 30.26 | 28.35 | 26.12 | 23.02 | 18.23 |
| PeMSD04 | 60 | RMSE | 52.25 | 48.28 | 47.02 | 46.32 | 44.25 | 40.89 | 36.51 | 31.20 |
| PeMSD04 | 60 | MAPE | 26.69% | 23.78% | 21.02% | 20.23% | 18.20% | 17.23% | 16.95% | 12.32% |
| PeMSD08 | 15 | MAE | 19.06 | 19.07 | 19.08 | 19.21 | 19.12 | 15.26 | 14.94 | 12.01 |
| PeMSD08 | 15 | RMSE | 29.72 | 29.64 | 29.68 | 29.82 | 29.71 | 23.24 | 22.85 | 20.05 |
| PeMSD08 | 15 | MAPE | 13.10% | 12.98% | 13.02% | 13.45% | 13.07% | 10.19% | 9.91% | 7.21% |
| PeMSD08 | 30 | MAE | 23.12 | 21.51 | 21.05 | 20.85 | 20.13 | 15.52 | 15.04 | 12.30 |
| PeMSD08 | 30 | RMSE | 35.53 | 32.25 | 31.25 | 31.01 | 30.65 | 23.88 | 23.23 | 21.45 |
| PeMSD08 | 30 | MAPE | 16.21% | 14.62% | 13.71% | 13.69% | 13.54% | 9.76% | 9.60% | 7.69% |
| PeMSD08 | 60 | MAE | 29.21 | 24.25 | 23.91 | 23.85 | 22.35 | 17.43 | 16.91 | 12.84 |
| PeMSD08 | 60 | RMSE | 40.02 | 37.21 | 36.13 | 36.01 | 34.10 | 26.68 | 25.82 | 22.25 |
| PeMSD08 | 60 | MAPE | 18.02% | 15.03% | 14.35% | 14.24% | 14.01% | 11.74% | 10.95% | 7.83% |
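The results table reports MAE, RMSE, and MAPE for each model. As a reading aid, the following is a minimal sketch of how these three metrics are conventionally computed. It is not taken from the paper: the function names, the sample values, and the choice to skip zero-valued observations in MAPE are all illustrative assumptions, and the authors' evaluation code may differ (for example, in how missing or zero flows are masked).

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error: average magnitude of the prediction error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error: penalises large errors more than MAE."""
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))

def mape(y_true, y_pred, eps=1e-8):
    """Mean absolute percentage error, in percent.

    Assumption: observations with |value| <= eps are skipped to avoid
    division by zero; the paper does not state how it handles them.
    """
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if abs(t) > eps]
    if not pairs:
        raise ValueError("MAPE is undefined when all observations are zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in pairs) / len(pairs)

# Hypothetical flow values (vehicles per interval), for illustration only.
observed = [100.0, 200.0, 50.0, 80.0]
predicted = [110.0, 190.0, 55.0, 80.0]

print(f"MAE={mae(observed, predicted):.2f} "
      f"RMSE={rmse(observed, predicted):.2f} "
      f"MAPE={mape(observed, predicted):.2f}%")
```

Lower values are better for all three metrics. Because RMSE squares each error before averaging, a model can rank differently on RMSE than on MAE when it makes a few large errors.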


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chang, Z.; Liu, C.; Jia, J. STA-GCN: Spatial-Temporal Self-Attention Graph Convolutional Networks for Traffic-Flow Prediction. Appl. Sci. 2023, 13, 6796. https://doi.org/10.3390/app13116796
