Instance Segmentation and Number Counting of Grape Berry Images Based on Deep Learning

Abstract

1. Introduction

1.1. Related Work

1.2. Contribution

- The detection-and-segmentation-based instance segmentation method segments grape images more effectively in both industrial and natural scenes.

- An improved linear weighting post-processing method solves the problem of missed berry detections in whole grape clusters.

- The improved model can segment grape images with only a few annotations.

2. Materials and Methods

2.1. Sample Collection

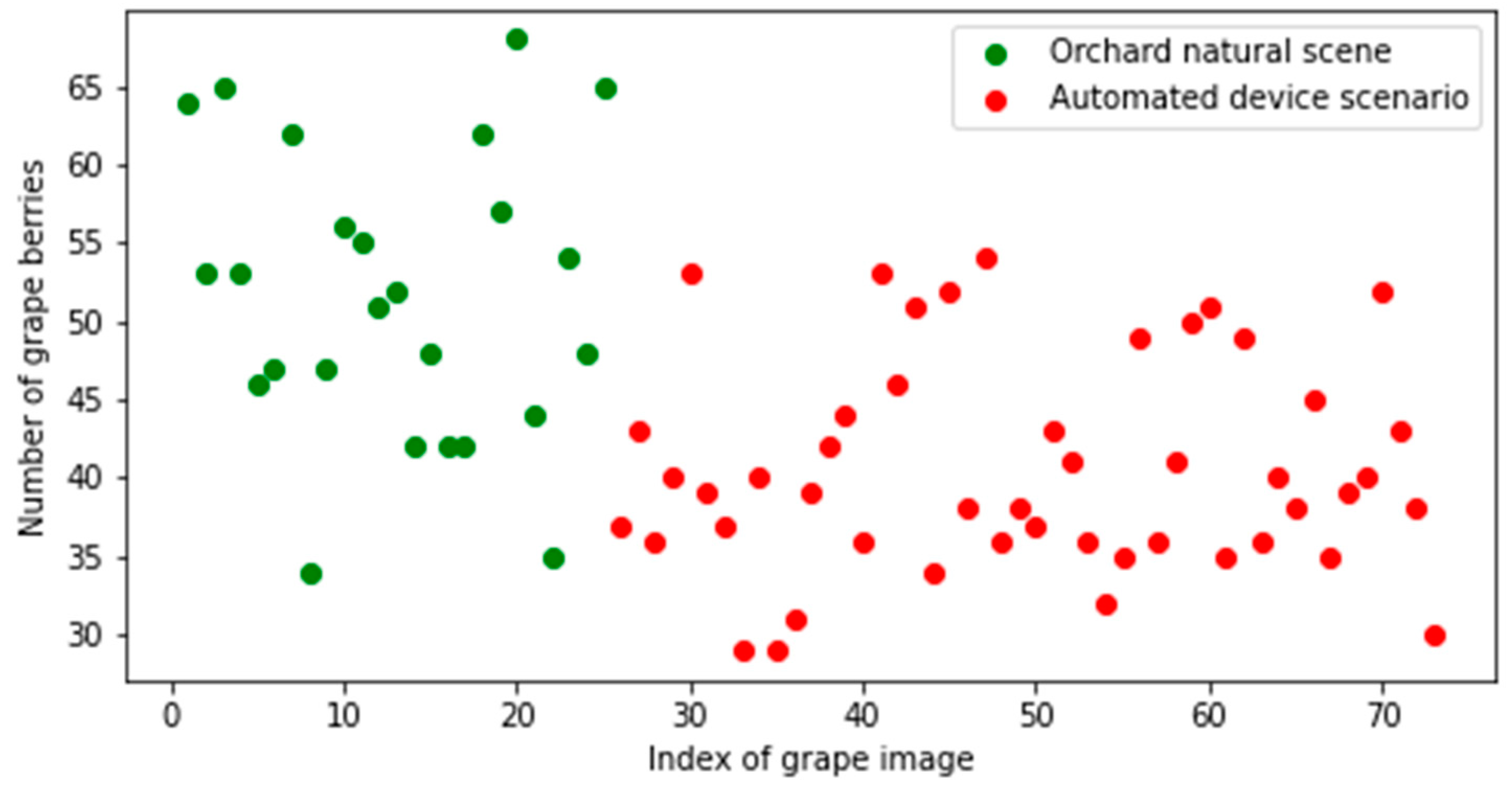

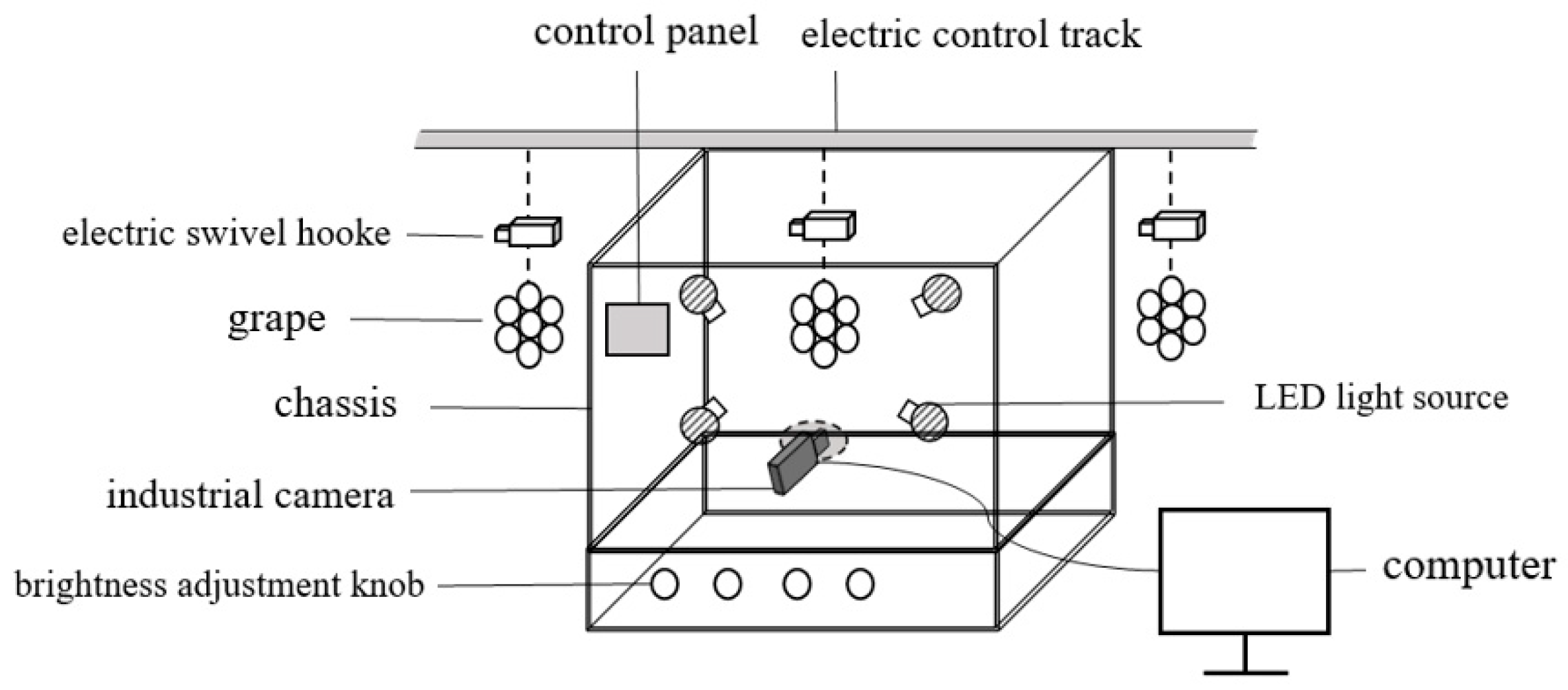

2.1.1. Grape Image Data in the Scene of Automated Equipment

2.1.2. Grape Image Data in Natural Scenes of Orchards

2.2. Image Preprocessing

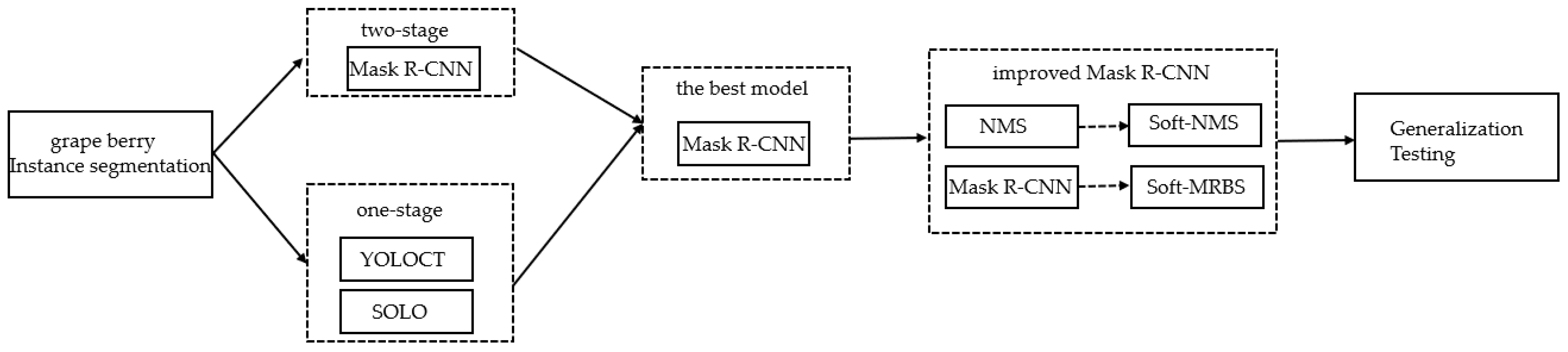

2.3. Instance Segmentation Model

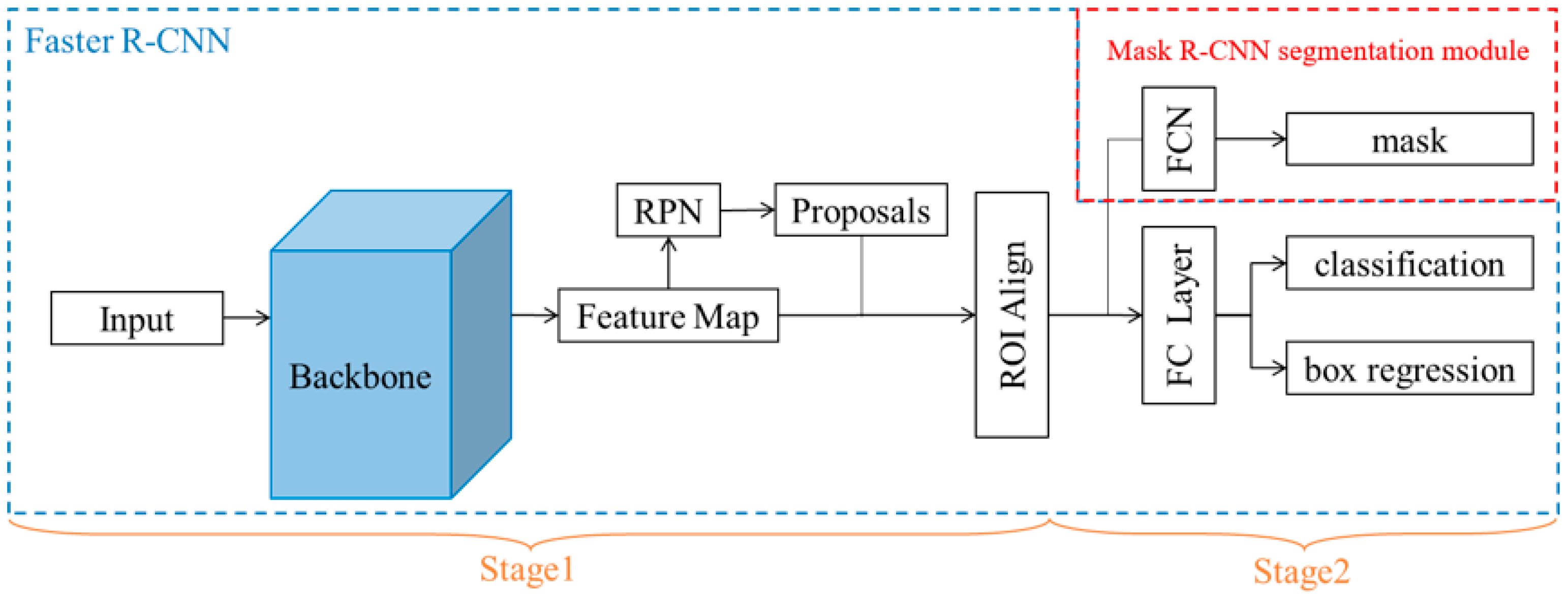

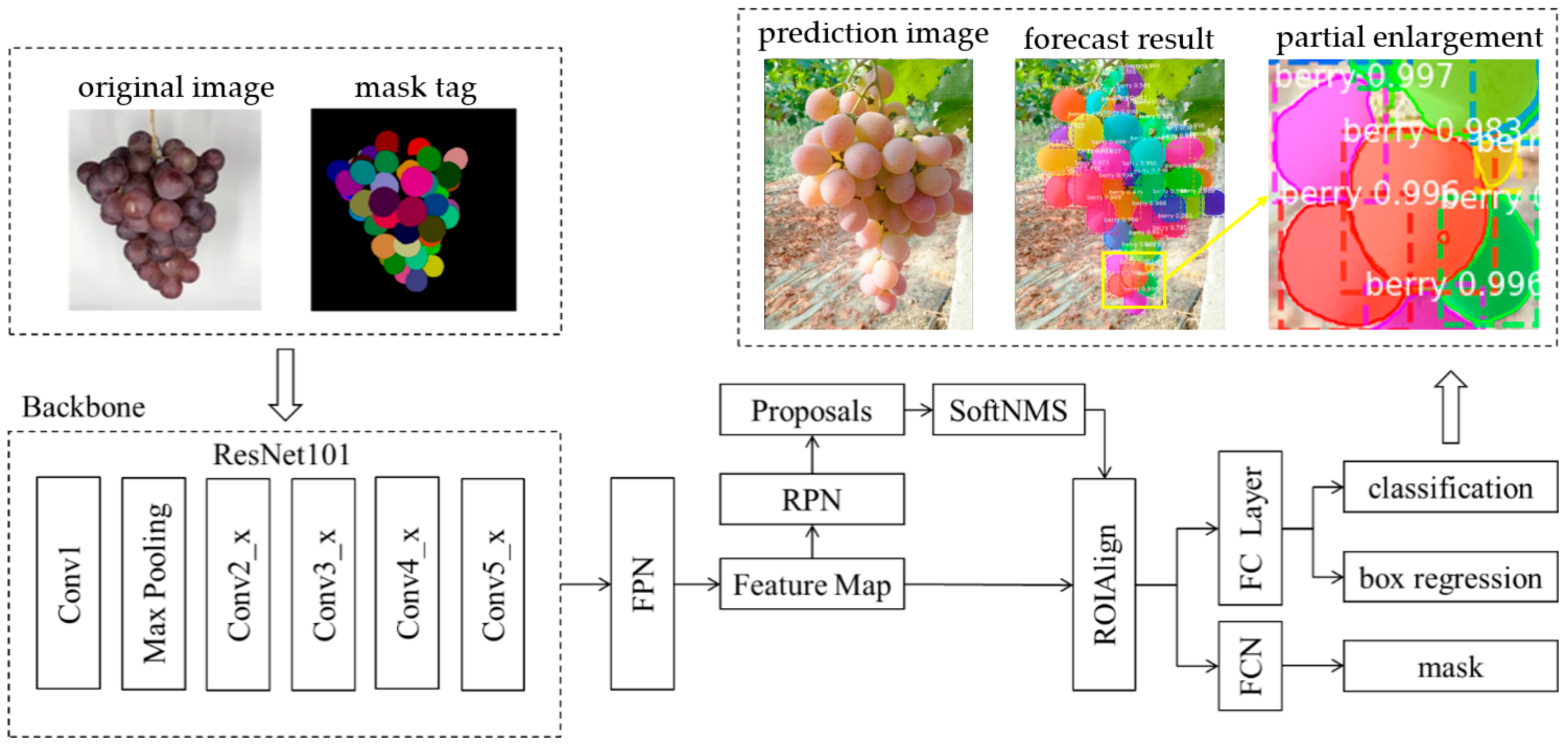

2.3.1. Mask R-CNN Model

2.3.2. YOLACT Model

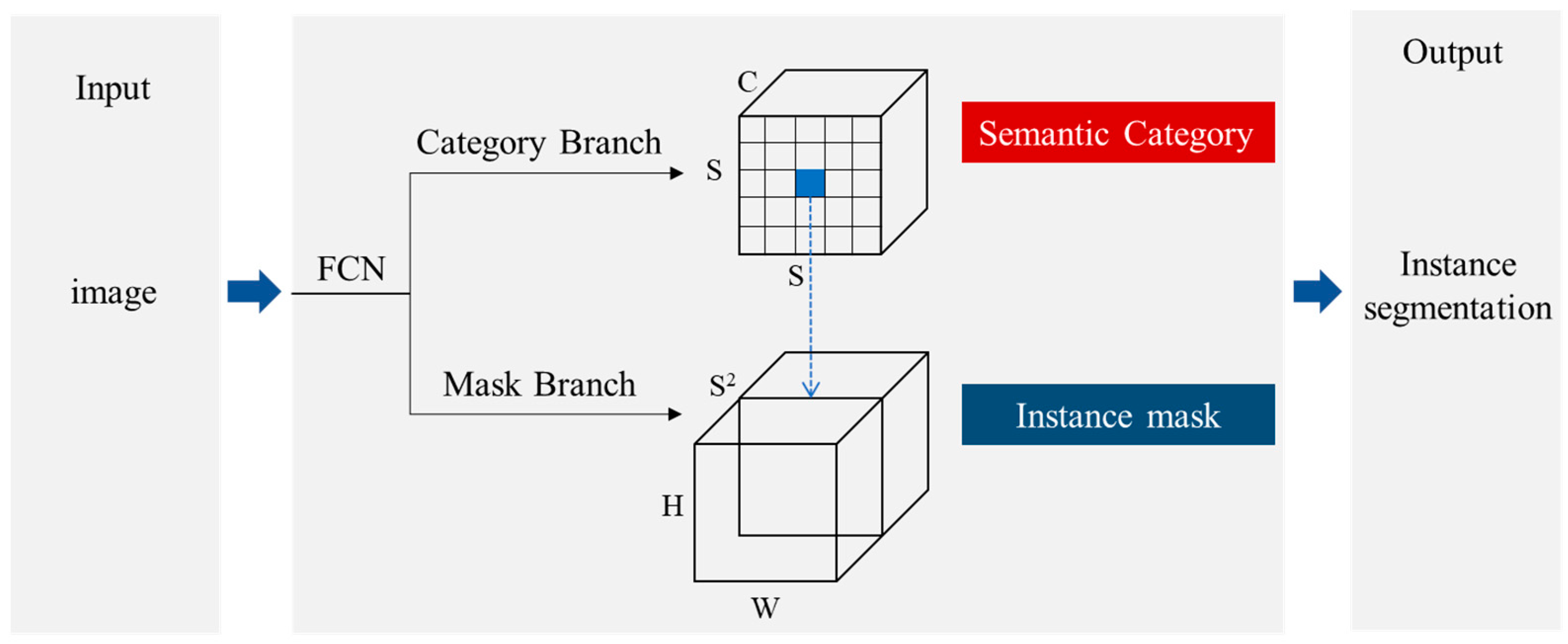

2.3.3. SOLO Model

2.4. Improved Algorithm

2.4.1. Non-Maximum Suppression Algorithm

2.4.2. Soft-NMS

| Algorithm 1: Soft-NMS |
|---|
| Input: candidate box set B = {b1, …, bN}; detection score set S = {s1, …, sN}; IoU threshold Nt |
| Output: candidate box set D and detection score set S after processing by the algorithm |
| 1: D = {} |
| 2: while B ≠ empty do |
| 3: m = argmax S |
| 4: M = bm |
| 5: D = D ∪ M, B = B − M |
| 6: for bi in B do |
| 7: si = si · f(iou(M, bi)) |
| 8: end |
| 9: end |
| 10: return D, S |
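The steps of Algorithm 1 can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: it assumes axis-aligned boxes given as `(x1, y1, x2, y2)`, uses the linear weighting form of `f` (scores are multiplied by `1 - IoU` once the overlap reaches the threshold `Nt`), and adds a hypothetical `score_floor` parameter that discards boxes whose decayed score becomes negligible. The names `soft_nms`, `linear_weight`, and `score_floor` are chosen here for illustration only.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed layout


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def linear_weight(overlap: float, nt: float) -> float:
    """Linear weighting f: keep the score below Nt, otherwise scale by 1 - IoU."""
    return 1.0 - overlap if overlap >= nt else 1.0


def soft_nms(
    boxes: Sequence[Box],
    scores: Sequence[float],
    nt: float = 0.3,
    score_floor: float = 0.001,  # assumption: not part of Algorithm 1
) -> Tuple[List[Box], List[float]]:
    remaining = list(zip(boxes, scores))  # B and S, paired
    kept_boxes: List[Box] = []            # D
    kept_scores: List[float] = []
    while remaining:
        # Steps 3-5: move the highest-scoring box M from B to D.
        m = max(range(len(remaining)), key=lambda i: remaining[i][1])
        best_box, best_score = remaining.pop(m)
        kept_boxes.append(best_box)
        kept_scores.append(best_score)
        # Steps 6-8: decay the scores of the boxes that overlap M.
        remaining = [
            (b, s * linear_weight(iou(best_box, b), nt)) for b, s in remaining
        ]
        # Extra step (assumption): drop boxes whose score has decayed away.
        remaining = [(b, s) for b, s in remaining if s >= score_floor]
    return kept_boxes, kept_scores
```

In this sketch a heavily overlapped box is kept with a reduced score instead of being deleted outright, which is the behaviour that distinguishes Soft-NMS from classic NMS; replacing `linear_weight` with a function that returns 0 at or above `Nt` would recover the hard-suppression variant.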

2.4.3. Soft-MRBS Model

3. Results and Discussion

3.1. Experimental Configuration

3.2. Evaluation Indicators

- 1.

- Mean Intersection over Union (mIoU):

- 2.

- Average Precision (AP):

- 3.

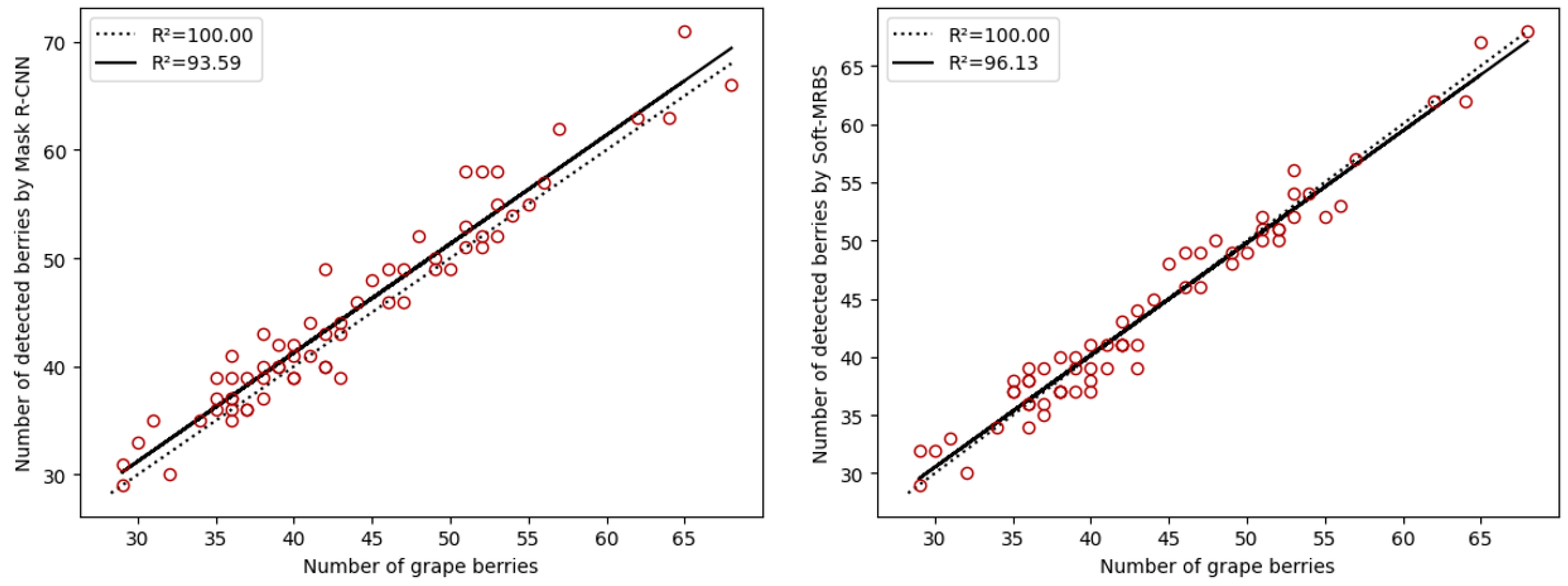

- Coefficient of determination (R2):
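As a rough illustration of two of these indicators, the sketch below computes the IoU between binary masks, its mean over a set of mask pairs, and the coefficient of determination between manual and predicted berry counts. It follows the standard textbook definitions and is only an assumption about how the indicators are evaluated here; the function names and the nested-list mask layout are hypothetical. AP is omitted because it additionally requires ranking detections by confidence and integrating a precision-recall curve.

```python
from typing import List, Sequence, Tuple

Mask = Sequence[Sequence[int]]  # binary mask as rows of 0/1 (assumed layout)


def mask_iou(pred: Mask, truth: Mask) -> float:
    """IoU = |pred AND truth| / |pred OR truth| for two same-sized masks."""
    inter = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    return inter / union if union else 0.0


def mean_iou(pairs: List[Tuple[Mask, Mask]]) -> float:
    """Average IoU over (predicted, ground-truth) mask pairs."""
    return sum(mask_iou(p, t) for p, t in pairs) / len(pairs)


def r_squared(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """R^2 = 1 - SS_res / SS_tot, comparing predicted counts with actual counts."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot
```

For example, `r_squared([10, 20, 30], [12, 18, 30])` compares three manually counted clusters with hypothetical predicted counts; a value close to 1 indicates that the predicted berry counts track the manual counts closely.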

3.3. Model Training

3.4. Experimental Results

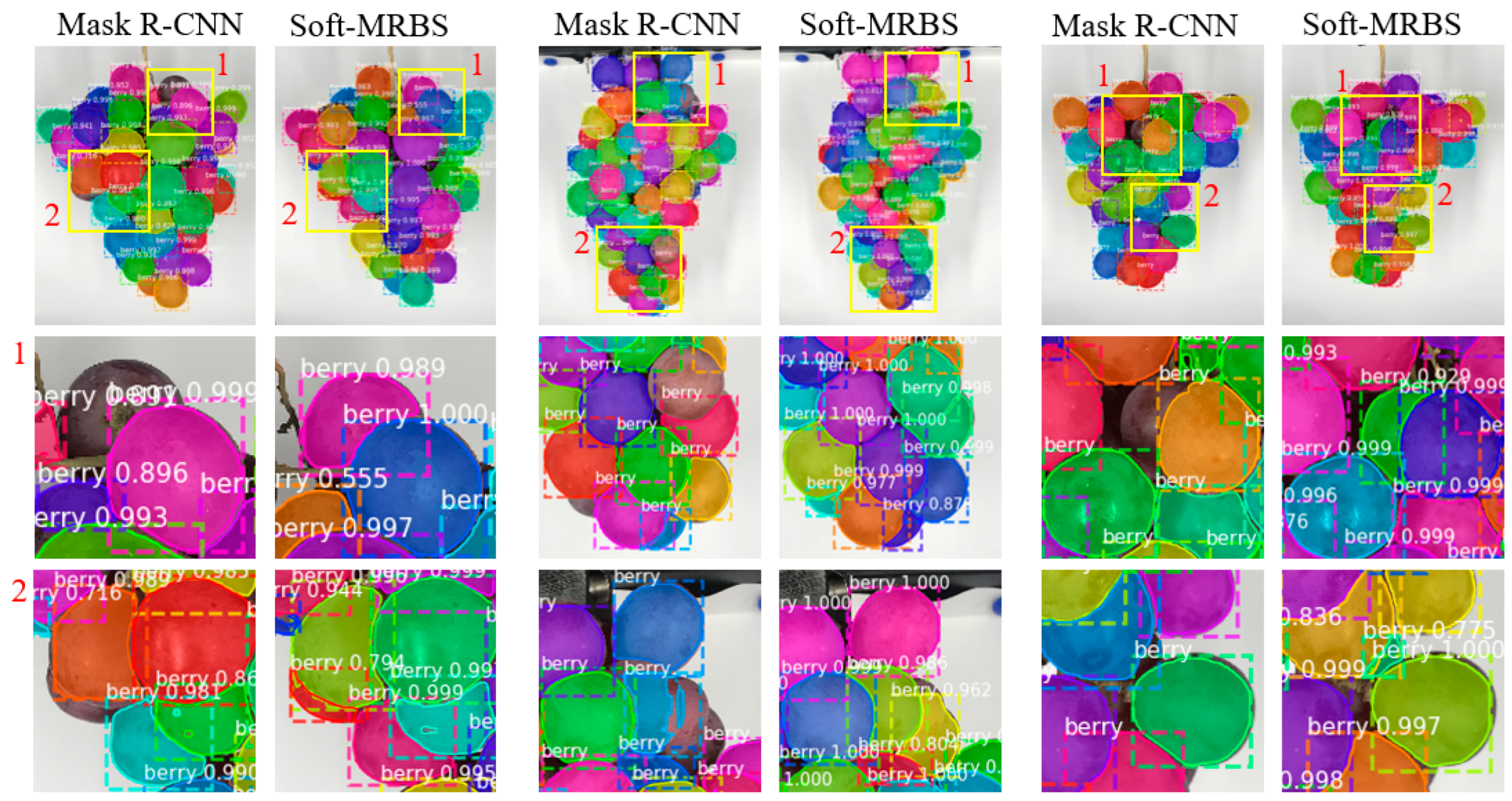

3.4.1. Preliminary Experimental Results

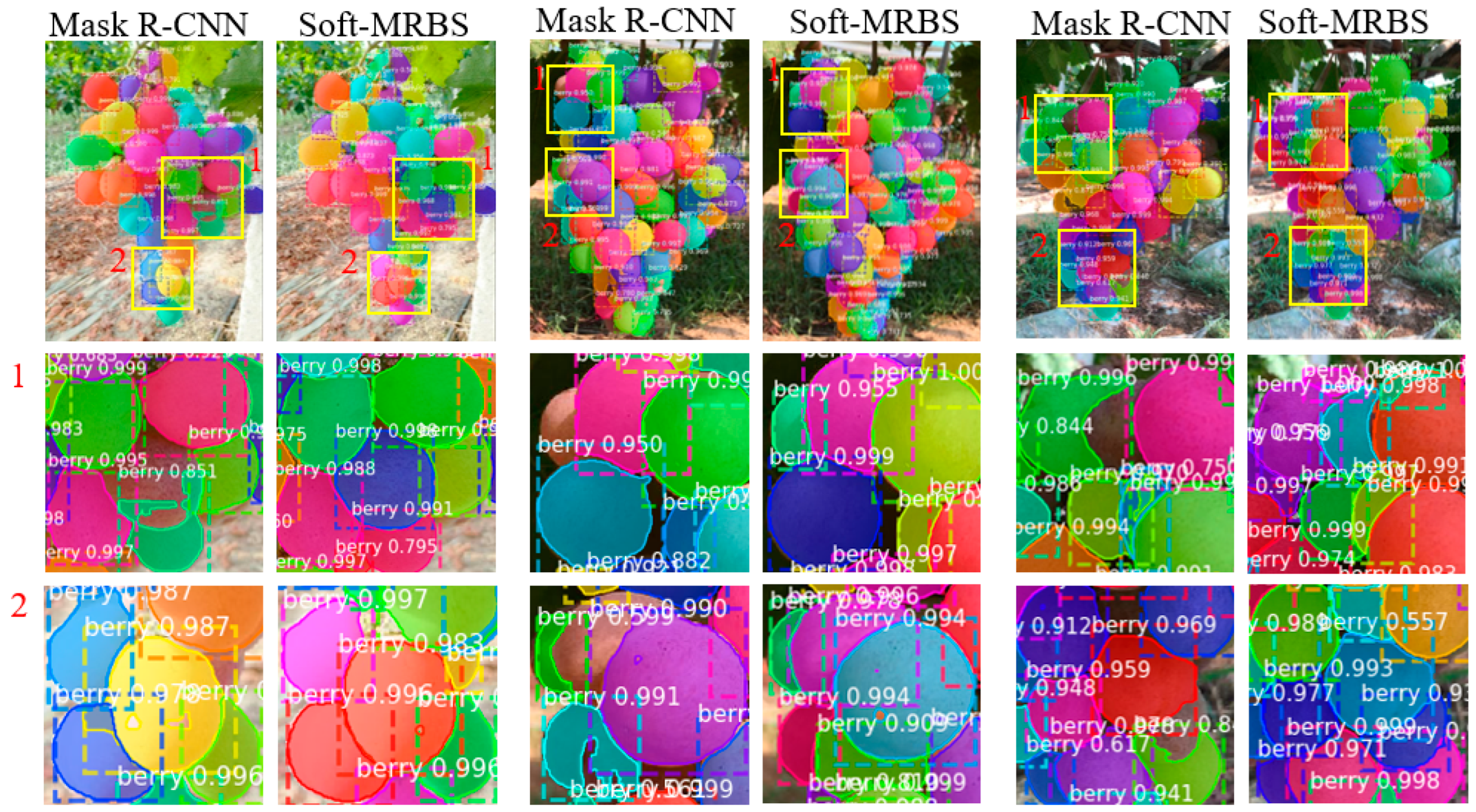

3.4.2. Improving the Experimental Results

3.4.3. Red Globe Grape Berries Number Count

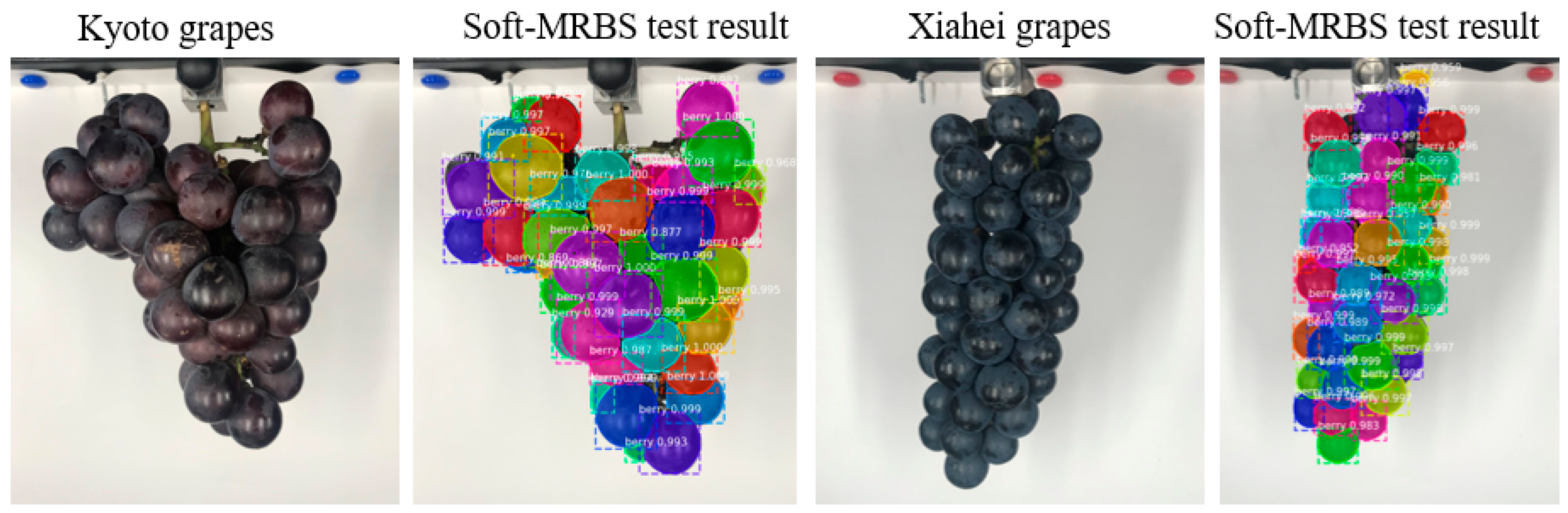

3.5. Generalization Experiments

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | Optimizer | Learning Rate | Weight Decay | Momentum | Batch Size |
|---|---|---|---|---|---|
| YOLACT | SGD | 1 × 10⁻² | 5 × 10⁻⁴ | 0.9 | 2 |
| SOLO | SGD | 1 × 10⁻² | 1 × 10⁻⁴ | 0.9 | 2 |
| Mask R-CNN | SGD | 1 × 10⁻³ | 1 × 10⁻⁴ | 0.9 | 2 |

| Model | mIoU (%) | AP0.50 (%) | AP0.75 (%) | mAP (%) |
|---|---|---|---|---|
| YOLACT | 82.91 | 85.14 | 79.08 | 66.59 |
| SOLO | 83.47 | 86.69 | 80.27 | 67.25 |
| Mask R-CNN | 85.98 | 88.08 | 82.04 | 69.93 |

| Scenario | Model | mIoU (%) | AP0.50 (%) | AP0.75 (%) | mAP (%) |
|---|---|---|---|---|---|
| Automated device scenario | Mask R-CNN | 88.12 | 88.85 | 83.09 | 71.10 |
| Automated device scenario | Soft-MRBS | 90.20 | 90.91 | 86.53 | 79.62 |
| Orchard natural scene | Mask R-CNN | 85.25 | 84.89 | 78.12 | 68.29 |
| Orchard natural scene | Soft-MRBS | 86.24 | 84.95 | 78.98 | 72.35 |
| Total | Mask R-CNN | 85.98 | 88.08 | 82.04 | 69.93 |
| Total | Soft-MRBS | 89.53 | 90.06 | 84.76 | 74.23 |

| Model | mIoU (%) | mAP (%) |
|---|---|---|
| Soft-MRBS | 87.24 | 69.57 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Li, X.; Jia, M.; Li, J.; Hu, T.; Luo, J. Instance Segmentation and Number Counting of Grape Berry Images Based on Deep Learning. Appl. Sci. 2023, 13, 6751. https://doi.org/10.3390/app13116751
