Object-Oriented Remote Sensing Image Change Detection Based on Color Co-Occurrence Matrix

Abstract

Featured Application

1. Introduction

- (1) The proposed approach departs from the conventional way of describing texture in multi-channel images, in which texture features are extracted separately from each single-band gray image and then concatenated. Instead, the color co-occurrence matrix (CCM) is used to capture inter-channel correlation and to extract color texture features directly from the multi-channel image for change detection.

- (2) A cumulative backward search strategy (CBSS) is proposed to reduce the effect that unstable feature rankings from a single training run have on feature selection, which helps to find more representative and effective feature subsets.

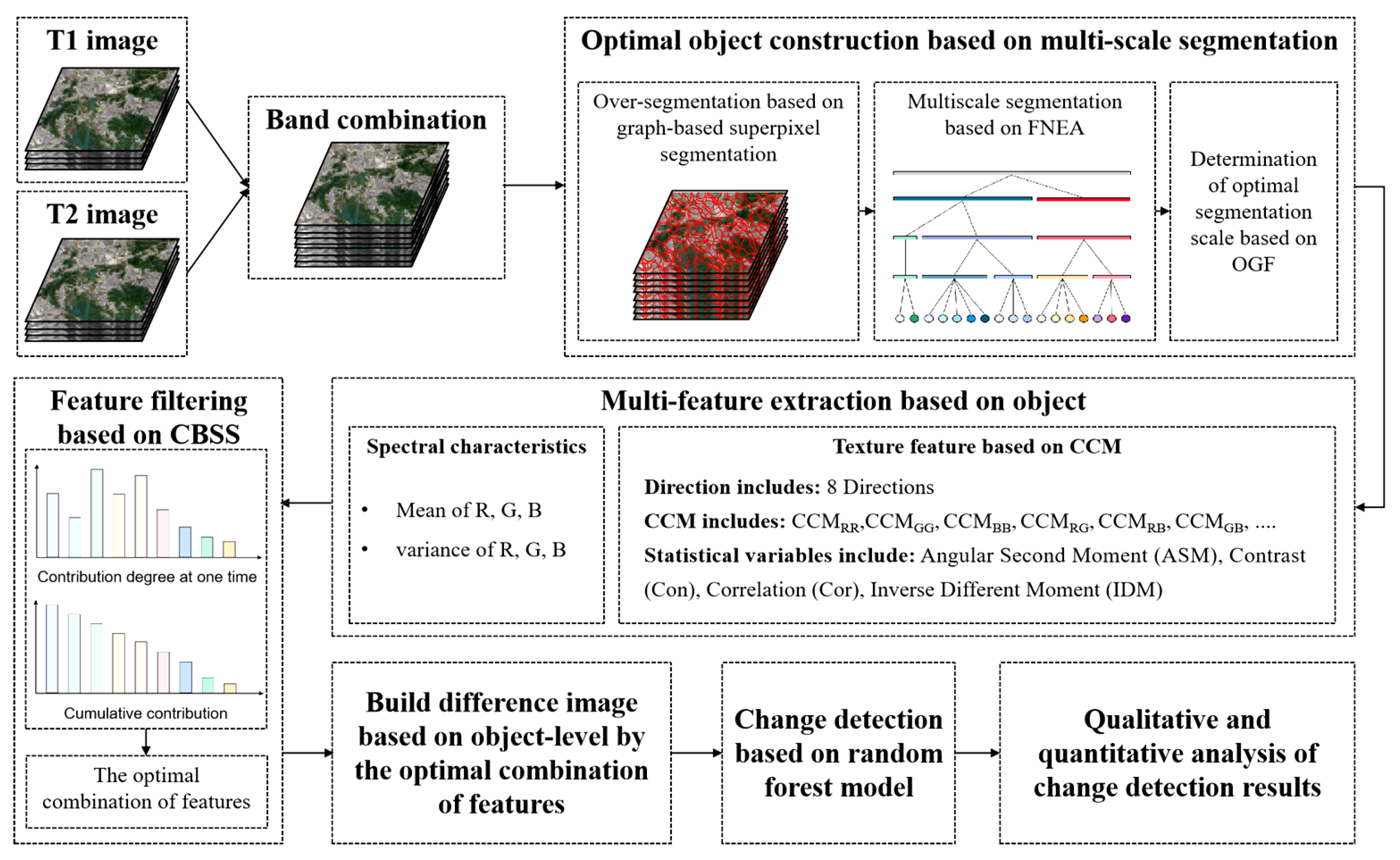

2. Methods

- (a) Optimal object construction based on multi-scale segmentation: To keep the objects spatially consistent across the two acquisition dates, the bands of the two remote sensing images are combined before segmentation. Starting from an over-segmentation produced by graph-based superpixel segmentation, the fractal net evolution approach (FNEA) is used to build multi-scale objects, and the optimal segmentation scale is then chosen as the one that maximizes the OGF, which yields the optimal objects.

- (b) Object-based multi-feature extraction: Two types of features are used, spectral and texture. The spectral features are the mean and variance of each band. The texture features are extracted with the CCM for each pairwise combination of bands, in 8 directions and with 4 statistics: angular second moment (ASM), contrast (Con), correlation (Cor), and inverse difference moment (IDM).

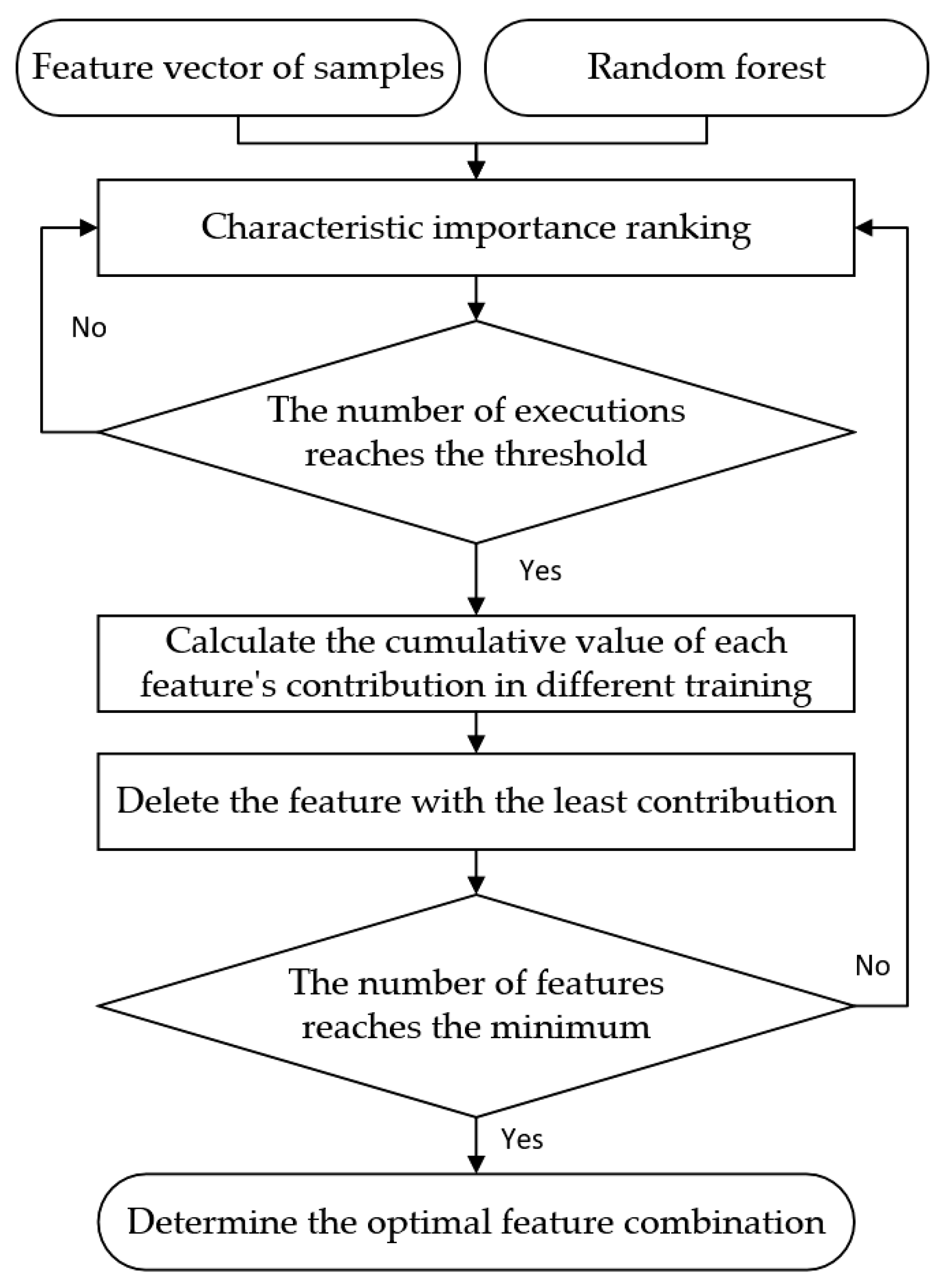

- (c) Feature filtering based on CBSS: The contribution (importance) of each feature is estimated from a single training run of a random forest model. The contributions accumulated over multiple training rounds serve as the criterion for deleting the least important feature, one at a time, until a given minimum number of features is reached. The feature combination with the highest accuracy and the fewest features is then selected as the optimal feature combination.

- (d) Object-oriented change detection based on the random forest: The difference image built from the optimal feature set retained after filtering is fed into the random forest model for change detection, and the detection results are evaluated qualitatively and quantitatively.

2.1. Optimal Object Construction Based on Multi-Scale Segmentation

2.2. Object-Oriented Multi-Feature Extraction

2.2.1. Spectral Features
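
Step (b) of the workflow names the per-band mean and variance as the spectral features of each object. The following is a minimal sketch of that computation, not the authors' implementation: the function name `object_spectral_features`, the `(H, W, B)` array layout, and the requirement that object ids run from 0 to n-1 are all assumptions made for illustration.

```python
import numpy as np

def object_spectral_features(image, labels):
    """Return per-object band means and variances.

    image  : (H, W, B) array of stacked bands (layout is an assumption)
    labels : (H, W) integer array, object id per pixel, ids 0..n-1
    result : (n, 2 * B) array laid out as [mean_1..mean_B, var_1..var_B]
    """
    n_bands = image.shape[2]
    ids = labels.ravel()
    n_objects = int(ids.max()) + 1
    # Pixel count per object; unused ids are clamped to 1 to avoid dividing by 0
    counts = np.maximum(np.bincount(ids, minlength=n_objects), 1)
    feats = np.zeros((n_objects, 2 * n_bands))
    for b in range(n_bands):
        band = image[..., b].ravel().astype(float)
        mean = np.bincount(ids, weights=band, minlength=n_objects) / counts
        mean_sq = np.bincount(ids, weights=band ** 2, minlength=n_objects) / counts
        feats[:, b] = mean
        # Population variance E[x^2] - E[x]^2, clipped against round-off
        feats[:, n_bands + b] = np.clip(mean_sq - mean ** 2, 0.0, None)
    return feats
```

Accumulating sums with `np.bincount` avoids looping over objects, which matters when a segmentation produces many thousands of them.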

2.2.2. Texture Features Based on Color Co-Occurrence Matrix
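
Step (b) of the workflow describes CCM texture features computed for each pairwise combination of bands, in 8 directions, with 4 statistics. The sketch below shows one way such features could be computed. It rests on several assumptions that the text does not confirm: bands are already quantized to integers in `[0, levels)`, the pixel distance is 1, only pairs of distinct bands are used, the statistics follow the usual Haralick-style definitions, and the matrix is built over a whole array rather than being restricted to one object's pixels. All function names are hypothetical.

```python
import itertools
import numpy as np

# Eight unit offsets (dy, dx), one per 45-degree step; distance 1 is an assumption
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]

def ccm(band_u, band_v, offset, levels):
    """Normalized co-occurrence matrix between two quantized bands.

    Entry (i, j) is the share of pixel pairs where band_u has level i at
    (y, x) and band_v has level j at (y + dy, x + dx).
    """
    dy, dx = offset
    h, w = band_u.shape
    y0, y1 = max(0, -dy), min(h, h - dy)
    x0, x1 = max(0, -dx), min(w, w - dx)
    u = band_u[y0:y1, x0:x1].ravel()
    v = band_v[y0 + dy:y1 + dy, x0 + dx:x1 + dx].ravel()
    counts = np.zeros((levels, levels))
    np.add.at(counts, (u, v), 1)  # unbuffered add handles repeated pairs
    return counts / counts.sum()

def ccm_statistics(p):
    """ASM, contrast, correlation and IDM of a normalized matrix p."""
    i, j = np.indices(p.shape)
    asm = np.sum(p ** 2)
    con = np.sum((i - j) ** 2 * p)
    idm = np.sum(p / (1.0 + (i - j) ** 2))
    mu_i, mu_j = np.sum(i * p), np.sum(j * p)
    sd_i = np.sqrt(np.sum((i - mu_i) ** 2 * p))
    sd_j = np.sqrt(np.sum((j - mu_j) ** 2 * p))
    # Correlation is undefined for a constant band; 0 is used here by choice
    if sd_i * sd_j > 0:
        cor = np.sum((i - mu_i) * (j - mu_j) * p) / (sd_i * sd_j)
    else:
        cor = 0.0
    return asm, con, cor, idm

def ccm_features(image, levels):
    """Concatenate the 4 statistics over all band pairs and 8 directions."""
    n_bands = image.shape[2]
    feats = []
    for a, b in itertools.combinations(range(n_bands), 2):
        for offset in OFFSETS:
            p = ccm(image[..., a], image[..., b], offset, levels)
            feats.extend(ccm_statistics(p))
    return np.array(feats)
```

Under these assumptions a three-band image gives 3 band pairs × 8 directions × 4 statistics = 96 texture features. Unlike a single-band gray-level matrix, the cross-band matrix is generally not symmetric, so opposite directions carry different information.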

2.3. Feature Filtering Based on the Cumulative Backward Search Strategy
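
Step (c) of the workflow outlines the CBSS loop: accumulate random forest feature contributions over several training rounds, drop the least important feature, repeat until a minimum feature count is reached, then keep the most accurate and smallest subset. The sketch below follows that outline with scikit-learn. Two choices are assumptions rather than details from the text: the contribution is taken to be the impurity-based `feature_importances_`, and accuracy is estimated with the out-of-bag score. The function name `cbss` and its parameters are hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

def cbss(X, y, min_features=1, n_rounds=5, n_trees=50):
    """Cumulative backward search over the columns of X.

    Returns (best_subset, best_accuracy, history), where history holds one
    (subset, mean out-of-bag accuracy) pair per elimination step.
    """
    remaining = list(range(X.shape[1]))
    history = []
    while True:
        cum_importance = np.zeros(len(remaining))
        acc = 0.0
        # Several training rounds with different seeds; importances are summed
        # so that one unstable ranking does not decide which feature is dropped
        for seed in range(n_rounds):
            rf = RandomForestClassifier(
                n_estimators=n_trees, oob_score=True, random_state=seed
            )
            rf.fit(X[:, remaining], y)
            cum_importance += rf.feature_importances_
            acc += rf.oob_score_
        history.append((list(remaining), acc / n_rounds))
        if len(remaining) <= min_features:
            break
        # Delete the feature with the smallest cumulative importance
        remaining.pop(int(np.argmin(cum_importance)))
    best_acc = max(a for _, a in history)
    # Among the subsets that reach the best accuracy, keep the smallest one
    best = min((s for s, a in history if a == best_acc), key=len)
    return best, best_acc, history
```

A single backward search corresponds to `n_rounds=1`. Raising `n_rounds` trades training time for a more stable elimination order.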

2.4. Change Detection Based on Random Forest
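
Step (d) of the workflow feeds a difference image, built from the selected features, into a random forest that labels each object as changed or unchanged. The sketch below illustrates that idea under stated assumptions: the difference is the plain per-object subtraction of the two dates' feature vectors, labels are 1 for changed and 0 for unchanged, and the function name `detect_changes` and its parameters are hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

def detect_changes(feat_t1, feat_t2, train_idx, train_labels, n_trees=100, seed=0):
    """Classify every object as changed (1) or unchanged (0).

    feat_t1, feat_t2 : (n_objects, n_features) features at the two dates
    train_idx        : indices of the objects with known labels
    train_labels     : labels of those objects
    """
    # Difference features; plain subtraction is an assumption of this sketch
    diff = feat_t2 - feat_t1
    rf = RandomForestClassifier(n_estimators=n_trees, random_state=seed)
    rf.fit(diff[train_idx], train_labels)
    return rf.predict(diff)

# Synthetic demonstration: the first 100 objects change in feature 0
rng = np.random.default_rng(0)
truth = (np.arange(200) < 100).astype(int)
f1 = rng.normal(size=(200, 4))
f2 = f1 + rng.normal(scale=0.1, size=(200, 4))
f2[:, 0] += 3.0 * truth
train_idx = np.arange(0, 200, 4)  # every fourth object is a training sample
pred = detect_changes(f1, f2, train_idx, truth[train_idx])
accuracy = float(np.mean(pred == truth))
```

Because both dates share one set of objects, the subtraction is well defined object by object, which is why the segmentation is built on the combined bands of the two images.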

3. Experimental Results and Discussion

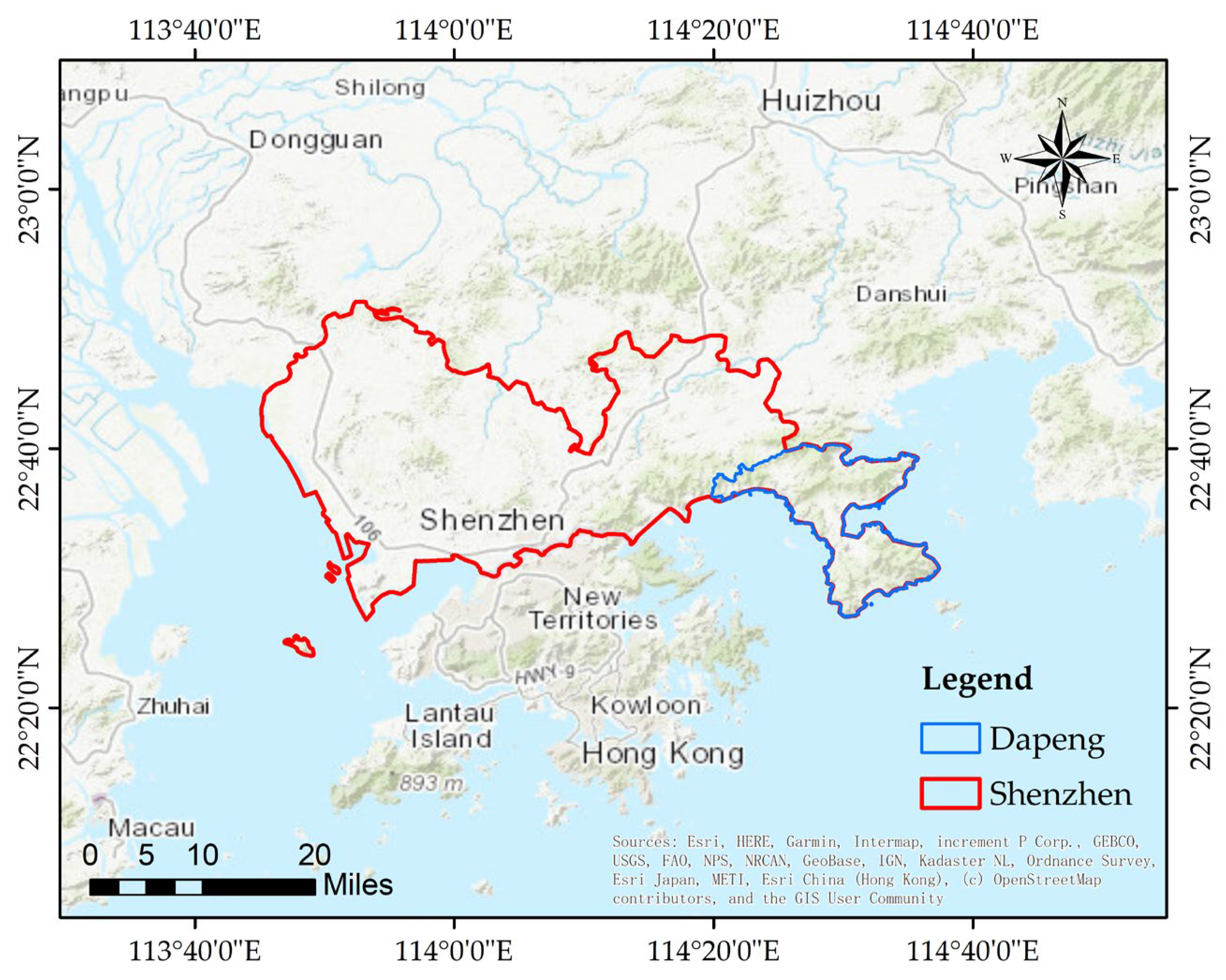

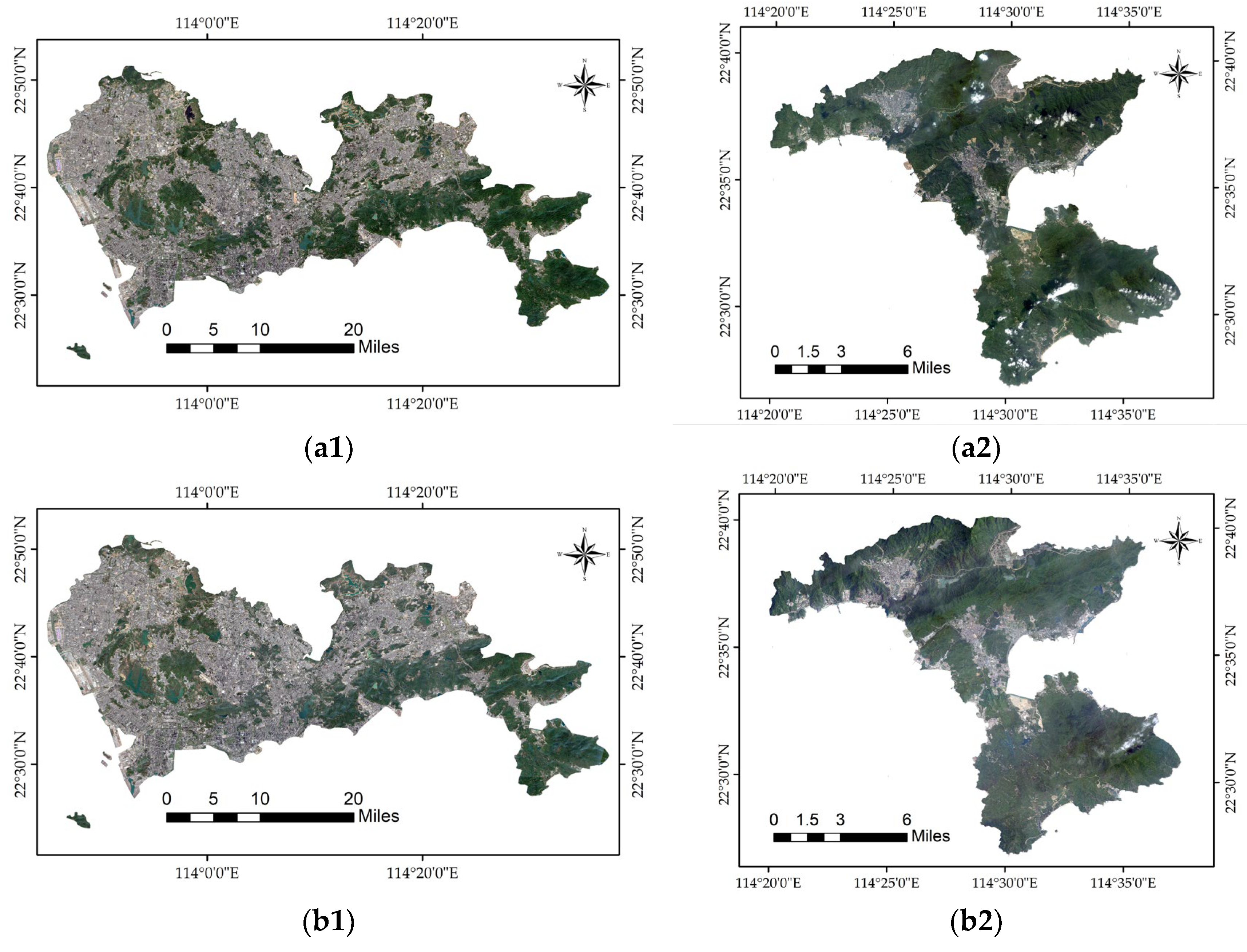

3.1. Study Area and Experimental Data

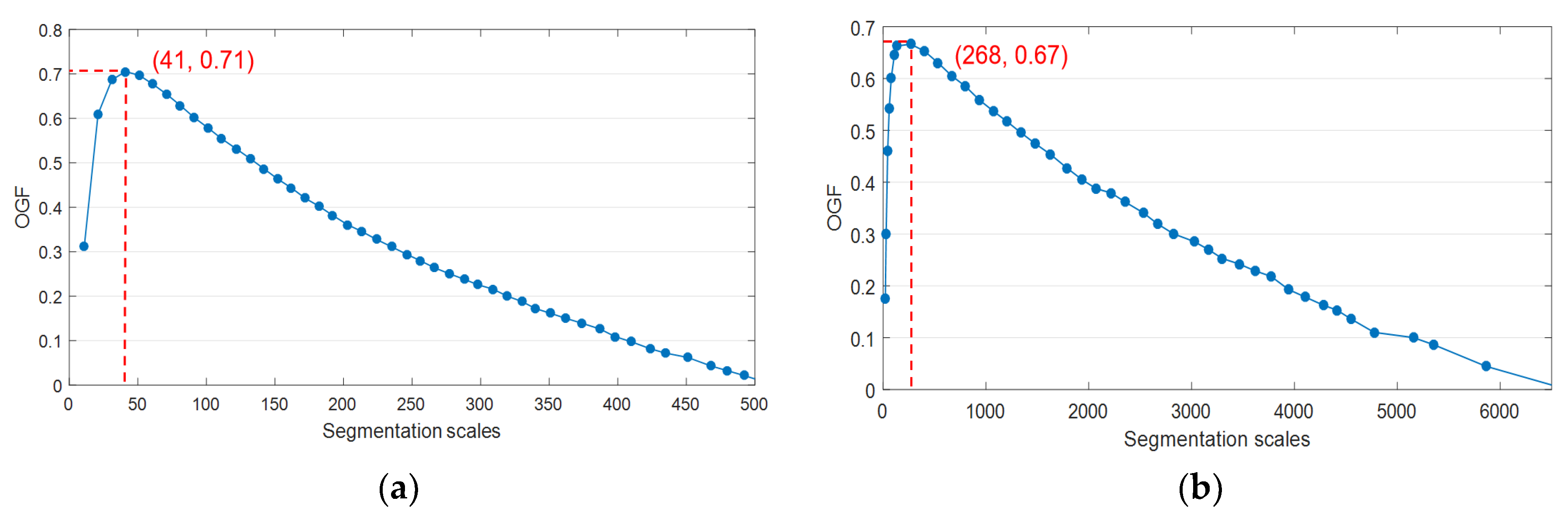

3.2. Determination of the Optimal Segmentation Scale

3.3. Optimal Feature Combination Filtering

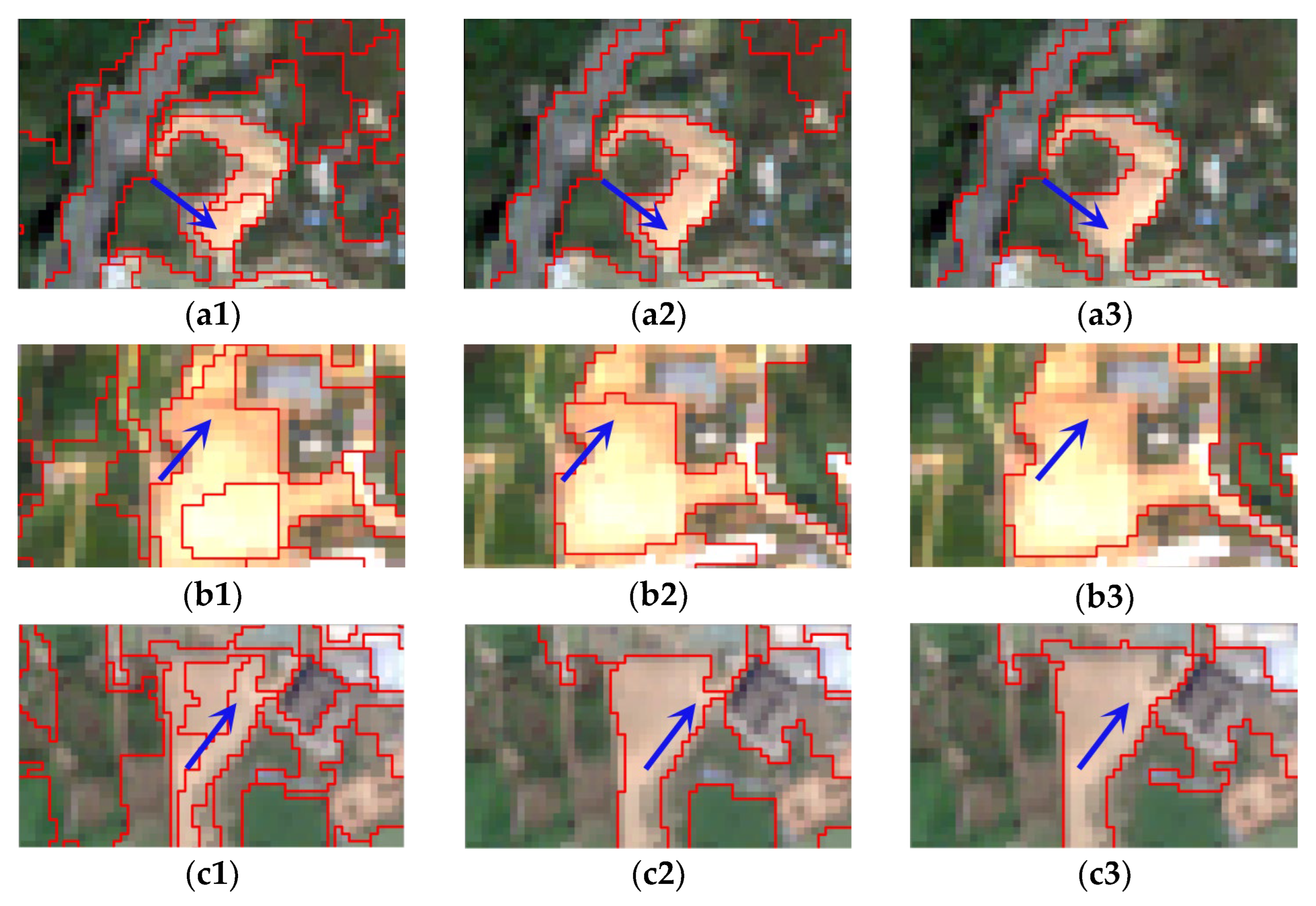

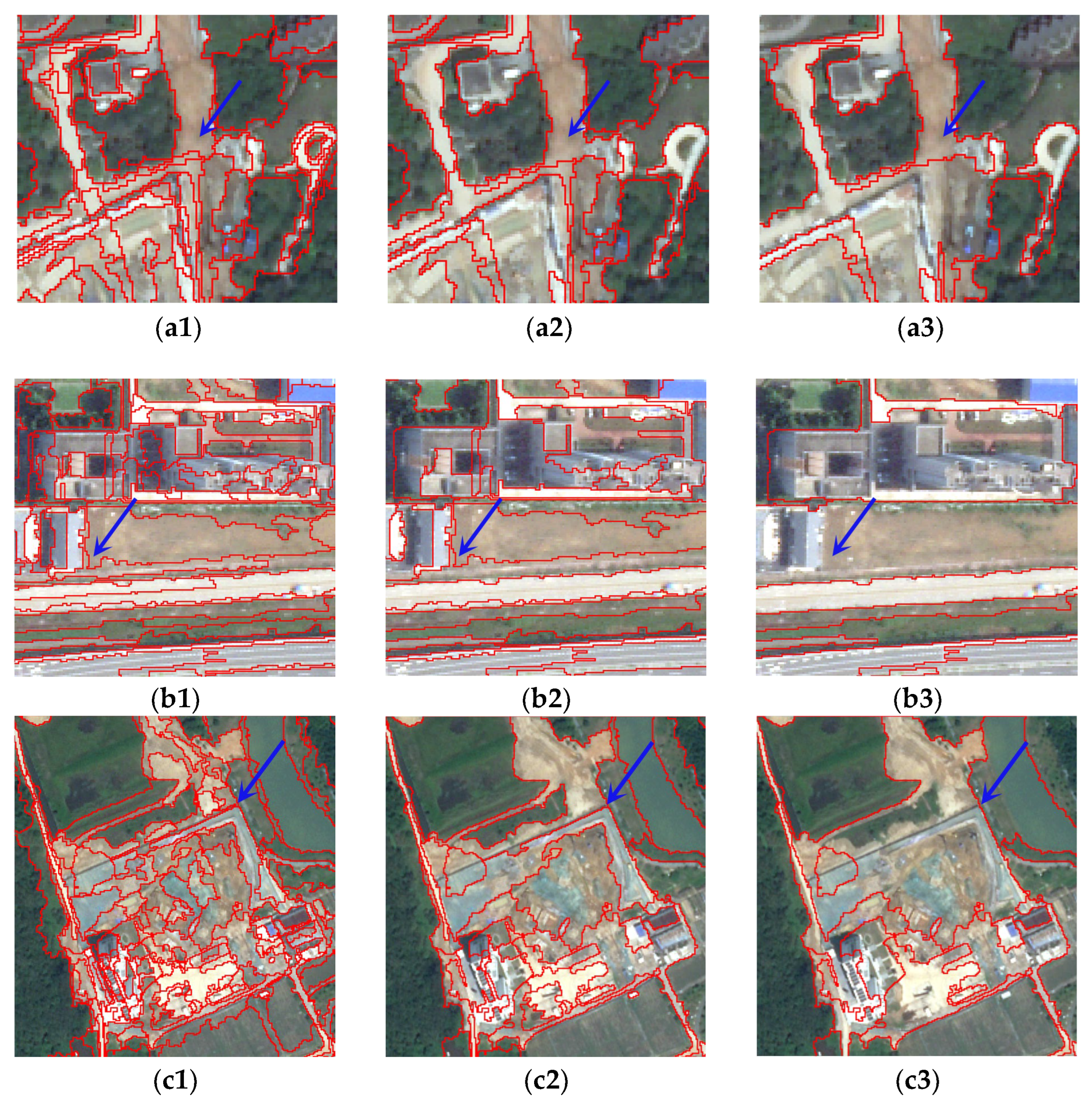

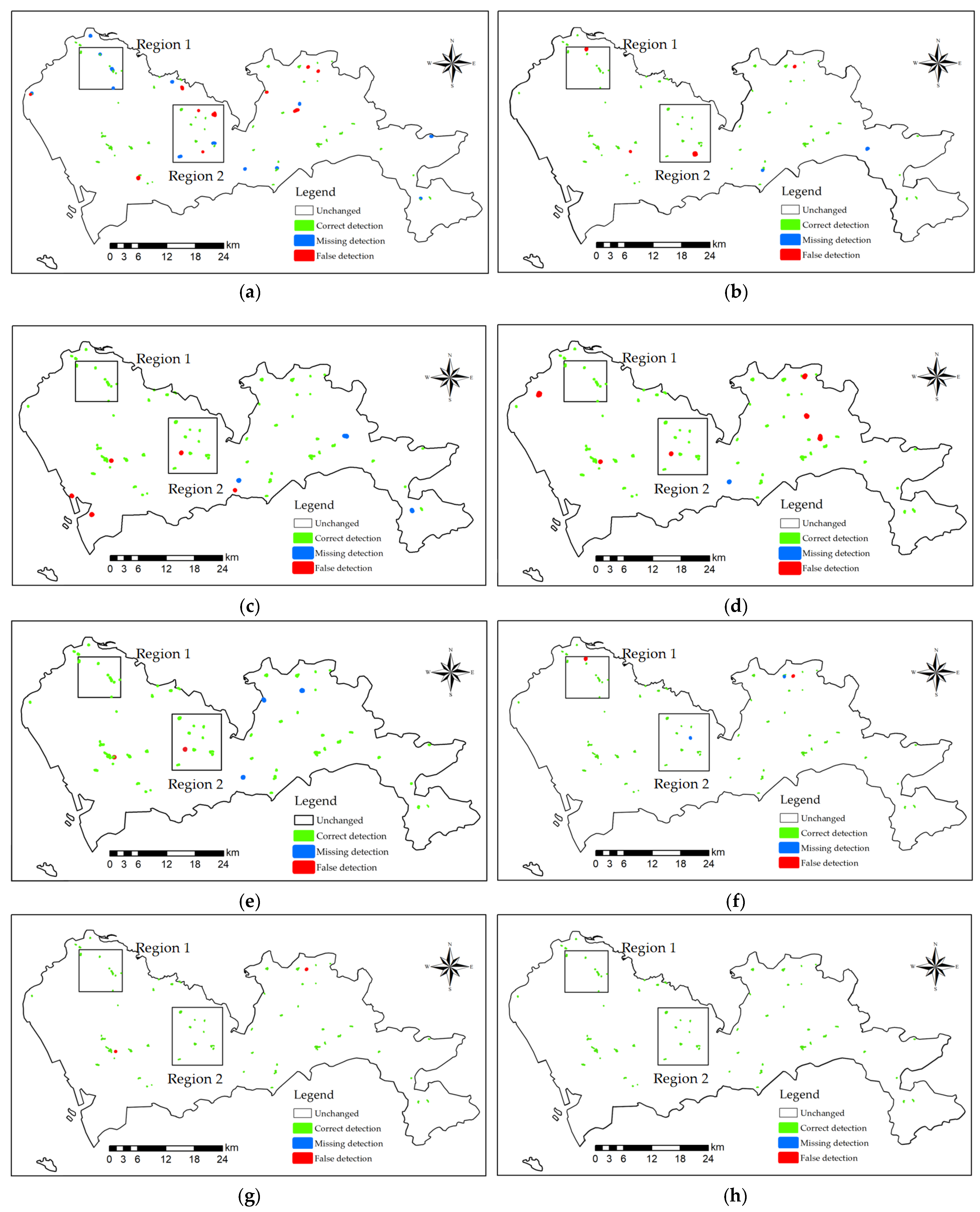

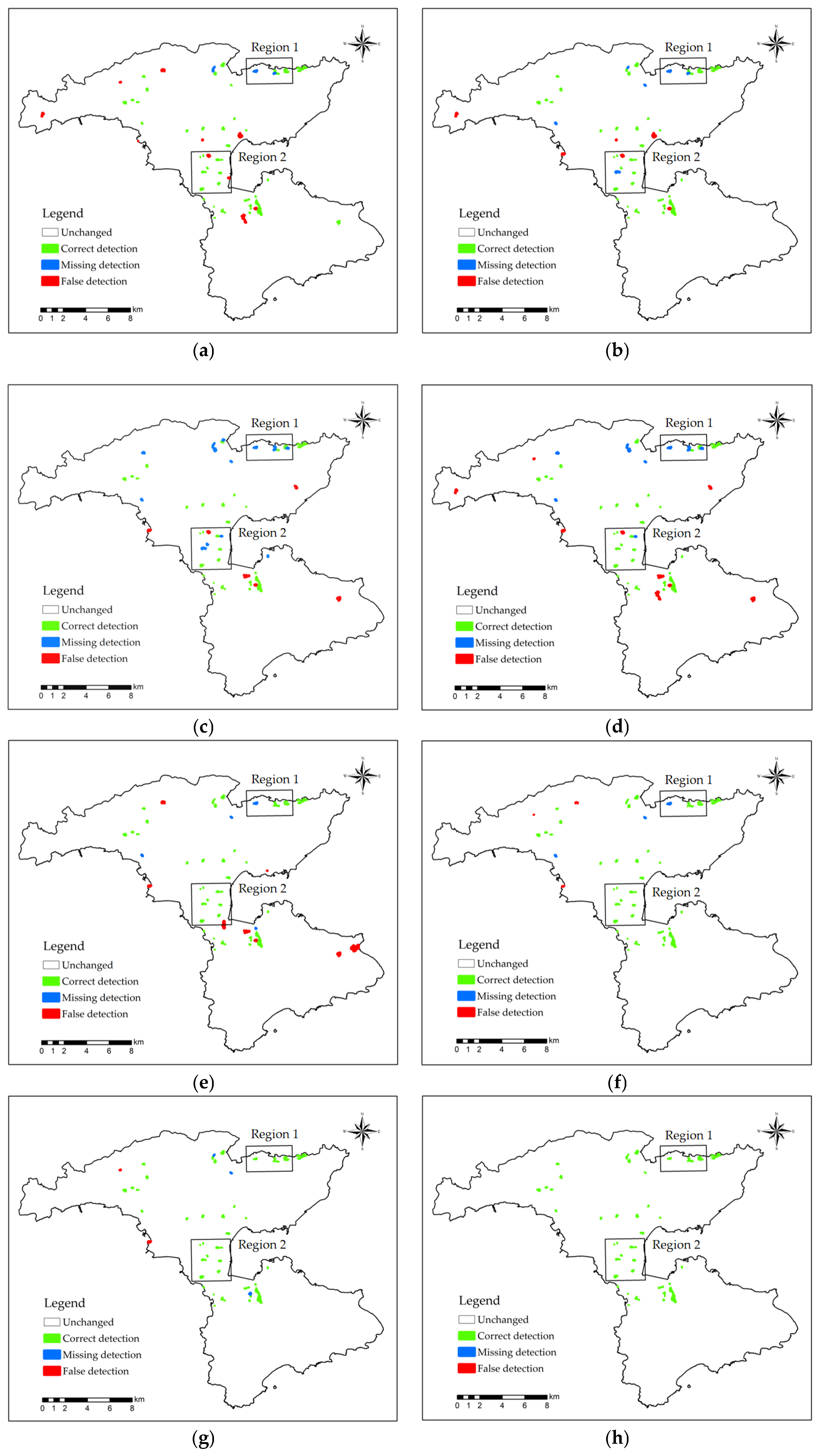

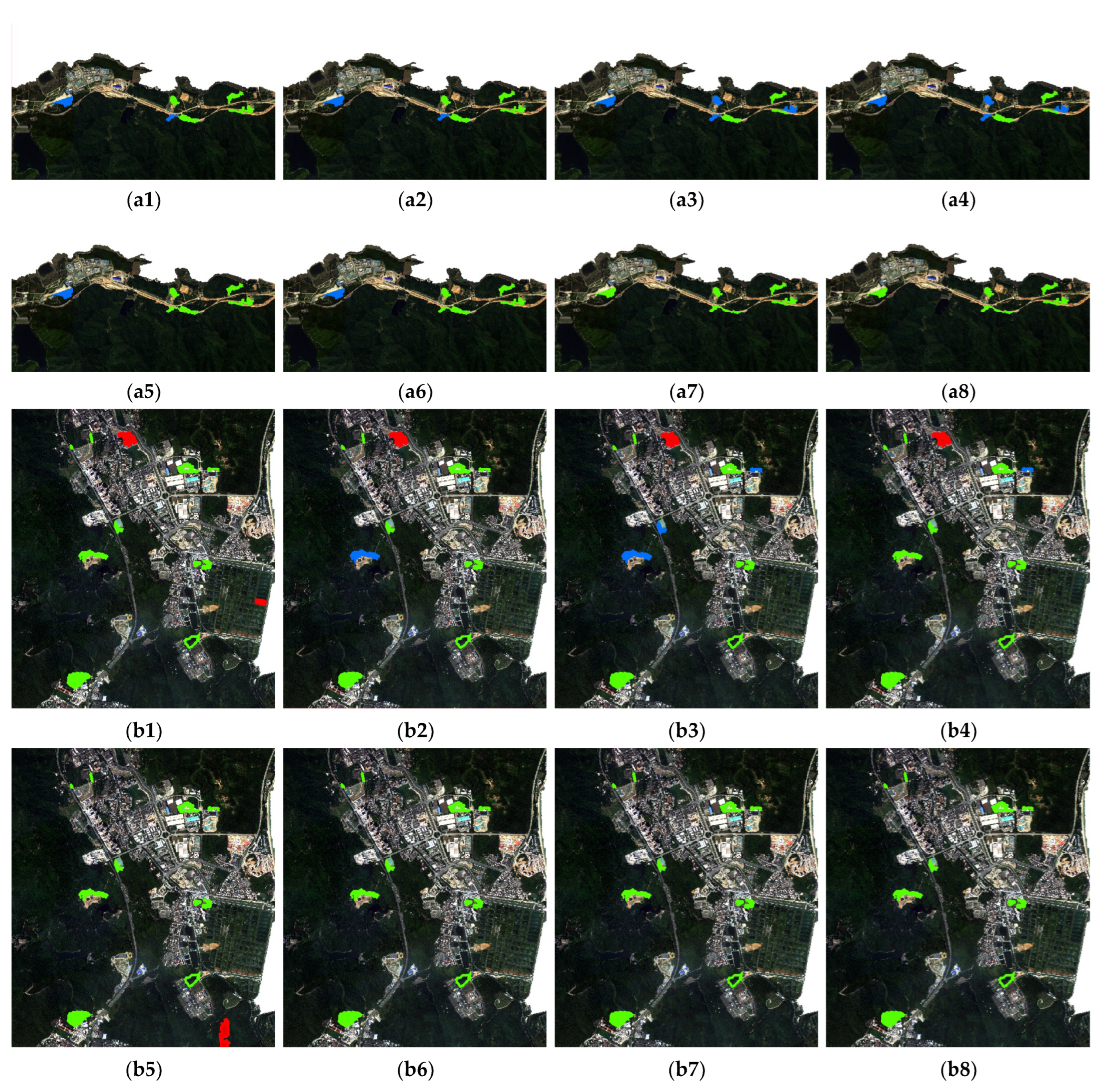

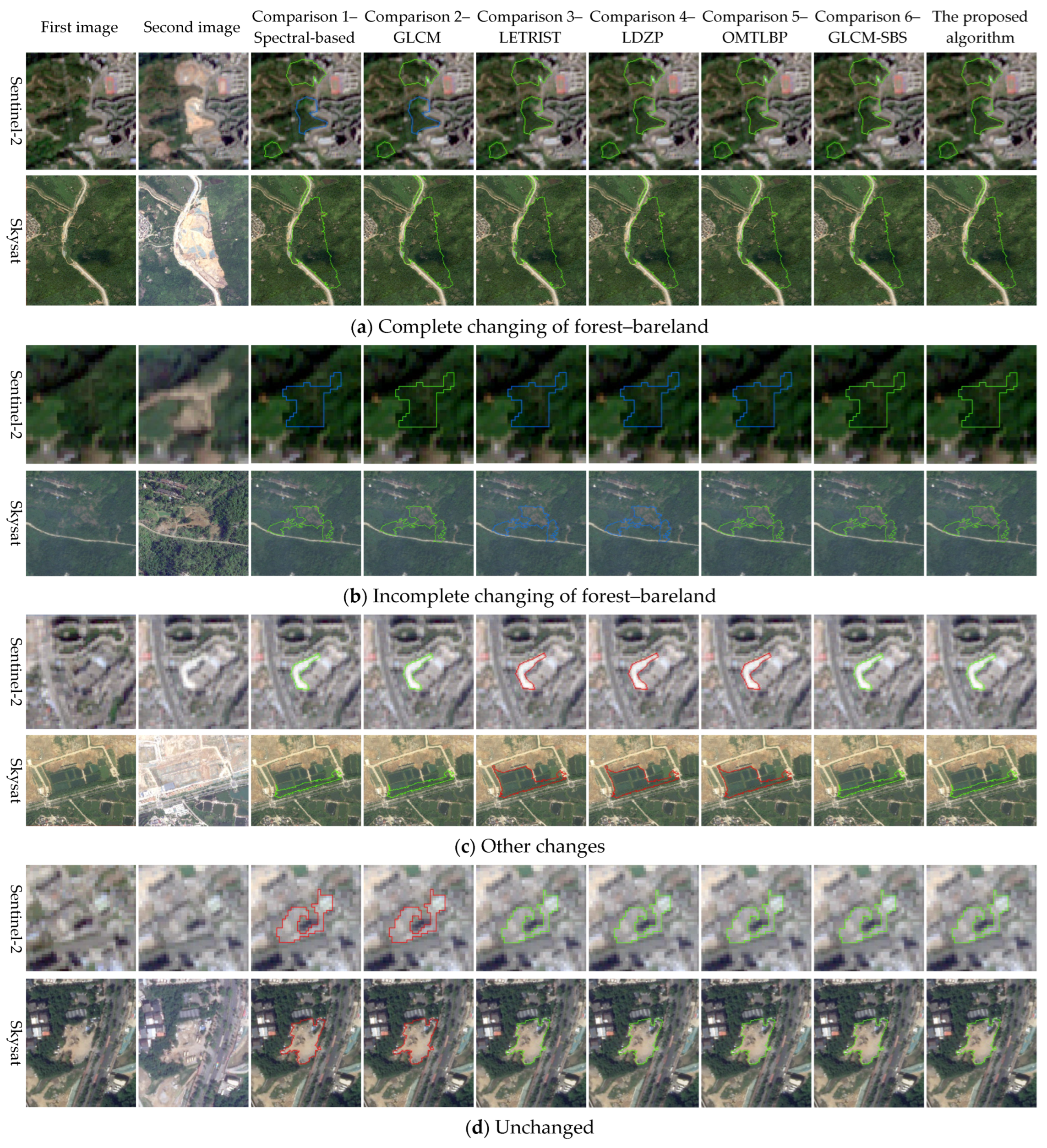

3.4. Object-Oriented Change Detection

- (a) Sentinel-2 data

- (b) SkySat data

3.5. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wang, X.; Liu, S.; Du, P.; Liang, H.; Xia, J.; Li, Y. Object-oriented change detection in urban areas from high spatial resolution images based on multiple features and ensemble learning. Remote Sens. 2018, 10, 276.

2. Hussain, M.; Chen, D.; Cheng, A.; Wei, H.; Stanley, D. Change detection from remotely sensed images: From pixel-based to object-based approaches. ISPRS J. Photogramm. Remote Sens. 2013, 80, 91–106.

3. Sun, C.; Li, J.; Liu, Y.; Cao, L.; Zheng, J.; Yang, Z.; Ye, J.; Li, Y. Ecological quality assessment and monitoring using a time-series remote sensing-based ecological index (ts-RSEI). GISci. Remote Sens. 2022, 59, 1793–1816.

4. Cao, G.; Zhou, L.; Li, Y. A new change-detection method in high-resolution remote sensing images based on a conditional random field model. Int. J. Remote Sens. 2016, 37, 1173–1189.

5. Lv, Z.; Wang, F.; Sun, W.; You, Z.; Falco, N.; Benediktsson, J.A. Landslide inventory mapping on VHR images via adaptive region shape similarity. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11.

6. Liu, Z.; Li, G.; Mercier, G.; He, Y.; Pan, Q. Change detection in heterogenous remote sensing images via homogeneous pixel transformation. IEEE Trans. Image Process. 2018, 27, 1822–1834.

7. Wang, Z.; Wei, C.; Liu, X.; Zhu, L.; Yang, Q.; Wang, Q.; Zhang, Q.; Meng, Y. Object-oriented change detection for vegetation disturbance and recovery using Landsat time series. GISci. Remote Sens. 2022, 59, 1706–1721.

8. Chen, J.; Chen, J.; Gong, P.; He, C.; Pu, R.; Shi, P. Land-use/land-cover change detection using improved change-vector analysis. Photogramm. Eng. Remote Sens. 2003, 69, 369–379.

9. Niemeyer, I.; Marpu, P.R.; Nussbaum, S. Change detection using object features. In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 185–201.

10. Lv, Z.; Huang, H.; Li, X.; Zhao, M.; Benediktsson, J.A.; Sun, W.; Falco, N. Land cover change detection with heterogeneous remote sensing images: Review, progress, and perspective. Proc. IEEE 2022, 110, 1976–1991.

11. Lv, Z.; Huang, H.; Gao, L.; Benediktsson, J.A.; Zhao, M.; Shi, C. Simple multiscale UNet for change detection with heterogeneous remote sensing images. IEEE Trans. Geosci. Remote Sens. 2022, 19, 1–5.

12. Liu, T.; Yang, L.; Lunga, D. Change detection using deep learning approach with object-based image analysis. Remote Sens. Environ. 2021, 256, 112308.

13. Lu, D.; Mausel, P.; Brondizio, E.; Moran, E. Change detection techniques. Int. J. Remote Sens. 2004, 25, 2365–2401.

14. Gong, M.; Zhou, Z.; Ma, J. Change detection in synthetic aperture radar images based on image fusion and fuzzy clustering. IEEE Trans. Image Process. 2012, 21, 2141–2151.

15. Bovolo, F.; Marchesi, S.; Bruzzone, L. A framework for automatic and unsupervised detection of multiple changes in multitemporal images. IEEE Trans. Geosci. Remote Sens. 2012, 50, 2196–2212.

16. Lv, Z.; Wang, F.; Cui, G.; Benediktsson, J.A.; Lei, T.; Sun, W. Spatial–spectral attention network guided with change magnitude image for land cover change detection using remote sensing images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

17. Tewkesbury, A.P.; Comber, A.J.; Tate, N.J.; Lamb, A.; Fisher, P.F. A critical synthesis of remotely sensed optical image change detection techniques. Remote Sens. Environ. 2015, 160, 1–14.

18. Addink, E.A.; Van Coillie, F.M.B.; De Jong, S.M. Introduction to the GEOBIA 2010 special issue: From pixels to geographic objects in remote sensing image analysis. Int. J. Appl. Earth Obs. 2012, 15, 1–6.

19. Tan, K.; Zhang, Y.; Wang, X.; Chen, Y. Object-oriented change detection using multiple classifiers and multi-scale uncertainty analysis. Remote Sens. 2019, 11, 359.

20. Zhang, Y.; Peng, D.; Huang, X. Object-oriented change detection for VHR images based on multiscale uncertainty analysis. IEEE Geosci. Remote Sens. Lett. 2018, 1, 13–17.

21. Aguirre-Gutiérrez, J.; Seijmonsbergen, A.C.; Duivenvoorden, J.F. Optimizing land cover classification accuracy for change detection, a combined pixel-based and object-based approach in a mountainous area in Mexico. Appl. Geogr. 2012, 34, 29–37.

22. Ma, L.; Li, M.; Blaschke, T.; Ma, X.; Tiede, D.; Cheng, L.; Chen, Z.; Chen, D. Object-oriented change detection in urban areas: The effects of segmentation strategy, scale, and feature space on unsupervised methods. Remote Sens. 2016, 8, 761.

23. Lv, Z.; Yang, X.; Zhang, X.; Benediktsson, J.A. Object-based sorted-histogram similarity measurement for detecting land cover change with VHR remote sensing images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

24. Cai, L.; Shi, W.; Zhang, H.; Hao, M. Object-oriented change detection method based on adaptive multi-method combination for remote-sensing images. Int. J. Remote Sens. 2016, 37, 5457–5471.

25. Wang, J.; Bretz, M.; Dewan, M.A.; Delavar, M.A. Machine learning in modelling land-use and land cover-change (LULCC): Current status, challenges and prospects. Sci. Total Environ. 2022, 822, 153559.

26. Ojala, T.; Pietikäinen, M.; Mäenpää, T. Gray scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

27. Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

28. Lei, H.; Hao, Y.; Jin, L.; Yun, Z. A change detection method for remote sensing image based on LBP and SURF feature. In Proceedings of the 9th International Conference on Graphic and Image Processing (ICGIP 2017), Qingdao, China, 21 February 2017; pp. 1–7.

29. Liu, L.; Long, Y.; Fieguth, P.W.; Lao, S.; Zhao, G. BRINT: Binary rotation invariant and noise tolerant texture classification. IEEE Trans. Image Process. 2014, 23, 3071–3084.

30. Song, T.; Li, H.; Meng, F.; Wu, Q.; Cai, J. LETRIST: Locally encoded transform feature histogram for rotation-invariant texture classification. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 1565–1579.

31. Roy, S.K.; Chanda, B.; Chaudhuri, B.B.; Banerjee, S.; Ghosh, D.K.; Dubey, S.R. Local directional ZigZag pattern: A rotation invariant descriptor for texture classification. Pattern Recogn. Lett. 2018, 108, 23–30.

32. Fawad; Khan, M.J.; Riaz, M.A.; Shahid, H.; Khan, M.S.; Amin, Y.; Loo, J.; Tenhunen, H. Texture representation through overlapped multi-oriented tri-scale local binary pattern. IEEE Access 2019, 7, 66668–66679.

33. Bianconi, F.; González, E.; Fernández, A. Dominant local binary patterns for texture classification: Labelled or unlabelled. Pattern Recogn. Lett. 2015, 65, 8–14.

34. Li, Z.; Shi, W.; Hao, M.; Zhang, H. Unsupervised change detection using spectral features and a texture difference measure for VHR remote-sensing images. Int. J. Remote Sens. 2017, 38, 7302–7315.

35. Palm, C. Color texture classification by integrative co-occurrence matrices. Pattern Recogn. 2004, 37, 965–976.

36. Hossain, M.D.; Chen, D. Segmentation for object-based image analysis (OBIA): A review of algorithms and challenges from remote sensing perspective. ISPRS J. Photogramm. Remote Sens. 2019, 150, 115–134.

37. Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient graph-based image segmentation. Int. J. Comput. Vis. 2004, 59, 167–181.

38. Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogramm. Remote Sens. 2004, 58, 239–258.

39. Baatz, M.; Schäpe, A. Multiresolution segmentation: An optimization approach for high quality multi-scale image segmentation. In Angewandte Geographische Informationsverarbeitung; XII; Wichmann: Appleton, WI, USA, 2000; pp. 12–23.

40. Johnson, B.A.; Bragais, M.; Endo, I.; Magcale-Macandog, D.B.; Macandog, P.B.M. Image segmentation parameter optimization considering within- and between-segment heterogeneity at multiple scale levels: Test case for mapping residential areas using Landsat imagery. ISPRS Int. J. Geo-Inf. 2015, 4, 2292–2305.

41. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

42. Zhou, F.Q.; Zhang, A.N. Optimal subset selection of time-series MODIS images and sample data transfer with random forests for supervised classification modelling. Sensors 2016, 16, 1783.

43. Jain, A.; Zongker, D. Feature selection: Evaluation, application, and small sample performance. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 153–158.

| Feature Name | Feature Description | Calculation Formula |
|---|---|---|
| Angular Second Moment (ASM) | Uniformity of the gray-level distribution of the image | |
| Contrast (Con) | Sharpness of the image | |
| Correlation (Cor) | Consistency of image texture | |
| Inverse Difference Moment (IDM) | Homogeneity of image texture | |
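
The formula column of the table above did not survive in this copy of the article. For orientation only, the four statistics are commonly defined as follows for a normalized co-occurrence matrix. The symbols are assumptions of this note: $p(i,j)$ is the matrix entry for levels $i$ and $j$, and $\mu_i, \mu_j, \sigma_i, \sigma_j$ are the means and standard deviations of its row and column marginals. The authors' exact notation and normalization may differ.

```latex
\begin{align}
\mathrm{ASM} &= \sum_{i}\sum_{j} p(i,j)^{2} \\
\mathrm{Con} &= \sum_{i}\sum_{j} (i-j)^{2}\, p(i,j) \\
\mathrm{Cor} &= \frac{\sum_{i}\sum_{j} (i-\mu_i)(j-\mu_j)\, p(i,j)}{\sigma_i\,\sigma_j} \\
\mathrm{IDM} &= \sum_{i}\sum_{j} \frac{p(i,j)}{1+(i-j)^{2}}
\end{align}
```

Here $\mu_i = \sum_{i}\sum_{j} i\, p(i,j)$ and $\sigma_i^{2} = \sum_{i}\sum_{j} (i-\mu_i)^{2}\, p(i,j)$, with $\mu_j$ and $\sigma_j$ defined in the same way over $j$.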

| Experiment Name | Basic Operating Unit | Feature Set | Feature Filtering | Feature-Filtering Method | Reference |
|---|---|---|---|---|---|
| Comparison 1 – Spectral-based | Object-level | Spectral features | No | - | - |
| Comparison 2 – GLCM | Object-level | Spectral features and GLCM texture features | No | - | [27] |
| Comparison 3 – LETRIST | Object-level | Spectral features and LETRIST texture features | No | - | [30] |
| Comparison 4 – LDZP | Object-level | Spectral features and LDZP texture features | No | - | [31] |
| Comparison 5 – OMTLBP | Object-level | Spectral features and OMTLBP texture features | No | - | [32] |
| Comparison 6 – GLCM-SBS | Object-level | Spectral features and GLCM texture features | Yes | Single backward search | [27,43] |
| The proposed algorithm | Object-level | Spectral features and CCM texture features | Yes | Cumulative backward search | - |

| Experiment Name | Missed Detection Rate | False Detection Rate | Overall Accuracy | Kappa Coefficient |
|---|---|---|---|---|
| Comparison 1 – Spectral-based | 48.15% | 39.13% | 60.00% | 0.20 |
| Comparison 2 – GLCM | 6.25% | 9.09% | 90.74% | 0.81 |
| Comparison 3 – LETRIST | 2.22% | 6.38% | 94.96% | 0.89 |
| Comparison 4 – LDZP | 7.45% | 1.10% | 94.62% | 0.88 |
| Comparison 5 – OMTLBP | 3.33% | 1.60% | 97.83% | 0.95 |
| Comparison 6 – GLCM-SBS | 6.67% | 3.45% | 94.44% | 0.89 |
| The proposed algorithm | 0.00% | 3.33% | 98.15% | 0.96 |

| Experiment Name | Missed Detection Rate | False Detection Rate | Overall Accuracy | Kappa Coefficient |
|---|---|---|---|---|
| Comparison 1 – Spectral-based | 5.43% | 9.17% | 92.45% | 0.85 |
| Comparison 2 – GLCM | 5.00% | 6.52% | 94.34% | 0.88 |
| Comparison 3 – LETRIST | 19.57% | 5.00% | 88.68% | 0.77 |
| Comparison 4 – LDZP | 15.22% | 7.50% | 89.15% | 0.78 |
| Comparison 5 – OMTLBP | 4.35% | 6.67% | 94.34% | 0.89 |
| Comparison 6 – GLCM-SBS | 5.43% | 2.50% | 96.23% | 0.92 |
| The proposed algorithm | 4.35% | 1.67% | 97.17% | 0.94 |
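
The four accuracy measures reported in the result tables can all be derived from a 2×2 confusion matrix. The sketch below shows one common set of definitions. Treating "changed" as the positive class, taking the missed detection rate as FN / (TP + FN), and taking the false detection rate as FP / (TP + FP) are assumptions, since the definitions are not given in this copy of the article. The counts in the example are hypothetical.

```python
def change_detection_metrics(tp, fn, fp, tn):
    """Accuracy measures from a 2x2 confusion matrix (changed = positive).

    tp: changed, detected as changed     fn: changed, detected as unchanged
    fp: unchanged, detected as changed   tn: unchanged, detected as unchanged
    """
    n = tp + fn + fp + tn
    missed = fn / (tp + fn)   # share of real changes that were missed
    false = fp / (tp + fp)    # share of detections that are not real changes
    oa = (tp + tn) / n        # overall accuracy
    # Chance agreement computed from the row and column marginals
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    return missed, false, oa, kappa

# Hypothetical counts for illustration only
missed, false, oa, kappa = change_detection_metrics(tp=30, fn=2, fp=3, tn=19)
print(f"missed={missed:.2%} false={false:.2%} OA={oa:.2%} kappa={kappa:.2f}")
```

Kappa discounts the agreement expected by chance, so it falls faster than overall accuracy when one class dominates the sample.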

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhu, Z.; Zhou, T.; Chen, J.; Li, X.; Guo, S.; Zhao, L.; Sun, L. Object-Oriented Remote Sensing Image Change Detection Based on Color Co-Occurrence Matrix. Appl. Sci. 2023, 13, 6748. https://doi.org/10.3390/app13116748
