Abstract

Blasthole detection is crucial but challenging in underground mining processes, given the diversity of surrounding rock backgrounds and uneven light intensity. Existing algorithms have limitations in extracting image features and identifying objects of different sizes. This study proposes a cascade-network-based blasthole detection method that comprises a blasthole feature extract transformation (BFET) module and a blasthole detection (BD) module. First, we constructed the BFET module on an improved Cycle Generative Adversarial Network (CycleGAN) with multi-scale feature fusion, fusing the convolution features of the CycleGAN generators to obtain enhanced feature maps of the blasthole images. Second, the BD module was cascaded with the BFET module to detect the blastholes. The experimental results showed that the proposed method strengthened the image contrast, suppressed over-exposure, and improved the accuracy of blasthole detection in real time. Compared with the YOLOv7 and CycleGAN+YOLOv7 methods, the detection accuracy of our method was improved by 5.34% and 2.38%, respectively.

1. Introduction

The precise detection of blastholes in the roadway working face has become an inevitable trend in the sustainable development of future mining construction [1]. Currently, blasting operations still rely on manual charging or on mechanical arms controlled by experienced operators. Over-reliance on manual operation makes it difficult to ensure the effectiveness of blasting and also poses a threat to the safety of workers. Image processing technology can help the mechanical arm to accurately detect, locate, and charge the blastholes on roadway working faces, which improves the safety of blasting. Therefore, designing and developing a charging arm with intelligent detection and recognition is crucial to achieve the intelligent charging of roadway working faces [2].

Charging explosives is an important part of engineering mining [3], and accurately conveying explosives to the required blasthole location is a critical link in the development of an intelligent charging system. The main objective of this work is to assist blasthole detection for charge blasting systems. Unlike other object detection tasks, the detection of blastholes on roadway working faces is influenced by many factors, especially the illumination intensity and geological conditions. Although some progress has been made in blasthole image detection algorithms for roadway working faces, they remain prone to problems such as poor detection in low-illumination blasthole images. Enhancing the features of blasthole images in complex environments can effectively improve the performance of object detection networks applied to blasthole detection in roadway working faces. Therefore, this paper proposes a method that enhances image contrast by strengthening the blasthole features, according to the characteristics of the blasthole images.

Traditional detection algorithms struggle to accurately detect blastholes in roadway working faces due to interference from surrounding rock, low illumination and coal dust. To improve the accuracy of blasthole detection, this paper proposes a cascade detection network called the feature extract transformation network for blasthole detection (BFET-BD). The contributions of this paper mainly include the following.

(1) To reduce the impact of the surrounding rock on blasthole images and generate high-contrast blasthole feature maps, the blasthole feature extract transformation (BFET) module and the blasthole detection (BD) module are proposed. The BFET module utilizes features from different convolutional layers in CycleGAN for multi-scale feature fusion to generate enhanced feature maps. The BD module is built on existing single-scale object detection networks. The output feature map of the BFET module is used as input to the BD module to form an end-to-end cascaded blasthole detection network.

(2) To address the interference issue arising from low-illumination conditions, this paper suggests utilizing multi-scale channels rather than three-channel images. This approach enhances the features of blastholes in low-illumination environments, thereby improving the detection accuracy and reducing false detection rates.

(3) To address the lack of available blasthole datasets, this study collects a large number of blasthole images from various roadway working faces and establishes the CUMTB-BI dataset. Model training and testing are conducted on this dataset, providing a foundation for the verification of the proposed blasthole detection network.

The remainder of this paper is organized as follows: the recent literature is reviewed in Section 2; Section 3 describes the proposed method in detail; Section 4 provides a detailed account of the dataset collection and training process; Section 5 reports the experiments and results; and Section 6 concludes the work.

2. Related Work

Numerous researchers have focused on the preprocessing stage of image detection. This research is briefly reviewed below.

With the emergence of generative networks such as the Pixel Convolutional Neural Network (PixelCNN) [4], Variational Autoencoder (VAE) [5] and Generative Adversarial Network (GAN) [6], data generative networks based on deep learning are gradually being widely used in image generation. Among them, the GAN network can achieve equivalent detection performance with larger objects by mapping low-resolution small object features into high-resolution equivalent features. Many research directions and applications have been generated through the fusion of deep learning methods with GAN. Chen et al. [7] proposed a GAN framework based on the fully connected (FC) layer. However, the framework shows high performance only on a few types of data distributions. In view of the instability of the GAN network, which cannot be well adapted to the convolutional neural network, the DCGAN network [8] was proposed. The DCGAN is suitable for processing image data and does not easily overfit, but it is difficult to extract and generate data. To address the issue that DCGAN is unable to extract data, Odena et al. [9] proposed the Semi-Supervised Generative Adversarial Network (SGAN). The network fuses multiple types of global context information of different sizes by pyramid pooling. The method uses an alternating strategy of maximum pooling and average pooling to enhance the detection of small objects. The PGAN network was proposed by Li et al. [10], and it uses a continuously updated generator network and discriminator network to generate ultra-high-resolution images of small objects to improve the detection performance. The generator learns the residual representation from the shallow detail features and attempts to deceive two well-trained discriminators. It enhances the representation of small objects to approximate large objects, thereby improving the detection performance of small objects. 
A small object detection algorithm, SOD-MTGAN, based on GAN multi-task combination, was proposed by Bai et al. [11]. The generator network upsamples the input low-resolution images to restore as much detail as possible. Then, high-resolution images are reconstructed for more accurate detection.

In actual projects, the problem of insufficient sample sizes still exists. In response to this problem, Sixt et al. [12] proposed RenderGAN. The network uses adversarial learning to generate more images for the purpose of data augmentation. To enhance the robustness of the detection model, Wang et al. [13] automatically generated samples containing occlusion and deformation features to improve the detection performance for non-obvious objects. Deng et al. [14] proposed an extended feature pyramid network. The network generates ultra-high-resolution pyramidal layers through the feature texture module, thus enriching the feature information of small objects. Kim et al. [15] proposed two lightweight neural networks with a hybrid residual and dense connection structure. These methods can significantly reduce the inference time and improve the super-resolution performance. Li et al. [16] proposed a lightweight convolutional neural network called WearNet. This network greatly reduces the number of layers and parameters, resulting in a smaller model with a faster detection speed. On the basis of these algorithms, some progress has been made in the detection of blasthole images on roadway working faces [17]. Zhang [18] proposed an algorithm based on ResNet-51 to obtain blasthole parameters and detect blastholes. The method integrates two-stage and three-stage training but has a longer training time, and it extracts insufficient information from blasthole images with low-light conditions and low contrast. The improved Faster-RCNN algorithm was proposed by Zhang [19]. The algorithm improves the detection speed of blastholes, but it is prone to missed detection and false detection.

3. The Research Method

In this section, a cascade network structure, BFET-BD, is proposed to enhance the blasthole features and improve the detection accuracy of blastholes. BFET-BD consists of two modules: a blasthole feature extract transformation module (BFET) and a blasthole detection module (BD). The feature extract transformation module uses the improved CycleGAN [20] as the basic network to learn from the input of unpaired blasthole images. The BFET module realizes image feature extraction under high-light conditions. The BD module is mainly constructed based on the existing single-scale object detection network. The output feature maps of the BFET module are used as the input of the BD module to form an end-to-end cascaded blasthole detection network.

3.1. Proposed BFET-BD Network

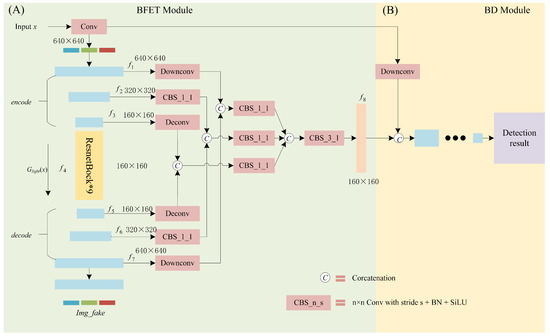

The CycleGAN network can be trained using unpaired images as input. The model includes generators and discriminators. The training process draws on game theory: the generator and the discriminator each try to maximize their own advantage. Through continuous adversarial training, the generator outputs image data that are as “real” as possible, while the discriminator distinguishes as well as possible between the real image and the generated image. Both models continuously improve through adversarial learning. Eventually, the model reaches a Nash equilibrium state and the training is terminated. At this point, the generator outputs the optimal result, and the probability that the discriminator assigns to the generated data being real tends to 0.5. However, a disadvantage of the CycleGAN network is that it only maps images under low-light conditions to images under high-light conditions and cannot learn the precise locations of blastholes. Therefore, we explore a global feature extract transformation from low to strong illumination. We propose a BFET-BD cascade network, in which the BFET module is constructed on the improved CycleGAN network and the BD module is composed of a detection network. It overcomes the shortcomings of CycleGAN and improves the accuracy of blasthole detection. Different from other training methods, we train the BFET module and BD module together, which can guide the network optimization and highlight the blasthole features. Figure 1 shows the cascade structure of the proposed BFET-BD network, which is composed of two parts, (A) and (B). (A) is the BFET module, which translates the image from low-light to high-light and fuses the resulting features into multi-scale feature maps to enhance the features of the blastholes. (B) is the BD module, which takes the enhanced feature maps as input to detect the blastholes.

Figure 1.

Network structure model architecture. (A) is the BFET module, which translates the image from low-light to high-light and enhances the features of the blastholes. The encoded feature maps are extracted from the low-light image x; the decoded feature maps translate the low-light features into high-light features. (B) is the BD module, which detects blastholes from the feature maps generated by (A).
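The 0.5 value mentioned above for the discriminator at equilibrium comes from the standard GAN analysis rather than from anything specific to this network. As a brief sketch, writing $p_{data}$ for the distribution of real images and $p_g$ for the distribution of generated images (notation assumed here, not taken from this paper), the optimal discriminator for a fixed generator is:

```latex
D^{*}(x) = \frac{p_{data}(x)}{p_{data}(x) + p_{g}(x)}
```

When the generator matches the real distribution ($p_g = p_{data}$), this gives $D^{*}(x) = 1/2$ for every input, so the discriminator can no longer separate real from generated images.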

3.2. Blasthole Feature Extract-Transformation (BFET) Module

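The proposed BFET module is based on the improved CycleGAN network. Suppose that we have the feature map $f_i$ at layer $i$ with dimension $c_i \times h_i \times w_i$. Let $g_i(\cdot)$ be a generic function that acts as the basic generator block. It consists of convolution layers followed by instance normalization layers and activation functions. $E(\cdot)$ is the operation of encoding the input image as a multi-scale feature map. Among them,

$$E(x) = \{f_1, \dots, f_b\}, \quad f_i = g_i(f_{i-1}),$$

where $f_0 = x$, and $i = 1, \dots, b$.

In the formula, $x$ is the input low-light image with a size of 640 × 640. For the feature map $f_i$, $c_i$ is the number of channels in the intermediate activation of the generator, $i \in \{1, \dots, b\}$, and $b = 4$. $D(\cdot)$ is the operation block decoding the translated strong-light image features into the output restored image. The output image features for the final strong-illumination conditions are $\hat{f}_j$:

$$D(f_b) = \{\hat{f}_1, \dots, \hat{f}_b\}, \quad \hat{f}_j = \hat{g}_j(\hat{f}_{j-1}),$$

where $\hat{f}_0 = f_b$, and $j = 1, \dots, b$, with $\hat{g}_j(\cdot)$ denoting the corresponding generator block of the decoder.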

Fusing the multi-scale encoded and decoded feature maps with the original input image may introduce redundancy and confounding effects because of the high feature dimensionality [21]. To solve this problem, before we fuse the feature maps of different sizes, each feature map is first passed through a convolution kernel to reduce the confounding effect. In this way, we reduce the feature dimensions, and the features of different sizes are adjusted to the same size. The image size of 320 × 320 is chosen for the input image to reduce the network complexity.
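The fusion step described above can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions, not the implementation used in this work: the function names (`conv1x1`, `resize_nearest`, `fuse`), the choice of a 1 × 1 kernel, nearest-neighbour resizing, channel concatenation, and the toy weights are all hypothetical and chosen for readability.

```python
# Illustrative sketch of multi-scale feature fusion (all names are hypothetical).
# A feature map is a list of channels; each channel is a list of rows.

def conv1x1(fmap, weights):
    """Mix channels per pixel: out[o][y][x] = sum_c weights[o][c] * fmap[c][y][x]."""
    h, w = len(fmap[0]), len(fmap[0][0])
    return [
        [[sum(wc * fmap[c][y][x] for c, wc in enumerate(row_w)) for x in range(w)]
         for y in range(h)]
        for row_w in weights
    ]

def resize_nearest(fmap, size):
    """Resize every channel to size x size with nearest-neighbour sampling."""
    h, w = len(fmap[0]), len(fmap[0][0])
    return [
        [[ch[y * h // size][x * w // size] for x in range(size)] for y in range(size)]
        for ch in fmap
    ]

def fuse(feature_maps, weights_per_map, size):
    """Reduce channels with a 1x1 convolution, align sizes, then concatenate channels."""
    fused = []
    for fmap, weights in zip(feature_maps, weights_per_map):
        fused.extend(resize_nearest(conv1x1(fmap, weights), size))
    return fused

# Toy example: a 2-channel 2x2 map and a 3-channel 4x4 map, each reduced to 1 channel.
small = [[[1.0, 2.0], [3.0, 4.0]], [[1.0, 1.0], [1.0, 1.0]]]
large = [[[float(c)] * 4 for _ in range(4)] for c in range(3)]
fused = fuse([small, large], [[[0.5, 0.5]], [[1.0, 1.0, 1.0]]], size=4)
```

In this toy case the two inputs end up as two 4 × 4 channels stacked together, which mirrors the idea of reducing the feature dimension first and only then aligning the spatial sizes.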

3.3. Blasthole Detection (BD) Module

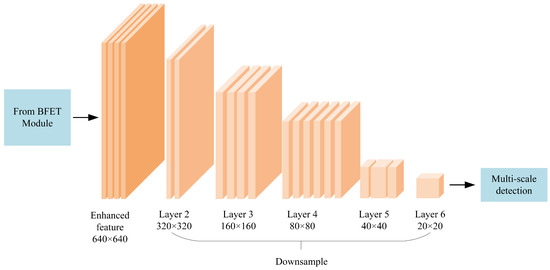

The proposed BD module extracts the enhanced fused image features. Its main characteristic is that, after the image features under low- and high-light conditions are fused, a single-cycle network is formed by cascading the input feature images. The fused feature image extracted by the BFET module, instead of the RGB image, is used directly as the input to the BD module. The entire network realizes end-to-end training and detection, reducing the number of network layers and the computing time. As can be seen from Figure 1, part (B) is the BD module, which can be based on the YOLOv3 [22], SSD [23], RetinaNet [24], YOLOv5 [25], YOLOv7 [26], or YOLOv7-tiny networks. As shown in Figure 2, we adopt a YOLOv7-based framework in this module for detection, with 640 × 640 RGB images as the input. This method adopts ResNet-9 as the backbone for feature extraction, and three layers of feature maps with different sizes are output after the head. The minimum feature scale is 20 × 20, which is 1/32 of the input size. CBS is mainly composed of Conv+BN+SiLU, with n = 3 and stride = 2. ELAN in YOLOv7 consists of multiple CBSs so that its input and output feature sizes remain constant. After the last CBS output for the desired channel, the reduced RGB image is replaced with the fused feature image.

Figure 2.

BD module structure diagram.
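The relation between the input size and the output feature scales can be checked with a short calculation. The sketch below assumes the three output maps use strides of 8, 16 and 32, which is the usual YOLOv7 configuration; the text above only states the 1/32 scale, so the other two strides and the helper name `feature_map_sizes` are assumptions.

```python
def feature_map_sizes(input_size, strides=(8, 16, 32)):
    """Return the spatial size of each detection feature map for a square input."""
    return [input_size // s for s in strides]

# For a 640 x 640 input, the coarsest map is 640 / 32 = 20 pixels on a side.
sizes = feature_map_sizes(640)
```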

3.4. Loss Function

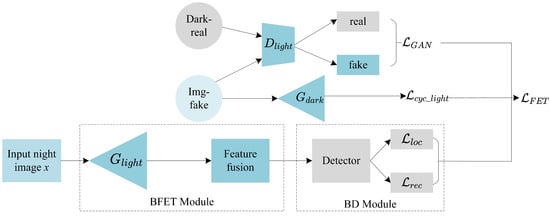

The improved CycleGAN network is a ring structure, which mainly consists of two generators ($G$ and $F$) and two discriminators ($D_X$ and $D_Y$). $X$ and $Y$ denote the image of the X domain and the image of the Y domain, respectively. The image of the X domain is translated into the image of the Y domain by the generator $G$, and then reconstructed back to the original image of the X domain by the generator $F$. The image of the Y domain is translated into the image of the X domain by the generator $F$, and then reconstructed to the original image of the Y domain by the generator $G$. The discriminators $D_X$ and $D_Y$ are used to discriminate the “true” and “false” images under low-light and high-light conditions, respectively. The network structure loss is shown in Figure 3.

Figure 3.

Network structure loss chart.
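For reference, the ring structure described above matches the standard CycleGAN objective [20]. The block below is a sketch of that standard form only. The symbols ($G: X \to Y$, $F: Y \to X$, $D_X$, $D_Y$, $\mathcal{L}_{adv}$, $\mathcal{L}_{cyc}$) follow the usual CycleGAN notation and are assumptions here, and the improved network in this work may modify these terms.

```latex
\mathcal{L}_{adv}(G, D_Y) = \mathbb{E}_{y}\left[\log D_Y(y)\right]
  + \mathbb{E}_{x}\left[\log\left(1 - D_Y(G(x))\right)\right]

\mathcal{L}_{cyc}(G, F) = \mathbb{E}_{x}\left[\lVert F(G(x)) - x \rVert_1\right]
  + \mathbb{E}_{y}\left[\lVert G(F(y)) - y \rVert_1\right]
```

The adversarial term for the reverse mapping, $\mathcal{L}_{adv}(F, D_X)$, is defined symmetrically.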

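The BFET module loss consists of the adversarial loss ($\mathcal{L}_{adv}$) and the cyclic consistency loss ($\mathcal{L}_{cyc}$), expressed by the following equation:

$$\mathcal{L}_{BFET} = \mathcal{L}_{adv} + \mathcal{L}_{cyc}$$

The BD module losses include the bounding box loss ($\mathcal{L}_{box}$) and the object detection loss ($\mathcal{L}_{obj}$). The loss function is added to the BD module, which is an important part of optimization, allowing it to focus on enhancing the fused features of the blasthole region. Different weights are applied to the bounding box loss ($\mathcal{L}_{box}$) and the object detection loss ($\mathcal{L}_{obj}$). The network losses are as follows:

$$\mathcal{L} = \mathcal{L}_{BFET} + \lambda_1 \mathcal{L}_{box} + \lambda_2 \mathcal{L}_{obj}$$

where $\lambda_1$ and $\lambda_2$ are determined by experience, 1.2 and 0.5 respectively.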
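As a small worked example of how the weighted terms combine, the sketch below assumes the overall loss is a plain sum of the BFET loss (adversarial plus cyclic consistency) and the weighted BD losses, with 1.2 applied to the bounding box loss and 0.5 to the object detection loss. The function name `total_loss` and the sample loss values are hypothetical.

```python
def total_loss(adv_loss, cyc_loss, box_loss, obj_loss, w_box=1.2, w_obj=0.5):
    """Combine BFET and BD losses as a weighted sum (assumed form)."""
    bfet_loss = adv_loss + cyc_loss                   # BFET module: adversarial + cycle terms
    bd_loss = w_box * box_loss + w_obj * obj_loss     # BD module: weighted detection terms
    return bfet_loss + bd_loss

# Made-up loss values, used only to show the arithmetic.
loss = total_loss(adv_loss=0.8, cyc_loss=0.4, box_loss=0.05, obj_loss=0.02)
```

With these made-up inputs the result is 0.8 + 0.4 + 1.2 × 0.05 + 0.5 × 0.02 = 1.27.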

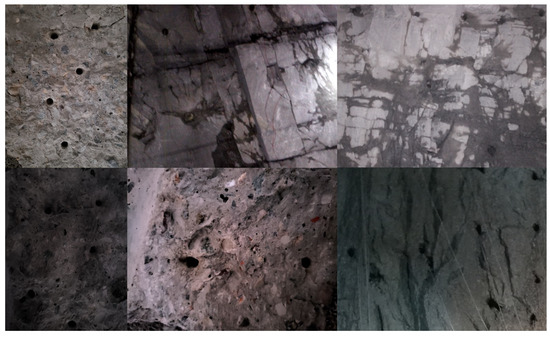

4. CUMTB-BI Dataset Detection

The image acquisition of roadway working faces is primarily performed using an intrinsically safe explosion-proof camera model ZHS2420 and an intrinsically safe KTW280 explosion-proof cellphone. The camera has a maximum resolution of 6000 × 4000, while the cellphone has a maximum resolution of 2340 × 1080. To ensure the reliability of the blasthole image detection algorithm in roadway working faces, we collected an image dataset from the No. 15121 air inlet and bottom extraction roadway of the Sijiazhuang Company of the Shanxi Yangmei Group. This dataset, named CUMTB-BI, was acquired with an intrinsically safe explosion-proof camera equipped with a new landmark intrinsically safe LED flash. It contains 3620 blasthole images with an original resolution of 1080 × 720. In the experiment, we adjusted the images to 640 × 640 after down-sampling. Figure 4 shows some samples collected in complex background conditions.

Figure 4.

Training sample chart.

Training Process

The proposed BFET-BD fusion algorithm has the following implementation process. First, the original images are down-sampled to a 640 × 640 resolution. Next, these images are input into the BFET module, which is based on the CycleGAN network structure, to obtain the feature maps. Then, the feature maps and the down-sampled original images are used as inputs for the BD module. Finally, the detection results of the blasthole image dataset are output through the detection network.
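The four steps above can be sketched as a simple chain of calls. This is only an outline of the data flow: `run_bfet_bd` and the three stand-in functions are hypothetical placeholders, not the trained BFET and BD modules, and the returned box and score are dummy values.

```python
def run_bfet_bd(image, downsample, bfet, bd, size=640):
    """Chain the steps: down-sample, extract feature maps, then detect."""
    resized = downsample(image, size)   # step 1: resize to size x size
    features = bfet(resized)            # step 2: BFET feature maps
    return bd(features, resized)        # steps 3-4: BD receives both inputs

# Stand-in callables so the sketch runs without a trained model.
def fake_downsample(image, size):
    return {"pixels": image, "size": (size, size)}

def fake_bfet(resized):
    return {"channels": 4, "size": resized["size"]}

def fake_bd(features, resized):
    # The feature maps and the down-sampled image must share a spatial size.
    assert features["size"] == resized["size"]
    return [{"box": (100, 120, 40, 40), "score": 0.9}]

detections = run_bfet_bd("raw-image", fake_downsample, fake_bfet, fake_bd)
```

Passing the modules in as arguments keeps the sketch independent of any particular detector, which matches the idea that the BD module can be built on different detection networks.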

5. Experimental Results and Analysis

The server used for the experiment has an Intel Core i9-10900K CPU, 64 GB of RAM and a GPU with 24 GB of memory, and it runs Python 3.8 with the PyTorch deep learning framework.

5.1. Experimental Results

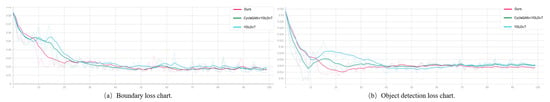

During the experiment, we randomly selected 2500 images as the training set and 500 images as the test set. The relevant parameters were set as follows: epoch = 100, batch_size = 16, input image size 640 × 640, learning rate = 0.0002, and the optimizer was Adam. Figure 5 compares the training results of different methods. The YOLOv7+BFET-BD method detects the blastholes in the dataset better, and its bounding box loss and object detection loss are reduced accordingly.

Figure 5.

Loss function chart.
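The training settings listed above can be collected in one place, which also makes the number of optimizer steps easy to derive. The sketch below is illustrative: the class name `TrainConfig`, its field names, and the assumption that a final partial batch is kept are not taken from this work.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    epochs: int = 100
    batch_size: int = 16
    image_size: int = 640
    learning_rate: float = 0.0002
    optimizer: str = "Adam"
    train_images: int = 2500
    test_images: int = 500

    def steps_per_epoch(self) -> int:
        """Number of batches per epoch, counting a final partial batch."""
        return math.ceil(self.train_images / self.batch_size)

cfg = TrainConfig()
total_steps = cfg.epochs * cfg.steps_per_epoch()
```

With 2500 training images and a batch size of 16, one epoch is 157 batches, so 100 epochs correspond to 15,700 optimizer steps under these assumptions.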

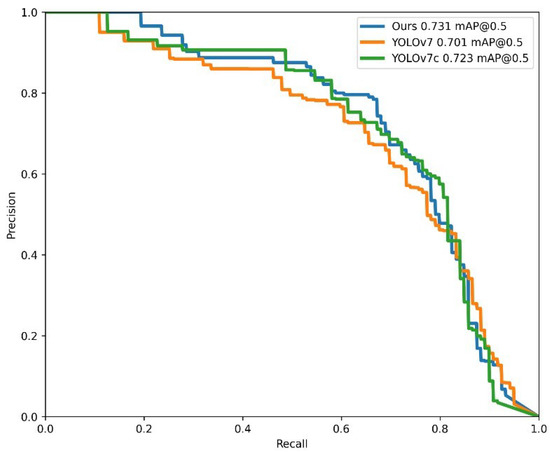

Figure 6 shows the precision–recall (PR) curves for several different methods on the blasthole dataset. Comparing the areas under the curves shows that the method proposed in this paper performs better than both YOLOv7 applied to the CycleGAN-enhanced dataset images and YOLOv7 applied directly to the original images. The mAP@0.5 in this study reached 0.731.

Figure 6.

PR curve comparison chart.

5.2. Performance Estimation

To evaluate the experimental results, we used the following evaluation metrics, which measure the strengths and weaknesses of the algorithms in terms of both real-time performance and accuracy.

a. Precision–Recall (PR) Curve

The curve is formed with precision and recall as variables, where recall is the horizontal coordinate and precision is the vertical coordinate. The recall rate, also known as the completion rate, represents the proportion of the number of correctly detected blasthole samples to the number of positive blasthole samples.

The precision of the blasthole detection model is also called the accuracy rate. It denotes the proportion of all samples detected as blastholes that are real blastholes.
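The two definitions above reduce to simple ratios of detection counts. The sketch below uses the conventional true-positive (TP), false-positive (FP) and false-negative (FN) counts; the function name `precision_recall` and the example counts are made up for illustration.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from TP, FP and FN counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0   # correct detections / all detections
    recall = tp / (tp + fn) if (tp + fn) else 0.0      # correct detections / all real blastholes
    return precision, recall

# Example: 80 correct detections, 20 false alarms, 10 missed blastholes.
p, r = precision_recall(tp=80, fp=20, fn=10)
```

Here precision is 80/100 and recall is 80/90, which shows how false alarms lower precision while missed blastholes lower recall.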

b. Mean Average Precision (mAP)

This experiment has only one category, so there is no classification problem; thus, the average precision (AP) is equivalent to the mAP. The mAP is widely used to evaluate the performance of detection algorithms. The AP corresponds to the area under the PR curve. The mAP range is [0,1], and a larger value represents better performance.
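To make the link between the PR curve and the AP concrete, the sketch below computes the area under a PR curve with the commonly used all-point interpolation. It is an illustration only: the exact interpolation used in this work is not stated, the function name `average_precision` is hypothetical, and the sample points are invented. Recall values are assumed to be sorted in ascending order.

```python
def average_precision(recalls, precisions):
    """Area under the PR curve using the monotone precision envelope."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Sweep right to left so precision never increases as recall grows.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangle areas between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))

# Invented PR points for a single class (so AP and mAP coincide).
ap = average_precision([0.2, 0.4, 0.6, 0.8], [1.0, 0.8, 0.6, 0.5])
```

For these points the area is 0.2 × 1.0 + 0.2 × 0.8 + 0.2 × 0.6 + 0.2 × 0.5 = 0.58.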

c. Detection Speed

In this paper, the detection speed of different networks is evaluated in terms of the time required to complete training. The following figure represents the visualization results of different models after training.

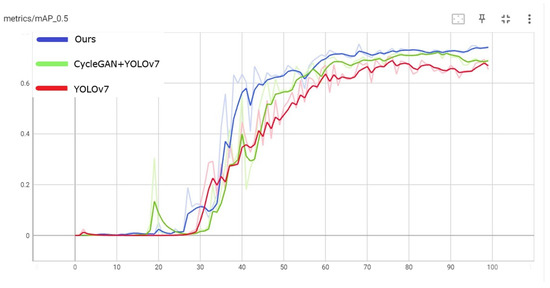

Table 1 shows the performance comparison of the original dataset detection, CycleGAN-enhanced dataset detection and detection using the algorithm of this work. Figure 7 shows the comparison of the mAP for each method.

Table 1.

Comparison of different models.

Figure 7.

Epoch mAP comparison chart on CUMTB-BI dataset.

The following outcomes can be seen from the data in Table 1. (1) In terms of detection accuracy, the method proposed in this paper improves by 5.34% compared with YOLOv7 and by 2.38% compared with inputting the enhanced dataset into YOLOv7. (2) In terms of detection speed, YOLOv7 is slightly faster, because the network structure of the proposed method is more complicated than the YOLOv7 structure. (3) In general, the experimental scenario and the dataset used are specific to this application. According to Figure 7, compared with the other methods, the method in this paper improves the detection accuracy and better meets the application requirements in actual scenarios.

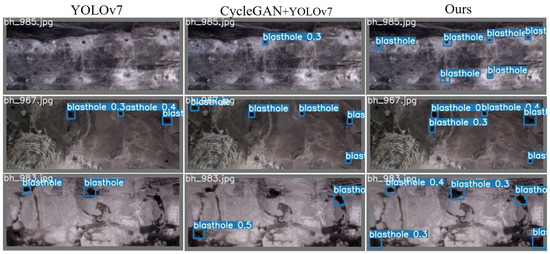

5.3. Comprehensive Performance Analysis

We randomly selected images from the dataset and detected blastholes in three ways: directly, after CycleGAN enhancement, and with the proposed method. The results are shown in Figure 8, which presents three representative images selected from all the detection results. The results show that the method proposed in this paper greatly improves blasthole detection in low-light conditions and under low contrast.

Figure 8.

Blasthole image detection chart.

Compared with the algorithm in this paper, the two algorithms in the comparison experiment lost some details of the texture and blurred the contour information of the blasthole, reducing the accuracy and quality of blasthole detection. The increased detail provided by our algorithm helps to reduce interference in situations with uneven lighting and dust, bringing higher accuracy. From the above results, it can be seen that the proposed method has better comprehensive performance and is more suitable for the detection of blasthole images in low-light conditions and under low contrast.

6. Conclusions

To address the issue of low accuracy in blasthole detection by traditional algorithms due to the complex background of surrounding rock, uneven illumination and coal dust interference in roadway working faces, this paper proposes a blasthole detection method called BFET-BD that cascades the BFET module and BD module. The BFET module mainly extracts the features of different convolution layers in CycleGAN for multi-scale feature fusion to generate the enhanced feature maps. It is worth noting that the enhanced feature maps are multi-channel features instead of three-channel images. The BD module is mainly constructed on a YOLOv7-based network for better detection. Our experimental results show that the proposed method effectively fuses multi-scale features and suppresses the interference of uneven illumination. It is able to improve the detection accuracy and reduce the false/missed detection rate. Compared with the methods of feeding blasthole images directly into object detection networks and feeding the enhanced blasthole image into YOLOv7, our method improves the detection accuracy by 5.34% and 2.38%, respectively. The proposed BFET-BD framework can promote intelligent blasting on the roadway working face.

The widespread application of artificial intelligence in engineering can gradually improve project operations, increase production efficiency, reduce labor costs and eliminate the need for on-site manual operation, thereby preventing workers from encountering dangerous situations. The limited number of experimental samples constrained the detection accuracy in our results. However, as we expand our sample size, we anticipate further improvements in the accuracy of our proposed detection network. Future research will focus on reducing the power consumption of the model, extracting blasthole position information and compressing the network model to achieve precise positioning and construct lightweight network models.

Author Contributions

Validation, Y.Q.; investigation, T.Y.; writing—original draft preparation, S.P.; writing—review and editing, Z.T. and Z.Y.; funding acquisition, Z.T. and Z.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No. 52074305 and 51874300) and the National Key R&D Program of China (No. 2021YFC2902103).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, G.; Ren, H.; Zhao, G.; Zhang, D.; Wen, Z.; Meng, L.; Gong, S. Research and practice of intelligent coal mine technology systems in China. Int. J. Coal Sci. Technol. 2022, 9, 24.

- Jian, G.; Kai, Z. Intelligent Mining Technology for an Underground Metal Mine Based on Unmanned Equipment. Engineering 2018, 4, 381–391.

- Wang, G. Accelerate the intelligent construction of coal mines and promote the high-quality development of the coal industry. China Coal 2021, 47, 2–10.

- van den Oord, A.; Kalchbrenner, N.; Kavukcuoglu, K. Pixel Recurrent Neural Networks. In Proceedings of the 33rd International Conference on Machine Learning, PMLR, New York, NY, USA, 20–22 June 2016; pp. 1747–1756.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. arXiv 2014, arXiv:1312.6114.

- Wang, K.; Gou, C.; Duan, Y.; Lin, Y.; Zheng, X.; Wang, F.-Y. Generative adversarial networks: Introduction and outlook. IEEE/CAA J. Autom. Sin. 2017, 4, 588–598.

- Chen, Z.; Jiang, C. Building occupancy modeling using generative adversarial network. Energy Build. 2018, 174, 372–379.

- Ravanbakhsh, S.; Lanusse, F.; Mandelbaum, R.; Schneider, J.G.; Poczos, B. Enabling Dark Energy Science with Deep Generative Models of Galaxy Images. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Tu, Y.; Wang, Y.L.J.; Kim, J. Semi-supervised learning with generative adversarial networks on digital signal modulation classification. Comput. Mater. Contin. 2018, 55, 243–254.

- Li, J.; Liang, X.; Wei, Y.; Xu, T.; Feng, J.; Yan, S. Perceptual Generative Adversarial Networks for Small Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; pp. 1951–1959.

- Bai, Y.; Zhang, Y.; Ding, M.; Ghanem, B. SOD-MTGAN: Small Object Detection via Multi-Task Generative Adversarial Network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 210–226.

- Sixt, L.; Wild, B.; Landgraf, T. RenderGAN: Generating Realistic Labeled Data. Front. Robot. AI 2018, 5, 66.

- Wang, X.; Shrivastava, A.; Gupta, A. A-Fast-RCNN: Hard Positive Generation via Adversary for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; pp. 3039–3048.

- Deng, C.; Wang, M.; Liu, L.; Liu, Y.; Jiang, Y. Extended Feature Pyramid Network for Small Object Detection. IEEE Trans. Multimed. 2022, 24, 1968–1979.

- Kim, S.; Jun, D.; Kim, B.-G.; Lee, H.; Rhee, E. Single Image Super-Resolution Method Using CNN-Based Lightweight Neural Networks. Appl. Sci. 2021, 11, 1092.

- Li, W.; Zhang, L.; Wu, C.; Niu, C. A new lightweight deep neural network for surface scratch detection. Int. J. Adv. Manuf. Technol. 2022, 123, 1999–2015.

- Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; pp. 936–944.

- Zhang, W. Research on Image Recognition Algorithm and Smooth Blasting Parameter Optimization of Rock Tunnel. Ph.D. Thesis, Shandong University, Jinan, China, 2019.

- Zhang, Y. Intelligent Explosive Filling Robot Blasthole Identification and Feasible Area Planning Related Technology Research. Master’s Thesis, Liaoning University of Science and Technology, Anshan, China, 2020.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2242–2251.

- Chen, H.; Jiang, D.; Sahli, H. Transformer Encoder With Multi-Modal Multi-Head Attention for Continuous Affect Recognition. IEEE Trans. Multimed. 2021, 23, 4171–4183.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Glenn, J.; Alex, S.; Jirka, B. ultralytics/yolov5 YOLOv5-P6 1280 models. AWS Supervise. ly YouTube Integr. 2021, 10.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).