Incremental Bag of Words with Gradient Orientation Histogram for Appearance-Based Loop Closure Detection

Abstract

1. Introduction

2. Related Work

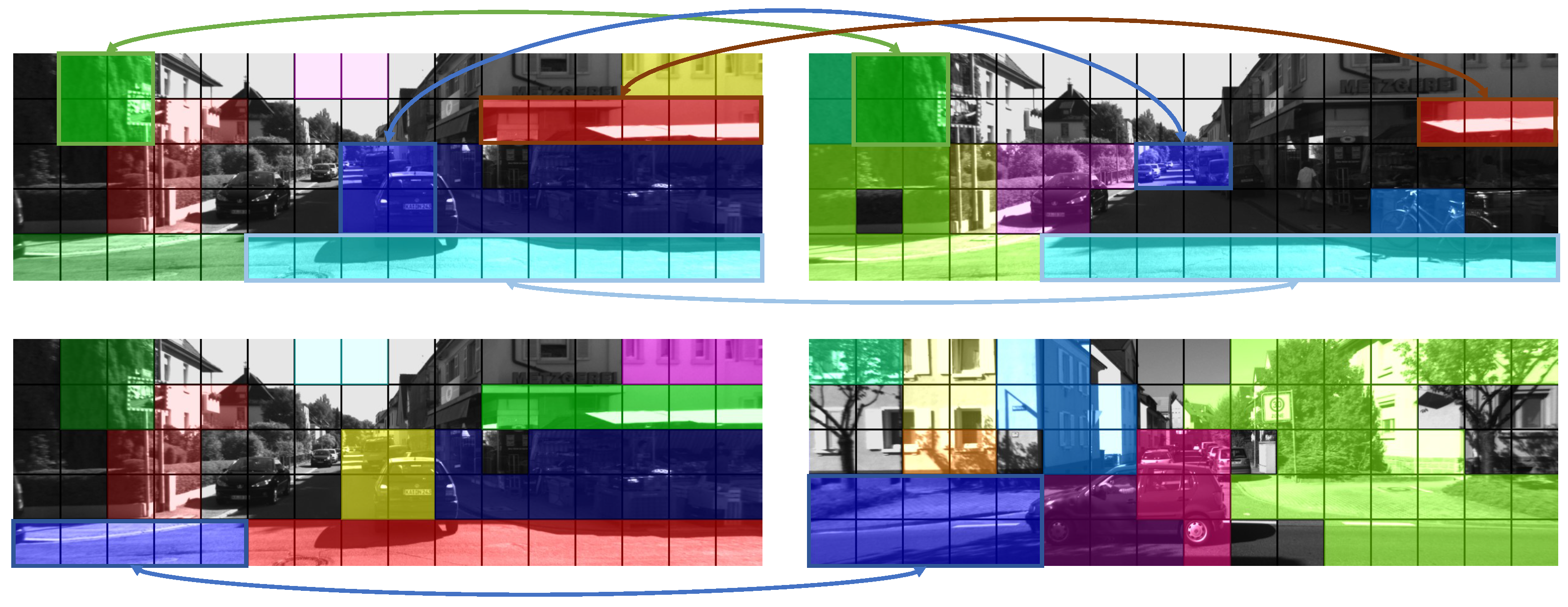

3. Proposed System

3.1. Gradient Orientation Histogram Feature Extraction

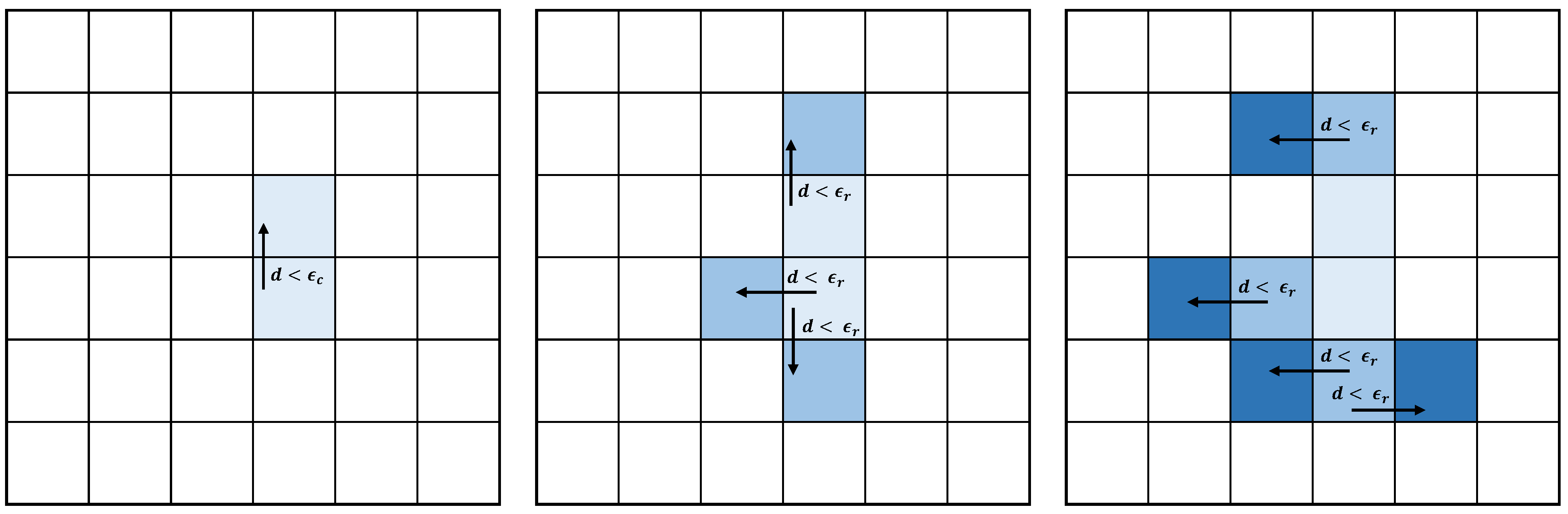

- Parameter Selection: Choose the core distance and the reachability distance.

- Core Object Identification: Identify objects whose distance to at least one of their neighboring objects (the image blocks directly above, below, left of, and right of the given block) is less than the core distance. These objects are called core objects.

- Reachable Object Identification: If the distance between an object and one of its neighboring objects is less than the reachability distance, the object is considered reachable from that neighboring object.

- Cluster Generation: Assign each core object and its reachable objects to a cluster. Whenever one of these reachable objects is itself a core object, add its reachable objects to the same cluster as well, and repeat until no new objects can be added.

- Output: Return the clusters.
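The clustering steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `cluster_blocks`, the use of Euclidean distance between per-block feature vectors (for example, gradient orientation histograms), the row-major order in which clusters are seeded, and the label `-1` for blocks that join no cluster are all choices made here for illustration.

```python
import math
from collections import deque


def cluster_blocks(features, core_dist, reach_dist):
    """Cluster a rows x cols grid of block feature vectors (hypothetical helper).

    Returns a grid of integer cluster labels; -1 marks blocks in no cluster.
    """
    rows, cols = len(features), len(features[0])

    def neighbours(r, c):
        # 4-connectivity: the blocks directly above, below, left and right.
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                yield nr, nc

    def dist(p, q):
        # Assumption: Euclidean distance between the two blocks' feature vectors.
        return math.dist(features[p[0]][p[1]], features[q[0]][q[1]])

    # Core objects: at least one neighbour closer than core_dist.
    core = [[any(dist((r, c), n) < core_dist for n in neighbours(r, c))
             for c in range(cols)] for r in range(rows)]

    labels = [[-1] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            # Each still-unassigned core object seeds a new cluster.
            if not core[r][c] or labels[r][c] != -1:
                continue
            labels[r][c] = next_label
            queue = deque([(r, c)])
            while queue:
                p = queue.popleft()
                for nr, nc in neighbours(*p):
                    # Reachable: an unassigned neighbour closer than reach_dist.
                    if labels[nr][nc] == -1 and dist(p, (nr, nc)) < reach_dist:
                        labels[nr][nc] = next_label
                        # Only core objects keep expanding the cluster.
                        if core[nr][nc]:
                            queue.append((nr, nc))
            next_label += 1
    return labels
```

For example, `cluster_blocks([[(0.0,), (0.1,)], [(5.0,), (5.1,)]], 0.5, 0.5)` places the two similar top blocks and the two similar bottom blocks in separate clusters and returns `[[0, 0], [1, 1]]`. Non-core blocks can join a cluster but do not propagate it, which mirrors the rule that only reachable core objects contribute further reachable objects.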

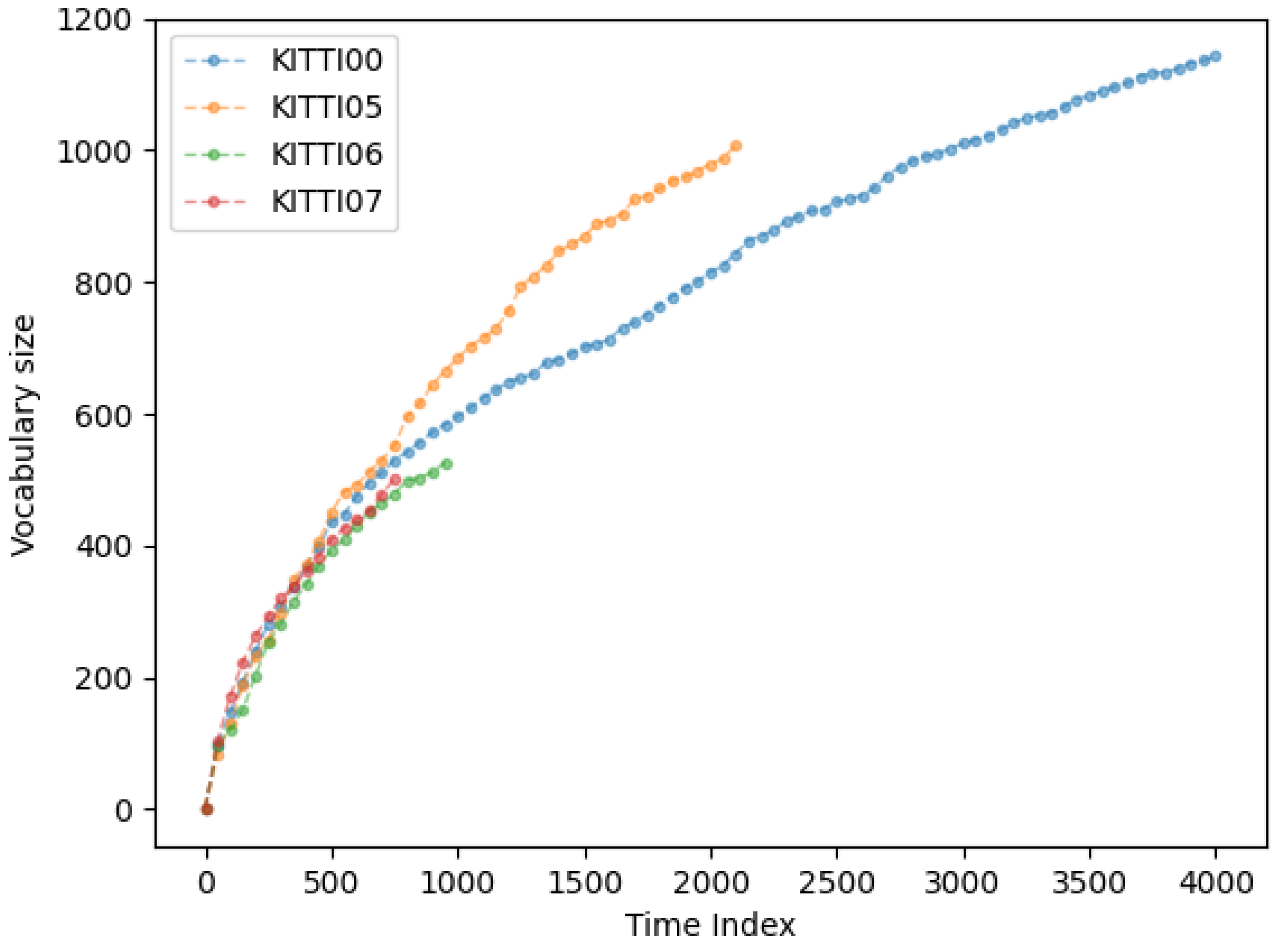

3.2. The Incremental BoW Model

3.3. Loop Closure Detection

3.3.1. Searching for Matching Candidates

3.3.2. Similarity Measure

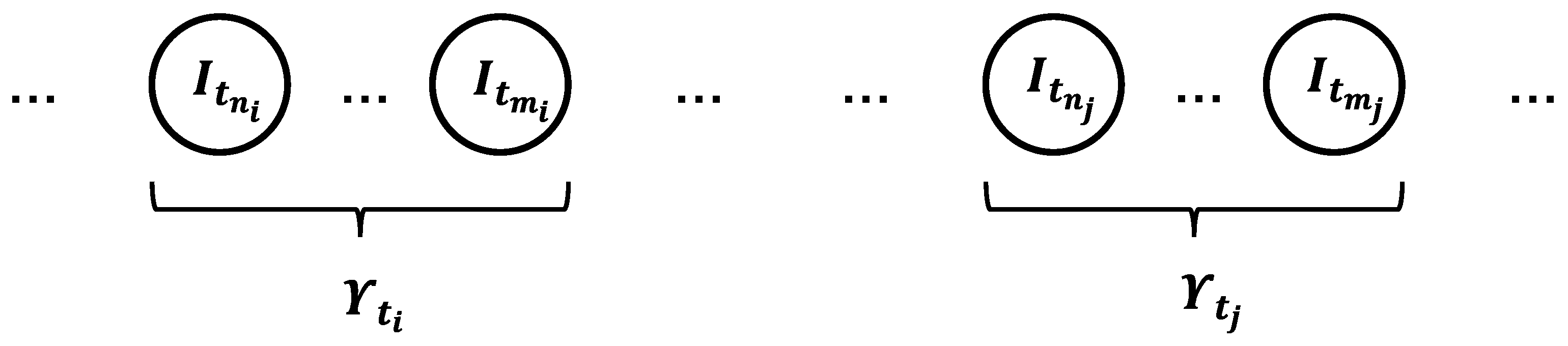

3.3.3. Islands Computation

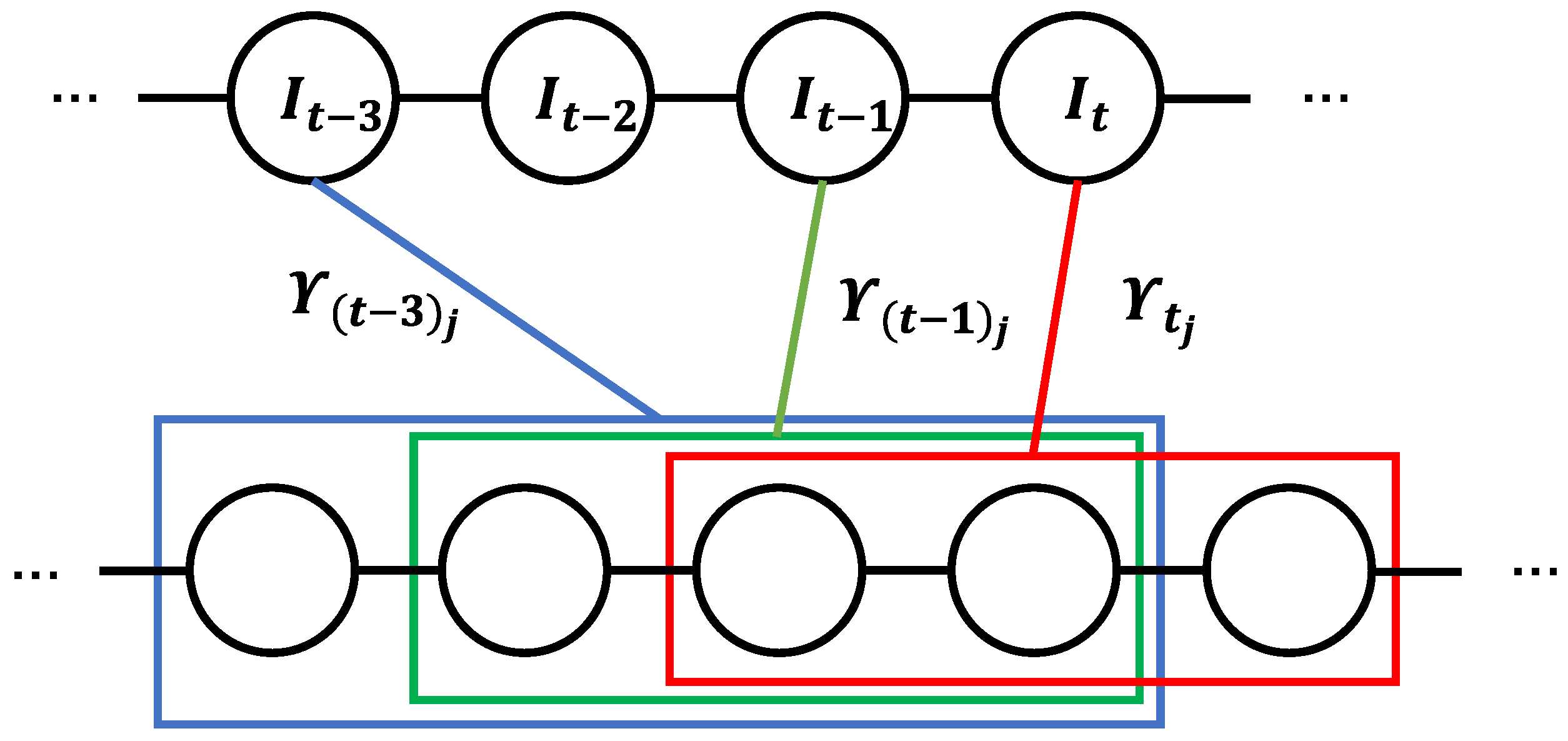

3.3.4. Temporal Consistency

4. Experimental Results

4.1. Methodology

4.2. Dataset

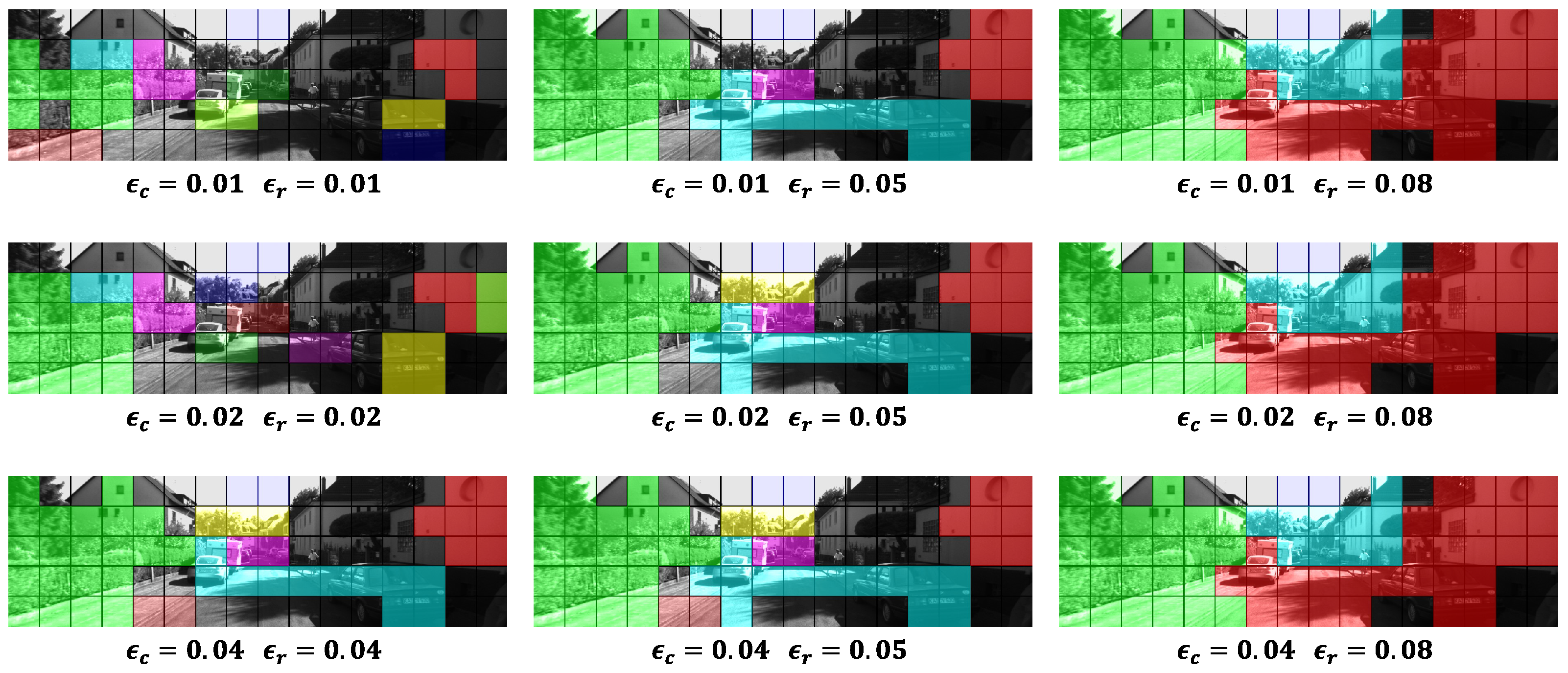

4.3. Feature Extraction

4.4. Feature Matching

4.5. General Performance

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Number of Images | Image Size (Height × Width) |
|---|---|---|
| KITTI 00 | 4541 | |
| KITTI 05 | 2761 | |
| KITTI 06 | 1101 | |
| KITTI 07 | 1101 | |

| Dataset | Image | Precision (%) | Recall (%) |
|---|---|---|---|
| KITTI 00 | 4007 | 93.52 | 76.53 |
| KITTI 05 | 2135 | 92.49 | 74.76 |
| KITTI 06 | 995 | 100 | 77.64 |
| KITTI 07 | 756 | 100 | 66.67 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Wei, W.; Zhu, H. Incremental Bag of Words with Gradient Orientation Histogram for Appearance-Based Loop Closure Detection. Appl. Sci. 2023, 13, 6481. https://doi.org/10.3390/app13116481
