Abstract

Deep learning-based image segmentation has become the mainstream approach for target detection and shape characterization in microscopic image analysis. However, the accuracy and generalizability of deep learning approaches are still limited by insufficient data, which results from the high cost in human and material resources of acquiring and annotating microscopic images. Image augmentation can increase the amount of training data in a short time through mathematical simulation and has become a necessary module for deep learning-based analysis of material microscopic images. In this work, we first review commonly used image augmentation methods and divide more than 60 basic image augmentation methods into eleven categories based on their implementation strategies. Second, we conduct experiments to verify the effectiveness of various basic image augmentation methods on segmentation tasks for two classical types of material microscopic images, using evaluation metrics suited to each task. We selected U-Net as the benchmark segmentation model because it is the classic and most widely used model in this field, and we use it to measure the improvement in segmentation performance brought by each augmentation method. Finally, we discuss the advantages and applicability of various image augmentation methods in material microscopic image segmentation. The evaluation experiments and conclusions in this work can serve as a guide for building intelligent modeling frameworks in the materials industry.

1. Introduction

The quantitative analysis of microstructures through microscopic image segmentation is essential for controlling the properties and performance of metals and alloys in various industrial applications [1,2]. In recent years, deep learning-based methods [3], which automatically learn powerful features from large amounts of microscopic image data, have gradually become mainstream in image processing [4,5,6]. Ma [7,8] used the DeepLab model [9] to extract Al-La phase microstructures from material microscopic images, and Liu [10] used a modified U-Net [11] to extract grain boundaries in serial sections. Such studies aim to provide substantial information for investigating the intrinsic relationships among material composition, preparation processes, and macroscopic properties [12], ultimately facilitating the optimal design of materials.

Unfortunately, the accuracy and generalization performance of deep learning models are limited by the lack of large training sets, because labeling material microscopic images is time-consuming; this is the so-called insufficient data (or small-sample learning) problem [13]. It remains a major challenge in deep learning, especially for microscopic image segmentation, which requires pixel-wise semantic labels for every image and therefore considerable human effort and expertise to trace object boundaries accurately. When large amounts of real data cannot be generated in a short period, researchers often use image augmentation to expand the data set used to train deep learning models.

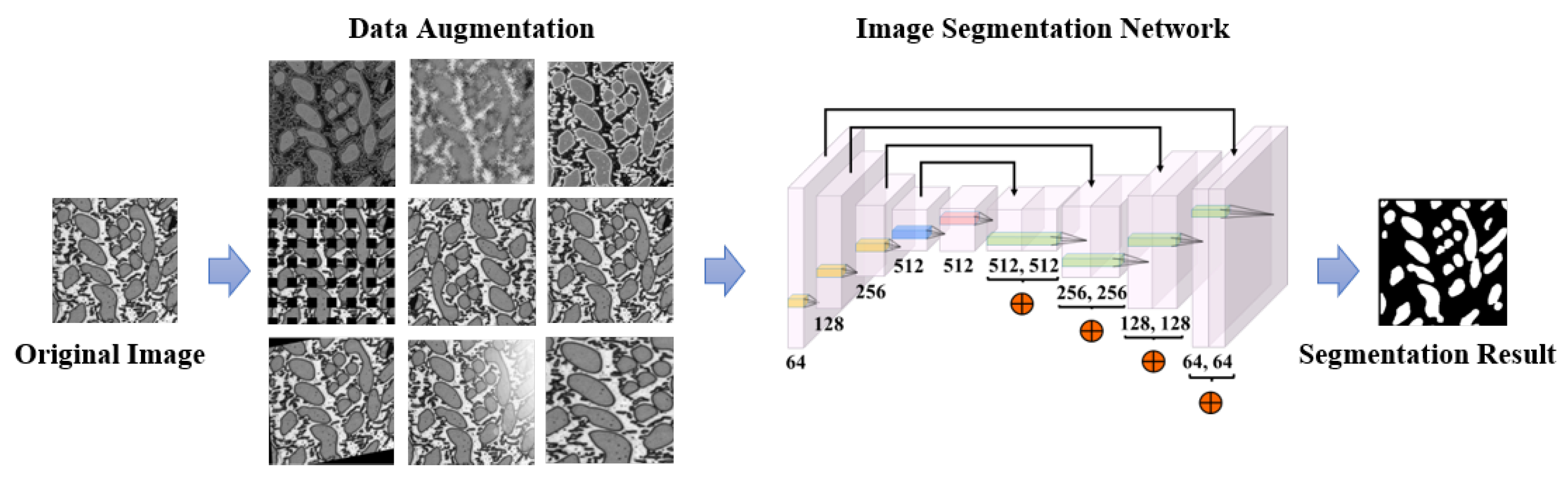

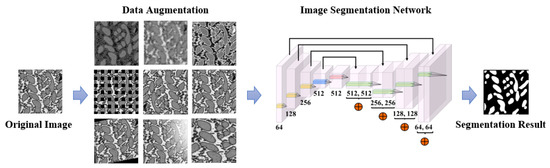

Data augmentation, also known as data enhancement, is a technique for increasing the quantity and diversity of limited data. Unlike other common methods for preventing overfitting, such as pretraining [14], dropout [15], batch normalization [16], and transfer learning [17], data augmentation addresses the root cause of overfitting, i.e., insufficient training samples, by mathematically simulating the variations that may occur in real data. As shown in Figure 1, it increases the size of the data set used to train machine learning models, helps the models extract more generalizable information and features from small data sets, and ultimately improves their precision and generalizability [18].

Figure 1.

Image data augmentation used in deep learning-based material microscopic image segmentation.

With the development of deep learning, many kinds of image augmentation methods have been proposed and are widely used in image analysis applications. However, little work has so far evaluated the performance of different image augmentation methods in material microscopic image segmentation tasks, in particular as a means of addressing the few-shot learning problem in microscopic image analysis [13].

In this work, we first review commonly used image augmentation methods and divide more than 60 basic image augmentation methods into eleven categories based on their implementation strategies. Second, we conduct experiments to verify the effectiveness of various basic image augmentation methods on segmentation tasks for two classical types of material microscopic images, using evaluation metrics suited to each task. Finally, we discuss the advantages and applicability of various image augmentation methods in material microscopic image segmentation. The evaluation experiments and conclusions in this work can serve as a guide for building intelligent modeling frameworks in the materials industry.

2. Method Review

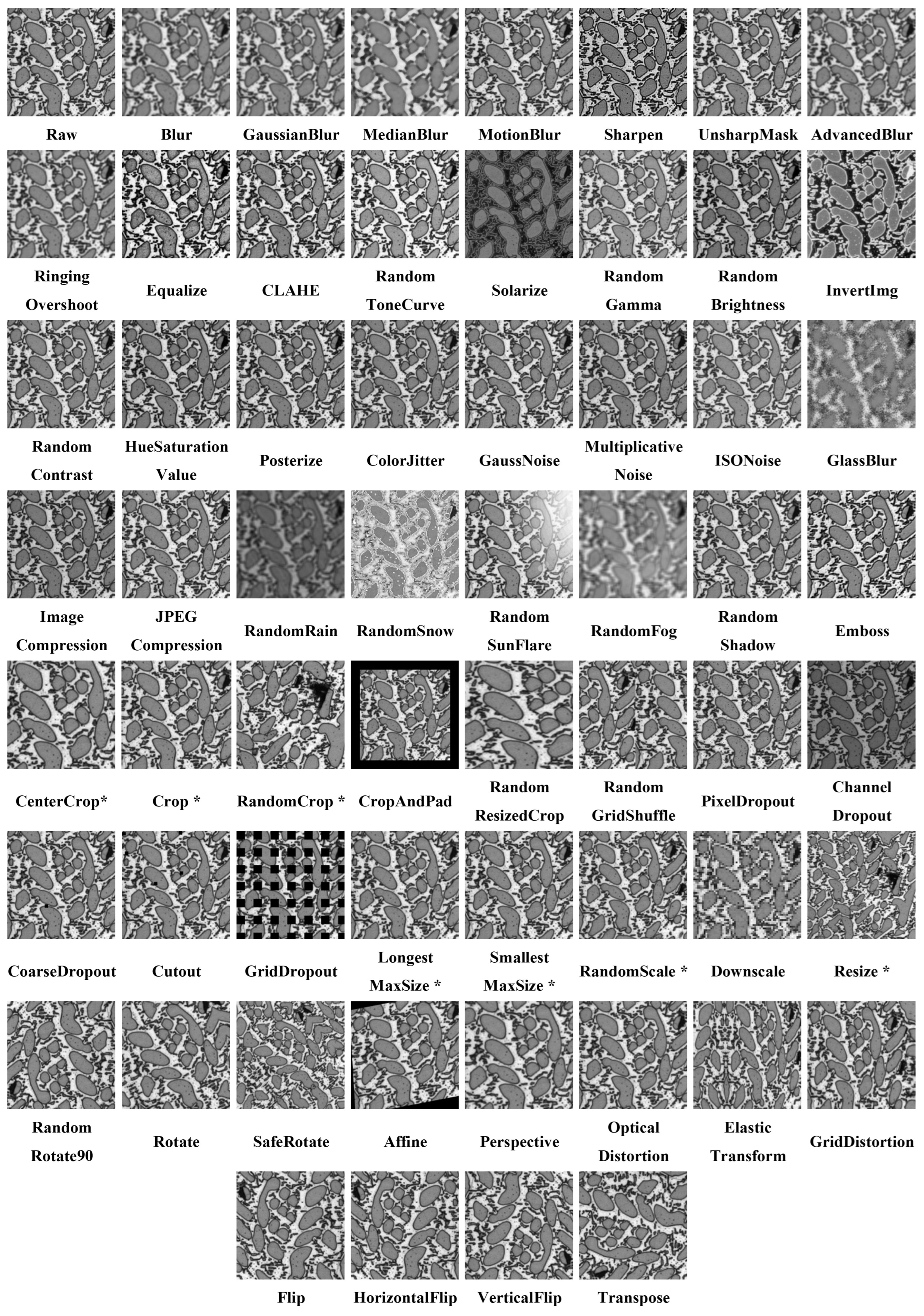

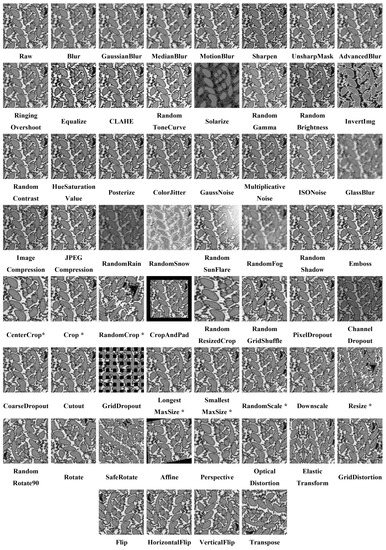

According to their computational complexity, image augmentation methods can be divided into two classes: basic image augmentation methods and deep learning-based image augmentation methods. Basic methods augment data with simple handcrafted mathematical transformations, whose visual effects are shown in Figure 2. Deep learning-based methods, in contrast, try to learn effective data augmentation strategies.

Figure 2.

Augmentation effects on material images (* indicates that the augmented image differs in size from the original and has been resized to the original size for visualization).

2.1. Basic Image Augmentation Methods

We further divide basic image augmentation methods into five primary categories: pixel transformation, region cropping/padding, dropout, geometric deformation, and domain adaptation. Pixel transformation methods are detailed in Table 1, and the other four categories are detailed in Table 2.

Table 1.

Classification overview of basic augmentation methods (1).

Table 2.

Classification overview of basic augmentation methods (2).

2.1.1. Pixel Transformation

Pixel transformation-based methods change the pixel values of the original image, for example its intensity, contrast, or transparency, to produce new samples that differ from the original image [19]. They mainly include convolutional filtering, histogram equalization, color/brightness/contrast transformation, and noise injection. Because they operate on a single image and are simple and computationally efficient, pixel transformation methods are widely used across image processing tasks.

- Filtering: A filter replaces each pixel value of the original image with a weighted sum of the values of its neighboring pixels [20], sliding a window over the entire image. By adjusting the size and weights of the window, filtering can produce various effects such as blurring, sharpening, and simple deformation. For instance, Gaussian blur convolves the original image with a Gaussian kernel of variable size, producing images with varying degrees of blurring. Median blur is a nonlinear variant that sets the gray value of each pixel to the median of the gray values in its neighborhood.

- Histogram equalization: A histogram records how frequently each gray value occurs in an image, so the gray histogram shows the overall gray range and the distribution of gray values [21]. Image contrast is the difference between the highest and lowest brightness in the image; the greater the difference, the greater the contrast. Histogram equalization nonlinearly stretches an image with an uneven gray distribution so that the gray levels within a given range occur with approximately equal frequency, which improves contrast. Ordinary histogram equalization does not account for the distribution of gray values in local areas, whereas contrast limited adaptive histogram equalization (CLAHE) [22] enhances local contrast while suppressing noise amplification; it has primarily been applied to medical image enhancement.

- Color/brightness/contrast transformation: These methods randomly alter an image's color space, brightness, contrast, and saturation to generate a new image distinct from the original [23]. Color images can be described in a variety of color spaces, including RGB, HSV [24], and YUV. The ColorJitter [25] method changes the color distribution of the original image according to user-defined values of three input parameters. Beyond color space, brightness, contrast, and saturation are important parameters that can be varied to change the imaging effect, for example with the RandomBrightness and InvertImg methods for changing image brightness and the RandomContrast method for changing image contrast.

- Noise injection [26]: In image segmentation, any information that interferes with extracting the target of interest is collectively called noise. Deliberately adding noise that may occur in real scenes exposes the segmentation model to such interference during training and improves its generalization. There are two basic ways to inject noise: adding randomly distributed noise or adding noise with specific effects. Gaussian noise, uniform noise, gamma noise, and salt-and-pepper noise are examples of randomly distributed noise [27]; for example, adding Gaussian noise perturbs the intensity of each pixel by a value sampled from a Gaussian distribution, simulating the interference of a real scene. Noise with specific effects includes physically simulated effects such as RandomRain, RandomSnow, and RandomSunFlare, as well as Emboss, Image Compression, Downscale, and Upscale. Image compression, for instance, produces images of varying quality by changing the compression quality parameter, which increases the diversity of the data set and improves the robustness and generalization of neural networks. In practice, the type of noise should be chosen carefully according to the conditions of the real scene.
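The pixel-level operations above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation used in the experiments: it assumes 8-bit grayscale images stored as lists of rows, and the function names (`median_blur`, `equalize_hist`, `brightness_contrast`, `add_gaussian_noise`) are hypothetical. Because these transforms change only intensities, the segmentation mask is left untouched.

```python
import random
from typing import List

Image = List[List[int]]  # hypothetical format: 8-bit grayscale image as a list of rows


def clip8(v: float) -> int:
    """Round a value and clip it to the 8-bit range [0, 255]."""
    return int(min(255, max(0, round(v))))


def median_blur(img: Image, k: int = 3) -> Image:
    """Set each pixel to the median of its k x k neighbourhood (edges replicated)."""
    h, w, r = len(img), len(img[0]), k // 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = sorted(
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-r, r + 1)
                for dx in range(-r, r + 1)
            )
            row.append(window[len(window) // 2])
        out.append(row)
    return out


def equalize_hist(img: Image, levels: int = 256) -> Image:
    """Global histogram equalization: map each gray level through the normalized CDF."""
    hist = [0] * levels
    for row in img:
        for v in row:
            hist[v] += 1
    cdf, total = [], 0
    for count in hist:
        total += count
        cdf.append(total)
    cdf_min = next(c for c in cdf if c > 0)
    denom = total - cdf_min
    if denom == 0:  # constant image: nothing to stretch
        return [row[:] for row in img]
    # Integer arithmetic with round-half-up keeps the lookup table exact.
    lut = [max(0, ((c - cdf_min) * (levels - 1) + denom // 2) // denom) for c in cdf]
    return [[lut[v] for v in row] for row in img]


def brightness_contrast(img: Image, alpha: float = 1.0, beta: float = 0.0) -> Image:
    """Linear intensity change: alpha scales contrast, beta shifts brightness."""
    return [[clip8(alpha * v + beta) for v in row] for row in img]


def add_gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add zero-mean Gaussian noise with standard deviation sigma to every pixel."""
    return [[clip8(v + rng.gauss(0.0, sigma)) for v in row] for row in img]
```

For example, `median_blur` removes an isolated bright pixel entirely, which is why median filtering is the usual choice against salt-and-pepper noise.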

2.1.2. Region Cropping/Padding

Region cropping/padding-based methods perform various crop and fill operations on the original image, which changes the content within the network's receptive field and improves the accuracy and generalization of deep learning models in segmenting regions of interest. Examples include CenterCrop, RandomCrop, RandomResizedCrop, and CropAndPad. These methods are widely used in image classification and object detection, where cropping out or removing parts of the targets to be classified or detected effectively improves the generalization ability of the model [28].
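For segmentation, the essential point is that the image and its mask are cropped or padded with identical offsets. A minimal sketch, assuming images and masks stored as lists of rows and using hypothetical helper names (`random_crop_pair`, `pad_pair`); the padded mask is filled with label 0, assumed here to be the background class.

```python
import random
from typing import List, Tuple

Grid = List[List[int]]  # image or mask stored as a list of rows


def random_crop_pair(img: Grid, mask: Grid, ch: int, cw: int,
                     rng: random.Random) -> Tuple[Grid, Grid]:
    """Crop the same randomly placed ch x cw window from an image and its mask."""
    h, w = len(img), len(img[0])
    if ch > h or cw > w:
        raise ValueError("crop size exceeds image size")
    top = rng.randint(0, h - ch)    # randint is inclusive on both ends
    left = rng.randint(0, w - cw)

    def crop(g: Grid) -> Grid:
        return [row[left:left + cw] for row in g[top:top + ch]]

    return crop(img), crop(mask)


def pad_pair(img: Grid, mask: Grid, pad: int,
             img_fill: int = 0, mask_fill: int = 0) -> Tuple[Grid, Grid]:
    """Pad image and mask by `pad` pixels on every side with constant values."""
    def pad_grid(g: Grid, fill: int) -> Grid:
        width = len(g[0]) + 2 * pad
        body = [[fill] * pad + row + [fill] * pad for row in g]
        return ([[fill] * width for _ in range(pad)] + body
                + [[fill] * width for _ in range(pad)])

    return pad_grid(img, img_fill), pad_grid(mask, mask_fill)
```

Chaining the two gives a CropAndPad-style transform; because both helpers act on image and mask together, the pixel-to-label correspondence is preserved.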

2.1.3. Dropout

Dropout [29] augments data by randomly discarding image channels or, in various ways, image regions [30]. A discarded region has its pixel values set to the background value or zero. This is analogous to randomly zeroing the activations of some neurons in a neural network: it regularizes the model and forces it to learn more robust features, improving the deep network's ability to separate foreground from background. Examples include ChannelDropout, CoarseDropout, and GridDropout.
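A CoarseDropout-style transform can be sketched as follows: a few rectangular holes are placed at random and filled with a constant. This is a minimal illustration under assumptions (hypothetical function name, fill value 0, holes allowed to overlap). In this sketch the mask is deliberately left unchanged, so the network must infer the hidden structure from context; some implementations may also drop the corresponding mask region.

```python
import random
from typing import List

Image = List[List[int]]


def coarse_dropout(img: Image, n_holes: int, hole_h: int, hole_w: int,
                   rng: random.Random, fill: int = 0) -> Image:
    """Return a copy of img with n_holes random hole_h x hole_w rectangles set to fill."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]  # never modify the caller's image
    for _ in range(n_holes):
        top = rng.randint(0, max(0, h - hole_h))
        left = rng.randint(0, max(0, w - hole_w))
        for y in range(top, min(top + hole_h, h)):
            for x in range(left, min(left + hole_w, w)):
                out[y][x] = fill
    return out
```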

2.1.4. Geometric Deformation

Geometric deformation suits segmentation tasks in which the region of interest may vary in position and shape [31]. Simple geometric transformations such as affine or perspective transforms change the position and shape of the foreground, which helps the segmentation network learn what the segmentation targets actually are. Whenever the original image is transformed, the corresponding ground truth mask must be transformed in exactly the same way. Examples include RandomScale, Affine, ElasticTransform, Perspective, and Flip. Because microscopic images can be acquired at different magnifications and orientations, geometric deformation is frequently used in microscopic image segmentation.
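Because the ground truth must follow every geometric change, the transform parameters are drawn once and applied to both arrays. A minimal sketch restricted to flips and 90° rotations (the eight symmetries of a square grid), assuming list-of-rows images and hypothetical helper names. Affine, perspective, and elastic transforms follow the same pattern but need interpolation, and masks should then use nearest-neighbour interpolation so that labels stay discrete.

```python
import random
from typing import List, Tuple

Grid = List[List[int]]


def hflip(g: Grid) -> Grid:
    """Mirror left-right."""
    return [row[::-1] for row in g]


def rot90(g: Grid) -> Grid:
    """Rotate 90 degrees clockwise."""
    return [list(row) for row in zip(*g[::-1])]


def random_geometric_pair(img: Grid, mask: Grid,
                          rng: random.Random) -> Tuple[Grid, Grid]:
    """Apply one random flip/rotation, drawn once, to both image and mask."""
    k = rng.randrange(4)         # number of quarter turns
    flip = rng.random() < 0.5    # whether to mirror afterwards
    out = []
    for g in (img, mask):
        for _ in range(k):
            g = rot90(g)
        out.append(hflip(g) if flip else g)
    return out[0], out[1]
```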

2.1.5. Domain Adaptation

In practice, the training data used for model learning and the test data used for inference often have different distributions, and any shift in the data distribution after training reduces the model's performance at test time. Domain adaptation [32] reduces the distribution differences between domains. To reduce the difference between source and target distributions, Yang et al. [33] proposed an unsupervised domain adaptation method based on the Fourier transform and its inverse. By swapping the low-frequency amplitude spectra of images from different distributions, this method achieves style transfer-like effects simply by aligning low-level statistics of the data distributions. Yaras et al. [34] proposed randomized histogram matching, which matches the histogram of each training image to that of an unlabeled target-domain image so as to approximate the spectral shift between arbitrary source and target domains. Pixel distribution adaptation [35] augments the target domain by fitting a simple transformation, such as principal component analysis (PCA) [36], StandardScaler [37], or MinMaxScaler [38], between target-domain and reference-domain images.
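Histogram matching, the building block of randomized histogram matching, maps each gray level of a source image to the reference level with the same cumulative frequency. The sketch below assumes 8-bit grayscale list-of-rows images and uses hypothetical names; the randomized variant is shown only as drawing a random reference from a pool of unlabeled target-domain images, which is a simplification rather than a reproduction of [34]. Only the image is transformed; the mask is unchanged.

```python
import random
from typing import List, Sequence

Image = List[List[int]]


def normalized_cdf(img: Image, levels: int = 256) -> List[float]:
    """Cumulative fraction of pixels with gray value <= each level."""
    hist = [0] * levels
    for row in img:
        for v in row:
            hist[v] += 1
    n = sum(hist)
    cdf, acc = [], 0
    for count in hist:
        acc += count
        cdf.append(acc / n)
    return cdf


def match_histogram(src: Image, ref: Image, levels: int = 256) -> Image:
    """Map src gray levels so that their distribution follows that of ref."""
    src_cdf, ref_cdf = normalized_cdf(src, levels), normalized_cdf(ref, levels)
    lut, j = [], 0
    for v in range(levels):
        # Smallest reference level whose CDF reaches the source CDF at v
        # (both CDFs are non-decreasing, so j only moves forward).
        while j < levels - 1 and ref_cdf[j] < src_cdf[v]:
            j += 1
        lut.append(j)
    return [[lut[v] for v in row] for row in src]


def random_histogram_matching(src: Image, target_pool: Sequence[Image],
                              rng: random.Random) -> Image:
    """Match src to a randomly chosen unlabeled target-domain image."""
    return match_histogram(src, rng.choice(target_pool))
```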

2.2. Deep Learning-Based Image Augmentation Methods

This section introduces three major categories of deep learning-based image augmentation methods, which are detailed in Table 3.

Table 3.

Classification overview of deep learning-based image augmentation methods.

2.2.1. AutoAugment

Combining several basic data augmentation methods can potentially improve the accuracy of deep neural networks. However, the gains from individual methods are not additive, so combining them manually is time-consuming and often ineffective. AutoAugment [39] addresses this issue by defining a search space of multiple basic augmentation operations and automatically searching for the optimal augmentation policy for the original data with a reinforcement learning-based search algorithm. It achieved the highest accuracy at the time on image classification data sets including CIFAR-10 [40] and ImageNet [41], and its learned policies were shown experimentally to transfer well across data sets.

Nevertheless, because some steps of its search process are time-consuming, AutoAugment requires 5000 GPU hours to find the most suitable policy on a CIFAR-10 subset of 4000 images. To tackle this bottleneck, Lim et al. [42] proposed Fast AutoAugment, which uses a more efficient density matching-based search algorithm and reduced the search time on CIFAR-10 to 3.5 GPU hours. In 2020, Hataya et al. [43] proposed Faster AutoAugment, which employs a differentiable search algorithm to further improve search efficiency.

Beyond faster search algorithms, the AutoAugment team proposed RandAugment [44], which instead simplifies the search space. Because the optimal augmentation strategy depends on the size of the data set, and Ho et al. [45] also found that the optimal augmentation magnitude tends to increase over the course of training, RandAugment adopts a single fixed global magnitude for all operations rather than searching a separate probability and magnitude for each one. This reduces the search space by several orders of magnitude and substantially increases search efficiency while preserving the original accuracy improvement.
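RandAugment's reduced search space can be illustrated with a short sketch: a policy is just two integers, the number of operations N applied in sequence and a single global magnitude M, and each operation is drawn uniformly at random. The operation list, the 0 to 10 magnitude scale, and the per-operation scaling below are assumptions for illustration. Only intensity operations are included, since geometric operations would also have to be applied to the segmentation mask.

```python
import random
from typing import Callable, Dict, List, Tuple

Image = List[List[int]]
MAX_M = 10  # assumed magnitude scale 0..MAX_M


def _clip(v: float) -> int:
    return int(min(255, max(0, round(v))))


def identity(img: Image, m: int) -> Image:
    return [row[:] for row in img]


def brightness(img: Image, m: int) -> Image:
    shift = 60 * m / MAX_M  # stronger magnitude -> larger brightness shift
    return [[_clip(v + shift) for v in row] for row in img]


def contrast(img: Image, m: int) -> Image:
    pixels = [v for row in img for v in row]
    mean = sum(pixels) / len(pixels)
    factor = 1 + m / MAX_M  # stretch intensities around the mean
    return [[_clip(mean + (v - mean) * factor) for v in row] for row in img]


def posterize(img: Image, m: int) -> Image:
    shift = int(4 * m / MAX_M)  # drop up to 4 low-order bits
    return [[(v >> shift) << shift for v in row] for row in img]


def solarize(img: Image, m: int) -> Image:
    threshold = 256 - 256 * m / MAX_M  # invert pixels at or above the threshold
    return [[v if v < threshold else 255 - v for v in row] for row in img]


OPS: Dict[str, Callable[[Image, int], Image]] = {
    "identity": identity, "brightness": brightness, "contrast": contrast,
    "posterize": posterize, "solarize": solarize,
}


def rand_augment(img: Image, n: int, m: int,
                 rng: random.Random) -> Tuple[Image, List[str]]:
    """Apply n operations, chosen uniformly with replacement, all at magnitude m."""
    names = rng.choices(list(OPS), k=n)
    for name in names:
        img = OPS[name](img, m)
    return img, names
```

Tuning then reduces to a small grid search over (N, M), for example `rand_augment(image, 2, 9, random.Random(0))`, instead of a reinforcement learning search over per-operation probabilities and magnitudes.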

In 2020, Greedy AutoAugment [46] used a greedy search algorithm to achieve high efficiency, and UniformAugment [47] showed that uniformly sampling from the continuous space of augmentation transforms is sufficient to train sophisticated models, under the assumption that the augmentation space is approximately distribution invariant. This avoids a large number of unnecessary searches.

Although the AutoAugment family improves efficiency and accuracy compared with basic methods, augmentation can introduce a distribution shift that degrades performance on the original data at inference. To alleviate this problem, Gong et al. [48] proposed KeepAugment, which uses a saliency map to detect important regions of the original image and preserves them during augmentation, bringing augmented images closer to the distribution of real data. In 2022, Zheng et al. [49] proposed Deep AutoAugment (Deep AA), a fully automated data augmentation search approach. Experiments showed that Deep AA can learn an augmentation policy even without default augmentations and delivers stable performance.

2.2.2. Image Generation for Image Augmentation

The obvious drawback of basic augmentation and AutoAugment methods is that they learn only isolated information from a small amount of training data, rather than the fundamental patterns of the data distribution across the whole data set. Nevertheless, basic image augmentation methods can still introduce considerable variation into the training data [50].

Since 2014, generative adversarial networks (GANs) [51] have attracted increasing attention in deep learning. Radford et al. [52] combined convolutional neural networks (CNNs) with GANs to obtain deep convolutional generative adversarial networks (DCGAN), which led to an explosion of realistic image generation. Using synthetic images to enlarge the training data is an innovative approach to image data augmentation: in theory, adversarial training can generate synthetic images whose distribution closely resembles that of real image data [53], which is expected to resolve the overfitting caused by a lack of training samples. GANs can efficiently generate synthetic images that meet specific requirements and integrate them with real training data to augment the data set.
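The adversarial training described above is usually written as the minimax objective of the original GAN [51], in which a generator $G$ maps noise $z \sim p_z$ to images and a discriminator $D$ estimates the probability that an image is real; at the optimum of this game, the distribution of generated images matches the data distribution $p_{\text{data}}$:

$$\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]$$

Conditional variants such as CGAN condition both networks on extra information $y$, replacing $D(x)$ and $G(z)$ with $D(x \mid y)$ and $G(z \mid y)$.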

A limitation of the original GAN is that synthetic images are sampled from random noise, so the generated images cannot be tied to specific real images; in other words, the user has no control over the generation process. Mirza and Osindero [54] proposed conditional generative adversarial nets (CGAN), which condition both the generator and the discriminator on additional information such as class labels or images. In 2017, Isola et al. [55] proposed Pix2Pix, the first general-purpose CGAN framework for image-to-image translation, which learns the mapping between aligned input and output images. Later, Zhu et al. [56] proposed cycle-consistent adversarial networks (CycleGAN), which remove Pix2Pix's requirement for precisely paired training samples and enable training with unpaired samples, expanding the potential use of GANs across domains. Liu et al. [57] suggested supplementing segmentation training with images generated by Pix2PixHD [58], whose inputs are semantic label maps that can be reconstructed manually, so that each synthetic image comes with a segmentation label.

For the joint generation of paired images and masks for image segmentation, Pandey et al. [59] proposed a two-stage GAN framework. Rather than combining synthetic and real images for model training, Li, Bastiani, et al. [60] proposed an approach that improves downstream MRI brain image segmentation by integrating a task-guided branch at the end of the generator with a learned low-dimensional latent space. To address unsupervised part segmentation, He et al. [61] used GANSeg to generate images conditioned on latent masks, so that masked images can be synthesized without limit to supplement the training data. Ma et al. [13] proposed a style transfer-based image augmentation technique that transfers the style of real images to simulated images, producing synthetic images that expand the data set and bridging simulated data and the predictive analysis of real data.

However, none of the above methods can guarantee that the generated images are consistent with their underlying structural information. Furthermore, training GAN-based image augmentation methods itself requires a substantial amount of data, so the lack of sufficient data also hinders the development of deep learning-based data augmentation methods.

2.2.3. Learned Transformations Method

Zhao, Balakrishnan, et al. [62] proposed a learned transformation method for image generation that captures image transformations such as nonlinear deformations and variations in imaging intensity. The model learns spatial and appearance transformations separately from a small number of images. To automatically generate medical images and corresponding masks for training a supervised segmentation network, transformations sampled from the two learned models are applied to annotated images; this approach outperformed previous one-shot segmentation methods [63].
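The synthesis step can be sketched as follows, assuming the two models have already produced a displacement field (spatial transform) and an additive intensity field (appearance transform). Both fields are hypothetical inputs here rather than outputs of the learned models in [62], and the order of the two steps is a simplification. The key property is that the label map follows only the spatial transform, while the image receives both.

```python
from typing import List, Tuple

Grid = List[List[int]]
Flow = List[List[Tuple[float, float]]]  # per-pixel (dy, dx) displacement


def warp_nearest(grid: Grid, flow: Flow) -> Grid:
    """Backward warp: out[y][x] = grid[y + dy][x + dx], nearest neighbour, clamped."""
    h, w = len(grid), len(grid[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dy, dx = flow[y][x]
            sy = min(max(round(y + dy), 0), h - 1)
            sx = min(max(round(x + dx), 0), w - 1)
            row.append(grid[sy][sx])
        out.append(row)
    return out


def synthesize_pair(atlas_img: Grid, atlas_label: Grid, flow: Flow,
                    appearance: List[List[float]]) -> Tuple[Grid, Grid]:
    """Create a new (image, label) pair from an annotated atlas image."""
    warped_img = warp_nearest(atlas_img, flow)
    new_label = warp_nearest(atlas_label, flow)  # nearest neighbour keeps labels discrete
    # Appearance change (intensity offset) is applied to the image only.
    new_img = [[int(min(255, max(0, round(v + a)))) for v, a in zip(r, ar)]
               for r, ar in zip(warped_img, appearance)]
    return new_img, new_label
```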

3. Results

In this section, we use two common material microscopic image segmentation tasks as application scenarios and evaluate the performance of various basic image augmentation methods when each is used with the same segmentation algorithm during training. Specifically, we evaluate segmentation performance in terms of accuracy, robustness, and efficiency under these experimental conditions. The results provide insights into how image augmentation methods affect segmentation outcomes and can help improve segmentation performance in materials microscopy.

3.1. Data Sets

Information on the two target data sets, austenite and Al-La alloy, is shown in Table 4. In the austenite images, the foreground grain boundary pixels are far fewer than the background pixels, making this a class-imbalanced segmentation task. In the Al-La alloy images, the foreground and background regions contain similar numbers of pixels, making this a class-balanced segmentation task.

Table 4.

The description of the material microscopy image data set.

3.1.1. Austenite

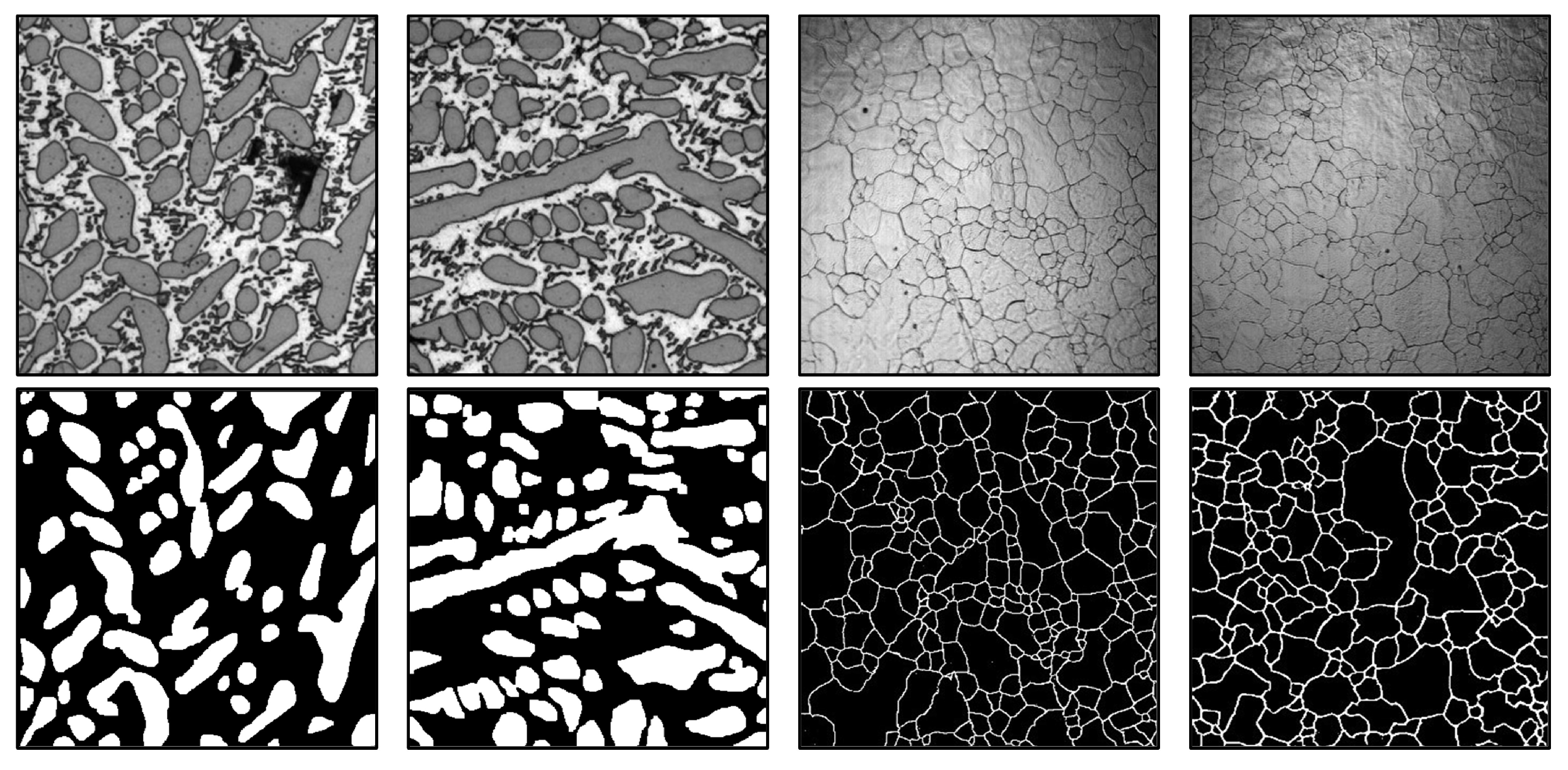

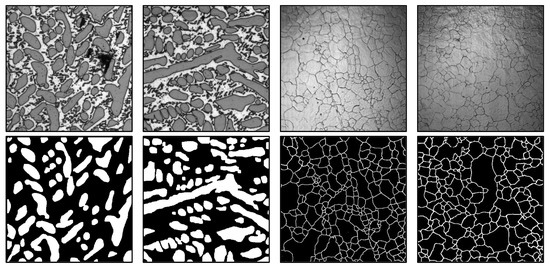

The austenite data set contains 223 optical images with a resolution of pixels, from which we randomly selected 40 image–label pairs for the experiments. The two semantic categories (grain and grain boundary) in the ground truth were manually labeled by materials scientists, with grain boundaries represented by 1 and grain regions by 0. The austenite images and masks are shown in Figure 3.

Figure 3.

The raw images and ground truth of Al-La alloy and austenite. The first row shows the raw images and the second row shows the ground truth. The first two columns are Al-La alloy images and the last two columns are austenite.

3.1.2. Al-La Alloy

During the solidification of liquid metal, uneven growth of nuclei forms aluminum–lanthanum dendrites. Unlike in the formation of other metallic materials, gaps tend to develop between early- and later-formed dendrites. The ground truth is a binary image in which 1 represents dendrites and 0 represents the background without dendrites. The original images and masks are shown in Figure 3.

3.2. Experimental Settings

We used the deep learning-based segmentation model U-Net [11] as the benchmark model for evaluating different image augmentation methods. U-Net is a deep convolutional neural network with an encoder–decoder structure for semantic segmentation; its skip connections pass low-level features directly from the encoder to the decoder, which improves segmentation accuracy and avoids information loss. The original data set was divided into training, validation, and test sets at a ratio of 6:2:2. Augmentation was applied only to the training data, and each augmentation method generated twice as many images as the total amount of nontest data. For a fair comparison, the U-Net models trained with different augmentation methods were evaluated on the same test set. U-Net was trained on the training set and validated on the validation set at each iteration, and the parameters with the lowest validation loss were selected for evaluation on the test set. To reduce the variance of single experiments on a small data set, we applied 5-fold cross-validation and report the averaged results. During training, we set the batch size to 2 with an initial learning rate of for 50 epochs using the Adam optimizer [64]. All experiments were performed on a workstation equipped with an NVIDIA Tesla V100 (32 GB).
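One plausible way to combine the 6:2:2 split with 5-fold cross-validation is sketched below: each fold holds out a different 20% of the images for testing and splits the remaining 80% into training and validation at 3:1 (that is, 6:2 of the whole). This reading of the protocol, the shuffling seed, and the function name are assumptions rather than the exact procedure used here.

```python
import random
from typing import List, Tuple

Split = Tuple[List[int], List[int], List[int]]  # (train, val, test) indices


def five_fold_622(n_items: int, seed: int = 0) -> List[Split]:
    """Five (train, val, test) splits; each item is in the test set exactly once."""
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    folds = [idx[k::5] for k in range(5)]
    splits = []
    for k in range(5):
        test = folds[k]
        rest = [i for j, fold in enumerate(folds) if j != k for i in fold]
        n_val = len(rest) // 4  # 2 of the remaining 8 parts -> overall 6:2:2
        splits.append((rest[n_val:], rest[:n_val], test))
    return splits
```

With the 40 austenite image–label pairs, each fold yields 24 training, 8 validation, and 8 test images; augmentation would then be applied to the training indices only.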

3.3. Evaluation Metrics

3.3.1. Metric for Austenite

Accurately identifying the individual grain regions in austenite microscopic images is difficult because the grains have similar textures and are densely packed, which makes austenite image segmentation a major challenge.

Variation of information (VI) [65] is a widely used evaluation metric in microscopic image segmentation. VI consists of two parts, the oversegmentation error and the undersegmentation error, which together give the total segmentation error; the smaller the VI, the better the segmentation. The key to computing VI is the conditional entropy between the predicted labels and the ground truth. For a predicted segmentation $S$ and ground truth $G$, VI is calculated as shown in Equation (1):

$$\mathrm{VI}(S, G) = H(S \mid G) + H(G \mid S) \tag{1}$$

where $H(S \mid G)$ is the oversegmentation error and $H(G \mid S)$ is the undersegmentation error.
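VI can be computed directly from the joint label counts of two segmentations, as VI(S, G) = H(S | G) + H(G | S). A minimal sketch, assuming both inputs are region label maps of equal size (for austenite, grain regions would first be labelled as connected components of the non-boundary pixels, which is not shown) and using the natural logarithm; some works use base 2 instead. The function name is hypothetical.

```python
import math
from collections import Counter
from typing import List, Tuple

LabelMap = List[List[int]]


def variation_of_information(seg: LabelMap, gt: LabelMap) -> Tuple[float, float, float]:
    """Return (H(S|G), H(G|S), VI) for predicted labels S and ground truth G."""
    s = [v for row in seg for v in row]
    g = [v for row in gt for v in row]
    n = len(s)
    joint = Counter(zip(s, g))
    count_s, count_g = Counter(s), Counter(g)
    h_s_given_g = 0.0  # oversegmentation: one true region split into several
    h_g_given_s = 0.0  # undersegmentation: several true regions merged into one
    for (a, b), c in joint.items():
        p_ab = c / n
        h_s_given_g -= p_ab * math.log(c / count_g[b])  # p(a|b) = c / count_g[b]
        h_g_given_s -= p_ab * math.log(c / count_s[a])  # p(b|a) = c / count_s[a]
    return h_s_given_g, h_g_given_s, h_s_given_g + h_g_given_s
```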

3.3.2. Metric for Al-La Alloy

The segmentation target for the Al-La alloy is the dendritic region. In contrast to the austenite images, the foreground and background proportions in the Al-La alloy images are approximately equal, and the foreground targets are interspersed with background areas, which makes the individual regions easier to separate. We therefore use intersection over union (IoU), a widely used region-overlap metric [66]. IoU is the ratio of the intersection to the union of the predicted and ground truth foreground regions; the larger the IoU, the better the segmentation, and a value of 1 indicates that the two regions are identical. For a predicted foreground region $P$ and ground truth foreground region $G$, IoU is calculated as shown in Equation (2):

$$\mathrm{IoU}(P, G) = \frac{|P \cap G|}{|P \cup G|} \tag{2}$$
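For binary masks such as the Al-La dendrite labels, IoU reduces to counting pixels. A minimal sketch with a hypothetical function name; returning 1.0 when neither mask contains foreground is an assumed convention, since the ratio is undefined in that case.

```python
from typing import List

Mask = List[List[int]]


def iou(pred: Mask, gt: Mask, positive: int = 1) -> float:
    """|P ∩ G| / |P ∪ G| over pixels labelled `positive` in each mask."""
    inter = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            in_p, in_g = p == positive, g == positive
            inter += in_p and in_g
            union += in_p or in_g
    return 1.0 if union == 0 else inter / union
```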

3.4. Discussions

Data augmentation can be performed online or offline. The key advantage of online augmentation is that it does not require storing additional synthesized data, which saves storage space and increases flexibility. However, in some cases the model may not converge well when images change substantially after augmentation; offline augmentation is a viable alternative in such scenarios. Its benefit is that the augmented data can be inspected visually before model training, allowing developers to control the effect of the augmented data. Its drawbacks are that it requires more storage space and is less flexible. All methods employed in this paper are applied offline.
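The difference between the two modes can be sketched with a hypothetical paired augmentation function: offline augmentation materializes augmented copies once before training, whereas online augmentation draws a fresh augmentation every time a sample is visited. The helper names and the `copies` parameter are assumptions for illustration.

```python
import random
from typing import Callable, Iterator, List, Tuple

Pair = Tuple[List[List[int]], List[List[int]]]  # (image, mask)
Augment = Callable[[Pair, random.Random], Pair]


def random_hflip_pair(pair: Pair, rng: random.Random) -> Pair:
    """Example augmentation: mirror image and mask together with probability 0.5."""
    img, mask = pair
    if rng.random() < 0.5:
        return [r[::-1] for r in img], [r[::-1] for r in mask]
    return img, mask


def offline_augment(data: List[Pair], augment: Augment, copies: int,
                    seed: int = 0) -> List[Pair]:
    """Generate `copies` augmented versions of every pair once; store them with the originals."""
    rng = random.Random(seed)
    return list(data) + [augment(p, rng) for _ in range(copies) for p in data]


def online_stream(data: List[Pair], augment: Augment, epochs: int,
                  seed: int = 0) -> Iterator[Pair]:
    """Yield a freshly augmented pair each time a sample is visited; nothing is stored."""
    rng = random.Random(seed)
    for _ in range(epochs):
        for p in data:
            yield augment(p, rng)
```

The offline list can be inspected before training, matching the advantage described above, at the cost of storing every augmented copy.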

As shown in Table 5 and Table 6, we evaluated the segmentation performance of different image augmentation methods on the austenite and Al-La alloy data sets. Compared with the other methods, geometric deformation produces the greatest benefit on both data sets. There are two possible explanations. (1) The microstructure itself is rotationally invariant, and it can be distorted and deformed during the preparation process. Geometric deformation simulates changes due to rotation, flipping, and distortion, which closely resemble real structural changes, and therefore has a considerable positive effect on deep learning-based segmentation. (2) Convolution is not rotation-invariant, and augmentation by geometric deformation compensates for this limitation, helping the network learn features that are actually appropriate for microstructure extraction.

Table 5.

Results of austenite and Al-La alloy with basic augmentation methods (1). ↓ indicates that lower values are better and ↑ that higher values are better. Values in parentheses are the differences between the current results and those of the original model.

Table 6.

Results of austenite and Al-La alloy with basic augmentation methods (2). ↓ indicates that lower values are better and ↑ that higher values are better. Values in parentheses are the differences between the current results and those of the original model.

Brightness-based augmentation can also improve segmentation performance. Because microscopic imaging requires external illumination and adjustment of the light source, we postulate that brightness-based augmentation simulates the effects of this procedure on imaging. CLAHE is preferable to global histogram equalization because, in microscopic imaging, the center of the image is brighter than its periphery; by equalizing local regions separately while limiting contrast amplification, CLAHE overcomes this limitation of global histogram equalization. It is particularly suitable for materials with austenite-like grain structures. By contrast, InvertImg, although also a brightness-based augmentation method, does not correspond to any change likely to occur in real Al-La alloy images and therefore yields inferior segmentation performance.
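The contrast-limiting step that distinguishes CLAHE from global equalization can be sketched as follows: each tile's histogram is clipped at a limit and the excess count is redistributed over all bins before the tile's own equalization lookup table is built. This is a simplified sketch, assuming 8-bit list-of-rows images and hypothetical names; unlike full CLAHE, it does not interpolate between neighbouring tiles (so tile seams can appear), and it redistributes the excess only once.

```python
from typing import List

Image = List[List[int]]


def clip_histogram(hist: List[int], clip_limit: int) -> List[int]:
    """Cap every bin at clip_limit and spread the excess evenly over all bins."""
    excess = sum(max(0, c - clip_limit) for c in hist)
    share, rem = divmod(excess, len(hist))
    return [min(c, clip_limit) + share + (1 if i < rem else 0)
            for i, c in enumerate(hist)]


def tiled_clipped_equalize(img: Image, tile: int, clip_limit: int,
                           levels: int = 256) -> Image:
    """Equalize each tile x tile block with its own contrast-limited histogram."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for ty in range(0, h, tile):
        for tx in range(0, w, tile):
            ys, xs = range(ty, min(ty + tile, h)), range(tx, min(tx + tile, w))
            hist = [0] * levels
            for y in ys:
                for x in xs:
                    hist[img[y][x]] += 1
            hist = clip_histogram(hist, clip_limit)
            total, acc, lut = sum(hist), 0, []
            for c in hist:  # CDF-based lookup table for this tile only
                acc += c
                lut.append((acc * (levels - 1) + total // 2) // total)
            for y in ys:
                for x in xs:
                    out[y][x] = lut[img[y][x]]
    return out
```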

Noise injection introduces various types of noise into microscopic images to simulate interference from the imaging system. The added noise falls roughly into two categories: general noise and physically simulated noise. The former includes Gaussian noise, glass noise, and noise introduced by image compression; the latter simulates real-world phenomena such as rain, fog, solar flares, and shadows. Physically simulated noise does not reflect changes that could occur in real microscopic imaging and impairs rather than improves performance. It is more detrimental to segmentation of both materials than general noise and greatly reduces the inference capability of the deep learning model on both data sets.

Combined augmentation techniques, such as color jitter (random changes in brightness, contrast, saturation, and hue) and image flipping (both horizontal and vertical), generally outperform single augmentation methods. However, when combining methods, the contribution of each component to the improvement should be taken into account. Automated data augmentation methods based on AutoAugment can greatly reduce the time spent on manual search, but further optimization is still needed to shorten the automated search itself.
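For segmentation, a combined pipeline must keep the image and its ground truth aligned: geometric operations such as flips must be applied to both, whereas photometric operations such as color jitter must be applied to the image only. The sketch below illustrates this in Python/NumPy under the assumption of single-channel (grayscale) micrographs, so saturation and hue jitter are omitted; the function names and the ±20% jitter ranges are hypothetical, not the configuration used in our experiments.

```python
import numpy as np


def random_flip(img, mask, rng):
    """Flip image and mask together so that the labels stay aligned."""
    if rng.random() < 0.5:  # horizontal (left-right) flip
        img, mask = img[:, ::-1], mask[:, ::-1]
    if rng.random() < 0.5:  # vertical (up-down) flip
        img, mask = img[::-1, :], mask[::-1, :]
    return np.ascontiguousarray(img), np.ascontiguousarray(mask)


def jitter_brightness_contrast(img, rng, brightness=0.2, contrast=0.2):
    """Grayscale color jitter: scale contrast about the mean, then shift brightness."""
    x = img.astype(np.float64)
    c = rng.uniform(1 - contrast, 1 + contrast)       # contrast factor
    b = rng.uniform(-brightness, brightness) * 255     # brightness offset in grey levels
    mean = x.mean()
    x = (x - mean) * c + mean + b
    return np.clip(np.round(x), 0, 255).astype(np.uint8)


def combined_augment(img, mask, rng):
    """Hypothetical combined pipeline: flips (image + mask), then jitter (image only)."""
    img, mask = random_flip(img, mask, rng)      # geometric: applied to both
    img = jitter_brightness_contrast(img, rng)   # photometric: image only
    return img, mask
```

Libraries such as albumentations apply the same principle when an image and its mask are passed together to a composed transform.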

4. Conclusions

The low generalization ability of deep learning models caused by insufficient data is a challenge that can be alleviated by image augmentation. We divide image augmentation methods into two categories according to how they are computed: basic image augmentation methods and deep learning-based image augmentation methods. The more than 60 basic methods are further grouped into eleven categories, including pixel transformation, region cropping, dropout, geometric deformation, and domain adaptation. In addition, we experimentally evaluated the performance of each basic method with several evaluation metrics on two microscopic image segmentation tasks: metallographic materials and polycrystalline materials. We also discussed the benefits and applicability of the various image augmentation methods for materials intelligence research and development. The evaluation experiments and results presented in this work can serve as a guide for developing intelligent models for material microscopic image segmentation.

We found that not all augmentation methods improve microscopic image segmentation performance. It is therefore critical to choose augmentation methods that match the image variations likely to occur in real-world imaging. Future research should further reduce the search time of combined optimization of the basic augmentation methods. For deep learning-based data augmentation methods, the primary challenges are keeping the generated images consistent with their ground truth and reducing the amount of data required to train the image generation model.

Author Contributions

J.M. conceived the idea and designed the experiment; C.H. participated in the paper writing and conducted experiments; P.Z. participated in the experimental design and discussion; F.J. and X.W. were involved in the analyses of data; H.H. organized the research project. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Key Research and Development Program of China under Grant 2021YFB3202404-02, the National Foreign Expert Program under Grant G2022105005L, the Scientific and Technological Innovation Foundation of Shunde Graduate School, USTB under Grant BK22BF010, the Fundamental Research Funds for the Central Universities of China under Grant 00007467, and the Interdisciplinary Research Project for Young Teachers of USTB (Fundamental Research Funds for the Central Universities) under Grant FRF-IDRY-21-022.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets and codes are available from the corresponding author upon reasonable request.

Acknowledgments

The computing work is supported by USTB MatCom of Beijing Advanced Innovation Center for Materials Genome Engineering.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dursun, T.; Soutis, C. Recent developments in advanced aircraft aluminium alloys. Mater. Des. 2014, 56, 862–871.

- Hu, J.; Shi, Y.; Sauvage, X.; Sha, G.; Lu, K. Grain boundary stability governs hardening and softening in extremely fine nanograined metals. Science 2017, 355, 1292–1296.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Sonka, M.; Hlavac, V.; Boyle, R. Image Processing, Analysis, and Machine Vision; Cengage Learning: Boston, MA, USA, 2014.

- Ma, B.; Zhu, Y.; Yin, X.; Ban, X.; Huang, H.; Mukeshimana, M. SESF-Fuse: An unsupervised deep model for multi-focus image fusion. Neural Comput. Appl. 2021, 33, 5793–5804.

- Ma, B.; Yin, X.; Wu, D.; Shen, H.; Ban, X.; Wang, Y. End-to-end learning for simultaneously generating decision map and multi-focus image fusion result. Neurocomputing 2022, 470, 204–216.

- Ma, B.; Ban, X.; Huang, H.; Chen, Y.; Liu, W.; Zhi, Y. Deep learning-based image segmentation for Al-La alloy microscopic images. Symmetry 2018, 10, 107.

- Ma, B.; Ma, B.; Gao, M.; Wang, Z.; Ban, X.; Huang, H.; Wu, W. Deep learning-based automatic inpainting for material microscopic images. J. Microsc. 2021, 281, 177–189.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Liu, W.; Chen, J.; Liu, C.; Ban, X.; Ma, B.; Wang, H.; Xue, W.; Guo, Y. Boundary learning by using weighted propagation in convolution network. J. Comput. Sci. 2022, 62, 101709.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Boyuan, M. Research and Application of Few-Shot Image Segmentation Method for Complex 3D Material Microstructure. Ph.D. Thesis, University of Science and Technology Beijing, Beijing, China, 2021.

- Ma, B.; Wei, X.; Liu, C.; Ban, X.; Huang, H.; Wang, H.; Xue, W.; Wu, S.; Gao, M.; Shen, Q.; et al. Data augmentation in microscopic images for material data mining. NPJ Comput. Mater. 2020, 6, 125.

- Pan, H.; Guo, Y.; Deng, Q.; Yang, H.; Chen, J.; Chen, Y. Improving fine-tuning of self-supervised models with Contrastive Initialization. Neural Netw. 2023, 159, 198–207.

- Molchanov, D.; Ashukha, A.; Vetrov, D. Variational dropout sparsifies deep neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Melbourne, Australia, 19–25 August 2017; pp. 2498–2507.

- Bjorck, N.; Gomes, C.P.; Selman, B.; Weinberger, K.Q. Understanding batch normalization. In Proceedings of the Advances in Neural Information Processing Systems Conference, Montreal, QC, Canada, 3–8 December 2018; Volume 31.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

- Ma, D.; Tang, P.; Zhao, L.; Zhang, Z. Review of data augmentation for image in deep learning. J. Image Graph. 2021, 26, 487–502.

- Xu, M.; Yoon, S.; Fuentes, A.; Park, D.S. A comprehensive survey of image augmentation techniques for deep learning. Pattern Recognit. 2023, 137, 109347.

- Chlap, P.; Min, H.; Vandenberg, N.; Dowling, J.; Holloway, L.; Haworth, A. A review of medical image data augmentation techniques for deep learning applications. J. Med. Imaging Radiat. Oncol. 2021, 65, 545–563.

- Haiqiong, W.; Jiancheng, L. An adaptive threshold image enhancement algorithm based on histogram equalization. China Integrated Circuit 2022, 31, 38–42, 71.

- Zuiderveld, K. Contrast limited adaptive histogram equalization. Graph. Gems 1994, 6, 474–485.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 13–18 July 2020; pp. 1597–1607.

- Sural, S.; Qian, G.; Pramanik, S. Segmentation and histogram generation using the HSV color space for image retrieval. In Proceedings of the IEEE International Conference on Image Processing, Rochester, NY, USA, 22–25 September 2002; Volume 2, pp. 22–25.

- Taylor, L.; Nitschke, G. Improving deep learning with generic data augmentation. In Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Bengaluru, India, 18–21 November 2018; pp. 1542–1547.

- Moreno-Barea, F.J.; Strazzera, F.; Jerez, J.M.; Urda, D.; Franco, L. Forward noise adjustment scheme for data augmentation. In Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Bengaluru, India, 18–21 November 2018; pp. 728–734.

- Shijie, J.; Ping, W.; Peiyi, J.; Siping, H. Research on data augmentation for image classification based on convolution neural networks. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 4165–4170.

- Wang, X.; Wang, K.; Lian, S. A survey on face data augmentation for the training of deep neural networks. Neural Comput. Appl. 2020, 32, 15503–15531.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- DeVries, T.; Taylor, G.W. Improved regularization of convolutional neural networks with cutout. arXiv 2017, arXiv:1708.04552.

- Elgendi, M.; Nasir, M.U.; Tang, Q.; Smith, D.; Grenier, J.P.; Batte, C.; Spieler, B.; Leslie, W.D.; Menon, C.; Fletcher, R.R.; et al. The effectiveness of image augmentation in deep learning networks for detecting COVID-19: A geometric transformation perspective. Front. Med. 2021, 8, 629134.

- Farahani, A.; Voghoei, S.; Rasheed, K.; Arabnia, H.R. A brief review of domain adaptation. In Advances in Data Science and Information Engineering; Springer: Berlin/Heidelberg, Germany, 2021; pp. 877–894.

- Yang, Y.; Soatto, S. FDA: Fourier domain adaptation for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4085–4095.

- Yaras, C.; Huang, B.; Bradbury, K.; Malof, J.M. Randomized Histogram Matching: A Simple Augmentation for Unsupervised Domain Adaptation in Overhead Imagery. arXiv 2021, arXiv:2104.14032.

- Buslaev, A.; Iglovikov, V.I.; Khvedchenya, E.; Parinov, A.; Druzhinin, M.; Kalinin, A.A. Albumentations: Fast and flexible image augmentations. Information 2020, 11, 125.

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

- Raju, V.G.; Lakshmi, K.P.; Jain, V.M.; Kalidindi, A.; Padma, V. Study the influence of normalization/transformation process on the accuracy of supervised classification. In Proceedings of the 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 20–22 August 2020; pp. 729–735.

- Shaheen, H.; Agarwal, S.; Ranjan, P. Ensemble Maximum Likelihood Estimation Based Logistic MinMaxScaler Binary PSO for Feature Selection. In Soft Computing: Theories and Applications; Springer: Berlin/Heidelberg, Germany, 2022; pp. 705–717.

- Cubuk, E.; Zoph, B.; Mane, D.; Vasudevan, V.; Le, Q. AutoAugment: Learning augmentation policies from data. arXiv 2019, arXiv:1805.09501.

- Recht, B.; Roelofs, R.; Schmidt, L.; Shankar, V. Do CIFAR-10 classifiers generalize to CIFAR-10? arXiv 2018, arXiv:1806.00451.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Lim, S.; Kim, I.; Kim, T.; Kim, C.; Kim, S. Fast AutoAugment. In Advances in Neural Information Processing Systems; Springer: Berlin/Heidelberg, Germany, 2019; Volume 32.

- Hataya, R.; Zdenek, J.; Yoshizoe, K.; Nakayama, H. Faster AutoAugment: Learning augmentation strategies using backpropagation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 1–16.

- Cubuk, E.D.; Zoph, B.; Shlens, J.; Le, Q.V. RandAugment: Practical automated data augmentation with a reduced search space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 702–703.

- Ho, D.; Liang, E.; Chen, X.; Stoica, I.; Abbeel, P. Population based augmentation: Efficient learning of augmentation policy schedules. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 2731–2741.

- Naghizadeh, A.; Abavisani, M.; Metaxas, D.N. Greedy AutoAugment. Pattern Recognit. Lett. 2020, 138, 624–630.

- LingChen, T.C.; Khonsari, A.; Lashkari, A.; Nazari, M.R.; Sambee, J.S.; Nascimento, M.A. UniformAugment: A search-free probabilistic data augmentation approach. arXiv 2020, arXiv:2003.14348.

- Gong, C.; Wang, D.; Li, M.; Chandra, V.; Liu, Q. KeepAugment: A simple information-preserving data augmentation approach. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1055–1064.

- Zheng, Y.; Zhang, Z.; Yan, S.; Zhang, M. Deep AutoAugment. arXiv 2022, arXiv:2203.06172.

- Yang, S.; Xiao, W.; Zhang, M.; Guo, S.; Zhao, J.; Shen, F. Image Data Augmentation for Deep Learning: A Survey. arXiv 2022, arXiv:2204.08610.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

- Olaniyi, E.; Chen, D.; Lu, Y.; Huang, Y. Generative adversarial networks for image augmentation in agriculture: A systematic review. arXiv 2022, arXiv:2204.04707.

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Liu, S.; Zhang, J.; Chen, Y.; Liu, Y.; Qin, Z.; Wan, T. Pixel level data augmentation for semantic image segmentation using generative adversarial networks. In Proceedings of the ICASSP 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 1902–1906.

- Wang, T.C.; Liu, M.Y.; Zhu, J.Y.; Tao, A.; Kautz, J.; Catanzaro, B. High-resolution image synthesis and semantic manipulation with conditional GANs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8798–8807.

- Pandey, S.; Singh, P.R.; Tian, J. An image augmentation approach using two-stage generative adversarial network for nuclei image segmentation. Biomed. Signal Process. Control. 2020, 57, 101782.

- Li, R.; Bastiani, M.; Auer, D.; Wagner, C.; Chen, X. Image Augmentation Using a Task Guided Generative Adversarial Network for Age Estimation on Brain MRI. In Proceedings of the Annual Conference on Medical Image Understanding and Analysis, Cambridge, UK, 12–14 July 2021; pp. 350–360.

- He, X.; Wandt, B.; Rhodin, H. GANSeg: Learning to Segment by Unsupervised Hierarchical Image Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1225–1235.

- Zhao, A.; Balakrishnan, G.; Durand, F.; Guttag, J.V.; Dalca, A.V. Data augmentation using learned transformations for one-shot medical image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8543–8553.

- Shaban, A.; Bansal, S.; Liu, Z.; Essa, I.; Boots, B. One-shot learning for semantic segmentation. arXiv 2017, arXiv:1709.03410.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Meilă, M. Comparing clusterings—An information based distance. J. Multivar. Anal. 2007, 98, 873–895.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).