1. Introduction

Facility management (FM) employees must have high technical skills and detailed knowledge of the technology of all systems necessary for the efficient and safe operation of buildings. Constant supervision of the efficiency of fire protection, ventilation, energy, and many other systems, as well as monitoring, alterations, and modernization of installations, is crucial in ensuring the safety of all users. Therefore, improving and verifying the staff’s current skills are essential. For this purpose, it is necessary to develop a system of training and assessment of skills acquired by the staff. High safety conditions, low costs, and high efficiency should characterize applied training systems. Traditional facility management training is often not easy to carry out due to the following limitations [1]:

Time consumption related to the need to prepare the place of training and accommodation;

High price due to the costs of preparing materials and coaching staff;

Low attractiveness and non-intuitiveness due to the lack of visual hints available during the training;

Inability to test some events, e.g., those related to human safety.

Therefore, organizing staff training in virtual reality (VR) seems a promising alternative. There are many areas in which employees are trained using virtual reality: first responder training [2,3,4], medical training [5,6,7], military training [8,9], and transportation [10,11]. VR is also used for workforce training in many other industries and for various purposes. A VR simulator of work at high altitudes has been used to train people [12]; it also makes it possible to determine how future workers manage their stress and to detect symptoms of acrophobia. High-risk and high-stress jobs are often the focus of VR training, such as maintenance focused on the reliability of power grids [13] or the operation of machinery on construction sites [14]. VR training can reduce accidents and fatalities in high-risk work [15,16]. It is also important that VR training allows employees to train in dangerous situations without exposing themselves to real danger. Nevertheless, the main reason for using the VR environment compared to traditional training is the low cost and repeatability while maintaining resistance to environmental errors without threat to personal safety [17]. Properly conducted training of employees in virtual reality allows for the reconstruction of identical test conditions for all participants, thanks to which high comparability of training results is achieved. The expert group method can also use the virtual environment for ergonomic workplace design [18].

Training in virtual reality allows for a deeper analysis of the training results. In traditional training, an in-depth analysis of each participant, including a detailed analysis of movement, the subtleties of the technique used, and the attention paid to individual elements of the equipment, is impossible. Virtual training, however, makes it possible to analyze carefully each participant’s movement, how they perform a task, and even their attention to critical elements. The correct design of the VR environment must consider many aspects. According to the cognitive theory of multimedia learning (CTML), we should avoid rich and highly stimulating multimedia graphics that overload cognitive processes and, therefore, can hinder the learning process [19]. The training participant should pay attention to the essential part of the learning material, the information in the VR environment should be consistent, and all related pieces of information should be placed close together [20]. Learners should be given time to mentally process multimedia instruction, especially when the content is complex [20]. To perform an in-depth analysis, we should use eye tracking during training, which provides additional essential data [21]. VR training with eye tracking makes it possible to understand what the user focuses on during the decision-making process and when this takes place. Moreover, accurate eye movement analysis even allows the identification of higher nervous functions, such as the emotions of fear, regret, and disappointment [22,23,24]. Therefore, it is essential to build the VR environment so that its elements do not draw attention to an object in a way that would affect the decision-making process and suggest a single answer [25]. The VR environment with eye tracking should also provide the information necessary for analysis. Creating a system for interacting with VR objects is particularly important [26]. When designing interaction points for eye tracking, it is crucial to know the different types of eye movement [27]. We can divide eye-tracking indices into two categories [28]: types of movements (fixation, saccade, and mixed) and scale of measurement (temporal, spatial, and count). Temporal indices focus on the time of activity, e.g., the time spent gazing at a particular object; they therefore describe when cognitive processes take place and for how long. Spatial indices focus on the spatial dimension, e.g., fixation position and sequence; they therefore describe where cognitive processes take place and how. The research in this article used easy-to-identify elements and quantified distinct types of eye movements with information about scene content related to spatial and temporal fixations on an object.
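The three measurement scales described above (temporal, spatial, and count) can be illustrated with a minimal sketch. It assumes that the eye tracker's output has already been reduced to a list of fixations, each labeled with the object it landed on; the record layout, the object names, and the timings below are hypothetical and are not taken from the study.

```python
from collections import defaultdict

# Hypothetical fixation log: (object name, start time in s, end time in s).
# The object names and timings are invented for illustration only.
FIXATIONS = [
    ("control_panel", 0.0, 0.8),
    ("black_button", 0.9, 1.5),
    ("tablet", 1.6, 2.0),
    ("black_button", 2.1, 2.4),
]


def dwell_time(fixations):
    """Temporal index: total fixation time per object, in seconds."""
    totals = defaultdict(float)
    for obj, start, end in fixations:
        totals[obj] += end - start
    return dict(totals)


def fixation_count(fixations):
    """Count index: number of separate fixations per object."""
    counts = defaultdict(int)
    for obj, _start, _end in fixations:
        counts[obj] += 1
    return dict(counts)


def fixation_sequence(fixations):
    """Spatial index: order in which objects were fixated."""
    ordered = sorted(fixations, key=lambda f: f[1])
    return [obj for obj, _start, _end in ordered]


if __name__ == "__main__":
    print(dwell_time(FIXATIONS))
    print(fixation_count(FIXATIONS))
    print(fixation_sequence(FIXATIONS))
```

Keeping the three indices as separate functions mirrors the categorization in the text: the same fixation log answers "for how long", "how often", and "in what order" without any change to the underlying data.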

Today’s technology makes it possible to create a training environment in virtual reality that accurately reconstructs the natural conditions in the workplace and enables the acquisition of necessary skills. An additional advantage of VR training is that the instructors are less intrusive during the training. A person whose every movement is directly and scrupulously observed by many observers may feel uncomfortable and thus perform the evaluated activities much worse than when given a certain amount of privacy. However, we must remember that participants’ behavior in VR training may differ from their actual behavior. Virtual reality may cause them not to take training seriously and to treat it like a computer game. In addition, the representation of reality is imperfect. Poor 3D vision may even cause undesired sickness [29]. Another factor is the rapid change of image, which can also cause sickness that often manifests through sweating, nausea, and dizziness [30]. Care should therefore be taken to ensure that the virtual training is not just an audiovisual representation of the training scenario, as participants will then treat the training as a computer game. An alternative to high-immersive VR training using a headset is low-immersive VR training, often called desktop VR. Low-immersive VR training is a cheaper alternative that only needs to display the virtual world on a computer monitor [31]. However, it does not allow the measurement of the wide range of parameters available in high-immersive training. Nevertheless, overall, VR learning activities and experiences lead to better learning outcomes and motivate learners to improve [20].

This paper proposes a new method of training and evaluation in virtual reality for facility management employees using eye tracking. The article describes the complete procedure, including training in VR operation, which is almost always skipped. The presented process is not only intended to demonstrate immersion but focuses primarily on teaching FM employees how to use devices. The proposed procedure allows the results of VR training in different modules to be unified into one value. This division is fundamental if we want to choose a set of features or parameters that make it easier to determine the learning speed of employees in the FM industry based on a simple VR scenario.

2. Creation of a Virtual Reality Scenario with Eye-Tracking Capabilities

To conduct training in virtual reality, three training and evaluation VR modules were created based on scenarios of learning how to operate selected equipment. In addition, one VR training module was built so that the employee could learn how to use the VR environment before the training. The process of creating a VR module was divided into three stages [1]: (1) training task analysis, (2) training scenario sketching, and (3) implementation. Each scenario consists of training an employee to perform a given FM task and evaluating how the employee performs FM tasks after the training. During the training, the employee receives step-by-step instructions for completing the task, which is passed once it has been completed correctly. During the evaluation, the same tasks are performed without guidance, so the employee can make mistakes. Therefore, each VR module can be defined as a training and evaluation module.

The training task analysis was aimed at determining the information needed to perform a specific training task and summarizing all factors related to the training system during the design process. The selection of scenarios and work performed was determined by the FM specialist based on real-life training. After the set of FM tasks was determined, each task was analyzed using hierarchical task analysis (HTA). Tasks are divided into subtasks, expressing the relationship between the parent task and its subtasks using a numbering scheme. This allowed for a clear diagram of the task’s high- and low-level steps.
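The numbering scheme used in hierarchical task analysis can be sketched as a small recursive function over a nested task description. This is only an illustration: the dictionary layout and the function name `number_tasks` are assumptions, and the example task (checking the operating mode of a control panel) is used purely as sample input.

```python
def number_tasks(task, prefix="1"):
    """Flatten a nested task tree into (number, name) rows, HTA style.

    A subtask's number extends its parent's number, e.g. 1 -> 1.1, 1.2.
    """
    rows = [(prefix, task["name"])]
    for index, subtask in enumerate(task.get("subtasks", []), start=1):
        rows.extend(number_tasks(subtask, f"{prefix}.{index}"))
    return rows


# Hypothetical task tree; the structure is an assumption for illustration.
HTA = {
    "name": "Check the operating mode of the control panel",
    "subtasks": [
        {"name": "Go to the control panel"},
        {"name": "Find the black button at the bottom of the panel"},
        {"name": "Check or set the button to auto mode"},
        {"name": "Mark the task as complete"},
    ],
}

if __name__ == "__main__":
    for number, name in number_tasks(HTA):
        print(number, name)
```

Because the function recurses on `subtasks`, deeper levels (1.2.1, 1.2.2, and so on) are numbered in the same way without further changes.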

The scenario sketching, also called scenario design, required a set of detailed descriptions of how people accomplish a task. Each interaction point determined a set of parameters describing the correctness of the performed activities. Therefore, the critical part of the VR module was to select at least eight categories of data to be collected during the VR scenario with eye tracking. These data categories were divided into two main groups:

Time length of activities, visual fixation, staying in one location, and total time;

Number of mistakes, successes, places of eyesight fixation, and sequence of actions.

The total number of parameters relates to the number and specificity of selected activities performed in subsequent FM tasks. The data are collected while performing FM activities during training and evaluation. An example of FM tasks would be checking the operating mode of the control panel, which comprises the following:

Going to the control panel.

Finding a black button at the bottom of the control panel.

Checking or setting the button to “auto” mode.

Marking the task as complete.

Therefore, the critical parameters for the implementation of these tasks are not only the order and time of execution but also the operator’s concentration on the performed tasks, assessed using eye-tracking analysis. In the example above, if the person did not check whether the “auto” mode was set but only marked the answer, regardless of the correctness of the statement, we can detect this through eye movement analysis. Therefore, the eye movement analysis provides a much more comprehensive range of information about the participant’s focus on critical elements of devices and the correctness of their actions. The choice of behavior is reflected in gaze location. People tend to look longer at an option they choose than at others, indicating that spending more time on the irrelevant choice results in worse learning [24]. When creating an FM activity, we should focus on two aspects: the knowledge the user must acquire during the training and the analysis of performed activities with eye-tracking capabilities.
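The control-panel example suggests a simple gaze-based check: an answer counts as verified only if the participant actually looked at the relevant element before marking it. The following sketch shows one possible form of such a check. The fixation record layout, the object names, and the 0.2 s minimum dwell threshold are assumptions made for illustration and are not values reported in the study.

```python
def checked_before_answer(fixations, answer_time_s, target, min_dwell_s=0.2):
    """Return True if `target` was fixated long enough before the answer.

    fixations     -- iterable of (object name, start s, end s) tuples
    answer_time_s -- time at which the answer was marked on the tablet
    min_dwell_s   -- assumed minimum total dwell time (hypothetical value)
    """
    dwell = sum(
        end - start
        for obj, start, end in fixations
        if obj == target and end <= answer_time_s
    )
    return dwell >= min_dwell_s


if __name__ == "__main__":
    # Participant A looks at the mode button, then answers at t = 2.0 s.
    careful = [("tablet", 0.0, 1.0), ("mode_button", 1.2, 1.7)]
    # Participant B answers without ever looking at the button.
    careless = [("tablet", 0.0, 1.8)]
    print(checked_before_answer(careful, 2.0, "mode_button"))
    print(checked_before_answer(careless, 2.0, "mode_button"))
```

A check of this kind separates a correct answer that was verified visually from one that was merely marked, which order and time of execution alone cannot distinguish.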

The settings of each VR module must consider a significant number of eye-tracking aspects, including an ample space allowing the participant to move around and highlight parts of tools. Such solutions allow an immersive virtual environment to enhance training effectiveness [

16]. High interactivity will also allow users to control virtual reality better, which with high-quality VR should impact cognitive processing and increase the involvement of participants [

32]. Therefore, the critical elements of the VR module were active points in the VR module, which are points of interaction between the user and the VR environment. Due to the eye-tracking analysis, the number of active points must be greater than usual. The VR environment used the Cognitive Affective Model of Immersive Learning (CAMIL) characteristics, emphasizing the central role of VR interactivity [

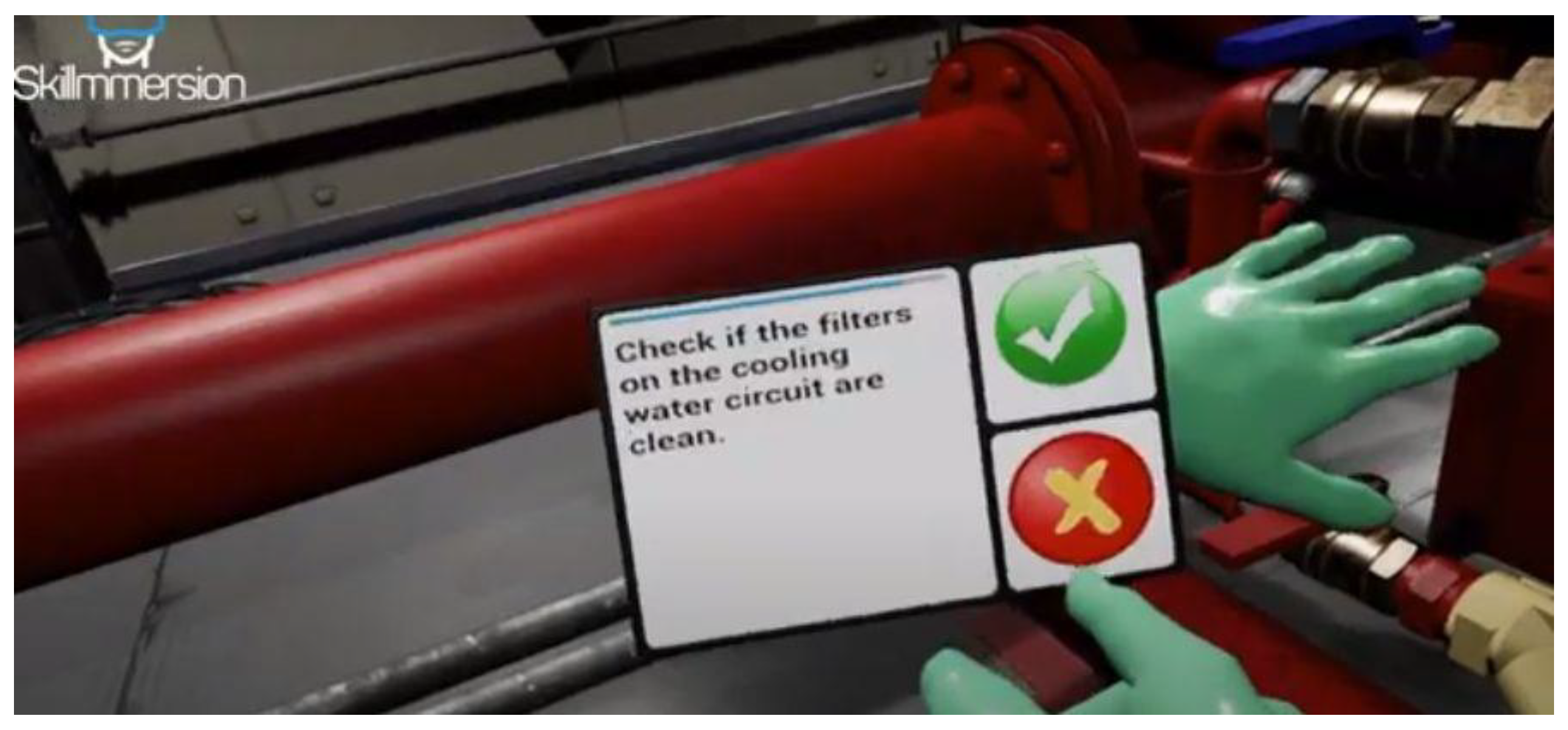

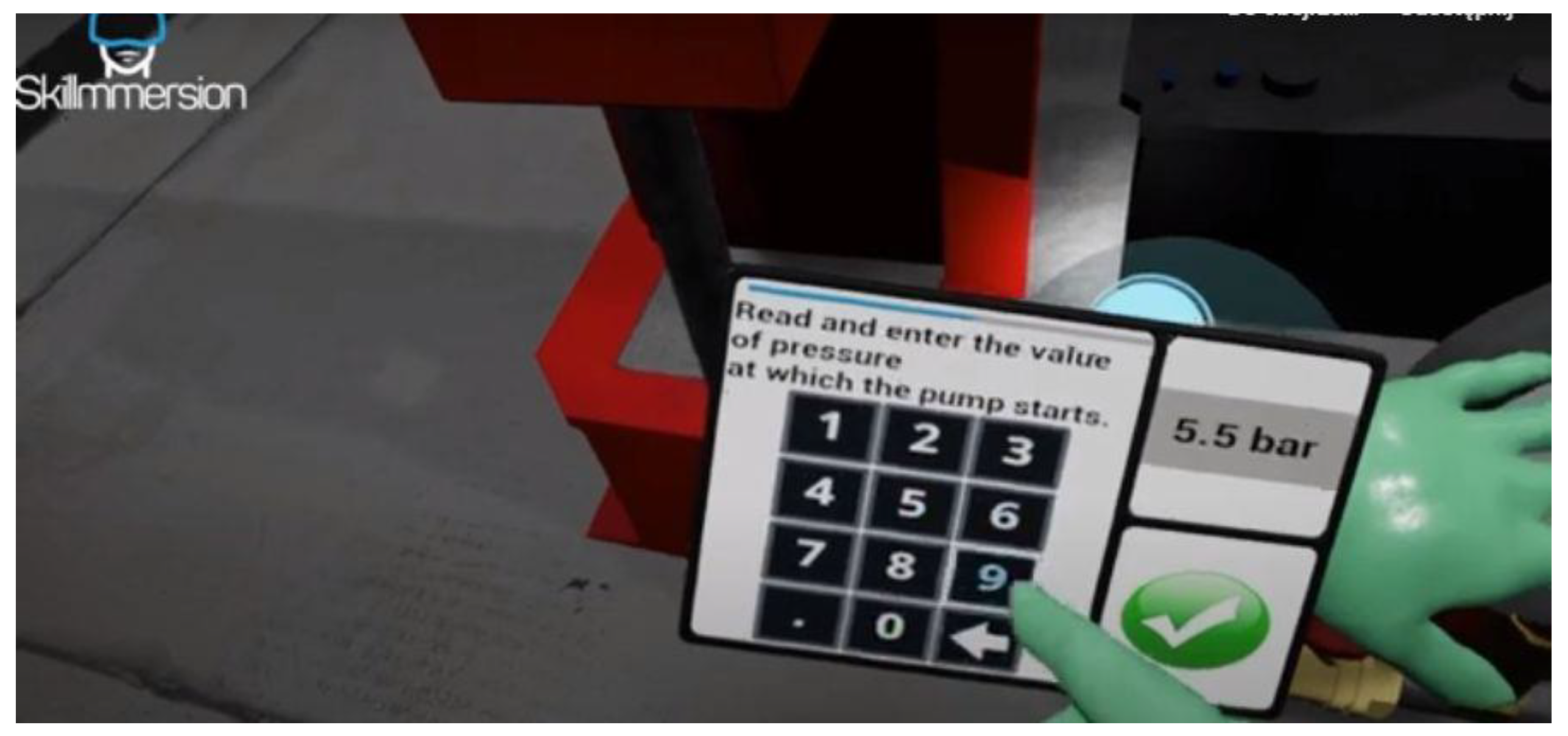

33]. The interaction points must be present in each element of the VR module: environment, tools (e.g., hammer and pyrometer), a screen displaying information, interactive objects (e.g., buttons and switches), static objects (e.g., platforms), texture-changing objects (e.g., lamps and diodes), virtual hands, and others. With so many interaction points, an important aspect is the proper presentation of tasks and assistance in their performance. Therefore, an indispensable tool supporting the training process was a virtual tablet with the following functions:

Informing about the completion of a given task or activity;

Informing about next steps;

Interface for selecting answers.

The developed VR modules have a total of 102 defined active points.

Unreal Engine 4 was used in implementation to create a high-quality visual 3D model of the virtual environment. Eye tracking requires correct highlighting of objects and high reproduction accuracy in VR training.

3. Virtual Reality Training and Evaluation Scenarios

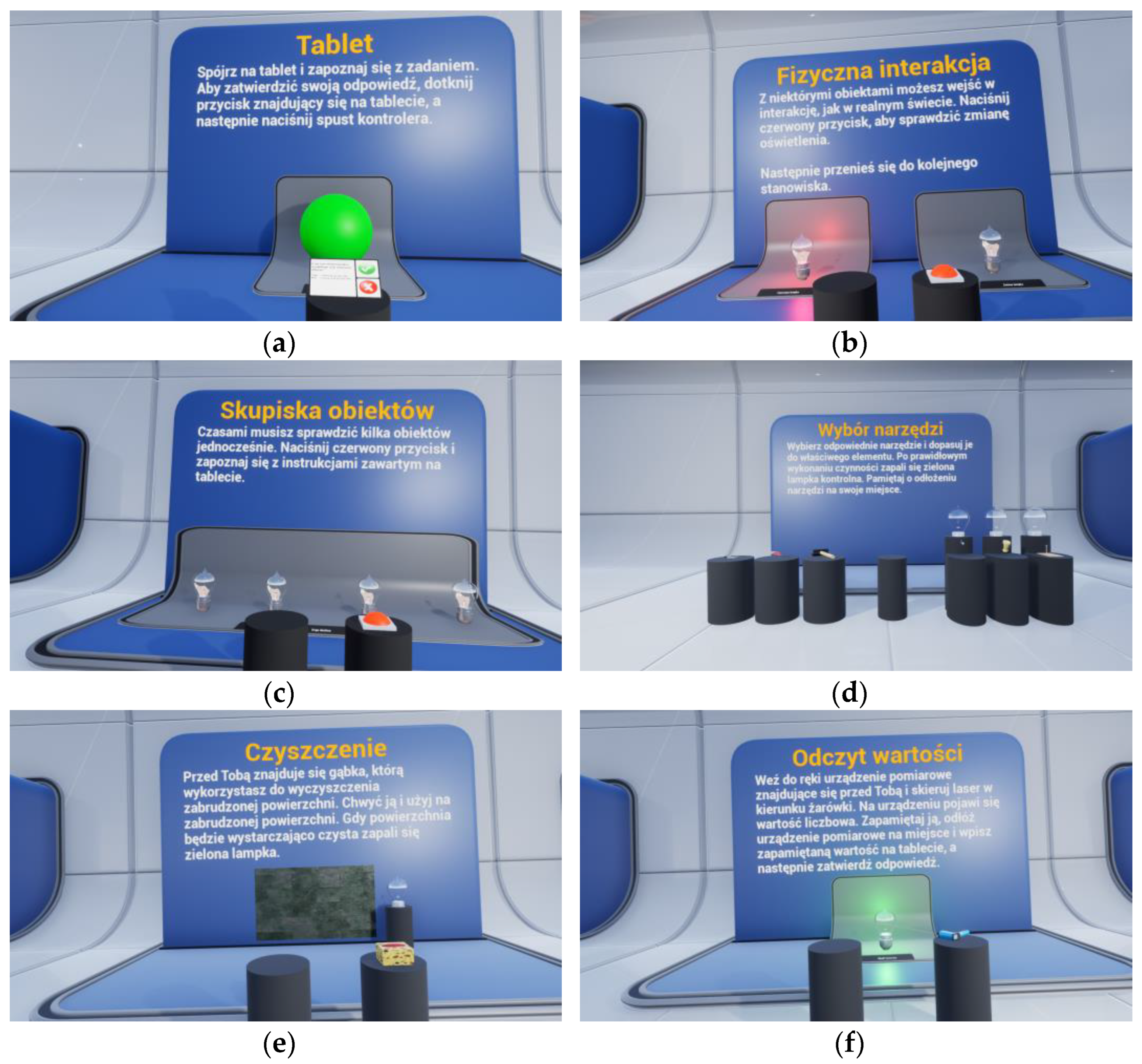

Virtual training of an employee in FM tasks must be preceded by initial training in the use of tools and moving around in the VR environment. Correct implementation of initial training allows for obtaining reliable results during the fundamental training. To carry out the initial training, a separate VR training module was created containing a set of workstations, enabling learning to perform specific activities in the VR environment (Figure 1):

Login station—the user enters their data.

Transport station—the user learns to move around in the VR.

Tablet station—the user learns to analyze the content of the task and mark the answers on the tablet.

Physical interaction station—the user learns to interact physically with virtual objects.

Object positioning station—the user learns to move several objects simultaneously.

Big objects station—the user learns to move several smaller objects on a large object.

Tool selection station—the user learns to select and use the appropriate tools.

Cleaning station—the user learns to use the tool on a large object.

Buttons station—the user learns to turn buttons on the object.

Distance station—the user learns to verify the object’s distance.

Handle interaction station—the user learns how to handle moving objects manually.

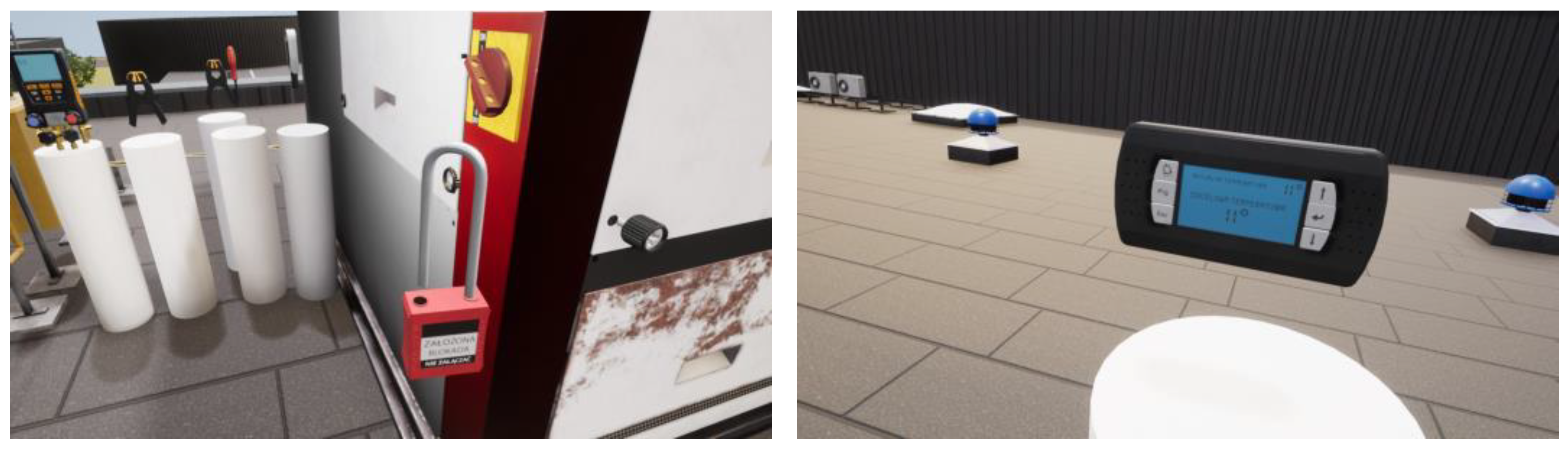

The advanced VR training and evaluation modules concerned the operation of the following equipment: fire pump station, HVAC roof station (heating, ventilation, and air conditioning), and air conditioning unit. The modeled activities represent typical tasks performed by FM employees. FM tasks are not related to each other, i.e., the result of one task does not affect the other, but also, in many tasks, the same object can be set differently. Examples of tasks performed in each module are as follows:

Fire pump station: check that the room heating is working correctly, verify the correctness of the pressure gauge indications, or check that the battery chargers show the correct values.

HVAC roof station: check that the electric system is working correctly, check the tightness of chimney ducts, and check the gas pressure.

Air conditioning unit: check the temperature-rising function of the indoor air conditioner, disinfect the air conditioner evaporator, and clean the air conditioner turbine.

Each module was designed as a virtual room of 80 × 30 m with a minimum of 50 activity points and 30 FM tasks. After completing the initial training in the use of tools and moving around in the VR environment, the training participant proceeded to undergo fundamental training on the fire pump station (Figure 2), HVAC roof station (Figure 3), and air conditioning unit (Figure 4).

Virtual glasses are one of the solutions for immersion in VR. The first solutions are assumed to have been proposed in 1968, and their development is still ongoing [29]. The VR training and evaluation modules used the most popular head-mounted displays (HMDs) [1]. Eye-tracking measurements were performed using HTC VIVE Pro Eye VR glasses, which are characterized by high-precision eye-tracking capabilities. The glasses display the image on two OLED displays with a total resolution of 2880 × 1600 pixels (615 PPI) and a refresh rate of 90 Hz. The research used the whole set, which complements the glasses with the controllers and base station.

5. Clustering for Fast and Slow Learners

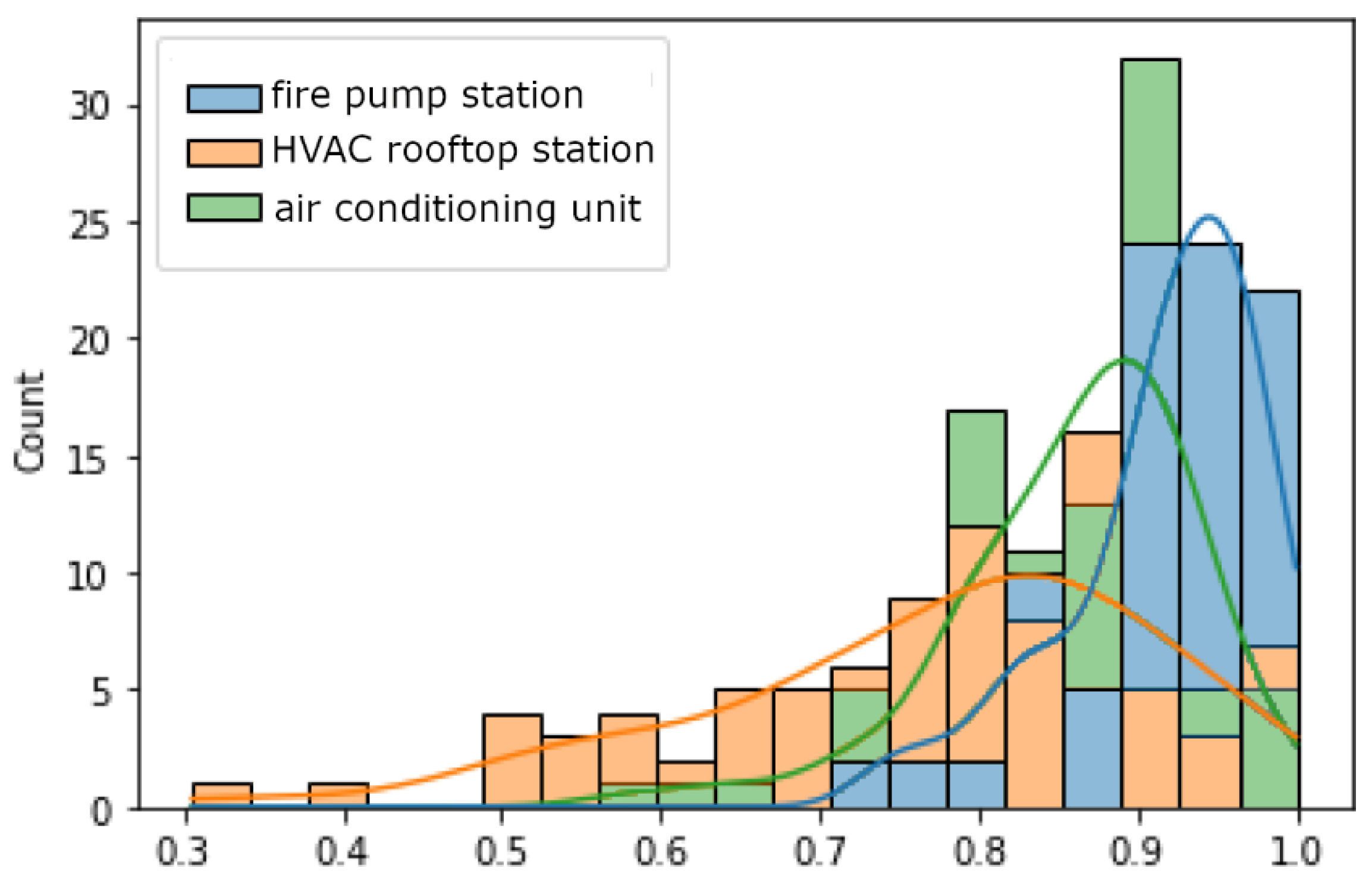

Subsequent research aimed to divide the training participants into fast and slow learners. The clustering algorithm was based on the results of each participant’s skills tests conducted after training in all VR modules. The scores for each test were normalized by dividing the test score by the value of the maximum possible score. Thus, the clustering algorithm was based on three values representing the relative (percentage) evaluation of the skills acquired after training in each VR module (Figure 6).
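The normalization step can be written down directly: each raw test score is divided by the maximum possible score for that module, giving one three-value feature vector per participant. The module keys and the score values in the sketch below are hypothetical; only the division by the maximum score comes from the text.

```python
# Order of the three VR modules in the feature vector (names are assumed).
MODULES = ("fire_pump", "hvac_roof", "air_conditioning")


def normalize_scores(raw_scores, max_scores):
    """Return the relative score (0..1) for each module, in MODULES order."""
    return tuple(raw_scores[m] / max_scores[m] for m in MODULES)


if __name__ == "__main__":
    # Hypothetical maximum scores and one participant's raw results.
    max_scores = {"fire_pump": 30, "hvac_roof": 30, "air_conditioning": 30}
    participant = {"fire_pump": 27, "hvac_roof": 15, "air_conditioning": 30}
    print(normalize_scores(participant, max_scores))
```

Dividing by the maximum puts the three modules on a common 0 to 1 scale, so that a module with more tasks does not dominate the Euclidean distances used later in clustering.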

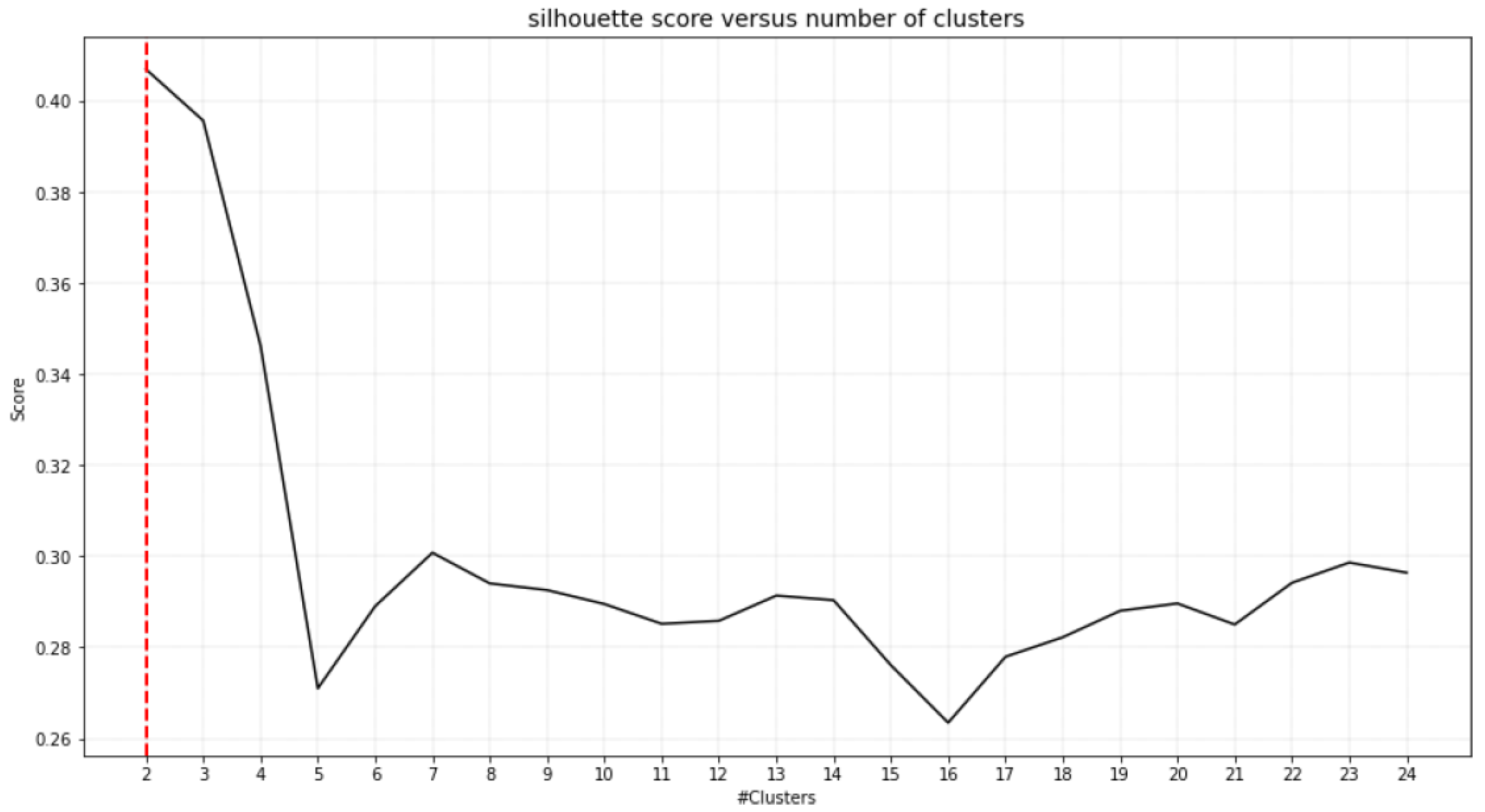

The Ward method was used to carry out the clustering, and the distances were determined based on the Euclidean metric. The number of clusters was chosen by silhouette coefficient analysis of the agglomerative clustering. Ward’s method starts with as many clusters as there are observations, each containing a single observation. It then successively merges the pair of clusters whose merger causes the smallest increase in within-cluster variance, until all the observations are included in one cluster. The results confirmed the correctness of grouping training participants into two classes (Figure 7). The highest silhouette value was obtained for the division into two clusters and a slightly lower one for the division into three. The analysis of the results related to passing the tests and the timely execution of the FM tasks allows dividing the participants into two groups: fast learners, i.e., those who may need less training before taking the skills test, and slow learners, who may need more training to be able to pass the skills test.
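The procedure described above (Ward merging with Euclidean distances, followed by selecting the number of clusters with the silhouette coefficient) can be sketched in plain Python. This is a naive illustration rather than the implementation used in the study: the six score vectors are invented, the function names are assumptions, and a real analysis would more likely rely on an established library.

```python
from math import dist


def centroid(points):
    """Mean of a list of equal-length tuples."""
    return tuple(sum(axis) / len(points) for axis in zip(*points))


def ward_cost(a, b):
    """Increase in within-cluster sum of squares if clusters a and b merge."""
    size = len(a) * len(b) / (len(a) + len(b))
    return size * dist(centroid(a), centroid(b)) ** 2


def ward_clusters(points, k):
    """Start with one cluster per point and merge until k clusters remain."""
    clusters = [[p] for p in points]
    while len(clusters) > k:
        pairs = ((i, j) for i in range(len(clusters))
                 for j in range(i + 1, len(clusters)))
        i, j = min(pairs, key=lambda ij: ward_cost(clusters[ij[0]], clusters[ij[1]]))
        merged = clusters[i] + clusters[j]
        del clusters[j]          # j > i, so index i stays valid
        clusters[i] = merged
    return clusters


def silhouette(clusters):
    """Mean silhouette coefficient; points in singleton clusters score 0."""
    scores = []
    for ci, cluster in enumerate(clusters):
        for p in cluster:
            if len(cluster) == 1:
                scores.append(0.0)
                continue
            a = sum(dist(p, q) for q in cluster) / (len(cluster) - 1)
            b = min(sum(dist(p, q) for q in other) / len(other)
                    for cj, other in enumerate(clusters) if cj != ci)
            scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


# Hypothetical normalized scores (fire pump, HVAC roof, air conditioning).
SCORES = [
    (0.95, 0.90, 0.60), (0.92, 0.85, 0.55), (0.97, 0.80, 0.70),
    (0.50, 0.40, 0.50), (0.45, 0.35, 0.40), (0.55, 0.50, 0.45),
]

if __name__ == "__main__":
    for k in (2, 3, 4):
        print(k, round(silhouette(ward_clusters(SCORES, k)), 3))
```

The pairwise search makes each merge step quadratic in the number of clusters, which is acceptable for a sketch with a handful of participants but would be replaced by a library routine for larger samples.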

The next possible division was within the group of slow learners, resulting in three classes (Figure 8). However, the new cluster of participants created between fast and slow learners is small and constitutes less than 10% of all participants. Additionally, the participants who were classified as fast learners passed at least two VR scenarios and thus achieved the basic learning goal. As a result, an additional division was not needed.

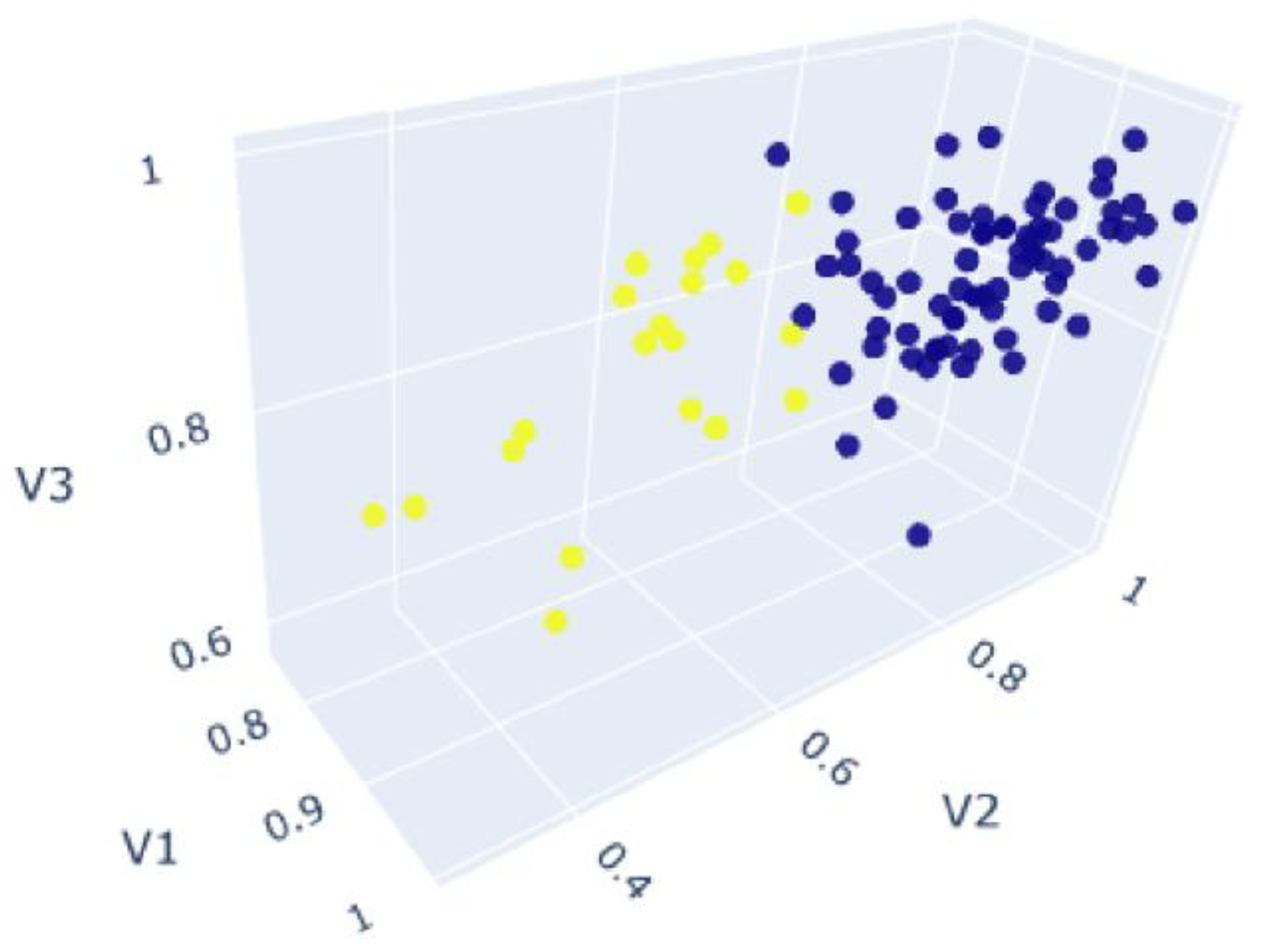

The aim was to determine clusters with more clearly separated average values and thus to group participants into two more distinct groups. The K-means method was used to divide the training participants into two clusters representing fast and slow learners (Figure 9). Initial cluster centers were determined using the k-means++ algorithm. As a result, a larger number of participants was assigned to the class of fast learners: their share increased from 57% for the Ward method to 74% for the K-means method. The criteria for classification into the group of fast learners became less strict, and the percentage of fast learners is closer to the percentage of people who passed each VR scenario (Table 2).
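For illustration, K-means with k-means++ seeding can be sketched in a few lines of standard-library Python. The sketch below is an assumption-laden example, not the study's code: the score vectors are invented, the function names and the fixed random seed are arbitrary, and the "fast" cluster is identified simply as the one whose center has the higher summed score.

```python
import random
from math import dist


def kmeans_pp_init(points, k, rng):
    """k-means++ seeding: each further center is drawn with probability
    proportional to its squared distance from the nearest chosen center."""
    centers = [rng.choice(points)]
    while len(centers) < k:
        weights = [min(dist(p, c) ** 2 for c in centers) for p in points]
        centers.append(rng.choices(points, weights=weights, k=1)[0])
    return centers


def kmeans(points, k, seed=0, max_iter=100):
    """Lloyd's algorithm; returns (labels, centers)."""
    rng = random.Random(seed)
    centers = kmeans_pp_init(points, k, rng)
    labels = []
    for _ in range(max_iter):
        # Assignment step: nearest center by Euclidean distance.
        labels = [min(range(k), key=lambda c: dist(p, centers[c])) for p in points]
        # Update step: move each center to the mean of its members.
        new_centers = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centers.append(tuple(sum(x) / len(members) for x in zip(*members)))
            else:
                new_centers.append(centers[c])  # keep an empty cluster's center
        if new_centers == centers:
            break
        centers = new_centers
    return labels, centers


# Hypothetical normalized scores (fire pump, HVAC roof, air conditioning).
SCORES = [
    (0.95, 0.90, 0.60), (0.92, 0.85, 0.55), (0.97, 0.80, 0.70),
    (0.50, 0.40, 0.50), (0.45, 0.35, 0.40), (0.55, 0.50, 0.45),
]

if __name__ == "__main__":
    labels, centers = kmeans(SCORES, k=2)
    fast = max(range(2), key=lambda c: sum(centers[c]))
    share = labels.count(fast) / len(labels)
    print(f"fast learners: {share:.0%}")
```

Unlike the hierarchical Ward procedure, K-means reassigns points on every iteration, which is one reason the two methods can place borderline participants in different clusters.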

The results indicate that only those participants who score above 90% on the test of skills acquired during training with the fire pump station VR module and above 70% on the test of skills acquired during training with the HVAC roof station VR module will be classified as fast learners. The test results for the air conditioning unit module carry relatively little weight in evaluating the employee’s learning speed. The air conditioning unit module contained the most elements of low technical complexity, such as checking the correctness of machine operations, checking for dirt, checking if cables are connected, and so on. Hence, the last test examined the conscientiousness of the performance of individual tasks and was less attractive to the participants than the other modules. This is in line with research showing that learning was positively predicted by the degree of situational interest experienced by the participants [33]. In turn, situational interest resulted in greater immersion and interactivity [33]. It is also worth noting that people without working experience with air conditioners or with less than five years of professional experience in FM represent a relatively high share of the fast learner cluster. Therefore, the results may indicate that the predisposition to fast learning with VR technology does not necessarily result from participants’ professional experience but presumably from the quality of the constructed VR training environment. At the same time, based on the results, it is impossible to determine the impact of professional experience clearly. Intuitively, people who have worked for many years with various devices in the FM industry should achieve better results.

6. Conclusions

This article presents the concept of a VR environment for the training and evaluation of facility management staff using eye tracking, which allows for the reconstruction of identical test conditions for all participants, high comparability of training results, and advanced analysis of the results achieved by individual employees. Eye-tracking technology allows for an accurate analysis of the focus of each participant on the critical elements of the devices in the decision-making process, which provides a much more comprehensive range of information on the concentration and correctness of the employee’s actions. The eye movement information allows us to better assess the learning process and the correctness of completing tasks within individual VR modules.

The analysis of results achieved by training participants allowed them to be divided into two groups, which were defined as fast and slow learners. This was possible thanks to clustering the results of each participant’s skills tests conducted after completing training in all VR modules. The analysis of the results showed that the attractiveness of the tasks performed in VR modules impacts the result of participant training. In addition, among people included in the fast learner cluster, there were relatively many people without experience working in the FM industry. The training results appear to depend more on the quality of the constructed VR training environment and less on experience using similar devices. Recognizing an employee’s ability to learn is essential to the employer, as it enables the identification of employees who require additional support. Thanks to the obtained information, it will be possible to predict how much training a given employee will need to master the knowledge within the industry specialization. This information will make it possible to propose a strategy for the professional development of this person within the examined competencies. Ultimately, the training should be fitted to the user and supported by a user-assistance system to help the participant learn effectively.

The research results contribute to employee training in inspections of individual components of office and industrial buildings. Based on the research, a process innovation was developed as a cloud-based platform for the training and skill evaluation of facility management staff using a VR environment and eye-tracking technology: https://www.vrfmhub.com/en (accessed on 2 May 2023). Using the VR environment technology, an employee can enter a simulated version of a building’s technical infrastructure and receive practical training and verify skills in inspecting its tasks without exposing themselves to any danger or risking any damage to the technical infrastructure. The developed solution is intended for use by the facility management industry based on the SaaS (Software as a Service) sales model, in which the provider develops and maintains cloud applications, ensures their automatic updates, and makes the software available to its customers via the Internet on a “pay-as-you-go” basis, i.e., depending on the use of resources. Training in the VR environment with the use of eye-tracking technology, offered in the SaaS model with the possibility of purchasing the service online, has a good chance of achieving widespread use, as it will be an attractively priced opportunity to comfortably improve and verify employees’ qualifications while reducing technical barriers to application. It is worth noting that it is possible to use achieved research results to build similar solutions for other industries.

Future work will focus on developing a classifier that will automatically evaluate the results of the skills tests considering the results of eye movement. However, VR training with eye tracking provides a large amount of data describing the proceedings of the training process. Creating a classifier must be preceded by research on the selection and processing of this source data to obtain valuable parameters needed to evaluate the results of the skills tests in a VR environment. In the future, the data obtained from eye tracking can also be used to identify the trainee to check whether we are evaluating the right person, i.e., whether there is no fraud involving taking the exam by people other than those who should take it. Precise identification of the trainee is critical in the case of training available via the Internet.