Abstract

Deep learning has been widely applied in emitter identification. As research has deepened, emitter identification under few-shot conditions has become a frontier research direction. OFDM (orthogonal frequency division multiplexing) signals are a special type of communication signal with high complexity, so emitter identification for OFDM signals is extremely challenging. In this paper, we propose an emitter identification method based on contrastive learning and a residual network. First, according to the particular structure of OFDM signals, we adjust the structure of ResNet and propose a targeted data preprocessing method. Then, we design several data augmentation strategies to construct positive samples and conduct self-supervised pretraining that distinguishes the features of positive and negative samples in the hidden space. Because the classifier is built on the pretrained feature extractor, it is no longer trained from scratch. Extensive experiments validate the effectiveness of the proposed method.

1. Introduction

Communication emitter individual identification technology is of great significance for improving the security of wireless communication systems and strengthening communication reconnaissance and countermeasure capabilities [1]. Essentially, emitter individual identification is a classification task: using training samples whose emitter categories are known, it trains a feature extractor and a classifier, and then tests samples of unknown category to determine which emitter produced them. The key to emitter identification is extracting effective features. Deep learning has been widely applied in image classification, and emitter identification is recognized as an important application of deep learning. However, many problems remain under few-shot conditions. At the same time, because of the particularity and complexity of OFDM signals, little progress has been made in deep-learning-based emitter identification for OFDM signals.

Among the various communication emitter resources, OFDM divides the available band into multiple subcarriers, so that a single time-domain signal contains components in multiple frequency bands. This meets users' demand for high data rates and helps overcome the physical limitations of spectrum resources, so OFDM is widely used. OFDM signals are usually the superposition of multiple subcarriers, and the overlap between subcarriers often obscures the key features, making it difficult to use the data efficiently. Therefore, extracting features reasonably and accurately is the key to OFDM signal identification. In addition, deep neural networks have many parameters and need large amounts of data for training. In practical electronic communication environments, only a small number of signal samples can be collected, which easily leads to overfitting and reduces classification accuracy. Individual identification under few-shot conditions is therefore an important challenge.

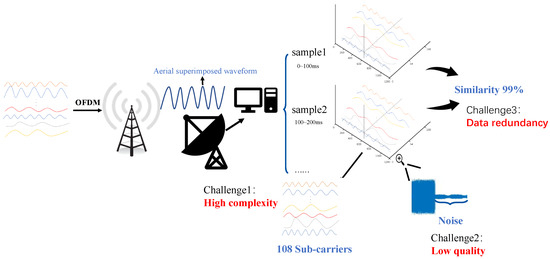

As shown in Figure 1, OFDM signals carry multiple subcarriers. In our data, each OFDM symbol has 108 subcarriers that are transmitted in parallel, which makes the signal highly complex and causes the subcarrier spectra to overlap. However, CNN-based emitter identification usually takes the signal's spectrum as input, and feeding this overlapped OFDM spectrum to a CNN causes the model to collapse. This is the first challenge. Second, noise and mutual interference between subcarriers are common, so the signal quality is poor; learning effectively from such data is another challenge. Finally, each sample spans 100 ms and most OFDM samples come from continuous sampling, so the useful samples are very similar to one another, and near-identical samples that appear repeatedly are no longer distinguishable. We therefore study OFDM signal identification under few-shot conditions.

Figure 1.

Challenges of OFDM signal identification. There are three challenges: high complexity, low quality, and data redundancy. In particular, OFDM signals have 108 subcarriers, their quality is likely to be poor, and our work is carried out under few-shot conditions.

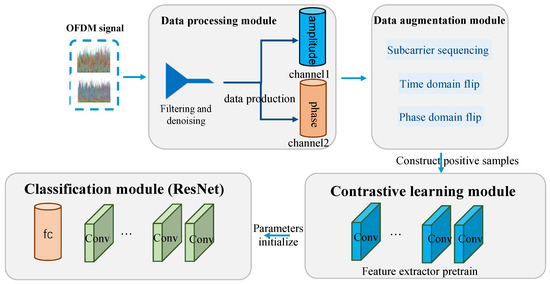

As shown in Figure 2, to address the particularity of OFDM signals, we propose an individual identification method for OFDM signals based on a residual network, which takes as input two-channel data generated from the amplitude and phase of the signal. The residual network is modified so that its input layer focuses on extracting features within the same subcarrier. In addition, we propose three data augmentation strategies, subcarrier rerank, time domain flip, and phase domain flip, to create positive samples, and then carry out self-supervised pretraining that distinguishes the learned features of positive and negative samples in the hidden space, to deal with the few-shot challenge. Finally, the pretrained model parameters are used to initialize the feature extraction layer of the classifier, which is then trained for the final emitter identification task. We collected 4G OFDM signals in the frequency domain as the dataset and conducted multiple groups of comparative experiments under few-shot conditions. The results show that the proposed method partly addresses the high complexity of OFDM signal data, and that the data augmentation strategies and contrastive learning strengthen the generalization ability of the model and further improve accuracy.

Figure 2.

Overall structure of OFDM signal classification. Our structure has four parts: the data processing module generates two-channel datasets, and the data augmentation module generates positive samples for contrastive learning. Then, we pretrain the contrastive learning module and initialize the classification module with the pretrained model's parameters.

Our contributions are summarized as follows:

- We make the first attempt to study OFDM emitter identification with deep learning methods;

- We propose a general framework combining contrastive learning, data augmentation, and ResNet, which can extract effective features from complex OFDM data under few-shot conditions;

- We perform extensive experiments and analyze the results, demonstrating the effectiveness of our method. The results indicate that the preprocessing and training methods in our framework can be transferred to other machine learning models and to other types of signals.

2. Related Work

Emitter Identification. The key to individual identification is extracting effective features of the emitter. In complex electromagnetic environments, individual features are difficult to extract accurately. The authors of [2] proposed an individual identification method that uses the wavelet transform for feature extraction. Ref. [3] extracted radio frequency fingerprints by calculating the singular values and singular vectors of the time-frequency spectrum and classified them with a Gaussian classifier. Ren Dongfang et al. [4] used the Bhattacharyya distance to select bispectrum features and improved the rectangular integral bispectrum to obtain features. The authors of [5] proposed a global low-rank representation to extract subtle features. The authors of [6] proposed extracting the integral bispectrum of the signal as the feature vector. Han Guochuan et al. [7] proposed a method based on nonlinear dynamical features. Feature extraction methods of this kind have achieved some success in classifying communication emitters, but they often require substantial manual preprocessing and many experiments to verify the effectiveness of the feature extraction method, which makes them difficult to apply in practice to different electromagnetic signals.

Neural Network Models. With the development and wide application of deep learning, more and more researchers apply deep learning to identification problems, such as human intention recognition [8,9], video semantic recognition [10], and emitter identification [11,12,13,14]. The residual neural network proposed in [15] solved the performance degradation caused by increasing the depth of CNNs through residual modules. Qin Jia [16] optimized a deep learning model by combining it with LSTM and integrating features extracted from different convolutional layers. The authors of [17] proposed an individual emitter identification algorithm based on a stacked LSTM network, which was trained directly on IQ time-series signals. The authors of [18] converted IQ channel data into images and processed them with a convolutional neural network to complete the classification and identification task. The authors of [19] proposed an individual identification method for radiation sources based on a deep belief network under small-sample conditions. The authors of [20] applied convolutional neural networks to cognitive radio waveform recognition. Chen Hao et al. [21] proposed an identification method based on a deep residual adaptation network to realize transfer identification from a source domain to a target domain. The authors of [22] input I-channel and Q-channel signals into a deep convolutional neural network and achieved good results in radio classification tasks under different modulation modes.

Contrastive learning. Contrastive learning has gradually become a mainstream self-supervised task in computer vision. Many works [23,24,25,26,27] construct positive sample pairs by applying random data augmentation to images, decreasing the feature distance between different views of the same image and increasing the feature distance between different images. The authors of [28] presented Contrastive Predictive Coding (CPC) for extracting compact latent representations to encode predictions over future observations. Pretraining with contrastive learning aims to learn generic representations from the intrinsic structure of the data itself, makes full use of existing data for knowledge learning, and can be easily transferred to downstream tasks. The parameters of the classification model are then no longer randomly initialized, which helps achieve better results.

3. Proposed Method

In this section, we describe data collection, data preprocessing, data augmentation, and the contrastive learning and residual network framework.

3.1. Data Collection

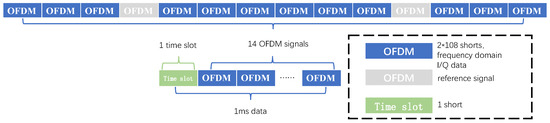

In this paper, data are collected from five different phones, and each sample covers 100 ms. As shown in Figure 3, each 1 ms contains 14 OFDM symbols and 1 time-slot number, and the data are divided into two time slots. The 14 OFDM symbols are ordered as follows: 3 effective signals, 1 reference signal, 6 effective signals, 1 reference signal, and 3 effective signals. Each OFDM symbol has 108 subcarriers, and each subcarrier is represented by two channels, I and Q. The length of the data stored in each file is therefore 100 × (108 × 2 × 14 + 1) = 302,500 bytes.

Figure 3.

OFDM signal introduction.
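The file layout above can be made concrete with a short parser. This is a minimal Python sketch, not the authors' code, and it rests on assumptions the text does not state: each file is read as a flat sequence of 302,500 numeric values, the time-slot number is the first value of every 1 ms block, and I and Q values are interleaved per subcarrier. The names `split_record`, `VALUES_PER_MS`, and `REFERENCE_SYMBOLS` are hypothetical.

```python
from typing import List, Sequence

# Layout constants taken from Section 3.1.
MS_PER_RECORD = 100        # one record covers 100 ms
SYMBOLS_PER_MS = 14        # OFDM symbols per 1 ms
SUBCARRIERS = 108          # subcarriers per OFDM symbol
IQ = 2                     # I and Q values per subcarrier
VALUES_PER_MS = SUBCARRIERS * IQ * SYMBOLS_PER_MS + 1  # +1 time-slot number
# Symbol order: 3 effective, 1 reference, 6 effective, 1 reference, 3 effective.
REFERENCE_SYMBOLS = {3, 10}


def split_record(values: Sequence[float]) -> List[List[complex]]:
    """Return the effective OFDM symbols of one record, each as 108 complex values.

    Assumptions (not stated in the paper): the time-slot number is the first
    value of every 1 ms block, and I/Q values are interleaved per subcarrier.
    """
    expected = MS_PER_RECORD * VALUES_PER_MS
    if len(values) != expected:
        raise ValueError(f"expected {expected} values, got {len(values)}")
    symbols: List[List[complex]] = []
    for ms in range(MS_PER_RECORD):
        block = values[ms * VALUES_PER_MS:(ms + 1) * VALUES_PER_MS]
        payload = block[1:]  # drop the (assumed leading) time-slot number
        for s in range(SYMBOLS_PER_MS):
            if s in REFERENCE_SYMBOLS:
                continue  # skip reference signals
            start = s * SUBCARRIERS * IQ
            iq = payload[start:start + SUBCARRIERS * IQ]
            symbols.append([complex(iq[2 * k], iq[2 * k + 1]) for k in range(SUBCARRIERS)])
    return symbols


if __name__ == "__main__":
    print(MS_PER_RECORD * VALUES_PER_MS)          # 302500
    effective = split_record([0.0] * (MS_PER_RECORD * VALUES_PER_MS))
    print(len(effective), len(effective[0]))      # 1200 108
```

With 12 effective symbols per millisecond, a 100 ms record yields 1,200 effective symbols, which is consistent with the 1,200 symbols per subcarrier used in Sections 3.3 and 4.3.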

3.2. Data Preprocessing

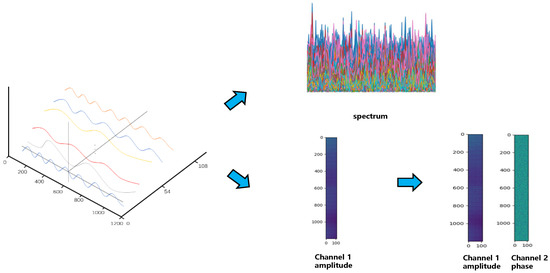

Data preprocessing is divided into three parts: effective signal screening, data normalization, and dual-channel data generation. First, each 100 ms record is segmented: the signals in each data file are divided into effective signals, reference signals, and time-slot numbers, and only the effective signals are kept. Second, the individual fingerprint features of an emitter are independent of the transmitted power of the signal, so we normalize the data samples to avoid the influence of power differences. Finally, because an OFDM signal contains 108 subcarriers, the subcarriers overlap when the signal is rendered as an image input, which gives poor results. In addition, deep learning is mostly based on real-valued operations, which to some extent loses the intrinsic connection between the I and Q components. As shown in Figure 4, we first plotted the OFDM signal as a spectrum diagram, in which the subcarriers overlap, and then saved the underlying data values instead. We compute the amplitude of the IQ data as the first channel and the phase as the second channel; the amplitude alone forms the single-channel dataset, and the amplitude and phase together form the two-channel dataset. Datasets generated in this way use both the amplitude and phase information of the IQ signal and thus exploit the internal connection between the I and Q channels.

Figure 4.

Data preparation. We tried several data types, including spectrum, one-channel (amplitude), and two-channel (amplitude and phase) data.
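The normalization and channel-generation steps can be sketched as follows. The text does not specify the normalization scheme, so scaling each sample to unit average power is an assumption here, as are the hypothetical names `normalize_power` and `to_channels`. The input is one sample as a grid of complex IQ values indexed [subcarrier][symbol] (108 × 1200 after effective-signal screening).

```python
import cmath
import math
from typing import List

Grid = List[List[complex]]  # [subcarrier][symbol], e.g. 108 x 1200


def normalize_power(grid: Grid) -> Grid:
    """Scale one sample to unit average power (assumed normalization scheme)."""
    values = [z for row in grid for z in row]
    power = sum(abs(z) ** 2 for z in values) / len(values)
    if power == 0:
        return [row[:] for row in grid]  # all-zero sample: nothing to scale
    scale = 1.0 / math.sqrt(power)
    return [[z * scale for z in row] for row in grid]


def to_channels(grid: Grid, two_channel: bool = True) -> List[List[List[float]]]:
    """Return [amplitude] or [amplitude, phase] channels for one sample."""
    amplitude = [[abs(z) for z in row] for row in grid]
    if not two_channel:
        return [amplitude]
    phase = [[cmath.phase(z) for z in row] for row in grid]  # radians in (-pi, pi]
    return [amplitude, phase]
```

The channels are returned channel-first, that is (2, 108, 1200); the (108, 1200, 2) layout reported in Section 4.3 is the same data with the channel axis last.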

3.3. Data Augmentation

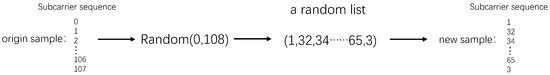

Subcarrier rerank. Since the OFDM signal contains 108 subcarriers, we propose generating extra samples by adjusting the order of the subcarriers. As shown in Figure 5, we randomly generate N orderings of the 108 subcarrier indices and reorder the subcarriers of each original sample according to each ordering, so that the same N subcarrier orders are applied to every signal. This expands the training set to N + 1 times its original size, achieving data augmentation.

Figure 5.

Data augmentation: subcarrier rerank. The order of the subcarriers of OFDM signals is randomly adjusted.
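A minimal sketch of subcarrier rerank, assuming each sample is stored as a list of 108 subcarrier rows. The helper names `make_permutations` and `subcarrier_rerank` are hypothetical, and the fixed seed is an illustrative choice; the property taken from the text is that the same N orders are reused for every sample, so each sample yields N + 1 training samples.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")  # one subcarrier row, e.g. a list of 1200 symbols


def make_permutations(n_perms: int, n_subcarriers: int = 108, seed: int = 0) -> List[List[int]]:
    """Draw N random subcarrier orders, shared by every sample in the dataset."""
    rng = random.Random(seed)
    return [rng.sample(range(n_subcarriers), n_subcarriers) for _ in range(n_perms)]


def subcarrier_rerank(sample: Sequence[T], perms: List[List[int]]) -> List[List[T]]:
    """Return the original sample plus one reordered copy per permutation.

    Rows are moved as a whole, never modified, so only the subcarrier order changes.
    """
    out = [list(sample)]
    for perm in perms:
        out.append([sample[i] for i in perm])
    return out
```

Because the permutations are drawn once and reused, every augmented copy with index n has the same subcarrier order across the whole dataset.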

Time domain flip. Exploiting the periodicity of the signal waveform, we propose a data augmentation method that numerically flips the time-domain signal to generate new samples. The 1200 symbols of each subcarrier in the original signal are flipped horizontally (reversed in time), and the flipped subcarriers are recombined into an OFDM signal containing 108 subcarriers, which becomes a new training sample.
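Time domain flip amounts to reversing each subcarrier row while leaving the subcarrier order unchanged. A short sketch, with `time_domain_flip` as a hypothetical name:

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def time_domain_flip(sample: Sequence[Sequence[T]]) -> List[List[T]]:
    """Reverse the symbol order of every subcarrier row (horizontal flip).

    Subcarrier order is unchanged, so a 108 x 1200 input stays 108 x 1200.
    """
    return [list(reversed(row)) for row in sample]


print(time_domain_flip([[1, 2, 3], [4, 5, 6]]))  # [[3, 2, 1], [6, 5, 4]]
```

Applying the flip twice returns the original sample, so it is a pure reordering that adds no new values.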

Phase domain flip. In the phase domain, the Hilbert transform is a common signal-processing tool: it shifts the phase of the original signal and thus presents the signal from a different perspective. We adopt the Hilbert transform, carrying out the convolution manually to construct the analytic signal of the target. The Hilbert transform is essentially a 90-degree phase shifter. Given a signal $x(t)$, its Hilbert transform is defined as

$$\hat{x}(t) = \mathcal{H}\{x(t)\} = \frac{1}{\pi}\,\mathrm{p.v.}\int_{-\infty}^{\infty} \frac{x(\tau)}{t-\tau}\,d\tau = x(t) * \frac{1}{\pi t},$$

and the corresponding analytic signal is $z(t) = x(t) + j\hat{x}(t)$.
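In discrete time, the convolution with $1/(\pi t)$ is usually computed in the frequency domain: keep the DC term, double the positive frequencies, zero the negative ones, and take the inverse transform to obtain the analytic signal, whose imaginary part is the Hilbert transform. This is the same construction SciPy's `scipy.signal.hilbert` uses to return the analytic signal. The sketch below implements it with a naive DFT so it needs only the standard library. Which representation the transform is applied to (for example, each subcarrier's amplitude row) is not stated in the text and is an assumption here; `hilbert_transform` is a hypothetical name.

```python
import cmath
import math
from typing import List, Sequence


def _dft(x: Sequence[complex], inverse: bool = False) -> List[complex]:
    """Naive O(N^2) discrete Fourier transform (the inverse includes the 1/N factor)."""
    n = len(x)
    sign = 1 if inverse else -1
    out: List[complex] = []
    for k in range(n):
        acc = sum(x[t] * cmath.exp(sign * 2j * math.pi * k * t / n) for t in range(n))
        out.append(acc / n if inverse else acc)
    return out


def hilbert_transform(x: Sequence[float]) -> List[float]:
    """Discrete Hilbert transform of a real sequence.

    Builds the analytic signal z = x + j*H{x} in the frequency domain by
    keeping DC (and Nyquist for even N), doubling positive frequencies and
    zeroing negative ones; H{x} is then the imaginary part of z.
    """
    n = len(x)
    if n == 0:
        return []
    spectrum = _dft([complex(v) for v in x])
    h = [0.0] * n
    h[0] = 1.0
    for k in range(1, (n + 1) // 2):  # bins 1 .. half-1 are positive frequencies
        h[k] = 2.0
    if n % 2 == 0:
        h[n // 2] = 1.0  # Nyquist bin is shared, so it is kept once
    analytic = _dft([s * w for s, w in zip(spectrum, h)], inverse=True)
    return [z.imag for z in analytic]
```

As a check, the transform maps a sampled cosine to the matching sine, which is the 90-degree shift described above. Each naive DFT over a 1,200-symbol row costs about 1.44 million complex multiply-adds, which is fine for illustration; an FFT library would be the practical choice.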

3.4. Contrastive Learning and Residual Network Framework

Emitter identification is a classification problem in deep learning. To solve the identification problem for OFDM signals under few-shot conditions, we divide training into two stages: self-supervised pretraining based on contrastive learning and transmitter classification based on a CNN.

We use a residual network as the backbone for this classification task. Its advantage lies in the residual blocks added to the convolutional neural network: instead of learning the original mapping directly, each block learns the difference between that mapping and its input feature x. This alleviates the degradation problem, in which training error increases as more layers are added, as well as vanishing gradients, so deeper networks can outperform shallower ones.
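For reference, the residual formulation of [15] can be written out explicitly. This is the standard form from that paper: for a block with input $\mathbf{x}$ and desired underlying mapping $\mathcal{H}(\mathbf{x})$, the stacked layers with weights $\{W_i\}$ learn the residual $\mathcal{F}$, and the identity shortcut adds the input back:

$$
\mathcal{F}(\mathbf{x}, \{W_i\}) = \mathcal{H}(\mathbf{x}) - \mathbf{x}, \qquad
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}, \qquad
\frac{\partial \mathbf{y}}{\partial \mathbf{x}} = \frac{\partial \mathcal{F}}{\partial \mathbf{x}} + I .
$$

The identity term in the Jacobian means gradients can pass through the shortcut unchanged, which is one way to see why stacking more residual blocks does not necessarily degrade training.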

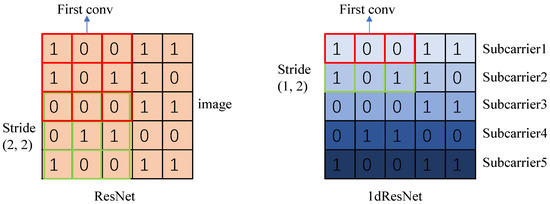

Because OFDM signals contain 108 subcarriers, we made two adjustments to the structure of ResNet: (1) The input layer of the residual network, which originally accepted three-channel RGB images, was modified to accept amplitude and phase as two input channels, and the parameters of the first two channels were copied so that the initial weights remain consistent with the original residual network. (2) We changed how the residual network extracts features by reducing the input-layer convolution kernel to one dimension, so that the network pays more attention to features within the same subcarrier. We also compared the identification performance of residual networks of different depths on OFDM signals and finally chose ResNet18 as the backbone; the difference between ResNet18 and 1dResNet18 is shown in Figure 6.

Figure 6.

ResNet is usually used to extract features from RGB images, which contain a large amount of local spatial information. In OFDM data, however, each row represents a subcarrier carrying temporal information. Therefore, it is more reasonable to use one-dimensional convolution and adjust the stride for OFDM data.
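The two input-layer adjustments can be illustrated without a deep learning framework. The sketch below, with hypothetical names `conv_along_time` and `adapt_rgb_weights`, shows (1) keeping the first two input channels of three-channel kernels so they accept amplitude and phase, and (2) a 1 × k kernel that slides only along the time axis, so each output row depends only on its own subcarrier. Like CNN layers, it computes a cross-correlation; the kernel size and stride of the actual 1dResNet18 input layer are not given in the text.

```python
from typing import List

Grid = List[List[float]]  # [subcarrier][time symbol]


def conv_along_time(x: Grid, kernel: List[float], stride: int = 1) -> Grid:
    """'Valid' 1 x k cross-correlation applied to each subcarrier row separately.

    Output row r depends only on input row r, so features are extracted within
    a subcarrier rather than across neighbouring subcarriers.
    """
    k = len(kernel)
    return [
        [sum(kernel[j] * row[t + j] for j in range(k))
         for t in range(0, len(row) - k + 1, stride)]
        for row in x
    ]


def adapt_rgb_weights(weights: List[List[Grid]]) -> List[List[Grid]]:
    """Keep only the first two input channels of 3-channel (RGB) kernels.

    `weights` is indexed [out_channel][in_channel][kh][kw]; the result accepts
    amplitude and phase as its two input channels.
    """
    return [list(per_out[:2]) for per_out in weights]


print(conv_along_time([[1, 2, 3, 4], [10, 20, 30, 40]], [1, -1]))
# [[-1, -1, -1], [-10, -10, -10]]
```

A 3 × 3 kernel, by contrast, would mix three adjacent subcarrier rows into every output value, which is what the 1-D input layer is meant to avoid.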

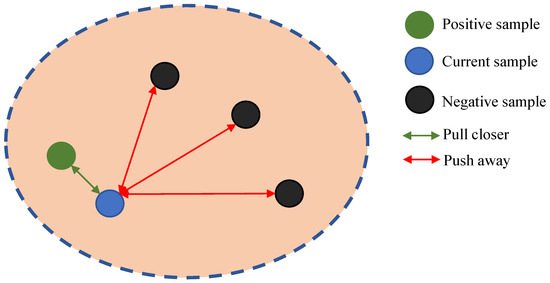

We use contrastive learning to construct positive and negative samples, pulling positive samples closer together and pushing negative samples apart in the feature space to obtain a pretrained model. The goal of contrastive learning is shown in Figure 7.

Figure 7.

The goals of contrastive learning.

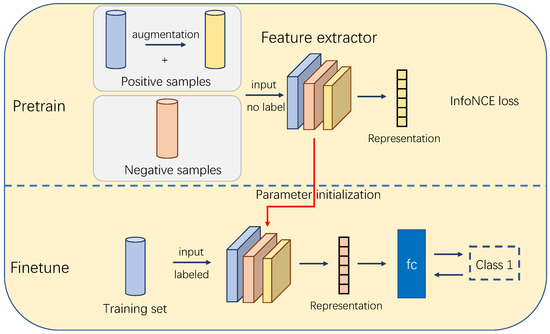

We propose a framework based on the above residual structure. As shown in Figure 8, it consists of two parts. In the upper part of Figure 8, we conduct pretraining by contrastive learning, and the pretraining network is the feature extraction layer of the residual network described above. Suppose we have an OFDM dataset $X = \{x_1, x_2, \dots, x_N\}$, where $N$ is the total number of samples. We denote the training samples in a mini-batch as $\{x_1, x_2, \dots, x_b\}$, where $b$ is the batch size. For each $x_i$ in the batch, we perform data augmentation and obtain $\tilde{x}_i$. We then take the other samples in the mini-batch, $x_j$ ($j \neq i$), and feed all of these samples into the feature extractor: the outputs for $x_i$ and $\tilde{x}_i$ are denoted $q$ and $k_+$, respectively, and the outputs for the other samples are denoted $k_1, \dots, k_{b-1}$. Since $q$ and $k_+$ come from the same sample $x_i$, they form a positive pair and should have the highest similarity. In this part, we use the InfoNCE loss function, defined as

$$\mathcal{L}_q = -\log \frac{\exp(q \cdot k_+ / \tau)}{\exp(q \cdot k_+ / \tau) + \sum_{j=1}^{b-1} \exp(q \cdot k_j / \tau)}$$

where the numerator contains the dot product of $q$ with $k_+$, the denominator sums over the dot products of $q$ with all keys $k$ (the positive key and the $b-1$ negative keys), and $\tau$ is a temperature coefficient. Minimizing this loss completes the training of the feature extraction layer.

Figure 8.

Contrastive learning and residual network framework. (1) In the upper part, we conduct pretraining based on contrastive learning: we perform data augmentation to generate positive samples, and the feature extractor is the residual network without its FC layer. The InfoNCE loss is used in this part. (2) In the bottom part, we initialize the feature extraction layer of the residual network with the pretrained model's parameters, and the loss function is cross-entropy.
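The InfoNCE loss above can be computed as follows, as a minimal pure-Python sketch. Two choices are assumptions rather than details from the text: embeddings are L2-normalized before the dot product (common in MoCo [23] and SimCLR [24]), and the default temperature 0.07 is MoCo's value, since the paper does not report τ. Following the description above, the hypothetical `batch_info_nce` uses the other original samples in the mini-batch as negatives; MoCo itself instead draws negatives from a queue of momentum-encoded keys.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def _normalize(v: Vector) -> List[float]:
    """Scale a vector to unit L2 norm (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def _dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def info_nce(q: Vector, k_pos: Vector, k_negs: List[Vector], tau: float = 0.07) -> float:
    """-log( exp(q.k+/tau) / (exp(q.k+/tau) + sum_j exp(q.k_j/tau)) )."""
    qn = _normalize(q)  # assumption: L2-normalized embeddings
    logits = [_dot(qn, _normalize(k_pos)) / tau]
    logits += [_dot(qn, _normalize(k)) / tau for k in k_negs]
    m = max(logits)  # subtract the max before exp for numerical stability
    log_denominator = m + math.log(sum(math.exp(s - m) for s in logits))
    return log_denominator - logits[0]


def batch_info_nce(z: List[Vector], z_aug: List[Vector], tau: float = 0.07) -> float:
    """Mean loss over a mini-batch: z_aug[i] is the positive key for query z[i],
    and the other original samples z[j] (j != i) are its negative keys."""
    losses = []
    for i, q in enumerate(z):
        negatives = [z[j] for j in range(len(z)) if j != i]
        losses.append(info_nce(q, z_aug[i], negatives, tau))
    return sum(losses) / len(losses)
```

For example, with $\tau = 1$, a query that matches its positive exactly and is orthogonal to a single negative gives a loss of $\ln(1 + e^{-1}) \approx 0.313$; the loss falls toward zero as the positive similarity grows relative to the negatives.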

Next, in the fine-tuning phase, we use the training dataset X to train the full residual network, including the fully connected layer. We first initialize the feature extraction layer of the residual network with the pretrained model's parameters and then guide training with the cross-entropy loss function, formulated as:

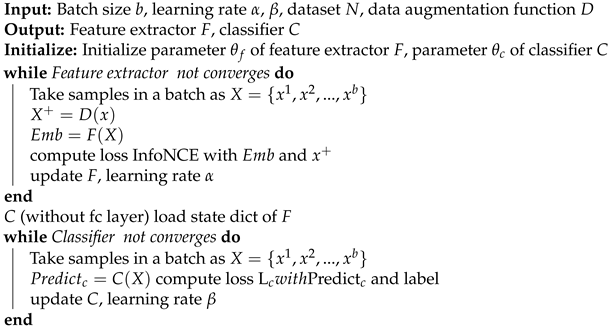

where $p(c)$ represents the probability that the sample actually belongs to class $c$, and $q(c)$ represents the predicted probability that it belongs to class $c$. The proposed training strategy is summarized in Algorithm 1.
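For one-hot labels, the cross-entropy $-\sum_{c} p(c)\log q(c)$, with true distribution $p$ and predicted distribution $q = \mathrm{softmax}(\text{logits})$, reduces to the negative log-probability of the true class. A minimal, numerically stable sketch (the function name is hypothetical):

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Cross-entropy for a one-hot target: -log softmax(logits)[label]."""
    m = max(logits)  # shift for numerical stability; the result is unchanged
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]
```

With five equally likely emitter classes, an untrained classifier that outputs equal logits has a loss of $\ln 5 \approx 1.609$, a useful sanity check at the start of fine-tuning.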

In this framework, the internal information of few-shot OFDM data is expressed more fully, and the classifier is no longer trained from scratch, so the model can learn more effective and expressive features and achieve better classification accuracy.

Algorithm 1.

Training Strategy.
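The key step of the training strategy, initializing the classifier from the pretrained feature extractor, can be sketched with plain dictionaries standing in for framework state dicts. The parameter names, the uniform initialization of the new fully connected layer, and the function name `init_classifier` are illustrative assumptions, not the authors' implementation.

```python
import random
from typing import Dict, List

State = Dict[str, List[float]]  # parameter name -> flattened values (illustrative)


def init_classifier(pretrained: State, feature_dim: int, num_classes: int,
                    seed: int = 0) -> State:
    """Copy the pretrained feature-extractor parameters and add a new FC head."""
    rng = random.Random(seed)
    state = {name: list(values) for name, values in pretrained.items()}  # copies, not aliases
    bound = 1.0 / feature_dim ** 0.5  # simple fan-in scaled uniform init (assumption)
    state["fc.weight"] = [rng.uniform(-bound, bound) for _ in range(num_classes * feature_dim)]
    state["fc.bias"] = [0.0] * num_classes
    return state
```

For ResNet18 the pooled feature dimension is 512, so for the five-phone task the call would look like `init_classifier(pretrained_state, feature_dim=512, num_classes=5)`, where `pretrained_state` is a hypothetical name for the parameters saved after contrastive pretraining.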

4. Experiments

4.1. Data Construction

The experimental data were collected from the 4G signals of 30 mobile phones, all using the same SIM card. OFDM frequency-domain signals from 5 randomly selected mobile phones were used to construct the dataset. The signal structure is described in Section 3.1. Our experiments are carried out under few-shot conditions, and the numbers of samples for the five mobile phones are 62, 54, 60, 60, and 49, respectively. All samples are divided into training and validation sets at a ratio of 4:1. To reduce the effect of randomness, each experiment was repeated 10 times, and the average accuracy and standard deviation were calculated.
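The evaluation protocol can be sketched as follows. Whether the 4:1 split is stratified per phone, and whether the sample or population standard deviation is reported, is not stated; this sketch pools all 285 samples (62 + 54 + 60 + 60 + 49) before splitting and uses the sample standard deviation. The function names are hypothetical.

```python
import random
import statistics
from typing import List, Sequence, Tuple


def split_4_to_1(n_samples: int, seed: int) -> Tuple[List[int], List[int]]:
    """Shuffle sample indices and split them 4:1 into training and validation."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    n_val = round(n_samples / 5)
    return idx[n_val:], idx[:n_val]


def summarize(accuracies: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of accuracies over repeated runs."""
    return statistics.mean(accuracies), statistics.stdev(accuracies)
```

With 285 samples this gives 228 training and 57 validation samples per run; repeating with seeds 0 to 9 and passing the ten validation accuracies to `summarize` yields the reported mean and standard deviation.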

4.2. Implementation Details

In the experiments, we use SGD as the optimizer, with a learning rate of 10⁻³ for the pretraining phase and 10⁻² for the fine-tuning phase. The batch size b is set to 32, the momentum is 0.9, and the numbers of training iterations for pretraining and fine-tuning are 30 and 100, respectively. The FLOPs and parameters of our method are shown in Table 1.

Table 1.

FLOPs and parameters of our model. The batch size affects the FLOPs, while the number of parameters is fixed. The input dimension is (batch size, number of channels, dim1, dim2).
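The optimizer settings above can be collected in a small configuration, together with one SGD-with-momentum update. The update follows the PyTorch convention (the velocity accumulates raw gradients, with no dampening or weight decay); that the authors used this exact variant is an assumption, and `PhaseConfig` and `sgd_momentum_step` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PhaseConfig:
    """Optimizer settings for one training phase (values from Section 4.2)."""
    lr: float
    iterations: int
    batch_size: int = 32
    momentum: float = 0.9


PRETRAIN = PhaseConfig(lr=1e-3, iterations=30)
FINETUNE = PhaseConfig(lr=1e-2, iterations=100)


def sgd_momentum_step(w: List[float], grad: List[float], velocity: List[float],
                      cfg: PhaseConfig) -> None:
    """In-place update: v <- momentum * v + g, then w <- w - lr * v."""
    for i, g in enumerate(grad):
        velocity[i] = cfg.momentum * velocity[i] + g
        w[i] -= cfg.lr * velocity[i]
```

With the fine-tuning settings, a weight of 1.0 and a constant gradient of 0.5 move to 0.995 after one step and 0.9855 after two, showing how momentum enlarges successive steps in a consistent gradient direction.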

4.3. Experimental Results and Analysis

This is the first study of individual identification of OFDM signals based on deep learning, so there is no direct baseline. However, our work proposes improvements and innovations in data preprocessing, data augmentation, model structure, and training methods. Therefore, in the experimental part of this paper, we examine these aspects one by one to show how the identification accuracy of OFDM signals improves step by step and to confirm the effectiveness of the proposed methods.

Data preprocessing experiment analysis. In this paper, we propose converting the IQ data into single-channel and dual-channel data during preprocessing. The dimension of single-channel data is (108, 1200, 1), and that of dual-channel data is (108, 1200, 2). The backbone model is ResNet18 with a modified number of input channels, and the spectrogram of the signal is used as the baseline input. The experimental results are shown in the “Input” part of Table 2. With the spectrum map as input, performance is poor, because OFDM contains 108 subcarriers whose overlap blurs the features, so little of the information is used. In contrast, building the channel datasets from the raw data retains more information and achieves higher accuracy. A complex number can be described by its amplitude and phase, and the dual-channel data built from both achieve the highest accuracy.

ResNet structural adjustment experiment analysis. As mentioned in Section 3.4, because OFDM signals contain multiple subcarriers, we adjust the parameters of the ResNet input layer. Here, we compare the adjusted residual network with ResNet18; the experimental results are shown in the “Model” part of Table 2. After the input layer is adjusted, 1dResNet18 focuses on features within a subcarrier rather than between subcarriers, while all subcarriers are still considered, which makes information use more reasonable and sufficient. As a result, its average accuracy is 2.58% higher than that of ResNet18 and its standard deviation is smaller, which supports the effectiveness of this adjustment.

Table 2.

Maximum and average identification accuracy for OFDM signals and radio signals. We show the effectiveness of our method by adding the proposed modules step by step. Unlike OFDM signals, the radio signals have only one carrier, and their modulation type is unknown.

Pretraining based on contrastive learning experiment analysis. On top of our adjusted residual network, we introduce pretraining based on contrastive learning. During pretraining, positive samples are built with the data augmentation methods, and the other samples in the same batch serve as negative samples for training with the InfoNCE loss. We have three data augmentation methods in total, and we also test selecting one of them at random in each training iteration. The results are shown in the “Data augmentation in contrastive learning” part of Table 2. With 1dResNet18 without pretraining as the baseline (70.86%), all three augmentation methods achieve better results. Among the three, phase domain flip achieves the highest average accuracy, 80.03%. Although time domain flip has a lower maximum accuracy, its average accuracy is higher than that of subcarrier rerank and its standard deviation is smaller, indicating that it is more stable. Finally, randomly choosing one augmentation method each time yields the highest average accuracy overall, 81.55%.
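The random-augmentation setting can be sketched as drawing one augmentation per training iteration and applying it to every sample in the batch to obtain the positive views. The sketch includes simplified time-flip and subcarrier-shuffle functions so that it runs on its own; unlike Section 3.3, the shuffle here draws a fresh order on each call rather than reusing N fixed permutations, and a Hilbert-based phase flip could be appended to the list in the same way. All names are hypothetical.

```python
import random
from typing import Callable, List, Sequence

Grid = List[List[float]]  # [subcarrier][time symbol]
Augmentation = Callable[[Grid, random.Random], Grid]


def time_flip(x: Grid, rng: random.Random) -> Grid:
    """Reverse every subcarrier row in time (rng unused)."""
    return [row[::-1] for row in x]


def subcarrier_shuffle(x: Grid, rng: random.Random) -> Grid:
    """Reorder subcarrier rows with a freshly drawn random permutation."""
    order = rng.sample(range(len(x)), len(x))
    return [list(x[i]) for i in order]


def make_positive_views(batch: Sequence[Grid], augmentations: Sequence[Augmentation],
                        rng: random.Random) -> List[Grid]:
    """Draw one augmentation for this iteration and apply it to every sample."""
    augment = rng.choice(list(augmentations))
    return [augment(x, rng) for x in batch]
```

Each original sample and its view then form the (query, positive key) pair for the InfoNCE loss, while the other samples of the batch act as negatives.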

Additional experiments. To better show the difficulty of the OFDM signal identification task, we conducted an additional experiment: we collected radio signals from three categories, which have no subcarriers, and trained the adjusted ResNet on them. As shown in the “Radio signals” part of Table 2, the radio signal dataset achieves good performance on ResNet, with a high and stable average accuracy. Such results are good enough to support practical applications, which reflects the strong feature extraction and classification ability of ResNet and also highlights the difficulty of our task.

4.4. Model Robustness Experiment

We conducted a group of experiments on radar signals to verify the robustness of our method. First, the whole dataset is divided into two parts, with a training set of 10 magnitudes. We use ResNet as the baseline. We then randomly select 10-level data from the training set, process them with the contrastive learning framework, and test with the same algorithm. The results are shown in Table 3.

Table 3.

Model accuracy when the number of training set samples drops sharply.

When the training set is of 10 magnitudes, the baseline achieves an accuracy of 96.86%; when the training set is reduced to 10, the baseline accuracy drops to 85.98%. With the training set of 10 magnitudes, our framework achieves a highest recognition accuracy of 97.48%, 0.62% higher than the baseline. When the training set is reduced to 10, our method achieves a highest recognition accuracy of 90.40%, 4.42% higher than the baseline. Our method therefore degrades less as the training set shrinks, which indicates better robustness.

5. Discussion

Few works have studied emitter identification for OFDM signals. We proposed methods covering the process from data collection to classification. One limitation of this work is that the identification accuracy is not yet sufficient for practical applications. In the experiments above, we confirmed the difficulty of this task and how it differs from general emitter identification tasks. We hope that our work motivates more researchers to explore this direction so that performance sufficient for practical applications can eventually be achieved.

6. Conclusions

Orthogonal frequency division multiplexing (OFDM) signals are widely used in daily life, and emitter identification for OFDM signals is extremely challenging. In this paper, we proposed a framework based on contrastive learning and residual networks. Because OFDM signals contain multiple subcarriers, we use dual-channel input and 1dResNet to extract better features from complex signals and make full use of the data. Three data augmentation strategies are proposed to construct positive samples, and they achieve good performance under few-shot conditions. Extensive experimental results demonstrate the effectiveness of our method.

Author Contributions

Conceptualization, J.Y.; data curation, Q.Z. and W.Z.; formal analysis, Y.Y.; funding acquisition, Q.Z. and W.Z.; investigation, J.Y.; methodology, J.Y., Y.Y. and Z.F.; project administration, J.Y.; resources, F.J., Q.Z. and W.Z.; software, Q.Z. and W.Z.; validation, J.Y. and Z.F.; visualization, J.Y.; writing—original draft, J.Y.; writing—review and editing, J.Y., F.J. and Y.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology on Electronic Information Control Laboratory and the National Natural Science Foundation of China (NSFC) under Grant U2001211.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jia, Y. The Research on Communication Emitters Identification Technology. Ph.D. Thesis, UESTC, Chengdu, China, 2017.

- Yu, Q.; Cheng, W. Specific Emitter Identification Using Wavelet Transform Feature Extraction. J. Signal Process. 2018, 34, 10.

- Ding, G.; Huang, Z.; Wang, X. Radio frequency fingerprint extraction based on singular values and singular vectors of time-frequency spectrum. In Proceedings of the 2018 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC), Qingdao, China, 14–16 September 2018; pp. 1–6.

- Jie, R.D.T. Communication Emitter Identification Based on Bispectrum and Feature Selection. J. Inf. Eng. Univ. 2018, 19, 6.

- Tang, Z.; Lei, Y. The extraction of latent fine feature of communication transmitter. Chin. J. Radio Sci. 2016, 31, 8.

- Lin, J.J. RF fingerprint extraction method based on bispectrum. J. Terahertz Sci. Electron. Inf. Technol. 2021, 19, 5.

- Huang, G.; Yuan, Y.; Wang, X.; Huang, Z. Specific emitter identification based on nonlinear dynamical characteristics. Can. J. Electr. Comput. Eng. 2016, 39, 34–41.

- Chen, K.; Yao, L.; Zhang, D.; Wang, X.; Chang, X.; Nie, F. A semisupervised recurrent convolutional attention model for human activity recognition. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 1747–1756.

- Zhang, D.; Yao, L.; Chen, K.; Wang, S.; Chang, X.; Liu, Y. Making sense of spatio-temporal preserving representations for EEG-based human intention recognition. IEEE Trans. Cybern. 2019, 50, 3033–3044.

- Luo, M.; Chang, X.; Nie, L.; Yang, Y.; Hauptmann, A.G.; Zheng, Q. An adaptive semisupervised feature analysis for video semantic recognition. IEEE Trans. Cybern. 2017, 48, 648–660.

- Ding, L.; Wang, S.; Wang, F.; Zhang, W. Specific emitter identification via convolutional neural networks. IEEE Commun. Lett. 2018, 22, 2591–2594.

- Shieh, C.S.; Lin, C.T. A vector neural network for emitter identification. IEEE Trans. Antennas Propag. 2002, 50, 1120–1127.

- Matuszewski, J.; Sikorska-Łukasiewicz, K. Neural network application for emitter identification. In Proceedings of the 2017 18th International Radar Symposium (IRS), Prague, Czech Republic, 28–30 June 2017; pp. 1–8.

- Kong, M.; Zhang, J.; Liu, W.; Zhang, G. Radar emitter identification based on deep convolutional neural network. In Proceedings of the 2018 International Conference on Control, Automation and Information Sciences (ICCAIS), Hangzhou, China, 24–27 October 2018; pp. 309–314.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Jia, Q. Individual Identification of Communication Emmiter Based On Deep Learning. Ph.D. Thesis, BUPT, Beijing, China, 2019.

- Wu, Z.; Chen, H.; Lei, Y.; Li, X.; Xiong, H. Communication emitter individual identification based on stacked LSTM network. Syst. Eng. Electron. 2020, 42, 9.

- Chen, Y.; Lei, Y.; Li, X.; Ye, L.; Mei, F. Specific Emitter Identification of Communication Radiation Source Based on the Characteristics IQ Graph Features. J. Signal Process. 2021, 37, 120–125.

- Liu, G.; Zhang, X. A method for personal identification of communication radiation source based on deep belief network. Chin. J. Radio Sci. 2020, 35, 9.

- Zhang, M.; Diao, M.; Guo, L. Convolutional neural networks for automatic cognitive radio waveform recognition. IEEE Access 2017, 5, 11074–11082.

- Chen, H.; Yang, J. Communicaiton transmitter individual identification based on deep residual adaptation network. Syst. Eng. Electron. 2021, 43, 7.

- West, N.E.; O’shea, T. Deep architectures for modulation recognition. In Proceedings of the 2017 IEEE International Symposium on Dynamic Spectrum Access Networks (DySPAN), Baltimore, MD, USA, 6–9 March 2017; pp. 1–6.

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 1597–1607.

- Chen, X.; Fan, H.; Girshick, R.; He, K. Improved baselines with momentum contrastive learning. arXiv 2020, arXiv:2003.04297.

- Chen, T.; Kornblith, S.; Swersky, K.; Norouzi, M.; Hinton, G.E. Big self-supervised models are strong semi-supervised learners. Adv. Neural Inf. Process. Syst. 2020, 33, 22243–22255.

- Kim, M.; Tack, J.; Hwang, S.J. Adversarial self-supervised contrastive learning. Adv. Neural Inf. Process. Syst. 2020, 33, 2983–2994.

- Oord, A.v.d.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).