1. Introduction

Most data in applications are generated by a series of computations and transformations on the original data, which makes their sources difficult to trace. For example, SwissProt and Wikipedia are curated databases whose contents are derived or edited from other sources, including other curated databases. Yet applications such as scientific computing [1], data warehousing [2], grid computing [3], curated databases [4], data flow management [5], and copyright management [6] are highly concerned with data provenance. Data provenance refers to the origin, lineage, or source of data. It is essential for assessing data quality and for understanding the lineage of and dependencies between data.

In relational databases, data are represented at different granularities: relational tables, fields, tuples, or attribute values. Data are created and transformed by operations in the database management process, including the execution of SQL statements or stored procedures and the import of external data. Adding the following functions to a database management system (DBMS) improves the usability of its provenance support: (i) extracting provenance information (both provenance data and provenance transformation) during data management; (ii) supporting provenance information at different levels of granularity; and (iii) supporting full-featured SQL, stored procedures, and even other scripting languages used for data processing in real-life applications. All of these functions play a crucial role in data interpretation, data debugging, and troubleshooting, and thus enhance the data analysis capabilities of the database system.

However, existing research on relational data provenance offers insufficient support for provenance in a DBMS. The main challenges are as follows. (i) Few studies support relational data provenance at multiple granularities simultaneously. Current provenance systems capture provenance information either at a coarse, schema-based granularity or at a fine granularity, such as tuple-based or value-based, and efficient models and methods for multi-granularity provenance coexisting in the same system are lacking. For example, many workflow systems that support data provenance attach provenance information to coarse-grained files [7,8,9,10], while other customized systems attach it to fine-grained attribute values [11,12,13]. (ii) Tuple-level and value-level provenance data have attracted more research than data transformation [14,15]. Despite their significant application value, systems based on these studies are limited in many respects; for example, they make it inconvenient to comprehensively understand provenance relationships, especially the data transformation process. (iii) Few database systems support data provenance, the exceptions being DBNotes [14], pSQL [16], ORCHESTRA [17], and ProvSQL [18]. Furthermore, a large percentage of studies are based on lineage [19,20], why-provenance and where-provenance [21], how-provenance [22], and related theories. For the convenience of theoretical research, they are confined to a simple subset of SQL, whereas practical application scenarios involve many complex SQL queries. Glavic [23,24] proposed the perm-provenance and perm-transformation representation model on the basis of lineage, which can support complex SQL queries. However, the model relies heavily on the SQL syntax structure, which makes the transformation representation redundant and complicated, hinders understanding of the dependencies between data, and makes the model hard to extend to languages other than SQL.

To address the above challenges, we present a provenance model that supports full-featured SQL and procedural query languages. Our model describes data provenance information at multiple levels (relational tables, fields, tuples, and values), with particular attention to the provenance transformation process.

The main contributions of this paper are summarized as follows:

A novel method and model of data provenance are presented. The model describes data provenance information at multiple levels, including relational table schemas, attribute fields, tuples, and values. In particular, it captures provenance transformation, which has been neglected in previous research.

Data provenance supporting full-featured SQL and procedural languages is implemented. We combine basic operations, such as projection and filtering, to represent provenance transformation, which makes it possible to support full-featured SQL and even procedural languages.

The rest of this paper is organized as follows. Section 2 introduces related research on data provenance techniques, Section 3 discusses the semantic representation of relational data provenance, Section 4 describes the relational data provenance model, and Section 5 concludes the paper.

2. Related Research

Wang et al. [25] first proposed the concept of data provenance and applied it to heterogeneous databases. Earlier research mainly focused on fine-grained (tuple- and value-level) provenance, and annotation propagation [26,27] and inverse operations were the typical methods used to track it. Woodruff et al. [28] obtained provenance data by inverting the original query, addressing the problems of data volume expansion and computational complexity. Later, Cui et al. [19,20] proposed the lineage approach, which uses reverse queries to compute tuple-level provenance data, and gave the first standardized definition of tuple-level data provenance in relational databases. Building on lineage, Buneman et al. [21] presented tuple-level and field/value-level provenance representations, called why-provenance and where-provenance, respectively, which track data provenance from different perspectives. Green et al. [22] introduced a “provenance semiring” structure for provenance representation, called how-provenance. How-provenance canonically explains how tuples are generated and plays a vital role in uncertain databases and in computing data credibility. Glavic [23,24] proposed perm-provenance on the basis of lineage, which can handle complex SQL queries. Alongside it, perm-transformation was proposed, using a relational expression tree structure to describe and compare provenance transformations. Because provenance queries are handled through a rewrite mechanism, the query engine can compute provenance data and query results simultaneously, making full use of the engine and its optimizations. Lineage; why-, where-, and how-provenance; and the extensions and optimizations of this research [17,29] form the theoretical foundation for subsequent provenance models and applications.

Typical applications of data provenance include the following. (1) Orchestra [30] is a collaborative data-sharing system based on how-provenance in a P2P environment, which exploits the characteristics of provenance transformation to synchronize data across peers. (2) DBNotes [14], a system based on where-provenance, tracks provenance information by attaching zero or more notes to every value in a relation and propagating the attached notes to query results. (3) The Trio system [15], based on how-provenance, quantitatively calculates the credibility of data in uncertain databases by combining data provenance with uncertainty theory. (4) SPIDER [31] introduces the notion of a “route” to describe the relationship between source and target data under a schema mapping and to trace the transformation process of the target data, which is very useful for understanding and exploring schema mappings.

In recent years, most research has built on these earlier studies and applied them to business contexts. Research directions include data provenance in distributed systems [32,33,34,35,36] and the compaction of data provenance information with full-featured query languages [37,38,39,40,41,42]. Studies on full-featured query languages are closely related to ours, and we highlight them below. ProvenanceCurious [43] is a tool for inferring data provenance in scripting languages. It presents a provenance graph as a view of data dependencies, which helps with debugging. The tool supports only Python as the scripting language and provides neither further details nor a formal definition of the transformation process. Inspired by program slicing and abstract interpretation, Müller et al. [44] proposed an approach that translates the query language into an abstract Turing-complete language (similar to Python), which can compute and track fine-grained (tuple- and value-level) provenance data based on why- and where-provenance. This method handles arbitrary SQL constructs, such as (correlated) subqueries, recursive common table expressions, and built-in and user-defined functions, which are valuable in real-world database scenarios. Dietrich et al. [45] developed observational debuggers for SQL. These studies show that data lineage no longer relies solely on SQL, Datalog, or simple streaming processes, and that support for procedural languages with control structures is an urgent requirement.

3. Semantic Expression of Relational Data Provenance

Relational data provenance refers to the source of relational schemas or relational data (tables, fields, tuples, values) and all operations involved in changing these data during database management. This section first gives a canonical definition of provenance data based on where-prov and extends it to the tuple level (TupWhere-Prov). It then defines provenance explaining transformation (PET) to describe provenance transformation in SQL and procedural languages.

3.1. Contribution Semantics

From the query perspective, relational schemas and relational data are the results of SQL execution in a DBMS, and all inputs are provenance data of the output. Such extremely coarse-grained provenance carries little meaning and requires storing a large amount of provenance data. It is therefore more reasonable to treat as provenance data only the part of the input that contributes to the output. Current research divides provenance by contribution semantics, which fall into three categories at a high level: input contribution semantics, copy contribution semantics, and influence contribution semantics [23]. Under input contribution semantics, all inputs are provenance data. Under influence contribution semantics, only the inputs that affect the existence of the outputs are provenance data, and missing any of these data items makes the provenance unrecoverable. Under copy contribution semantics, only the data items (partially or completely) copied to the output are provenance data. For example, some items are used only to filter data and are not copied to the output; these items are provenance data under influence contribution semantics but not under copy contribution semantics.
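To make the three contribution semantics concrete, the following Python sketch evaluates a hypothetical filter query, `SELECT Sno FROM Suppliers WHERE City = 'London'`, over a toy table and returns the input field locations that each semantics would count as provenance. The table contents, the `provenance` helper, and the simplified field-level treatment of influence semantics are illustrative assumptions, not the formal definitions of [23].

```python
from typing import Dict, List, Set, Tuple

Loc = Tuple[str, str, str]  # field location: (relation, tuple id, field)

# Hypothetical toy relation for SELECT Sno FROM Suppliers WHERE City = 'London'.
SUPPLIERS: List[Dict[str, str]] = [
    {"id": "s1", "Sno": "S1", "City": "London"},
    {"id": "s2", "Sno": "S2", "City": "Paris"},
    {"id": "s3", "Sno": "S3", "City": "London"},
]
FIELDS = ("Sno", "City")


def provenance(semantics: str) -> Set[Loc]:
    """Input locations treated as provenance of the query result under one semantics."""
    if semantics == "input":
        # Every input location counts, whether or not it reaches the output.
        return {("Suppliers", t["id"], f) for t in SUPPLIERS for f in FIELDS}
    selected = [t for t in SUPPLIERS if t["City"] == "London"]
    if semantics == "influence":
        # Values deciding whether an output exists, including the filtered City field.
        return {("Suppliers", t["id"], f) for t in selected for f in FIELDS}
    if semantics == "copy":
        # Only values actually copied into the output (the Sno column).
        return {("Suppliers", t["id"], "Sno") for t in selected}
    raise ValueError(f"unknown semantics: {semantics}")


for name in ("input", "influence", "copy"):
    print(name, sorted(provenance(name)))
```

The `City` values of the selected suppliers appear under influence semantics but not under copy semantics, which is exactly the filter case described above.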

Investigating the provenance transformation process amounts to evaluating the contribution semantics of provenance data. This is difficult in data provenance systems because SQL queries and relational algebra operations are the units of the transformation process. Input contribution semantics ignore the internal details of transformations, treating the process as a black box. In contrast, copy and influence contribution semantics analyze the details of the modifications, and influence semantics capture richer details than copy semantics. However, influence semantics are complicated and cumbersome for understanding the relationships between data in a complex query language.

Previous studies use how-prov and perm-transformations to express provenance transformation. However, the K-relation expression of how-prov contains little information about the provenance transformation, and the relational algebra expression of perm-transformations, a tree structure that relies heavily on SQL syntax, is complicated and cumbersome for complex queries. Therefore, this paper proposes provenance explaining transformation (PET) to describe the transformation in more detail. PET is built from several basic operations (such as projection and join), which allow it to describe transformations in both SQL and stored procedures. To eliminate the influence of complex language features, such as those of SQL, and to explain the provenance transformation process concisely and clearly, we choose where-prov, a kind of copy contribution semantics, as the theoretical basis of provenance data and define PET based on it.

3.2. Terminology and Symbols

In order to facilitate the definition of methods and models, we introduce symbols and the relational algebra used in the subsequent sections.

Let $\mathcal{U}$ be a collection of field names (or attribute names) $A, B, \ldots$; $U$ and $V$ are finite subsets of $\mathcal{U}$, and $\mathcal{D}_A$ is the finite domain of attribute $A$. A record (or tuple) is a function $t: U \to \mathcal{D}$, where $\mathcal{D}$ is the union of the attribute domains, written as $(A_1: a_1, \ldots, A_n: a_n)$. Then $t.A$ is the value of field $A$, and $t[U]$ restricts the tuple $t$ to the field set $U$.

A relation or table $r: U$ is a finite set of tuples over $U$. Let $\mathcal{R}$ be a finite collection of relation names $\{R_1, \ldots, R_n\}$. A schema $\mathbf{R}$ is a mapping $R_1: U_1, \ldots, R_n: U_n$ from $\mathcal{R}$ to finite subsets of $\mathcal{U}$. A database (or instance) $I$ is a function mapping each $R: U \in \mathbf{R}$ to a relation over $U$. For a relation table $R$ and an attribute set $A \subseteq U$, $t.A$ is the value of tuple $t$ ($t \in R$) on the field set $A$.

We also define tuple locations as tuples tagged with relation names, written as $(R, t)$. Similarly, field locations are written as triples $(R, t, A)$. If the context is clear, the triple $(R, t, A)$ is simplified to $t.A$. Moreover, concatenating tuples $u$ and $v$ yields a tuple $t$.

Constant data involved in SQL, including constants, constant tuples such as VALUES (101, ‘abc’), and data in external text files, are uniformly replaced by a single placeholder notation to minimize the impact of tedious constant data on the discussion. For instance, in the expression “A + func(B, 1) − 2”, the constants 1 and 2 are both replaced by this placeholder.

We use the expression Q(I) as a query for a database instance I and for any output tuple . R(I) is a relational table in the instance I. Particularly, a query is written as Q in the context where the instance I is clear. In query , q is the language structure that corresponds to SQL, stored procedural language, and other data processing script languages. is the relational table name involved in the query Q. In particular, only references the relational table .

We use the following notation for (monotone) relational algebra queries:

Here, {t} is a set of constant tuples, and the remaining operators denote selection, projection, join (or natural join), union, and group aggregation, respectively. In the group aggregation operation, e is a calculation expression parameterized by the grouping field A, g is the set of output tuples, and the aggregation function takes as input the values of the tuples in group G on the aggregation field B. Each final output tuple is the concatenation of the result of the calculation expression and the result of the aggregation.

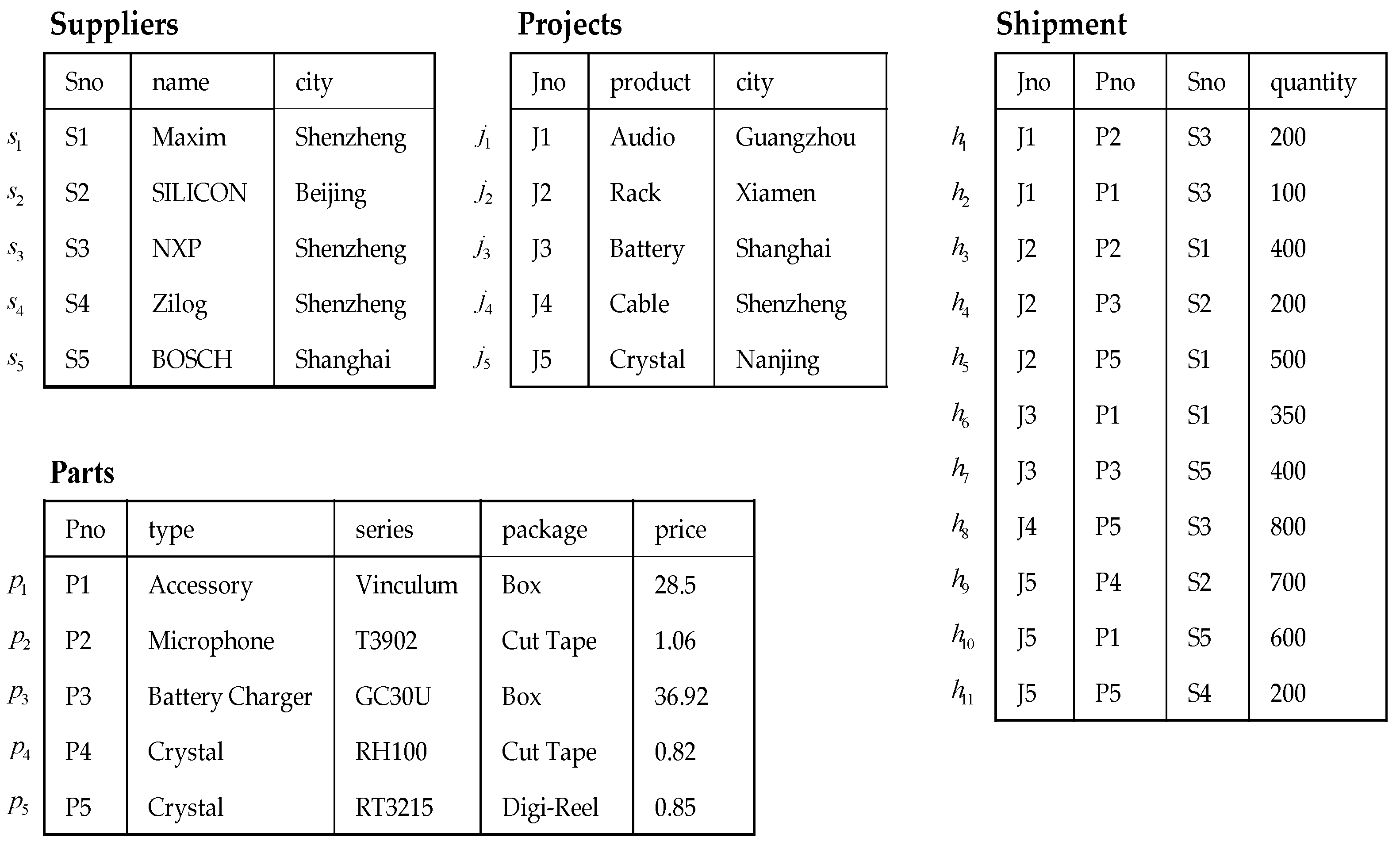

To illustrate the semantics of our method with examples, we consider the suppliers–parts–projects database, reproduced for convenience in Figure 1, where the labels attached to the tuples are used to identify them.

3.3. Representation of Provenance Data

Where-prov is a copy contribution semantics that describes the relationship between inputs and outputs by defining propagation rules for attribute values over algebraic operations. To support full-featured SQL and procedural language queries, we extend the annotation propagation rules of [46] to the group aggregation operation and formally define where-provenance on the filter, projection, join, union, and group aggregation operations (see Definition 1).

Hereafter, we consider annotated relations and annotations propagating between locations in the database. Assume that a location is denoted as a triple (R, t, A), which carries a set of zero or more annotations.

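Selection. For $\sigma_\theta(Q)$, an annotation on $(Q, t, A)$ propagates to $(\sigma_\theta(Q), t, A)$ if $\theta(t)$ holds.

Projection. For $\pi_U(Q)$, an annotation on $(Q, t, A)$ propagates to $(\pi_U(Q), u, A)$, where $u = t[U]$ and $A \in U$.

Join. For $Q_1 \bowtie Q_2$, annotations on $(Q_1, t_1, A)$ and/or $(Q_2, t_2, A)$ propagate to $(Q_1 \bowtie Q_2, t, A)$, where $t[U_1] = t_1$ and $t[U_2] = t_2$, and $U_1$ and $U_2$ are the attributes of $Q_1$ and $Q_2$, respectively.

Union. For $Q_1 \cup Q_2$, annotations on $(Q_1, t, A)$ and/or $(Q_2, t, A)$ propagate to $(Q_1 \cup Q_2, t, A)$, where $t \in Q_1$ or $t \in Q_2$.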

Group Aggregation. For , annotations on or propagate to if (or ), where e and aggr denote a calculation expression and an aggregation function, respectively.

The above rules indicate that annotation propagation is based on copy behavior. If the context is clear, the triple (R, t, A) can be simplified to $t.A$.
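The selection, projection, join, and union rules can be sketched in Python as operators over annotated relations, where every value carries the set of source locations (R, t, A) copied into it. This is a minimal sketch under stated assumptions: a natural join stands in for a general join condition, projection and union merge value-identical tuples and unite their annotations (a DBNotes-style default), group aggregation is omitted, and the helper names and the supplier and project rows are hypothetical.

```python
from typing import Callable, Dict, FrozenSet, List, Tuple

Loc = Tuple[str, str, str]            # a field location (R, t, A)
Cell = Tuple[object, FrozenSet[Loc]]  # (value, annotations on that value)
ATuple = Dict[str, Cell]
Relation = List[ATuple]


def annotate(name: str, rows: List[Dict[str, object]]) -> Relation:
    """Give each base value one annotation: its own location (R, t_i, A)."""
    return [{a: (v, frozenset({(name, f"t{i}", a)})) for a, v in row.items()}
            for i, row in enumerate(rows, start=1)]


def values(t: ATuple) -> Dict[str, object]:
    return {a: v for a, (v, _) in t.items()}


def _merge(rows: Relation) -> Relation:
    """Collapse value-identical tuples, uniting their annotations field by field."""
    out: Dict[Tuple, ATuple] = {}
    for t in rows:
        key = tuple(sorted(values(t).items()))
        prev = out.get(key)
        out[key] = t if prev is None else {a: (v, ann | t[a][1]) for a, (v, ann) in prev.items()}
    return list(out.values())


def select(q: Relation, pred: Callable[[Dict[str, object]], bool]) -> Relation:
    # Selection: annotations of tuples satisfying the predicate propagate unchanged.
    return [t for t in q if pred(values(t))]


def project(q: Relation, fields: List[str]) -> Relation:
    # Projection: annotations on kept fields A in U propagate to the same field of t[U].
    return _merge([{a: t[a] for a in fields} for t in q])


def join(q1: Relation, q2: Relation) -> Relation:
    # Natural join: t[U1] = t1 and t[U2] = t2; shared fields collect annotations from both.
    out: Relation = []
    for t1 in q1:
        for t2 in q2:
            shared = t1.keys() & t2.keys()
            if all(t1[a][0] == t2[a][0] for a in shared):
                t = dict(t1)
                for a, (v, ann) in t2.items():
                    t[a] = (v, t[a][1] | ann) if a in shared else (v, ann)
                out.append(t)
    return out


def union(q1: Relation, q2: Relation) -> Relation:
    # Union: a tuple in Q1 and/or Q2 carries annotations from every side containing it.
    return _merge(q1 + q2)


# Hypothetical rows shaped like Example 1: suppliers and projects in the same city.
S = annotate("S", [{"Sno": "S1", "City": "London"}, {"Sno": "S2", "City": "Paris"}])
J = annotate("J", [{"Jno": "J1", "City": "London"}, {"Jno": "J2", "City": "Rome"}])
result = project(join(S, J), ["Sno", "Jno"])
print(result)
```

On this toy data the single result tuple has `Sno` annotated only with `("S", "t1", "Sno")` and `Jno` only with `("J", "t1", "Jno")`, while the shared `City` field of the intermediate join carries annotations from both inputs.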

Definition 1 (Where-prov). Consider a database instance I, a query Q on I, and an output tuple $t \in Q(I)$. The where-provenance of the value of t on field A according to Q and I is defined as follows:

Direct copy.

Selection.

Projection.

Join.

Union.

Definition 1 shows how the source locations of values propagate to the output under these rules, each contributing to the final output value in the copy sense.

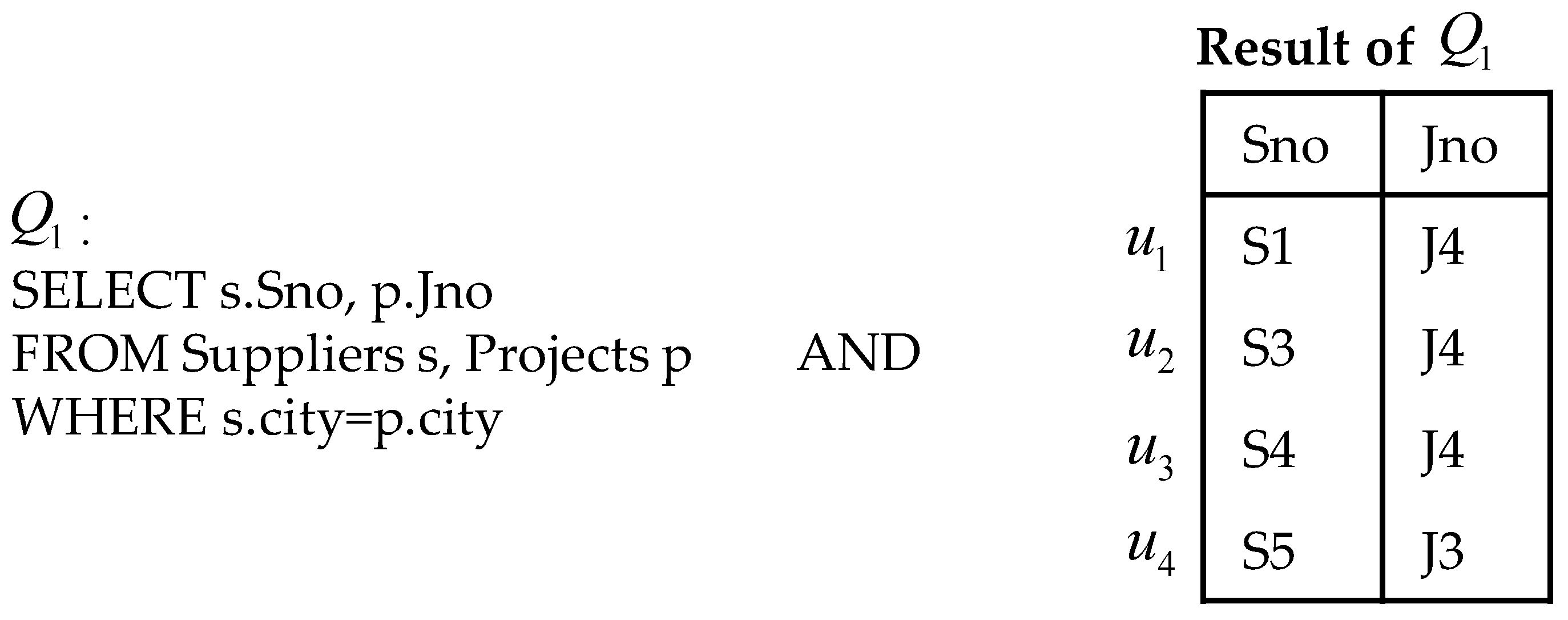

Example 1. Consider a query that finds all identifier numbers of suppliers and projects located in the same city. As shown in Figure 2, this is a typical SQL query with a corresponding relational algebra expression.

According to Definition 1, has provenance: and .

Based on where-prov, provenance can easily be extended to the tuple granularity. Intuitively, if a complete or partial copy of tuple u is used to compute the output tuple t, then u is provenance data of t. In other words, when a value of u is provenance data of a value of t, u is provenance data of t.

Definition 2 (TupWhere). Consider a tuple t, its provenance is as follows. .

According to Definition 2, the provenance of , and in the result of can be represented as , , and , respectively.
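Definition 2 lifts where-prov from values to tuples. A minimal Python sketch, assuming the field-level where-prov of one output tuple is already available as a mapping from each output field to its source locations; the `tup_where` name and the sample data are hypothetical.

```python
from typing import Dict, Set, Tuple

Loc = Tuple[str, str, str]  # value location: (relation, tuple id, field)


def tup_where(value_prov: Dict[str, Set[Loc]]) -> Set[Tuple[str, str]]:
    """Tuple (R, u) is provenance of t if any value of u is where-provenance of a value of t."""
    return {(rel, tid) for locs in value_prov.values() for rel, tid, _field in locs}


# Hypothetical field-level where-prov of one output tuple of the Example 1 query.
t_prov = {"Sno": {("S", "t1", "Sno")}, "Jno": {("J", "t1", "Jno")}}
print(sorted(tup_where(t_prov)))  # [('J', 't1'), ('S', 't1')]
```

Dropping the field component is all the lifting needs: the tuple-level provenance is coarser but is derived entirely from the value-level locations.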

Compared with value-level where-prov, a schema lies at a much coarser level of granularity. By analogy with the tuple level, if tuples of one relation table contribute to tuples of another relation table, we can infer that the schema U of the former contributes to the schema T of the latter at the schema level. Consequently, standardized definitions of field-level and schema-level provenance data can be obtained (Definitions 3 and 4).

Definition 3 (FProv). The provenance data of field A: where is any query containing A in the list of target fields.

Definition 3 states that all fields contributing to the value of every tuple on field A constitute the field-level provenance of field A.

Definition 4 (SProv). The provenance data of schema T: where is any query inserting its querying results to table T.

Under copy contribution semantics, if tuples of a relation table contribute to tuples of relation table T, then the schema of that relation table contributes to T at the schema level.
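Definitions 3 and 4 take unions over every query that writes a field or a table. Assuming each observed value-level copy is logged as a record (target table, target field, source table, source field), a log format invented here for illustration, both levels can be accumulated in one pass; the function name and the records are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# Hypothetical log format, one record per copied value:
# (target table, target field, source table, source field).
CopyRecord = Tuple[str, str, str, str]


def fprov_and_sprov(records: Iterable[CopyRecord]):
    """Union value-level contributions into field-level (FProv) and schema-level (SProv) sets."""
    fprov: Dict[Tuple[str, str], Set[Tuple[str, str]]] = defaultdict(set)
    sprov: Dict[str, Set[str]] = defaultdict(set)
    for tgt_table, tgt_field, src_table, src_field in records:
        fprov[(tgt_table, tgt_field)].add((src_table, src_field))
        sprov[tgt_table].add(src_table)
    return dict(fprov), dict(sprov)


records = [
    ("Report", "Jno", "Shipment", "Jno"),
    ("Report", "total", "Shipment", "quantity"),
    ("Report", "total", "Parts", "price"),
]
fprov, sprov = fprov_and_sprov(records)
print(sorted(fprov[("Report", "total")]))  # [('Parts', 'price'), ('Shipment', 'quantity')]
print(sorted(sprov["Report"]))             # ['Parts', 'Shipment']
```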

3.4. Representation of Provenance Transformation

In a closed relational database management system that supports data provenance, the process that generates relational data is called provenance transformation. Provenance transformation occurs at different levels of granularity, such as tuples, fields, and values. For relational data of a given granularity, the provenance transformations form a transformation expression that explains how the data were created. To illustrate data provenance accurately, this paper introduces provenance explaining transformation (PET), a method based on transformation expressions. Notably, PET describes provenance transformation within queries and applies only to the provenance transformation of tuples and values.

3.4.1. Tuple and Value Provenance Transformation

Tuples are the objects operated on when computing the provenance transformation of tuples. Modern DBMSs provide a variety of powerful functions for complex queries, but essentially all such operations can be converted into operations on two-dimensional tables. Our purpose is to eliminate the influence of the sophisticated features of query languages and, more importantly, to support data provenance for any SQL-like or procedural language with control structures. Hence, we adopt and standardize five basic operations for constructing transformation expressions. We call them meta-transformations: null transformation (ONull), projection transformation (OProj), group aggregation transformation (OAggr), join transformation (OJoin), and union transformation (OUnion). Because these transformations are essentially relational algebra operations, they can easily express SQL-like languages.

Definition 5. Copy-based meta-transformations:

Null transformation , .

Projection transformation , .

Group aggregation transformation , ,

Join transformation ,

Union transformation ,

In the above meta-transformations, the null transformation, which covers the direct copy in Definition 1, filter operations, and other database operations that involve no copy transformation, does not modify the input tuples, so each output tuple is identical to its input tuple. The projection transformation retains the specified fields of each tuple. The group aggregation transformation divides the input tuples into groups by specific attribute values, aggregates each group over the specified attributes, and outputs the results, with each input group producing one output tuple. The join transformation finds the pairs of tuples that satisfy the join condition, and the union transformation merges two input sets into one output. Join and union are binary operations but extend naturally to n-ary operations. The tuple provenance transformation PET combines these basic operations to express the provenance transformation process of complex SQL operations in the database. Using the above meta-transformations, we define PET as follows.

Definition 6. , provenance transformation of tuple t:

In Definition 6, is an expression for the transformation instead of transformation operation itself. Take as an example. For , the provenance transformation of tuple u can be expressed as , namely, .
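The data behavior of the five meta-transformations described above can be sketched as plain Python functions over lists of tuples (dicts). This is an illustration only: the function names, the `keep` predicate on `o_null`, and the bag semantics of `o_union` are assumptions of the sketch, not the paper's Definition 5. Note also that these functions evaluate data, whereas a PET records the expression built from them.

```python
from typing import Callable, Dict, List, Sequence

Row = Dict[str, object]


def o_null(rows: List[Row], keep: Callable[[Row], bool] = lambda r: True) -> List[Row]:
    """ONull: direct copies and filters pass surviving tuples through unmodified."""
    return [r for r in rows if keep(r)]


def o_proj(rows: List[Row], fields: Sequence[str]) -> List[Row]:
    """OProj: keep only the specified fields of each tuple."""
    return [{f: r[f] for f in fields} for r in rows]


def o_aggr(rows: List[Row], group: Sequence[str], field: str,
           func: Callable[[List[object]], object], out: str) -> List[Row]:
    """OAggr: one output tuple per group, aggregating `field` with `func`."""
    groups: Dict[tuple, List[object]] = {}
    for r in rows:
        groups.setdefault(tuple(r[g] for g in group), []).append(r[field])
    return [{**dict(zip(group, key)), out: func(vals)} for key, vals in groups.items()]


def o_join(left: List[Row], right: List[Row], cond: Callable[[Row, Row], bool]) -> List[Row]:
    """OJoin: concatenate every pair of tuples satisfying the join condition."""
    return [{**lt, **rt} for lt in left for rt in right if cond(lt, rt)]


def o_union(left: List[Row], right: List[Row]) -> List[Row]:
    """OUnion: merge two inputs (bag semantics in this sketch)."""
    return list(left) + list(right)


# Hypothetical data: total quantity per project for shipments larger than 20 parts.
shipment = [{"Jno": "J1", "Pno": "P1", "qty": 100},
            {"Jno": "J1", "Pno": "P2", "qty": 50},
            {"Jno": "J2", "Pno": "P1", "qty": 10}]
parts = [{"Pno": "P1", "price": 2}, {"Pno": "P2", "price": 3}]
joined = o_join(shipment, parts, lambda s, p: s["Pno"] == p["Pno"])
per_project = o_aggr(o_null(joined, lambda r: r["qty"] > 20), ["Jno"], "qty", sum, "total")
print(per_project)  # [{'Jno': 'J1', 'total': 150}]
```

The composition mirrors a PET such as OAggr(ONull(OJoin(Shipment, Parts))): the filter contributes no copy transformation of its own, so it appears only as ONull.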

It is worth pointing out that, among the above transformations, only OAggr can aggregate multiple tuples into one tuple. In this case, an output tuple may derive simultaneously from both operands of a union. Therefore, when transforming queries, the output of OUnion must be used as the input of OAggr; namely, OUnion must appear as a subexpression in the expression of OAggr. Otherwise, OUnion has no meaning in the transformation expression, and it can be replaced with either of its operands, or with both as parallel alternatives.
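Why OUnion is meaningful only beneath OAggr can be checked on data. In the sketch below, the two union operands are labeled E1 and E2 (hypothetical labels) and the rows are invented: without aggregation every output tuple traces back to exactly one operand, whereas after grouping, one output tuple can draw on both.

```python
from typing import Dict, List, Set

Row = Dict[str, object]


def origins_without_aggr(e1: List[Row], e2: List[Row]) -> List[Set[str]]:
    """A plain union: every output tuple comes from exactly one operand."""
    return [{"E1"} for _ in e1] + [{"E2"} for _ in e2]


def origins_with_aggr(e1: List[Row], e2: List[Row], group: str) -> Dict[object, Set[str]]:
    """Union followed by grouping: one output tuple may draw on both operands."""
    origins: Dict[object, Set[str]] = {}
    for branch, rows in (("E1", e1), ("E2", e2)):
        for r in rows:
            origins.setdefault(r[group], set()).add(branch)
    return origins


e1 = [{"Jno": "J1", "qty": 100}]
e2 = [{"Jno": "J1", "qty": 50}, {"Jno": "J2", "qty": 10}]
print(origins_without_aggr(e1, e2))
print(origins_with_aggr(e1, e2, "Jno"))
```

Only the grouped output for `J1` needs both operands, which is the situation in which keeping OUnion in the expression carries information.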

A value can be regarded as a tuple with only one field, so value-level provenance transformation can be derived from tuple-level provenance transformation. We refer to this derived version as VPET (Value PET). Like PET, VPET expressions are built from meta-transformations; the only difference is that in VPET the unit of data is a value rather than a tuple.

Definition 7. The value provenance transformation of tuple t on field A:

Given , we have

Given that B and C are the group and aggregation fields, , , , , we have

Given that B and C are the group and aggregation fields, , , , we have

In Definition 7, for a group aggregation operation OAggr, if t.A originates only from the group fields, we can infer that the aggregation function contributes nothing to the result; in other words, the transformation of the value is not the result of the OAggr operation.
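The case distinction above can be sketched as a small expression builder. The string rendering `OAggr(...)` and the `vpet_over_aggr` helper are hypothetical; only the rule itself, that a value copied from a group field bypasses the aggregation, comes from the text.

```python
from typing import Set


def vpet_over_aggr(field: str, group_fields: Set[str], input_expr: str) -> str:
    """Value-level transformation of output field `field` through a group aggregation."""
    if field in group_fields:
        # Grouping values are copied unchanged, so the aggregation contributes nothing.
        return input_expr
    # Aggregated values are produced by the aggregation itself.
    return f"OAggr({input_expr})"


print(vpet_over_aggr("Jno", {"Jno"}, "OProj(Shipment)"))    # OProj(Shipment)
print(vpet_over_aggr("total", {"Jno"}, "OProj(Shipment)"))  # OAggr(OProj(Shipment))
```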

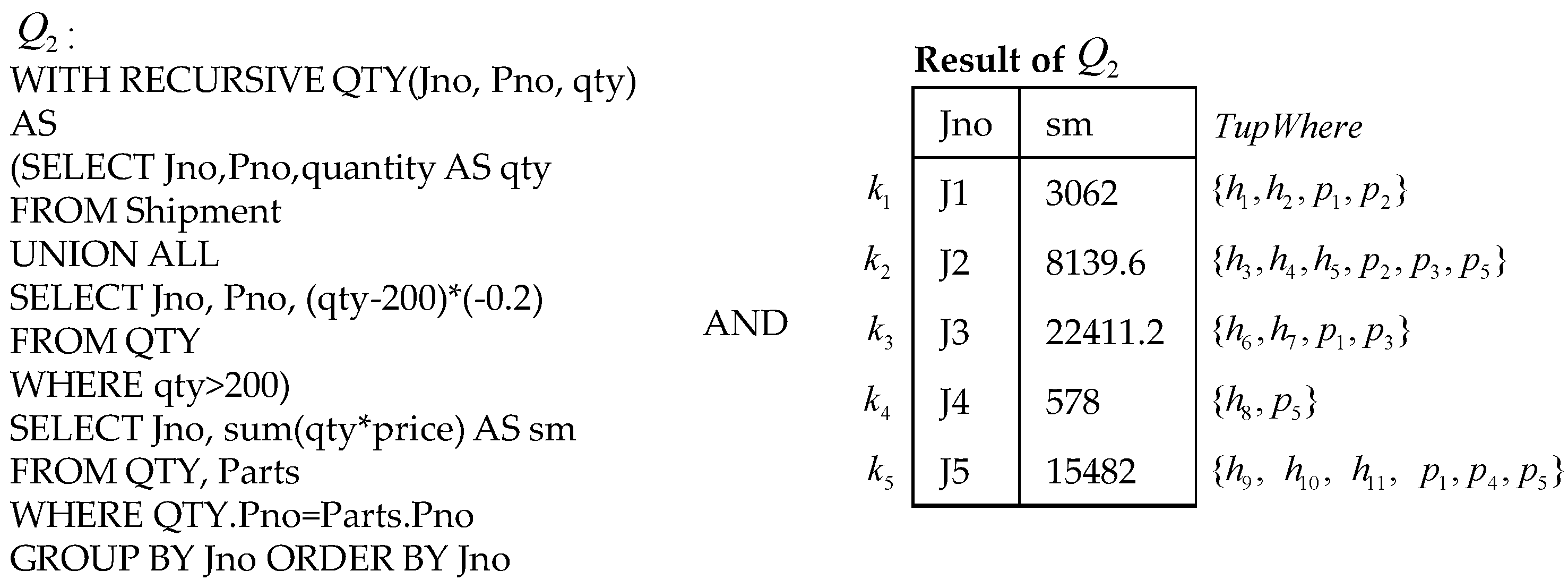

Example 2. Consider a query computing the total price after discount for each project (assuming that, when the number of parts exceeds 200, the excess parts receive 20% off).

As shown in Figure 3, the tuple-level where-prov of the tuple k1 is . Suppose is the recursive CTE subquery for . The provenance transformations for all output tuples of are and . According to Definitions 6 and 7, the provenance transformation of is . The value provenance transformations of on field and are , and .
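The discount rule of Example 2 amounts to the following arithmetic, which matches lines 12 to 14 of the stored procedure in Example 4 with x = 200 and y = 0.8. The shipment rows, part prices, and function names below are hypothetical; `Decimal` avoids floating-point rounding in the money values.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Dict, Iterable, Tuple


def discounted_amount(quantity: int, price: Decimal, threshold: int = 200,
                      factor: Decimal = Decimal("0.8")) -> Decimal:
    """Cost of one shipment: parts beyond `threshold` are charged at `factor` (20% off)."""
    if quantity > threshold:
        return (threshold + (quantity - threshold) * factor) * price
    return quantity * price


def total_per_project(shipments: Iterable[Tuple[str, str, int]],
                      prices: Dict[str, Decimal]) -> Dict[str, Decimal]:
    """Sum the discounted amounts per project (Jno)."""
    totals: Dict[str, Decimal] = defaultdict(Decimal)
    for jno, pno, quantity in shipments:
        totals[jno] += discounted_amount(quantity, prices[pno])
    return dict(totals)


# Hypothetical (Jno, Pno, quantity) shipments and part prices.
shipments = [("J1", "P1", 300), ("J1", "P2", 100), ("J2", "P1", 50)]
prices = {"P1": Decimal("10"), "P2": Decimal("5")}
print(total_per_project(shipments, prices))  # {'J1': Decimal('3300.0'), 'J2': Decimal('500')}
```

For the 300-part shipment, 200 parts are charged in full and the remaining 100 at 80%, giving (200 + 80) × 10 = 2800.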

3.4.2. Representing Provenance Transformation in SQL with Meta-Transformation

We revised PET to support full-featured SQL, and in this section we present how PET represents SQL queries. For the sake of discussion, assume that the main body of a query belongs to ASPJ-CTE-Win-Sub SQL, which comprises selection and filtering, projection, join, group aggregation, CTEs (common table expressions), window functions, and set operations. PET also supports minor query language features, such as Sort, Limit, and Distinct. For each SQL feature, the representation based on meta-transformations is described below, where placeholder symbols stand for the transformation expressions that serve as subexpressions of the operations discussed.

Ordinary import. Ordinary import is treated as a query on constant relation tables and expressed as .

Selection and filter. The filtering operation does not perform any transformation on tuples, so the corresponding transformation expression is .

Group aggregation, projection, and union are expressed as , , and , respectively.

Joins (where both input sets contribute to the output) include inner joins, left/right outer joins, and full outer joins. A Cartesian product can be treated as an inner join whose join condition always holds. The join transformation is expressed as:

Inner join: ;

Left outer join: or ;

Right outer join: or ;

Full outer join: , or .

Join (when only one of the two input sets contributes to the output). In this case, the other input set acts as a filter. Assuming that only the left input set contributes, the join transformation is expressed as or (a right outer join or a full outer join makes the left input set a constant relational table).

Window function. The window function is an extension of group aggregation in SQL and can be equivalently represented by group aggregation and join. Therefore, the window function is equivalent to .

Except operation (Except, ). The output tuples only come from , so the except operation is expressed as .

Intersect operation (Intersect, ). The output tuples are from and . In this case, there are two kinds of parallel provenance transformations, denoted as and .

CTE (including recursive and non-recursive CTEs). A non-recursive CTE can be regarded as a nested subquery. A recursive CTE is structurally divided into a base query and a recursive query. The input of the recursive query may be the output of the base query or the output of the previous recursion. The output of the CTE can therefore be regarded as the union of the base query, the recursive query applied to the base query's output, and the recursive query applied to the output of the previous recursion. Hence, a recursive CTE is expressed as , (the asterisk indicates that the output of the last recursion is used as the input) or , where the third form requires that the current expression be a subexpression of an aggregation operation.
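The decomposition of a recursive CTE into a base query, a recursive step over the base output, and recursive steps over the previous recursion's output corresponds to iterating to a fixpoint. A minimal Python sketch with duplicate-eliminating `UNION` semantics, using a hypothetical part-containment table and invented helper names: `layers[0]` is the base query output, `layers[1]` the first recursion on it, and later layers correspond to the starred recursions.

```python
from typing import Callable, List, Set, Tuple

Pair = Tuple[str, str]

# Hypothetical containment table: (assembly, component).
CONTAINS: Set[Pair] = {("P1", "P2"), ("P2", "P3"), ("P3", "P4"), ("P5", "P6")}


def recursive_cte(base: Set[Pair], step: Callable[[Set[Pair]], Set[Pair]]) -> List[Set[Pair]]:
    """Return the layers of WITH RECURSIVE evaluation; their union is the CTE result."""
    layers = [set(base)]
    seen = set(base)
    while True:
        new = step(layers[-1]) - seen  # recursive query on the previous layer's output
        if not new:                    # fixpoint reached: no new tuples
            return layers
        layers.append(new)
        seen |= new


def reach_step(prev: Set[Pair]) -> Set[Pair]:
    # Recursive member: join the previous layer with CONTAINS on part = assembly.
    return {(root, comp) for root, part in prev for asm, comp in CONTAINS if asm == part}


base = {pair for pair in CONTAINS if pair[0] == "P1"}  # base member: direct components of P1
layers = recursive_cte(base, reach_step)
print(layers)
```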

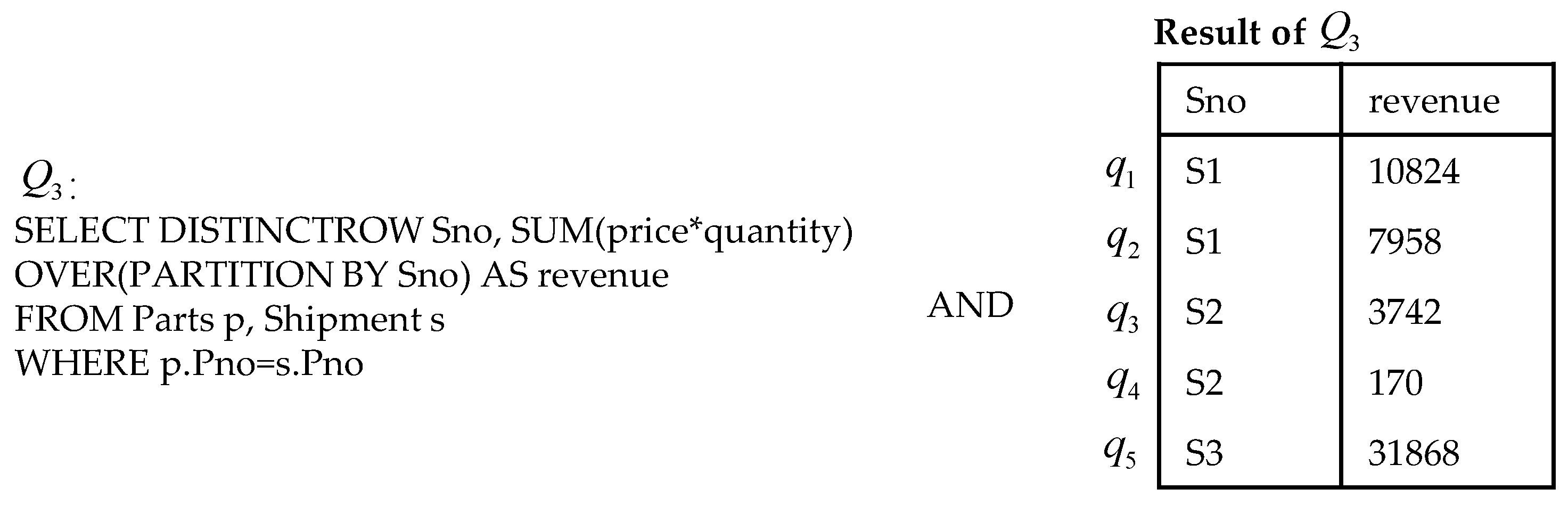

Example 3. Suppose we have the following query, which calculates the revenue of suppliers with a window function. The query and its output are shown in Figure 4.

The first output column derives from the table , and the second column represents an aggregation of each supplier’s revenue on each project. For the above provenance transformation definition, it is easy to see that the PET representation for each tuple can be written as ). The value provenance transformations of in field and are and .
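Example 3 relies on the equivalence stated earlier: a window function equals a group aggregation joined back to its input. The following Python sketch checks this for `SUM(revenue) OVER (PARTITION BY Sno)` on hypothetical rows; both function names are invented for illustration.

```python
from typing import Dict, List

Row = Dict[str, object]


def window_sum(rows: List[Row], part: str, field: str, out: str) -> List[Row]:
    """SUM(field) OVER (PARTITION BY part), evaluated row by row."""
    return [dict(r, **{out: sum(s[field] for s in rows if s[part] == r[part])}) for r in rows]


def window_sum_via_aggr_join(rows: List[Row], part: str, field: str, out: str) -> List[Row]:
    # Step 1, group aggregation: one tuple per partition value.
    totals: Dict[object, object] = {}
    for r in rows:
        totals[r[part]] = totals.get(r[part], 0) + r[field]
    agg = [{part: k, out: v} for k, v in totals.items()]
    # Step 2, join the aggregated tuples back to the input on the partition field.
    return [dict(r, **{out: a[out]}) for r in rows for a in agg if a[part] == r[part]]


revenue = [
    {"Sno": "S1", "Jno": "J1", "revenue": 120},
    {"Sno": "S1", "Jno": "J2", "revenue": 80},
    {"Sno": "S2", "Jno": "J1", "revenue": 50},
]
print(window_sum_via_aggr_join(revenue, "Sno", "revenue", "total")[0])
```

Each output row keeps its original fields (copied through the join) and gains the aggregated value, which is why the first output column and the aggregated column of Example 3 receive different transformation expressions.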

3.4.3. Representing Provenance Transformation in Stored Procedures with Meta-Transformation

Stored procedure languages are similar to SQL-like languages or other scripting languages, such as Python and Perl. In this paper, we focus only on SQL-like procedural languages, such as T-SQL for SQL Server, PL/SQL for Oracle, and PL/pgSQL for PostgreSQL. Stored procedures contain two kinds of flow structures: data flow (assignment, SQL execution) and control flow (conditional control, loop control). Only the data flow structures establish copy transformation relationships between data, while the control flow structures determine which transformation branch is taken. Below, we describe how to use the basic operations to represent each language feature.

Constant. In procedural language, when statement blocks do not reference any relational table in the database, the data output by this program can be regarded as coming from a constant relational table. Therefore, any query referencing a constant is treated as an ordinary import and expressed as .

Assignment. The assignment operation assigns the calculation result of an expression to a variable. If values of an output tuple on some fields come from the variable, then a projection operation is used to project the input variable to the output tuple on these fields. Suppose that , when A covers all the fields of t, then the transformation expression is . Otherwise, tuple t must contain values of other field set A′ other than A, and the values are the result of other projection expressions. If there is , , then the transformation expression is .

The SQL or dynamic SQL in stored procedures is equivalent to a nested subquery, and we convert it into a subexpression.

Conditional control statement block. A conditional control structure has multiple switch sub-statement blocks that cannot simultaneously execute, so the tuple can only be from one of the sub-statement blocks. Similar to a union operation, the transformation expressions of conditional control structure are or . is a requisite only when the present transformation is a subexpression of . Therefore, a conditional control operation is equivalent to a union operation.

Loop control. Assume that is a variable in the body of the loop statement. Before entering the loop statement, , and the transformation expression of in the loop body is . After the loop statement executes, there are four cases: (a) if the loop condition is not satisfied at the outset, the loop body is never executed: ; (b) the loop body is executed exactly once: ; (c) the loop body is executed multiple times but does not reference the variable , so the expression is non-recursive and the value of is always the result of the last iteration, namely ; and (d) the loop body is executed multiple times and is recursive. Then, we have , which shows that loop control is equivalent to a CTE.
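The four loop cases can be illustrated in Python. In this sketch (helper names are hypothetical), `loop_overwrite` models cases (a) to (c), where the body assigns the variable without reading it, and `loop_accumulate` models case (d); `as_recursive_cte` unrolls case (d) as a base tuple followed by one recursive step per iteration, mirroring the stated equivalence with a recursive CTE.

```python
from typing import Callable, List, Optional


def loop_overwrite(rows: List[int], init: Optional[int],
                   f: Callable[[int], int]) -> Optional[int]:
    """Cases (a) to (c): the body assigns v without reading it."""
    v = init          # (a) zero iterations: v keeps its pre-loop value
    for r in rows:
        v = f(r)      # (b)/(c): only the last iteration's value survives
    return v


def loop_accumulate(rows: List[int], init: int, f: Callable[[int, int], int]) -> int:
    """Case (d): the body reads v, so each iteration depends on the previous one."""
    v = init
    for r in rows:
        v = f(v, r)
    return v


def as_recursive_cte(rows: List[int], init: int, f: Callable[[int, int], int]) -> int:
    """Case (d) unrolled like a recursive CTE over (iteration, value) tuples."""
    layers = [(0, init)]                   # base query
    while layers[-1][0] < len(rows):       # recursive query on the previous output
        i, v = layers[-1]
        layers.append((i + 1, f(v, rows[i])))
    return layers[-1][1]


add = lambda v, r: v + r
print(loop_accumulate([1, 2, 3], 0, add), as_recursive_cte([1, 2, 3], 0, add))  # 6 6
```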

Example 4. Suppose a function returns the project number and the discounted amount, where x and y are inputs representing the minimum quantity for the discount and the discount factor, respectively. The body of this stored procedure is as follows:

03 DECLARE
04   t DISCOUNT;         -- DISCOUNT is a custom data type, namely the return type (Jno, amount)
05   r Shipment%rowtype; -- Declare r to be the same type as the tuple of the table Shipment
06   a NUMERIC;
07   p NUMERIC;
08 BEGIN
09   FOR r IN SELECT * FROM Shipment LOOP
10     SELECT price INTO p FROM Parts WHERE Pno = r.Pno;
11     IF r.quantity > x THEN
12       a := x + (r.quantity - x) * y;
13       SELECT r.Jno, a * p INTO t;
14     ELSE SELECT r.Jno, r.quantity * p INTO t;
15     END IF;
16     RETURN NEXT t;
17   END LOOP;
18   RETURN;
19 END

Assuming the loop in line 9 runs multiple times and the condition in line 11 has been satisfied, the transformation expressions for lines 9 and 10 are . Similarly, the transformation representations of lines 12–14 can be further summarized: for line 12, for line 13, and for line 14.
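A Python mirror of the Example 4 procedure makes the union reading of the IF statement visible: every returned tuple is tagged with the branch that produced it, and each tuple carries exactly one tag. The dict-based shipment rows, the price table, and the branch tags are assumptions of this sketch; as in the procedure, x is the quantity threshold and y the factor applied to the excess quantity, and the comments point back to the numbered procedure lines.

```python
from decimal import Decimal
from typing import Any, Dict, List, Tuple


def discount(shipments: List[Dict[str, Any]], prices: Dict[str, Decimal],
             x: int, y: Decimal) -> List[Tuple[str, Decimal, str]]:
    """Return (Jno, amount, branch) per shipment, tagging which IF branch produced it."""
    out = []
    for r in shipments:                                        # line 09: FOR r IN SELECT ...
        p = prices[r["Pno"]]                                   # line 10: SELECT price INTO p
        if r["quantity"] > x:                                  # line 11
            a = x + (r["quantity"] - x) * y                    # line 12
            out.append((r["Jno"], a * p, "THEN"))              # line 13
        else:
            out.append((r["Jno"], r["quantity"] * p, "ELSE"))  # line 14
    return out


# Hypothetical Shipment rows and Parts prices.
shipment = [
    {"Jno": "J1", "Pno": "P1", "quantity": 300},
    {"Jno": "J2", "Pno": "P1", "quantity": 100},
]
rows = discount(shipment, {"P1": Decimal("10")}, 200, Decimal("0.8"))
print(rows)
```

Because the two branches are mutually exclusive per loop iteration, the tuple-level expression for any single output needs only one branch; the union of both branches matters only if the outputs are later aggregated together.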

4. Representing Data Provenance in DBMS

Where-prov and PET can represent provenance data and transformation in a database. In this section, we propose a relational provenance representation model based on where-prov and PET to comprehensively interpret data provenance from multiple levels (relational schemas, attribute fields, tuples, values), especially the provenance transformation process.

4.1. Schema-Level Provenance Representation

Schema-level data provenance is coarse-grained and better suited to applications that do not need fine detail. In a transformation-intensive database environment, relational table instances are the results of interactions between relations, which reflect the dependencies between tables at the schema level. Since schema-level provenance has no formal definition, this paper introduces a representation model for it.

Schema-level provenance is a directed graph G = <VR, VO, E>, where VR is the set of relation table nodes, VO is the set of transformation operation nodes, and E is the set of directed edges representing the dependencies among nodes in VO and between nodes in VR and VO. Specifically, we have:

Definition 8. (1) Tuple Provenance Graph (TupPGraph); (2) Schema Provenance Graph of relation T, SPGraph(T); (3) Schema Provenance Graph of database I, SPGraph(I).

From the above definition, the schema provenance graph not only includes the provenance transformation of each tuple but also explains the transformation process between the target relation schema and source relation schema. Similarly, the overall schema provenance graph SPGraph(I) merges all the schema provenance graphs of the database and can explain the specific dependencies between different relation schemas.
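One way to hold G = <VR, VO, E> in memory, and to merge per-relation graphs into a database-wide graph in the spirit of SPGraph(I), is sketched below. It assumes every node has a unique string name (for example `join#1`), that edges point from an input to the node that consumes it, and that nodes with the same name in different graphs are the same node; the class `SPGraph` and function `merge_graphs` are hypothetical, not ProvPg's data structures.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple


@dataclass
class SPGraph:
    """Schema-level provenance graph G = <VR, VO, E> (hypothetical encoding)."""
    VR: Set[str] = field(default_factory=set)             # relation table nodes
    VO: Set[str] = field(default_factory=set)             # transformation operation nodes
    E: Set[Tuple[str, str]] = field(default_factory=set)  # (input, consumer) edges

    def add_edge(self, src: str, dst: str) -> None:
        """Add a dependency edge; table-to-table edges are rejected because
        E only relates operations to operations or tables to operations."""
        nodes = self.VR | self.VO
        if src not in nodes or dst not in nodes:
            raise ValueError(f"unknown node in edge ({src}, {dst})")
        if src in self.VR and dst in self.VR:
            raise ValueError("E has no edges directly between two relation tables")
        self.E.add((src, dst))


def merge_graphs(*graphs: SPGraph) -> SPGraph:
    """Union several graphs; a table that is the target of one graph and a
    source of another becomes the shared node linking the two."""
    merged = SPGraph()
    for g in graphs:
        merged.VR |= g.VR
        merged.VO |= g.VO
        merged.E |= g.E
    return merged
```

Taking the union of node and edge sets is the simplest reading of "merges all the schema provenance graphs"; it relies entirely on consistent node naming across graphs.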

Every output tuple has a tuple provenance graph, and for a query Q, multiple output tuples may have the same tuple provenance graph, so output tuples can share one. Furthermore, two tuple provenance graphs of the same query Q may have a common subgraph, so they can be merged. Therefore, we keep a single tuple provenance graph for all output tuples that share it and combine the different tuple provenance graphs to obtain the schema provenance graph. During merging, some nodes may lose information, such as the number of operations or the operation objects of the lower nodes. To avoid this, we introduce an “Option” node, which carries no transformation semantics and indicates that multiple branches are possible at that node.
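A minimal sketch of this merging step, assuming each tuple provenance graph is stored as an expression tree whose leaves are relation tables: identical trees collapse into one, operations that match (same label, same number of operands) are merged child by child, and an Option node records the alternatives wherever the trees diverge. The `Node` class and `merge` function are hypothetical; the paper does not specify its merging procedure at this level of detail.

```python
from dataclasses import dataclass
from typing import List, Tuple

OPTION = "Option"


@dataclass(frozen=True)
class Node:
    label: str                          # operation name or relation table name
    children: Tuple["Node", ...] = ()


def merge(a: Node, b: Node) -> Node:
    """Merge two tuple provenance trees, sharing their common part."""
    if a == b:                          # shared graph: keep one copy
        return a
    if a.label == b.label and a.label != OPTION and len(a.children) == len(b.children):
        # Same operation with the same arity: merge the operands pairwise.
        return Node(a.label, tuple(merge(x, y) for x, y in zip(a.children, b.children)))
    # Divergence: keep both branches under one Option node, flattening
    # nested Option nodes and dropping duplicate alternatives.
    alts: List[Node] = []
    for n in (a, b):
        for alt in (n.children if n.label == OPTION else (n,)):
            if alt not in alts:
                alts.append(alt)
    return Node(OPTION, tuple(alts))


s, p = Node("Shipment"), Node("Parts")
t1 = Node("project", (Node("join", (s, p)),))
t2 = Node("project", (Node("select", (s,)),))
print(merge(t1, t2).children[0].label)  # Option
```

Because the Option node keeps whole subtrees, no operation count or operand is lost, at the price of storing each divergent branch once.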

For example, the tuple provenance graph of the transformation expression can be expressed as , where is the basic transformation ( is the top-level operation) and is the set of relations among operations and between operations and the referenced relation tables.

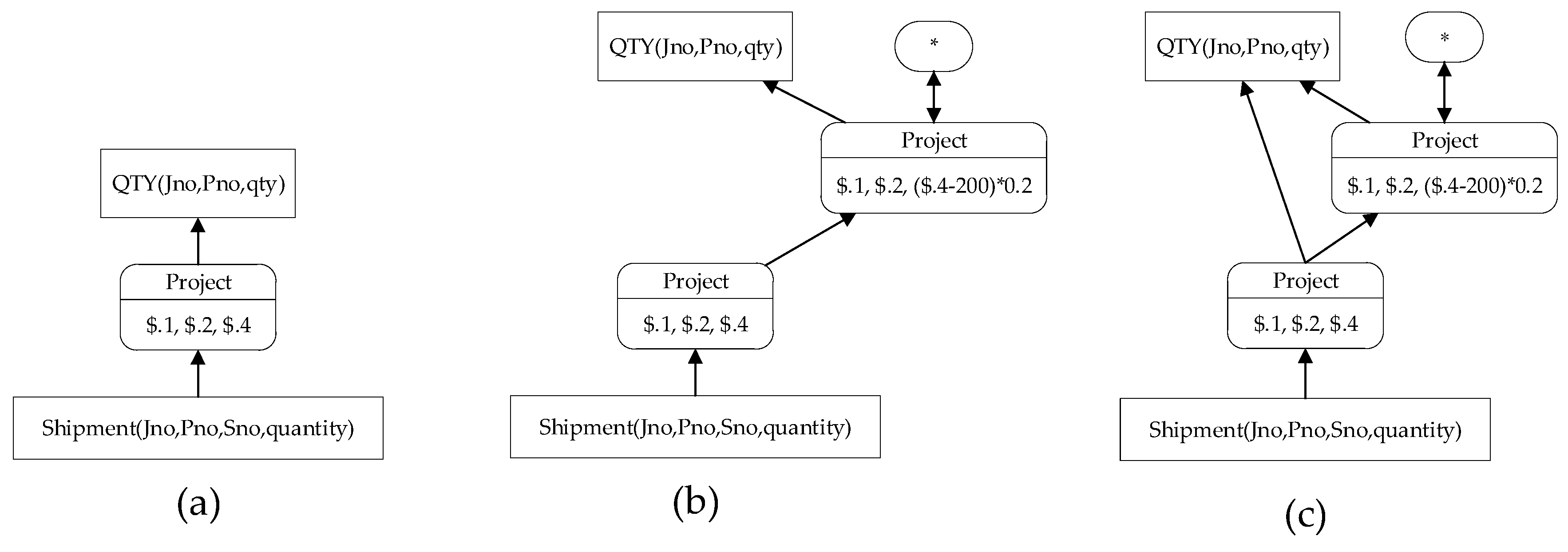

Figure 5 shows the possible tuple provenance graphs of the output tuples of table in query in Example 2, which is output by the subquery .

Figure 5a–c display the tuple provenance subgraphs of tuples and , tuples , and tuples and , respectively. Notably, the tuple provenance graph in Figure 5c is actually the SPGraph of the query .

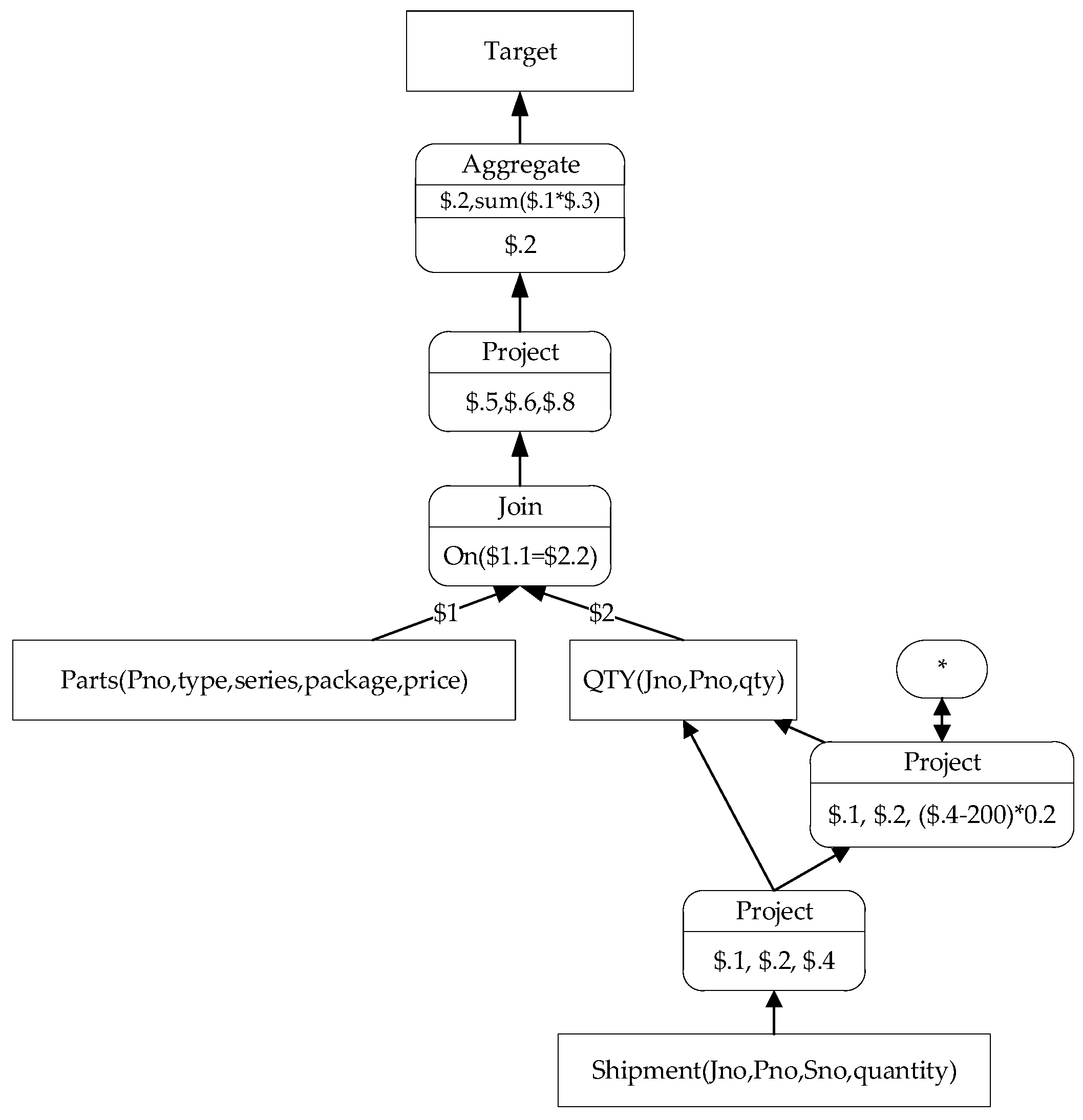

According to the discussion in Example 2, we have . It is then straightforward to infer the remaining part of the SPGraph for , which can be merged with the SPGraph for to obtain the SPGraph for , as shown in Figure 6.

4.2. A Compact Representation of TupPGraph

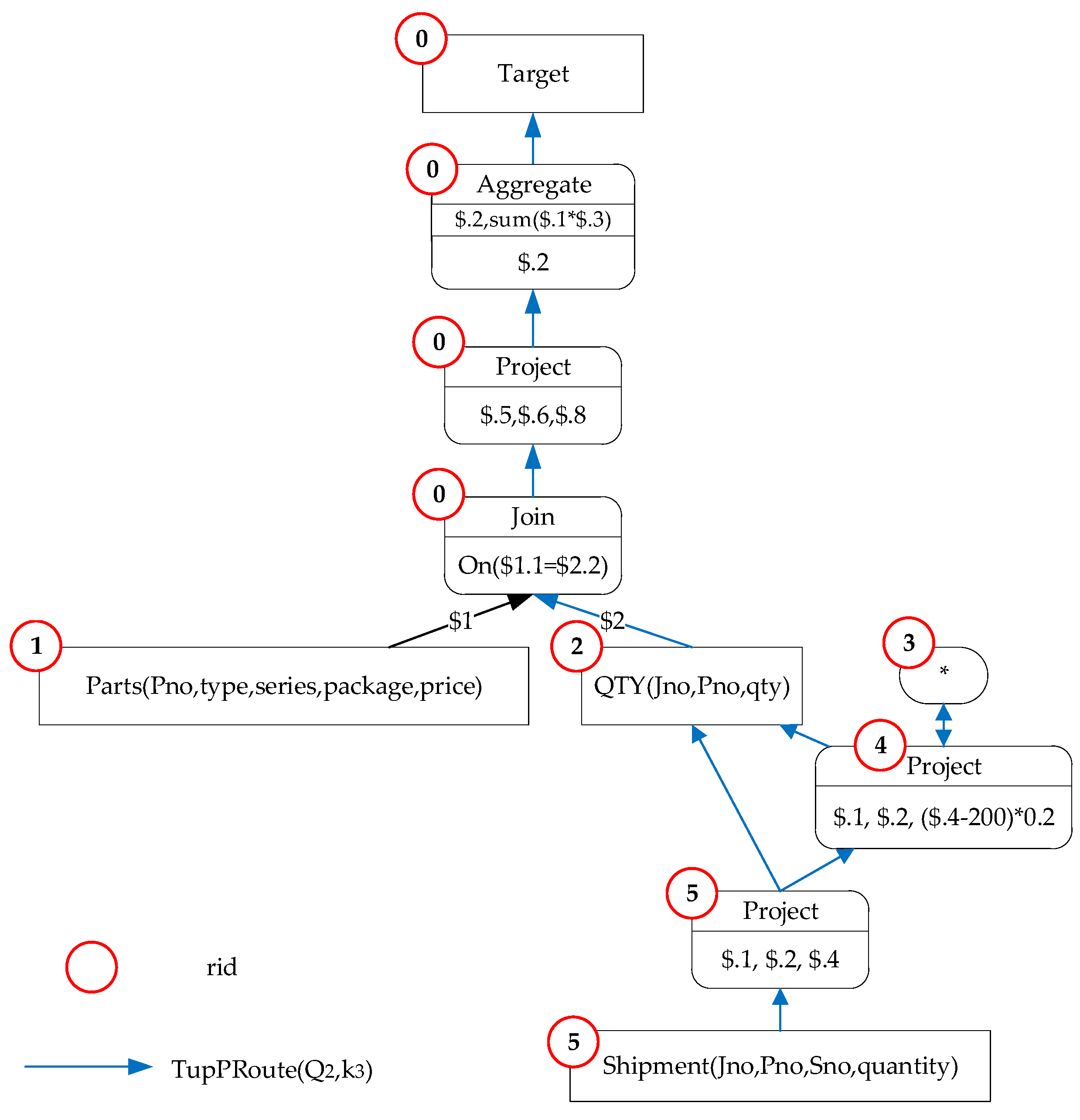

As a subgraph of the schema provenance graph SPGraph, each tuple provenance graph corresponds to one possible schema transformation. To clarify the relationship between TupPGraph and SPGraph, and to reflect the TupPGraph of each tuple (that is, each possibility in the schema transformation) within SPGraph, we introduce the tuple provenance route (TupPRoute) to represent the provenance of tuples.

Definition 9. Tuple Provenance Route (TupPRoute): , where and .

From the description of the provenance graph, TupPGraph is an expression tree with several branches. In this tree structure, each node in SPGraph has a semantic parent and possibly several child nodes. In Definition 9, BP is the bitmap array of the provenance route, and Vrid denotes the vertex whose identifier is rid. Every node in an SPGraph (both relation table nodes and transformation nodes) has an identifier rid, and the maximum identifier value is MAX_RID. Bit rid of BP is set to 1 if the corresponding node belongs to the TupPGraph, and to 0 otherwise. If every node were assigned its own unique rid, the bitmap could become too large. Therefore, this paper uses a more compact scheme to determine a route: it traverses the schema provenance graph in preorder and assigns the same rid to nodes on the same branch, which keeps MAX_RID small (Algorithm 1).

Algorithm 1.

```text
Alg. generateRids(SPGraph):
begin
    root := SPGraph.rootNode
    generateRidRecursive(root, 0)
end

Alg. generateRidRecursive(eachNode, currRid):
begin
    n := eachNode
    if n.rid has not been assigned then
        n.rid := currRid
    end if
    foreach cn in n.childNodes
        if count(n.childNodes) = 1 then
            generateRidRecursive(cn, currRid)
        else
            generateRidRecursive(cn, currRid + 1)
        end if
    end foreach
end
```
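The pseudocode of Algorithm 1 translates almost line for line into Python. The sketch below assumes an acyclic SPGraph (the listing has no guard against revisiting a node on a cycle) and uses a hypothetical `SPNode` class with a `child_nodes` list; it follows the listing as printed rather than any unpublished ProvPg code.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class SPNode:
    name: str
    child_nodes: List["SPNode"] = field(default_factory=list)
    rid: Optional[int] = None            # None means "not yet assigned"


def generate_rids(root: SPNode) -> None:
    """Entry point: number the graph starting from rid 0 at the root."""
    generate_rid_recursive(root, 0)


def generate_rid_recursive(node: SPNode, curr_rid: int) -> None:
    if node.rid is None:                 # keep a rid assigned on an earlier visit
        node.rid = curr_rid
    single_child = len(node.child_nodes) == 1
    for cn in node.child_nodes:
        # A lone child continues the current branch with the same rid;
        # at a fork, every child is visited with curr_rid + 1.
        generate_rid_recursive(cn, curr_rid if single_child else curr_rid + 1)


a, b = SPNode("Shipment"), SPNode("Parts")
join = SPNode("join", [a, b])
root = SPNode("project", [join])
generate_rids(root)
print([(n.name, n.rid) for n in (root, join, a, b)])
# [('project', 0), ('join', 0), ('Shipment', 1), ('Parts', 1)]
```

Read literally, the listing passes the same value currRid + 1 to every child of a fork, so sibling branches share a rid and, in a tree, a node's rid equals the number of forks on its path from the root, as the output above shows. If distinct branches are meant to receive distinct identifiers, a counter incremented once per new branch would be needed instead.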

Figure 7 shows the bitmaps corresponding to the three provenance routes in query Q2 (the base query, the recursive query with the base query as input, and the recursive query with the previous recursion as input): “101001”, “101111”, and “110000”, respectively. By combining the bitmap array with the schema provenance graph, we can deduce the transformation path of the tuple in the provenance graph (the blue path in the figure).

4.3. Field and Value Level Provenance Representation

Value provenance transformation (VPET) is derived from the provenance transformation of tuples. Analogously to the schema provenance graph and the tuple provenance route, we use the field provenance graph (PGraph of Field, FPGraph) and the value provenance route (VPRoute) to represent field- and value-level provenance. For :

Definition 10. (1) PGraph of Value (VPGraph), the value provenance graph: ; (2) PGraph of Field (FPGraph), the provenance graph for field A: ; (3) PRoute of Value (VPRoute), the value provenance route: .

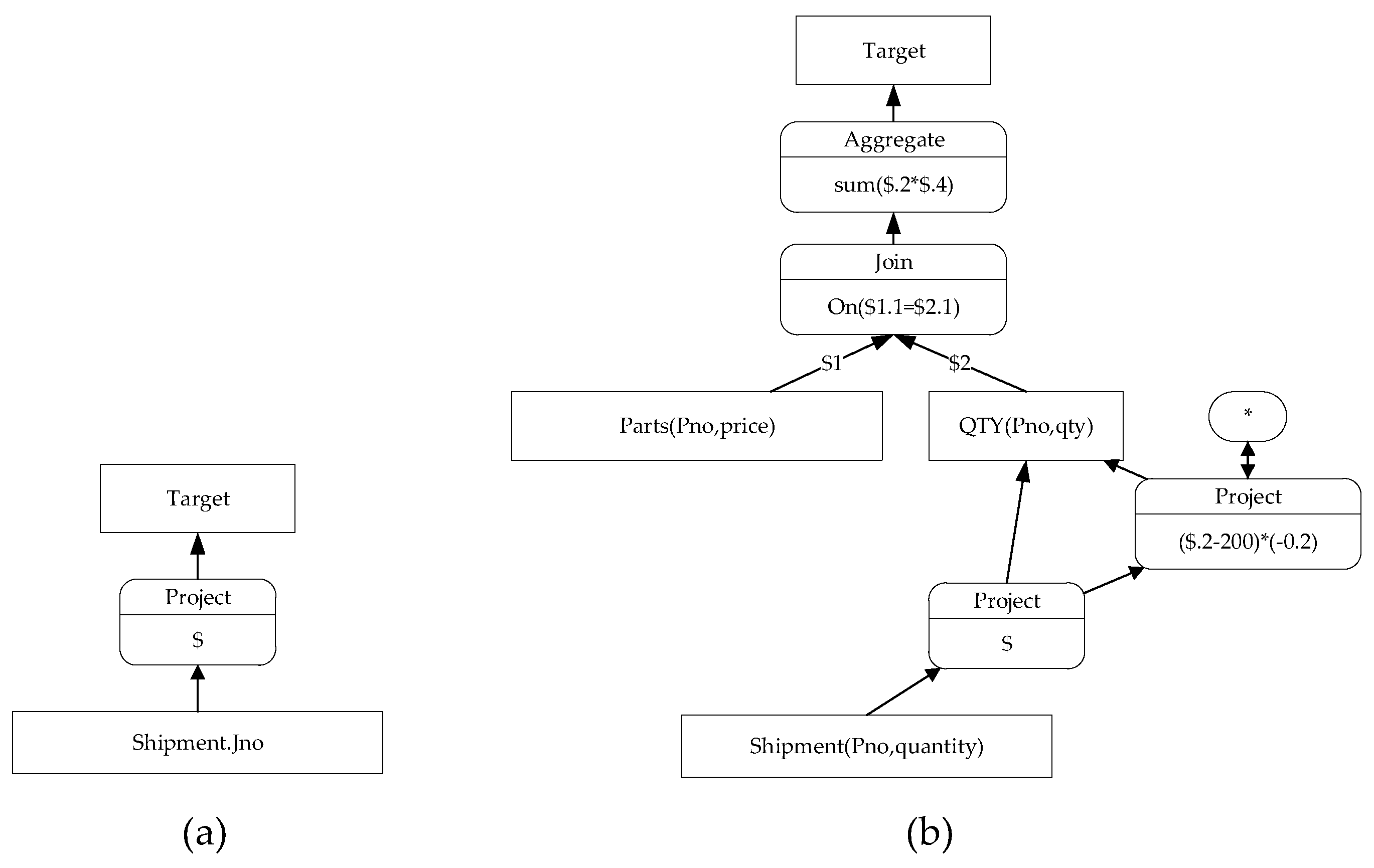

Looking back at Example 2 in Figure 3, the VPET representation for each record in field is . The provenance graph for field follows directly (Figure 8a). For field , the provenance transformation representations corresponding to each tuple are and , as shown in Figure 8b.

4.4. Implementation of Data Provenance in PostgreSQL

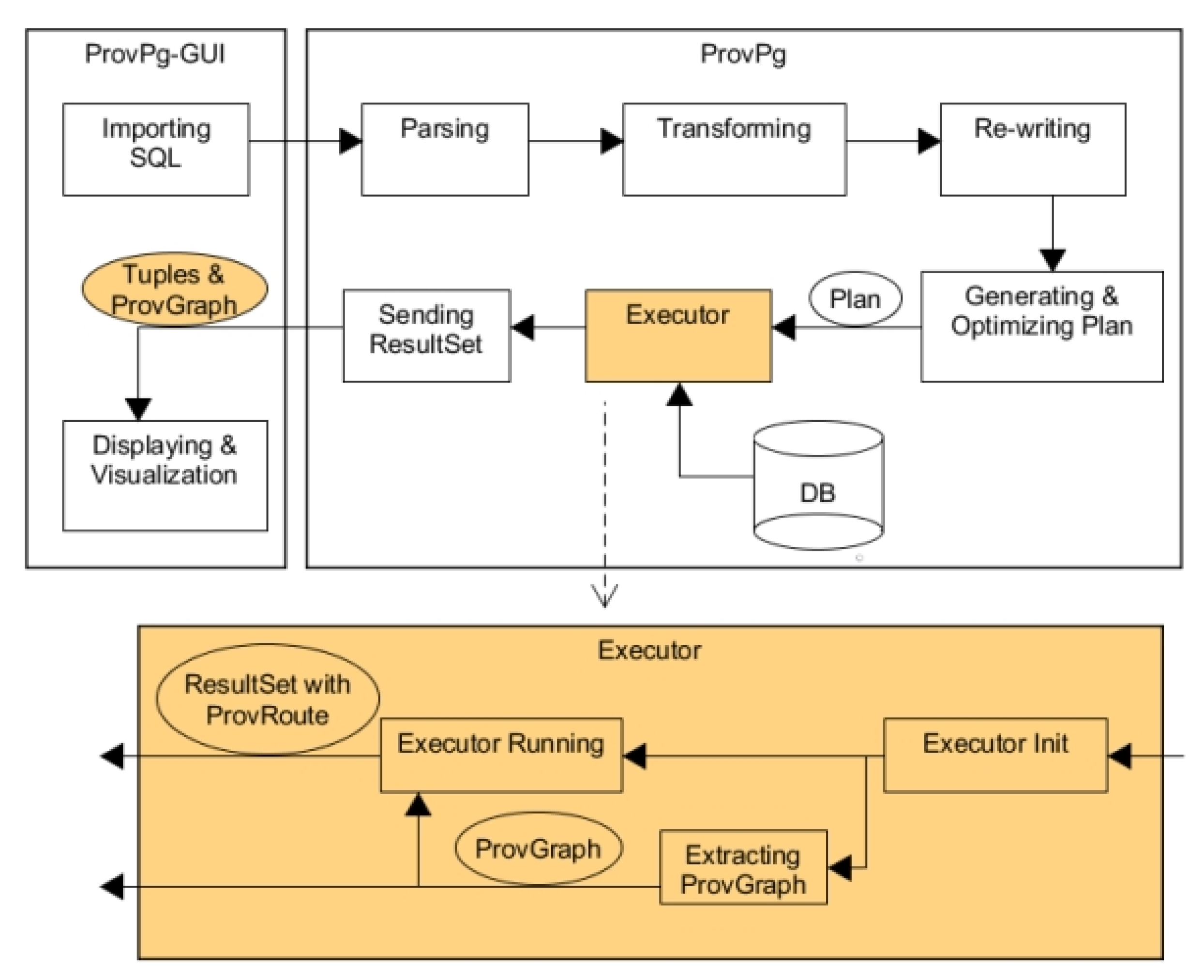

We implemented the provenance graph and provenance route model in PostgreSQL and provided a client to query and visualize the provenance information. We call this extended system ProvPg; its graphical client is ProvPg-GUI.

The primary function modules added in ProvPg include the extraction and query of provenance information. The provenance information extraction is implemented by the provenance extraction subsystem. In addition, ProvPg provides a series of query interfaces in the client to support provenance information query and visualization.

ProvPg (Figure 9) uses PostgreSQL's hook mechanism to add extended functions, and the source code of the Executor is modified. For the plan tree of a query, the processing flow is as follows: (a) Before the query is executed, a schema provenance graph (SPGraph) is extracted from the structural information of the plan tree. (b) During execution of the plan tree, each time a tuple is output, its provenance route (TupPRoute) is extracted as a bitmap array, which is inserted into the output tuple as an additional field value. Finally, the client receives two result sets: the output tuple set (with the provenance routes) and the provenance graph in JSON format. The graphical client ProvPg-GUI accepts SQL input from the user, displays the returned query results, and visualizes the provenance graph and provenance routes.
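On the client side, the two result sets have to be joined back together. The sketch below pairs each output row with the labels of the SPGraph nodes marked in its route bitmap. The JSON layout (a `"nodes"` list of objects with `"label"` and `"rid"` keys), the bitmap's position as the last column, the leftmost-bit-is-rid-0 convention, and the function name `annotate_rows` are all hypothetical; the paper does not document ProvPg's wire format.

```python
import json
from typing import Dict, List, Sequence, Tuple


def annotate_rows(rows: Sequence[Sequence[object]],
                  graph_json: str) -> List[Tuple[tuple, List[str]]]:
    """Pair each output tuple's values with the node labels on its route."""
    graph = json.loads(graph_json)
    by_rid: Dict[int, List[str]] = {}
    for node in graph["nodes"]:              # several nodes may share a rid
        by_rid.setdefault(node["rid"], []).append(node["label"])
    out = []
    for row in rows:
        *values, bitmap = row                # route bitmap assumed to be the last field
        labels = [label
                  for i, bit in enumerate(str(bitmap)) if bit == "1"
                  for label in by_rid.get(i, [])]
        out.append((tuple(values), labels))
    return out


graph = json.dumps({"nodes": [{"label": "Shipment", "rid": 0},
                              {"label": "join", "rid": 1},
                              {"label": "Parts", "rid": 2}],
                    "edges": []})
print(annotate_rows([("J1", 15.0, "101")], graph))
# [(('J1', 15.0), ['Shipment', 'Parts'])]
```

Keeping the graph in a separate result set means it is sent once per query, while each row carries only its compact bitmap.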

To evaluate the impact of ProvPg on PostgreSQL's query engine, we ran the TPC Benchmark™ H [47] (TPC-H) on both ProvPg and PostgreSQL and analyzed the computational overhead that ProvPg adds to PostgreSQL. Our TPC-H instance contains eight relational tables, and we test 22 customized SQL queries (standard SQL-92) on this instance. Although the 22 queries lack some features, such as CTEs, and do not involve stored procedures, the performance of the query engine depends mainly on underlying physical operations such as tuple scanning, joins, and sorting, and the 22 queries cover the primary computation paths of the query engine. Likewise, the main cost of a stored procedure in PostgreSQL lies in executing query plans. Therefore, the TPC-H benchmark is sufficient to compare ProvPg with the original PostgreSQL.

We construct three datasets, of 1G, 4G, and 10G, for the query tests. The running environment is as follows: (a) CPU: 64-bit Intel(R) Core(TM) i5-4460 at 3.20 GHz; (b) memory: 12G; (c) operating system: Ubuntu 12.04; (d) kernel: Linux 3.5.0; (e) PostgreSQL: version 9.5.0.

Table S1 shows the query execution time of PostgreSQL and ProvPg on the above dataset.

Figure S1 shows the ratios of overhead imposed by the data provenance module. The experiment results indicate the following:

The overall extra cost is relatively small and within acceptable limits. Except for Q2, Q10, Q13, and Q18, the extra cost introduced by the provenance module is less than 5% for each query, and the average overhead is below 3% for each of the three dataset sizes. Considering the benefits of data provenance, we believe that the additional cost is acceptable.
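The percentages above are relative overheads. A minimal sketch of that computation, assuming overhead is defined as (T_ProvPg - T_PostgreSQL) / T_PostgreSQL per query and that queries at or above 5% are the ones to report; the function names are hypothetical, and the actual timings would come from Table S1, none of which are reproduced here.

```python
from statistics import mean
from typing import Dict, List, Tuple


def overhead_ratios(t_pg: Dict[str, float], t_prov: Dict[str, float]) -> Dict[str, float]:
    """Relative extra cost per query: (T_ProvPg - T_PostgreSQL) / T_PostgreSQL."""
    return {q: (t_prov[q] - t_pg[q]) / t_pg[q] for q in t_pg}


def summarize(ratios: Dict[str, float], threshold: float = 0.05) -> Tuple[float, List[str]]:
    """Average overhead over all queries, plus the queries at or above the threshold."""
    over = sorted(q for q, r in ratios.items() if r >= threshold)
    return mean(ratios.values()), over
```

Running `summarize(overhead_ratios(pg_times, provpg_times))` once per dataset size would yield the per-size average and the list of outlier queries, where `pg_times` and `provpg_times` map query names to measured execution times.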

There is no positive correlation between the extra cost and the load size. Across dataset sizes, the extra cost of ProvPg over PostgreSQL is stable overall and does not fluctuate dramatically with the data scale. Therefore, we consider the extra cost of a query to be predictable and estimable in advance.

We also analyze the correlation between the extra cost ratio and the execution strategy of the database engine. The Pearson correlation coefficients R1 and R2 show that the extra cost ratio is correlated with the materialization ratio as the load increases (Tables S1 and S2) and when the dataset scale reaches 10G. The correlation is more significant when Q8 and Q9 are excluded, indicating that materialization clearly prolongs the running time of the extended system. Furthermore, the underlying operations of the database system also affect the increase in overhead. For example, because Q8 and Q9 mainly perform hash operations, the overhead caused by the provenance module is low even though their materialization ratios are high. In contrast, Q18 must execute a sort operation, and its overhead ratio is high even though its materialization ratio is small at the 1G and 4G scales.
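The coefficients R1 and R2 are standard Pearson correlations. A self-contained sketch, including the "exclude some queries and recompute" step used for Q8 and Q9, is shown below; the dictionaries keyed by query name and the function names are assumptions for illustration, and no measured values are included.

```python
from math import sqrt
from typing import Dict, Iterable, Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson's r between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least two points")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("r is undefined for a constant sample")
    return cov / (sx * sy)


def pearson_excluding(overhead: Dict[str, float], materialization: Dict[str, float],
                      exclude: Iterable[str]) -> float:
    """Recompute r between overhead and materialization ratios without some queries."""
    skip = set(exclude)
    keep = [q for q in overhead if q not in skip]
    return pearson([overhead[q] for q in keep], [materialization[q] for q in keep])
```

On Python 3.10 or later, `statistics.correlation` computes the same coefficient; the explicit version above makes the formula visible.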