Multi-Target Regression Based on Multi-Layer Sparse Structure and Its Application in Warships Scheduled Maintenance Cost Prediction

Abstract

1. Introduction

- On top of the traditional multi-target regression (MTR) framework, a latent variable space is introduced to form a multi-layer learning structure, and a sparsity constraint is imposed so that related targets can share the same latent variables, thereby improving the performance of the algorithm on multiple outputs.

- An auxiliary matrix is introduced in the latent variable space to learn the structural noise among the output targets and reduce its adverse effect on the regression model, and an alternating optimization algorithm is proposed to solve the resulting problem.

- The proposed MTR algorithm is applied to warships scheduled maintenance (WSM) cost prediction, where it exploits the correlation among different subentry costs to improve the prediction accuracy of both the subentry costs and the total cost. Extensive experiments on real-world datasets and on WSM cost datasets demonstrate the effectiveness of the proposed method for WSM cost prediction.
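The sparsity constraint in the first contribution is what lets related targets share latent variables. The regularizer itself is not reproduced in this section; a common choice for this kind of sharing is the ℓ2,1 norm of the latent-to-target structure matrix, which sums the Euclidean norms of its rows, so each latent variable tends to be either used by a group of targets or switched off for all of them. The Python sketch below assumes that choice, with hypothetical structure matrices of shape (latent variables × targets), and also builds the diagonal reweighting matrix D_ii = 1/(2‖s_i‖₂ + ε) that iteratively reweighted solvers commonly use. Neither is claimed to be the paper's exact formulation.

```python
import numpy as np


def l21_norm(S: np.ndarray) -> float:
    """l2,1 norm: sum of the Euclidean norms of the rows of S."""
    return float(np.sum(np.linalg.norm(S, axis=1)))


def reweighting_matrix(S: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Diagonal D with D_ii = 1 / (2 * ||s_i||_2 + eps).

    Holding D fixed turns the l2,1 penalty into the quadratic trace(S^T D S),
    whose gradient 2 D S matches the l2,1 gradient at the current S (up to eps);
    eps keeps rows that have shrunk to zero from causing a division by zero.
    """
    return np.diag(1.0 / (2.0 * np.linalg.norm(S, axis=1) + eps))


# Hypothetical structure matrices: 4 latent variables (rows) x 3 targets (columns).
# Row-sparse: latent variables 0 and 2 are shared by all targets, 1 and 3 are unused.
S_shared = np.array([
    [0.8, 0.6, 0.7],
    [0.0, 0.0, 0.0],
    [0.3, 0.4, 0.2],
    [0.0, 0.0, 0.0],
])
# Same six nonzero values, scattered so most latent variables serve a single target.
S_scattered = np.array([
    [0.8, 0.0, 0.0],
    [0.0, 0.6, 0.0],
    [0.0, 0.0, 0.7],
    [0.3, 0.4, 0.2],
])

print("l1:  ", np.abs(S_shared).sum(), np.abs(S_scattered).sum())
print("l2,1:", l21_norm(S_shared), l21_norm(S_scattered))
print("D diagonal for S_shared:", np.diag(reweighting_matrix(S_shared)))
```

Both matrices hold the same six values, so their elementwise ℓ1 norms are equal (3.0), yet the row-sparse matrix has a clearly smaller ℓ2,1 norm (about 1.76 versus 2.64). This is why a row-wise penalty favors latent variables that are shared across targets, while a plain ℓ1 penalty cannot tell the two patterns apart.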

2. Related Work

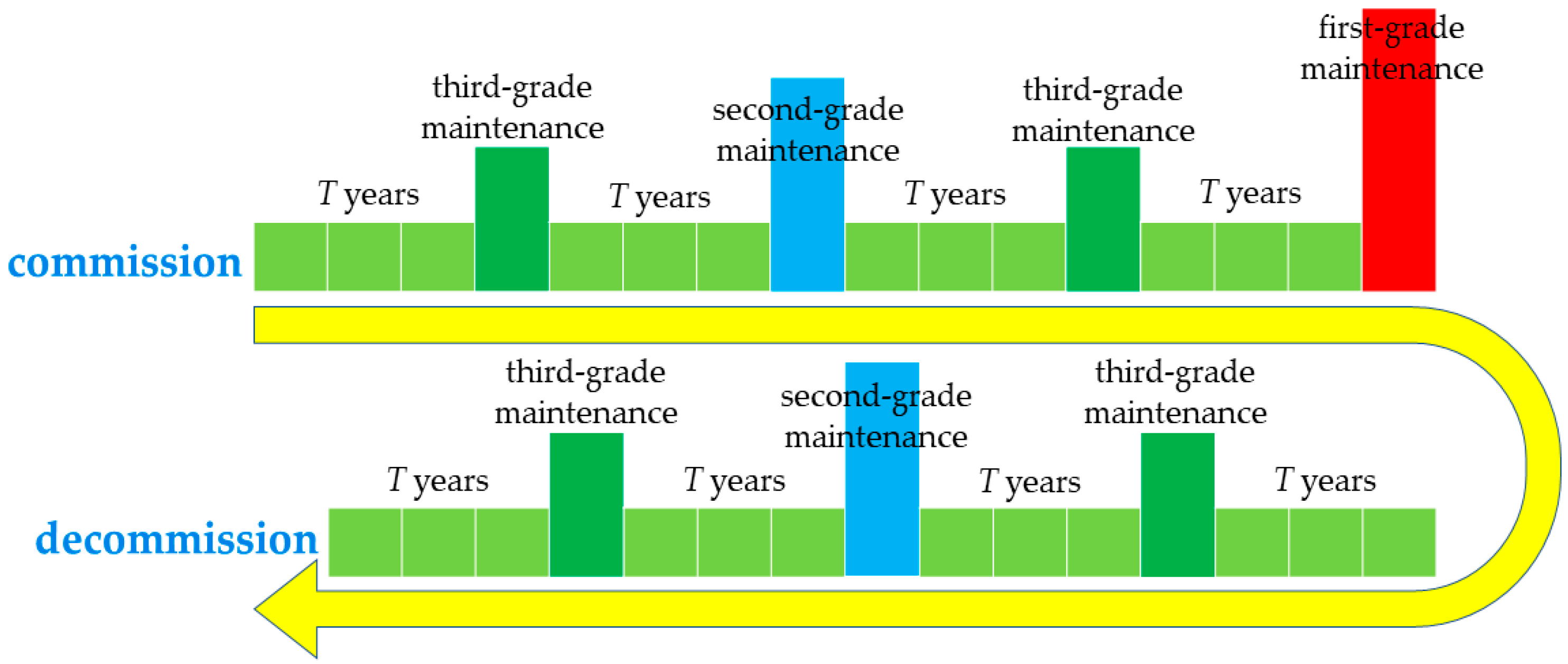

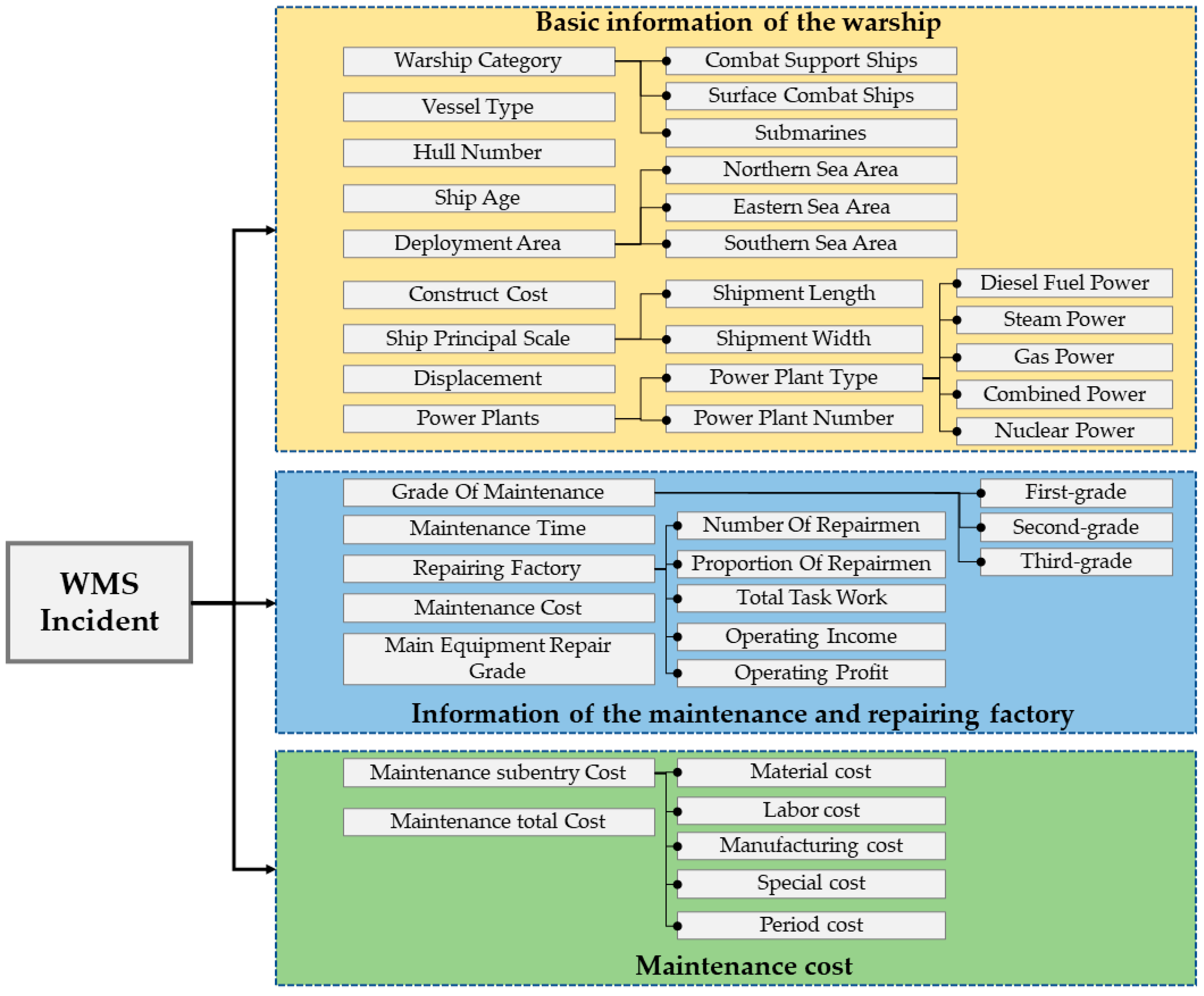

2.1. Warships Scheduled Maintenance Cost Prediction

2.2. MTR Algorithm

3. MTR Algorithm Based on Multi-Layer Sparse Structure

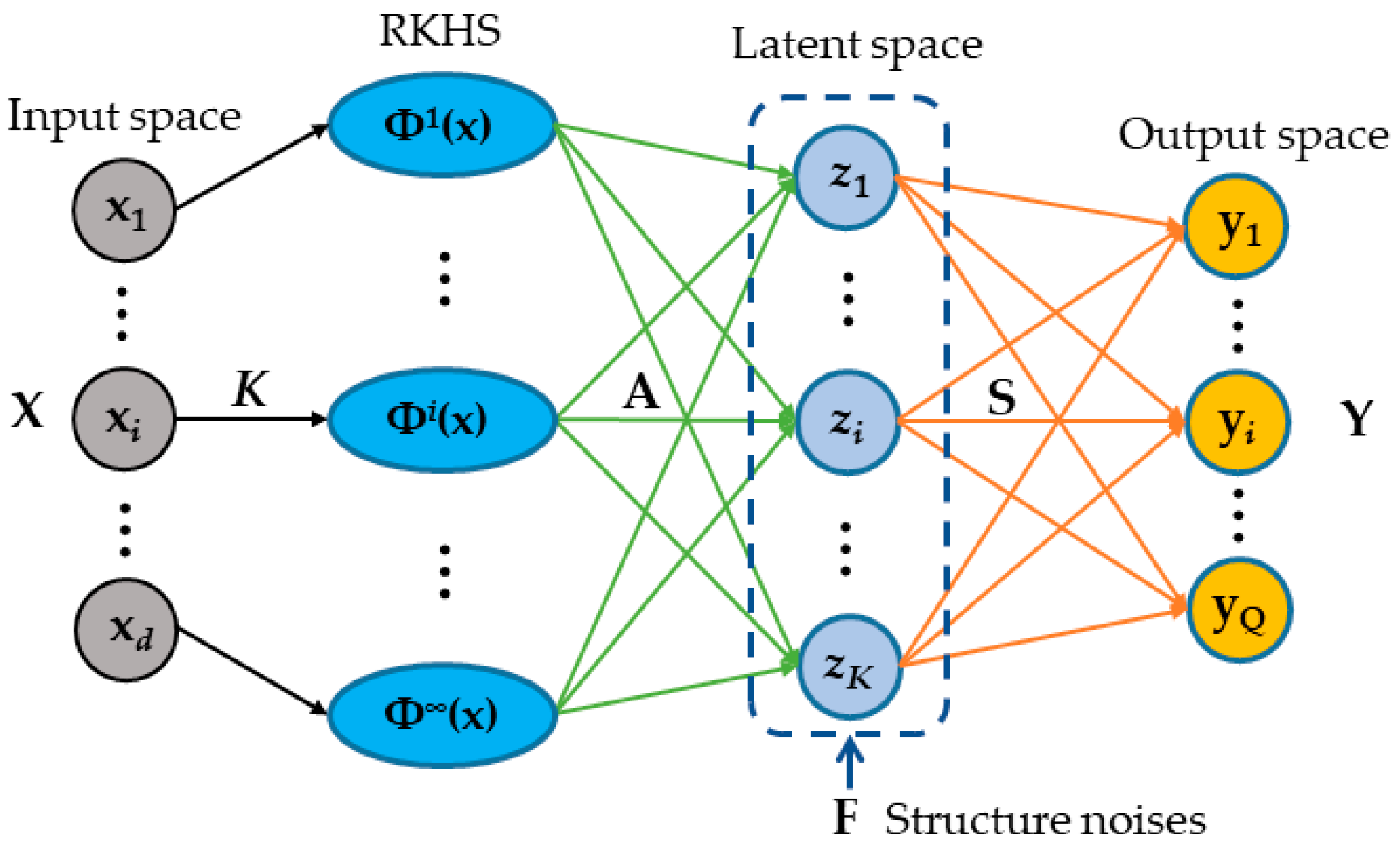

3.1. Multi-Layer MTR

3.2. Non-Linear Extensions Based on Kernel Tricks

3.3. Robust MTR by Alleviating Noises

3.4. Alternating Optimization

3.4.1. Fix S, Update F and A

3.4.2. Fix F and A, Update S

| Algorithm 1. The alternating optimization algorithm for solving MTR-MLS. |
|---|
| Input: data matrix X with the corresponding target matrix Y; regularization parameters λ1, λ2, λ3. |
| Output: regression coefficient matrix A; structure matrix S; latent feature matrix F. |
| 1. Initialize …, … and …, and set i = 1. |
| 2. Repeat |
| 2.1. Update the matrix … by solving (13). |
| 2.2. Update the matrix … by solving (15). |
| 2.3. Calculate the diagonal matrix … by solving (5). |
| 3. Update the matrix … by solving (19). |
| 4. … |
| 5. Until convergence. |
| 6. Return A, S and F. |
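Algorithm 1 alternates between a block that updates F and A with S fixed and a block that updates S with F and A fixed. Because Equations (5), (13), (15) and (19) are not reproduced in this section, the Python sketch below is built on an assumed objective of the same shape, J = ‖F − XA‖²_F + λ1‖Y − FS‖²_F + λ2‖A‖²_F + λ3‖S‖_{2,1}, in which the inputs X (n × d) are mapped to latent features F (n × k) through A, and F is mapped to the targets Y (n × Q) through the structure matrix S. The roles given to λ1, λ2 and λ3 here are assumptions, and the sketch omits the auxiliary noise matrix and the kernel extension. Each step either minimizes J exactly over one block or takes one iteratively reweighted step on the ℓ2,1 term, so the recorded objective should not increase.

```python
import numpy as np


def objective(X, Y, A, F, S, lam1, lam2, lam3):
    """Assumed multi-layer objective (illustrative, not the paper's equation)."""
    return (np.linalg.norm(F - X @ A) ** 2
            + lam1 * np.linalg.norm(Y - F @ S) ** 2
            + lam2 * np.linalg.norm(A) ** 2
            + lam3 * np.sum(np.linalg.norm(S, axis=1)))


def alternating_fit(X, Y, k, lam1=1.0, lam2=1e-2, lam3=1e-1,
                    n_iter=50, eps=1e-10, seed=0):
    n, d = X.shape
    q = Y.shape[1]
    rng = np.random.default_rng(seed)
    F = rng.standard_normal((n, k))   # latent features
    S = rng.standard_normal((k, q))   # latent-to-target structure matrix
    A = np.zeros((d, k))              # input-to-latent regression coefficients
    history = [objective(X, Y, A, F, S, lam1, lam2, lam3)]
    for _ in range(n_iter):
        # Fix S, update A: ridge regression of F on X.
        A = np.linalg.solve(X.T @ X + lam2 * np.eye(d), X.T @ F)
        # Fix S, update F: solve F (I + lam1 S S^T) = X A + lam1 Y S^T.
        M = np.eye(k) + lam1 * S @ S.T
        F = np.linalg.solve(M, (X @ A + lam1 * Y @ S.T).T).T
        # Reweighting matrix for the l2,1 term, computed from the current S.
        D = np.diag(1.0 / (2.0 * np.linalg.norm(S, axis=1) + eps))
        # Fix F and A, update S: (lam1 F^T F + lam3 D) S = lam1 F^T Y.
        S = np.linalg.solve(lam1 * F.T @ F + lam3 * D, lam1 * F.T @ Y)
        history.append(objective(X, Y, A, F, S, lam1, lam2, lam3))
    return A, F, S, history


# Synthetic example: 60 samples, 5 inputs, 3 correlated targets, 4 latent variables.
rng = np.random.default_rng(1)
X = rng.standard_normal((60, 5))
Y = X @ rng.standard_normal((5, 3)) + 0.1 * rng.standard_normal((60, 3))
A, F, S, history = alternating_fit(X, Y, k=4)
Y_hat = X @ A @ S  # predictions for the (here, training) inputs under this model
print(f"objective: {history[0]:.2f} -> {history[-1]:.2f}")
```

Under this assumed model, new inputs are predicted as `X_new @ A @ S`; the paper's own prediction rule, in particular once the auxiliary matrix is included, may differ.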

3.5. Convergence and Complexity Analysis

4. Experiments and Results

4.1. Experimental Setting and Datasets

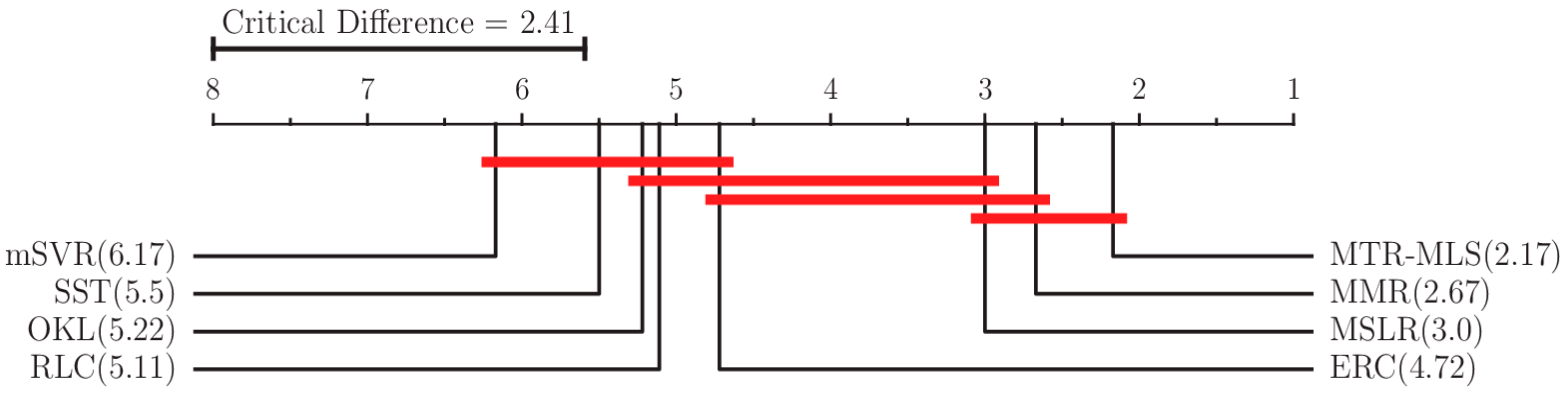

4.2. Results on Real-World Datasets

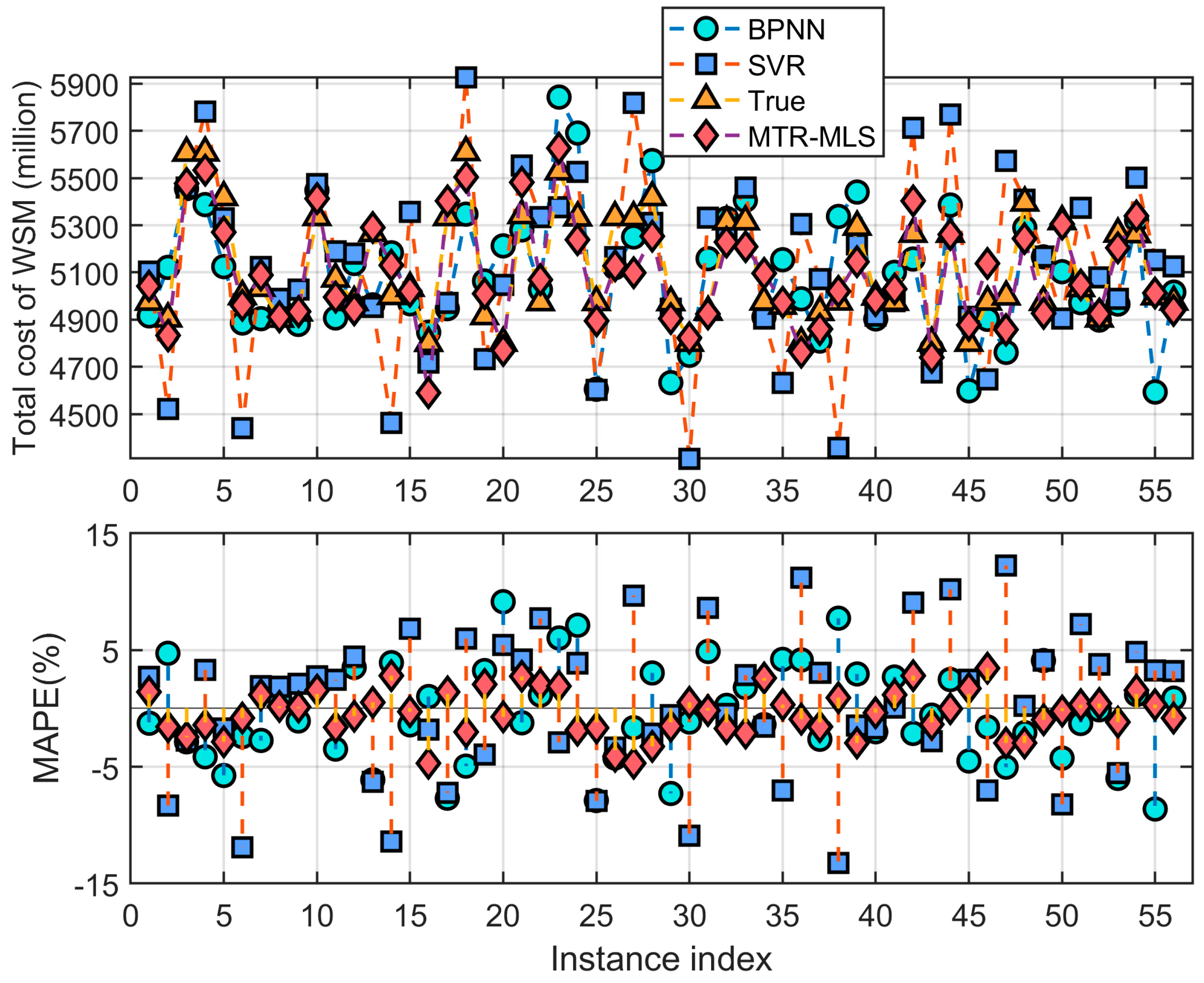

4.3. Experiments on Cost Prediction of WSM

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Datasets | Samples | Input (d) | Target (Q) | Folds |
|---|---|---|---|---|
| ANDRO | 49 | 30 | 6 | 10 |
| ATP1D | 337 | 411 | 6 | 10 |
| ATP7D | 296 | 411 | 6 | 10 |
| EDM | 154 | 16 | 2 | 10 |
| ENB | 768 | 8 | 2 | 10 |
| JURA | 359 | 15 | 3 | 10 |
| OES10 | 403 | 298 | 16 | 10 |
| OES97 | 334 | 263 | 16 | 10 |
| OSALES | 639 | 413 | 12 | 10 |
| RF1 | 9125 | 64 | 8 | 5 |
| RF2 | 9125 | 576 | 8 | 5 |
| SCM1D | 9803 | 280 | 16 | 2 |
| SCM20D | 8965 | 61 | 16 | 2 |
| SCPF | 1137 | 23 | 3 | 10 |
| SF1 | 323 | 10 | 3 | 10 |
| SF2 | 1066 | 10 | 3 | 10 |
| SLUMP | 103 | 7 | 3 | 10 |
| WQ | 1066 | 16 | 14 | 10 |
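The last column gives the number of cross-validation folds used for each dataset (2, 5 or 10). How the folds were drawn is not stated in this section, so the Python sketch below assumes plain shuffled k-fold splitting with a fixed seed; the function names are hypothetical.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle the indices 0..n_samples-1 once and cut them into k folds whose
    sizes differ by at most one (the first n_samples % k folds get the extra item)."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(indices[start:start + size])
        start += size
    return folds


def train_test_splits(n_samples, k, seed=0):
    """Yield (train, test) index lists; each fold serves as the test set once."""
    folds = k_fold_indices(n_samples, k, seed)
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# ANDRO: 49 samples, 10 folds; SCM1D: 9803 samples, 2 folds (sizes from the table).
print([len(f) for f in k_fold_indices(49, 10)])   # [5, 5, 5, 5, 5, 5, 5, 5, 5, 4]
print([len(f) for f in k_fold_indices(9803, 2)])  # [4902, 4901]
```

Each sample lands in exactly one test fold, so the reported score for a dataset is an average over k disjoint test sets.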

| Datasets | SST | RLC | ERC | OKL | mSVR | MMR | MSLR | MTR-MLS |
|---|---|---|---|---|---|---|---|---|
| ANDRO | 57.9 | 57.0 | 56.7 | 55.3 | 62.7 | 52.7 | 51.5 | 49.5 |
| ATP1D | 37.2 | 38.4 | 37.2 | 36.4 | 38.1 | 33.2 | 35.2 | 38.2 |
| ATP7D | 50.7 | 46.1 | 51.2 | 47.5 | 47.8 | 44.3 | 45.8 | 43.7 |
| EDM | 74.7 | 73.5 | 74.1 | 74.1 | 73.7 | 71.6 | 68.6 | 67.3 |
| ENB | 12.1 | 12.0 | 11.4 | 13.8 | 22.0 | 11.1 | 19.4 | 11.1 |
| JURA | 59.1 | 59.6 | 59.0 | 59.9 | 61.1 | 58.2 | 62.3 | 60.8 |
| OES10 | 42.1 | 41.9 | 42.0 | 43.2 | 44.7 | 40.3 | 40.7 | 40.3 |
| OES97 | 52.6 | 52.3 | 52.4 | 53.5 | 55.7 | 49.7 | 48.9 | 46.0 |
| OSALES | 72.6 | 74.1 | 71.3 | 71.8 | 77.8 | 74.5 | 73.6 | 71.3 |
| RF1 | 69.8 | 72.7 | 69.9 | 81.4 | 75.4 | 73.1 | 66.3 | 65.3 |
| RF2 | 69.9 | 70.4 | 69.8 | 81.8 | 83.6 | 74.6 | 67.1 | 66.7 |
| SCM1D | 47.0 | 45.7 | 46.6 | 47.6 | 54.3 | 44.7 | 43.3 | 42.2 |
| SCM20D | 77.7 | 74.7 | 76.0 | 76.4 | 76.3 | 75.8 | 74.0 | 73.7 |
| SCPF | 83.1 | 83.5 | 83.0 | 82.0 | 82.8 | 81.2 | 81.7 | 83.3 |
| SF1 | 106.8 | 116.3 | 108.8 | 105.9 | 102.1 | 95.8 | 104.2 | 105.4 |
| SF2 | 116.7 | 119.4 | 113.6 | 100.4 | 104.3 | 98.4 | 105.8 | 99.3 |
| SLUMP | 69.5 | 69.0 | 69.0 | 69.9 | 71.1 | 58.7 | 56.7 | 57.7 |
| WQ | 90.9 | 90.2 | 90.6 | 89.1 | 89.9 | 88.9 | 89.3 | 88.5 |
| AveRank | 5.50 | 5.11 | 4.72 | 5.22 | 6.17 | 2.67 | 3.00 | 2.17 |
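The AveRank row averages, over the 18 datasets, the rank each method obtains on a dataset; this is the quantity a Friedman test is built on. The Python sketch below assumes the usual convention (lower values are better, as the AveRank row suggests; rank 1 is best; tied methods share the mean of the ranks they occupy) and computes the average ranks and the Friedman statistic, using only the first three rows of the table (ANDRO, ATP1D, ATP7D) as input for illustration. Because the table prints rounded values, ranks recomputed from it may not reproduce the AveRank row exactly.

```python
def average_ranks(scores):
    """scores[i][j] is the error of method j on dataset i (lower is better).

    Each dataset ranks the methods from 1 (best) to k; tied methods receive the
    mean of the ranks they occupy. Returns the per-method average rank.
    """
    k = len(scores[0])
    totals = [0.0] * k
    for row in scores:
        order = sorted(range(k), key=lambda j: row[j])
        start = 0
        while start < k:
            end = start
            # Extend the tie group while the next method has the same score.
            while end + 1 < k and row[order[end + 1]] == row[order[start]]:
                end += 1
            shared_rank = (start + end) / 2 + 1  # positions are 0-based, ranks 1-based
            for pos in range(start, end + 1):
                totals[order[pos]] += shared_rank
            start = end + 1
    return [t / len(scores) for t in totals]


def friedman_chi2(avg_ranks, n_datasets):
    """Friedman statistic: 12N / (k(k+1)) * (sum_j R_j^2 - k(k+1)^2 / 4)."""
    k = len(avg_ranks)
    return (12 * n_datasets / (k * (k + 1))
            * (sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4))


methods = ["SST", "RLC", "ERC", "OKL", "mSVR", "MMR", "MSLR", "MTR-MLS"]
rows = [
    [57.9, 57.0, 56.7, 55.3, 62.7, 52.7, 51.5, 49.5],  # ANDRO
    [37.2, 38.4, 37.2, 36.4, 38.1, 33.2, 35.2, 38.2],  # ATP1D (SST and ERC tie)
    [50.7, 46.1, 51.2, 47.5, 47.8, 44.3, 45.8, 43.7],  # ATP7D
]
ranks = average_ranks(rows)
for name, r in zip(methods, ranks):
    print(f"{name:8s} {r:.2f}")
print("Friedman chi2:", round(friedman_chi2(ranks, len(rows)), 3))
```

With N datasets and k methods, the Friedman statistic is compared against a χ² distribution with k − 1 degrees of freedom (or converted to the Iman-Davenport F statistic); with only three rows the numbers above are purely illustrative.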

| Feature Sets | Feature | Feature Type | Denotes |
|---|---|---|---|
| Input features | Displacement | Continuous | C1 |
| | Ship length | Continuous | C2 |
| | Ship width | Continuous | C3 |
| | Maximum speed | Continuous | C4 |
| | Power unit type | Semantic | C5 |
| | Power unit number | Enumerated | C6 |
| | Construction cost | Continuous | C7 |
| | Shaft horsepower | Continuous | C8 |
| | Warship age | Continuous | C9 |
| | Repairing factory | Semantic | C10 |
| | Maintenance time | Continuous | C11 |
| | Total task work | Continuous | C12 |
| | Number of repairmen | Continuous | C13 |
| | Proportion of repairmen | Continuous | C14 |
| | Original value of fixed assets | Continuous | C15 |
| | Operating income of repairing factory | Continuous | C16 |
| | Operating profit of repairing factory | Continuous | C17 |
| Output targets | Material cost | Continuous | D1 |
| | Labor cost | Continuous | D2 |
| | Manufacturing cost | Continuous | D3 |
| | Special cost | Continuous | D4 |
| | Period cost | Continuous | D5 |
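The input features mix continuous, enumerated and semantic (categorical) types. How the authors encoded them is not described in this section; the Python sketch below assumes a common approach: one-hot encoding for the semantic features (C5, C10) and min-max scaling for the continuous ones, with the enumerated C6 treated as an ordinal number and scaled as well. The three records and all of their values are hypothetical.

```python
def min_max_scale(column):
    """Map values linearly onto [0, 1]; a constant column becomes all zeros."""
    lo, hi = min(column), max(column)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in column]


def one_hot(column):
    """One indicator per distinct category, in sorted category order."""
    categories = sorted(set(column))
    return [[1.0 if v == c else 0.0 for c in categories] for v in column], categories


def encode(records, continuous, semantic):
    """One numeric row per record: scaled numeric features, then one-hot blocks."""
    blocks = []
    for name in continuous:
        blocks.append([[v] for v in min_max_scale([r[name] for r in records])])
    for name in semantic:
        encoded, _ = one_hot([r[name] for r in records])
        blocks.append(encoded)
    return [sum((block[i] for block in blocks), []) for i in range(len(records))]


# Hypothetical records: C1 displacement, C6 power unit number, C9 warship age,
# C5 power unit type, C10 repairing factory (names and values are made up).
records = [
    {"C1": 1200.0, "C6": 2, "C9": 10.0, "C5": "diesel", "C10": "factory A"},
    {"C1": 4000.0, "C6": 4, "C9": 25.0, "C5": "gas turbine", "C10": "factory B"},
    {"C1": 2600.0, "C6": 2, "C9": 5.0, "C5": "diesel", "C10": "factory B"},
]
X = encode(records, continuous=["C1", "C6", "C9"], semantic=["C5", "C10"])
for row in X:
    print(row)
```

In a cross-validated setup, the scaling bounds and category lists would be taken from the training folds only, so that test samples do not leak into the preprocessing.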

| Ship Category | Maintenance Grade | Dataset Size (N) | Denotes |
|---|---|---|---|
| Combat support ships | second-grade | 44 | Dataset 1 |
| | third-grade | 25 | Dataset 2 |
| Surface combat ships | second-grade | 35 | Dataset 3 |
| | third-grade | 57 | Dataset 4 |
| Submarines | second-grade | 27 | Dataset 5 |
| | third-grade | 26 | Dataset 6 |

| Datasets | λ1 | λ2 | λ3 | σ |
|---|---|---|---|---|
| Dataset 1 | 10⁻³ | 10⁻² | 10⁻³ | 10⁻³ |
| Dataset 2 | 10⁻³ | 10⁻² | 10⁻² | 10⁻³ |
| Dataset 3 | 10⁻⁴ | 10⁻⁴ | 10⁻⁵ | 10⁻⁴ |
| Dataset 4 | 10⁻³ | 10⁻³ | 10⁻² | 10⁻⁴ |
| Dataset 5 | 10⁻⁴ | 10⁻² | 10⁻² | 10⁻³ |
| Dataset 6 | 10⁻³ | 10⁻³ | 10⁻² | 10⁻⁶ |
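Section 3.2 extends the model with kernel tricks, and the table lists a kernel parameter σ next to λ1, λ2 and λ3. The kernel function and the way σ enters it are not given in this section; the Python sketch below assumes a Gaussian kernel written as k(x, z) = exp(−σ‖x − z‖²) (another common convention is exp(−‖x − z‖²/(2σ²))), and uses plain multi-output kernel ridge regression as a stand-in, not the kernelized MTR-MLS, to show the mechanism: by the representer theorem the learned coefficients lie in the span of the training samples, so the input matrix is replaced by the kernel (Gram) matrix and one linear solve serves all targets at once. The synthetic data use 17 inputs and 5 targets only to mirror C1 to C17 and D1 to D5.

```python
import numpy as np


def gaussian_kernel(X, Z, sigma):
    """Gram matrix K[i, j] = exp(-sigma * ||X[i] - Z[j]||^2) (assumed form)."""
    sq_dists = (np.sum(X ** 2, axis=1)[:, None]
                + np.sum(Z ** 2, axis=1)[None, :]
                - 2.0 * X @ Z.T)
    # Clip tiny negative values caused by floating-point cancellation.
    return np.exp(-sigma * np.maximum(sq_dists, 0.0))


def kernel_ridge_fit(K, Y, lam):
    """Dual coefficients alpha = (K + lam I)^{-1} Y, one column per target."""
    return np.linalg.solve(K + lam * np.eye(K.shape[0]), Y)


# Synthetic data: 40 training and 10 test samples, 17 inputs, 5 targets.
rng = np.random.default_rng(0)
X_train = rng.standard_normal((40, 17))
X_test = rng.standard_normal((10, 17))
Y_train = np.sin(X_train[:, :5]) + 0.05 * rng.standard_normal((40, 5))

sigma, lam = 1e-1, 1e-3  # illustrative values, not tuned
K = gaussian_kernel(X_train, X_train, sigma)
alpha = kernel_ridge_fit(K, Y_train, lam)
Y_pred = gaussian_kernel(X_test, X_train, sigma) @ alpha
print(K.shape, Y_pred.shape)
```

Under the exp(−σ‖x − z‖²) reading, a very small σ makes the kernel nearly flat unless the raw feature distances are large, so the scale of the (possibly unnormalized) inputs matters when interpreting the tabulated σ values.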

| MAPE (%) | Dataset 1 | Dataset 2 | Dataset 3 | Dataset 4 | Dataset 5 | Dataset 6 |
|---|---|---|---|---|---|---|
| D1 | 13.7 | 11.6 | 21.0 | 20.3 | 15.8 | 14.9 |
| D2 | 13.0 | 26.1 | 16.3 | 15.1 | 11.1 | 14.9 |
| D3 | 7.9 | 14.5 | 18.6 | 12.0 | 10.7 | 12.3 |
| D4 | 15.3 | 12.5 | 14.8 | 15.3 | 16.5 | 17.7 |
| D5 | 12.3 | 6.3 | 15.6 | 13.3 | 17.5 | 9.3 |
| Average | 12.6 | 13.4 | 17.5 | 15.2 | 14.5 | 14.0 |

| Index | Real Value | mSVR | BPNN | MTR-MLS | Index | Real Value | mSVR | BPNN | MTR-MLS |
|---|---|---|---|---|---|---|---|---|---|
| 01 | 5009 | 5146 | 4946 | 5080 | 13 | 5321 | 4986 | 4996 | 5348 |
| 02 | 4933 | 4523 | 5166 | 4856 | 14 | 5035 | 4460 | 5232 | 5175 |
| 03 | 5686 | 5528 | 5523 | 5547 | 15 | 5072 | 5417 | 5000 | 5057 |
| 04 | 5686 | 5874 | 5451 | 5607 | 16 | 4822 | 4734 | 4871 | 4595 |
| 05 | 5484 | 5390 | 5169 | 5327 | 17 | 5393 | 5003 | 4978 | 5469 |
| 06 | 5034 | 4436 | 4913 | 4993 | 18 | 5688 | 6030 | 5407 | 5575 |
| 07 | 5072 | 5168 | 4933 | 5130 | 19 | 4944 | 4749 | 5104 | 5046 |
| 08 | 4932 | 5022 | 4977 | 4941 | 20 | 4822 | 5086 | 5263 | 4788 |
| 09 | 4960 | 5065 | 4907 | 4966 | 21 | 5402 | 5629 | 5336 | 5551 |
| 10 | 5393 | 5543 | 5515 | 5477 | 22 | 5007 | 5393 | 5065 | 5110 |
| 11 | 5114 | 5240 | 4936 | 5030 | 23 | 5601 | 5439 | 5939 | 5707 |
| 12 | 5007 | 5229 | 5183 | 4974 | 24 | 5392 | 5601 | 5776 | 5292 |
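The MAPE (%) table reports the mean absolute percentage error, MAPE = (100/n) Σ |y_i − ŷ_i| / |y_i|, for each subentry cost. The Python sketch below applies the same formula to the 24 samples in the last table, whose columns compare the real value with the mSVR, BPNN and MTR-MLS predictions; which cost and which dataset those samples come from is not stated in this section, and the helper name `mape` is hypothetical.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    return 100.0 * sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


# Values transcribed from the table (indices 01-24).
real = [5009, 4933, 5686, 5686, 5484, 5034, 5072, 4932, 4960, 5393, 5114, 5007,
        5321, 5035, 5072, 4822, 5393, 5688, 4944, 4822, 5402, 5007, 5601, 5392]
predictions = {
    "mSVR": [5146, 4523, 5528, 5874, 5390, 4436, 5168, 5022, 5065, 5543, 5240, 5229,
             4986, 4460, 5417, 4734, 5003, 6030, 4749, 5086, 5629, 5393, 5439, 5601],
    "BPNN": [4946, 5166, 5523, 5451, 5169, 4913, 4933, 4977, 4907, 5515, 4936, 5183,
             4996, 5232, 5000, 4871, 4978, 5407, 5104, 5263, 5336, 5065, 5939, 5776],
    "MTR-MLS": [5080, 4856, 5547, 5607, 5327, 4993, 5130, 4941, 4966, 5477, 5030, 4974,
                5348, 5175, 5057, 4595, 5469, 5575, 5046, 4788, 5551, 5110, 5707, 5292],
}
for name, pred in predictions.items():
    print(f"{name:8s} MAPE = {mape(real, pred):.2f}%")
```

On these 24 samples the formula gives MTR-MLS the lowest MAPE of the three methods, followed by BPNN and then mSVR.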

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


He, D.; Sun, S.; Xie, L. Multi-Target Regression Based on Multi-Layer Sparse Structure and Its Application in Warships Scheduled Maintenance Cost Prediction. Appl. Sci. 2023, 13, 435. https://doi.org/10.3390/app13010435


Chicago/Turabian Style: He, Dubo, Shengxiang Sun, and Li Xie. 2023. "Multi-Target Regression Based on Multi-Layer Sparse Structure and Its Application in Warships Scheduled Maintenance Cost Prediction" Applied Sciences 13, no. 1: 435. https://doi.org/10.3390/app13010435
