Auto-Scoring Feature Based on Sentence Transformer Similarity Check with Korean Sentences Spoken by Foreigners

Abstract

Featured Application

1. Introduction

2. Related Works

- Template-based (matching unknown speech with known information);

- Knowledge-based (variations in speech are saved into the system, and the inference system deduces a complex rule);

- Neural network-based (uses a neural network to detect speech based on the training data automatically);

- Dynamic time warping-based (matching sequences of the same speech with varying time or speed);

- Statistical-based (uses automatic learning procedure on large training data samples).
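To make the dynamic time warping entry in the list above concrete, the following is a minimal sketch of a DTW distance, assuming each utterance has already been reduced to a one-dimensional sequence of numeric features. The function name `dtw_distance` and the toy sequences are illustrative assumptions and are not taken from the paper.

```python
from math import inf


def dtw_distance(a, b):
    """Return the dynamic time warping distance between two numeric sequences."""
    n, m = len(a), len(b)
    # cost[i][j] holds the minimal cumulative cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three allowed predecessor alignments.
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances, b repeats its frame
                cost[i][j - 1],      # b advances, a repeats its frame
                cost[i - 1][j - 1],  # both advance together
            )
    return cost[n][m]


# Hypothetical feature tracks: the same contour at normal and half speed,
# plus an unrelated contour for comparison.
template = [1.0, 2.0, 3.0, 2.0, 1.0]
slow = [1.0, 1.0, 2.0, 2.0, 3.0, 3.0, 2.0, 2.0, 1.0, 1.0]
other = [3.0, 1.0, 3.0, 1.0, 3.0]

same_speech = dtw_distance(template, slow)
different_speech = dtw_distance(template, other)
```

In this sketch the slowed-down sequence aligns with the template at zero cost, while the unrelated contour does not, which is the property that lets DTW match the same speech produced at different speeds.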

3. System Development and Design

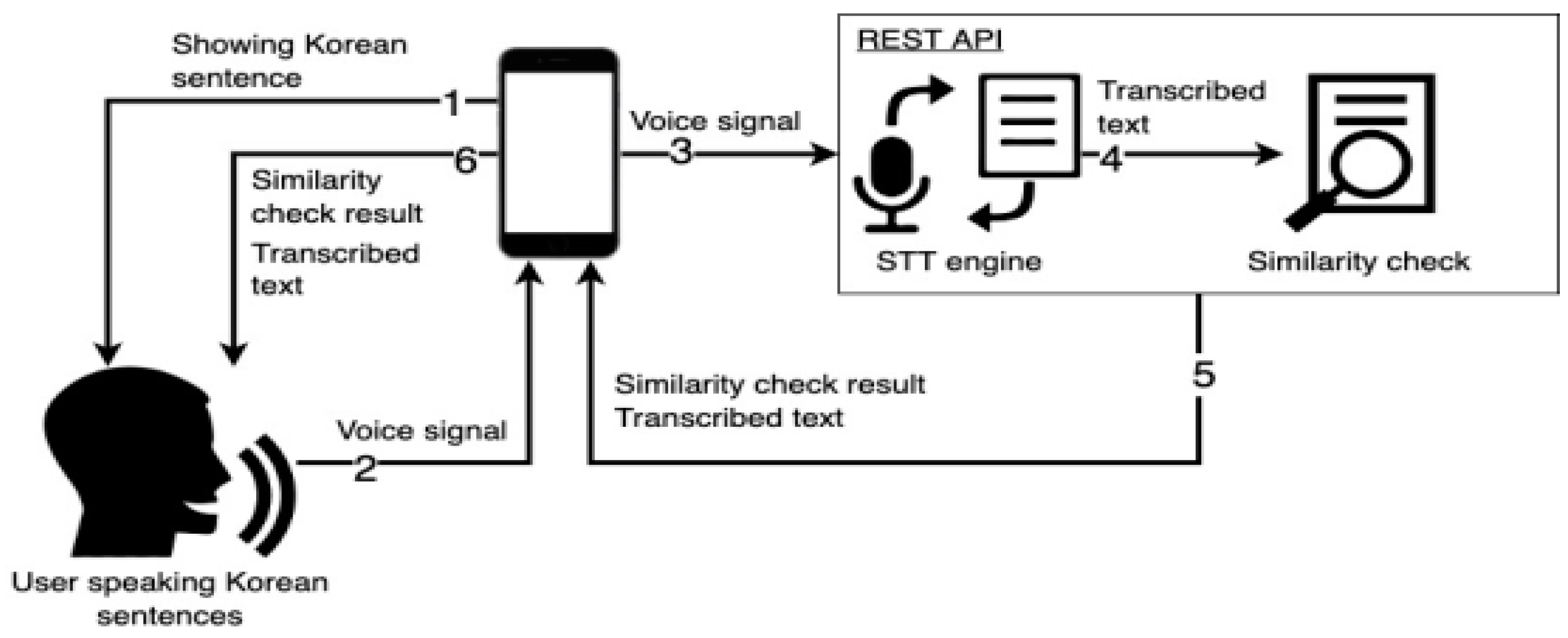

3.1. System Architecture

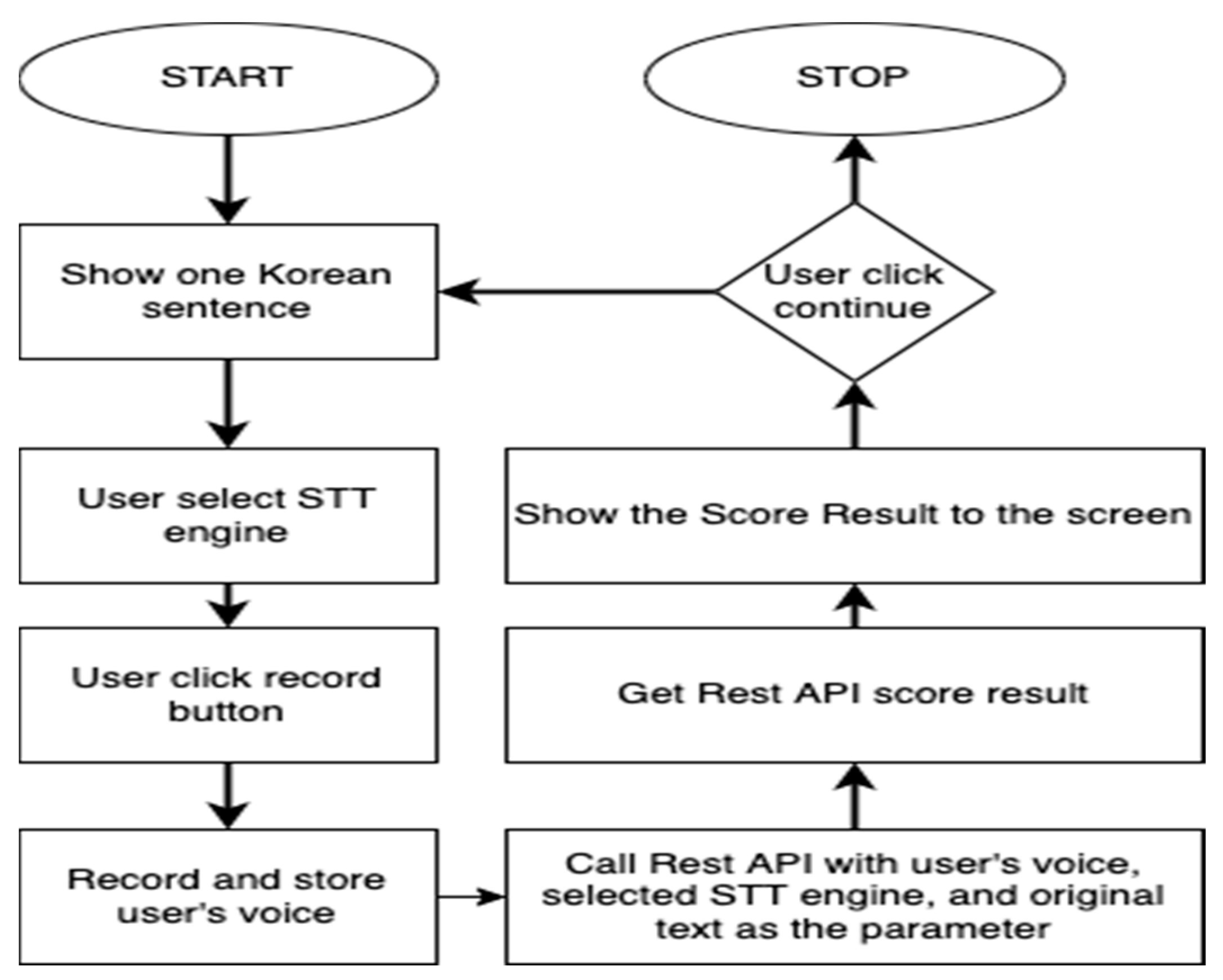

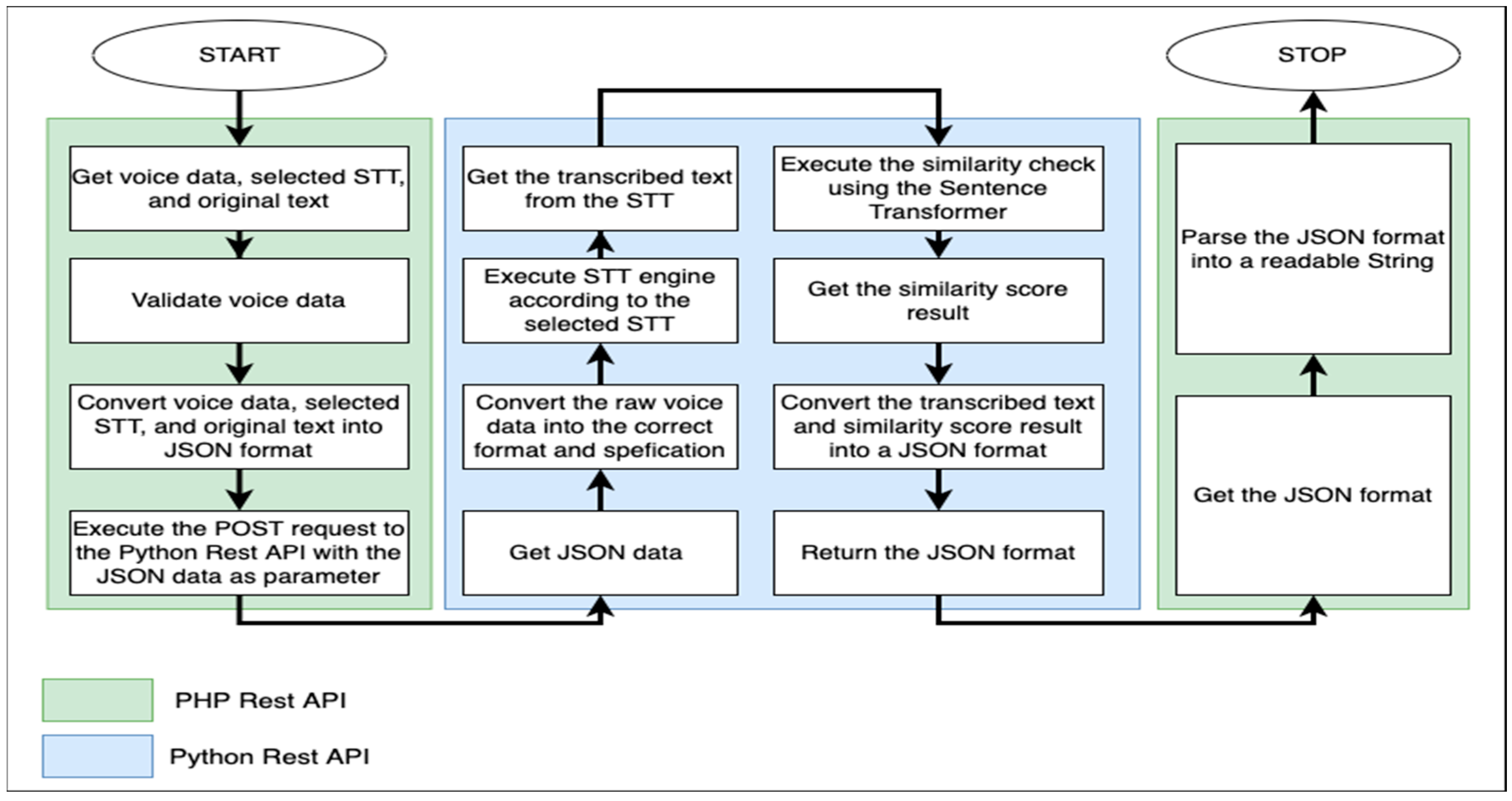

3.2. Flowchart Algorithm

3.2.1. Mobile Application

3.2.2. Rest API

3.3. System Requirements

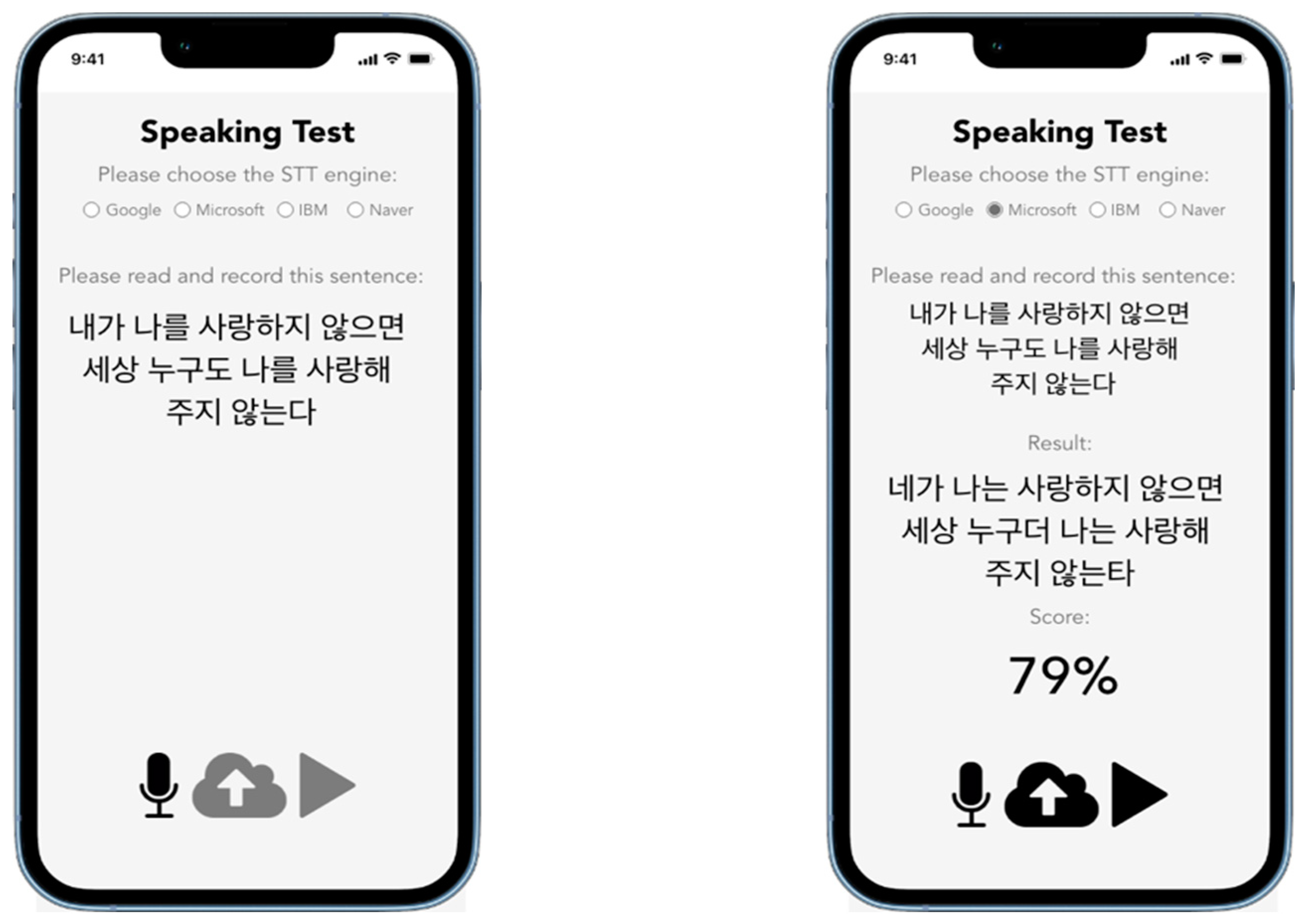

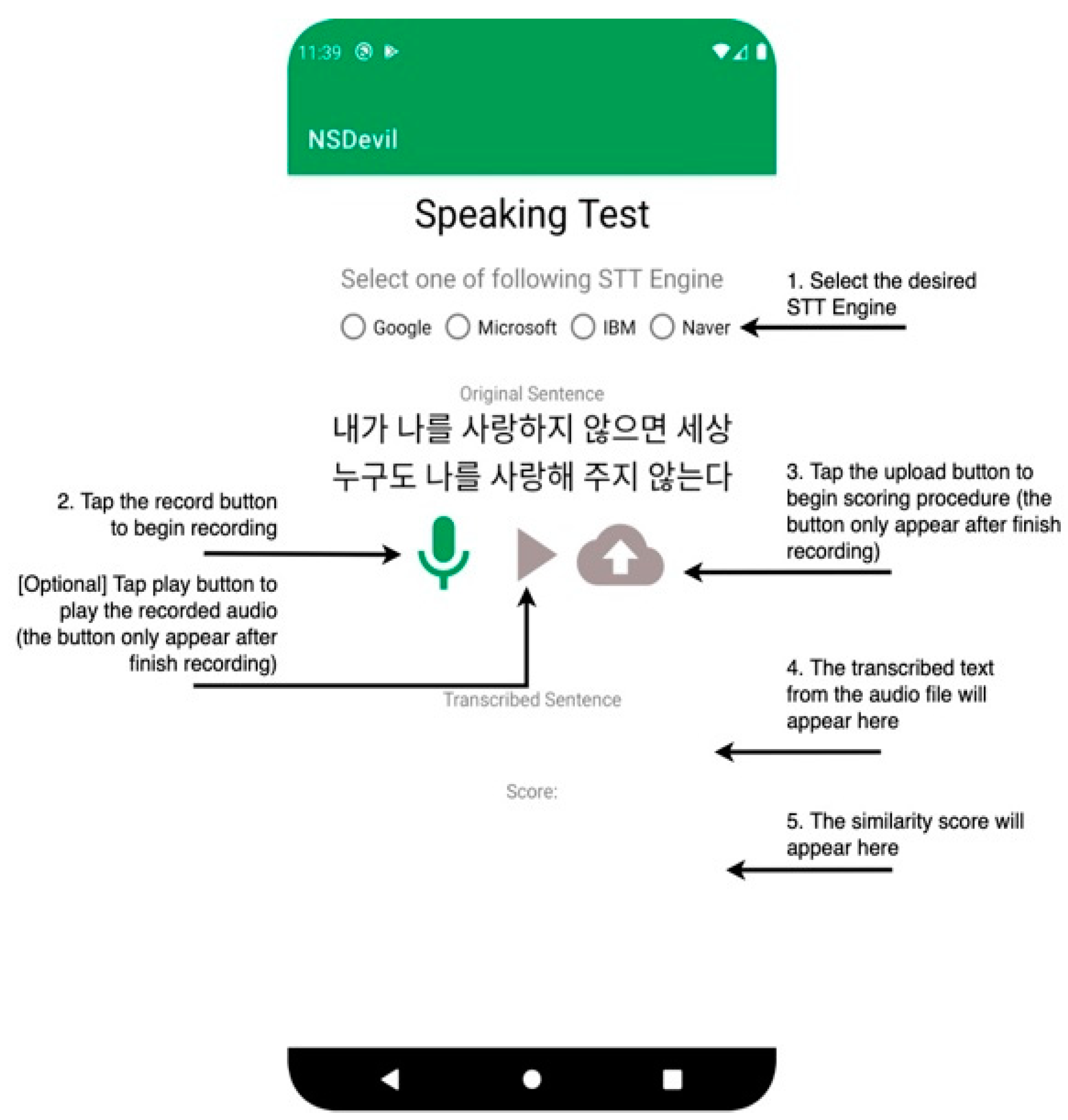

3.4. Initial User Interface Design

4. System Implementation

4.1. Implementation Environment

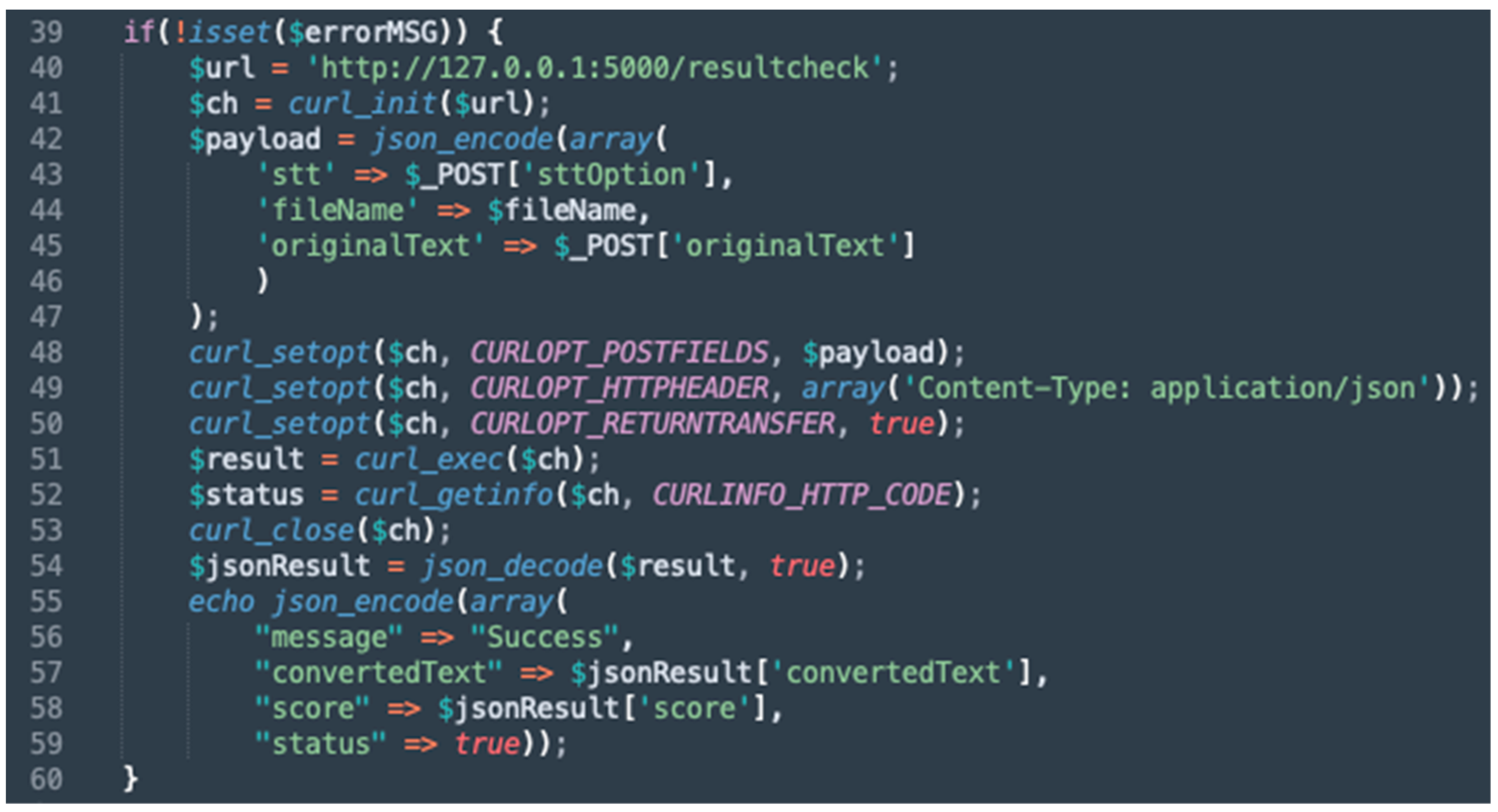

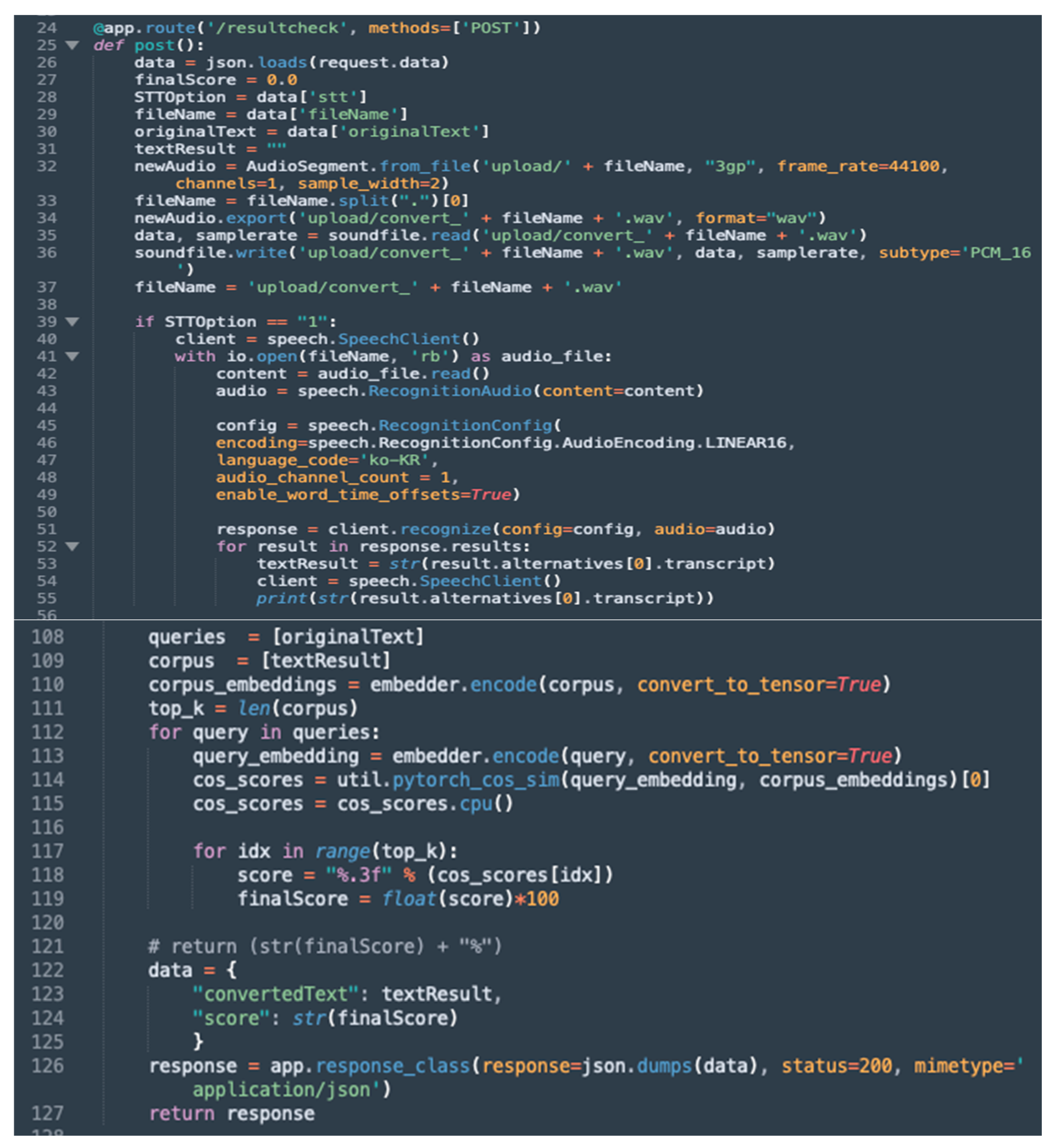

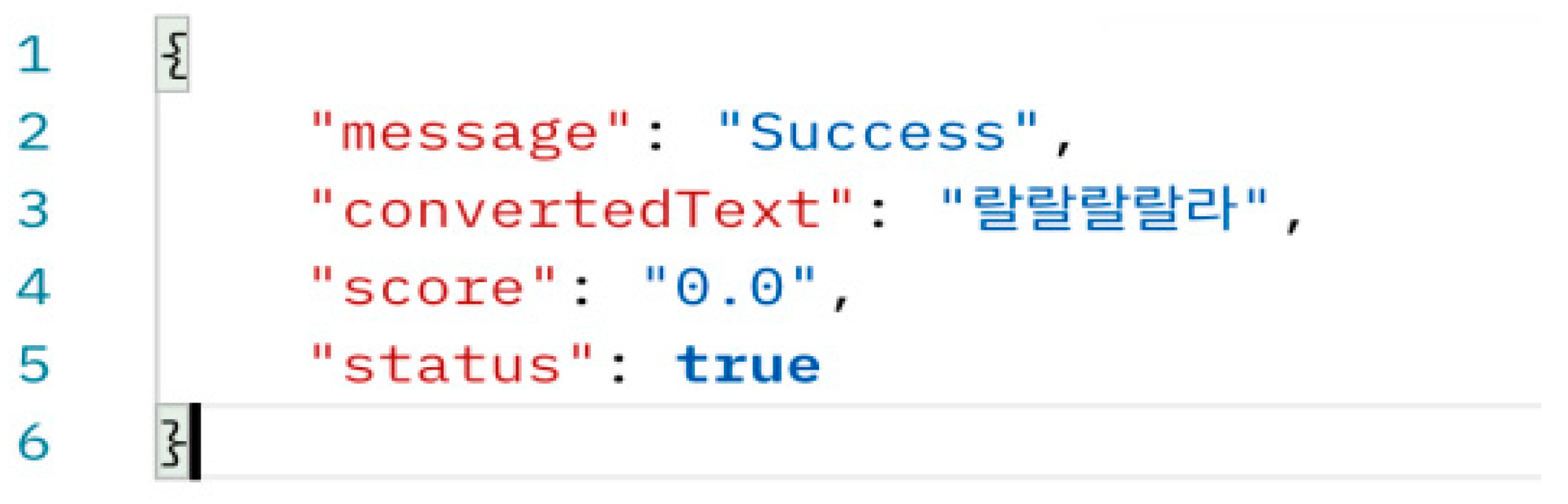

4.2. Rest API Implementation

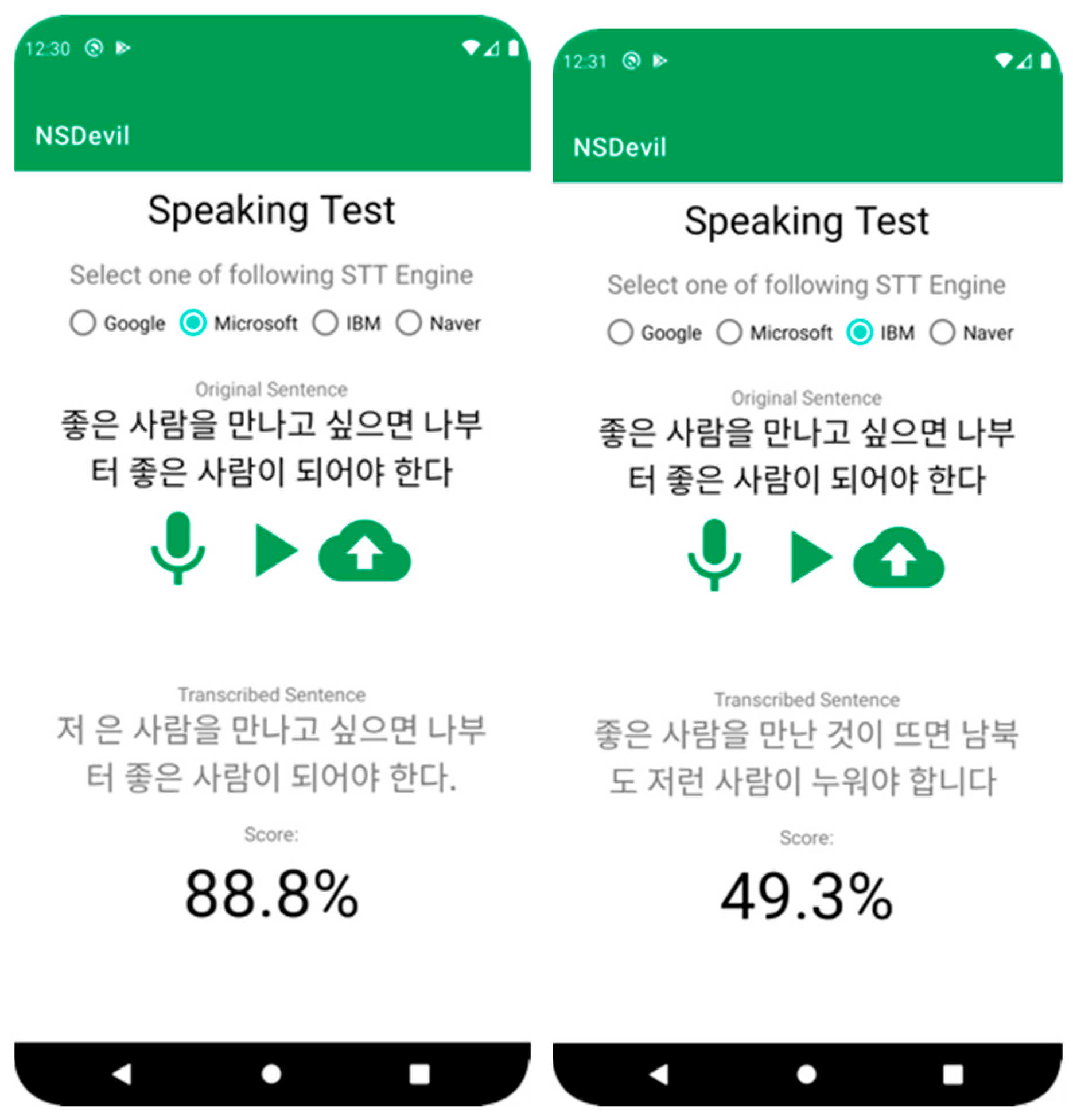

4.3. Mobile Application Implementation

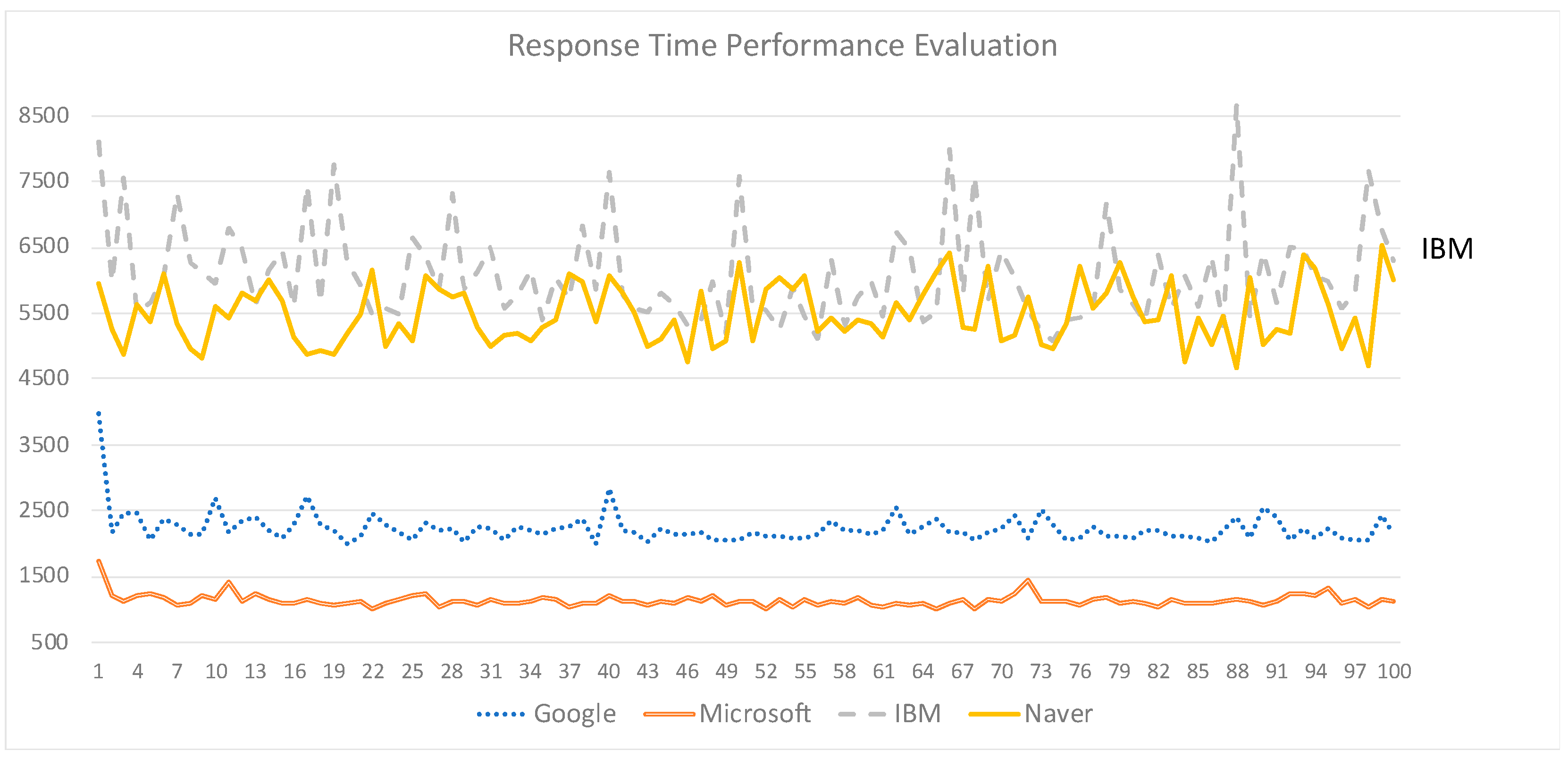

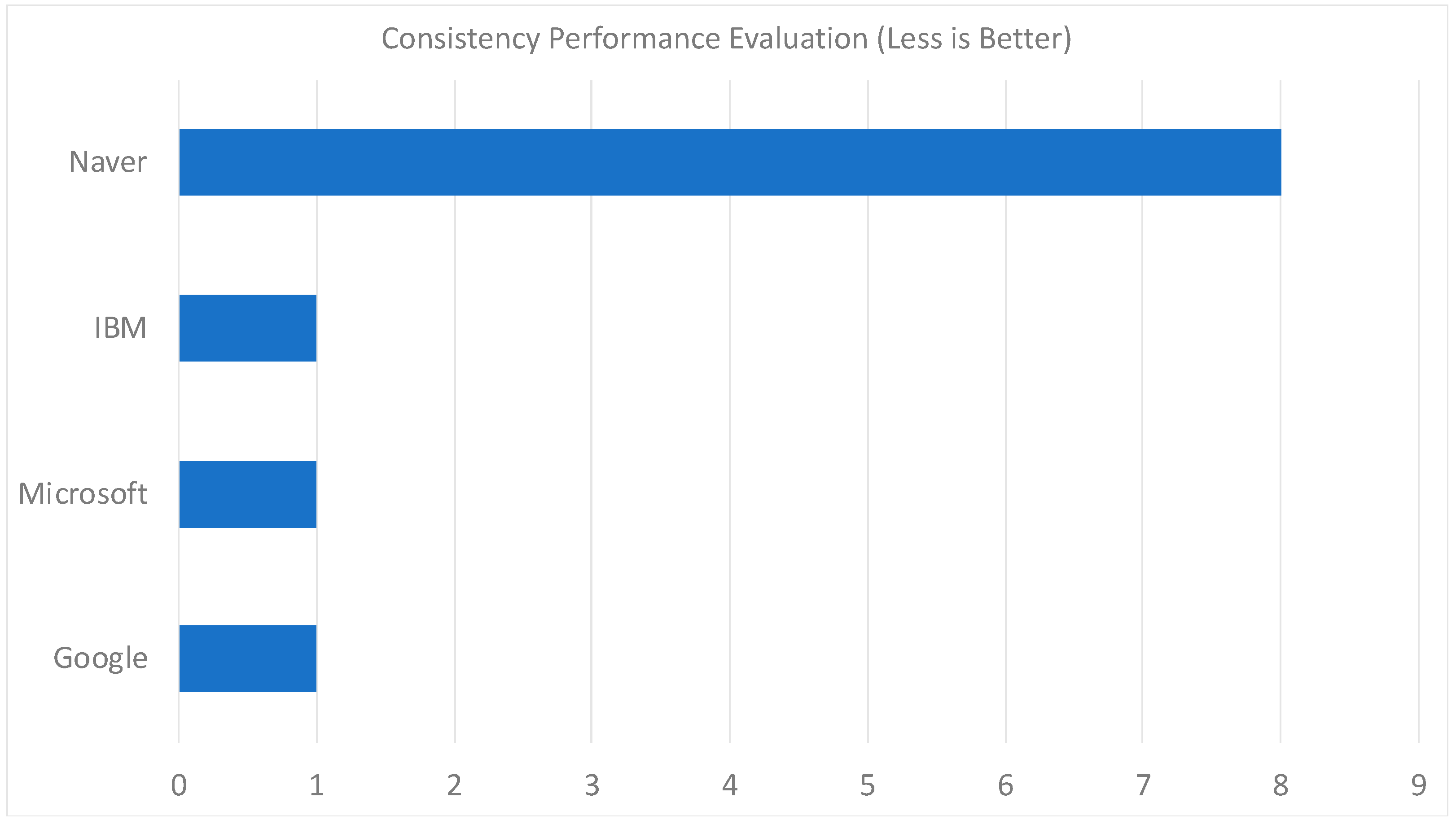

5. Performance Evaluation

6. Conclusions and Future Studies

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- The Korean Culture and Information Service (KOCIS). Summary: Korea.Net: The Official Website of the Republic of Korea. Available online: https://www.korea.net/AboutKorea/Society/South-Korea-Summary (accessed on 12 October 2022).

- Lee, K.M.; Ramsey, S.R. A History of the Korean Language; Cambridge University Press: New York, NY, USA, 2011.

- Yonhap. Number of Foreigners Staying in S. Korea Decreased 3.9% in 2021 Amid Pandemic. Available online: https://www.koreaherald.com/view.php?ud=20220126000736 (accessed on 12 October 2022).

- TOPIK Information. Test Outline: TOPIK Korean Proficiency Test. Available online: https://www.topik.go.kr/TWGUID/TWGUID0010.do (accessed on 12 October 2022).

- A, V.; Jose, D.V. Speech to Text Conversion and Summarization for Effective Understanding and Documentation. Int. J. Electr. Comput. Eng. (IJECE) 2019, 9, 3642–3648.

- Karpagavalli, S.; Chandra, E. A Review on Automatic Speech Recognition Architecture and Approaches. IJSIP 2016, 9, 393–404.

- Ziman, K.; Heusser, A.C.; Fitzpatrick, P.C.; Field, C.E.; Manning, J.R. Is Automatic Speech-to-Text Transcription Ready for Use in Psychological Experiments? Behav. Res. Methods 2018, 50, 2597–2605.

- Iancu, B. Evaluating Google Speech-to-Text API’s Performance for Romanian e-Learning Resources. Inform. Econ. 2019, 23, 17–25.

- Wang, X. Research on Open Oral English Scoring System Based on Neural Network. Comput. Intell. Neurosci. 2022, 2022, e1346543.

- Zhan, X. A Convolutional Network-Based Intelligent Evaluation Algorithm for the Quality of Spoken English Pronunciation. J. Math. 2022, 2022, 7560033.

- Mitra, S.; Tooley, J.; Inamdar, P.; Dixon, P. Improving English Pronunciation: An Automated Instructional Approach. Inf. Technol. Int. Dev. 2003, 1, 75–84.

- Oh, Y.R.; Park, K.; Jeon, H.-B.; Park, J.G. Automatic Proficiency Assessment of Korean Speech Read Aloud by Non-Natives Using Bidirectional LSTM-Based Speech Recognition. ETRI J. 2020, 42, 761–772.

- Razak, Z.; Sumali, S.R.; Idris, M.Y.I.; Ahmedy, I.; Yusoff, M.Y.Z.B.M. Review of hardware implementation of Speech-To-Text Engine for Jawi Character. In Proceedings of the 2010 International Conference on Science and Social Research, Kuala Lumpur, Malaysia, 5–7 December 2010.

- Chen, L.; Zechner, K.; Yoon, S.-Y.; Evanini, K.; Wang, X.; Loukina, A.; Tao, J.; Davis, L.; Lee, C.M.; Ma, M.; et al. Automated Scoring of Nonnative Speech Using the SpeechRaterSM v. 5.0 Engine. ETS Res. Rep. Ser. 2018, 2018, 1–31.

- Wang, C.; Tang, Y.; Ma, X.; Wu, A.; Okhonko, D.; Pino, J. Fairseq S2T: Fast Speech-to-Text Modeling with Fairseq. In Proceedings of the 1st Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 10th International Joint Conference on Natural Language Processing: System Demonstrations, Suzhou, China, 4–7 December 2020.

- Liu, Y.; Shriberg, E.; Stolcke, A.; Hillard, D.; Ostendorf, M.; Harper, M. Enriching Speech Recognition with Automatic Detection of Sentence Boundaries and Disfluencies. IEEE Trans. Audio Speech Lang. Process. 2006, 14, 1526–1540.

- Jones, D.; Jones, D.A.; Wolf, F.; Gibson, E.; Williams, E.; Fedorenko, E.; Reynolds, D.A.; Zissman, M.A. Measuring the Readability of Automatic Speech-to-Text Transcripts. In Proceedings of the 8th European Conference on Speech Communication and Technology, EUROSPEECH, Geneva, Switzerland, 1–4 September 2003.

- Pattnaik, S.; Nayak, A.K.; Patnaik, S. A semi-supervised learning of HMM to build a POS tagger for a low resourced language. J. Inf. Commun. Converg. Eng. 2020, 18, 207–215.

- Jiang, S.; Fu, S.; Lin, N.; Fu, Y. Pretrained models and evaluation data for the Khmer language. Tsinghua Sci. Technol. 2022, 27, 709–718.

- Wahyutama, A.B.; Hwang, M. Performance Comparison of Open Speech-to-Text Engines using Sentence Transformer Similarity Check with the Korean Language by Foreigners. In Proceedings of the IEEE International Conference on Industry 4.0, Artificial Intelligence and Communications Technology, Kuta, Bali, 28–30 July 2022.

- Mobile Operating System Market Share Republic of Korea. Available online: https://gs.statcounter.com/os-market-share/mobile/south-korea/#monthly-202112-202212 (accessed on 13 December 2022).

- Wahyutama, A.B.; Hwang, M. Design and Implementation of Digital Game-based Contents Management System for Package Tour Application. J. Korea Inst. Inf. Commun. Eng. 2022, 26, 872–880.

- Wahyutama, A.B.; Hwang, M. Implementation of Digital Game-based Learning Feature for Package Tour Management Application. J. Korea Inst. Inf. Commun. Eng. 2022, 26, 1004–1012.

| Title | Contribution |
|---|---|
| Research on Open Oral English Scoring System Based on Neural Network [9] | A scoring system for open-spoken English using a Neural Network (NN) based on the user’s recorded voice at phonetic and text levels. The research scores the spoken content and the spoken speech separately: the spoken content comes from an external speech recognition engine, and the spoken speech is scored using an in-house NN model. |
| A Convolutional Network-Based Intelligent Evaluation Algorithm for the Quality of Spoken English Pronunciation [10] | A Convolutional Neural Network (CNN) is used to evaluate the quality of spoken English pronunciation. The research feeds the voice signal directly into the CNN model to extract each feature rather than using a speech recognition engine to transcribe the voice into text. |
| Improving English Pronunciation: An Automated Instructional Approach [11] | An experiment that checks which group of children is willing to improve their English pronunciation using several English films and English-language learning software such as a Speech-To-Text software engine. |
| Automatic Proficiency Assessment of Korean Speech Read Aloud by Non-Natives Using Bidirectional LSTM-Based Speech Recognition [12] | Foreigners are asked to speak specific Korean sentences and are tested in two scenarios: with and without text. The spoken sentences are then fed into a Bidirectional Long Short-Term Memory (BLSTM)-based model that measures five holistic proficiency scores: segmental accuracy, phonological accuracy, fluency, pitch, and accent. |
| Review of hardware implementation of Speech-To-Text Engine for Jawi Character [13] | A hardware implementation for helping students to read Jawi characters more effectively using Speech-To-Text tools. |
| Automated Scoring of Nonnative Speech Using the SpeechRaterSM v. 5.0 Engine [14] | This research gives an overview of multiple features of the SpeechRater engine for evaluating the speech performance of foreigners speaking English. |
| Fairseq S2T: Fast Speech-to-Text Modeling with Fairseq [15] | This research developed FAIRSEQ S2T, a FAIRSEQ extension for Speech-To-Text modelling tasks such as STT translation and speech recognition. |

| Requirements | Description |
|---|---|
| Show pre-defined Korean sentence | The application can show the pre-defined sentence to the user. |
| Users can choose which STT to use | The application can give the user the options of the available STT engines. |
| Record users’ voices and save them into a file | The application can record the user’s voice and save it into a file in the storage. |
| Play the recorded voice | The application can play the last voice recorded by the user. |
| Call the Rest API with the required parameters | The application can call the Rest API and pass the required parameters: a raw voice file, selected STT, and original text. |
| Retrieve the result from the server | The application can retrieve and show the similarity score and transcribed text via the Rest API. |

| Requirements | Description |
|---|---|
| Retrieve and validate the raw voice data | The Rest API can retrieve and validate the raw voice data to ensure it is in an acceptable format. |
| Convert the original recorded file | The Rest API can convert the raw voice data into the specification that the STT engine requires. |
| Execute the STT engine and similarity check | The Rest API can execute the STT engine that the user has selected, then pass the transcribed text to the similarity check to get the score. |
| Return the final results | The Rest API can return the similarity score and the transcribed text to the mobile application as readable JSON. |
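The Rest API requirements listed above (validate, convert, transcribe, score, return JSON) can be sketched as a single handler. This is only an illustration under stated assumptions: the engine names, the accepted file extensions, the JSON field names, and the function `handle_request` are all hypothetical, the STT engines are stubs that return a fixed string, and the character-count vector is a simple stand-in for a sentence-transformer embedding so that the example stays self-contained.

```python
import json
import math
import os
from collections import Counter

# Assumption: accepted upload formats; the real list is not given in the source.
ALLOWED_EXTENSIONS = {".wav", ".m4a", ".mp3"}

# Assumption: stub engines standing in for the real STT services.
STT_ENGINES = {
    "engine_a": lambda audio: "안녕하세요",
    "engine_b": lambda audio: "안녕하세여",
}


def toy_embed(text):
    """Stand-in for a sentence embedding: counts of non-space characters."""
    return Counter(text.replace(" ", ""))


def cosine_similarity(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def handle_request(filename, audio_bytes, stt_name, original_text):
    """Validate the upload, transcribe it, score it, and return a JSON string."""
    # Step 1: validate the raw voice data.
    extension = os.path.splitext(filename)[1].lower()
    if not audio_bytes or extension not in ALLOWED_EXTENSIONS:
        return json.dumps({"error": "unsupported or empty audio file"})
    if stt_name not in STT_ENGINES:
        return json.dumps({"error": "unknown STT engine"})
    # Step 2: conversion to the engine's required format (left as a no-op here).
    converted = audio_bytes
    # Step 3: run the selected engine, then the similarity check.
    transcript = STT_ENGINES[stt_name](converted)
    score = cosine_similarity(toy_embed(original_text), toy_embed(transcript))
    # Step 4: return the score and transcript as readable JSON.
    return json.dumps(
        {"similarity_score": round(score, 4), "transcribed_text": transcript},
        ensure_ascii=False,
    )


response = json.loads(handle_request("take1.wav", b"\x00\x01", "engine_a", "안녕하세요"))
rejected = json.loads(handle_request("take1.txt", b"\x00\x01", "engine_a", "안녕하세요"))
```

Keeping validation ahead of transcription means a malformed upload is rejected before any engine is invoked, and `ensure_ascii=False` keeps the Korean transcript human-readable in the JSON response.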

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wahyutama, A.B.; Hwang, M. Auto-Scoring Feature Based on Sentence Transformer Similarity Check with Korean Sentences Spoken by Foreigners. Appl. Sci. 2023, 13, 373. https://doi.org/10.3390/app13010373
