Touch, Texture and Haptic Feedback: A Review on How We Feel the World around Us

Abstract

1. Introduction

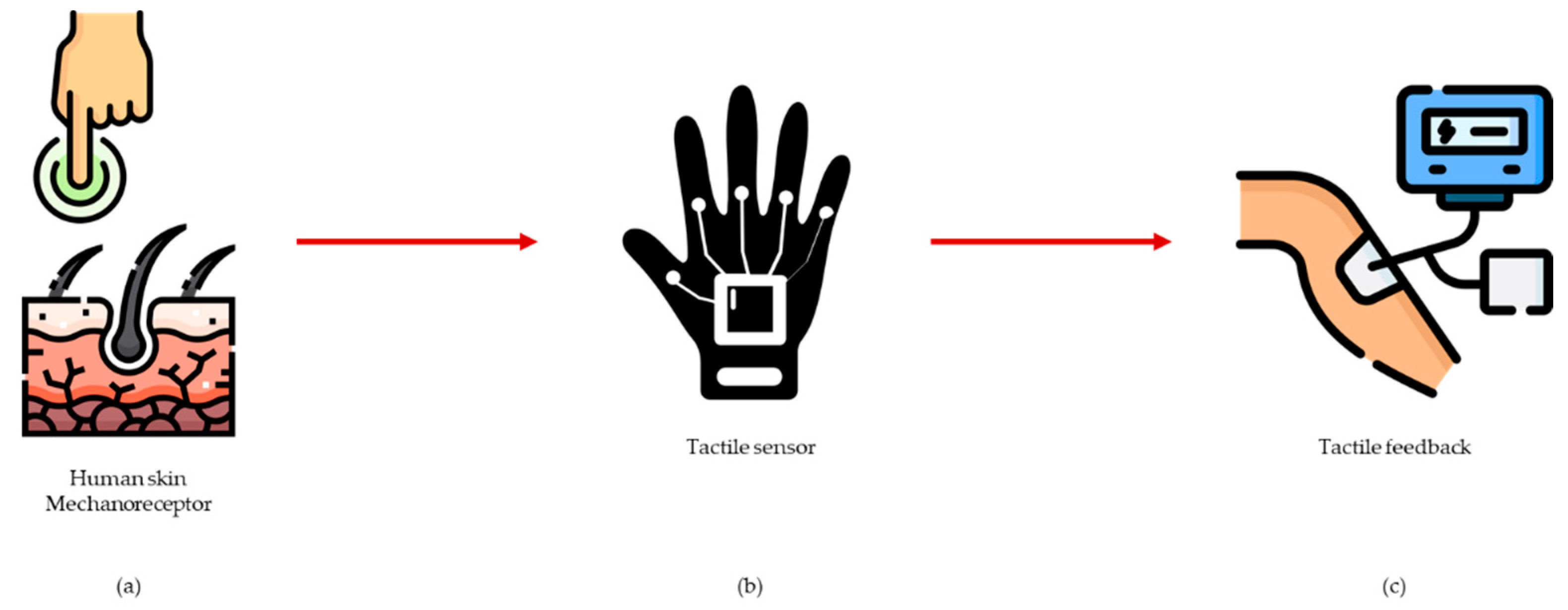

2. The Human Skin and Its Functions in Touch

3. Sensors

3.1. Strain Gauge

3.2. Accelerometer

3.3. Piezoresistive Sensor

3.4. Piezoelectric Sensor

3.5. Optical Sensor

3.6. Multimodal Sensor

4. Haptic Feedback

4.1. Kinesthetic Feedback

4.1.1. Grounded Force Feedback System

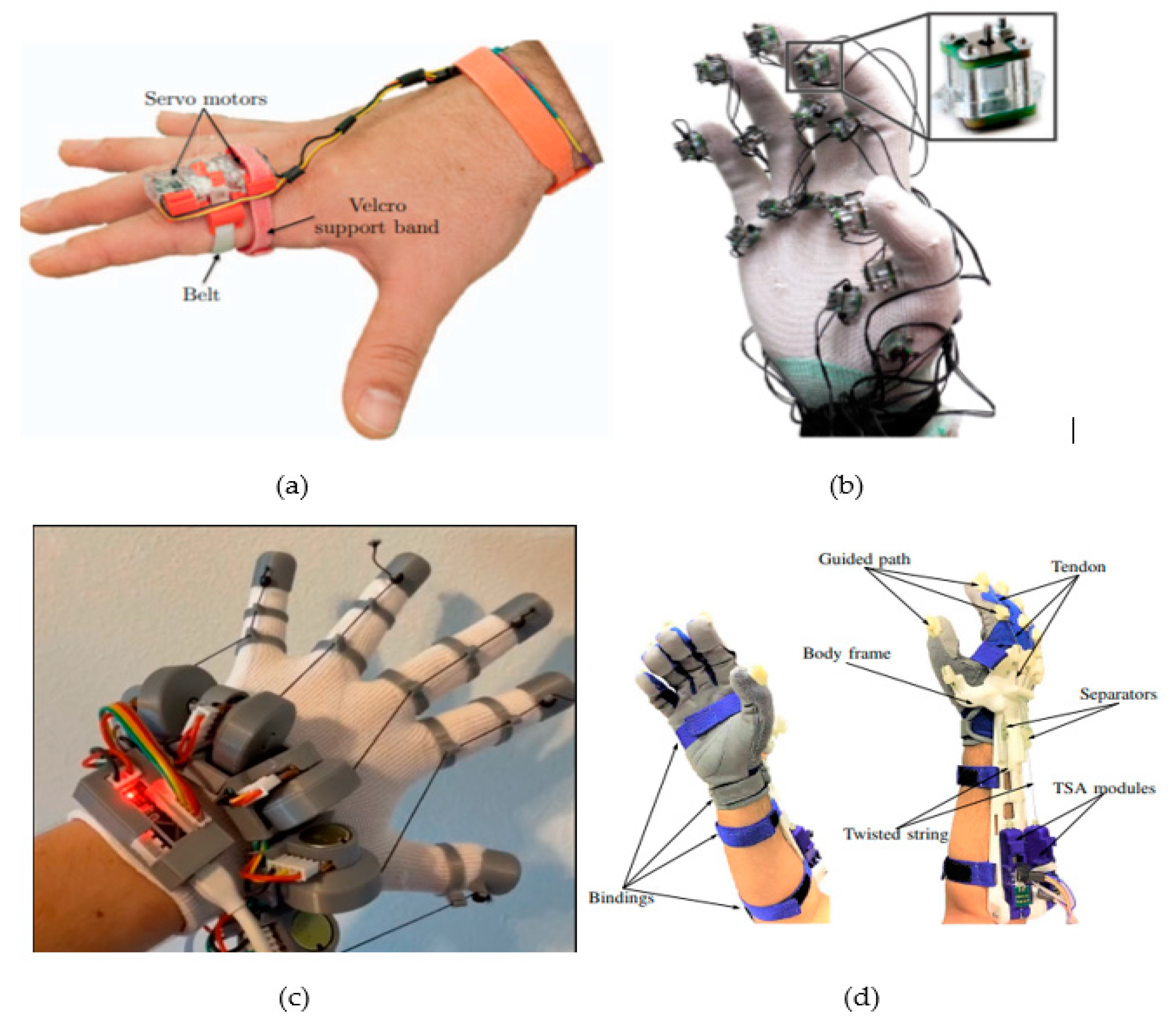

4.1.2. Exoskeleton-Based Force Feedback System

4.2. Cutaneous Feedback

4.2.1. Vibrotactile Stimulation

4.2.2. Skin Indentation

4.2.3. Skin Stretch

4.2.4. Electrotactile Feedback

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Hannaford, B.; Okamura, A.M. Haptics. In Springer Handbook of Robotics; Springer: Berlin/Heidelberg, Germany, 2016; pp. 1063–1084.

2. Salisbury, K.; Conti, F.; Barbagli, F. Haptic rendering: Introductory concepts. IEEE Comput. Graph. Appl. 2004, 24, 24–32.

3. Li, C.; Tang, B. Research on the application of AR technology based on Unity3D in education. J. Phys. Conf. Ser. 2019, 1168, 032045.

4. Englund, C.; Olofsson, A.D.; Price, L. Teaching with technology in higher education: Understanding conceptual change and development in practice. High. Educ. Res. Dev. 2017, 36, 73–87.

5. Xie, B.; Liu, H.; Alghofaili, R.; Zhang, Y.; Jiang, Y.; Lobo, F.D.; Li, C.; Li, W.; Huang, H.; Akdere, M. A Review on Virtual Reality Skill Training Applications. Front. Virtual Real. 2021, 2, 49.

6. Cao, F.-H. A Ship Driving Teaching System Based on Multi-level Virtual Reality Technology. Int. J. Emerg. Technol. Learn. 2016, 11, 26–31.

7. Alexander, T.; Westhoven, M.; Conradi, J. Virtual environments for competency-oriented education and training. In Advances in Human Factors, Business Management, Training and Education; Springer: Berlin/Heidelberg, Germany, 2017; pp. 23–29.

8. Reilly, C.A.; Greeley, A.B.; Jevsevar, D.S.; Gitajn, I.L. Virtual reality-based physical therapy for patients with lower extremity injuries: Feasibility and acceptability. OTA Int. 2021, 4, e132.

9. Cipresso, P.; Giglioli, I.A.C.; Raya, M.A.; Riva, G. The past, present, and future of virtual and augmented reality research: A network and cluster analysis of the literature. Front. Psychol. 2018, 9, 2086.

10. Castelvecchi, D. Low-cost headsets boost virtual reality’s lab appeal. Nat. News 2016, 533, 153.

11. Flavián, C.; Ibáñez-Sánchez, S.; Orús, C. The impact of virtual, augmented and mixed reality technologies on the customer experience. J. Bus. Res. 2019, 100, 547–560.

12. Ebert, C. Looking into the Future. IEEE Softw. 2015, 32, 92–97.

13. Wang, S.; Mao, Z.; Zeng, C.; Gong, H.; Li, S.; Chen, B. A new method of virtual reality based on Unity3D. In Proceedings of the 2010 18th International Conference on Geoinformatics, Beijing, China, 18–20 June 2010; pp. 1–5.

14. Brookes, J.; Warburton, M.; Alghadier, M.; Mon-Williams, M.; Mushtaq, F. Studying human behavior with virtual reality: The Unity Experiment Framework. Behav. Res. Methods 2020, 52, 455–463.

15. Lele, A. Virtual reality and its military utility. J. Ambient Intell. Humaniz. Comput. 2013, 4, 17–26.

16. Song, H.; Chen, F.; Peng, Q.; Zhang, J.; Gu, P. Improvement of user experience using virtual reality in open-architecture product design. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2018, 232, 2264–2275.

17. Lelevé, A.; McDaniel, T.; Rossa, C. Haptic training simulation. Front. Virtual Real. 2020, 1, 3.

18. Piromchai, P.; Avery, A.; Laopaiboon, M.; Kennedy, G.; O’Leary, S. Virtual reality training for improving the skills needed for performing surgery of the ear, nose or throat. Cochrane Database Syst. Rev. 2015, 9, CD010198.

19. Feng, H.; Li, C.; Liu, J.; Wang, L.; Ma, J.; Li, G.; Gan, L.; Shang, X.; Wu, Z. Virtual reality rehabilitation versus conventional physical therapy for improving balance and gait in Parkinson’s disease patients: A randomized controlled trial. Med. Sci. Monit. Int. Med. J. Exp. Clin. Res. 2019, 25, 4186.

20. Kim, K.-J.; Heo, M. Comparison of virtual reality exercise versus conventional exercise on balance in patients with functional ankle instability: A randomized controlled trial. J. Back Musculoskelet. Rehabil. 2019, 32, 905–911.

21. Akçayır, M.; Akçayır, G. Advantages and challenges associated with augmented reality for education: A systematic review of the literature. Educ. Res. Rev. 2017, 20, 1–11.

22. Khan, T.; Johnston, K.; Ophoff, J. The impact of an augmented reality application on learning motivation of students. Adv. Hum.-Comput. Interact. 2019, 2019, 7208494.

23. De Buck, S.; Maes, F.; Ector, J.; Bogaert, J.; Dymarkowski, S.; Heidbuchel, H.; Suetens, P. An augmented reality system for patient-specific guidance of cardiac catheter ablation procedures. IEEE Trans. Med. Imaging 2005, 24, 1512–1524.

24. Jiang, H.; Xu, S.; State, A.; Feng, F.; Fuchs, H.; Hong, M.; Rozenblit, J. Enhancing a laparoscopy training system with augmented reality visualization. In Proceedings of the 2019 Spring Simulation Conference (SpringSim), Tucson, AZ, USA, 29 April–2 May 2019; pp. 1–12.

25. Satriadi, K.A.; Ens, B.; Cordeil, M.; Jenny, B.; Czauderna, T.; Willett, W. Augmented reality map navigation with freehand gestures. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 593–603.

26. Lederman, S.J. Tactile roughness of grooved surfaces: The touching process and effects of macro- and microsurface structure. Percept. Psychophys. 1974, 16, 385–395.

27. Lederman, S.J.; Taylor, M.M. Fingertip force, surface geometry, and the perception of roughness by active touch. Percept. Psychophys. 1972, 12, 401–408.

28. Klatzky, R.L.; Lederman, S.J. Tactile roughness perception with a rigid link interposed between skin and surface. Percept. Psychophys. 1999, 61, 591–607.

29. Bergmann Tiest, W.M. Tactual perception of material properties. Vis. Res. 2010, 50, 2775–2782.

30. LaMotte, R.H.; Mountcastle, V.B. Capacities of humans and monkeys to discriminate vibratory stimuli of different frequency and amplitude: A correlation between neural events and psychological measurements. J. Neurophysiol. 1975, 38, 539–559.

31. Barrea, A.; Delhaye, B.P.; Lefèvre, P.; Thonnard, J.-L. Perception of partial slips under tangential loading of the fingertip. Sci. Rep. 2018, 8, 7032.

32. Darian-Smith, I.; Davidson, I.; Johnson, K.O. Peripheral neural representation of spatial dimensions of a textured surface moving across the monkey’s finger pad. J. Physiol. 1980, 309, 135–146.

33. Lamb, G.D. Tactile discrimination of textured surfaces: Peripheral neural coding in the monkey. J. Physiol. 1983, 338, 567–587.

34. Lamb, G.D. Tactile discrimination of textured surfaces: Psychophysical performance measurements in humans. J. Physiol. 1983, 338, 551–565.

35. Ergen, E.; Ulkar, B. Proprioception and Coordination; Elsevier Health Sciences: Amsterdam, The Netherlands, 2007; pp. 237–255.

36. Weber, A.I.; Saal, H.P.; Lieber, J.D.; Cheng, J.-W.; Manfredi, L.R.; Dammann, J.F.; Bensmaia, S.J. Spatial and temporal codes mediate the tactile perception of natural textures. Proc. Natl. Acad. Sci. USA 2013, 110, 17107–17112.

37. Lieber, J.D.; Bensmaia, S.J. High-dimensional representation of texture in somatosensory cortex of primates. Proc. Natl. Acad. Sci. USA 2019, 116, 3268–3277.

38. Bensmaia, S. Texture from touch. Scholarpedia 2009, 4, 7956.

39. Okamoto, S.; Nagano, H.; Yamada, Y. Psychophysical dimensions of tactile perception of textures. IEEE Trans. Haptics 2012, 6, 81–93.

40. Bartolozzi, C.; Natale, L.; Nori, F.; Metta, G. Robots with a sense of touch. Nat. Mater. 2016, 15, 921–925.

41. Chortos, A.; Liu, J.; Bao, Z. Pursuing prosthetic electronic skin. Nat. Mater. 2016, 15, 937–950.

42. Kim, K.; Lee, K.R.; Kim, W.H.; Park, K.-B.; Kim, T.-H.; Kim, J.-S.; Pak, J.J. Polymer-based flexible tactile sensor up to 32 × 32 arrays integrated with interconnection terminals. Sens. Actuators A Phys. 2009, 156, 284–291.

43. Hu, Y.; Katragadda, R.B.; Tu, H.; Zheng, Q.; Li, Y.; Xu, Y. Bioinspired 3-D tactile sensor for minimally invasive surgery. J. Microelectromech. Syst. 2010, 19, 1400–1408.

44. Fernandez, R.; Payo, I.; Vazquez, A.S.; Becedas, J. Micro-vibration-based slip detection in tactile force sensors. Sensors 2014, 14, 709–730.

45. Chathuranga, K.; Hirai, S. A bio-mimetic fingertip that detects force and vibration modalities and its application to surface identification. In Proceedings of the 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guangzhou, China, 11–14 December 2012; pp. 575–581.

46. Romano, J.M.; Kuchenbecker, K.J. Creating realistic virtual textures from contact acceleration data. IEEE Trans. Haptics 2011, 5, 109–119.

47. Oddo, C.M.; Controzzi, M.; Beccai, L.; Cipriani, C.; Carrozza, M.C. Roughness encoding for discrimination of surfaces in artificial active-touch. IEEE Trans. Robot. 2011, 27, 522–533.

48. Wei, Y.; Chen, S.; Yuan, X.; Wang, P.; Liu, L. Multiscale wrinkled microstructures for piezoresistive fibers. Adv. Funct. Mater. 2016, 26, 5078–5085.

49. Rongala, U.B.; Mazzoni, A.; Oddo, C.M. Neuromorphic artificial touch for categorization of naturalistic textures. IEEE Trans. Neural Netw. Learn. Syst. 2015, 28, 819–829.

50. Wang, Y.; Chen, J.; Mei, D. Recognition of surface texture with wearable tactile sensor array: A pilot study. Sens. Actuators A Phys. 2020, 307, 111972.

51. Nguyen, H.; Osborn, L.; Iskarous, M.; Shallal, C.; Hunt, C.; Betthauser, J.; Thakor, N. Dynamic texture decoding using a neuromorphic multilayer tactile sensor. In Proceedings of the 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), Cleveland, OH, USA, 17–19 October 2018; pp. 1–4.

52. Cao, Y.; Li, T.; Gu, Y.; Luo, H.; Wang, S.; Zhang, T. Fingerprint-inspired flexible tactile sensor for accurately discerning surface texture. Small 2018, 14, 1703902.

53. Sankar, S.; Balamurugan, D.; Brown, A.; Ding, K.; Xu, X.; Low, J.H.; Yeow, C.H.; Thakor, N. Texture discrimination with a soft biomimetic finger using a flexible neuromorphic tactile sensor array that provides sensory feedback. Soft Robot. 2020, 8, 577–587.

54. Gupta, A.K.; Ghosh, R.; Swaminathan, A.N.; Deverakonda, B.; Ponraj, G.; Soares, A.B.; Thakor, N.V. A neuromorphic approach to tactile texture recognition. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), Cleveland, OH, USA, 17–19 October 2018; pp. 1322–1328.

55. Yi, Z.; Zhang, Y.; Peters, J. Bioinspired tactile sensor for surface roughness discrimination. Sens. Actuators A Phys. 2017, 255, 46–53.

56. Qin, L.; Zhang, Y. Roughness discrimination with bio-inspired tactile sensor manually sliding on polished surfaces. Sens. Actuators A Phys. 2018, 279, 433–441.

57. Yi, Z.; Zhang, Y. Bio-inspired tactile FA-I spiking generation under sinusoidal stimuli. J. Bionic Eng. 2016, 13, 612–621.

58. Birkoben, T.; Winterfeld, H.; Fichtner, S.; Petraru, A.; Kohlstedt, H. A spiking and adapting tactile sensor for neuromorphic applications. Sci. Rep. 2020, 10, 17260.

59. Shaikh, M.O.; Lin, C.-M.; Lee, D.-H.; Chiang, W.-F.; Chen, I.-H.; Chuang, C.-H. Portable pen-like device with miniaturized tactile sensor for quantitative tissue palpation in oral cancer screening. IEEE Sens. J. 2020, 20, 9610–9617.

60. Ward-Cherrier, B.; Pestell, N.; Cramphorn, L.; Winstone, B.; Giannaccini, M.E.; Rossiter, J.; Lepora, N.F. The TacTip family: Soft optical tactile sensors with 3D-printed biomimetic morphologies. Soft Robot. 2018, 5, 216–227.

61. Ward-Cherrier, B.; Pestell, N.; Lepora, N.F. NeuroTac: A neuromorphic optical tactile sensor applied to texture recognition. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Virtual Event, USA, 31 May–31 August 2020; pp. 2654–2660.

62. Fishel, J.A.; Santos, V.J.; Loeb, G.E. A robust micro-vibration sensor for biomimetic fingertips. In Proceedings of the 2008 2nd IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics, Scottsdale, AZ, USA, 19–22 October 2008; pp. 659–663.

63. Fishel, J.A.; Loeb, G.E. Sensing tactile microvibrations with the BioTac—Comparison with human sensitivity. In Proceedings of the 2012 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), Rome, Italy, 24–28 June 2012; pp. 1122–1127.

64. Liang, Q.; Yi, Z.; Hu, Q.; Zhang, Y. Low-cost sensor fusion technique for surface roughness discrimination with optical and piezoelectric sensors. IEEE Sens. J. 2017, 17, 7954–7960.

65. Ke, A.; Huang, J.; Chen, L.; Gao, Z.; Han, J.; Wang, C.; Zhou, J.; He, J. Fingertip tactile sensor with single sensing element based on FSR and PVDF. IEEE Sens. J. 2019, 19, 11100–11112.

66. Boving, K.G. NDE Handbook; Butterworth-Heinemann: Oxford, UK, 1989; pp. 295–301.

67. Wiertlewski, M.; Lozada, J.; Hayward, V. The spatial spectrum of tangential skin displacement can encode tactual texture. IEEE Trans. Robot. 2011, 27, 461–472.

68. Nobuyama, L.; Kurashina, Y.; Kawauchi, K.; Matsui, K.; Takemura, K. Tactile estimation of molded plastic plates based on the estimated impulse responses of mechanoreceptive units. Sensors 2018, 18, 1588.

69. Yin, J.; Aspinall, P.; Santos, V.J.; Posner, J.D. Measuring dynamic shear force and vibration with a bioinspired tactile sensor skin. IEEE Sens. J. 2018, 18, 3544–3553.

70. Lang, J.; Andrews, S. Measurement-based modeling of contact forces and textures for haptic rendering. IEEE Trans. Vis. Comput. Graph. 2010, 17, 380–391.

71. Culbertson, H.; Kuchenbecker, K.J. Ungrounded haptic augmented reality system for displaying roughness and friction. IEEE/ASME Trans. Mechatron. 2017, 22, 1839–1849.

72. Mohd-Yasin, F.; Nagel, D.J.; Korman, C.E. Noise in MEMS. Meas. Sci. Technol. 2009, 21, 012001.

73. Hu, H.; Han, Y.; Song, A.; Chen, S.; Wang, C.; Wang, Z. A finger-shaped tactile sensor for fabric surfaces evaluation by 2-dimensional active sliding touch. Sensors 2014, 14, 4899–4913.

74. Almassri, A.M.; Wan Hasan, W.; Ahmad, S.A.; Ishak, A.J.; Ghazali, A.; Talib, D.; Wada, C. Pressure sensor: State of the art, design, and application for robotic hand. J. Sens. 2015, 2015, 846487.

75. Pacchierotti, C.; Salvietti, G.; Hussain, I.; Meli, L.; Prattichizzo, D. The hRing: A wearable haptic device to avoid occlusions in hand tracking. In Proceedings of the 2016 IEEE Haptics Symposium (HAPTICS), Philadelphia, PA, USA, 8–11 April 2016; pp. 134–139.

76. Vechev, V.; Zarate, J.; Lindlbauer, D.; Hinchet, R.; Shea, H.; Hilliges, O. TacTiles: Dual-mode low-power electromagnetic actuators for rendering continuous contact and spatial haptic patterns in VR. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 312–320.

77. LucidVR. LucidGloves. Available online: https://github.com/LucidVR/lucidgloves (accessed on 10 October 2021).

78. Hosseini, M.; Sengül, A.; Pane, Y.; De Schutter, J.; Bruyninckx, H. Exoten-glove: A force-feedback haptic glove based on twisted string actuation system. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 320–327.

79. Placzek, J.D.; Boyce, D.A. Orthopaedic Physical Therapy Secrets-E-Book; Elsevier Health Sciences: Amsterdam, The Netherlands, 2016.

80. Taylor, J. Proprioception; Elsevier: Amsterdam, The Netherlands, 2009; pp. 1143–1149.

81. Park, J.; Son, B.; Han, I.; Lee, W. Effect of cutaneous feedback on the perception of virtual object weight during manipulation. Sci. Rep. 2020, 10, 1357.

82. Bloom, F.E.; Spitzer, N.C.; Gage, F.; Albright, T. Encyclopedia of Neuroscience; Academic Press: Cambridge, MA, USA, 2009; Volume 1.

83. Westling, G.; Johansson, R.S. Responses in glabrous skin mechanoreceptors during precision grip in humans. Exp. Brain Res. 1987, 66, 128–140.

84. Quek, Z.F.; Schorr, S.B.; Nisky, I.; Provancher, W.R.; Okamura, A.M. Sensory substitution and augmentation using 3-degree-of-freedom skin deformation feedback. IEEE Trans. Haptics 2015, 8, 209–221.

85. Girard, A.; Marchal, M.; Gosselin, F.; Chabrier, A.; Louveau, F.; Lécuyer, A. HapTip: Displaying haptic shear forces at the fingertips for multi-finger interaction in virtual environments. Front. ICT 2016, 3, 6.

86. Choi, I.; Culbertson, H.; Miller, M.R.; Olwal, A.; Follmer, S. Grabity: A wearable haptic interface for simulating weight and grasping in virtual reality. In Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology, Quebec City, QC, Canada, 22–25 October 2017; pp. 119–130.

87. Maisto, M.; Pacchierotti, C.; Chinello, F.; Salvietti, G.; De Luca, A.; Prattichizzo, D. Evaluation of wearable haptic systems for the fingers in augmented reality applications. IEEE Trans. Haptics 2017, 10, 511–522.

88. Prattichizzo, D.; Chinello, F.; Pacchierotti, C.; Malvezzi, M. Towards wearability in fingertip haptics: A 3-DoF wearable device for cutaneous force feedback. IEEE Trans. Haptics 2013, 6, 506–516.

89. Schorr, S.B.; Okamura, A.M. Three-dimensional skin deformation as force substitution: Wearable device design and performance during haptic exploration of virtual environments. IEEE Trans. Haptics 2017, 10, 418–430.

90. CyberGlove Systems LLC. CyberForce. Available online: http://www.cyberglovesystems.com/cyberforce (accessed on 18 October 2021).

91. Force Dimension. Omega.6. Available online: https://www.forcedimension.com/images/doc/specsheet_-_omega6.pdf (accessed on 10 October 2021).

92. Phantom Omni—6 DOF Master Device. Available online: https://delfthapticslab.nl/device/phantom-omni/ (accessed on 10 October 2021).

93. Hinchet, R.; Vechev, V.; Shea, H.; Hilliges, O. DextrES: Wearable haptic feedback for grasping in VR via a thin form-factor electrostatic brake. In Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology, Berlin, Germany, 14–17 October 2018; pp. 901–912.

94. Egawa, M.; Watanabe, T.; Nakamura, T. Development of a wearable haptic device with pneumatic artificial muscles and MR brake. In Proceedings of the 2015 IEEE Virtual Reality (VR), Arles, France, 23–27 March 2015; pp. 173–174.

95. Maestro Glove. Available online: https://contact.ci/#maestro-product (accessed on 10 October 2021).

96. Carpi, F.; Mannini, A.; De Rossi, D. Elastomeric contractile actuators for hand rehabilitation splints. In Proceedings of the Electroactive Polymer Actuators and Devices (EAPAD) 2008, San Diego, CA, USA, 10–13 March 2008; p. 692705.

97. Mistry, M.; Mohajerian, P.; Schaal, S. An exoskeleton robot for human arm movement study. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 4071–4076.

98. Ryu, D.; Moon, K.-W.; Nam, H.; Lee, Y.; Chun, C.; Kang, S.; Song, J.-B. Micro hydraulic system using slim artificial muscles for a wearable haptic glove. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 3028–3033.

99. Polygerinos, P.; Wang, Z.; Galloway, K.C.; Wood, R.J.; Walsh, C.J. Soft robotic glove for combined assistance and at-home rehabilitation. Robot. Auton. Syst. 2015, 73, 135–143.

100. Wehner, M.; Quinlivan, B.; Aubin, P.M.; Martinez-Villalpando, E.; Baumann, M.; Stirling, L.; Holt, K.; Wood, R.; Walsh, C. A lightweight soft exosuit for gait assistance. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 3362–3369.

101. Dexmo. Available online: https://www.dextarobotics.com/about (accessed on 10 October 2021).

102. CyberGrasp. Available online: http://www.cyberglovesystems.com/cybergrasp (accessed on 18 October 2021).

103. SenseGlove. Available online: https://www.senseglove.com/about-us/ (accessed on 10 October 2021).

104. Jadhav, S.; Kannanda, V.; Kang, B.; Tolley, M.T.; Schulze, J.P. Soft robotic glove for kinesthetic haptic feedback in virtual reality environments. Electron. Imaging 2017, 2017, 19–24.

105. Brancadoro, M.; Manti, M.; Tognarelli, S.; Cianchetti, M. Fiber jamming transition as a stiffening mechanism for soft robotics. Soft Robot. 2020, 7, 663–674.

106. Jadhav, S.; Majit, M.R.A.; Shih, B.; Schulze, J.P.; Tolley, M.T. Variable Stiffness Devices Using Fiber Jamming for Application in Soft Robotics and Wearable Haptics. Soft Robot. 2021, 9, 173–186.

107. Massie, T.H.; Salisbury, J.K. The PHANTOM haptic interface: A device for probing virtual objects. In Proceedings of the ASME Winter Annual Meeting, Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, Chicago, IL, USA, 13–18 November 1994; pp. 295–300.

108. Sato, M.; Hirata, Y.; Kawarada, H. Space interface device for artificial reality—SPIDAR. Syst. Comput. Jpn. 1992, 23, 44–54.

109. Van der Linde, R.Q.; Lammertse, P.; Frederiksen, E.; Ruiter, B. The HapticMaster, a new high-performance haptic interface. In Proceedings of the Eurohaptics, Madrid, Spain, 10–13 June 2002; pp. 1–5.

110. Leuschke, R.; Kurihara, E.K.; Dosher, J.; Hannaford, B. High fidelity multi finger haptic display. In Proceedings of the First Joint Eurohaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, World Haptics Conference, Pisa, Italy, 18–20 March 2005; pp. 606–608.

111. Yoon, J.; Ryu, J.; Burdea, G. Design and analysis of a novel virtual walking machine. In Proceedings of the 11th Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, HAPTICS 2003, Los Angeles, CA, USA, 22–23 March 2003; pp. 374–381.

112. Ding, Y.; Sivak, M.; Weinberg, B.; Mavroidis, C.; Holden, M.K. NUVABAT: Northeastern University virtual ankle and balance trainer. In Proceedings of the 2010 IEEE Haptics Symposium, Waltham, MA, USA, 25–26 March 2010; pp. 509–514.

113. Tobergte, A.; Helmer, P.; Hagn, U.; Rouiller, P.; Thielmann, S.; Grange, S.; Albu-Schäffer, A.; Conti, F.; Hirzinger, G. The sigma.7 haptic interface for MiroSurge: A new bi-manual surgical console. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 3023–3030.

114. Grange, S.; Conti, F.; Rouiller, P.; Helmer, P.; Baur, C. The Delta Haptic Device; Ecole Polytechnique Fédérale de Lausanne: Lausanne, Switzerland, 2001.

115. Vulliez, M.; Zeghloul, S.; Khatib, O. Design strategy and issues of the Delthaptic, a new 6-DOF parallel haptic device. Mech. Mach. Theory 2018, 128, 395–411.

116. Schiele, A.; Letier, P.; Van Der Linde, R.; Van Der Helm, F. Bowden cable actuator for force-feedback exoskeletons. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 3599–3604.

117. Herbin, P.; Pajor, M. Human-robot cooperative control system based on serial elastic actuator Bowden cable drive in ExoArm 7-DOF upper extremity exoskeleton. Mech. Mach. Theory 2021, 163, 104372.

118. Das, S.; Kishishita, Y.; Tsuji, T.; Lowell, C.; Ogawa, K.; Kurita, Y. ForceHand glove: A wearable force-feedback glove with pneumatic artificial muscles (PAMs). IEEE Robot. Autom. Lett. 2018, 3, 2416–2423.

119. Takahashi, N.; Takahashi, H.; Koike, H. Soft exoskeleton glove enabling force feedback for human-like finger posture control with 20 degrees of freedom. In Proceedings of the 2019 IEEE World Haptics Conference (WHC), Tokyo, Japan, 9–12 July 2019; pp. 217–222.

120. Tanjung, K.; Nainggolan, F.; Siregar, B.; Panjaitan, S.; Fahmi, F. The use of virtual reality controllers and comparison between Vive, Leap Motion and Senso gloves applied in the anatomy learning system. J. Phys. Conf. Ser. 2020, 1542, 012026.

121. Ooka, T.; Fujita, K. Virtual object manipulation system with substitutive display of tangential force and slip by control of vibrotactile phantom sensation. In Proceedings of the 2010 IEEE Haptics Symposium, Waltham, MA, USA, 25–26 March 2010; pp. 215–218.

122. Nakagawa, R.; Fujita, K. Wearable 3DOF Substitutive Force Display Device Based on Frictional Vibrotactile Phantom Sensation. In Haptic Interaction; Springer: Berlin/Heidelberg, Germany, 2015; pp. 157–159.

123. Ji, X.; Liu, X.; Cacucciolo, V.; Civet, Y.; El Haitami, A.; Cantin, S.; Perriard, Y.; Shea, H. Untethered feel-through haptics using 18-µm thick dielectric elastomer actuators. Adv. Funct. Mater. 2020, 31, 2006639.

124. Ito, K.; Okamoto, S.; Yamada, Y.; Kajimoto, H. Tactile texture display with vibrotactile and electrostatic friction stimuli mixed at appropriate ratio presents better roughness textures. ACM Trans. Appl. Percept. (TAP) 2019, 16, 1–15.

125. Saga, S.; Kurogi, J. Sensing and Rendering Method of 2-Dimensional Haptic Texture. Sensors 2021, 21, 5523.

126. Solazzi, M.; Frisoli, A.; Bergamasco, M. Design of a novel finger haptic interface for contact and orientation display. In Proceedings of the 2010 IEEE Haptics Symposium, Waltham, MA, USA, 25–26 March 2010; pp. 129–132.

127. Chinello, F.; Malvezzi, M.; Pacchierotti, C.; Prattichizzo, D. Design and development of a 3RRS wearable fingertip cutaneous device. In Proceedings of the 2015 IEEE International Conference on Advanced Intelligent Mechatronics (AIM), Busan, Korea, 7–11 July 2015; pp. 293–298.

128. Kellaris, N.; Gopaluni Venkata, V.; Smith, G.M.; Mitchell, S.K.; Keplinger, C. Peano-HASEL actuators: Muscle-mimetic, electrohydraulic transducers that linearly contract on activation. Sci. Robot. 2018, 3, eaar3276.

129. Leroy, E.; Hinchet, R.; Shea, H. Multimode hydraulically amplified electrostatic actuators for wearable haptics. Adv. Mater. 2020, 32, 2002564.

130. Pelrine, R.; Kornbluh, R.; Pei, Q.; Joseph, J. High-speed electrically actuated elastomers with strain greater than 100%. Science 2000, 287, 836–839.

131. Mazursky, A.; Koo, J.-H.; Yang, T.-H. Design, modeling, and evaluation of a slim haptic actuator based on electrorheological fluid. J. Intell. Mater. Syst. Struct. 2019, 30, 2521–2533.

132. Leonardis, D.; Solazzi, M.; Bortone, I.; Frisoli, A. A wearable fingertip haptic device with 3 DoF asymmetric 3-RSR kinematics. In Proceedings of the 2015 IEEE World Haptics Conference (WHC), Evanston, IL, USA, 22–26 June 2015; pp. 388–393.

133. Minamizawa, K.; Fukamachi, S.; Kajimoto, H.; Kawakami, N.; Tachi, S. Gravity Grabber: Wearable haptic display to present virtual mass sensation. In ACM SIGGRAPH 2007 Emerging Technologies; ACM SIGGRAPH: San Diego, CA, USA, 2007; p. 8-es.

134. Tan, D.W.; Schiefer, M.A.; Keith, M.W.; Anderson, J.R.; Tyler, J.; Tyler, D.J. A neural interface provides long-term stable natural touch perception. Sci. Transl. Med. 2014, 6, 257ra138.

135. Ortiz-Catalan, M.; Håkansson, B.; Brånemark, R. An osseointegrated human-machine gateway for long-term sensory feedback and motor control of artificial limbs. Sci. Transl. Med. 2014, 6, 257re6.

136. Davis, T.S.; Wark, H.A.; Hutchinson, D.; Warren, D.J.; O’Neill, K.; Scheinblum, T.; Clark, G.A.; Normann, R.A.; Greger, B. Restoring motor control and sensory feedback in people with upper extremity amputations using arrays of 96 microelectrodes implanted in the median and ulnar nerves. J. Neural Eng. 2016, 13, 036001.

137. Farina, D.; Aszmann, O. Bionic limbs: Clinical reality and academic promises. Sci. Transl. Med. 2014, 6, 257ps12.

138. Jones, I.; Johnson, M.I. Transcutaneous electrical nerve stimulation. Contin. Educ. Anaesth. Crit. Care Pain 2009, 9, 130–135.

139. Dupan, S.S.; McNeill, Z.; Brunton, E.; Nazarpour, K. Temporal modulation of transcutaneous electrical nerve stimulation influences sensory perception. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 3885–3888.

140. Vargas, L.; Huang, H.; Zhu, Y.; Hu, X. Object shape and surface topology recognition using tactile feedback evoked through transcutaneous nerve stimulation. IEEE Trans. Haptics 2020, 13, 152–158.

141. D’Anna, E.; Petrini, F.M.; Artoni, F.; Popovic, I.; Simanić, I.; Raspopovic, S.; Micera, S. A somatotopic bidirectional hand prosthesis with transcutaneous electrical nerve stimulation based sensory feedback. Sci. Rep. 2017, 7, 10930.

142. Schweisfurth, M.A.; Markovic, M.; Dosen, S.; Teich, F.; Graimann, B.; Farina, D. Electrotactile EMG feedback improves the control of prosthesis grasping force. J. Neural Eng. 2016, 13, 056010.

143. Garenfeld, M.A.; Mortensen, C.K.; Strbac, M.; Dideriksen, J.L.; Dosen, S. Amplitude versus spatially modulated electrotactile feedback for myoelectric control of two degrees of freedom. J. Neural Eng. 2020, 17, 046034.

144. Isaković, M.; Belić, M.; Štrbac, M.; Popović, I.; Došen, S.; Farina, D.; Keller, T. Electrotactile feedback improves performance and facilitates learning in the routine grasping task. Eur. J. Transl. Myol. 2016, 26, 6090.

145. Witteveen, H.J.; Droog, E.A.; Rietman, J.S.; Veltink, P.H. Vibro- and electrotactile user feedback on hand opening for myoelectric forearm prostheses. IEEE Trans. Biomed. Eng. 2012, 59, 2219–2226.

146. Svensson, P.; Antfolk, C.; Björkman, A.; Malešević, N. Electrotactile feedback for the discrimination of different surface textures using a microphone. Sensors 2021, 21, 3384.

147. Jiang, C.; Chen, Y.; Fan, M.; Wang, L.; Shen, L.; Li, N.; Sun, W.; Zhang, Y.; Tian, F.; Han, T. Douleur: Creating Pain Sensation with Chemical Stimulant to Enhance User Experience in Virtual Reality. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 1–26.

148. Lu, J.; Liu, Z.; Brooks, J.; Lopes, P. Chemical Haptics: Rendering Haptic Sensations via Topical Stimulants. In Proceedings of the 34th Annual ACM Symposium on User Interface Software and Technology, Virtual Event, USA, 10–14 October 2021; pp. 239–257.

| Sensing Modality | Example Designs | Advantages | Disadvantages |
|---|---|---|---|
| Strain Gauge | [42,43,44] | Low cost; versatile; good sensing range | Prone to errors from moisture; requires supplementary devices to amplify data; difficult to assemble |
| Accelerometer | [45,46] | Good accuracy; versatile; high precision | Noise |
| Piezoresistive | [47–54] | High accuracy; high spatial resolution; small and light | High power |
| Piezoelectric | [55–59] | High sensing range; high precision | Poor spatial resolution; limited to dynamic touch scenarios |
| Optical | [60,61] | High accuracy; high precision; good spatial resolution | Bulky |
| Multimodal | [62–65] | Compensates for other sensor limitations | High cost; difficult to manufacture |
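Two of the trade-offs listed in the table can be made concrete with a little arithmetic. The Python sketch below rests on idealised textbook models that are assumptions, not methods taken from the review: a strain gauge read through a quarter-bridge Wheatstone circuit, using the small-strain approximation V_out ≈ V_ex · GF · ε / 4, and a piezoelectric sensor whose readout is treated as a first-order high-pass filter with time constant `tau`. All numeric values (5 V excitation, gauge factor 2, 500 µε, 0.1 V/N sensitivity, 0.5 s time constant) and the function names are hypothetical and chosen only for illustration. Under these assumptions the bridge delivers roughly 1.25 mV, which helps explain why strain gauges need supplementary amplification, while the piezoelectric output decays toward zero under a constant force, which helps explain why such sensors are limited to dynamic touch.

```python
import math


def quarter_bridge_output(v_excitation: float, gauge_factor: float, strain: float) -> float:
    """Approximate output voltage (V) of a quarter-bridge Wheatstone circuit.

    Hypothetical helper using the small-strain approximation
    V_out ~= V_ex * GF * strain / 4.
    """
    return v_excitation * gauge_factor * strain / 4.0


def piezo_step_response(force_step: float, sensitivity: float, tau: float, t: float) -> float:
    """Output voltage (V) of an idealised piezoelectric sensor t seconds after a force step.

    Hypothetical helper: assumes the sensor and its readout act as a first-order
    high-pass filter with time constant tau (s). The output jumps to
    sensitivity * force_step at t = 0 and then decays exponentially even
    though the force is still applied.
    """
    return sensitivity * force_step * math.exp(-t / tau)


if __name__ == "__main__":
    # Assumed illustrative values: 5 V excitation, gauge factor 2
    # (typical of metal-foil gauges), 500 microstrain.
    v_bridge = quarter_bridge_output(5.0, 2.0, 500e-6)
    print(f"Strain gauge bridge output: {v_bridge * 1e3:.2f} mV")

    # Assumed piezo sensor: 0.1 V/N sensitivity, 0.5 s time constant,
    # with a constant 1 N force held from t = 0 onward.
    for t in (0.0, 0.5, 2.5):
        v_piezo = piezo_step_response(1.0, 0.1, 0.5, t)
        print(f"Piezo output at t = {t:.1f} s: {v_piezo * 1e3:.1f} mV")
```

Raising `tau` in this idealised model slows the decay but never removes it, so the piezoelectric output still cannot report a truly static load; the strain gauge, by contrast, gives a steady output that only needs amplification.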

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


See, A.R.; Choco, J.A.G.; Chandramohan, K. Touch, Texture and Haptic Feedback: A Review on How We Feel the World around Us. Appl. Sci. 2022, 12, 4686. https://doi.org/10.3390/app12094686
