Dynamic Viewpoint Selection for Sweet Pepper Maturity Classification Using Online Economic Decisions

Abstract

1. Introduction

1.1. Maturity Classification

1.2. Dynamic Viewpoint Selection

1.3. Contributions of This Paper

2. Methods

2.1. Data Collection

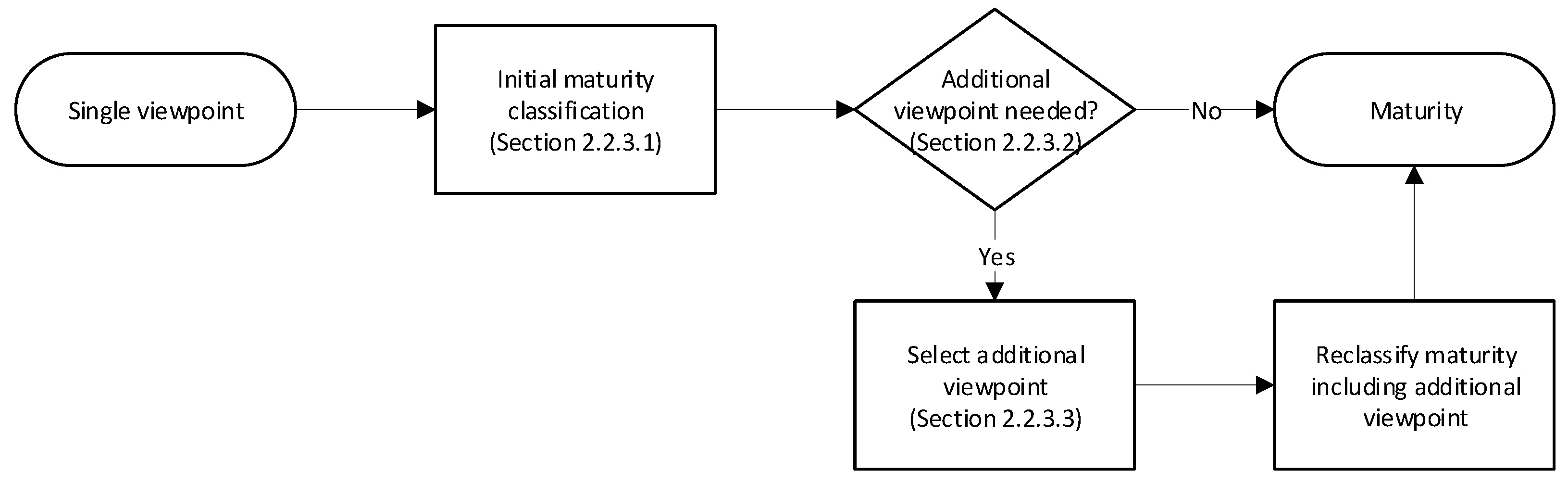

2.2. Active Maturity Classification

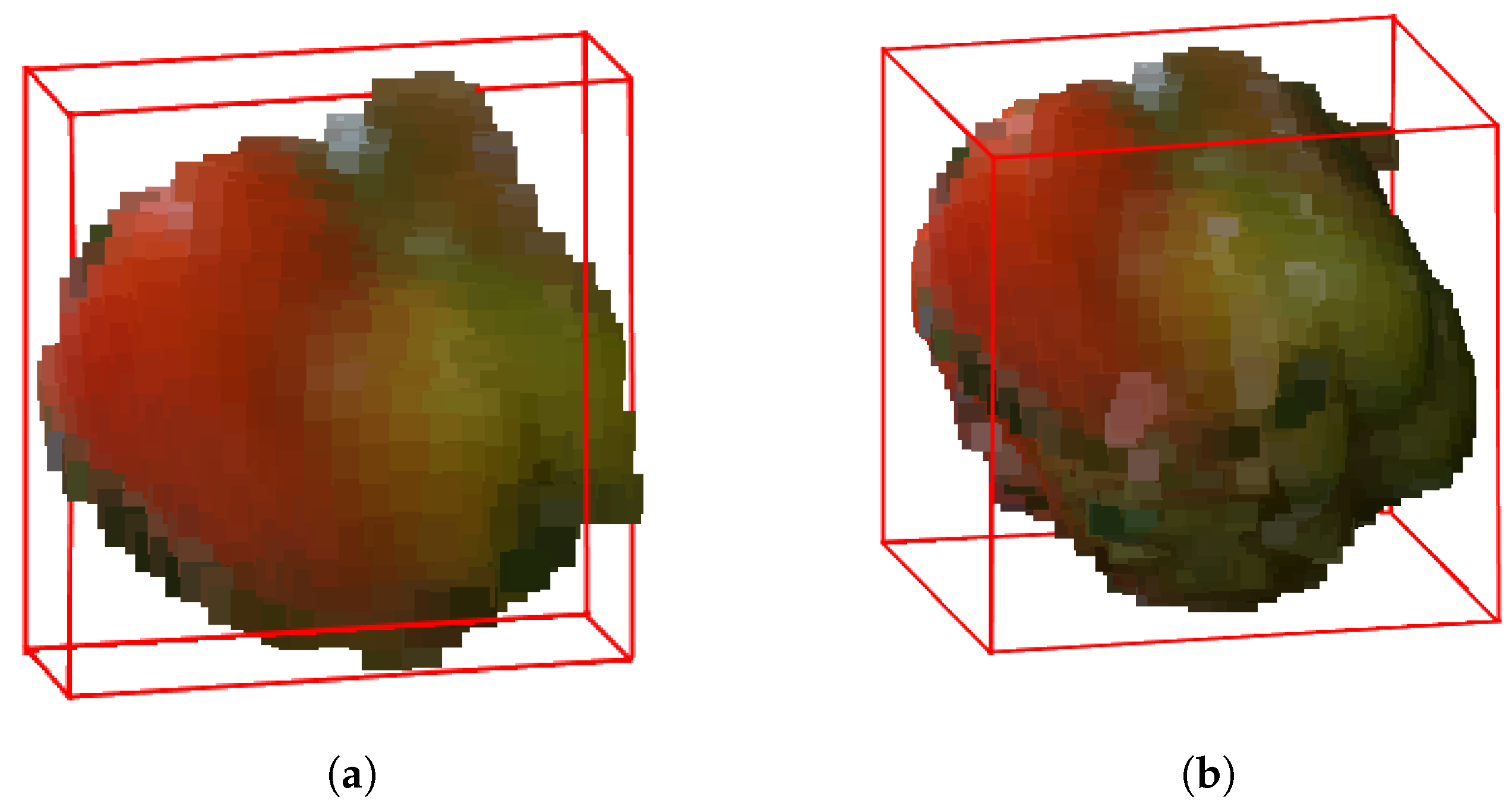

2.2.1. 3D Point-Cloud Representation

2.2.2. Color Feature Extraction

2.2.3. Dynamic Viewpoint Selection and Maturity Classification

2.2.3.1. Initial Maturity Classification

2.2.3.2. Additional Viewpoint Decision

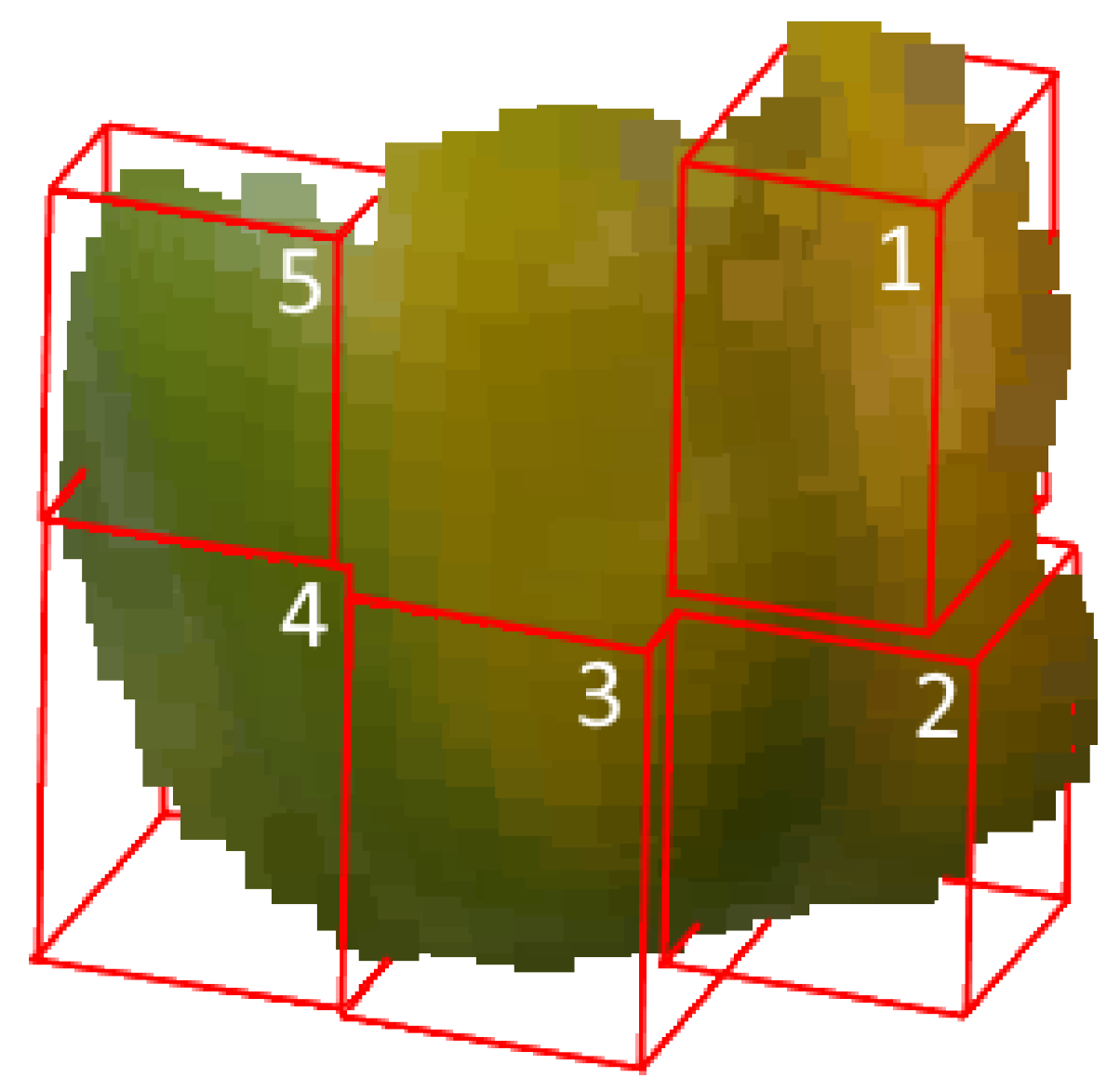
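The text of this subsection is not included here, so the authors' decision rule is not reproduced. The sketch below is only a hedged illustration of the kind of online economic decision named in the title. It is a generic expected-cost rule: take another viewpoint only if the expected reduction in misclassification cost exceeds the cost of acquiring that view. The function names, the false-positive and false-negative cost parameters, and the `expected_cost_after_view` estimate are hypothetical assumptions, not the paper's formulation.

```python
def expected_cost_of_deciding(p_mature: float, cost_fp: float, cost_fn: float) -> float:
    """Expected misclassification cost if the robot decides now (hypothetical).

    Predicting 'mature' risks a false positive with probability 1 - p_mature;
    predicting 'immature' risks a false negative with probability p_mature.
    The cheaper of the two predictions is assumed to be chosen.
    """
    return min((1.0 - p_mature) * cost_fp, p_mature * cost_fn)


def take_additional_viewpoint(
    p_mature: float,
    cost_fp: float,
    cost_fn: float,
    expected_cost_after_view: float,
    cost_of_view: float,
) -> bool:
    """Hypothetical rule: request another view only if its expected saving in
    misclassification cost exceeds the cost of acquiring it.

    `expected_cost_after_view` is an assumed input; estimating it would require
    a model of how much an extra viewpoint improves the classifier.
    """
    saving = expected_cost_of_deciding(p_mature, cost_fp, cost_fn) - expected_cost_after_view
    return saving > cost_of_view


# Hypothetical numbers: EUR 0.10 per misclassification, EUR 0.002 per extra view.
print(take_additional_viewpoint(0.55, 0.10, 0.10, 0.01, 0.002))   # True
print(take_additional_viewpoint(0.98, 0.10, 0.10, 0.001, 0.002))  # False
```

With these illustrative values, an uncertain pepper (`p_mature = 0.55`) triggers another view, while a confidently classified one (`p_mature = 0.98`) does not, because the possible saving is smaller than the sensing cost.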

2.2.3.3. Additional Viewpoint Selection

2.3. Evaluation

2.3.1. Performance Measures

2.3.2. Analyses

3. Results

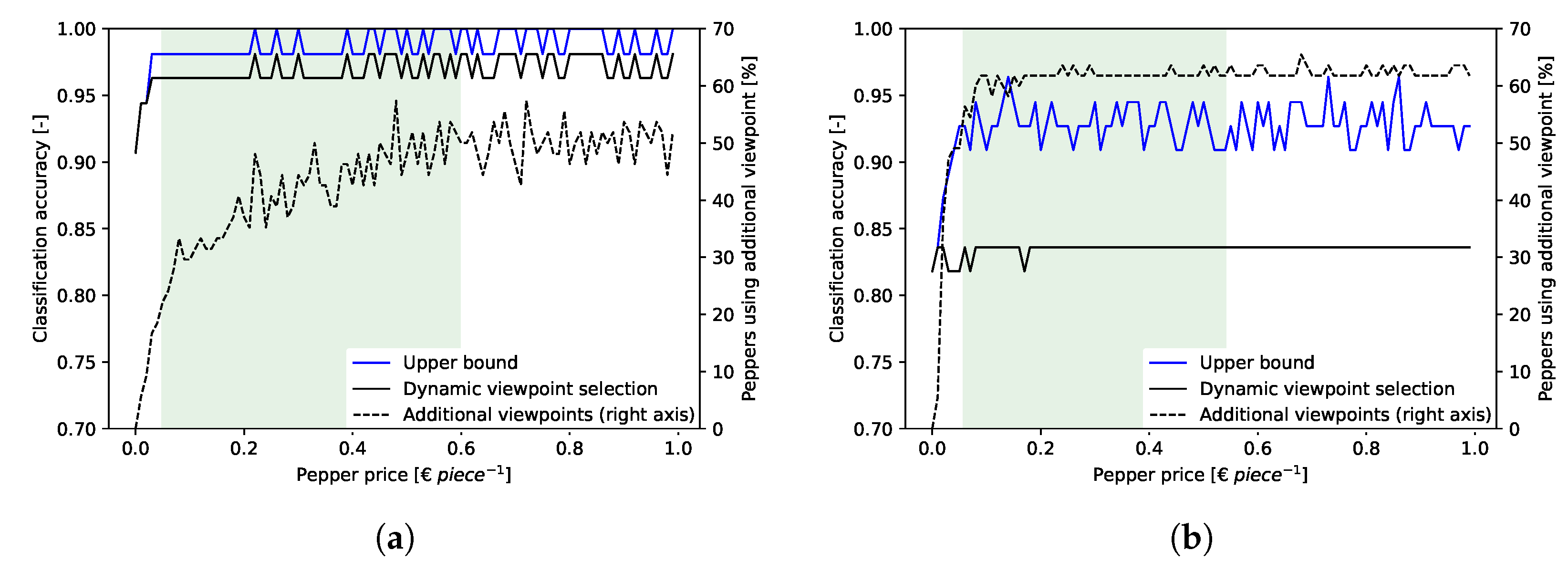

3.1. Dynamic Viewpoint Selection

3.2. Sensitivity Analysis

3.3. Repeatability

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Class | Classification | % Colored | Number of Red Peppers | Number of Yellow Peppers |
|---|---|---|---|---|
| 2 | Immature | 5–50% | 23 | 23 |
| 3 | Mature | 50–95% | 24 | 26 |
| 4 | Mature | 95–100% | 22 | 21 |
| Total | | | 69 | 70 |
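The class definitions in the table above can be expressed as a lookup from the percentage of colored surface to a maturity class. This is a minimal sketch: the table does not say which class a boundary value such as exactly 50% belongs to, so the sketch assumes lower bounds are inclusive and upper bounds exclusive, with 100% assigned to class 4. Values below 5% are not covered by the table and return `None`.

```python
from typing import Optional, Tuple

# Class boundaries copied from the table above: (class id, label, lower %, upper %).
# Assumption: lower bounds are inclusive and upper bounds exclusive,
# except for class 4, whose upper bound (100%) is inclusive.
MATURITY_CLASSES = [
    (2, "Immature", 5.0, 50.0),
    (3, "Mature", 50.0, 95.0),
    (4, "Mature", 95.0, 100.0),
]


def maturity_class(percent_colored: float) -> Optional[Tuple[int, str]]:
    """Map the percentage of colored surface to (class, maturity label).

    Returns None for values outside the 5-100% range covered by the table.
    """
    for class_id, label, low, high in MATURITY_CLASSES:
        in_range = low <= percent_colored < high or (
            class_id == 4 and percent_colored == high
        )
        if in_range:
            return class_id, label
    return None


print(maturity_class(30))   # (2, 'Immature')
print(maturity_class(80))   # (3, 'Mature')
print(maturity_class(100))  # (4, 'Mature')
```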

| | | Actual: Mature | Actual: Immature |
|---|---|---|---|
| Predicted | Mature | TP | FP |
| | Immature | FN | TN |
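With mature as the positive class, as in the confusion matrix above, the performance measures reported in the following table can be computed from the four counts. This sketch assumes CA, TPR, and TNR carry their standard meanings (classification accuracy, true positive rate, true negative rate), which this excerpt does not spell out; the counts in the usage lines are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Confusion-matrix counts with 'mature' as the positive class."""

    tp: int  # predicted mature, actually mature
    fp: int  # predicted mature, actually immature
    fn: int  # predicted immature, actually mature
    tn: int  # predicted immature, actually immature

    def classification_accuracy(self) -> float:
        # CA: fraction of all peppers classified correctly
        return (self.tp + self.tn) / (self.tp + self.fp + self.fn + self.tn)

    def true_positive_rate(self) -> float:
        # TPR: fraction of mature peppers recognized as mature
        return self.tp / (self.tp + self.fn)

    def true_negative_rate(self) -> float:
        # TNR: fraction of immature peppers recognized as immature
        return self.tn / (self.tn + self.fp)


cm = ConfusionMatrix(tp=40, fp=5, fn=10, tn=45)  # hypothetical counts
print(round(cm.classification_accuracy(), 3))  # 0.85
print(cm.true_positive_rate())                 # 0.8
print(cm.true_negative_rate())                 # 0.9
```

Because CA pools both classes, it is weighted by how many mature and immature peppers are in the test set, which is why TPR and TNR are reported alongside it.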

| Method | Class | Red: CA | Red: TPR | Red: TNR | Yellow: CA | Yellow: TPR | Yellow: TNR |
|---|---|---|---|---|---|---|---|
| Single viewpoint | All | 0.907 | 0.944 | 0.833 | 0.800 | 0.892 | 0.611 |
| Dynamic viewpoint selection | 2 | 0.944 | | | 0.778 | | |
| | 3 | 0.947 | | | 0.762 | | |
| | 4 | 1.000 | | | 1.000 | | |
| | All | 0.963 | 0.972 | 0.944 | 0.836 | 0.865 | 0.778 |
| Random viewpoint | All | 0.944 | 0.972 | 0.889 | 0.855 | 0.892 | 0.778 |
| All viewpoints | All | 0.963 | 0.944 | 1.000 | 0.818 | 0.838 | 0.778 |
| Upper bound | All | 0.981 | 1.000 | 0.944 | 0.945 | 1.000 | 0.833 |

| Measure | Red: Single Viewpoint | Red: Dynamic Viewpoint Selection | Red: All Viewpoints | Red: Upper Bound | Yellow: Single Viewpoint | Yellow: Dynamic Viewpoint Selection | Yellow: All Viewpoints | Yellow: Upper Bound |
|---|---|---|---|---|---|---|---|---|
| Peppers using additional viewpoint(s) (%) | 0 | 39 | 100 | 39 | 0 | 62 | 100 | 62 |
| Average additional cycle time (s) | 0 | 3.14 | 44.08 | 2.75 | 0 | 5.06 | 44.08 | 4.53 |
| Misclassification cost (EUR) | 0.98 | 0.39 | 0.39 | 0.20 | 2.22 | 1.82 | 2.02 | 0.61 |
| Additional sensing cost (EUR) | 0.00 | 0.08 | 1.13 | 0.07 | 0.00 | 0.13 | 1.15 | 0.12 |
| Total cost (EUR) | 0.98 | 0.47 | 1.53 | 0.27 | 2.22 | 1.95 | 3.17 | 0.73 |
| Cost per pepper (EUR/pepper) | 0.018 | 0.009 | 0.028 | 0.005 | 0.040 | 0.035 | 0.058 | 0.013 |
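Each total in the cost table is the sum of the misclassification and additional-sensing costs. The sketch below recomputes those totals from the table's components and expresses each method's total relative to the single-viewpoint baseline. The dictionary layout and the function names are illustrative assumptions; the numbers are copied from the table.

```python
# Cost components (EUR) copied from the table above:
# (misclassification cost, additional sensing cost)
COSTS = {
    "red": {
        "single": (0.98, 0.00),
        "dynamic": (0.39, 0.08),
        "all": (0.39, 1.13),
        "upper_bound": (0.20, 0.07),
    },
    "yellow": {
        "single": (2.22, 0.00),
        "dynamic": (1.82, 0.13),
        "all": (2.02, 1.15),
        "upper_bound": (0.61, 0.12),
    },
}


def total_cost(misclassification: float, sensing: float) -> float:
    """Total cost = misclassification cost + additional sensing cost."""
    return misclassification + sensing


def reduction_vs_single(color: str, method: str) -> float:
    """Relative total-cost reduction of `method` versus a single viewpoint.

    Positive values mean cheaper than the baseline; negative values mean costlier.
    """
    baseline = total_cost(*COSTS[color]["single"])
    other = total_cost(*COSTS[color][method])
    return (baseline - other) / baseline


for color in COSTS:
    for method, parts in COSTS[color].items():
        print(f"{color:6s} {method:11s} total EUR {total_cost(*parts):.2f} "
              f"change vs single {reduction_vs_single(color, method):+.0%}")
```

Computed this way, dynamic viewpoint selection lowers the total cost by about 52% for red peppers (EUR 0.98 to EUR 0.47) and about 12% for yellow peppers (EUR 2.22 to EUR 1.95), while acquiring all viewpoints raises it for both colors. Because the components are rounded to the cent, a recomputed total can differ from the table by EUR 0.01 (0.39 + 1.13 = 1.52 versus the reported 1.53).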

| Pepper Color | Pepper Class | Single Viewpoint: CCF | Single Viewpoint: MCA | Dynamic Viewpoint Selection: CCF | Dynamic Viewpoint Selection: MCA | All Viewpoints: CCF | All Viewpoints: MCA | Upper Bound: CCF | Upper Bound: MCA |
|---|---|---|---|---|---|---|---|---|---|
| Red | 2 | 0.42 | 0.71 | 0.57 | 0.86 | 1.00 | 0.86 | 0.86 | 0.86 |
| | 3 | 0.86 | 1.00 | 0.71 | 0.86 | 0.71 | 0.86 | 0.86 | 1.00 |
| | 4 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| | All | 0.71 | 0.88 | 0.71 | 0.88 | 0.88 | 0.88 | 0.94 | 0.94 |
| Yellow | 2 | 0.57 | 0.57 | 0.43 | 0.71 | 0.71 | 0.86 | 1.00 | 1.00 |
| | 3 | 0.71 | 1.00 | 0.43 | 1.00 | 0.57 | 0.86 | 1.00 | 1.00 |
| | 4 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| | All | 0.71 | 0.83 | 0.52 | 0.88 | 0.71 | 0.88 | 1.00 | 1.00 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


van Essen, R.; Harel, B.; Kootstra, G.; Edan, Y. Dynamic Viewpoint Selection for Sweet Pepper Maturity Classification Using Online Economic Decisions. Appl. Sci. 2022, 12, 4414. https://doi.org/10.3390/app12094414
