Abstract

Slope hazards threaten buildings as well as people’s lives and property. Real-time, dynamic monitoring of slope deformation with digital image technology is an effective way to prevent slope hazards. In this study, a non-metric digital camera is calibrated with the Zhang Zhengyou calibration method and then used to monitor a simulated slope marked with ring landmarks. A sub-pixel algorithm identifies the center coordinates of the landmarks, and a proportional coefficient is obtained from the relationship between the landmark image coordinates and their actual distances. Combining the measured displacement of the camera position with these quantities yields the displacement trend of the slope quickly and accurately. Outdoor experiments show that the method achieves millimeter-level monitoring accuracy, which meets the demands of slope monitoring.

1. Introduction

The collapse and slide of a mountain slope is a common natural disaster that threatens the safety of buildings and people’s lives and property. Landslides cause the deaths of thousands of people every year, and the direct economic loss exceeds hundreds of millions of USD. Therefore, deformation monitoring and stability analysis of the slope are very important. Currently, the commonly used slope displacement monitoring methods include laser ranging, GPS observation, remote sensing, and close-range photogrammetry [1,2,3,4].

In the traditional close-range photogrammetry method, landmarks are set on the slope and images are collected with a camera at different positions. A total station is used to measure the control points, and software is used for internal processing such as aerial triangulation. Comparing the results before and after deformation yields the deformation within the corresponding period. Although the accuracy of slope displacement monitoring by close-range photogrammetry may be lower than that of some traditional measurement methods [5], it fully meets normal monitoring requirements. The rapid development of close-range photogrammetry has enabled applications in aerospace, archaeology, architecture, bridges, and other fields [6,7,8,9]. Combining GPS with close-range photogrammetry exploits the advantages of GPS measurement and solves some positioning problems in close-range photogrammetry [10,11]. Because control points are inconvenient to measure, some researchers have used photogrammetry without control points, relying on the physical parameters of the camera, to measure the size of target objects [12]. Several researchers used close-range photogrammetry to generate digital elevation models (DEMs) and obtained the movement of the slope area by subtracting multi-period DEMs [13,14]. Kim et al. [15] and other researchers combined close-range photogrammetry with the block analysis method to analyze rock mass failure on a slope DEM. Close-range photogrammetry can also produce digital terrain models (DTMs) and digital orthophoto maps (DOMs) and obtain 3D point cloud data; Stylianidis et al. [16] used these products to monitor slopes. Zhang et al. [17] combined close-range photogrammetry with surface modeling to predict landslide trends. Without any control points, Ohnishi et al. [18] used the Moore–Penrose generalized inverse to solve the equations derived from the geometric relationship among the camera, the measuring points, and the captured image, obtaining the distances between measuring points installed on the target slope; changes in these distances enabled dynamic monitoring of the slope. Close-range photogrammetry is thus widely used in slope displacement monitoring. However, it also has shortcomings: data processing is cumbersome, a high degree of specialization is required, it is vulnerable to terrain restrictions, and the hardware is expensive.

Using the white-light speckle measurement principle, Lin [19] used a non-metric digital camera and verified its performance in building deformation monitoring; however, the effect of camera calibration on the results was not considered. Luo et al. [20] wrote a program that calls the camera’s Software Development Kit (SDK) to control photography automatically, and used a GPS wireless network for data exchange and remote control of the camera, enabling real-time monitoring of slope displacement. Nevertheless, the real-time displacement of the camera itself was not considered, so this method can only monitor slope displacement when the camera position is fixed.

To address the shortcomings of the above methods [19,20], and to overcome the high degree of specialization and tedious internal processing of close-range photogrammetry [21], in this study a non-metric digital camera is calibrated with the Zhang Zhengyou calibration method. The center coordinates of the ring marks are extracted with a sub-pixel method implemented in our own image-processing and recognition program. Physical and geometric information on the measured object acquired by the camera is then used to obtain the displacement of the slope in all three dimensions. The method has practical application value, strong universality, and low cost, and it provides timely measurements.

2. Materials

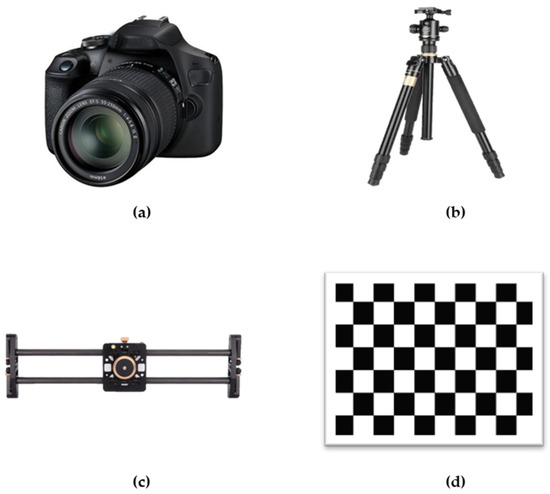

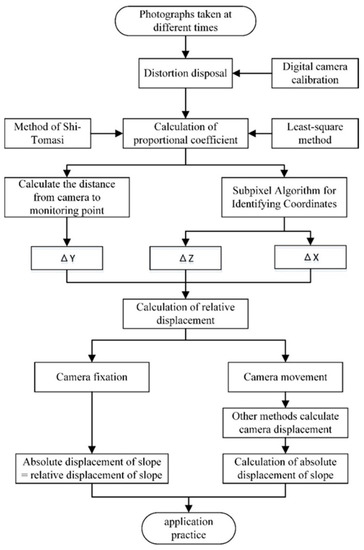

The monitoring system comprises a Canon 1500D digital camera, a camera tripod, a slide rail, a Zhang Zhengyou calibration board, monitoring landmarks, and digital image processing and related software (Figure 1). The parameters of the digital camera are listed in Table 1. In the monitoring process, the digital camera is first calibrated with the Zhang Zhengyou method to obtain its distortion parameters. Artificial landmarks are placed on the simulated slope to be measured, so that the displacement of the slope is reflected by their displacement. The proportional coefficient is obtained from the object–image relationship using the actual distances between the landmarks. The camera is placed at a known fixed point facing the simulated slope and is programmed to capture images of the slope at set intervals. MATLAB and Python are used to process the images and identify the landmarks. The displacement of the camera, measured by other methods, is taken into account, and the actual displacement of the slope is calculated relative to the first image. If necessary, the imaging interval can be shortened or a video captured to achieve real-time monitoring. Figure 2 shows the flow chart of the experiment.

Figure 1.

Experimental materials: (a) Canon 1500D digital camera; (b) camera tripod; (c) slide rail; (d) chessboard.

Table 1.

The parameters of the camera.

Figure 2.

Flow chart illustrating the experiment.

3. Monitoring Methods

3.1. Digital Camera Calibration

Due to inherent imperfections in the digital camera lens, captured images will exhibit various forms of distortion. The purpose of the digital camera calibration is to obtain internal, external, and distortion parameters of the camera for correction. Four coordinate systems are involved in the camera imaging system, namely, the pixel coordinate system, image coordinate system, camera coordinate system, and world coordinate system.

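The following formula expresses the relationship between these coordinate systems [22]:

$$
s\begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = A\,[R \mid t]\begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix},\qquad
A = \begin{bmatrix} \alpha & \gamma & u_0 \\ 0 & \beta & v_0 \\ 0 & 0 & 1 \end{bmatrix} \tag{1}
$$

where $(u, v)$ is the pixel coordinate in the pixel coordinate system; $(X_w, Y_w, Z_w)$ is the physical coordinate of a point in the world coordinate system; $s$ is the scale factor; $A$ is the internal parameter matrix, with $(u_0, v_0)$ being the coordinates of the principal point; $\alpha$ and $\beta$ are the scale factors along the image $u$ and $v$ axes, and $\gamma$ is the parameter describing the skewness of the two image axes; and $[R \mid t]$ is the external parameter matrix, where $R$ is the rotation matrix and $t$ is the translation vector.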
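As a quick check of the pinhole relation $s[u, v, 1]^T = A[R \mid t][X_w, Y_w, Z_w, 1]^T$ (Equation (1)), the sketch below projects a world point to pixel coordinates with NumPy. The intrinsic values ($\alpha = \beta = 5000$ pixels, $\gamma = 0$, principal point $(3000, 2000)$) and the 20 m translation along the optical axis (in mm) are hypothetical illustration values, not the calibration results in Table 2.

```python
import numpy as np


def intrinsic_matrix(alpha, beta, gamma, u0, v0):
    """Build the internal parameter matrix A of Equation (1)."""
    return np.array([[alpha, gamma, u0],
                     [0.0, beta, v0],
                     [0.0, 0.0, 1.0]])


def project_point(world_pt, A, R, t):
    """Project a world point (X_w, Y_w, Z_w) to pixel coordinates (u, v).

    A: 3x3 intrinsic matrix; R: 3x3 rotation matrix; t: translation (length 3).
    """
    Xw = np.append(np.asarray(world_pt, dtype=float), 1.0)   # homogeneous world point
    Rt = np.hstack([R, np.reshape(t, (3, 1))])              # 3x4 external matrix [R | t]
    m = A @ Rt @ Xw                                           # equals s * [u, v, 1]
    s = m[2]                                                  # scale factor s
    return m[0] / s, m[1] / s


# Hypothetical camera 20 m (20000 mm) in front of the world origin.
A = intrinsic_matrix(5000, 5000, 0, 3000, 2000)
u, v = project_point((100, 0, 0), A, np.eye(3), [0, 0, 20000])
print(u, v)  # a 100 mm offset maps to 25 pixels right of the principal point
```

With these assumed values, a 100 mm lateral offset at 20 m moves the image point by 5000 × 100 / 20000 = 25 pixels, which is the kind of object-to-image scaling that the proportional coefficient in Section 3.3 captures.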

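The distortion model is divided into two types: radial and tangential distortion. The radial distortion formula (of the third order) is as follows:

$$
\begin{aligned}
x_d &= x\left(1 + k_1 r^2 + k_2 r^4 + k_3 r^6\right) \\
y_d &= y\left(1 + k_1 r^2 + k_2 r^4 + k_3 r^6\right)
\end{aligned},\qquad r^2 = x^2 + y^2 \tag{2}
$$

The tangential distortion formula is as follows:

$$
\begin{aligned}
x_d &= x + \left[2 p_1 x y + p_2\left(r^2 + 2x^2\right)\right] \\
y_d &= y + \left[p_1\left(r^2 + 2y^2\right) + 2 p_2 x y\right]
\end{aligned} \tag{3}
$$

where $(x, y)$ is the ideal normalized image coordinate without distortion; $(x_d, y_d)$ is the normalized image coordinate after distortion; $k_1$, $k_2$, and $k_3$ are the radial distortion parameters; and $p_1$ and $p_2$ are the tangential distortion parameters.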
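The sketch below applies the radial model ($x_d = x(1 + k_1 r^2 + k_2 r^4 + k_3 r^6)$) and the tangential model ($2p_1xy + p_2(r^2 + 2x^2)$ and $p_1(r^2 + 2y^2) + 2p_2xy$) to an ideal normalized point. It assumes, as is common practice (for example in OpenCV and MATLAB), that the two effects are added into one combined model. Correcting an image requires the inverse mapping, which has no closed form; the simple fixed-point iteration used here is an assumption that is adequate only for moderate distortion.

```python
def distort(x, y, k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Map an ideal normalized point (x, y) to its distorted position (x_d, y_d)."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3          # radial factor
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)   # + tangential terms
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d


def undistort(x_d, y_d, iterations=20, **coeffs):
    """Recover (x, y) from (x_d, y_d) by fixed-point iteration on distort()."""
    x, y = x_d, y_d                       # start from the distorted point
    for _ in range(iterations):
        xe, ye = distort(x, y, **coeffs)  # where the current guess would land
        x, y = x + (x_d - xe), y + (y_d - ye)
    return x, y


coeffs = dict(k1=-0.2, p1=1e-3)           # hypothetical coefficients
xd, yd = distort(0.1, 0.2, **coeffs)
print(undistort(xd, yd, **coeffs))        # close to (0.1, 0.2)
```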

The calibration board used in this experiment is a chessboard with squares of 18 mm edge length and a total of 9 × 6 corners. With the camera focal length set to 55 mm, the calibration board was imaged from multiple angles, giving 20 photographs in total. Camera calibration was performed in MATLAB, and the resulting calibration parameters are listed in Table 2.

Table 2.

Camera calibration parameters.

3.2. Sub-Pixel Algorithm for Calculating Coordinates of Center of Ring Mark

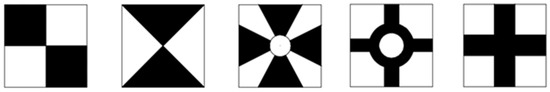

3.2.1. Marks of Monitoring Points

In deformation monitoring, monitoring marks play crucial roles as feature points. Scholars have designed a variety of measurement marks, and several commonly used traditional measurement marks are shown in Figure 3.

Figure 3.

Common artificial markers.

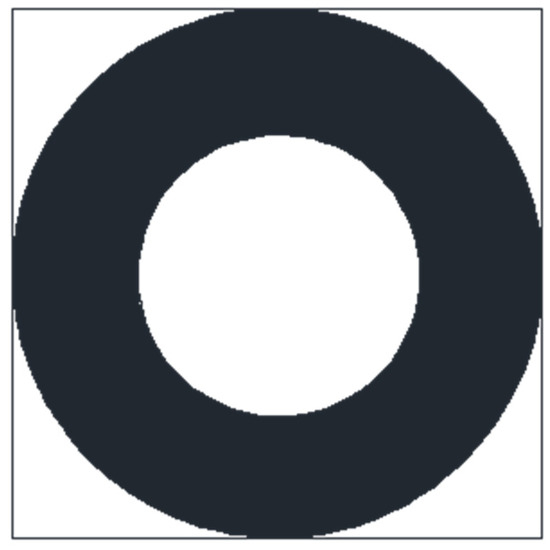

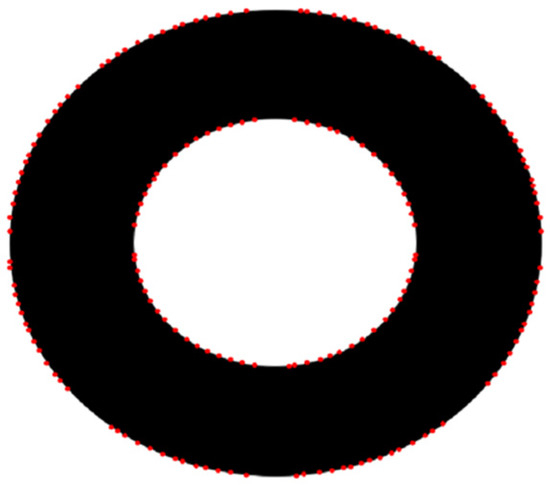

The monitoring marks must not be too complex; otherwise, they increase processing time and algorithm complexity. To determine the centers of the marks accurately, candidate forms and sizes were compared, taking into account the image-processing algorithm that will be used to compute each mark’s center pixel. Commonly employed algorithms include the gravity center method, the edge detection method, and the circular contour sub-pixel center method. Because this study uses the circular contour sub-pixel center method, a black ring mark on a white background was designed, as shown in Figure 4. The inner and outer circle diameters are 80 and 150 mm, respectively. Because the ring provides two circular contours, fitting its center coordinates with the circular contour sub-pixel algorithm gives higher accuracy under the same conditions.

Figure 4.

Ring mark.

3.2.2. Sub-Pixel Corner Detection

In practice, images are affected by noise from the hardware and the environment, which complicates identifying the landmark coordinates; preprocessing is therefore required. The main purpose of image preprocessing is to eliminate irrelevant information, restore useful real information, enhance the detectability of relevant information, and simplify the data as much as possible. The steps include image graying, binarization, image denoising, and image enhancement.
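A minimal NumPy sketch of graying, denoising, and binarization is shown below. The specific choices are assumptions for illustration, not necessarily the authors’ implementation: BT.601 luminance weights for graying, a 3 × 3 median filter for denoising (applied before binarization here), and Otsu’s method to pick the binarization threshold. Image enhancement is omitted.

```python
import numpy as np


def to_gray(rgb):
    """Graying: weighted sum of the R, G, B channels (BT.601 weights)."""
    g = rgb[..., 0] * 0.299 + rgb[..., 1] * 0.587 + rgb[..., 2] * 0.114
    return np.clip(np.rint(g), 0, 255).astype(np.uint8)


def median3x3(img):
    """Denoising: 3x3 median filter with edge pixels replicated."""
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    stack = np.stack([p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])
    return np.median(stack, axis=0).astype(img.dtype)


def otsu_threshold(gray):
    """Return the threshold t that maximizes the between-class variance."""
    p = np.bincount(gray.ravel(), minlength=256) / gray.size
    omega = np.cumsum(p)                    # probability of the class "<= t"
    mu = np.cumsum(p * np.arange(256))      # cumulative first moment
    denom = omega * (1.0 - omega)
    sigma_b = np.zeros(256)
    valid = denom > 1e-12                   # skip thresholds with an empty class
    sigma_b[valid] = (mu[-1] * omega[valid] - mu[valid]) ** 2 / denom[valid]
    return int(np.argmax(sigma_b))


def preprocess(rgb):
    """Gray -> denoise -> binarize; True marks dark pixels (the black ring)."""
    gray = median3x3(to_gray(rgb))
    return gray <= otsu_threshold(gray)
```

Marking dark pixels as foreground suits a black ring on a white background; the contours of that mask are what the corner and circle-fitting steps that follow operate on.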

A sub-pixel method is used to detect corner points on the circular contours of the landmarks, and it builds on Harris corner detection. The Harris detector moves a window over the image and calculates the change in gray value. Its key steps are conversion to a gray image, computing the differential images, Gaussian smoothing, computing local extrema, and confirming corners [23]. The steps of the Harris corner detection algorithm are summarized as follows:

1. When the window (a small image patch) moves in the $x$ and $y$ directions, the change $E(u, v)$ in pixel values inside the window is calculated. Let the center of a window be located at position $(x, y)$ in the gray image, with gray value $I(x, y)$. If the window moves by small displacements $u$ and $v$ in the $x$ and $y$ directions to the new position $(x + u, y + v)$, the gray value there is $I(x + u, y + v)$. The change in gray value caused by moving the window is:

$$
E(u,v) = \sum_{x,y} w(x,y)\,\bigl[I(x+u,\,y+v) - I(x,y)\bigr]^2 \tag{4}
$$

where $w(x, y)$ is the window function at position $(x, y)$, which gives the weight of each pixel in the window.

Equation (5) is obtained by simplifying Equation (4) with a first-order Taylor expansion:

$$
E(u,v) \approx \sum_{x,y} w(x,y)\,\bigl[I_x u + I_y v\bigr]^2 \tag{5}
$$

where $I_x$ and $I_y$ are the partial derivatives (gray gradients) of $I$ in the $x$ and $y$ directions.

Taking $u$ and $v$ out of the sum gives the final form:

$$
E(u,v) \approx \begin{bmatrix} u & v \end{bmatrix} M \begin{bmatrix} u \\ v \end{bmatrix} \tag{6}
$$

where $M = \sum_{x,y} w(x,y) \begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix}$.

2. For each window, the corresponding corner response function $R$ is calculated. $R$ depends on the matrix $M$ and can be evaluated through its eigenvalues:

$$
R = \det M - k\,(\operatorname{tr} M)^2 \tag{7}
$$

where $\det M$ is the determinant of $M$, $\operatorname{tr} M$ is its trace, and $k$ is an empirical constant, typically in the range 0.04–0.06.

If $\lambda_1$ and $\lambda_2$ are the eigenvalues of $M$, Equation (7) can be written as follows:

$$
R = \lambda_1 \lambda_2 - k\,(\lambda_1 + \lambda_2)^2 \tag{8}
$$

3. According to the value of $R$, the region in which the window lies is classified as flat, an edge, or a corner: $|R|$ is small in flat regions, $R$ is negative along edges, and $R$ is large and positive at corners. A threshold is then set; if $R$ exceeds it, the window corresponds to a corner.
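The Harris steps above can be sketched in NumPy as follows: build the entries of $M$ from image gradients, sum them over a window, and evaluate $R = \det M - k(\operatorname{tr} M)^2$. This is a simplified illustration under stated assumptions (central-difference gradients from `np.gradient`, a uniform box window in place of Gaussian weighting, and $k = 0.04$), not the OpenCV implementation used in this study.

```python
import numpy as np


def box_sum(a, r):
    """Sum a over a (2r+1) x (2r+1) window at each pixel (uniform w(x, y))."""
    h, w = a.shape
    p = np.pad(a, r, mode="constant")
    out = np.zeros_like(a, dtype=float)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + h, dx:dx + w]
    return out


def harris_response(img, k=0.04, r=2):
    """Corner response R = det(M) - k * trace(M)^2 at every pixel."""
    Iy, Ix = np.gradient(img.astype(float))   # derivatives along rows, then columns
    Sxx = box_sum(Ix * Ix, r)                  # entries of M summed over the window
    Syy = box_sum(Iy * Iy, r)
    Sxy = box_sum(Ix * Iy, r)
    det = Sxx * Syy - Sxy ** 2
    trace = Sxx + Syy
    return det - k * trace ** 2


img = np.zeros((40, 40))
img[10:30, 10:30] = 1.0                        # synthetic white square
R = harris_response(img)
print(np.unravel_index(np.argmax(R), R.shape))  # lands near one of the square's corners
```

On this synthetic square, $R$ is exactly zero in the flat interior, negative along the straight edges (only one gradient direction is present), and largest near the four corners, matching the classification in step 3.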

Because the stability of the Harris detector depends on $k$, which is an empirical value, it is difficult to choose an optimal $k$. The Shi–Tomasi method builds on the Harris algorithm: since the stability of a corner is actually governed by the smaller eigenvalue of $M$, the smaller eigenvalue is used as the corner response function [24]. The Shi–Tomasi corner response function is:

$$
R = \min(\lambda_1, \lambda_2) \tag{9}
$$

If $R$ is larger than the set threshold, a corner point is detected. Corners extracted in this way are only accurate to the pixel level, with integer coordinates. To obtain more accurate positions, the corner coordinates can be refined to the sub-pixel level. Sub-pixel corner refinement relies on the fact that the vector from the true corner to any nearby pixel is perpendicular to the image gray gradient at that pixel; the sub-pixel coordinates are obtained by iteratively minimizing the resulting error function. In this study, OpenCV’s cornerSubPix() function was used to obtain the sub-pixel corner coordinates. OpenCV (Open Source Computer Vision Library) is an open-source computer vision and machine learning library (https://opencv.org). Figure 5 shows the sub-pixel detection results for the ring marks; the red dots represent the detected sub-pixel corners.
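The gradient-orthogonality idea behind sub-pixel refinement can be illustrated with the sketch below. Each pixel $p_i$ near the corner $q$ contributes the condition $g_i \cdot (q - p_i) = 0$, where $g_i$ is the gray gradient; minimizing the squared residuals gives $q = (\sum g_i g_i^T)^{-1} \sum g_i g_i^T p_i$, which is re-solved with a re-centered window until it stops moving. This is a simplified stand-in for cornerSubPix(), not OpenCV’s implementation: weights are uniform (OpenCV applies a Gaussian window and an optional dead zone), and the test image is a hypothetical chessboard-style corner with a known sub-pixel position.

```python
import numpy as np


def refine_corner(img, guess, half_win=5, max_iter=30, eps=1e-4):
    """Refine a corner guess (x, y) = (column, row) to sub-pixel accuracy."""
    gy, gx = np.gradient(img.astype(float))           # gray gradients (rows, columns)
    q = np.asarray(guess, dtype=float)
    for _ in range(max_iter):
        cx, cy = int(round(q[0])), int(round(q[1]))   # window centered on current estimate
        ys, xs = np.mgrid[cy - half_win:cy + half_win + 1,
                          cx - half_win:cx + half_win + 1]
        Gx, Gy = gx[ys, xs].ravel(), gy[ys, xs].ravel()
        px, py = xs.ravel().astype(float), ys.ravel().astype(float)
        # Normal equations of sum_i (g_i . (q - p_i))^2: (sum g g^T) q = sum g g^T p
        G = np.array([[np.sum(Gx * Gx), np.sum(Gx * Gy)],
                      [np.sum(Gx * Gy), np.sum(Gy * Gy)]])
        b = np.array([np.sum(Gx * Gx * px + Gx * Gy * py),
                      np.sum(Gx * Gy * px + Gy * Gy * py)])
        q_new = np.linalg.solve(G, b)
        if np.hypot(*(q_new - q)) < eps:
            return q_new
        q = q_new
    return q


def synthetic_x_corner(shape, cx, cy, softness=1.0):
    """Hypothetical test image: smooth chessboard-style corner at (cx, cy)."""
    ys, xs = np.mgrid[0:shape[0], 0:shape[1]].astype(float)
    a = 1.0 / (1.0 + np.exp(-(xs - cx) / softness))
    b = 1.0 / (1.0 + np.exp(-(ys - cy) / softness))
    return a * b + (1.0 - a) * (1.0 - b)


img = synthetic_x_corner((41, 41), 20.3, 20.7)
print(refine_corner(img, guess=(21, 20)))   # moves from the integer guess toward (20.3, 20.7)
```

Starting from an integer guess almost a pixel away, the refined estimate should land within a few hundredths of a pixel of the true corner on this noise-free image.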

Figure 5.

Results of sub-pixel algorithm detection.

3.2.3. Least-Square Fitting Center Coordinates

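The least-square method is a mathematical optimization technique that finds the function best matching a set of data by minimizing the sum of squared errors [25]. In a two-dimensional plane coordinate system, a circle is expressed as follows:

$$
(x - x_c)^2 + (y - y_c)^2 = \rho^2 \tag{10}
$$

where $(x_c, y_c)$ are the center coordinates and $\rho$ is the radius.

For circle fitting with the least-square method, the objective function of squared errors is:

$$
Q = \sum_{i=1}^{N} \left( \sqrt{(x_i - x_c)^2 + (y_i - y_c)^2} - \rho \right)^2 \tag{11}
$$

where $(x_i, y_i)$ are the coordinates of the feature points on the circular arc and $N$ is the number of feature points involved in the fitting.

To avoid the square root, the error is redefined as follows, which allows the minimization problem to be solved directly:

$$
\delta_i = (x_i - x_c)^2 + (y_i - y_c)^2 - \rho^2,\qquad Q(x_c, y_c, \rho) = \sum_{i=1}^{N} \delta_i^2 \tag{12}
$$

Setting $a = -2x_c$, $b = -2y_c$, and $c = x_c^2 + y_c^2 - \rho^2$, Equation (12) simplifies to:

$$
Q(a, b, c) = \sum_{i=1}^{N} \left( x_i^2 + y_i^2 + a x_i + b y_i + c \right)^2 \tag{13}
$$

According to the principle of the least-square method, the parameters $a$, $b$, and $c$ must minimize $Q$, so they satisfy the following conditions:

$$
\begin{aligned}
\frac{\partial Q}{\partial a} &= 2\sum_{i=1}^{N}\left(x_i^2 + y_i^2 + a x_i + b y_i + c\right)x_i = 0 \\
\frac{\partial Q}{\partial b} &= 2\sum_{i=1}^{N}\left(x_i^2 + y_i^2 + a x_i + b y_i + c\right)y_i = 0 \\
\frac{\partial Q}{\partial c} &= 2\sum_{i=1}^{N}\left(x_i^2 + y_i^2 + a x_i + b y_i + c\right) = 0
\end{aligned} \tag{14}
$$

Solving this linear system gives $a$, $b$, and $c$, from which the optimal center and radius follow as $x_c = -a/2$, $y_c = -b/2$, and $\rho = \tfrac{1}{2}\sqrt{a^2 + b^2 - 4c}$. Therefore, after the sub-pixel corner points of the circular contour are calculated, the least-square method is used to fit the sub-pixel center coordinates of the ring mark.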
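Because the algebraic error $x_i^2 + y_i^2 + a x_i + b y_i + c$ is linear in $a$, $b$, and $c$, setting its partial derivatives to zero gives the normal equations of an ordinary linear least-squares problem. The sketch below therefore solves it with `numpy.linalg.lstsq` and then recovers $x_c = -a/2$, $y_c = -b/2$, and $\rho = \tfrac{1}{2}\sqrt{a^2 + b^2 - 4c}$. The test points are synthetic; in the method described here, the inputs would be the sub-pixel contour points of a ring mark.

```python
import numpy as np


def fit_circle(points):
    """Algebraic least-squares circle fit; returns (x_c, y_c, rho)."""
    pts = np.asarray(points, dtype=float)
    x, y = pts[:, 0], pts[:, 1]
    # Solve x^2 + y^2 + a*x + b*y + c = 0 for (a, b, c) in the least-squares sense.
    M = np.column_stack([x, y, np.ones_like(x)])
    rhs = -(x ** 2 + y ** 2)
    (a, b, c), *_ = np.linalg.lstsq(M, rhs, rcond=None)
    x_c, y_c = -a / 2.0, -b / 2.0
    rho = 0.5 * np.sqrt(a * a + b * b - 4.0 * c)
    return x_c, y_c, rho


theta = np.linspace(0.0, 2.0 * np.pi, 40, endpoint=False)
pts = np.column_stack([3 + 5 * np.cos(theta), -2 + 5 * np.sin(theta)])
print(fit_circle(pts))  # approximately (3.0, -2.0, 5.0)
```

Solving directly avoids the iterative minimization that the square root in the geometric error would require, which keeps the center computation fast for many contour points.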

3.3. Calculation of Slope Displacement

First, the proportional coefficient $K$ is calculated from the object–image relationship. $K$ is the ratio of an actual distance to the corresponding pixel distance, in mm/pixel. Its value depends mainly on the camera parameters and on the distance from the camera to the simulated slope. It is obtained as follows:

1. The distances between the landmarks are obtained to calculate the proportional coefficient $K$. Nine landmarks in total are placed at the top, middle, and bottom of the simulated slope. The actual distances between the landmarks are measured with a tape measure, and the digital camera is fixed 20 m in front of the simulated slope. The sub-pixel algorithm is used to calculate the center coordinates of each ring mark, and the pixel distance between adjacent landmarks is calculated from these coordinates.

The proportional coefficient $K$ is calculated from the coordinates of the landmarks as follows:

$$
K = \frac{D}{d} \tag{15}
$$

where $D$ is the average actual distance between adjacent landmarks, in mm, and $d$ is the average pixel distance between adjacent landmarks, in pixels.
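A small sketch of $K = D/d$ follows: $D$ is the mean tape-measured distance between adjacent landmarks and $d$ the mean pixel distance between their fitted centers. The pairing scheme (consecutive list entries are adjacent landmarks), the function name, and the example coordinates are hypothetical; any pairing works as long as each pixel distance is matched with its measured distance.

```python
import math


def proportional_coefficient(pixel_centers, actual_distances_mm):
    """K = D / d in mm/pixel.

    pixel_centers: ring-mark centers (u, v) in pixels, ordered so that
    consecutive entries are adjacent landmarks.
    actual_distances_mm: measured distance for each consecutive pair.
    """
    pixel_distances = [math.dist(p, q) for p, q in zip(pixel_centers, pixel_centers[1:])]
    if len(pixel_distances) != len(actual_distances_mm):
        raise ValueError("need one measured distance per adjacent pair")
    D = sum(actual_distances_mm) / len(actual_distances_mm)   # mean actual distance (mm)
    d = sum(pixel_distances) / len(pixel_distances)            # mean pixel distance
    return D / d


# Hypothetical: three landmarks 1 m apart that appear 500 pixels apart.
print(proportional_coefficient([(100, 100), (100, 600), (100, 1100)], [1000.0, 1000.0]))
```

Averaging over several landmark pairs, as the text describes, reduces the influence of any single tape measurement or center-fitting error on $K$.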

2. The distance from the digital camera to the landmarks is calculated.

When the camera resolution, sensor (CMOS) size, focal length, and other parameters are known, together with the proportional coefficient $K$, the distance from the digital camera to the landmark can be calculated. One pixel on the sensor has a physical size of $C/n$, and by similar triangles it corresponds to $(C/n)(L/f)$ on the object, which equals $K$. Hence:

$$
L = \frac{K f n}{C} \tag{16}
$$

where $L$ is the distance from the digital camera to the landmark, in mm; $C$ is the size of the camera sensor, in mm; $f$ is the focal length of the camera, in mm; and $n$ is the resolution of the camera along the same dimension as $C$, in pixels.
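The distance relation $L = K f n / C$ can be written as below. The 22.3 mm sensor width and 6000-pixel image width are illustrative assumptions, not the values from Table 1, and the function name is hypothetical.

```python
def camera_to_landmark_distance(K, f_mm, sensor_mm, resolution_px):
    """L = K * f * n / C, in mm.

    K: proportional coefficient (mm/pixel); f_mm: focal length;
    sensor_mm: sensor size C along one image axis; resolution_px: pixel
    count n along the same axis.
    """
    pixel_pitch_mm = sensor_mm / resolution_px   # physical size of one pixel on the sensor
    return K * f_mm / pixel_pitch_mm             # similar triangles: K = pitch * L / f


# Illustrative values: 55 mm lens, 22.3 mm / 6000 px sensor, K = 1.35 mm/pixel.
print(camera_to_landmark_distance(1.35, 55.0, 22.3, 6000))  # roughly 20 m (in mm)
```

With these assumed numbers, $K \approx 1.35$ mm/pixel corresponds to a camera roughly 20 m from the landmark, the working distance used in this study.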

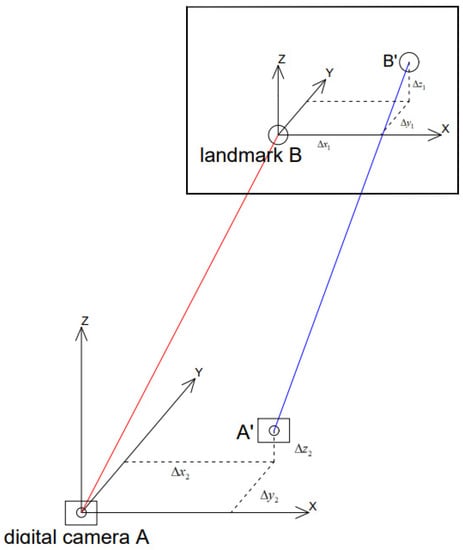

As shown in Figure 6, the initial position of the digital camera is point $O_1$, and point $P_1$ is the initial position of the landmark. A coordinate system is established in which the $X$ axis is horizontal, the $Y$ axis points along the shooting direction of the camera, and the $Z$ axis is vertical, forming a right-handed coordinate system. At the initial time $t_1$, the camera at $O_1$ captures images of the landmark at $P_1$. By time $t_2$, because of disturbance factors, the camera has moved from $O_1$ to $O_2$ and the landmark from $P_1$ to $P_2$; the camera at $O_2$ then photographs the landmark at $P_2$. The displacement components of landmark $P$ along the $X$, $Y$, and $Z$ axes are $\Delta x$, $\Delta y$, and $\Delta z$, respectively, so that:

$$
S = \sqrt{\Delta x^2 + \Delta y^2 + \Delta z^2} \tag{17}
$$

where $S$ is the displacement of landmark $P$.

Figure 6.

Schematic diagram of the experimental principle. The red lines represent the shooting angle before the digital camera and the landmark move; the blue lines represent the shooting angle after they move.

3. Calculating $\Delta z$ and $\Delta x$

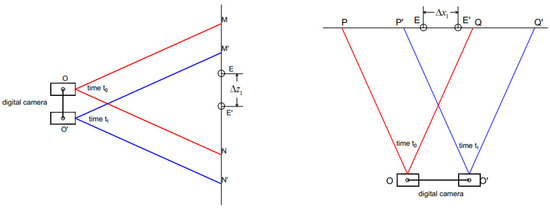

As shown in Figure 7, at time $t_1$ the digital camera is located at point $O_1$, the captured image covers a vertical range $H_1$ and a horizontal range $W_1$, and the monitoring landmark is at $P_1$. At time $t_2$, the camera has moved to $O_2$, the photograph covers a vertical range $H_2$ and a horizontal range $W_2$, and the monitoring point has moved to $P_2$.

Figure 7.

Calculation principle of $\Delta z$ and $\Delta x$. The red lines represent the shooting angle before the digital camera and the landmark move; the blue lines represent the shooting angle after they move.

At time $t_1$, the sub-pixel ring algorithm is applied to the image captured from point $O_1$ to calculate the vertical coordinate $z_1$ of monitoring point $P_1$, in pixels. After the camera and the simulated slope have moved, the vertical coordinate $z_2$ of the landmark at time $t_2$ is calculated from the image taken from point $O_2$, also in pixels. The actual displacements of the camera and the landmarks are in millimeters, so the proportional coefficient is used to convert between the two. The relationship in the vertical direction is:

$$
\Delta z = K\,(z_2 - z_1) + \Delta z_c \tag{18}
$$

where $z_2$ and $z_1$ are the vertical pixel coordinates of the landmark after and before it moves (measured positive in the direction of the $Z$ axis), $K$ is the proportional coefficient, and $\Delta z_c$ is the vertical displacement of the digital camera, obtained by actual measurement.

Similarly, the relationship in the horizontal direction is:

$$
\Delta x = K\,(x_2 - x_1) + \Delta x_c \tag{19}
$$

where $x_2$ and $x_1$ are the horizontal pixel coordinates of the landmark after and before it moves, $K$ is the proportional coefficient, and $\Delta x_c$ is the horizontal displacement of the digital camera, obtained by actual measurement.
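The in-plane relations $\Delta x = K(x_2 - x_1) + \Delta x_c$ and $\Delta z = K(z_2 - z_1) + \Delta z_c$ reduce to a few lines. The sketch assumes pixel coordinates that increase along the world $X$ and $Z$ axes; because image row indices usually increase downward, a row coordinate would need to be negated before it is used as $z$. The function and parameter names are hypothetical.

```python
def in_plane_displacement(before_px, after_px, K, camera_shift_mm=(0.0, 0.0)):
    """Landmark displacement (dx, dz) in mm.

    before_px / after_px: landmark center (x, z) in pixels at t1 and t2,
    with both pixel axes positive along world X and Z.
    camera_shift_mm: measured camera displacement (dx_c, dz_c) from t1 to t2.
    """
    dx = K * (after_px[0] - before_px[0]) + camera_shift_mm[0]
    dz = K * (after_px[1] - before_px[1]) + camera_shift_mm[1]
    return dx, dz


# Fixed camera: a 5-pixel shift at K = 2 mm/pixel is 10 mm.
print(in_plane_displacement((500, 300), (505, 305), 2.0))
# Camera and landmark both moved 10 mm: no pixel change, yet 10 mm is recovered.
print(in_plane_displacement((500, 300), (500, 300), 2.0, (10.0, 10.0)))
```

The second call shows why the camera displacement term is needed: when the camera and landmark move together, the image alone shows no motion.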

4. Calculating $\Delta y$

At time $t_1$, the proportional coefficient is recorded as $K_1$, and at time $t_2$ it is recorded as $K_2$. Because the positions of the monitoring landmarks and the digital camera change over time, $K_1 \neq K_2$.

Using Equation (16), the distance from the digital camera to the landmark can be calculated from the proportional coefficient at each time. Therefore:

$$
\Delta y = L_2 - L_1 + \Delta y_c = \frac{(K_2 - K_1)\, f\, n}{C} + \Delta y_c \tag{20}
$$

where $K_1$ and $K_2$ are the proportional coefficients at times $t_1$ and $t_2$; $L_1$ and $L_2$ are the corresponding camera-to-landmark distances; $C$ is the size of the camera sensor; $f$ is the focal length; $n$ is the resolution of the digital camera; and $\Delta y_c$ is the measured displacement of the camera along the $Y$ axis (zero when the camera is fixed).
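The sketch below evaluates the depth relation $\Delta y = (K_2 - K_1) f n / C + \Delta y_c$ together with the total displacement $S = \sqrt{\Delta x^2 + \Delta y^2 + \Delta z^2}$. The 55 mm focal length matches the calibration setup, while the 22.3 mm sensor width, 6000-pixel resolution, and function names are illustrative assumptions.

```python
import math


def depth_displacement(K1, K2, f_mm, sensor_mm, resolution_px, camera_shift_y_mm=0.0):
    """Landmark displacement along Y (mm) from the change in K plus camera motion."""
    scale = f_mm * resolution_px / sensor_mm   # L = K * scale, from L = K f n / C
    return (K2 - K1) * scale + camera_shift_y_mm


def total_displacement(dx, dy, dz):
    """Magnitude S of the 3D landmark displacement."""
    return math.sqrt(dx * dx + dy * dy + dz * dz)


scale = 55.0 * 6000 / 22.3                     # about 14,798 with these assumed values
K1, K2 = 20000.0 / scale, 20100.0 / scale      # landmark moves from 20.0 m to 20.1 m
dy = depth_displacement(K1, K2, 55.0, 22.3, 6000)
print(dy, total_displacement(10.0, dy, 10.0))  # about 100 mm along Y
```

With these numbers, a 100 mm change in camera-to-landmark distance changes $K$ by only about 0.0068 mm/pixel, so small errors in $K$ are strongly amplified along $Y$. This may help explain why the $Y$-axis RMSE values reported in Section 4 are larger than those on $X$ and $Z$.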

4. Results

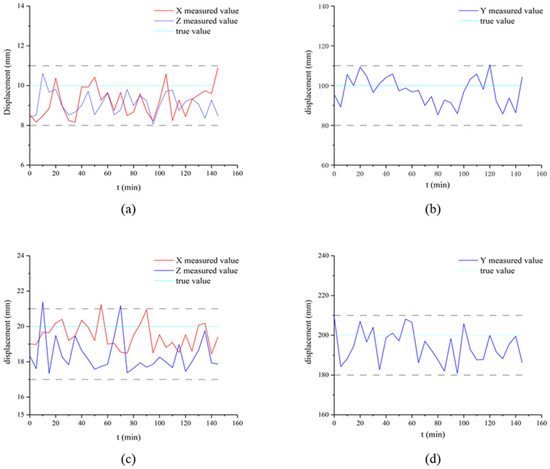

4.1. Camera Fixation

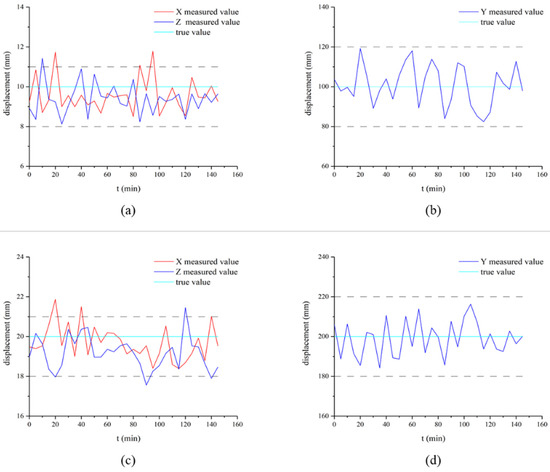

The experiment was conducted outdoors in an open area, with a wall serving as the monitoring object. With the digital camera fixed, the landmark was manually moved 10 mm along the X and Z axes, and the camera captured images of the landmark every 5 min for a total of 150 min. The landmark displacement was calculated with the method above. Over time, the calculated X and Z displacements fluctuated around the true value of 10 mm, as shown in Figure 8a. The landmark was then moved 100 mm along the Y axis and imaged every 5 min for a total of 150 min; the results calculated by this method are shown in Figure 8b. Finally, the same procedure was repeated with the landmark moved 20 mm along the X and Z axes and 200 mm along the Y axis; the results are shown in Figure 8c,d.

Figure 8.

Calculation results for the fixed camera. (a) Landmark moved 10 mm along the X and Z axes. (b) Landmark moved 100 mm along the Y axis. (c) Landmark moved 20 mm along the X and Z axes. (d) Landmark moved 200 mm along the Y axis.

The root mean square error (RMSE) of the displacement calculated by this method was obtained from the differences between the calculated displacements and the corresponding true values:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{m}\sum_{i=1}^{m}\left(x_i - \hat{x}_i\right)^2} \tag{21}
$$

where $x_i$ is the true value, $\hat{x}_i$ is the calculated displacement, and $m$ is the number of measurements. When the true displacements of the landmark along the X and Z axes were 10 mm, the RMSE was 1.11 mm along X and 1.06 mm along Z. When the true displacement along the Y axis was 100 mm, the corresponding RMSE was 7.55 mm.

When the true displacements along the X and Z axes were 20 mm, the RMSE was 0.91 mm along X and 1.91 mm along Z. When the true displacement along the Y axis was 200 mm, the corresponding RMSE was 9.82 mm.
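The RMSE definition, $\sqrt{\tfrac{1}{m}\sum (x_i - \hat{x}_i)^2}$, as a small helper; the sample numbers in the usage line are made up for illustration and are not measurements from this study.

```python
import math


def rmse(true_values, calculated):
    """Root mean square error between true and calculated displacements."""
    if len(true_values) != len(calculated) or not true_values:
        raise ValueError("inputs must be non-empty and of equal length")
    sq = [(t - c) ** 2 for t, c in zip(true_values, calculated)]
    return math.sqrt(sum(sq) / len(sq))


# Hypothetical series fluctuating by 1 mm around a 10 mm true displacement.
print(rmse([10, 10, 10, 10], [11, 9, 11, 9]))  # 1.0
```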

4.2. Camera Movement

The digital camera was moved 10 mm in the X and Z directions, and the landmarks were moved 10 mm in the X and Z directions. The camera captured images of the landmarks every 5 min for a total of 150 min, and the landmark displacement was calculated with the method above. Over time, the calculated X and Z displacements fluctuated around the true value of 10 mm (Figure 9a). Next, the digital camera was moved 100 mm along the Y axis and the landmarks were moved 100 mm accordingly; images were again captured every 5 min for a total of 150 min. The results are shown in Figure 9b.

Figure 9.

Calculation results with camera movement. (a) Landmark moved 10 mm along the X and Z axes. (b) Landmark moved 100 mm along the Y axis. (c) Landmark moved 20 mm along the X and Z axes. (d) Landmark moved 200 mm along the Y axis.

Then, the landmarks were manually moved 20 mm in the X and Z directions and 200 mm in the Y direction. The results are shown in Figure 9c,d.

When the camera moved 10 mm along the X and Z axes and the true landmark displacement along those axes was 10 mm, the RMSE was 0.93 mm along X and 0.98 mm along Z. When the camera moved 100 mm along the Y axis and the true landmark displacement along Y was 100 mm, the RMSE along Y was 10.1 mm.

When the camera moved 10 mm along the X and Z axes and the true landmark displacement along those axes was 20 mm, the RMSE was 0.91 mm along X and 1.19 mm along Z. When the camera moved 100 mm along the Y axis and the true landmark displacement along Y was 200 mm, the RMSE along Y was 8.89 mm.

5. Discussion

We calibrated the digital camera with the Zhang Zhengyou calibration method and extracted the center coordinates of the ring marks with a sub-pixel method implemented in our own image-processing and recognition program to obtain the monitoring results. The applicability of the method, its measurement accuracy, and measures to improve accuracy are discussed below.

(1) The relative position of the camera and the slope changes as the slope moves in real time. In this study, the proportional coefficient was taken as the mean of its values at the two moments, so the calculated slope displacement contains some error. Shortening the time interval between the two proportional coefficients would improve accuracy and yield more precise displacement values. The method is most suitable for monitoring large slope displacements within a short time.

(2) The measurement accuracy was similar to that reported by Lin [19] and Luo et al. [20], reaching the millimeter level. In addition, this study accounted for the changing position of the digital camera while still achieving millimeter-level accuracy. Lin [19] did not consider calibration of the digital camera, yet for non-metric digital cameras, calibration directly affects the accuracy of the measurement results. The Zhang Zhengyou calibration method is convenient, but its robustness is not as good as that of the space resection method; a better distortion-parameter solution method and correction model could therefore be used for camera calibration to improve measurement accuracy. On the simulated slope at a distance of 20 m, the accuracy was approximately 2 mm on the X and Z axes and about 10 mm on the Y axis.

(3) Because the digital camera and landmarks were placed only temporarily on the test day, field errors caused by external weather factors were large. Several improvements to the test setup are possible: building a pan-tilt camera mount to ensure stability, using steel landmarks anchored in deeply buried concrete foundations on the slope, and using better image-processing algorithms to calculate the landmark center coordinates. In this study, slope displacement was obtained by processing still images; the temporal resolution could be increased by recording monitoring video and transmitting and processing the data in real time. The specific displacement and velocity of the slope could then be calculated, and an early-warning device could be installed.

6. Conclusions

A non-metric digital camera combined with sub-pixel detection of ring marks was used to conduct multiple tests on the displacement of a simulated slope. The results of this study are summarized as follows:

(1) The designed ring marks were used as monitoring marks. Compared with traditional marks, they allow more corner points to be calculated with the circular contour sub-pixel algorithm. Building on the Harris corner detection algorithm, the Shi–Tomasi method was used to detect corner points, and the cornerSubPix() function in OpenCV was used to refine them to sub-pixel coordinates. The least-square method was then used to fit the sub-pixel center coordinates of the ring marks, which improved the accuracy of the center coordinates.

(2) Slope monitoring based on sub-pixel ring marks and a non-metric camera avoids cumbersome internal data processing and high specialization requirements, is less restricted by terrain, and does not require the expensive hardware of common methods. In addition, when the displacement of the digital camera is known, the displacement of the slope can still be obtained. The monitoring accuracy of the method reaches the millimeter level, and it can be used for building deformation monitoring, slope monitoring, and similar tasks. Future work will focus on improving temporal resolution by recording monitoring video and transmitting and processing the data in real time.

Author Contributions

Conceptualization, Y.H. and X.L.; methodology, Y.H. and X.L.; software, Y.H.; validation, F.W., Y.H. and H.F.; formal analysis, X.L.; resources, X.L.; data curation, F.W.; writing—original draft preparation, Y.H.; writing—review and editing, X.L.; visualization, Y.H.; supervision, X.L.; project administration, F.W.; funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant numbers 42101414 and 51704205.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

The authors thank the scholars and software developers whose work contributed to this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bai, Y. Comparison of several optical measurement methods applied to measure displacement of test model. Rock Soil Mech. 2009, 30, 2783–2786.

- Chen, W.; Li, X.; Wang, Y.; Chen, G.; Liu, S. Forested landslide detection using LiDAR data and the random forest algorithm: A case study of the Three Gorges. Remote Sens. Environ. 2014, 152, 291–301.

- Lian, X.; Li, Z.; Yuan, H.; Hu, H.; Cai, Y.; Liu, X. Determination of the stability of high-steep slopes by global navigation satellite system (GNSS) real-time monitoring in long wall mining. Appl. Sci. 2020, 10, 1952.

- Li, Z.; Song, C.; Yu, C. Application of satellite radar remote sensing to landslide detection and monitoring: Challenges and solutions. Geomat. Inf. Sci. Wuhan Univ. 2019, 44, 967–979.

- Feng, W. Close Range Photogrammetry: Photographic Determination of the Shape and Motion of an Object, 1st ed.; Wuhan University Press: Wuhan, China, 2002; pp. 10–30.

- Aldao, E.; Gonzalez-Jorge, H.; Pérez, J.A. Metrological comparison of LiDAR and photogrammetric systems for deformation monitoring of aerospace parts. Measurement 2021, 174, 109037.

- Vilbig, J.; Sagan, V.; Bodine, C. Archaeological surveying with airborne LiDAR and UAV photogrammetry: A comparative analysis at Cahokia Mounds. J. Archaeol. Sci. Rep. 2020, 33, 102509.

- Suziedelyte-Visockiene, J.; Bagdziunaite, R.; Malys, N. Close-range photogrammetry enables documentation of environment-induced deformation of architectural heritage. Environ. Eng. Manag. J. 2015, 14, 1371–1381.

- Jiang, R.; Jáuregui, D.; White, K. Close-range photogrammetry applications in bridge measurement: Literature review. Measurement 2008, 41, 823–834.

- Choi, H.; Ahn, C.; Hong, S. The application of digital close-range photogrammetric linkage to navigation system. J. Korean Soc. Surv. Geod. Photogramm. Cartogr. 2008, 36, 465–473.

- Song, L.; Zhang, C.; Bi, J.; Fang, S.; Wang, B.; Gu, S.; Wang, D.; Wang, H. Locating method of close-range photogrammetry based on GPS. Sci. Surv. Mapp. 2011, 36, 40–41.

- Hwang, J.; Yun, H.; Kang, J. Development of close range photogrammetric model for measuring the size of objects. KSCE J. Civ. Environ. Eng. Res. 2009, 29, 129–134.

- Matori, A.; Mokhtar, M.; Cahyono, B.; Yusof, K. Close-range photogrammetric data for landslide monitoring on slope area. In Proceedings of the 2012 IEEE Colloquium on Humanities, Science & Engineering, Kota Kinabalu, Malaysia, 27 May 2013.

- Alameda-Hernández, P.; Hamdouni, R.; Irigaray, C.; Chacón, J. Weak foliated rock slope stability analysis with ultra-close-range terrestrial digital photogrammetry. Bull. Eng. Geol. Environ. 2019, 78, 1157–1171.

- Kim, D.; Gratchev, I.; Berends, J.; Balasubramaniam, A. Calibration of restitution coefficients using rockfall simulations based on 3D photogrammetry model: A case study. Nat. Hazards 2015, 78, 1931–1946.

- Stylianidis, E.; Patias, P.; Tsioukas, V. A digital close-range photogrammetric technique for monitoring slope displacements. In Proceedings of the 11th FIG Symposium on Deformation Measurements, Santorini, Greece, 25 May 2003.

- Zhang, C.; Zha, C.; Zhou, S. 3D Visualization of landslide based on close-range photogrammetry. Instrum. Mes. Metrol. 2019, 18, 479–484.

- Ohnishi, Y.; Nishiyama, S.; Yano, T.; Matsuyama, H.; Amano, K. A study of the application of digital photogrammetry to slope monitoring systems. Int. J. Rock Mech. Min. Sci. 2006, 43, 756–766.

- Lin, X. Study on Application of Ordinary Digital Cameras in Detection of Engineering. Master’s Thesis, Zhejiang University, Hangzhou, China, 2008.

- Luo, R.; Liu, X. A Study of the application of digital photogrammetry in slope deformation monitoring. J. Shijiazhuang Railw. Inst. 2011, 24, 69–74.

- He, G.; Luo, H.; Wang, Y. Applicability of slope superficial displacement monitoring by ordinary digital camera. China Energy Environ. Prot. 2018, 40, 88–93.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, 1988; pp. 147–151.

- Shi, J.; Tomasi, C. Good Features to Track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

- Zhong, H.; Ye, J.; He, G. Optimization design of center coordinates location based on OpenCV. Test Qual. 2021, 5, 110–115.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).