1. Introduction

Classification is the mechanism of dividing any dataset based on its labels. All classification approaches are based on a two-step process: first, a model is trained to classify a dataset into two or more groups, and second, the model, generally represented by a mathematical formula, is tested on a previously unseen dataset, and based on its performance it is accepted or rejected. The most commonly used classification algorithms include Support Vector Machines (SVM), Naive Bayes (NB), Decision Trees (DTs), and Neural Networks (NNs), among many others [1].

Machine Learning (ML) and classification have applications in a wide range of industries including manufacturing, retail, healthcare, and life sciences, and for all these sectors, the distinction between being on the cutting edge and falling behind is increasingly determined by data-driven decisions. ML will be the key to unlocking the potential of corporate and consumer data and enacting decisions that keep businesses ahead of the competition. There is already a growing body of research indicating that ML may be an important contributing factor in healthcare, such as in the diagnosis of certain diseases, an area where it can perform at the same level as or even better than humans. Several algorithms already outperform radiologists in detecting malignant tumors. However, for a variety of reasons, especially the lack of large datasets in healthcare, it will take several years before AI is able to replace humans. It has been reported in the literature that ML has significant potential for automating certain routine aspects of medical treatment, and despite the current limitations, the adoption of ML approaches in healthcare is gaining speed. Deep learning, or NN models with multiple layers, can accurately classify and predict outcomes in many medical settings, such as X-rays, intensive care unit (ICU) real-time clinical readings, and overall clinical outcomes of patients based on thousands of features. Diagnosing and treating diseases has been a focus of ML since the 1970s, when MYCIN was developed for blood-borne and bacterial infections. However, such early systems could not be integrated within clinicians’ workflows and medical record systems, nor could they substitute for human diagnosticians [2].

Another essential domain where ML is being very efficiently implemented is cybersecurity [3,4]. Phishing attacks are widely known as one of the worst problems for web users from individuals to businesses, and detecting phishing attacks is becoming a core target for programmers in cybersecurity in an attempt to prevent or minimize the threats from hackers to steal internet users’ important personal information such as bank accounts, passwords, and business data, among others [5]. A frequency feature extractor, a spatial feature extractor, and a dual-domain feature resource allocation model comprise Deep-IRTarget, a unique backbone network. The Hypercomplex Infrared Fourier Transform is used to quantify infrared intensity saliency in the frequency domain, with a convolutional neural network used to extract feature maps in the spatial domain [6].

Phishers’ victims are lured into inserting confidential information on pages similar to those they are used to dealing with. Phishing attacks are becoming more widespread around the world day after day [7], and designing and constructing effective detection methods for phishing URLs has become a major issue, especially now that social engineering implementations mimicking URLs and websites seeking to collect users’ different data types such as personal information, bank accounts, and passwords are getting more sophisticated. In addition, phishing URLs are becoming a major threat to businesses worldwide [8].

Classification algorithms have been used extensively in the domains of healthcare, phishing attack detection, business, and finance. This article provides a detailed discourse on different ML classification approaches and their applications in medical research, such as predicting patient outcomes for different treatments or medical data processing. In the field of ML, the primary concern is achieving accurate predictions by increasing accuracy and minimizing type I and type II errors [9]. Data scientists use various algorithms and models to detect patterns leading to actionable outcomes, and this exploratory process leads the classifier to a better understanding of the data through training. Arguably, the most beneficial use of classification is prediction: a model is trained and then used to predict an outcome, such as gender or whether a person suffers from high blood sugar, on a completely novel dataset. Predictions can be a sensitive issue in domains where inaccurate predictions are unacceptable. In addition, ML classification algorithms such as SVM, NB, DTs, and NNs have been shown to perform well in diagnosing diseases, a field in which the strength of data mining and ML lies in the ability to design models that harvest patterns from immense bodies of data collected from various sources, including the integration of background information. To evaluate the accuracy of the trained models in a specific domain, it is essential to compare the predictions of various algorithms with the known true outcomes. For instance, the rules of DT models are mutually exclusive and exhaustive for classification because their structures follow “if-then” rules. Additionally, DTs have a propensity to over-fit by generating, until a termination condition is met, too many branches that may reflect anomalies such as noise or outliers. Artificial neural networks (ANNs) are inspired by the way the neurons of the brain function, as long understood by neurobiologists, and they mathematically simulate the way information is processed according to the principles of network topology. In this paper, different types of classification algorithms are compared with our proposed algorithm in terms of accuracy.
As mentioned above, each algorithm has its own mechanism or strategy for training a suitable model. Some, such as NB, utilize probabilistic approaches; some, such as NNs, use partial differentiation to update their weights; some, such as KNN, rely on distances to the nearest neighbors; and others, such as SVM, use linear algebra. A quick study of the available literature shows that, in terms of accuracy and effectiveness, the family of ANN algorithms is highly adaptable for the classification of all types of data, from text to images and videos, in addition to audio and other standard numerical datasets [10]. Nevertheless, the availability of multiple algorithms is a great advantage, as each of them has its own way of dealing with different challenges in various datasets. Thus, some algorithms work very well in some cases while in other cases they are weak in terms of model accuracy.

In this study, a novel classification algorithm called Core Classifier Algorithm (CCA) is presented. It is based on the cores which represent the characteristics and features of each class and can be used to classify new points based on their similarity to the cores. K-means clustering algorithm is part of the proposed algorithm to minimize the effects of data distribution irregularities such as outliers and overlapping and to simulate the learning process in NNs. Of note, the results of the K-means algorithm are not constant and change based on various implementations, but the main reason for using K-means was to exploit its ability to generate cores and increase the accuracy of the model. Whereas NNs go through multiple iterations of adjusting weights and error rate for each feature until they achieve the most optimal results, CCA uses multiple iterations of K-value to better represent different distributions until it converges to the most optimal one, in this way achieving high accuracy even on data with very disparate distribution.

The rest of the study is organized as follows: Section 2 presents the literature review, Section 3 the proposed algorithm, Section 4 the experimental analysis and results, and Section 5 the conclusions and future work.

2. Literature Review

Classification is used for categorizing data and generating decisions in multiple domains. Researchers have tried to hybridize NNs with metaheuristic algorithms to improve classification accuracy and to further explore the search space, and as a result have solved several classification problems by adding feature selection methods [11,12]. Image classification is currently a very popular area and a major branch of image processing research. In machine learning, SVM is a well-established classification algorithm. A Convolutional Neural Network (CNN) is a type of feed-forward NN that involves calculating convolutions and has a deep structure; it is also one of the most representative algorithms of deep learning. In one comparison of a standard machine learning algorithm (SVM) and a deep learning algorithm (CNN) for image classification, the accuracies of SVM and CNN were 0.88 and 0.98, respectively, on a large sample dataset, and 0.86 and 0.83, respectively, on the small sample COREL1000 dataset. These findings indicate that conventional machine learning approaches have better accuracy on smaller sample datasets, while deep learning yields greater precision on larger datasets [13,14]. Another study proposed using support and query instances to estimate class centers by constructing an efficient class allocation matrix. Extensive experiments on few-shot benchmarks showed that this graph-based few-shot learning pipeline beats the baseline by 12% and outperforms state-of-the-art results in both fully supervised and semi-supervised settings [15].

In a recent study designed to detect and classify phishing websites using various classification algorithms, it was found that the accuracy of different algorithms varied drastically, with the lowest being BPNN followed by RBFN, SVM, NB, and C4.5, while KNN and RF showed the best performance [3]. Phishing attacks can be detected by using classification algorithms, as was presented in a model designed in 2015 by Riad Jabri with an accuracy of 87% and an error rate lower than 0.1% [16]. Another novel classification approach was presented later with the advantage of limiting the complexity of predicting phishing attacks on e-banking systems [17]. These were followed by improved classification approaches such as RF, SVM, and NN combined with back-propagation, which showed accuracies of 97.369%, 97.451%, and 97.259%, respectively, in detecting phishing attacks on the web [18]. By using different percentages of training data and feature selection, a model was created to detect phishing URLs [19].

Clustering based on a critical distance methodology was developed by Kuwil et al. [20], who carried out a comparison with the K-means, Hierarchical, and DBSCAN algorithms and were able to achieve high accuracy despite the presence of outliers, different regions, and convex shapes. Gravity center clustering (GCC) was compared with K-means, k-medoid, and k-median based on the criteria for partition clustering algorithms, and obtained more connectivity and cohesion [21]. By comparing the performance of three well-known ML methods, it was found that while decision trees are able to differentiate risks for an outcome or a prediction, deep learning methods were the key to simplifying the complexity of nonlinear patterns or interactions between variables of particular outcomes, and ensemble predictive methods were established by combining multiple machine learning methods [22]. Shi and Yang investigated how parameters can thematically affect the accuracy of classifiers [23]. Murtagh and Contreras [24] built a computationally efficient clustering approach focused on the creation of random spanning paths for very large, very high dimensional, and sparse data. They used random projection to process high-dimensional data, thus enabling computationally effective hierarchical clustering using the Baire metric.

Using ML in the domain of healthcare for the purpose of prediction is not new. Arian et al. suggested that KNN can be used to build a classifier model to distinguish between active and inactive protein kinase enzymes that regulate the biological activities of various downstream proteins, and their approach showed superiority to other algorithms such as SVM and NB [25]. Recently, there has been an explosion of new approaches to help in diagnosis, especially of cancer, and in selecting genes that may be involved in disease progression and patient survival. The Moth Flame Optimization Algorithm (MFOA) is less costly in terms of computation and can converge faster than many other techniques [26]. In another work, a detection method was studied in detail on chest CT images against recent competitive strategies. The experiments showed that, in terms of precision, error rate, and sensitivity/recall, the proposed feature selection methodology produces fast and precise results relative to current methods. HFSM offers accuracy, recall, precision, and error values that exceed 0.72, 0.71, 0.93, and 0.07, respectively. The suggested CPDS produced an accuracy of 96%, which is better than most recent methodologies [27]. ML was applied to a diabetes dataset to predict the patterns and the probability of readmission of diabetes patients, using approaches such as KNN, LDA, NB, J48, and SVM. Results indicated that patients who did not undergo robust laboratory assessments, diagnosis, and medications had higher chances of readmission. Improvements were noticed when patients were discharged without receiving insulin control, especially those of Caucasian descent and women, or both [28]. In another set of experiments, three classification algorithms, namely DTs, SVM, and NB, were used to diagnose the early stages of diabetes. Experiments were conducted on the Pima Indians Diabetes Database (PIDD), part of the UCI machine learning repository; the performances of all three algorithms were evaluated on various measures such as precision and accuracy, and accuracy was measured over correctly and incorrectly classified instances. Results showed that NB outperformed the rest with the best precision of 76.30%. These results were verified using Receiver Operating Characteristic (ROC) curves in a correct and standardized way [29].

As shown in the literature, many algorithms have been used for classification, and they provide different results. The KNN algorithm is one of these, and its main limitation is that different K values give different results. In our proposed algorithm, we use one Core per class to minimize the dependence on the K value, and we build on the concept of training as in NNs. However, the difference is that instead of updating weights, as occurs in NNs, the Cores within each cluster are updated; the Cores differ in how strongly they represent their clusters, and the results show good performance.

3. The Proposed Algorithm

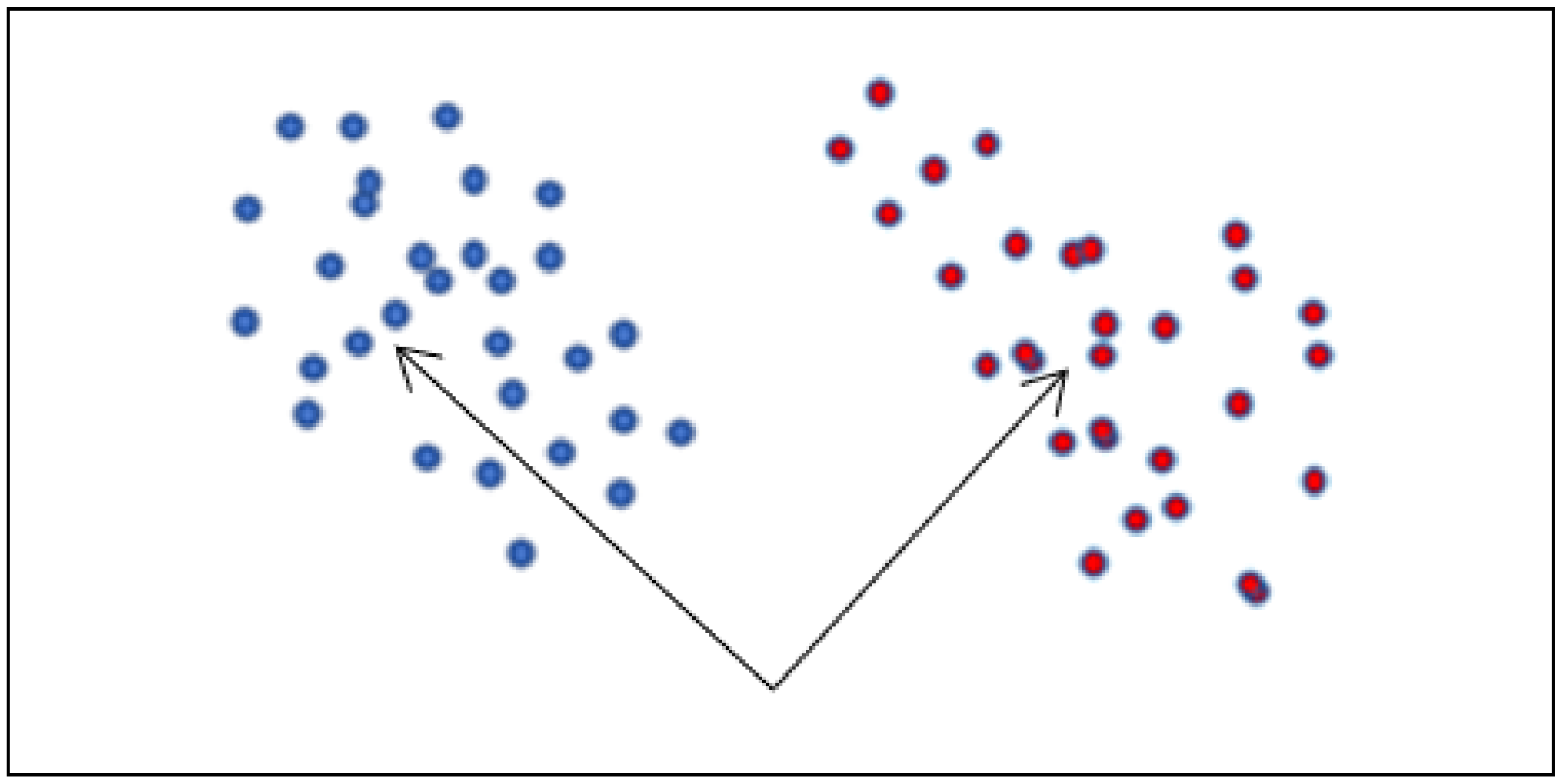

Our proposed algorithm is derived from KNN as it is based on studying the similarities of all points with a unique point inside every class, which is known as the core. That point is assumed to be the true representative of the class as it comprises all or most of its characteristics. Thus, the proposed algorithm is able to overcome the problem of results changing with the K parameter in the KNN algorithm, where a suitable parameter must be found to give the most accurate classification. As shown in Figure 1, in the case of linear classification, the test point is compared only with the Cores of both classes, and it is assigned to the class whose core it is most similar to.

The study aimed to hybridize the derived algorithm from KNN with one of the partition unsupervised learning algorithms (K-means) as the hybridization process gives the derived algorithm strength and enables it to work efficiently while dealing with large numbers of domains and cases. Each algorithm in machine learning has weaknesses and strengths within its mechanism, therefore, it is effective in certain scenarios and ineffective in others.

The idea of hybridization of two algorithms in many cases is required to improve performance or to increase efficiency, and most importantly, it can be efficiently used to overcome some of the defects and challenges in one of the two algorithms used to create the hybridization. Traditionally, hybrid algorithms have been built on the principle of exploiting the advantage of a particular algorithm to improve the performance and efficiency of another algorithm. However, our study shows that it is more important when the flaw of one algorithm is used to increase the efficiency of the other. The main mechanism of the proposed algorithm (CCA) is based on three principles as follows:

- (1) Simulating the KNN algorithm and finding one Core for each class bearing its own characteristics, instead of changing the classification result based on the K-value as in the KNN algorithm.
- (2) Using a clustering algorithm to overcome dataset distribution problems such as nonlinear classification, overlapping, or noise, as handling the mentioned problems simulates the hidden layers in NNs.
- (3) Depending on the unstable results of K-means, a number of iterations is required to construct different numbers of clusters reaching newer cores, similar to the approach of NNs.

Figure 2a on the left represents the linear classification of the data distribution, where the data are classified based on their labels, hence, we can have two classes as an outcome.

The necessity to combine the algorithm with clustering arises in cases where the core does not accurately represent all the points in the class defined by the data, as shown in Figure 2b. In the figure, classes are represented by C (C1 and C2) and clusters by K (K1 and K2), and in this particular case, because of distribution disparities, it would be impossible to correctly classify the data (especially class 1) unless we introduce clustering, which correctly aligns the distributions with the classes.

3.1. Mathematical Formula

This section presents the mathematical formulation of the CCA algorithm in its basic form, without hybridization with clustering, to make it clearer and easier to describe. The formulas below support the mechanism of the proposed algorithm. The algorithm starts with constructing the distance matrix ($D_M$) for every class. Each row in $D_M$ consists of the distances between a point and the other points in a specific class. The summation for every row is stored in a new column. The row with the smallest summation shows the strongest similarity; its point is called the Core point, which summarizes most or all of the characteristics of a certain class. Classifying any test point is determined according to its similarities with the Cores of the classes. The scenario of three classes is shown symbolically as $c_1$, $c_2$, and $c_3$ from the original dataset (DS), which can be represented in sub-datasets as $ds_{c_1}$, $ds_{c_2}$, and $ds_{c_3}$, with the classes consisting of $i$, $j$, and $k$ points, respectively, as follows:

$$DS = ds_{c_1} \cup ds_{c_2} \cup ds_{c_3}, \qquad |ds_{c_1}| = i, \quad |ds_{c_2}| = j, \quad |ds_{c_3}| = k.$$

Distance matrices for the three classes are represented as $(D_M)_{c_1}$, $(D_M)_{c_2}$, and $(D_M)_{c_3}$, with sizes $i \times i$, $j \times j$, and $k \times k$, respectively. For $(D_M)_{c_1}$, every row contains $i$ cells which represent the distances between a point $p_a$ in class 1 and all the others. The same approach is used to create $(D_M)_{c_2}$ and $(D_M)_{c_3}$, as shown by the equation below, where $d(\cdot,\cdot)$ denotes the distance between two points:

$$(D_M)_{c_1}[a, b] = d(p_a, p_b), \qquad a, b = 1, \dots, i.$$

The core vectors ($Cor_v$) with sizes ($i \times 1$), ($j \times 1$), and ($k \times 1$) are created by calculating the sum of each row in $D_M$, representing the extent to which the point (row) represents the characteristics of its class:

$$(Cor_v)_{c_1}[a] = \sum_{b=1}^{i} (D_M)_{c_1}[a, b].$$

The core for every class is found by selecting the minimum value in $Cor_v$, as this core has the most similarities with the points within its class:

$$(Cor)_{c_1} = p_{a^*}, \qquad a^* = \arg\min_{a} \, (Cor_v)_{c_1}[a].$$

Accordingly, three cores, $(Cor)_{c_1}$, $(Cor)_{c_2}$, and $(Cor)_{c_3}$, are obtained for the classes $c_1$, $c_2$, and $c_3$, respectively; thus, test points can be classified to the appropriate classes by finding the greatest similarity with their cores.
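The core selection described above can be illustrated with a minimal Python sketch. It assumes Euclidean distance as the similarity measure; the function name `find_core` and the sample points are hypothetical and chosen only for illustration, not taken from the experiments.

```python
import math

def find_core(points):
    """Return (index, point) of the core of one class.

    The core is the point whose row in the distance matrix has the
    smallest sum, i.e., the point closest on the whole to its class.
    """
    # Distance matrix D_M: entry [a][b] is the Euclidean distance
    # between point a and point b of the same class.
    dist_matrix = [[math.dist(p, q) for q in points] for p in points]
    # Core vector Cor_v: one row sum per point.
    cor_v = [sum(row) for row in dist_matrix]
    # The core is the point with the minimum row sum.
    core_index = min(range(len(points)), key=cor_v.__getitem__)
    return core_index, points[core_index]

# Hypothetical class with one outlier at x = 10.
class_1 = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (10.0, 0.0)]
core_index, core_point = find_core(class_1)
print(core_index, core_point)
```

In this toy class the row sums are 16, 13, 12, 13, and 34, so the third point is selected; the outlier has the largest sum and is the least representative point.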

3.2. The Pseudo-Code of the Proposed Algorithm

A scenario of training a dataset and classifying the outcomes into three classes will be shown as an example. The distance matrix for each class is constructed (DM)ci and the summation of every row represents the similarity between this particular point (row) and the other points within the same class. The point that achieves the highest similarity or has the minimum sum is selected as a core for the class (ci), and thus it is the best representation of that particular class. The test points will be classified accordingly to satisfy the similarity with these cores instead of using K nearest neighbors, as in the KNN algorithm.

Consider S as a given dataset which consists of n points, S = {p1, p2, …, pn}, which are classified into three classes, and each point has the form pi = (xi, ci), where xi denotes the feature vector of the point pi and ci denotes the class that pi belongs to.

Suppose that x is a point that needs to be classified to an appropriate class using CCA (Algorithm 1).

- (1) Calculate the distance matrix for each of the three classes: (DM)c1, (DM)c2, (DM)c3.
- (2) Find the core for each class: (Cor)c1, (Cor)c2, (Cor)c3.
- (3) Calculate the distance between x and all cores to obtain three values: (dis1, dis2, dis3).
- (4) x is classified to the class with the minimum distance within (dis1, dis2, dis3).

These steps are shown in pseudocode below and notations in Table 1:

| Algorithm 1. Algorithm for CCA. |
| --- |
| 1: Input: in |
| 2: Output: out |
| 3: Initialisation: |
| 4: loop process |
| 5: for i = 1 to length of test_ds do |
| 6: find the dis between test_ds[i] and (Cor)cx |
| 7: if dis (1) is minimum |
| 8: Classify test_ds[i] to class (1) |
| 9: else if dis (2) is minimum |
| 10: Classify test_ds[i] to class (2) |
| 11: else |
| 12: Classify test_ds[i] to class (3) |
| 13: end loop |
| 14: return Class (x) |
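
The classification loop of Algorithm 1 might be written in Python roughly as follows. This is a sketch under stated assumptions: the cores are taken as already computed, Euclidean distance is assumed, and the names `classify`, `cores`, and `test_ds` as well as the coordinates are hypothetical.

```python
import math

def classify(x, cores):
    """Assign x to the class whose core is nearest (Euclidean distance)."""
    # dis[label] corresponds to dis1, dis2, dis3 in the steps above.
    dis = {label: math.dist(x, core) for label, core in cores.items()}
    return min(dis, key=dis.get)

# Hypothetical cores for three classes, e.g. found from the distance matrices.
cores = {1: (0.0, 0.0), 2: (5.0, 5.0), 3: (10.0, 0.0)}
test_ds = [(1.0, 1.0), (6.0, 4.0), (9.0, 1.0)]

predicted = [classify(x, cores) for x in test_ds]
print(predicted)
```

Because each test point is compared with one core per class rather than with all training points, prediction cost grows with the number of classes, not with the size of the training set.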

3.3. Hybridization Proposed Algorithm with K-Means

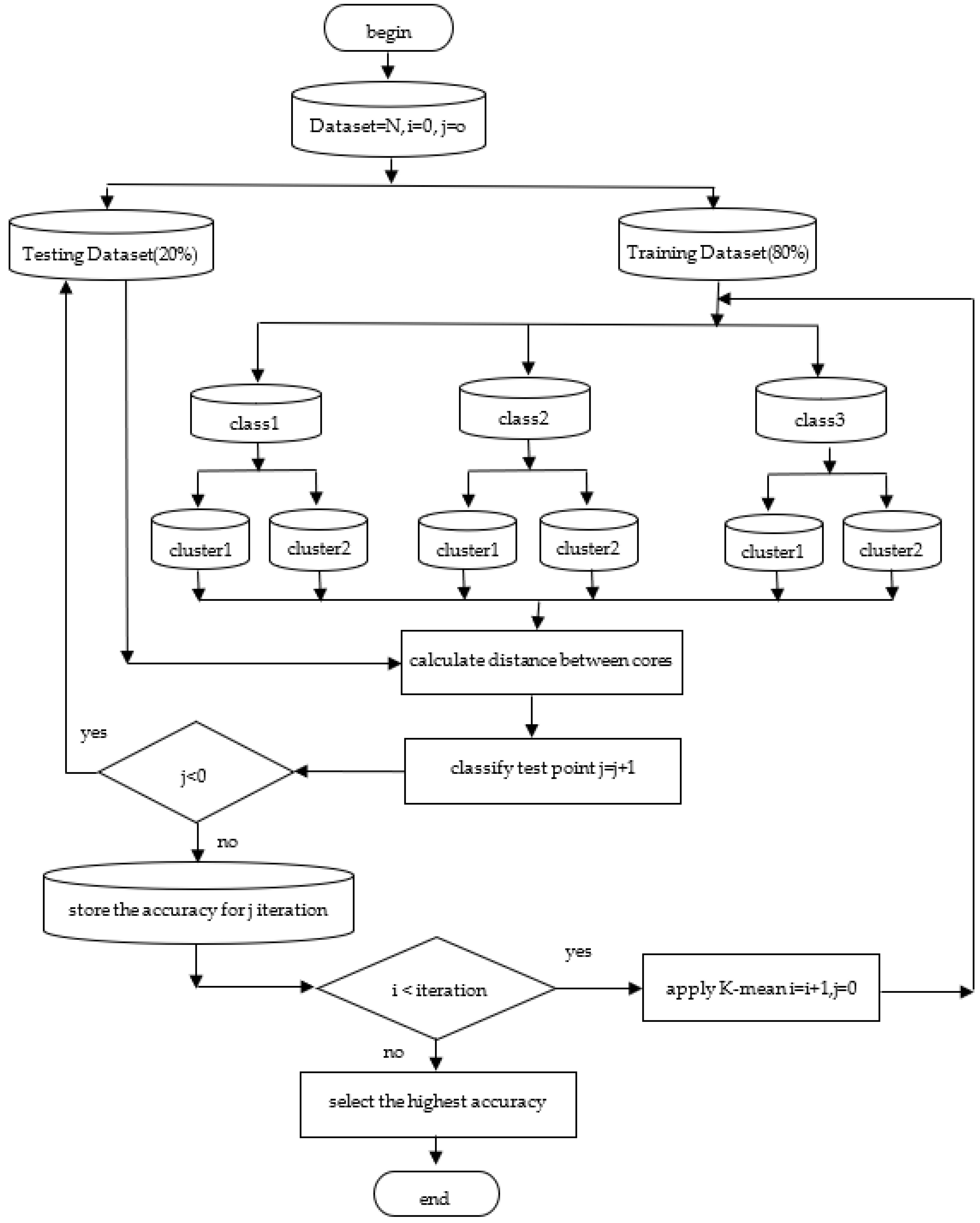

Many challenges appear when real data are used in classification problems, such as nonlinear classification, overlap among classes, and the presence of outliers; a clustering algorithm is therefore used to make the CCA algorithm more flexible in dealing with such cases. This is an example of one algorithm being used within another to solve a particular problem or to increase its efficiency: clustering plays a role in CCA analogous to that of hidden layers in NNs. In a further analogy to NNs, partition clustering gives different results for the same input dataset. K-means is applied for a specific number of iterations, and different clusters are obtained each time because the result depends on the random initial selection of the centers, which leads to different Cores within these clusters in every iteration. The model is thereby trained to attain higher accuracy. Both techniques are used in dealing with nonlinear classification and other problems.

The training dataset, representing 80% of each dataset, is separated according to this study’s scenario into three classes with two clusters in each; for instance, Cor_c1 (k1) is the Core of cluster 1 within class 1, Cor_c1 (k2) is the Core of cluster 2 within class 1, and so on.

Figure 3 shows a flowchart summarizing the general idea of the Core Classifier Algorithm (CCA) for a scenario of three classes divided into two clusters each; n represents the number of points in the testing dataset, with j as its counter, and I is the counter of the training iterations (ite). Within every iteration, the accuracy and the Cores are stored. Finally, the Cores of the clusters that yield the highest accuracy are chosen as the Cores of the final model.

The strength and spread of any classification algorithm depend not on its accuracy in ideal cases such as linear or discriminative classification, but on the extent to which the algorithm adapts to non-ideal classification cases. One of the most common unsupervised learning algorithms was therefore incorporated into the proposed algorithm as a single entity, leading to higher classification accuracy. Additionally, the approach relies on a feature of the K-means algorithm, namely that it obtains different, non-fixed results with every run. In this way, the concept of training in NNs is simulated; however, the difference is that instead of updating weights, as occurs in NNs, the Cores within clusters are updated, and these Cores differ in how strongly they represent their clusters.
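
The iterative hybrid procedure can be sketched as below. This is an illustrative reading of the description, not the implementation used in the experiments: it assumes Euclidean distance, a plain Lloyd-style K-means with random initial centers, and a held-out labelled set for scoring each iteration. All function names, the parameter values, and the toy data (class 1 split into two groups on either side of class 2) are hypothetical.

```python
import math
import random

def find_core(points):
    """Point with the smallest summed distance to the rest of its cluster."""
    sums = [sum(math.dist(p, q) for q in points) for p in points]
    return points[sums.index(min(sums))]

def kmeans(points, k, rng, steps=10):
    """Plain K-means with random initial centers; returns non-empty clusters."""
    centers = rng.sample(points, k)
    clusters = []
    for _ in range(steps):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centers[c]))
            clusters[nearest].append(p)
        # Move each center to its cluster mean; an empty cluster keeps its center.
        centers = [
            tuple(sum(axis) / len(cl) for axis in zip(*cl)) if cl else centers[c]
            for c, cl in enumerate(clusters)
        ]
    return [cl for cl in clusters if cl]

def predict(x, cores):
    """cores is a list of (core_point, class_label); the nearest core decides."""
    return min(cores, key=lambda item: math.dist(x, item[0]))[1]

def train_cca(train, held_out, k, iterations, seed=0):
    """Re-cluster `iterations` times and keep the cores with the best accuracy."""
    rng = random.Random(seed)
    best_acc, best_cores = -1.0, None
    for _ in range(iterations):
        # One core per cluster, labelled with the class the cluster came from.
        cores = [(find_core(cluster), label)
                 for label, points in train.items()
                 for cluster in kmeans(points, k, rng)]
        acc = sum(predict(x, cores) == y for x, y in held_out) / len(held_out)
        if acc > best_acc:
            best_acc, best_cores = acc, cores
    return best_acc, best_cores

# Toy data: class 1 lies on both sides of class 2, so one core cannot cover it.
train = {
    1: [(0, 0), (0, 1), (1, 0), (1, 1), (10, 0), (10, 1), (11, 0), (11, 1)],
    2: [(5, 0), (5, 1), (6, 0), (6, 1)],
}
held_out = [((0.5, 0.5), 1), ((10.5, 0.5), 1), ((5.5, 0.5), 2)]
best_acc, best_cores = train_cca(train, held_out, k=2, iterations=10)
print(best_acc, best_cores)
```

An unlucky random initialization can split class 1 along the wrong axis and misclassify the point near x = 10; repeating the clustering and keeping the best-scoring Cores is what makes the final model insensitive to such runs.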

The contribution of this paper is as follows:

- (1) Introducing a new classification algorithm.
- (2) Hybridization between classification and clustering to reduce the effect of data distribution problems on the model accuracy.
- (3) Employing and utilizing the insufficiencies of a specific algorithm in improving the performance of another algorithm by simulating an important concept in building effective models with the highest possible accuracy rate.

4. Experimental Analysis and the Results

In this paper, the performance and efficiency of the CCA algorithm are tested using four real datasets from two different domains, Phishing URL and Healthcare, in addition to one synthetic linear classification dataset, as described in Table 2. The results are evaluated and discussed, then compared with other common classification algorithms. Several papers have addressed Web Phishing Attack techniques such as feature selection, data preparation, and other related issues. Therefore, in this study, phishing and healthcare datasets were retrieved from [30,31,32,33].

In Table 3, five different datasets have been examined, labeled as Experiments 1–5. CCA was applied to each of these datasets with four different iterations and four different cluster numbers (K-values). The results show that for dataset 1, which is a linear classification case, the accuracy was the highest and clustering was not necessary for CCA because the Core of each class is fully representative, meaning that it carries all the characteristics of its class. For experiments 2–5, which were not linearly separable, CCA gave different results for different numbers of clusters, all of them with better accuracy than the case of a single cluster.

In Table 4, CCA was examined using the confusion matrix, and the F1-score, precision, and recall were calculated. We examined the four datasets 1–4 [30,31,32,33], and CCA gave different results for different datasets. The number of iterations was set to 2 in all experiments.
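
For reference, the standard definitions of the measures derived from a binary confusion matrix can be written out as a short sketch. The counts used here are hypothetical and are not values from Table 4; the function name `binary_metrics` is likewise an assumption.

```python
def binary_metrics(tp, fp, fn, tn):
    """Precision, recall, F1-score, and accuracy from confusion-matrix counts."""
    precision = tp / (tp + fp)   # share of predicted positives that are correct
    recall = tp / (tp + fn)      # share of actual positives that are found
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return precision, recall, f1, accuracy

# Hypothetical counts for illustration only.
precision, recall, f1, accuracy = binary_metrics(tp=40, fp=10, fn=10, tn=40)
print(precision, recall, f1, accuracy)
```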

4.1. Comparison with Other Classification Algorithms

In this section, the performance of our proposed algorithm is compared with other familiar machine learning algorithms in order to show its characteristics and its points of strength and weakness. Random Forest (RF), SVM, and Decision Trees are compared with the proposed algorithm in terms of model accuracy.

Table 5 shows the experimental results of the three classification algorithms together with CCA. By examining the results, it appears that the accuracy of the models in all non-linear classification experiments (2–5) is lower than in experiment 1, the linear classification case, in which all algorithms achieved perfect accuracy. The descending order of the algorithms in terms of accuracy was RF, SVM, DT, and CCA. The results also show that the proposed algorithm is affected by the same data distribution problems that affect each of the other three algorithms. For all algorithms, accuracy was highest in experiment 1 and lowest in experiment 4.

4.2. Time Complexity

The CCA algorithm is divided into three steps. The first step is to separate the data into their classes; this step's time complexity is $O(n)$. In the second step, K-means is used to divide each class into clusters based on similarity, which has a time complexity of $O(n^2)$. The final step is to find the Cores across all clusters, which also requires $O(n^2)$. Therefore, the overall time complexity is $O(n) + O(n^2) + O(n^2) = O(n^2)$. This time complexity was calculated for the general form of the algorithm, assuming a fixed number of clusters k and excluding the number of iterations of the clustering used to update the Cores.
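
As a hedged check on the quadratic term of the Core search, the cost can be bounded directly. Suppose the $n$ points are split into $m$ groups (classes or clusters) of sizes $n_1, \dots, n_m$; the symbols $m$ and $n_g$ are notation introduced here for illustration. The distance matrix of group $g$ has $n_g^2$ entries, so the total number of distance evaluations satisfies

$$\sum_{g=1}^{m} n_g^2 \;\le\; \left(\sum_{g=1}^{m} n_g\right)^2 = n^2,$$

with equality only when a single group holds all the points. This is consistent with the $O(n^2)$ bound for the final step, and it suggests that the step becomes cheaper in practice as the data are divided into more, smaller clusters.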

5. Conclusions and Future Work

A new classification algorithm was introduced in this study, which was hybridized with clustering to overcome the data distribution problems of classification. By utilizing the special characteristic of the K-means algorithm, which partitions the data differently with every run, our proposed CCA achieved higher accuracy and has the potential to serve as a stepping stone for better and more efficient computational approaches to deal with datasets of mixed distributions. Four experiments were carried out on datasets from two domains, Phishing URL and Healthcare, in addition to one synthetic dataset for the linear classification case. The results show that the use of clustering increased the accuracy of the model, as each dataset has an appropriate number of clusters that achieves better results, and that the probability of obtaining a higher accuracy grows with the number of iterations of K-means. All algorithms showed perfect results in the case of linear classification represented by the first experiment, while their accuracies differed in the other four experiments with mixed distributions. It can be said that the proposed algorithm is an optimized version of the KNN algorithm because it eliminates the high variability of results due to changing values of the k parameter.

In addition, the datasets may contain missing values, a problem for which many methods exist, such as data mining techniques [34,35,36]. Another direction is the use of other statistical measures such as One-Way ANOVA instead of Euclidean distance to express the similarity between the test points and the Core of each cluster. Furthermore, the algorithm is limited when there is large overlap in the dataset, and the dataset should be amenable to clustering, as shown for the CDC algorithm [20]. For the future, we propose that an approach similar to the GCC [21] algorithm, taking an active set of points instead of a single Core, will lead to a significant increase in classification accuracy. Using other clustering algorithms such as DBSCAN, K-SCC, or CDC, which can identify the appropriate number of clusters [37] based on the nature of the dataset distribution, should lead to higher model accuracy as well.