Development of a Mobile Application for Smart Clinical Trial Subject Data Collection and Management

Abstract

1. Introduction

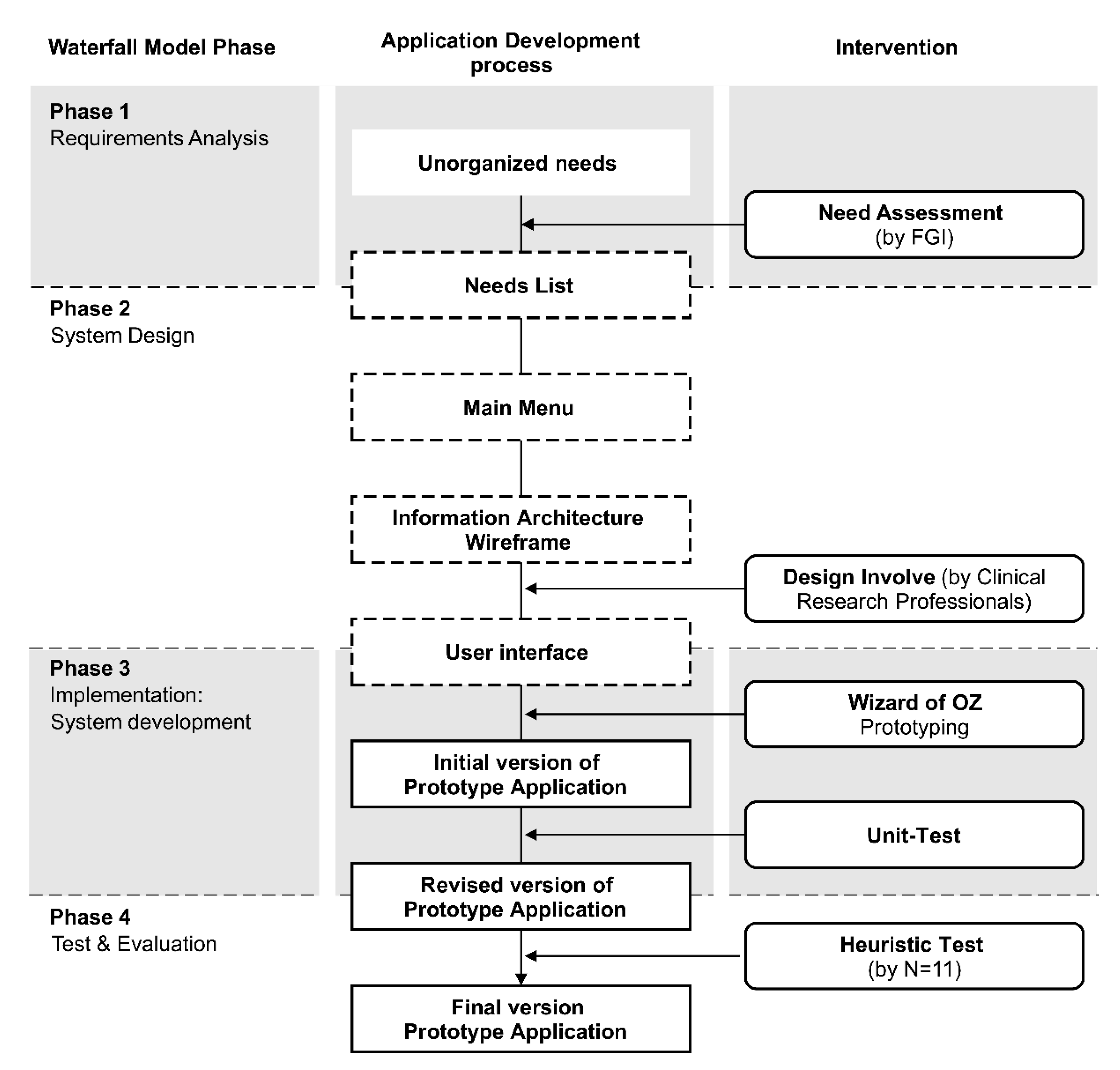

2. Materials and Methods

3. Results

3.1. Phase 1. Requirements Analysis

3.2. Phase 2. System Design

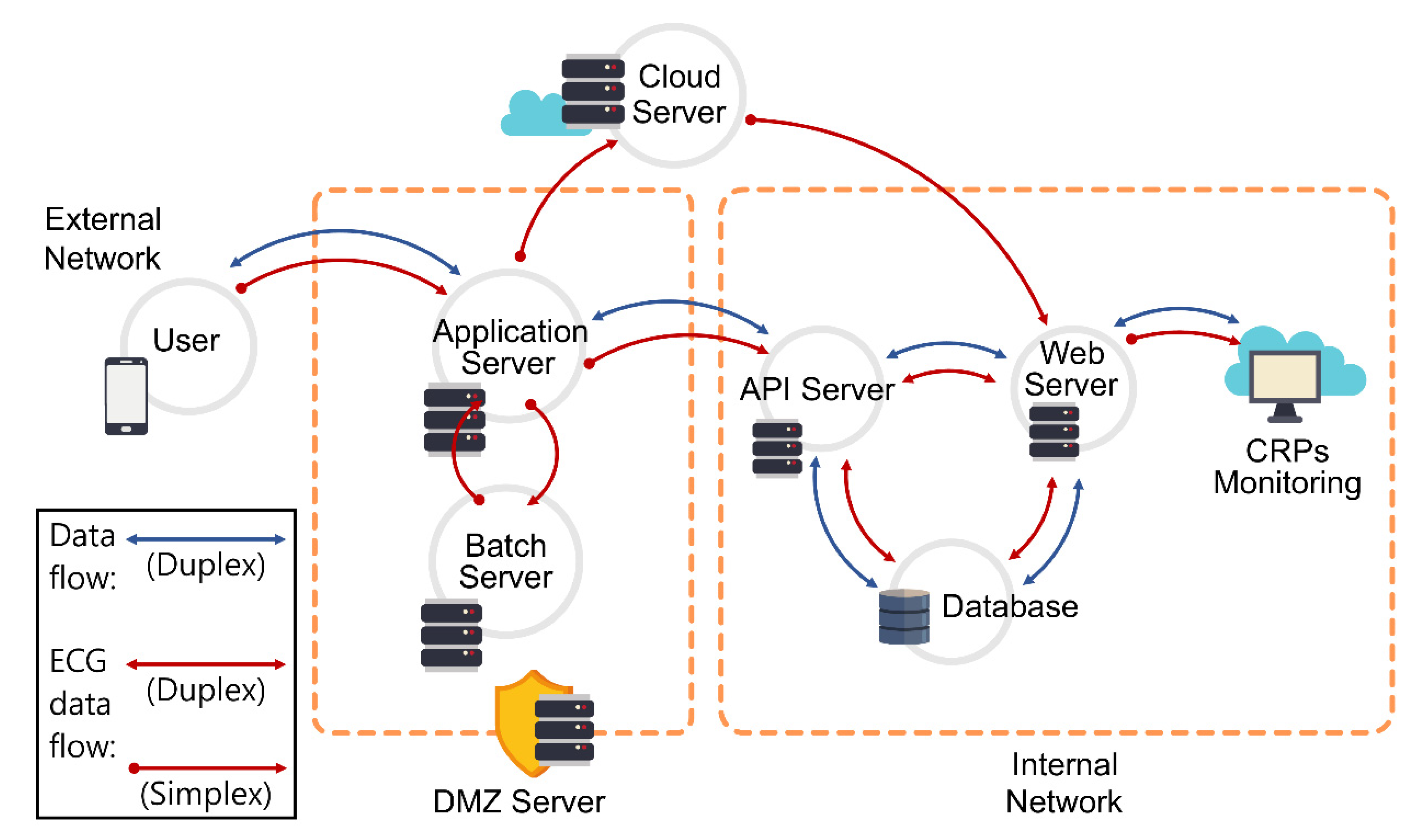

3.3. Phase 3. Implementation: System Development

3.4. Phase 4. Testing and Evaluation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| No. | Needs | Details |
|---|---|---|
| 1 | Checking for side effects and adverse reactions | A function for recording side effects and adverse reactions is required. (This information is currently recorded in the comment section because there is no separate section for it.) |
| 2 | Concomitant drug check | A function for photographing concomitant drugs and uploading their information is required to check relationships between drugs. |
| 3 | Remote feedback function | In addition to the traditional method of calling or texting the Clinical Research Coordinator, a function for giving the patient feedback based on the data recorded in the application is required (e.g., by analyzing a chat message). |
| 4 | Data sharing with the hospital system | A function for sharing data from the hospital system, such as laboratory test results, doctors’ feedback, and a brief patient history, is required. |
| 5 | Application menu | Separate menus for checking medication, diet, concomitant medications, and adverse reactions are required. |
| 6 | Standard form use | The application’s forms should be based on the standard forms currently used in the clinical trial center. |
| 7 | Design requirements | The design should target users under 60 years of age. |
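Need 1 notes that adverse reactions are currently written into a free-text comment field. A minimal Python sketch of what a dedicated, structured record could look like follows; the class, field, and function names are hypothetical and only loosely mirror the items listed for the "Adverse reactions" menu (type, location, period, action, photos of the symptoms, and follow-up of persistent reactions). Representing the period as an onset date plus an optional resolution date lets persistent reactions be found with a simple filter instead of by reading comments.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class AdverseReactionRecord:
    """Hypothetical structured record for need 1; field names are illustrative."""
    subject_id: str
    reaction_type: str                    # e.g. "rash" or "nausea"
    onset: date
    action_taken: str                     # e.g. "none" or "dose held"
    body_location: Optional[str] = None
    resolved: Optional[date] = None       # None while the reaction persists
    photo_paths: List[str] = field(default_factory=list)
    suspected_drug: Optional[str] = None  # link back to the medication log

    def __post_init__(self) -> None:
        # Reject a period that ends before it starts.
        if self.resolved is not None and self.resolved < self.onset:
            raise ValueError("resolution date precedes onset date")

    @property
    def is_persistent(self) -> bool:
        """An unresolved reaction still needs follow-up."""
        return self.resolved is None

    def duration_days(self, today: date) -> int:
        """Days from onset to resolution, or to `today` if still ongoing."""
        end = self.resolved if self.resolved is not None else today
        return (end - self.onset).days


def persistent_reactions(records: List[AdverseReactionRecord]) -> List[AdverseReactionRecord]:
    """Return the reactions a coordinator would still need to follow up."""
    return [r for r in records if r.is_persistent]
```

For example, a rash resolved after three days and an ongoing headache would yield only the headache from `persistent_reactions`, which is the list a coordinator would review.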

| No. | Menu | Function Description |
|---|---|---|
| 1 | Self-report | Patient-generated health data (PGHD), including the user’s weight, fasting blood sugar level, blood pressure, heart rate, body temperature, and oxygen saturation, are entered and checked. |
| 2 | Medication + nutrition | Medication: A medication log records the name and time of each medication or treatment; when a participant shows an adverse reaction, the relevant data are added to the adverse reaction menu. Diet: A meal diary records a photo of each meal, its contents, and the time of consumption. |
| 3 | Concomitant drug | For participants taking over-the-counter drugs or health supplements other than the test drug, the time and amount of each drug are entered. |
| 4 | Adverse reactions | When an adverse reaction occurs, its type, location, period, action taken, photos of the symptoms, etc., are recorded, and the management of persistent adverse reactions is documented. |
| 5 | Symptom record | Symptom record: Symptoms corresponding to COVID-19, such as cough, stuffy nose, sore throat, fatigue, headache, fever, loss of smell, and loss of taste, are reported. Health record: Blood pressure and ECG data are entered from external (wearable) devices. Blood pressure: data from any device linked to Samsung Health can be entered. ECG: data are entered in real time through the VP-100 (a device certified by the Korea Food and Drug Administration). |
| 6 | Daily to-do | The medication, nutrition, and health measurement items that the user must enter each day are presented. An item’s status changes from to-do to done when the user completes that task. |
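The "Daily to-do" behavior described in the last row, where each required entry starts as to-do and becomes done once the participant completes it, amounts to a small two-state model. The Python sketch below shows one way to express it, assuming that each required entry is identified by a key and that completion simply means a matching record was entered that day; the keys, labels, and function names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Set


class Status(Enum):
    TODO = "to-do"
    DONE = "done"


@dataclass
class TodoItem:
    key: str                      # hypothetical record-type identifier, e.g. "bp"
    label: str                    # text shown to the participant
    status: Status = Status.TODO


def build_daily_todos(required: Dict[str, str]) -> List[TodoItem]:
    """Create one to-do item per record the participant must enter today."""
    return [TodoItem(key, label) for key, label in required.items()]


def mark_completed(todos: List[TodoItem], entered: Set[str]) -> None:
    """Switch an item from to-do to done once its record has been entered."""
    for item in todos:
        if item.key in entered:
            item.status = Status.DONE


def remaining(todos: List[TodoItem]) -> List[str]:
    """Labels of items that are still outstanding, in their original order."""
    return [t.label for t in todos if t.status is Status.TODO]
```

For instance, building items for medication, lunch, and blood pressure and then entering only the medication record leaves the lunch and blood-pressure items outstanding. Storing the status on each item is one design choice; deriving it from the day's records on demand would guarantee that the list and the records never disagree.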

| Issue Identified in Heuristic Evaluation | N (%) | Mean Score | Heuristic |
|---|---|---|---|
| Program errors that make it difficult to proceed with the scenario | 5 (45%) | 2.20 | SMART 3 |
| Errors related to the “symptom input” page configuration and screen information | 5 (45%) | 1.80 | SMART 8 |
| Inconvenience caused by the graphical method for entering time | 5 (45%) | 1.40 | SMART 11 |
| Errors related to the “Health Report” page configuration and screen information | 5 (45%) | 1.20 | SMART 7 |
| Inconvenience caused by hidden or difficult-to-operate input buttons | 3 (27%) | 3.00 | SMART 6 |
| Errors caused by unclear or missing pop-ups | 3 (27%) | 2.00 | SMART 3 |
| Errors caused by missing notifications about the ECG device connection | 3 (27%) | 1.33 | SMART 1 |
| Discomfort caused by inconsistent screens | 3 (27%) | 1.33 | SMART 2 |
| Errors related to the “Combination Drugs” page configuration and screen information | 3 (27%) | 1.33 | SMART 8 |
| Confusing screen layout that allowed users to enter the heart rate in the blood pressure input window | 3 (27%) | 1.00 | SMART 5 |
| Inconvenience for elderly users or people with reduced vision due to the small font size | 2 (18%) | 2.00 | SMART 10 |
| Discomfort caused by awkward or difficult-to-understand expressions | 2 (18%) | 1.50 | SMART 2 |
| Inconvenience caused by menus and tabs whose layout cannot be seen at a glance | 2 (18%) | 1.50 | SMART 6 |
| Inconvenience caused by the on-screen keyboard covering the screen while typing | 2 (18%) | 1.50 | SMART 10 |
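The N (%) column appears to be computed against 11 evaluators: 5/11 ≈ 45%, 3/11 ≈ 27%, and 2/11 ≈ 18% all match the table, although the evaluator count is an inference here rather than a figure stated in this section. Likewise, each mean score multiplied by its N gives a whole number (to rounding), which is consistent with the mean being taken only over the evaluators who reported that issue. The short Python check below, a sketch under those two assumptions, tests both observations against the table's own values; `TOTAL_EVALUATORS` and the helper names are hypothetical.

```python
# Assumption: 11 evaluators, inferred from the percentages (not stated in this section).
TOTAL_EVALUATORS = 11

# (N, reported %, reported mean score) for each row of the heuristic evaluation table.
ROWS = [
    (5, 45, 2.20), (5, 45, 1.80), (5, 45, 1.40), (5, 45, 1.20),
    (3, 27, 3.00), (3, 27, 2.00), (3, 27, 1.33), (3, 27, 1.33),
    (3, 27, 1.33), (3, 27, 1.00),
    (2, 18, 2.00), (2, 18, 1.50), (2, 18, 1.50), (2, 18, 1.50),
]


def percent(n: int, total: int = TOTAL_EVALUATORS) -> int:
    """Share of evaluators reporting an issue, rounded to a whole percent."""
    return round(100 * n / total)


def implied_rating_sum(n: int, mean: float) -> float:
    """Total rating points implied if the mean is taken over the n reporters."""
    return n * mean


def row_is_consistent(n: int, pct: int, mean: float) -> bool:
    """True if the percentage matches and the implied total is a whole number.

    Means are printed to two decimals, so allow up to n * 0.005 of rounding error.
    """
    total = implied_rating_sum(n, mean)
    return percent(n) == pct and abs(total - round(total)) <= n * 0.005 + 1e-9


all_consistent = all(row_is_consistent(*row) for row in ROWS)
```

Both checks pass for every row, which supports, but does not prove, the reading that 11 evaluators took part and that each mean is averaged over the reporters of that issue.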

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ryu, H.; Piao, M.; Kim, H.; Yang, W.; Kim, K.H. Development of a Mobile Application for Smart Clinical Trial Subject Data Collection and Management. Appl. Sci. 2022, 12, 3343. https://doi.org/10.3390/app12073343


Ryu, Hyeongju, Meihua Piao, Heejin Kim, Wooseok Yang, and Kyung Hwan Kim. 2022. "Development of a Mobile Application for Smart Clinical Trial Subject Data Collection and Management." Applied Sciences 12, no. 7: 3343. https://doi.org/10.3390/app12073343
