Creating a Remote Choir Performance Recording Based on an Ambisonic Approach

Abstract

Featured Application

1. Introduction

2. Performing Remote Concerts

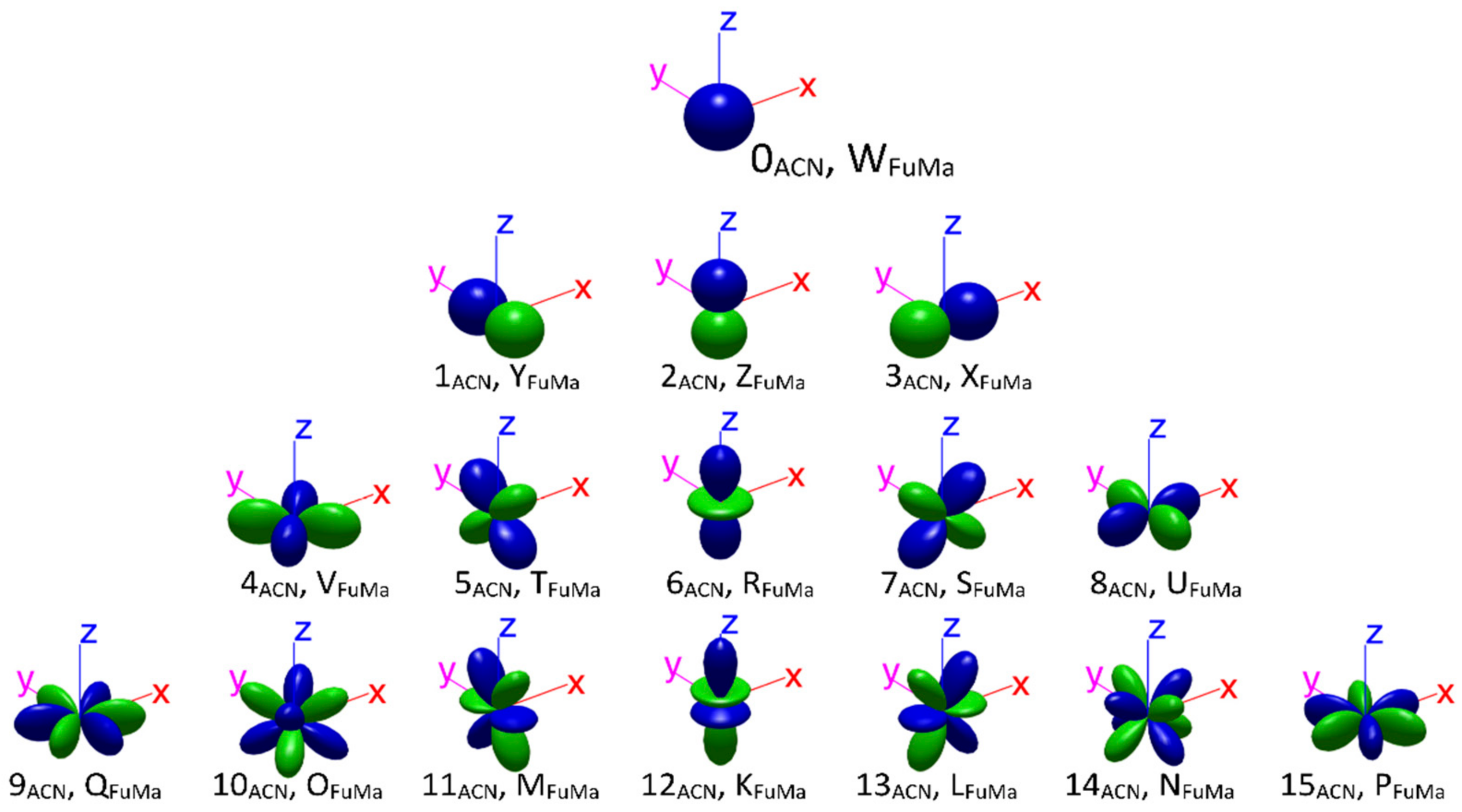

3. Basics of Ambisonics

The most common normalization conventions for Ambisonic components are:

- MaxN: normalizes each component so that it never exceeds a gain of 1.0 for a panned mono source; used in the FuMa (Furse-Malham) format;

- N3D: full 3D normalization, which yields an orthonormal basis for 3D decomposition and gives equal power to the encoded components in a perfectly diffuse 3D field. N3D components equal SN3D components multiplied by √(2n + 1), where n is the order;

- SN3D: Schmidt semi-normalized 3D, usually combined with ACN channel ordering. Unlike N3D, no component ever exceeds the peak value of the 0th-order component for a single point source. This scheme has been adopted in the AmbiX format and is widely used.
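To make the difference between these conventions concrete, the minimal Python sketch below encodes one mono sample into first-order SN3D (AmbiX, ACN order W, Y, Z, X), rescales it to N3D with the per-order factor √(2n + 1), and converts it to first-order FuMa. It assumes azimuth measured counter-clockwise from the front and elevation measured upward; the function names are illustrative only and are not taken from any particular plug-in or library.

```python
import math


def order_of_acn(acn: int) -> int:
    """Return the Ambisonic order n of an ACN channel index (ACN = n*(n + 1) + m)."""
    return math.isqrt(acn)


def sn3d_to_n3d(channels: list[float]) -> list[float]:
    """Rescale ACN-ordered SN3D coefficients to N3D by multiplying order-n channels by sqrt(2n + 1)."""
    return [c * math.sqrt(2 * order_of_acn(k) + 1) for k, c in enumerate(channels)]


def encode_first_order_sn3d(sample: float, azimuth_deg: float, elevation_deg: float) -> list[float]:
    """Encode one mono sample as first-order AmbiX (ACN order W, Y, Z, X; SN3D).

    Assumed convention: azimuth counter-clockwise from the front, elevation upward.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                # order 0: omnidirectional
    y = sample * math.sin(az) * math.cos(el)  # order 1: left-right figure-of-eight
    z = sample * math.sin(el)                 # order 1: up-down figure-of-eight
    x = sample * math.cos(az) * math.cos(el)  # order 1: front-back figure-of-eight
    return [w, y, z, x]


def ambix_to_fuma_first_order(ambix: list[float]) -> list[float]:
    """Convert first-order AmbiX (W, Y, Z, X; SN3D) to FuMa (W, X, Y, Z).

    FuMa attenuates W by 1/sqrt(2) (about -3 dB); at first order its X, Y, Z
    gains coincide with SN3D, so only W is rescaled and the channels reordered.
    """
    w, y, z, x = ambix
    return [w / math.sqrt(2), x, y, z]


if __name__ == "__main__":
    # Source hard left (azimuth 90 degrees) on the horizontal plane.
    sn3d = encode_first_order_sn3d(1.0, 90.0, 0.0)
    n3d = sn3d_to_n3d(sn3d)
    # SN3D: Y reaches but does not exceed W; N3D scales Y by sqrt(3), so it exceeds W.
    print("SN3D:", [round(c, 3) for c in sn3d])
    print("N3D: ", [round(c, 3) for c in n3d])
    print("FuMa:", [round(c, 3) for c in ambix_to_fuma_first_order(sn3d)])
```

For a source at 90° azimuth, the SN3D Y component reaches the value of W but never exceeds it, whereas the N3D version of the same signal is √3 times larger than W, which illustrates why SN3D is preferred when channel headroom matters.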

4. Realization of Remote Music Recording

4.1. Preparing a Remote Audio-Visual Recording

4.2. Technical Considerations

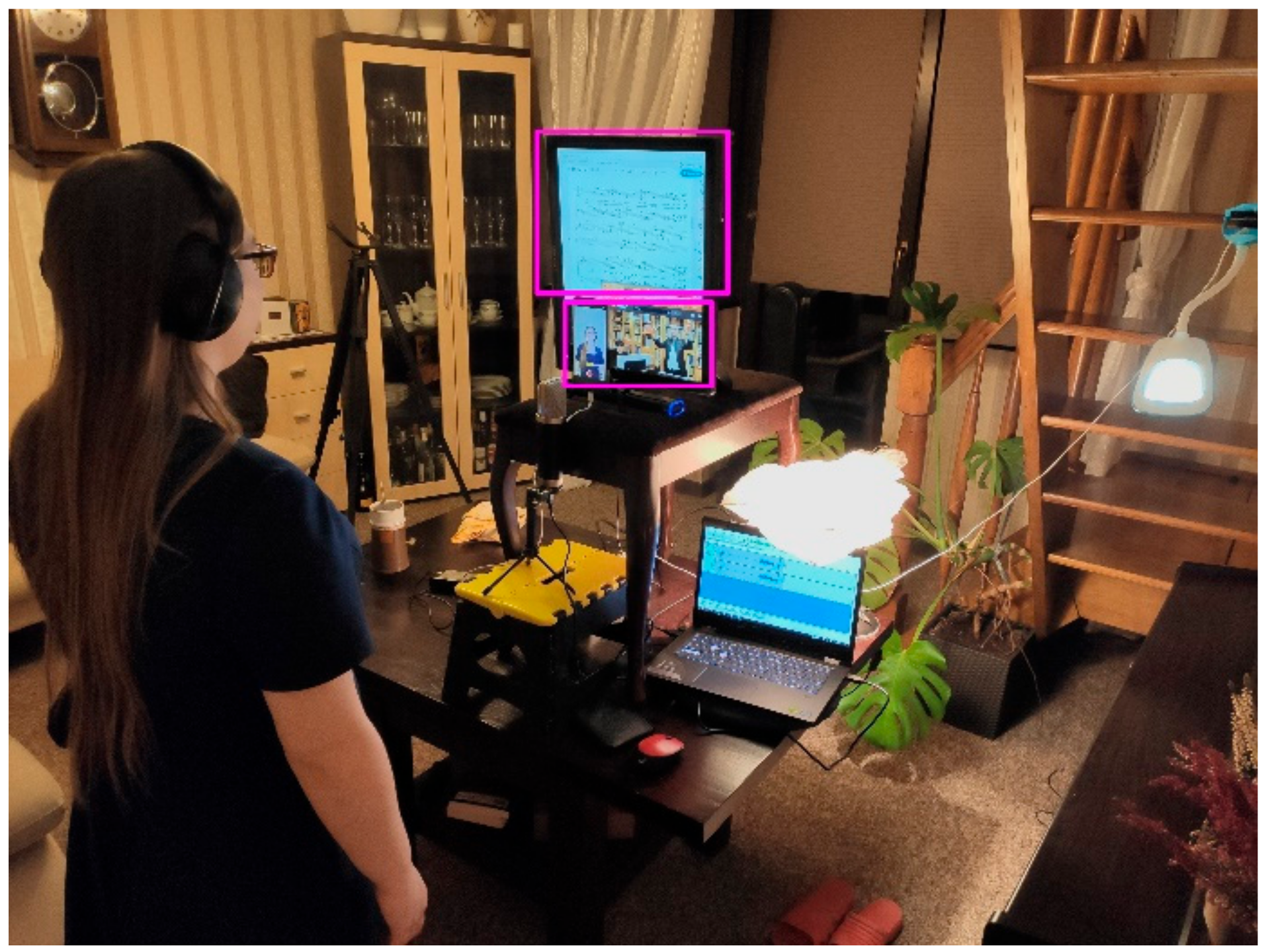

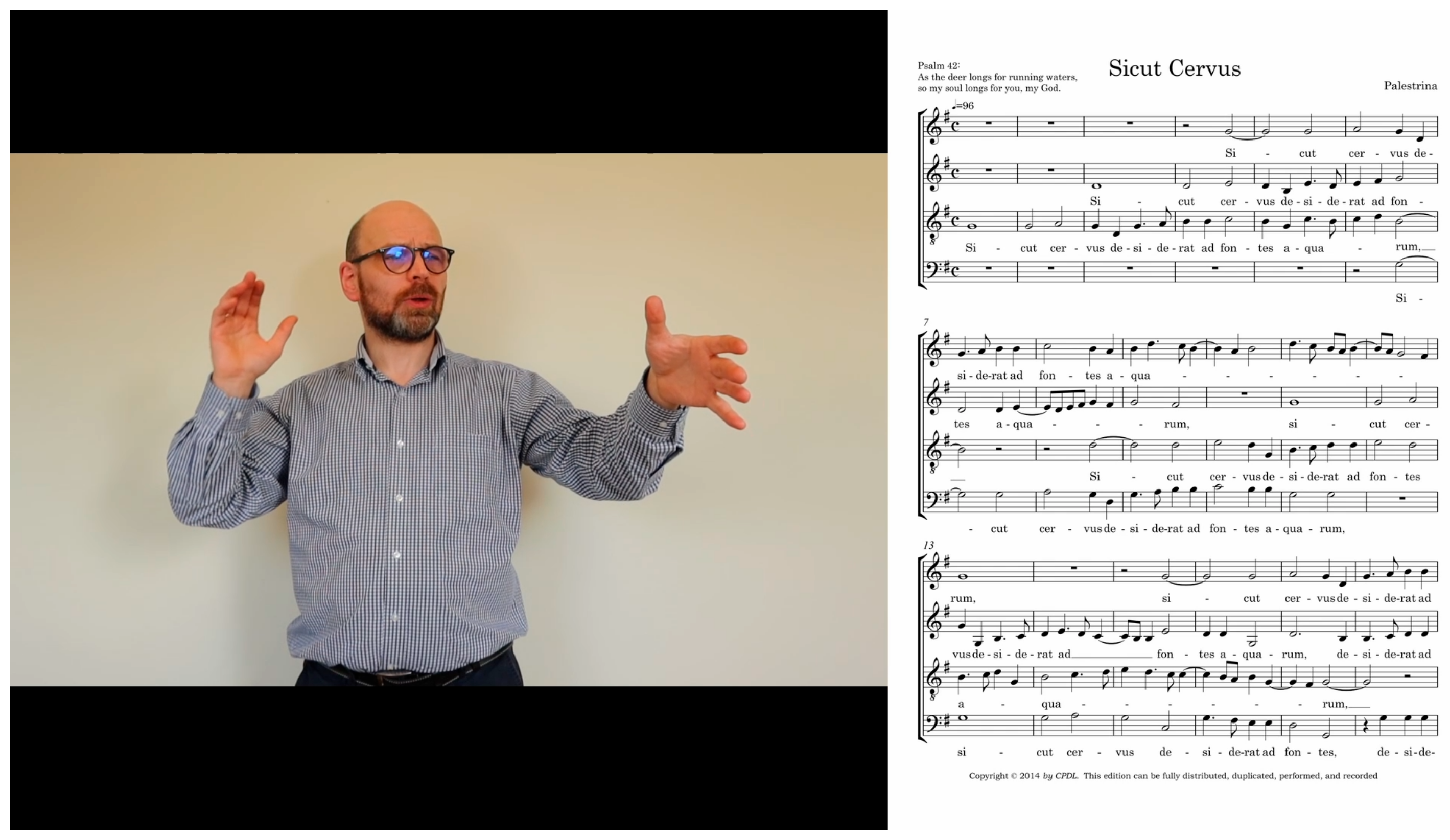

4.3. Recording of Musicians

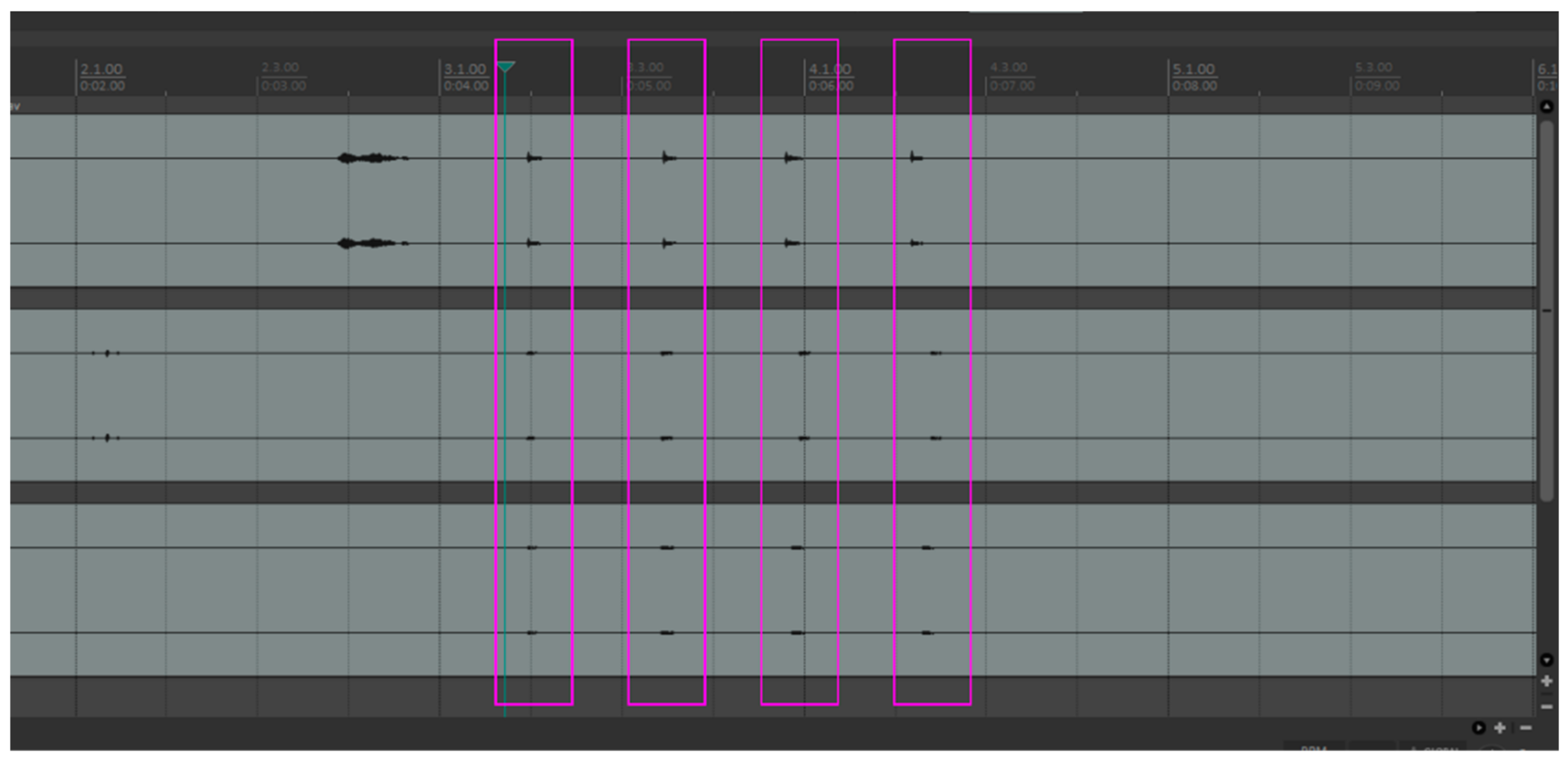

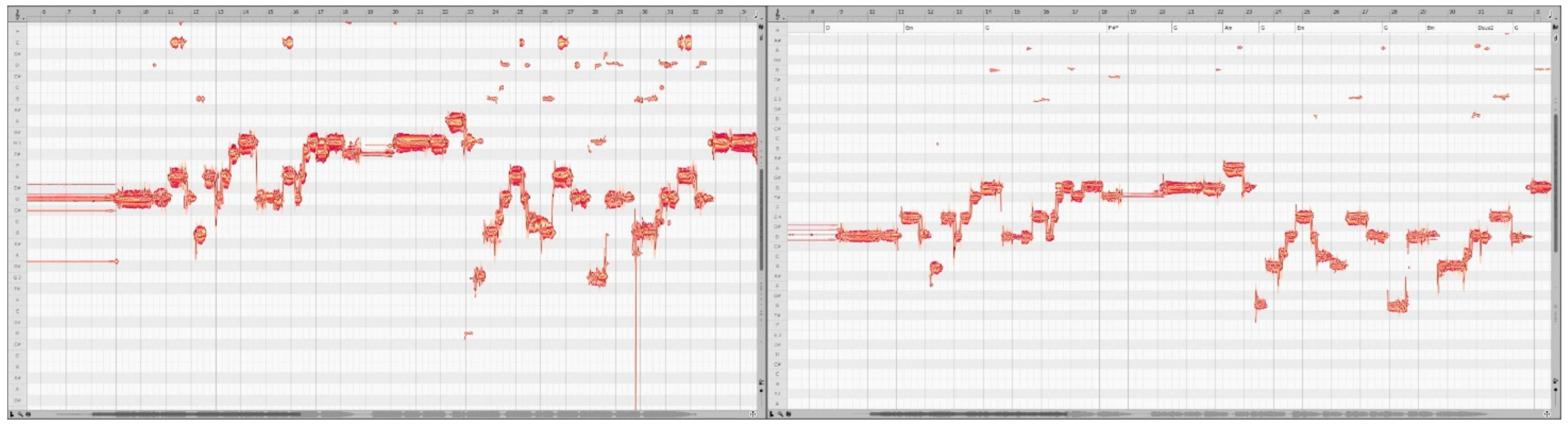

4.4. Postproduction of Soundtrack

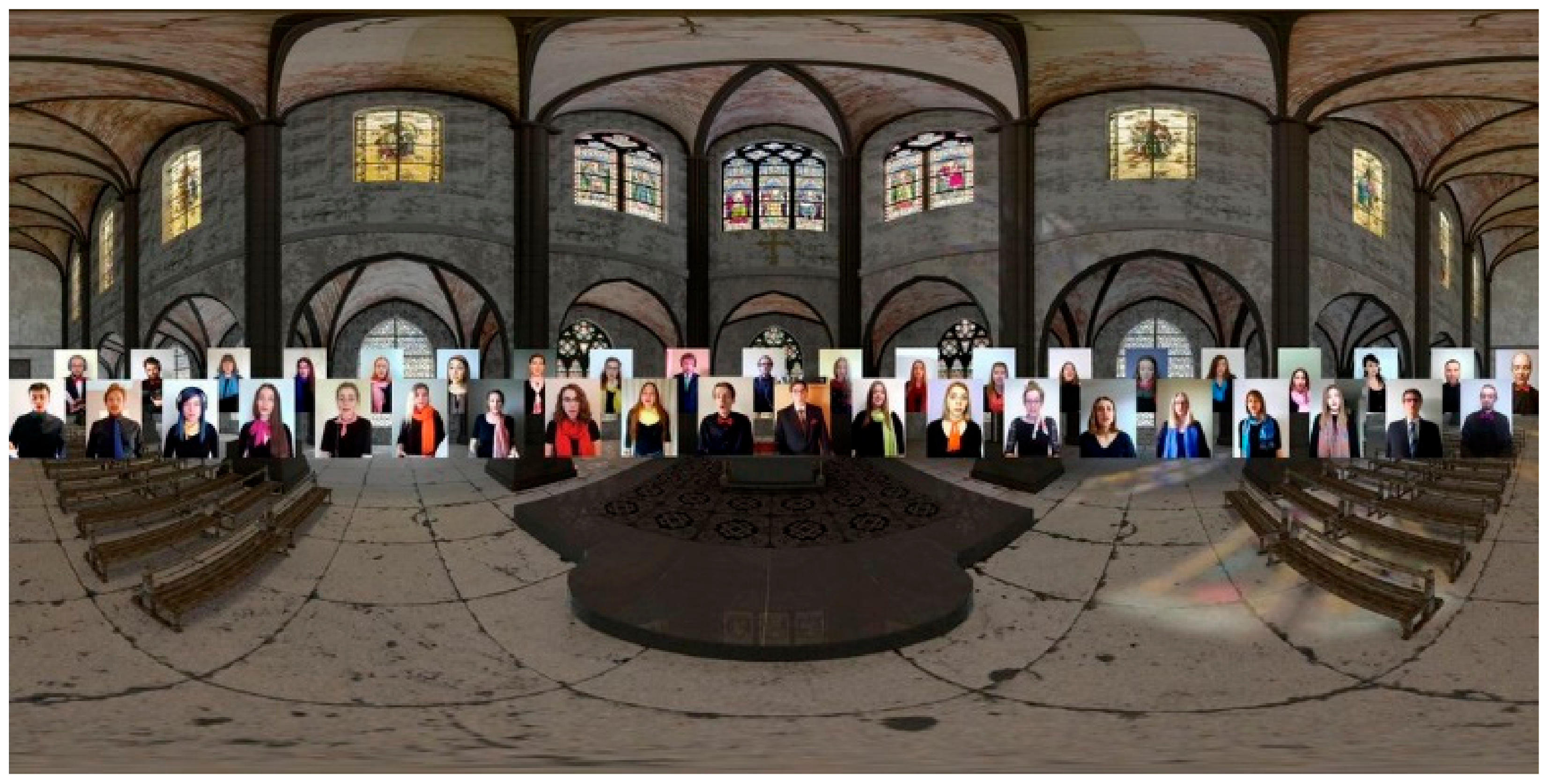

4.5. Postproduction of the Visual Layer

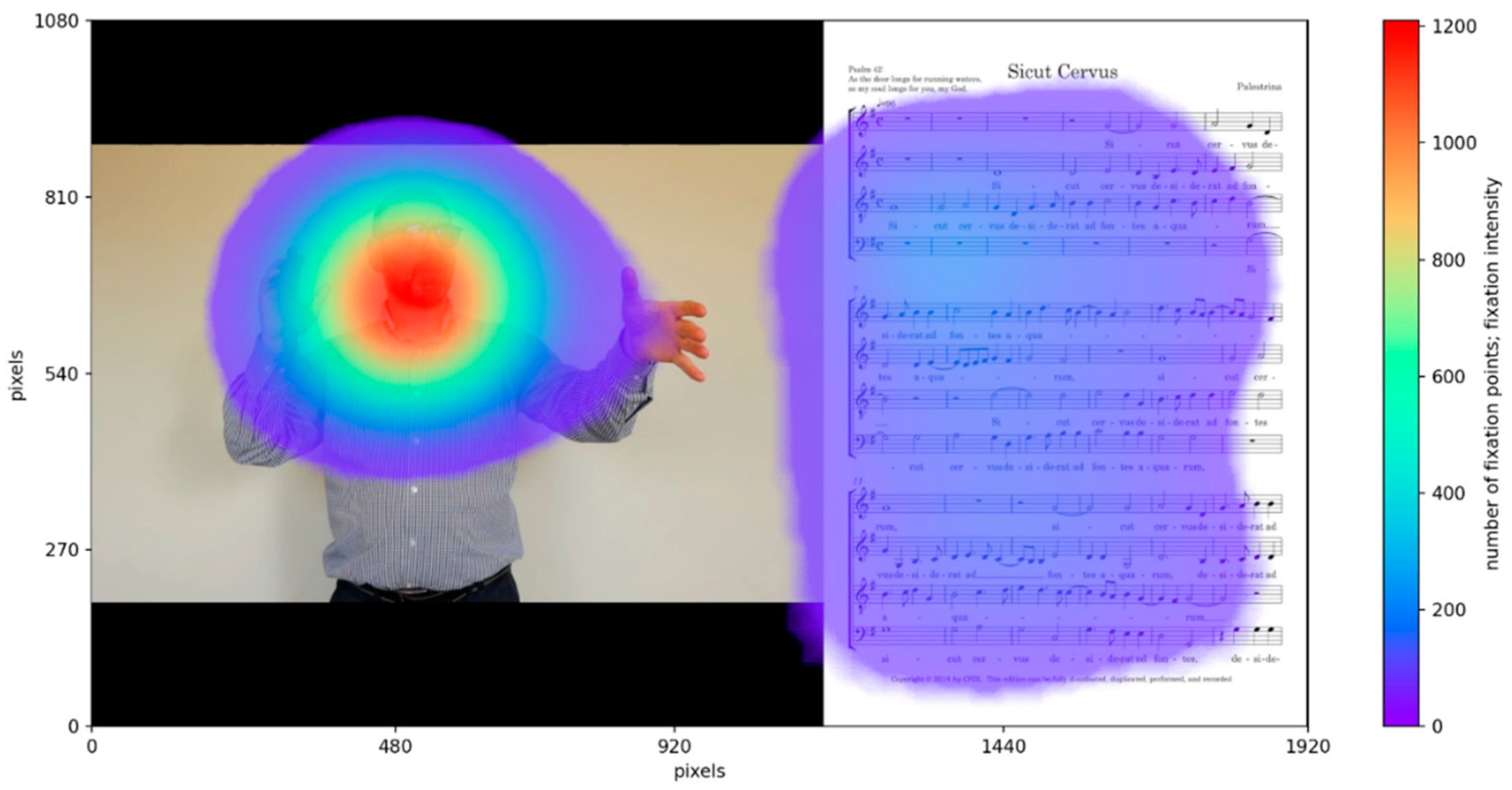

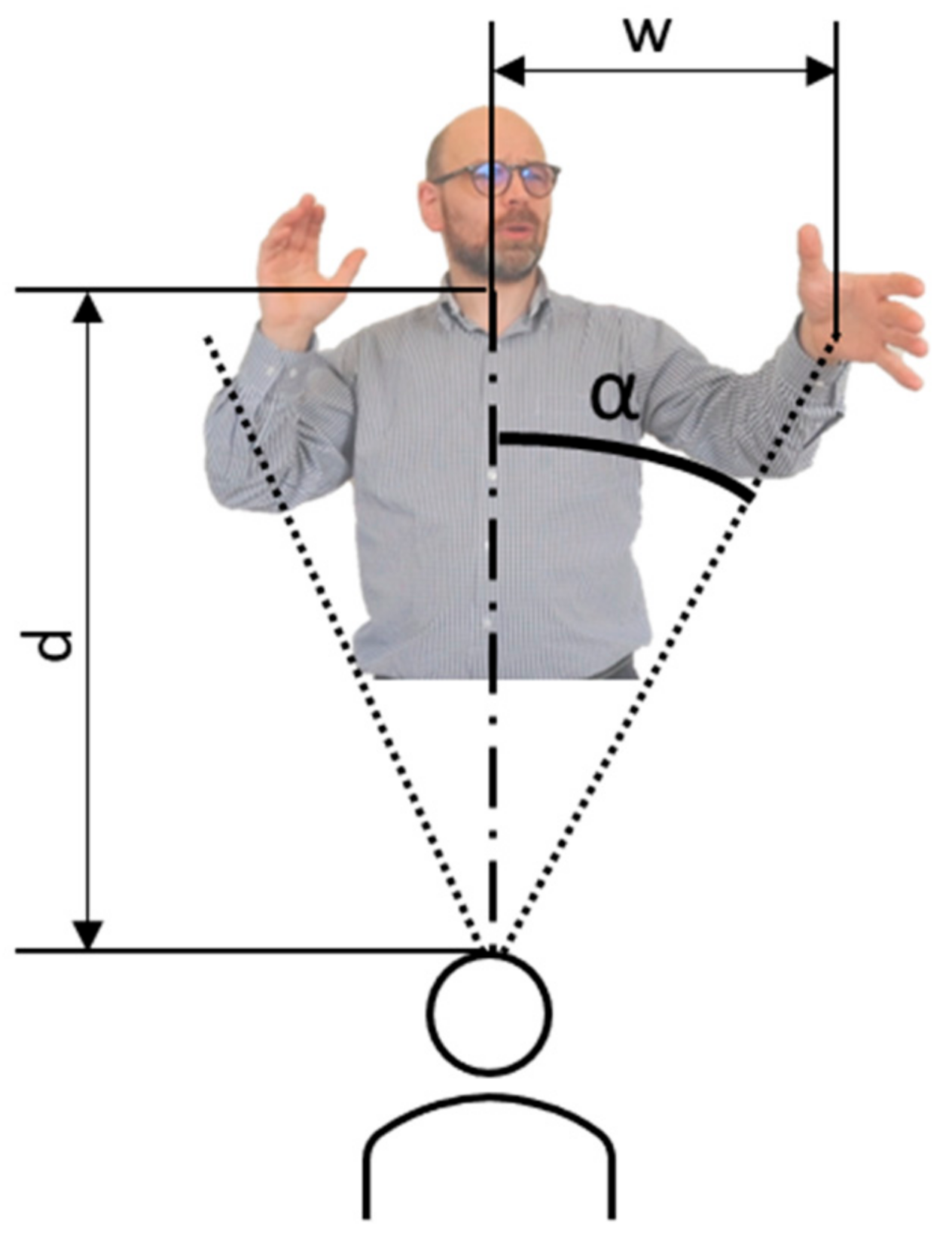

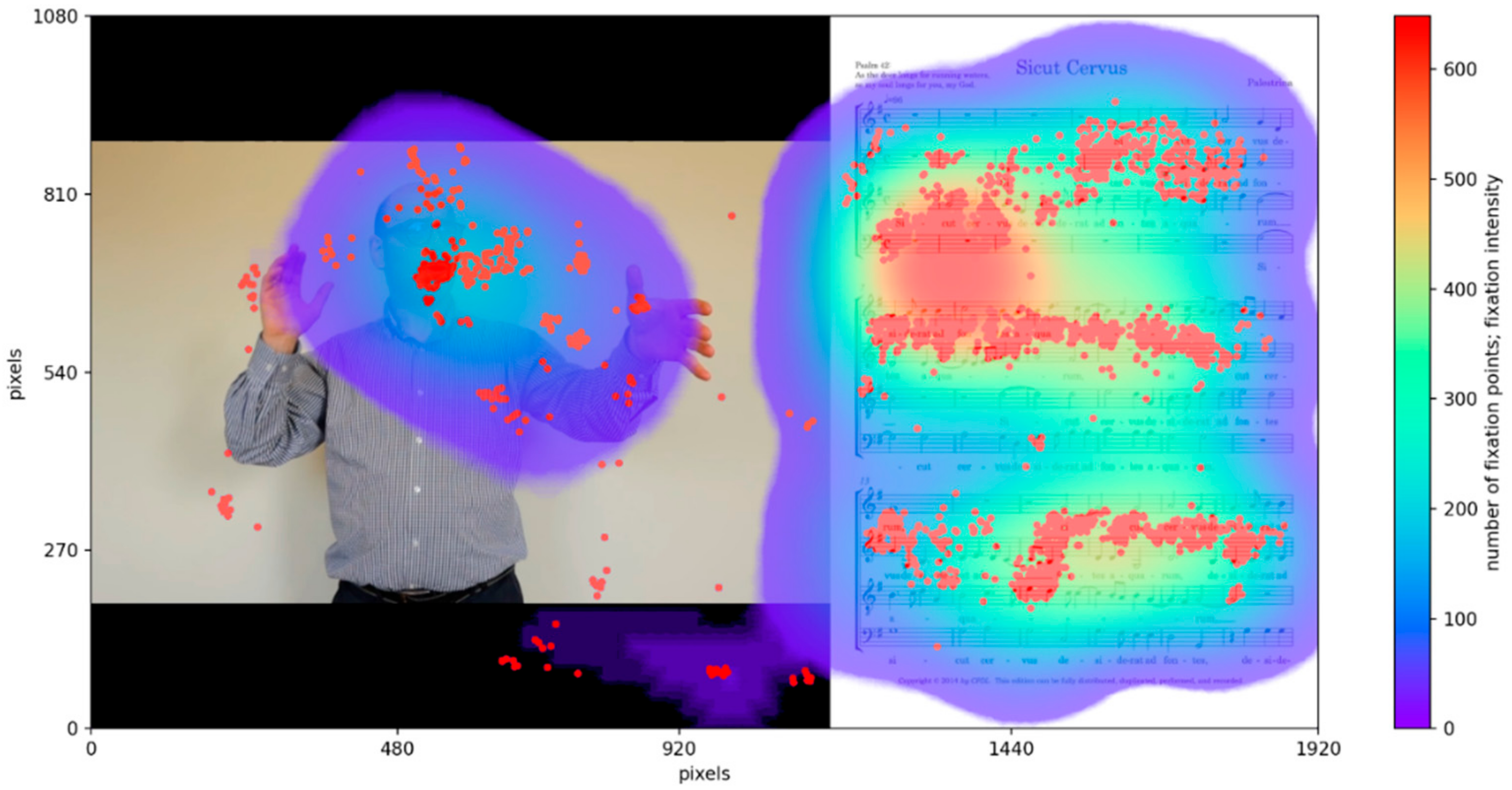

5. Eye-Tracker-Based Examination

6. Summary

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mróz, B.; Odya, P.; Kostek, B. Creating a Remote Choir Performance Recording Based on an Ambisonic Approach. Appl. Sci. 2022, 12, 3316. https://doi.org/10.3390/app12073316
