Abstract

A brain–computer interface (BCI) is a promising technology that analyzes brain signals and controls a robot or computer according to the user’s intention. This paper introduces our studies aimed at overcoming the challenges of using BCIs in daily life. BCIs can be implemented with several methods, such as sensorimotor rhythms (SMR), P300, and steady-state visually evoked potential (SSVEP), and each BCI type has its own pros and cons. However, all these methods offer only a limited set of choices. Controlling a robot arm according to the user’s intention would enable BCI users to perform a wide variety of tasks. We introduce our study predicting three-dimensional arm movement with a non-invasive method, as well as a study that compensates the prediction using an external camera to achieve high accuracy. For daily use, BCI users should be able to turn the BCI system on or off, because prediction errors can occur when the system is not needed. Users should also be able to switch to the most efficient BCI type, and the BCI mode can be changed based on the user state. We describe our study estimating the user state from the brain’s functional connectivity with a convolutional neural network (CNN). In addition, BCI users should be able to perform several tasks simultaneously, such as carrying an object, walking, or talking, so we also describe a multi-functional BCI study that predicts multiple intentions simultaneously with a single classification model. Finally, we offer our view of future directions for BCI research. Although many limitations remain for using BCIs in daily life, we hope that our studies will serve as a foundation for developing a practical BCI system.

1. Introduction

The convergence of brain science and artificial intelligence technology has received significant attention, and a typical example is the brain–computer interface (BCI) [1]. BCI is a technology that measures and analyzes brain signals to predict a user’s intention and controls a robot or computer accordingly [2]. To perform a movement, the brain generates a motor command and transmits it through the spinal cord to the peripheral nerves, which move the body [3]. However, if these pathways are damaged, as in spinal cord injury (SCI) or locked-in syndrome (LIS), the body cannot move at all even when the brain sends a motor command [4]. With BCI technology, even paralyzed patients can express their intentions by typing letters, drink water by controlling a robot, and drive an electric wheelchair to a desired place [2]. BCI technology also benefits healthy people: one can control various electronic devices just by thinking, without moving the body, for example by changing the TV channel, adjusting the air conditioner’s temperature, or adjusting the music volume. BCIs can also be used for games, military applications, and assisting the elderly. Therefore, the social and economic ripple effects of BCI technology are substantial.

There are several methods for implementing BCIs, such as sensorimotor rhythms (SMR), P300, and steady-state visually evoked potential (SSVEP) [2]. SMR-based BCI exploits the functional anatomy of the primary motor cortex, in which different areas are responsible for different parts of the body. The power of the alpha (8–13 Hz) and beta (13–30 Hz) waves over the motor cortex increases or decreases according to the movement intention. For example, when users intend to move their hands or feet, the power of the alpha and beta waves decreases over the corresponding area of the motor cortex [2,5,6,7,8]. Therefore, the BCI system can predict left-hand, right-hand, or foot movement intentions from the power change in the corresponding brain area. SMR-BCIs are usually used to control a mouse cursor or an electric wheelchair: users move the cursor or wheelchair forward, left, or right by imagining foot, left-hand, or right-hand movements, respectively. SMR-BCIs are intuitive, but they require a long training time for movement imagination [7]. P300 is a positive peak in the brain signal over the parietal region approximately 300 ms after a stimulus [5]. When a user attends to one of several stimuli, the P300 is largest for the stimulus the user wants to select. Therefore, a P300-based BCI can identify the user’s target by choosing the stimulus that evokes the largest P300. P300-BCIs are generally used to type letters by looking at characters [9,10,11]. They can be used to select one among many options, although a monitor and visual stimuli are needed. SSVEP-based BCI exploits the fact that when a user looks at one of several visual stimuli flickering at different frequencies, the electroencephalography (EEG) power increases at the frequency of the attended stimulus [2,12]. An SSVEP-BCI user can therefore rapidly select the target among several visual stimuli. Generally, SSVEP shows the highest accuracy among the three BCI methods. However, SSVEP-BCIs also require a monitor and visual stimuli, and the flickering stimuli can make the user’s eyes tired [13].
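The SMR and P300 principles described above can be illustrated with a short NumPy sketch. It assumes a single pre-filtered channel per measure, estimates band power from a periodogram, expresses the SMR change as the classical event-related desynchronization percentage (task power relative to rest, negative for a decrease), and scores the P300 as the mean of the repetition-averaged response in a 250–450 ms window. The window, amplitudes, and all function names are hypothetical choices for illustration, not parameters from the cited studies.

```python
import numpy as np


def band_power(signal, fs, low, high):
    """Periodogram power of a 1-D signal summed over the band [low, high] Hz."""
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    mask = (freqs >= low) & (freqs <= high)
    return float(spectrum[mask].sum())


def erd_percent(task, rest, fs, low=8.0, high=13.0):
    """Band-power change (%) from rest to task; negative values indicate desynchronization (ERD)."""
    p_task = band_power(task, fs, low, high)
    p_rest = band_power(rest, fs, low, high)
    return 100.0 * (p_task - p_rest) / p_rest


def p300_score(epochs, fs, window=(0.25, 0.45)):
    """Mean amplitude of the repetition-averaged response inside a post-stimulus window (s).

    epochs: (n_repetitions, n_samples) for one parietal channel, time-locked to stimulus onset.
    """
    start, stop = int(window[0] * fs), int(window[1] * fs)
    erp = epochs.mean(axis=0)  # averaging suppresses background EEG that is not time-locked
    return float(erp[start:stop].mean())


def select_p300_target(epochs_by_stimulus, fs):
    """Index of the stimulus whose averaged response has the largest P300 score."""
    scores = [p300_score(e, fs) for e in epochs_by_stimulus]
    return int(np.argmax(scores))


# Synthetic SMR demo: 1 s at 250 Hz; the 10 Hz (alpha) amplitude halves during imagery.
fs = 250
t = np.arange(fs) / fs
rest = 2.0 * np.sin(2 * np.pi * 10 * t)
task = 1.0 * np.sin(2 * np.pi * 10 * t)
print(erd_percent(task, rest, fs))  # about -75.0, since power scales with amplitude squared

# Synthetic P300 demo: four stimuli, 20 repetitions each; only stimulus 2 evokes a peak near 300 ms.
rng = np.random.default_rng(0)
t_epoch = np.arange(200) / fs  # 0 to 0.8 s after stimulus onset
bump = 5.0 * np.exp(-((t_epoch - 0.3) / 0.05) ** 2)
epochs = [rng.normal(0.0, 1.0, (20, 200)) + (bump if k == 2 else 0.0) for k in range(4)]
print(select_p300_target(epochs, fs))  # 2
```

Averaging over repetitions is what makes the P300 selection work: the background EEG is not time-locked to the stimulus and shrinks with averaging, while the evoked peak does not.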

As described above, each BCI type has its own pros and cons [14,15]. However, all these methods offer only a limited set of choices: users can perform only predetermined tasks, such as selecting a wheelchair direction or a character, and cannot perform new tasks such as drinking water or brushing teeth. This paper introduces our studies that aim to overcome these limitations of previous BCI methods for practical use.

2. Studies to Overcome BCI Limitations

2.1. Arm Movement Prediction

If BCI users could control a robot arm like their own arm, they could perform a wide variety of daily tasks. Therefore, many research groups have attempted to predict arm movement and control a robot arm. To predict arm movement, it is first necessary to identify how brain activity changes with it. In 1982, Georgopoulos et al. discovered that the firing rate of neurons in the primary motor cortex varied with the direction of arm movement [16], indicating that each neuron has a preferred movement direction. Georgopoulos et al. also found that arm movements could be predicted from the firing patterns of a neuronal population [17]. These findings became the basis for research on controlling a robotic arm by predicting arm movements from brain signals. In 2008, a research team at the University of Pittsburgh showed that monkeys could feed themselves marshmallows by controlling a robotic arm in real time [18]. Research teams from Brown University and the University of Pittsburgh enabled quadriplegic patients to drink beverages by controlling a robotic arm in real time, in 2012 and 2013, respectively [19,20]. However, existing BCI methods for predicting arm movement record brain signals by inserting needle-shaped electrodes into the brain. This invasive approach requires surgery and damages brain cells. In addition, it makes stable recording over long periods difficult [21].
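Directional tuning and population decoding can be sketched with the classical population-vector idea associated with Georgopoulos’ work: each simulated neuron fires at a baseline rate plus a term proportional to the cosine of the angle between the movement and its preferred direction, and the movement direction is read out as the rate-weighted sum of preferred directions. The baseline, modulation depth, neuron count, and noise level below are hypothetical, and this is not the decoder used in the robotic-arm studies cited above.

```python
import numpy as np

BASELINE = 10.0  # hypothetical baseline firing rate (spikes/s)
DEPTH = 8.0      # hypothetical modulation depth (spikes/s)


def cosine_tuning(direction, preferred):
    """Rates of cosine-tuned neurons: baseline + depth * cos(angle to preferred direction).

    direction: (3,) unit vector; preferred: (n_neurons, 3) unit vectors.
    For unit vectors, the cosine of the angle equals the dot product.
    """
    return BASELINE + DEPTH * (preferred @ direction)


def population_vector(rates, preferred):
    """Sum of preferred directions weighted by each neuron's normalized rate change."""
    weights = (rates - BASELINE) / DEPTH
    vector = weights @ preferred
    return vector / np.linalg.norm(vector)


rng = np.random.default_rng(1)
preferred = rng.normal(size=(200, 3))
preferred /= np.linalg.norm(preferred, axis=1, keepdims=True)  # roughly uniform on the sphere
true_dir = np.array([1.0, 1.0, 0.0]) / np.sqrt(2.0)
rates = cosine_tuning(true_dir, preferred) + rng.normal(0.0, 1.0, size=200)  # noisy firing
decoded = population_vector(rates, preferred)
error_deg = np.degrees(np.arccos(np.clip(decoded @ true_dir, -1.0, 1.0)))
print(f"angular error: {error_deg:.1f} degrees")
```

With roughly uniform preferred directions the weighted sum points close to the true direction; when preferred directions are not uniformly distributed, the plain population vector becomes biased.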

To solve these problems, in 2013 we developed a technique to predict three-dimensional arm movement from magnetoencephalography (MEG) signals without surgery [4]. Movements were estimated with statistically significant and considerably high accuracy (p < 0.001, mean r > 0.7) in all nine subjects. We analyzed the MEG signals with time-frequency analysis and extracted features for movement prediction using channel selection, band-pass filtering (0.5–8 Hz), and downsampling to 50 Hz. The current movement was predicted from a 200 ms interval (11 time points) of the downsampled MEG signals, and the x, y, and z velocities of the movement were estimated using multiple linear regression. MEG signals from −140 to −60 ms relative to the current movement were critical, and intervals of 200–300 ms were sufficient to predict the current movement. To our knowledge, only one paper had predicted three-dimensional arm movements from non-invasive neural signals before our study. However, its accuracy was quite low (mean r = 0.19–0.38), and its prediction results were unreliable because of its experimental paradigm [4,22]. In a more recent study, we substantially improved the prediction accuracy by using a long short-term memory (LSTM) network instead of multiple linear regression [23].
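The regression step can be sketched as ordinary least squares on lagged samples. This sketch assumes the signals have already been channel-selected, band-pass filtered (0.5–8 Hz), and downsampled to 50 Hz, so that 11 consecutive samples span 200 ms; it adds a bias term and fits the x, y, and z velocities jointly. The synthetic data, in which the sensors reflect the velocity 60 ms ahead, are a hypothetical stand-in for MEG recordings, and the function names are illustrative rather than taken from [4].

```python
import numpy as np


def lagged_design(signals, n_lags=11):
    """Rows of [1, s(t-n_lags+1), ..., s(t)] for every t with a full history.

    signals: (n_samples, n_channels), already channel-selected, band-passed and downsampled.
    """
    n_samples = signals.shape[0]
    rows = [signals[t - n_lags + 1 : t + 1].ravel() for t in range(n_lags - 1, n_samples)]
    features = np.asarray(rows)
    return np.hstack([np.ones((features.shape[0], 1)), features])


def fit_velocity_decoder(signals, velocity, n_lags=11):
    """Ordinary least-squares weights mapping lagged signals to (vx, vy, vz)."""
    X = lagged_design(signals, n_lags)
    weights, *_ = np.linalg.lstsq(X, velocity[n_lags - 1 :], rcond=None)
    return weights


def predict_velocity(signals, weights, n_lags=11):
    """Apply the fitted weights; the first n_lags - 1 samples have no full history and are skipped."""
    return lagged_design(signals, n_lags) @ weights


# Synthetic data at 50 Hz: 10 "sensors" mix the velocity 3 samples (60 ms) ahead, plus noise.
rng = np.random.default_rng(0)
fs, n, lead = 50, 3000, 3
t = np.arange(n + lead) / fs
velocity_full = np.column_stack([np.sin(2 * np.pi * 0.5 * t),
                                 np.cos(2 * np.pi * 0.3 * t),
                                 np.sin(2 * np.pi * 0.7 * t + 1.0)])
mixing = rng.normal(size=(3, 10))
signals = velocity_full[lead:] @ mixing + 0.5 * rng.normal(size=(n, 10))
velocity = velocity_full[:n]  # signals[t] reflects velocity[t + lead]

half = n // 2
w = fit_velocity_decoder(signals[:half], velocity[:half])
pred = predict_velocity(signals[half:], w)
actual = velocity[half:][10:]  # align with the samples that have a full 11-point history
r_values = [float(np.corrcoef(pred[:, k], actual[:, k])[0, 1]) for k in range(3)]
print("r (x, y, z):", np.round(r_values, 3))
```

Because each velocity axis is a separate column of the least-squares target, a single `lstsq` call fits all three regressions at once; accuracy is then reported as the Pearson r between predicted and actual velocities on held-out data.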

2.2. Correction Using Image Processing

The above study showed that movement can be estimated with a non-invasive method. However, the accuracy of controlling a robot arm to grasp a target is generally low. For example, in an invasive BCI study, success rates for reaching and grasping movements were only 20.8–62.2%, even though the task was simple [19]: a single soft ball on a flexible stick was presented as the target. Although the robotic arm reached the vicinity of the target, grasping often failed because the arm did not reach the object precisely. In other words, a small inaccuracy in the movement prediction caused the task to fail.

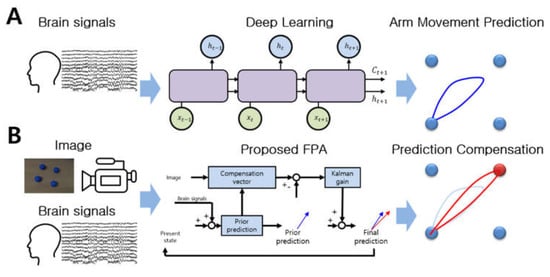

We proposed a novel prediction method, the feedback-prediction algorithm (FPA), which increases accuracy by using image information, as shown in Figure 1 [24]. The positions of objects can easily be calculated with image processing, and the FPA compensates the prediction based on these positions. The method first predicts the target among several objects from the predicted movement direction and then corrects the prediction based on the target position. A Kalman filter was applied to compensate the prediction, and the compensated vector was multiplied by an automatically calculated weight so that the arm could reach the target easily. Because the FPA predicts and corrects at every step, the predicted trajectory follows the new direction even if the user changes the movement direction. The FPA significantly improved the accuracy of movement prediction for all nine subjects, reducing the error by 32.1%. Although some previous studies also modified the prediction using the target position, they predicted and compensated movements on a 2D screen [25,26]. Therefore, those methods are unsuitable for controlling a neural prosthesis in real life.

Figure 1.

Arm movement prediction from brain signals and compensation based on image processing. (A) We developed a technique to predict three-dimensional arm movement using MEG signals without surgery. (B) We proposed a novel prediction method, FPA, that increases accuracy using image information.
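The description of the FPA above does not give its equations, so the following is only a hypothetical simplification of the two steps it names: choosing the target whose direction best matches the predicted velocity, and pulling the prediction toward that target with a Kalman-style gain. Here the decoded velocity is treated as the prior estimate and a velocity of the same speed aimed at the selected target as the measurement. The noise variances, the shared scalar variance, and the class and function names are assumptions; in practice the object positions would come from image processing.

```python
import numpy as np


def select_target(position, predicted_velocity, object_positions):
    """Index of the object whose direction from `position` is best aligned (cosine) with the prediction."""
    directions = np.asarray(object_positions, dtype=float) - np.asarray(position, dtype=float)
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    v = np.asarray(predicted_velocity, dtype=float)
    return int(np.argmax(directions @ (v / np.linalg.norm(v))))


class TargetCorrector:
    """Hypothetical Kalman-style fusion of a decoded velocity with a target-directed velocity.

    The decoded velocity acts as the prior estimate and a velocity aimed at the selected target
    acts as the measurement. A scalar error variance is shared by all three axes, and the gain k
    (an automatically computed weight) is recomputed at every step.
    """

    def __init__(self, process_var=0.5, measurement_var=0.5, initial_var=1.0):
        self.q = process_var
        self.r = measurement_var
        self.p = initial_var

    def step(self, position, decoded_velocity, object_positions):
        position = np.asarray(position, dtype=float)
        decoded = np.asarray(decoded_velocity, dtype=float)
        target = select_target(position, decoded, object_positions)
        to_target = np.asarray(object_positions[target], dtype=float) - position
        # Measurement: keep the decoded speed, but aim exactly at the selected target.
        measured = np.linalg.norm(decoded) * to_target / np.linalg.norm(to_target)
        p_pred = self.p + self.q               # predict: uncertainty grows
        k = p_pred / (p_pred + self.r)         # gain in [0, 1)
        corrected = decoded + k * (measured - decoded)
        self.p = (1.0 - k) * p_pred            # update: uncertainty shrinks
        return target, corrected, k


objects = np.array([[10.0, 0.0, 0.0], [0.0, 10.0, 0.0], [0.0, 0.0, 10.0]])  # e.g., from a camera
corrector = TargetCorrector()
target, corrected, k = corrector.step(np.zeros(3), [1.0, 0.3, 0.0], objects)
print(target, np.round(corrected, 3), k)  # selects object 0 with gain k = 0.75
```

Because the target is re-selected at every call, a change in the decoded direction can switch the target mid-movement, in the spirit of the step-by-step prediction and correction described above.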

2.3. Prediction of User State

Although our method can predict arm movement with high accuracy, problems remain. A research team at the University of Pittsburgh revealed that severe movement prediction errors can occur during the resting state [27]. This implies that a brain-controlled robot could behave dangerously while the BCI user is doing nothing or sleeping. Therefore, the user should be able to turn the BCI system on or off as needed. Moreover, different kinds of BCI have different pros and cons; for example, typing characters or steering a wheelchair through robot-arm control is challenging. Thus, a BCI should be able to predict the user state and apply a suitable BCI mode to the system.

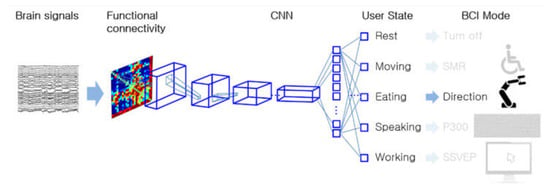

We developed a technique that predicts the user state in order to change the BCI type, as shown in Figure 2 [28]. The user state is estimated from the brain’s functional connectivity with a convolutional neural network (CNN). Common average reference (CAR) and band-pass filtering are first applied to the EEG signals. After filtering, the system calculates the mutual information (MI) between pairs of EEG channels as a measure of functional connectivity, and the MI is used as the CNN input. The CNN classifies the user state into one of four states: resting, speech imagery, leg-motor imagery, and hand-motor imagery. Feasibility was evaluated with five-fold cross-validation, and the mean state-prediction accuracy across 10 subjects was 88.25 ± 2.34%. This result suggests that the user state can be predicted, and the BCI mode changed accordingly, using functional connectivity and a CNN.

Figure 2.

Mode-changeable BCI based on user state. We proposed a mode-changeable BCI using functional connectivity and a CNN.
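The preprocessing and connectivity steps of the user-state method can be sketched as follows. The source does not state the filter design, the frequency band, or the MI estimator, so this sketch assumes an ideal FFT-masking band-pass (the 8–30 Hz band is an arbitrary choice) and a plug-in histogram estimate of MI with 16 bins. The resulting symmetric channel-by-channel matrix is what would be fed to a CNN with four outputs (resting, speech imagery, leg-motor imagery, hand-motor imagery), which is not shown; all function names are illustrative.

```python
import numpy as np


def common_average_reference(eeg):
    """Subtract the instantaneous mean over channels. eeg: (n_channels, n_samples)."""
    return eeg - eeg.mean(axis=0, keepdims=True)


def fft_bandpass(eeg, fs, low, high):
    """Crude zero-phase band-pass: zero every FFT bin outside [low, high] Hz."""
    spectrum = np.fft.rfft(eeg, axis=-1)
    freqs = np.fft.rfftfreq(eeg.shape[-1], d=1.0 / fs)
    spectrum[..., (freqs < low) | (freqs > high)] = 0.0
    return np.fft.irfft(spectrum, n=eeg.shape[-1], axis=-1)


def mutual_information(x, y, bins=16):
    """Plug-in MI estimate (nats) from a 2-D histogram of two signals."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)
    py = pxy.sum(axis=0, keepdims=True)
    nz = pxy > 0  # empty cells contribute nothing and would give log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))


def connectivity_matrix(eeg, bins=16):
    """Symmetric (n_channels, n_channels) MI matrix, usable as a one-channel CNN input image."""
    n = eeg.shape[0]
    mi = np.zeros((n, n))
    for i in range(n):
        for j in range(i, n):
            mi[i, j] = mi[j, i] = mutual_information(eeg[i], eeg[j], bins)
    return mi


rng = np.random.default_rng(0)
fs, n = 250, 2000
source = rng.normal(size=n)
eeg = np.vstack([source,
                 source + 0.3 * rng.normal(size=n),  # channel 1 shares a source with channel 0
                 rng.normal(size=n),
                 rng.normal(size=n)])
features = connectivity_matrix(fft_bandpass(common_average_reference(eeg), fs, 8.0, 30.0))
print(features.shape)  # (4, 4)
```

Histogram-based MI is biased upward for small samples and many bins, so the bin count and window length would need to be chosen consistently across trials before such matrices are compared by a classifier.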

2.4. Multi-Functional BCI

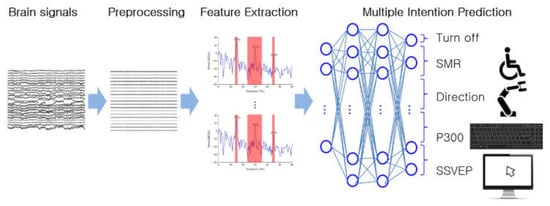

By changing the BCI type according to the user state, the BCI user can now control various electronic devices, such as a computer, a wheelchair, or a robot. However, one problem remains: the user can control only one device at a time, whereas in real life people often perform several tasks simultaneously, such as carrying an object, walking, and talking. Therefore, for convenience, the BCI system should be able to predict multiple intentions simultaneously. For this purpose, we developed a multi-functional BCI system that simultaneously predicts multiple intentions with a single prediction model, as shown in Figure 3 [29]. The system applies CAR and band-pass filtering, and then computes and normalizes the power spectrum. Finally, an artificial neural network predicts multiple intentions from the normalized power spectrum. The prediction accuracy of the proposed BCI was 32.96%. Although this accuracy is not very high, it was significantly higher than the chance level (1.56%). In ongoing work, we aim to increase the prediction accuracy for multiple intentions using a deep learning algorithm and to develop a multi-functional BCI that works in real time.

Figure 3.

Multi-functional BCI. We developed a multi-functional BCI system that can simultaneously predict multiple intentions using a single prediction model.
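One way to let a single model predict several intentions at once is to treat every combination of intentions as one class and to decode the predicted class back into its parts. The sketch below assumes three hypothetical intention groups with four options each; the resulting 4 × 4 × 4 = 64 classes give a chance level of 1/64 ≈ 1.56%, which matches the reported value, but the source does not state how its classes were defined. The feature function approximates the described pipeline (CAR, power spectrum, normalization) with a periodogram, log power, and z-scoring, all of which are assumptions; an artificial neural network with one output per class would then be trained on these features.

```python
import itertools

import numpy as np

# Hypothetical intention groups and options (NOT taken from the study).
INTENTION_GROUPS = {
    "arm": ["rest", "reach", "grasp", "carry"],
    "legs": ["rest", "walk", "stop", "turn"],
    "speech": ["silent", "yes", "no", "help"],
}
COMBINATIONS = list(itertools.product(*INTENTION_GROUPS.values()))  # 4 * 4 * 4 = 64 classes


def encode(intentions):
    """Map one option per group, e.g. ("carry", "walk", "yes"), to a single class index."""
    return COMBINATIONS.index(tuple(intentions))


def decode(class_index):
    """Recover the per-group intentions from a class index predicted by a single classifier."""
    return dict(zip(INTENTION_GROUPS, COMBINATIONS[class_index]))


def normalized_power_features(eeg, fs, fmax=40.0):
    """CAR, periodogram per channel up to fmax Hz, log power, then z-scoring of the feature vector.

    eeg: (n_channels, n_samples). Keeping only bins up to fmax stands in for the band-pass filter.
    """
    eeg = eeg - eeg.mean(axis=0, keepdims=True)
    power = np.abs(np.fft.rfft(eeg, axis=-1)) ** 2
    freqs = np.fft.rfftfreq(eeg.shape[-1], d=1.0 / fs)
    log_power = np.log(power[:, freqs <= fmax] + 1e-12).ravel()
    return (log_power - log_power.mean()) / log_power.std()


print(f"chance level: {100 / len(COMBINATIONS):.2f}%")  # chance level: 1.56%
features = normalized_power_features(np.random.default_rng(0).normal(size=(8, 500)), fs=250)
print(features.shape)  # (648,)
```

The combined-class encoding keeps a single softmax model but grows multiplicatively with the number of groups; an alternative design is one output head per intention group.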

3. Discussion

Over the past decades, many BCI studies have been conducted. They have usually focused on improving prediction accuracy [5,8,23,30,31,32], increasing the number of commands [12,33], raising the information transfer rate (ITR) [34,35,36,37,38], or reducing the training effort [7,30,34,39]. To enhance prediction accuracy, new classification algorithms [30,40,41] and feature extraction methods [31,32,42] have been proposed. Recent BCI studies frequently apply deep learning algorithms to achieve high accuracy [5,23]; for example, one deep learning study reported 99.38% prediction accuracy for motor imagery tasks [30]. Furthermore, various stimulus-presentation methods have been suggested to increase the number of commands [12,15,43], and a recent study implemented a speller with 160 characters by combining signals of different frequencies [12]. Another critical issue in the BCI field is improving typing speed while maintaining high accuracy. Canonical correlation analysis (CCA) is often used in SSVEP spellers and achieves good ITR performance [35,36,44]. Hybrid BCI studies combining an eye tracker and SSVEP have achieved considerably high ITRs [37,38]; for example, an accuracy of 95.2% and an ITR of 360.7 bits/min were reported in [38]. To reduce the time needed to record training data, data augmentation or transfer learning approaches are often used [39]. With data augmentation, a prediction model can be trained on fewer recorded data. Augmentation can be achieved by cropping the signals with a sliding window [45], adding noise [46], or segmenting and recombining the signals [47]. Recent studies have also used generative deep learning algorithms to create artificial neural signals as training data [48,49]. Transfer learning is an alternative approach to reducing acquisition time: it reuses a pre-trained model for new subjects [50,51] and therefore requires less training data.
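CCA-based SSVEP detection and the ITR figures mentioned above can be illustrated with a short NumPy sketch. For each candidate frequency, the standard approach builds sine and cosine reference signals at that frequency and its harmonics and computes the largest canonical correlation with the multichannel EEG; the frequency with the highest correlation is selected. The ITR function uses the widely used Wolpaw formula, B = log2 N + P log2 P + (1 − P) log2((1 − P)/(N − 1)) bits per selection, scaled to bits/min; the cited studies may define the selection time differently (for example, by including gaze-shifting time). The number of harmonics, the synthetic data, and the function names are assumptions.

```python
import numpy as np


def reference_signals(freq, fs, n_samples, n_harmonics=2):
    """Sine/cosine references at freq and its harmonics: (n_samples, 2 * n_harmonics)."""
    t = np.arange(n_samples) / fs
    refs = []
    for h in range(1, n_harmonics + 1):
        refs.append(np.sin(2 * np.pi * h * freq * t))
        refs.append(np.cos(2 * np.pi * h * freq * t))
    return np.stack(refs, axis=1)


def max_canonical_correlation(x, y):
    """Largest canonical correlation between the column spaces of x and y (QR + SVD)."""
    qx, _ = np.linalg.qr(x - x.mean(axis=0))
    qy, _ = np.linalg.qr(y - y.mean(axis=0))
    return float(np.linalg.svd(qx.T @ qy, compute_uv=False)[0])


def detect_ssvep(eeg, fs, candidate_freqs, n_harmonics=2):
    """eeg: (n_samples, n_channels). Return the candidate frequency with the highest CCA score."""
    scores = [max_canonical_correlation(eeg, reference_signals(f, fs, eeg.shape[0], n_harmonics))
              for f in candidate_freqs]
    return candidate_freqs[int(np.argmax(scores))], scores


def itr_bits_per_min(n_targets, accuracy, seconds_per_selection):
    """Wolpaw ITR in bits/min; accuracies at or below chance are reported as 0."""
    n, p = n_targets, accuracy
    if p <= 1.0 / n:
        return 0.0
    bits = np.log2(n) + p * np.log2(p)
    if p < 1.0:  # the (1 - p) term vanishes at perfect accuracy
        bits += (1 - p) * np.log2((1 - p) / (n - 1))
    return float(bits * 60.0 / seconds_per_selection)


# Synthetic 1 s, 8-channel recording at 250 Hz with a 10 Hz response at random phases.
rng = np.random.default_rng(0)
fs = 250
t = np.arange(fs) / fs
eeg = np.sin(2 * np.pi * 10 * t[:, None] + rng.uniform(0, 2 * np.pi, 8)) + 0.5 * rng.normal(size=(fs, 8))
freq, scores = detect_ssvep(eeg, fs, [8.0, 10.0, 12.0, 15.0])
print(freq)  # 10.0
print(itr_bits_per_min(2, 1.0, 1.0))  # 60.0
```

Filter-bank CCA [36] extends this idea by computing such correlations in several sub-bands and combining them.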

Despite these studies, critical limitations still prevent people with disabilities from using BCI systems in real life. Here, we introduced our BCI studies aimed at overcoming these barriers to practical use. We described methods for estimating arm movement with high accuracy using a non-invasive method and for compensating the prediction. We also described a study that predicts the user state and changes the system mode, as well as a study predicting multiple intentions. In future work, we will develop a real-time multi-functional BCI system that combines automatic prediction correction, mode change, and multi-intention prediction. In our opinion, automatic control and suggestion systems will be crucial for the safe and efficient use of BCI systems in daily life. For instance, an electric wheelchair that drives autonomously once the user commands a destination would be more convenient and safer than one that must be controlled continuously. Moreover, it would be convenient if the BCI system could suggest appropriate behavior based on circumstances, schedule, and time, like an artificial intelligence secretary. Such a smart BCI might ask whether the user is hungry based on the user’s routine and the time, suggest and order food according to the user’s preferences, and feed the user automatically. Developing smart BCIs will require combining several state-of-the-art technologies, such as autonomous driving, context recognition, robotics, and artificial intelligence.

Although we proposed several approaches to overcome the challenges of practical use, many limitations remain for using BCIs in daily life, such as the inconvenience of attaching electrodes, recharging the system, and detaching the system. Nevertheless, we hope that our studies will serve as a foundation for the development of a practical BCI system.

Author Contributions

Writing—original draft preparation, W.-S.C.; writing—review and editing, H.-G.Y.; visualization, H.-G.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by a research fund from Chosun University (2020).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Salahuddin, U.; Gao, P.X. Signal Generation, Acquisition, and Processing in Brain Machine Interfaces: A Unified Review. Front. Neurosci. 2021, 15, 1174.

- Dornhege, G. Toward Brain-Computer Interfacing; MIT Press: Cambridge, MA, USA, 2007; pp. 1–25.

- Kandel, E.R.; Koester, J.; Mack, S.; Siegelbaum, S. Principles of Neural Science, 6th ed.; McGraw Hill: New York, NY, USA, 2021; pp. 337–355.

- Yeom, H.G.; Kim, J.S.; Chung, C.K. Estimation of the velocity and trajectory of three-dimensional reaching movements from non-invasive magnetoencephalography signals. J. Neural Eng. 2013, 10, 26006.

- Stieger, J.R.; Engel, S.A.; Suma, D.; He, B. Benefits of deep learning classification of continuous noninvasive brain-computer interface control. J. Neural Eng. 2021, 18, 046082.

- Tidare, J.; Leon, M.; Astrand, E. Time-resolved estimation of strength of motor imagery representation by multivariate EEG decoding. J. Neural Eng. 2021, 18, 016026.

- Jiang, X.Y.; Lopez, E.; Stieger, J.R.; Greco, C.M.; He, B. Effects of Long-Term Meditation Practices on Sensorimotor Rhythm-Based Brain-Computer Interface Learning. Front. Neurosci. 2021, 14, 1443.

- Park, S.; Ha, J.; Kim, D.H.; Kim, L. Improving Motor Imagery-Based Brain-Computer Interface Performance Based on Sensory Stimulation Training: An Approach Focused on Poorly Performing Users. Front. Neurosci. 2021, 15, 1526.

- Gao, P.; Huang, Y.H.; He, F.; Qi, H.Z. Improve P300-speller performance by online tuning stimulus onset asynchrony (SOA). J. Neural Eng. 2021, 18, 056067.

- Xiao, X.L.; Xu, M.P.; Han, J.; Yin, E.W.; Liu, S.; Zhang, X.; Jung, T.P.; Ming, D. Enhancement for P300-speller classification using multi-window discriminative canonical pattern matching. J. Neural Eng. 2021, 18, 046079.

- Kirasirova, L.; Bulanov, V.; Ossadtchi, A.; Kolsanov, A.; Pyatin, V.; Lebedev, M. A P300 Brain-Computer Interface With a Reduced Visual Field. Front. Neurosci. 2020, 14, 1246.

- Chen, Y.H.; Yang, C.; Ye, X.C.; Chen, X.G.; Wang, Y.J.; Gao, X.R. Implementing a calibration-free SSVEP-based BCI system with 160 targets. J. Neural Eng. 2021, 18, 046094.

- Ming, G.G.; Pei, W.H.; Chen, H.D.; Gao, X.R.; Wang, Y.J. Optimizing spatial properties of a new checkerboard-like visual stimulus for user-friendly SSVEP-based BCIs. J. Neural Eng. 2021, 18, 056046.

- Rashid, M.; Sulaiman, N.; Majeed, A.P.P.A.; Musa, R.M.; Ab Nasir, A.F.; Bari, B.S.; Khatun, S. Current Status, Challenges, and Possible Solutions of EEG-Based Brain-Computer Interface: A Comprehensive Review. Front. Neurorobotics 2020, 14, 25.

- Rezeika, A.; Benda, M.; Stawicki, P.; Gembler, F.; Saboor, A.; Volosyak, I. Brain-Computer Interface Spellers: A Review. Brain Sci. 2018, 8, 57.

- Georgopoulos, A.P.; Kalaska, J.F.; Caminiti, R.; Massey, J.T. On the relations between the direction of two-dimensional arm movements and cell discharge in primate motor cortex. J. Neurosci. 1982, 2, 1527–1537.

- Georgopoulos, A.P.; Kettner, R.E.; Schwartz, A.B. Primate motor cortex and free arm movements to visual targets in three-dimensional space. II. Coding of the direction of movement by a neuronal population. J. Neurosci. 1988, 8, 2928–2937.

- Velliste, M.; Perel, S.; Spalding, M.C.; Whitford, A.S.; Schwartz, A.B. Cortical control of a prosthetic arm for self-feeding. Nature 2008, 453, 1098–1101.

- Hochberg, L.R.; Bacher, D.; Jarosiewicz, B.; Masse, N.Y.; Simeral, J.D.; Vogel, J.; Haddadin, S.; Liu, J.; Cash, S.S.; van der Smagt, P.; et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature 2012, 485, 372–375.

- Collinger, J.L.; Wodlinger, B.; Downey, J.E.; Wang, W.; Tyler-Kabara, E.C.; Weber, D.J.; McMorland, A.J.; Velliste, M.; Boninger, M.L.; Schwartz, A.B. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet 2013, 381, 557–564.

- Polikov, V.S.; Tresco, P.A.; Reichert, W.M. Response of brain tissue to chronically implanted neural electrodes. J. Neurosci. Methods 2005, 148, 1–18.

- Bradberry, T.J.; Gentili, R.J.; Contreras-Vidal, J.L. Reconstructing Three-Dimensional Hand Movements from Noninvasive Electroencephalographic Signals. J. Neurosci. 2010, 30, 3432–3437.

- Yeom, H.G.; Kim, J.S.; Chung, C.K. LSTM Improves Accuracy of Reaching Trajectory Prediction From Magnetoencephalography Signals. IEEE Access 2020, 8, 20146–20150.

- Yeom, H.G.; Kim, J.S.; Chung, C.K. High-Accuracy Brain-Machine Interfaces Using Feedback Information. PLoS ONE 2014, 9, e103539.

- Shanechi, M.M.; Williams, Z.M.; Wornell, G.W.; Hu, R.C.; Powers, M.; Brown, E.N. A Real-Time Brain-Machine Interface Combining Motor Target and Trajectory Intent Using an Optimal Feedback Control Design. PLoS ONE 2013, 8, e59049.

- Gilja, V.; Nuyujukian, P.; Chestek, C.A.; Cunningham, J.P.; Yu, B.M.; Fan, J.M.; Churchland, M.M.; Kaufman, M.T.; Kao, J.C.; Ryu, S.I.; et al. A high-performance neural prosthesis enabled by control algorithm design. Nat. Neurosci. 2012, 15, 1752–1757.

- Velliste, M.; Kennedy, S.D.; Schwartz, A.B.; Whitford, A.S.; Sohn, J.W.; McMorland, A.J.C. Motor Cortical Correlates of Arm Resting in the Context of a Reaching Task and Implications for Prosthetic Control. J. Neurosci. 2014, 34, 6011–6022.

- Park, S.M.; Yeom, H.G.; Sim, K.B. User State Classification Based on Functional Brain Connectivity Using a Convolutional Neural Network. Electronics 2021, 10, 1158.

- Choi, W.-S.; Yeom, H.G. A Brain-Computer Interface Predicting Multi-intention Using An Artificial Neural Network. J. Korean Inst. Intell. Syst. 2021, 31, 206–212.

- Mattioli, F.; Porcaro, C.; Baldassarre, G. A 1D CNN for high accuracy classification and transfer learning in motor imagery EEG-based brain-computer interface. J. Neural Eng. 2021, 18, 066053.

- Yang, L.; Song, Y.H.; Ma, K.; Su, E.Z.; Xie, L.H. A novel motor imagery EEG decoding method based on feature separation. J. Neural Eng. 2021, 18, 036022.

- Fumanal-Idocin, J.; Wang, Y.K.; Lin, C.T.; Fernandez, J.; Sanz, J.A.; Bustince, H. Motor-Imagery-Based Brain-Computer Interface Using Signal Derivation and Aggregation Functions. IEEE Trans. Cybern. 2021, 1, 1–12.

- Ko, L.W.; Sankar, D.S.V.; Huang, Y.F.; Lu, Y.C.; Shaw, S.; Jung, T.P. SSVEP-assisted RSVP brain-computer interface paradigm for multi-target classification. J. Neural Eng. 2021, 18, 016021.

- Chen, Y.H.; Yang, C.; Chen, X.G.; Wang, Y.J.; Gao, X.R. A novel training-free recognition method for SSVEP-based BCIs using dynamic window strategy. J. Neural Eng. 2021, 18, 036007.

- Chen, X.G.; Wang, Y.J.; Nakanishi, M.; Gao, X.R.; Jung, T.P.; Gao, S.K. High-speed spelling with a noninvasive brain-computer interface. Proc. Natl. Acad. Sci. USA 2015, 112, E6058–E6067.

- Chen, X.G.; Wang, Y.J.; Gao, S.K.; Jung, T.P.; Gao, X.R. Filter bank canonical correlation analysis for implementing a high-speed SSVEP-based brain-computer interface. J. Neural Eng. 2015, 12, 046008.

- Mannan, M.M.N.; Kamran, M.A.; Kang, S.; Choi, H.S.; Jeong, M.Y. A Hybrid Speller Design Using Eye Tracking and SSVEP Brain-Computer Interface. Sensors 2020, 20, 891.

- Yao, Z.L.; Ma, X.Y.; Wang, Y.J.; Zhang, X.; Liu, M.; Pei, W.H.; Chen, H.D. High-Speed Spelling in Virtual Reality with Sequential Hybrid BCIs. IEICE Trans. Inf. Syst. 2018, E101d, 2859–2862.

- Ko, W.; Jeon, E.; Jeong, S.; Phyo, J.; Suk, H.I. A Survey on Deep Learning-Based Short/Zero-Calibration Approaches for EEG-Based Brain-Computer Interfaces. Front. Hum. Neurosci. 2021, 15, 258.

- Zhang, Z.J.; Sun, J.S.; Chen, T. A new dynamically convergent differential neural network for brain signal recognition. Biomed. Signal Processing Control 2022, 71, 103130.

- Roy, A.M. An efficient multi-scale CNN model with intrinsic feature integration for motor imagery EEG subject classification in brain-machine interfaces. Biomed. Signal Processing Control 2022, 74, 103496.

- Yeom, H.G.; Jeong, H. F-Value Time-Frequency Analysis: Between-Within Variance Analysis. Front. Neurosci. 2021, 15, 729449.

- Li, M.L.; He, D.N.; Li, C.; Qi, S.L. Brain-Computer Interface Speller Based on Steady-State Visual Evoked Potential: A Review Focusing on the Stimulus Paradigm and Performance. Brain Sci. 2021, 11, 450.

- Nakanishi, M.; Wang, Y.J.; Wang, Y.T.; Mitsukura, Y.; Jung, T.P. A High-Speed Brain Speller Using Steady-State Visual Evoked Potentials. Int. J. Neural Syst. 2014, 24, 1450019.

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep Learning With Convolutional Neural Networks for EEG Decoding and Visualization. Hum. Brain Mapp. 2017, 38, 5391–5420.

- Freer, D.; Yang, G.Z. Data augmentation for self-paced motor imagery classification with C-LSTM. J. Neural Eng. 2020, 17, 016041.

- Dai, G.H.; Zhou, J.; Huang, J.H.; Wang, N. HS-CNN: A CNN with hybrid convolution scale for EEG motor imagery classification. J. Neural Eng. 2020, 17, 016025.

- Fahimi, F.; Dosen, S.; Ang, K.K.; Mrachacz-Kersting, N.; Guan, C.T. Generative Adversarial Networks-Based Data Augmentation for Brain-Computer Interface. IEEE Trans. Neural Networks Learn. Syst. 2021, 32, 4039–4051.

- Zhang, K.; Xu, G.H.; Han, Z.Z.; Ma, K.Q.; Zheng, X.W.; Chen, L.T.; Duan, N.; Zhang, S.C. Data Augmentation for Motor Imagery Signal Classification Based on a Hybrid Neural Network. Sensors 2020, 20, 4485.

- Zhang, R.L.; Zong, Q.; Dou, L.Q.; Zhao, X.Y.; Tang, Y.F.; Li, Z.Y. Hybrid deep neural network using transfer learning for EEG motor imagery decoding. Biomed. Signal Processing Control 2021, 63, 102144.

- Raghu, S.; Sriraam, N.; Temel, Y.; Rao, S.V.; Kubben, P.L. EEG based multi-class seizure type classification using convolutional neural network and transfer learning. Neural Netw. 2020, 124, 202–212.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).