In this section, we describe the procedure of trajectory synthesis with UDPT. We first give an overview of UDPT and then elaborate on each phase.

4.1. Overview

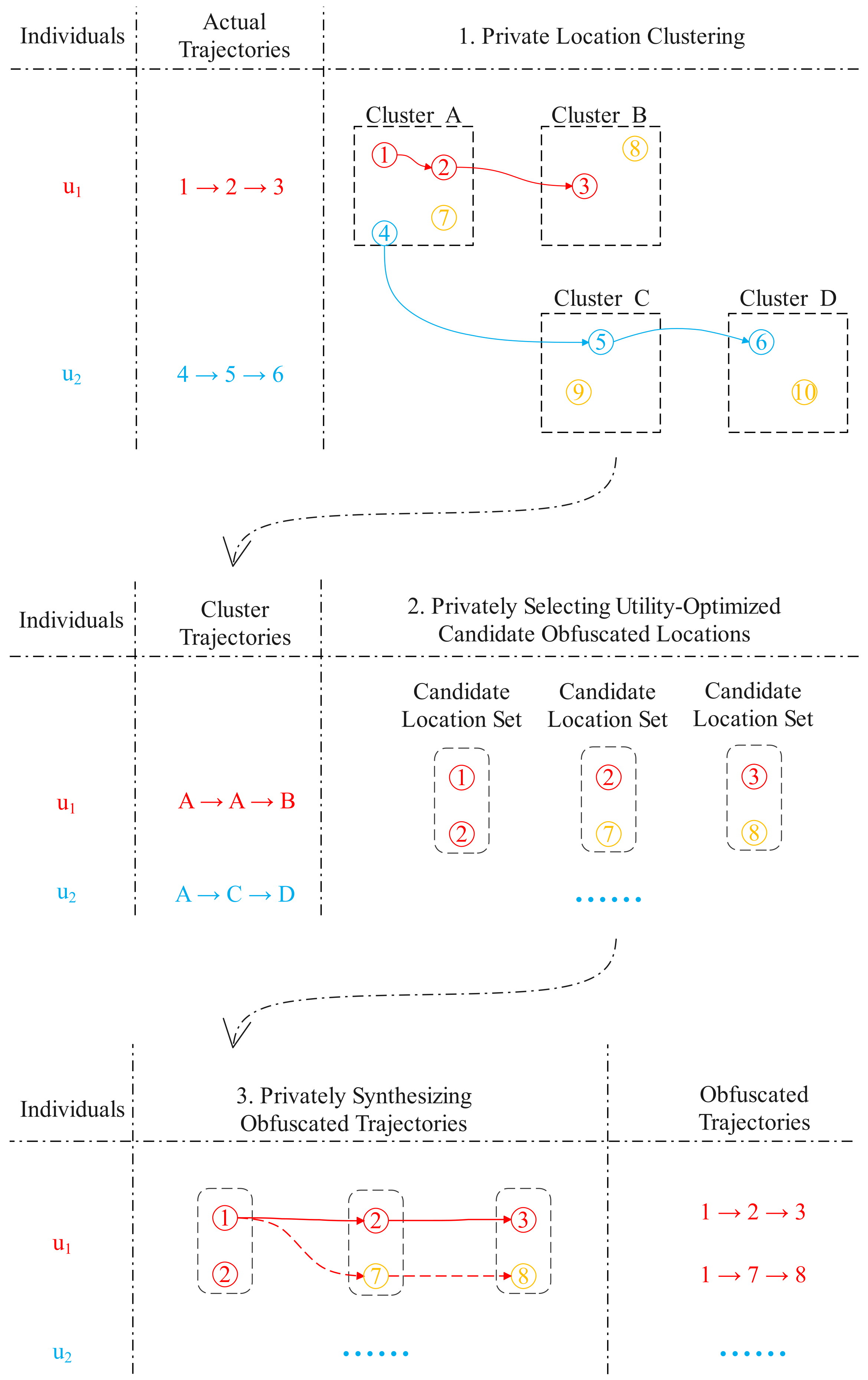

Overall ideas. Recall the design objectives in Section 3.1. (i) The first objective is to restrict, to a magnitude specified by the data curator, the chance that any actual location or individual identity is derived by observing the released dataset. To this end, we adopt differential privacy as the privacy notion of UDPT. An $\epsilon$-differentially private algorithm ensures that its output is insensitive to the change of any single location record in the actual trajectory, so location inference attacks on actual locations can be thwarted. The data curator tunes the strength of this guarantee with the privacy budget $\epsilon$. However, differential privacy alone cannot prevent de-anonymization attacks on individual identities [6]. Our remedy is to synthesize, in a generative manner, a number of indistinguishable obfuscated trajectories for each actual trajectory, so that the attacker cannot rebuild the linkage between individual identities and obfuscated trajectories. (ii) The second objective is to preserve geographical utility and semantic utility simultaneously; that is, the obfuscated locations should be as close as possible to the actual locations and have semantics as similar as possible to them. To this end, we model the preservation of both types of utility as a multi-objective optimization problem.

In general, UDPT is a location privacy protection algorithm that takes as input an actual trajectory dataset $\mathcal{D}$ of individuals, together with a (total) privacy budget $\epsilon$, and produces an obfuscated trajectory dataset $\mathcal{D}'$. It enables the trajectory data curator to release an offline trajectory dataset to the public or to other analyzers for data-analysis purposes in a privacy-protecting and utility-preserving fashion. Although privacy protection obfuscates the actual data and thus degrades data utility, the data curator can control the trade-off between privacy and data utility by tuning the (total) privacy budget $\epsilon$ to meet the requirements of both individuals and data analyzers. The pseudocode in Algorithm 1 presents a skeleton of UDPT. We also provide an illustrative example in Figure 2.

| Algorithm 1 Utility-optimized and differentially private trajectory synthesis (UDPT). |

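Input: actual trajectory dataset $\mathcal{D}$, (total) privacy budget $\epsilon$. Output: obfuscated trajectory dataset $\mathcal{D}'$.

1: Divide the total privacy budget $\epsilon$ into 3 pieces, namely, $\epsilon_1$, $\epsilon_2$, and $\epsilon_3$.

2: Let clusters $\mathcal{C}$ ⟵ Private location clustering with Algorithm 2, $\mathcal{D}$, and $\epsilon_1$.

3: Let $\mathcal{D}'$ ⟵ ∅.

4: for each $T$ in $\mathcal{D}$ do

5: for each $l \in T$ do

6: Let $S_l$ ⟵ Privately selecting utility-optimized candidate obfuscated locations for $l$ with Algorithm 3, clusters $\mathcal{C}$, and $\epsilon_2$.

7: end for

8: Let $\mathcal{S}_T$ ⟵ $(S_{l_1}, S_{l_2}, \dots, S_{l_n})$.

9: Let $\mathcal{O}_T$ ⟵ Privately select obfuscated trajectories with Algorithm 4, $\mathcal{S}_T$, and $\epsilon_3$.

10: Let $\mathcal{D}'$ ⟵ $\mathcal{D}' \cup \mathcal{O}_T$.

11: end for

12: return $\mathcal{D}'$.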

|

UDPT is composed of three sequential phases, each of which is a differentially private sub-algorithm. In line 1, we divide the (total) privacy budget $\epsilon$ into three pieces, $\epsilon_1$, $\epsilon_2$, and $\epsilon_3$, and each phase (or sub-algorithm) consumes one piece.
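The budget bookkeeping in line 1 can be sketched as follows. Because the three phases run one after another on the same data, sequential composition makes their costs add up to $\epsilon$. The helper `split_budget` and the example weights are hypothetical; the text does not state how the budget is split among the phases.

```python
def split_budget(total_epsilon, weights=(1, 1, 1)):
    """Split a total privacy budget into (eps1, eps2, eps3).

    Hypothetical helper: the proportional weights are an assumption, since the
    text only says that the budget is divided into three pieces.
    """
    if total_epsilon <= 0:
        raise ValueError("privacy budget must be positive")
    if len(weights) != 3 or any(w <= 0 for w in weights):
        raise ValueError("expected three positive weights")
    total_weight = sum(weights)
    return tuple(total_epsilon * w / total_weight for w in weights)


# Sequential composition: the phases run on the same data, so their costs add up.
eps1, eps2, eps3 = split_budget(1.0, weights=(2, 1, 1))
print(eps1, eps2, eps3)  # 0.5 0.25 0.25
```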

In the first phase (line 2), to defend against location inference attacks, the locations that occur in the actual trajectory dataset $\mathcal{D}$ are blurred into clusters by the private location clustering sub-algorithm (Algorithm 2). Since this sub-algorithm essentially updates a numeric objective function iteratively, UDPT uses the Laplace mechanism to make it $\epsilon_1$-differentially private. The sub-algorithm finally produces a set of clusters $\mathcal{C}$.

In the second phase (lines 3 to 8), to simultaneously preserve the geographical utility and the semantic utility of each actual trajectory $T \in \mathcal{D}$, UDPT privately selects a set of utility-optimized candidate obfuscated locations $S_l$ from the clusters $\mathcal{C}$ for each actual location $l \in T$. The selection is modeled as a multi-objective optimization problem and solved by a differentially private genetic algorithm. Since this sub-algorithm is essentially a query that takes as input the clusters $\mathcal{C}$ and the actual location $l$ and produces categorical outcomes, i.e., a number of locations, UDPT uses the exponential mechanism to make it $\epsilon_2$-differentially private. As a result, a sequence of utility-optimized candidate obfuscated location sets, $\mathcal{S}_T$, is produced for each actual trajectory $T$, as shown in line 8.

In the third phase (line 9), to defend against de-anonymization attacks, UDPT privately selects obfuscated trajectories with Algorithm 4 and $\epsilon_3$. Because this sub-algorithm is essentially a categorical query that takes as input the actual trajectory $T$ and produces discrete outcomes, UDPT uses the exponential mechanism to make it $\epsilon_3$-differentially private. The sub-algorithm produces a number of indistinguishable obfuscated trajectories $\mathcal{O}_T$ that share the most similar movement patterns with the actual trajectory $T$, so that $T$ can be hidden from de-anonymization attackers. In the end, UDPT collects all the obfuscated trajectories into the dataset $\mathcal{D}'$, as given by line 10.

Finally, Algorithm 1 returns the obfuscated trajectory dataset $\mathcal{D}'$, which the data curator can release to the public or to other data analyzers for data-mining purposes. We elaborate on each phase (or sub-algorithm) as follows.

4.2. Private Location Clustering

Since location inference attacks aim to infer individuals' actual (or precise) locations from obfuscated locations, a widely used remedy is to replace the actual locations with blurred locations such as grids [6] or clusters [22]. Grid-based location privacy protection algorithms split the geographical space where individuals move into uniform rectangular cells, and the locations inside a cell are represented by the cell itself. However, a careless setting of the cell size is likely to split a naturally coherent place into different cells, which could lose the original location semantics. In contrast, recent works have demonstrated that clustering yields a more natural division of geographical space, so neighboring locations that share the same semantics have a greater chance of being gathered together [22]. Therefore, besides defending against location inference attacks, location clustering has the further advantage of better preserving the semantic utility of individual trajectories. We apply K-means as the fundamental clustering algorithm. Because of its computational efficiency and simple parameter configuration, it is easy to extend for privacy protection, especially for differential privacy. However, previous efforts showed that clustering individual data without any privacy protection measures could compromise privacy, e.g., the adversary could find out whether a given location is in a certain cluster [25]. Therefore, we propose a differentially private location clustering algorithm. Besides clustering, a recent study [26] on private classification provides another inspiration, which we leave as future work.

The basic idea of location clustering is to calculate the pairwise distances between (actual) locations and then iteratively gather the locations that are close to each other. To make the clustering differentially private, random noise generated by the Laplace mechanism is injected into the objective function of K-means clustering. Finally, the actual (or original) locations are blurred into clusters, and each actual trajectory is translated into a sequence of clusters, i.e., a cluster trajectory. The pseudocode of private location clustering is presented in Algorithm 2.

| Algorithm 2 Private location clustering. |

Input: geographical space $\mathcal{L}$, number of clusters K, privacy budget $\epsilon_1$, max number of iterations p, sensitivity $\Delta g$. Output: a set of clusters $\mathcal{C} = \{C_1, \dots, C_K\}$.

1: Initialize cluster centroids $\mu_1$, $\mu_2$, ..., $\mu_K$ randomly.

2: for r = 1 to p do

3: Initialize each cluster with ∅, i.e., $C_k$ ⟵ ∅, $k = 1, \dots, K$.

4: // Assign the locations in $\mathcal{L}$ to their closest cluster centroid.

5: for i = 1 to $|\mathcal{L}|$ do

6: $k^*$ ⟵ $\arg\min_{k} d(l_i, \mu_k)$, $l_i \in \mathcal{L}$.

7: Add $l_i$ into the cluster $C_{k^*}$.

8: Let $z_{ik^*}$ ⟵ 1.

9: for $k \neq k^*$ do

10: Let $z_{ik}$ ⟵ 0.

11: end for

12: end for

13: Calculate the objective function g with Equation (4).

14: if the objective function g converges, then

15: Break.

16: end if

17: // Update each cluster centroid with the mean of all locations in that cluster.

18: for k = 1 to K do

19: Let $\mu_k$ ⟵ $\frac{1}{|C_k|}\sum_{l \in C_k} l$.

20: end for

21: end for

22: return $\mathcal{C}$.

|

In line 1, we initialize K cluster centroids with locations chosen uniformly at random from the geographical space $\mathcal{L}$, where $\mathcal{L}$ is the set of locations occurring in the actual trajectory dataset $\mathcal{D}$. After that, we iteratively update the clusters until convergence. The steps in a single iteration are elaborated as follows.

In line 3, we initialize each cluster with an empty set. In lines 5 to 12, we assign each location in the geographical space $\mathcal{L}$ to its closest cluster centroid. Specifically, in line 6, given a location $l_i \in \mathcal{L}$, let $k^*$ denote the index of the cluster centroid closest to $l_i$. If there is more than one closest centroid, we choose one of them uniformly at random. Next, we add the location $l_i$ into the cluster $C_{k^*}$, as shown in line 7. Then, to facilitate the calculation of the objective function, we introduce an indicator of the relation between locations and clusters, which is defined in Equation (3): $z_{ik} = 1$ indicates that the location $l_i$ belongs to the cluster $C_k$, and $z_{ik} = 0$ otherwise. Hence, we have $z_{ik^*} = 1$, as shown in line 8, and $z_{ik} = 0$ for $k \neq k^*$, as shown in line 10.
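The assignment step (lines 5 to 12) and the indicator $z_{ik}$ can be sketched in Python as below. Locations are assumed to be 2-D coordinate tuples and distances Euclidean; the function name `assign_to_clusters` is hypothetical.

```python
import math
import random


def assign_to_clusters(locations, centroids, rng=random):
    """Assignment step of Algorithm 2 (lines 5-12), as a sketch.

    Each location goes to its closest centroid (Euclidean distance); ties are
    broken uniformly at random. Returns the clusters and the indicator matrix
    z, where z[i][k] == 1 iff location i belongs to cluster k.
    """
    K = len(centroids)
    clusters = [[] for _ in range(K)]
    z = [[0] * K for _ in locations]
    for i, loc in enumerate(locations):
        dists = [math.dist(loc, c) for c in centroids]
        best = min(dists)
        closest = [k for k, d in enumerate(dists) if d == best]
        k_star = rng.choice(closest)   # uniform tie-breaking
        clusters[k_star].append(loc)
        z[i][k_star] = 1               # z[i][k] stays 0 for every k != k_star
    return clusters, z


clusters, z = assign_to_clusters([(0, 0), (1, 0), (10, 0)], [(0, 0), (10, 0)])
print(z)  # [[1, 0], [1, 0], [0, 1]]
```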

In line 13, we calculate the objective function of the private location clustering, g, which is defined in Equation (4), where K is the number of clusters, $l_i$ the i-th location in $\mathcal{L}$, $\mu_k$ the k-th cluster centroid, and $\eta$ the random Laplace noise generated by the Laplace mechanism in Theorem 1.

In Equation (4), the maximum number of iterations, p, is a hyperparameter of the algorithm. In theory, a larger p results in better clustering performance. However, since we need to divide the privacy budget $\epsilon_1$ into p pieces, a larger number of iterations injects larger noise into each single iteration. The iteration often terminates early, i.e., the actual number of iterations is likely to be less than p, so the unused privacy budget can be left to the subsequent steps in Section 4.3 and Section 4.4. We discuss this hyperparameter in our experiments.

In addition, in Equation (4), $\epsilon_1$ represents the privacy budget allocated to the private location clustering algorithm. It controls the trade-off between privacy and data utility: a smaller value indicates a stronger privacy guarantee but poorer preservation of data utility. Previous studies suggest an interval of values for the privacy budget that often brings about a reasonable trade-off between privacy and data utility [7].

In Equation (4), the Laplace noise $\eta$ is a random variable following the Laplace distribution, a.k.a. the double exponential distribution. Its probability density function is defined in Equation (5), where x represents a possible noise value, $\Pr(x)$ the probability density at x, and $\Delta g$ the sensitivity of the objective function. According to the Laplace mechanism [7], the sensitivity is the maximum change of g when one location record is removed from or added to the actual trajectory dataset $\mathcal{D}$. Therefore, we define the sensitivity $\Delta g$ as the maximum distance between locations in the geographical space $\mathcal{L}$; that is, $\Delta g = \max_{l, l' \in \mathcal{L}} d(l, l')$. In this case, the output of the private location clustering algorithm is insensitive to the change of a single record in the actual dataset; in other words, our private location clustering algorithm achieves differential privacy.
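The noise in Equation (4) can be sketched as follows, assuming Euclidean distances and an even split of $\epsilon_1$ over the p iterations, so that each iteration uses Laplace noise of scale $p\,\Delta g/\epsilon_1$. This per-iteration scale and the helper names are assumptions, not a transcription of Equation (5). Sampling relies on the standard fact that the difference of two i.i.d. exponential variables with mean b is Laplace-distributed with scale b.

```python
import math
import random


def sensitivity(locations):
    """Delta g: the maximum pairwise Euclidean distance among locations."""
    return max((math.dist(a, b) for a in locations for b in locations), default=0.0)


def laplace_scale(delta_g, eps1, p):
    """Per-iteration scale, assuming eps1 is split evenly over p iterations."""
    return p * delta_g / eps1


def laplace_pdf(x, scale):
    """Density of the zero-mean Laplace distribution with the given scale."""
    return math.exp(-abs(x) / scale) / (2 * scale)


def sample_laplace(scale, rng=random):
    """Zero-mean Laplace sample: difference of two i.i.d. exponentials of mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


locs = [(0, 0), (3, 4), (1, 1)]
b = laplace_scale(sensitivity(locs), eps1=1.0, p=10)
print(b)  # 50.0
```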

In line 14, we check whether the objective function g converges. If the change of the objective function value between consecutive iterations is not greater than some threshold, we say that the objective function converges. The threshold is a hyperparameter that needs to be determined empirically; see Section 5 and Appendix A for a detailed discussion. When g converges, we terminate the iteration and return the clusters $\mathcal{C}$. Otherwise, in line 19, we update each cluster centroid $\mu_k$ with the mean of all locations in that cluster, $\mu_k = \frac{1}{|C_k|}\sum_{l \in C_k} l$.
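Putting the pieces together, Algorithm 2 can be sketched as a short loop. This is an illustration under stated assumptions rather than the authors' implementation: the function name is hypothetical, the objective g is taken as the sum of Euclidean distances to the assigned centroids, $\epsilon_1$ is split evenly over the p iterations, and `tol` is a placeholder for the empirically chosen convergence threshold.

```python
import math
import random


def private_kmeans(locations, K, eps1, p, tol=1e-3, rng=None):
    """Minimal sketch of Algorithm 2 (private location clustering).

    Assumptions not fixed by the text: g is the sum of Euclidean distances
    from each location to its assigned centroid, eps1 is split evenly over
    the p iterations, and g "converges" when |g_r - g_{r-1}| <= tol.
    As described in the text, the Laplace noise enters through g.
    """
    rng = rng or random.Random()
    delta_g = max(math.dist(a, b) for a in locations for b in locations)
    scale = p * delta_g / eps1
    centroids = rng.sample(locations, K)        # line 1: random initialization
    clusters, prev_g = [], None
    for _ in range(p):                          # line 2
        clusters = [[] for _ in range(K)]       # line 3
        g = 0.0
        for loc in locations:                   # lines 5-12: assignment
            dists = [math.dist(loc, c) for c in centroids]
            best = min(dists)
            k_star = rng.choice([k for k, d in enumerate(dists) if d == best])
            clusters[k_star].append(loc)
            g += best
        if scale > 0:                           # line 13: noisy objective
            g += rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
        if prev_g is not None and abs(g - prev_g) <= tol:
            break                               # lines 14-16: convergence
        prev_g = g
        for k, members in enumerate(clusters):  # lines 18-20: centroid update
            if members:
                centroids[k] = tuple(sum(xs) / len(members) for xs in zip(*members))
    return clusters


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
clusters = private_kmeans(points, K=2, eps1=1e9, p=20, rng=random.Random(1))
print(sorted(len(c) for c in clusters))  # [3, 3]
```

With a very large budget the noise is negligible and the sketch reduces to plain K-means; with a small $\epsilon_1$ the noisy g makes the convergence test, and hence the stopping iteration, random.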

4.3. Privately Selecting Utility-Optimized Candidate Obfuscated Locations

After private location clustering, each actual trajectory is translated into a sequence of clusters, i.e., a cluster trajectory. Since most of the data utility of the actual trajectory, e.g., location transition patterns [20], is still maintained, an intuitive way to synthesize the obfuscated trajectory is to sample, from the Cartesian product of the clusters at all time instants of the cluster trajectory, a trajectory that preserves the data utility of the actual trajectory to the greatest extent. However, the number of candidate obfuscated trajectories is so large that we cannot find the desired trajectory at an acceptable time cost. A feasible alternative is to prune the sample space by shrinking each cluster to a small set of candidate obfuscated locations that maintain the data utility of the actual location as much as possible.
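A quick count shows why pruning is needed. The cluster sizes, the trajectory length, and M below are made-up numbers for illustration only.

```python
def search_space_sizes(cluster_sizes, M):
    """Count candidate trajectories before and after pruning.

    cluster_sizes[t] is the number of locations in the cluster at time t;
    after pruning, each cluster is shrunk to at most M candidate locations.
    """
    full, pruned = 1, 1
    for size in cluster_sizes:
        full *= size
        pruned *= min(M, size)
    return full, pruned


# Hypothetical numbers: 24 time instants, 200 locations per cluster, M = 5.
full, pruned = search_space_sizes([200] * 24, M=5)
print(f"{full:.2e} vs {pruned:.2e}")  # 1.68e+55 vs 5.96e+16
```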

In this subsection, we select utility-optimized candidate obfuscated locations for each actual location in the actual trajectory. First, we model the simultaneous preservation of both types of data utility as a multi-objective utility optimization problem. Second, we solve the optimization problem with a genetic algorithm to select utility-optimized candidate obfuscated locations, where the selection is randomized to satisfy differential privacy so that the actual locations cannot be deduced by observing the candidate obfuscated locations.

4.3.1. Modeling Utility Optimization Problem

Given any actual location $l$ in the actual trajectory $T$, there are two objectives for selecting candidate obfuscated locations, namely, (i) preserving the geographical utility of the actual location as much as possible, and (ii) maintaining the semantic utility of the actual location to the greatest extent. On the one hand, to preserve the geographical utility, a solution that existing work [12] has proved feasible is to select locations near the actual location as obfuscated locations; we borrow this solution in this work. On the other hand, to preserve the semantic utility, we prefer to select locations that share the same (or similar) semantics with the actual location as obfuscated locations.

However, the two objectives conflict, since nearby locations do not always have similar semantics [10]. Therefore, an optimal decision needs to be taken in the presence of trade-offs between the two conflicting objectives, which is known as a multi-objective optimization problem. A general approach is to formalize the objectives as functions and then search for the optimal solution over these objective functions with an optimization algorithm such as the genetic algorithm. We formalize the two objectives as follows.

The first objective, i.e., (i) preserving the geographical utility of the actual location as much as possible, is defined as selecting M candidate obfuscated locations, denoted by $S_l = \{\hat{l}_1, \dots, \hat{l}_M\}$, that are closest to the actual location $l$. Equation (6) presents a formal expression, which means minimizing the total Euclidean distance between the M candidate obfuscated locations and the actual location $l$, i.e., $\sum_{j=1}^{M} d(\hat{l}_j, l)$.

Before formalizing the second objective, we need to acquire the location semantics and define a semantic distance measure. Location semantics can be acquired through the application programming interfaces (APIs) of many LBS providers, such as Foursquare and Google Maps. Foursquare also provides a hierarchical tree of location categories [27], which contains ten coarse-grained categories at the first level and hundreds of fine-grained ones at the second level. The path distance between two category nodes in the tree indicates their discrepancy in semantics, which inspires us to propose a graph-theory-based distance metric to measure the discrepancy between the categories of a candidate obfuscated location $\hat{l}$ and the actual location $l$. We denote the semantic distance by $d_{sem}(\hat{l}, l)$ and define it in Equation (7).

where $sp(\cdot, \cdot)$ denotes the length of the shortest path between the categories of two locations on the semantic tree, and $r$ represents the root node of the tree. In particular, if two categories are equal, the shortest path between them has length 0, while if they have the same parent category, its length is 2. The path length is normalized by the sum of the two nodes' depths, that is, their distances to the root node.
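One reading of Equation (7) is sketched below: the shortest-path length between two category nodes divided by the sum of their depths, which keeps the distance in [0, 1]. Because the equation itself is not reproduced here, this exact form is an assumption, and the small category tree is hypothetical rather than the actual Foursquare hierarchy.

```python
def path_to_root(node, parent):
    """Nodes from `node` up to the root (inclusive); the root's parent is None."""
    path = [node]
    while parent[node] is not None:
        node = parent[node]
        path.append(node)
    return path


def semantic_distance(a, b, parent):
    """Shortest-path length between categories a and b on the category tree,
    normalized by the sum of their depths (distances to the root)."""
    if a == b:
        return 0.0
    up_a, up_b = path_to_root(a, parent), path_to_root(b, parent)
    on_b = set(up_b)
    lca = next(n for n in up_a if n in on_b)       # lowest common ancestor
    shortest_path = up_a.index(lca) + up_b.index(lca)
    return shortest_path / ((len(up_a) - 1) + (len(up_b) - 1))


# Hypothetical two-level category tree (names are illustrative only).
parent = {"root": None, "Food": "root", "Nightlife": "root",
          "Cafe": "Food", "Pizza Place": "Food", "Bar": "Nightlife"}
print(semantic_distance("Cafe", "Pizza Place", parent))  # 0.5
print(semantic_distance("Cafe", "Bar", parent))          # 1.0
```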

The second objective, i.e., (ii) maintaining the semantic utility of the actual location to the greatest extent, is defined as selecting M candidate obfuscated locations, denoted by $S_l$, that are most similar to the actual location $l$ in terms of location semantics. The second objective is thus formulated as minimizing the semantic distances between the candidate obfuscated locations and the actual location, as given by Equation (8).

Combining the above two objectives, the multi-objective utility optimization problem is modeled as Equation (9), where $S_l^*$ represents the set of utility-optimized candidate obfuscated locations corresponding to the actual location $l$.

4.3.2. Privately Selecting Candidate Obfuscated Locations

Solution methods for multi-objective optimization problems include particle swarm optimization, ant colony optimization, and the genetic algorithm [28]. Among these approaches, the genetic algorithm is well known for its high performance. It is based on a natural selection process that mimics biological evolution. Since recent work has demonstrated that accessing actual data without any privacy-protection measures could compromise individual privacy, Zhang et al. [29] proposed a differentially private genetic algorithm. However, their work is not designed for high-dimensional data such as trajectories, so we adapt it as follows to support trajectory data.

According to the multi-objective utility optimization problem defined in Equation (9), we define the objective function of the genetic algorithm, $f$, in Equation (10), where $w_1$ indicates the data curator's preference for the geographical utility and $w_2$ the preference for the semantic utility. For example, if the released data were used for early LBS that relied more on the geographical utility, e.g., point-of-interest extraction [30], then $w_1$ should be greater than $w_2$, namely, $w_1 > w_2$. The geographical distance and the semantic distance in the equation are shifted and rescaled so that they end up having the same range.
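A minimal sketch of the objective in Equation (10) follows. The exact equation is not reproduced here, so its form is an assumption: both total distances are min-max rescaled to [0, 1] over the current population and combined as a weighted sum of (1 - distance) with $w_1 + w_2 = 1$, so that larger values are better and the score stays in [0, 1]. The distance functions and toy data are hypothetical.

```python
import math


def minmax(values):
    """Shift and rescale values to [0, 1]; constant inputs map to 0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def fitness(candidate_sets, actual, geo_dist, sem_dist, w1=0.5, w2=0.5):
    """Score candidate location sets; larger means better utility.

    Assumed form: w1 * (1 - normalized total geographic distance)
                + w2 * (1 - normalized total semantic distance), w1 + w2 = 1.
    """
    geo = [sum(geo_dist(x, actual) for x in S) for S in candidate_sets]
    sem = [sum(sem_dist(x, actual) for x in S) for S in candidate_sets]
    return [w1 * (1 - g) + w2 * (1 - s) for g, s in zip(minmax(geo), minmax(sem))]


def geo(a, b):
    """Toy geographic distance on (x, y, category) tuples."""
    return math.dist(a[:2], b[:2])


def sem(a, b):
    """Toy 0/1 semantic distance on the category field."""
    return 0.0 if a[2] == b[2] else 1.0


actual = (0, 0, "cafe")
sets = [[(0, 1, "cafe"), (1, 0, "cafe")], [(5, 5, "bar"), (6, 6, "bar")]]
print(fitness(sets, actual, geo, sem))  # [1.0, 0.0]
```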

In addition, the genetic algorithm involves four operations, namely, encoding, selection, crossover, and mutation. (i) The encoding operation represents a solution of the optimization problem as a location set. (ii) The selection operation randomly chooses one or more location sets from an intermediate set, favoring those with larger objective function values. (iii) The crossover operation randomly exchanges some locations in one location set with their counterparts in another location set. (iv) The mutation operation randomly replaces a location in a location set with another location. Note that the selection operation accesses actual (private) data, so it must be randomized to ensure differential privacy. In contrast, the other three operations only access the perturbed results, so no extra perturbation is required according to the post-processing property of differential privacy in Section 3. We formulate the procedure of privately selecting a utility-optimized candidate obfuscated location set in Algorithm 3.

| Algorithm 3 Privately selecting utility-optimized candidate obfuscated locations. |

Input: actual location $l$, privacy budget $\epsilon_2$, size of candidate obfuscated location set M, number of intermediate sets m, number of selected sets $m'$, number of iterations r, the cluster C containing $l$. Output: candidate obfuscated location set $S_l$.

1: // encoding operation

2: Initialize the intermediate set $\mathcal{I}$ with m candidate obfuscated location sets randomly sampled from the cluster C, where the size of each set is M.

3: for i = 1 to r do

4: // selection operation

5: Initialize the selected set $\mathcal{Q}$ ⟵ ∅.

6: for each $S \in \mathcal{I}$ do

7: Compute the selection probability $\Pr(S)$ with the exponential mechanism.

8: end for

9: Randomly sample m sets from $\mathcal{I}$ following $\Pr$ and put them into $\mathcal{Q}$.

10: Set $\mathcal{I}$ ⟵ ∅.

11: for j = 1 to (number of pairs) do

12: Randomly select two sets $S_a, S_b \in \mathcal{Q}$.

13: // crossover operation

14: Randomly crossover $S_a$ and $S_b$.

15: // mutation operation

16: Randomly mutate $S_a$ and $S_b$.

17: Add $S_a$ and $S_b$ into $\mathcal{I}$.

18: end for

19: end for

20: for each $S \in \mathcal{I}$ do

21: Compute the selection probability $\Pr(S)$ with the exponential mechanism.

22: end for

23: Randomly select a set $S_l$ following $\Pr$ from $\mathcal{I}$.

24: return $S_l$.

|

In lines 1 and 2, we encode the solution of the optimization problem as a location set. The intermediate set $\mathcal{I}$ is initialized with m sets of candidate obfuscated locations, where each set is composed of M locations randomly selected from the cluster C. The initial intermediate sets provide a starting point for the genetic algorithm's search for the optimal solution.

In lines 3 to 23, we select the utility-optimized candidate obfuscated location set in a differentially private and iterative manner. We uniformly divide the privacy budget $\epsilon_2$ into r pieces, so each iteration consumes $\epsilon_2 / r$.

In lines 4 to 9, we employ the selection operation to choose m candidate obfuscated location sets that preserve the geographical and semantic utility to the greatest extent. Since the selection accesses the actual trajectory data, we use the exponential mechanism to provide a differential privacy guarantee, where the probability $\Pr(S)$ of a candidate obfuscated location set $S$ being chosen grows exponentially with its objective function value $f(S)$. In line 7, the sensitivity of the exponential mechanism equals 1. In line 9, we randomly sample m location sets without replacement from the intermediate set $\mathcal{I}$ following the probability distribution $\Pr$, which can be achieved with a biased roulette wheel.
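The selection step can be sketched with the standard form of the exponential mechanism, in which a set with score $f(S)$ is drawn with probability proportional to $\exp(\epsilon' f(S) / (2\Delta f))$, here with per-iteration budget $\epsilon' = \epsilon_2 / r$ and $\Delta f = 1$. Whether Theorem 2 uses exactly this scaling is an assumption; the roulette-wheel sampler illustrates sampling without replacement, and the function names and budget values are hypothetical.

```python
import math
import random


def exp_mech_probs(scores, epsilon, sensitivity=1.0):
    """Exponential mechanism: Pr[i] is proportional to exp(eps * score_i / (2 * sensitivity))."""
    top = max(scores)  # subtracting the max does not change the ratios
    weights = [math.exp(epsilon * (s - top) / (2 * sensitivity)) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


def roulette_sample(items, probs, k, rng=random):
    """Draw k distinct items without replacement by repeated roulette-wheel spins."""
    pool = list(zip(items, probs))
    chosen = []
    for _ in range(min(k, len(pool))):
        spin = rng.random() * sum(p for _, p in pool)
        acc = 0.0
        for idx, (item, p) in enumerate(pool):
            acc += p
            if spin < acc:
                break
        # If rounding leaves spin >= acc, the loop ends on the last slot.
        chosen.append(item)
        pool.pop(idx)
    return chosen


# Per-iteration budget eps2 / r, e.g. eps2 = 1.0 and r = 10 (hypothetical values).
probs = exp_mech_probs([0.9, 0.5, 0.1], epsilon=1.0 / 10)
picked = roulette_sample(["S1", "S2", "S3"], probs, k=2, rng=random.Random(0))
```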

In line 11, we randomly select pairs of location sets from the selected set $\mathcal{Q}$ and put them into the intermediate set $\mathcal{I}$. To this end, first, we randomly select two location sets, $S_a$ and $S_b$, from $\mathcal{Q}$. Next, we cross over these two location sets by randomly exchanging some of the locations in $S_a$ with their counterparts in $S_b$. Then, we randomly mutate $S_a$ (and $S_b$) by replacing a location in it with another location in the cluster C. Finally, we put $S_a$ and $S_b$ into the intermediate set $\mathcal{I}$.
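The crossover and mutation operations might look as follows. Exchanging locations position by position, swapping each position with probability 0.5, and skipping swaps that would create duplicate locations are assumptions of this sketch, not details given in the text.

```python
import random


def crossover(a, b, rng=random):
    """Exchange a random subset of positions between two equal-size location sets.

    A swap is skipped if it would duplicate a location inside either set.
    """
    a, b = list(a), list(b)
    for j in range(len(a)):
        if rng.random() < 0.5 and a[j] not in b and b[j] not in a:
            a[j], b[j] = b[j], a[j]
    return a, b


def mutate(s, cluster, rng=random):
    """Replace one random location in s with a cluster location not already in s."""
    s = list(s)
    options = [x for x in cluster if x not in s]
    if options:
        s[rng.randrange(len(s))] = rng.choice(options)
    return s


rng = random.Random(7)
child_a, child_b = crossover(["l1", "l2", "l3"], ["l4", "l5", "l6"], rng)
child_a = mutate(child_a, cluster=[f"l{i}" for i in range(1, 10)], rng=rng)
```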

After the iteration ends in line 19, we randomly choose a candidate obfuscated location set $S_l$ from the intermediate set $\mathcal{I}$, following the probability distribution $\Pr$, as the algorithm output (lines 20 to 23). The relation between m and $m'$ is determined empirically.

With Algorithm 3, we generate the candidate obfuscated location set $S_{l_t}$ for the actual location $l_t$ at each time instant t of the actual trajectory $T$. We thus obtain a sequence of candidate obfuscated location sets corresponding to $T$, denoted by $\mathcal{S}_T = (S_{l_1}, \dots, S_{l_n})$, which will be used for synthesizing the obfuscated trajectories of $T$. We do the same for each actual trajectory in $\mathcal{D}$.

4.4. Privately Synthesizing Obfuscated Trajectories

A common idea of existing trajectory synthesis methods is to build a trajectory generator that learns population movement patterns and then to produce the obfuscated trajectory dataset $\mathcal{D}'$ in a generative manner. However, individual movement patterns could be destroyed, so numerous data analysis tasks that rely on individual-level data utility, e.g., periodic pattern mining [9], could suffer poor performance. To tackle this issue, we synthesize the obfuscated trajectories for each individual, i.e., each actual trajectory, independently. In this case, the privacy budgets consumed by all individuals do not accumulate, according to the parallel composition property of differential privacy. In other words, for a fixed total privacy budget, we can spare a larger privacy budget for the trajectory synthesis of a single individual, resulting in less noise injection and thus better preservation of data utility. In addition, to defend against de-anonymization attacks, for each actual trajectory we privately synthesize multiple indistinguishable obfuscated trajectories that share the most similar data utility with the actual trajectory.
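The budget arithmetic behind this choice can be illustrated as follows. The per-individual budget of 0.5 and the population size are hypothetical; the point is that parallel composition charges the maximum over disjoint subsets of the data rather than the sum.

```python
def sequential_cost(epsilons):
    """Mechanisms applied to the same data: budgets add up."""
    return sum(epsilons)


def parallel_cost(epsilons):
    """Mechanisms applied to disjoint data (e.g., one per individual): the maximum counts."""
    return max(epsilons, default=0.0)


# 1,000 individuals, each trajectory synthesized independently with eps3 = 0.5.
print(parallel_cost([0.5] * 1000))    # 0.5
print(sequential_cost([0.5] * 1000))  # 500.0
```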

In the previous subsection, for any actual trajectory $T$, we obtained a sequence of candidate obfuscated location sets $\mathcal{S}_T$. To privately synthesize obfuscated trajectories for $T$, first, to preserve the geographical and semantic utility, we capture individual movement patterns with a conditional random field (CRF). Second, we synthesize a set of candidate obfuscated trajectories by CRF sequence decoding over the sequence of candidate obfuscated location sets. Then, some candidates are privately selected as the (final) obfuscated trajectories. Since the obfuscated trajectories maintain data utility similar to that of the actual trajectory, they cannot be distinguished from each other, providing a defense against de-anonymization attacks. We formalize these steps in the pseudocode of Algorithm 4.

| Algorithm 4 Privately synthesizing obfuscated trajectories. |

Input: sequence of candidate obfuscated location sets $\mathcal{S}_T$, actual trajectory $T$, sensitivity $\Delta$, number of candidate obfuscated trajectories N, number of (final) obfuscated trajectories $N'$. Output: a set of (final) obfuscated trajectories $\mathcal{O}_T$.

1: Construct the obfuscated trajectory synthesizer with $T$.

2: // Produce a number of candidate obfuscated trajectories.

3: $\hat{\mathcal{T}}$ ⟵ ∅.

4: for $i = 1$ to N do

5: $(\hat{T}_i, p_i)$ ⟵ Produce the i-th most probable candidate obfuscated trajectory and its probability with Viterbi, $\mathcal{S}_T$, and the synthesizer.

6: Add $\hat{T}_i$ into $\hat{\mathcal{T}}$.

7: end for

8: // Privately select the (final) obfuscated trajectories from the candidates.

9: for $i = 1$ to N do

10: Calculate the probability of $\hat{T}_i$ being selected as a (final) obfuscated trajectory, i.e., $\Pr(\hat{T}_i)$, with Equation (11).

11: end for

12: $\mathcal{O}_T$ ⟵ Randomly sample $N'$ candidate obfuscated trajectories from $\hat{\mathcal{T}}$ following its probability distribution without replacement.

13: return $\mathcal{O}_T$.

|

4.4.1. Constructing Obfuscated Trajectory Synthesizer

CRF is a discriminative undirected graphical model that supports auxiliary dependencies within a sequence and performs well on many problems, such as sequence inference [31]. Recent works have shown that CRF can capture individual movement patterns from spatial, temporal, and semantic aspects, even when the trajectory data are sparse [23]. In particular, CRF is good at learning the transition patterns between locations, which leads to good preservation of data utility such as periodic movement patterns.

In this work, we consider the most important special case of CRF for modeling sequences, i.e., the linear-chain CRF, which models the dependency between the actual trajectory and a mobility feature sequence. We consider the following mobility features for each actual location l in the actual trajectory: the time when the individual visits l, the day of the week when the individual visits l, the time elapsed since the previous time instant, and the category of l. We extract these mobility features for each actual location in the actual trajectory to obtain the mobility feature sequence.
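The four mobility features can be extracted as sketched below. The input representation (location identifiers paired with `datetime` timestamps), hour granularity for the visit time, hours as the unit of elapsed time, and the category names are assumptions for illustration.

```python
from datetime import datetime


def mobility_features(visits, category_of):
    """Build one feature dict per visit of a trajectory.

    visits: time-ordered list of (location_id, datetime) pairs.
    category_of: mapping from location_id to its semantic category.
    """
    features, prev_time = [], None
    for loc, ts in visits:
        elapsed_h = 0.0 if prev_time is None else (ts - prev_time).total_seconds() / 3600
        features.append({
            "hour": ts.hour,              # time of the visit
            "weekday": ts.weekday(),      # day of the week, Monday == 0
            "elapsed_h": elapsed_h,       # hours since the previous time instant
            "category": category_of[loc],
        })
        prev_time = ts
    return features


visits = [("a", datetime(2024, 1, 1, 9, 0)), ("b", datetime(2024, 1, 1, 12, 30))]
feats = mobility_features(visits, {"a": "Office", "b": "Restaurant"})
print(feats[1])  # {'hour': 12, 'weekday': 0, 'elapsed_h': 3.5, 'category': 'Restaurant'}
```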

As shown in line 1 of Algorithm 4, the obfuscated trajectory synthesizer is essentially a CRF trained on the actual trajectory $T$. We train the CRF following our previous work [23]. The idea is as follows. First, we split both the actual trajectory and the corresponding mobility feature sequence into weekly sub-sequences to enrich the training data. Then, we train the CRF by estimating the parameters that maximize the probability of the actual trajectory conditioned on the mobility feature sequence. After training, the individual movement patterns of the actual trajectory $T$ have been “remembered” by the parameters of the CRF.
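Splitting a trajectory into weekly training sub-sequences can be sketched as below. Using ISO calendar weeks (Monday to Sunday) as the week boundary is an assumption; the same split would be applied to the aligned mobility feature sequence. Training the CRF itself is not shown.

```python
from datetime import datetime
from itertools import groupby


def split_by_week(visits):
    """Split a time-ordered list of (location, datetime) visits into ISO-week chunks."""
    def week_key(visit):
        iso = visit[1].isocalendar()
        return (iso[0], iso[1])      # (ISO year, ISO week number)
    return [list(chunk) for _, chunk in groupby(visits, key=week_key)]


visits = [("a", datetime(2024, 1, 1, 9)), ("b", datetime(2024, 1, 7, 18)),
          ("a", datetime(2024, 1, 8, 9))]
print([len(week) for week in split_by_week(visits)])  # [2, 1]
```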

4.4.2. Privately Selecting Obfuscated Trajectories

As given by lines 2 to 6 of Algorithm 4, we produce candidate obfuscated trajectories by sequence decoding with the obfuscated trajectory synthesizer. Sequence decoding refers to finding the most probable trajectories corresponding to a given mobility feature sequence, which can be solved by the Viterbi algorithm. An improved version [32] can produce a number of most probable trajectories that share similar movement patterns, which inspires us to produce a number of indistinguishable obfuscated trajectories to prevent de-anonymization attacks. The trajectories produced by Viterbi are called candidate obfuscated trajectories, denoted by $\hat{\mathcal{T}}$. Let N denote the number of candidate obfuscated trajectories; thus, $|\hat{\mathcal{T}}| = N$. The Viterbi algorithm can be further improved by restricting the sample space to the Cartesian product of the candidate obfuscated location sets in $\mathcal{S}_T$, because the locations that cannot preserve the data utility of the actual locations well have already been pruned by the utility optimization in Section 4.3.2. As shown in line 5, we use this improved Viterbi algorithm to produce the i-th most probable candidate obfuscated trajectory $\hat{T}_i$, together with its probability $p_i$, where $i = 1, \dots, N$. The probability $p_i$ represents how likely Viterbi regards $\hat{T}_i$ as the actual trajectory $T$, which also indicates to what extent $\hat{T}_i$ preserves the movement patterns in $T$. The probability can be calculated directly by the improved Viterbi algorithm; we omit the computational details, which can be found in [32]. In line 6 of Algorithm 4, we collect the produced candidate obfuscated trajectories in the set $\hat{\mathcal{T}} = \{\hat{T}_1, \dots, \hat{T}_N\}$.
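The restricted k-best decoding can be illustrated with a generic list-Viterbi sketch; it is not the algorithm of [32], whose details are omitted here. It keeps the k best partial paths per candidate location, restricts each time step to the candidate set $S_{l_t}$, and normalizes path scores with the forward algorithm over the same restricted space to obtain $p_i$. Normalizing over the restricted space and the toy potentials are assumptions; in UDPT the potentials would come from the trained CRF and the mobility features.

```python
import heapq
import math


def logsumexp(xs):
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def k_best_viterbi(candidates, emit, trans, k):
    """Top-k paths of a linear-chain model restricted to candidate sets.

    candidates[t] lists the allowed locations at time t (the sets in S_T).
    emit(t, y) and trans(y_prev, y) return log-potentials.
    Returns up to k (path, prob) pairs in decreasing order of probability,
    where prob is normalized over all paths in the restricted space.
    """
    # beams[y]: up to k best (log-score, path) pairs ending in y.
    beams = {y: [(emit(0, y), (y,))] for y in candidates[0]}
    alpha = {y: emit(0, y) for y in candidates[0]}   # forward log-sums
    for t in range(1, len(candidates)):
        new_beams, new_alpha = {}, {}
        for y in candidates[t]:
            ext = [(s + trans(yp, y) + emit(t, y), path + (y,))
                   for yp, beam in beams.items() for s, path in beam]
            new_beams[y] = heapq.nlargest(k, ext, key=lambda e: e[0])
            new_alpha[y] = logsumexp([a + trans(yp, y) for yp, a in alpha.items()]) + emit(t, y)
        beams, alpha = new_beams, new_alpha
    log_z = logsumexp(list(alpha.values()))
    best = heapq.nlargest(k, (e for beam in beams.values() for e in beam), key=lambda e: e[0])
    return [(list(path), math.exp(s - log_z)) for s, path in best]


# Toy potentials (hypothetical): path weights are ac=6, ad=3, bc=2, bd=1.
emit_table = {(0, "a"): math.log(3), (0, "b"): 0.0, (1, "c"): math.log(2), (1, "d"): 0.0}


def toy_emit(t, y):
    return emit_table[(t, y)]


def no_trans(y_prev, y):
    return 0.0


result = k_best_viterbi([["a", "b"], ["c", "d"]], toy_emit, no_trans, k=2)
# top path ['a', 'c'] with probability about 0.5, then ['a', 'd'] with about 0.25
```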

The Viterbi algorithm is essentially a query that takes as input $\mathcal{S}_T$ and the synthesizer, and produces categorical outputs, i.e., a number of candidate obfuscated trajectories. Note that the synthesizer is constructed from the actual trajectory $T$ without any privacy-protecting measure. Hence, the query result is sensitive to the change of a single location in $T$, and a location inference attacker could derive the actual location by observing the query result. To tackle this issue, we use the exponential mechanism to perturb the outputs of the Viterbi algorithm. Recalling the exponential mechanism in Theorem 2, the idea is to regard the probability $p_i$ as the score of the candidate obfuscated trajectory $\hat{T}_i$, and then to select the (final) obfuscated trajectories from the candidates $\hat{\mathcal{T}}$ with probability proportional to $\exp\left(\frac{\epsilon_3 p_i}{2\Delta}\right)$. The normalized probability over all possible outputs is defined in Equation (11).

where $\epsilon_3$ is the privacy budget of the exponential mechanism, which is determined by the data curator, as shown in line 1 of Algorithm 1, and $\Delta$ represents the maximum change of the score when a single location is removed from or added to the actual trajectory $T$, according to the definition of differential privacy. Since the probability $p_i$ takes values in $[0, 1]$, we have $\Delta = 1$.
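Equation (11) and the sampling in lines 8 to 12 of Algorithm 4 can be sketched as follows, assuming the standard exponential-mechanism form $\Pr(\hat{T}_i) \propto \exp(\epsilon_3 p_i / (2\Delta))$ with $\Delta = 1$. The candidate names and probabilities are hypothetical.

```python
import math
import random


def selection_distribution(path_probs, eps3, sensitivity=1.0):
    """Pr(T_i) proportional to exp(eps3 * p_i / (2 * sensitivity)), normalized over candidates."""
    weights = [math.exp(eps3 * p / (2 * sensitivity)) for p in path_probs]
    total = sum(weights)
    return [w / total for w in weights]


def select_final(trajectories, path_probs, eps3, n_final, rng=random):
    """Sample n_final distinct candidates without replacement.

    random.choices renormalizes the remaining weights on every draw.
    """
    probs = selection_distribution(path_probs, eps3)
    pool = list(range(len(trajectories)))
    chosen = []
    for _ in range(min(n_final, len(pool))):
        idx = rng.choices(pool, weights=[probs[i] for i in pool], k=1)[0]
        pool.remove(idx)
        chosen.append(trajectories[idx])
    return chosen


# Hypothetical candidates and their Viterbi probabilities p_i.
cands = ["T1", "T2", "T3", "T4"]
p = [0.5, 0.25, 0.15, 0.10]
probs = selection_distribution(p, eps3=1.0)
chosen = select_final(cands, p, eps3=1.0, n_final=2, rng=random.Random(0))
```

Because each $p_i$ lies in [0, 1], with $\epsilon_3 = 1$ and $\Delta = 1$ the selection weights differ by at most a factor of $e^{0.5}$, so the selection is close to uniform; a larger $\epsilon_3$ concentrates it on the most probable candidates.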

As shown in lines 8 to 12 of Algorithm 4, we randomly sample $N'$ candidates from $\hat{\mathcal{T}}$ without replacement, following the probability distribution in Equation (11), to constitute the set of final obfuscated trajectories $\mathcal{O}_T$. The choice of $N'$ involves two aspects. On the one hand, a larger number of obfuscated trajectories increases the indistinguishability between the trajectories and thus helps prevent de-anonymization attacks, because it is more difficult for the attacker to find the correct linkage between numerous obfuscated trajectories and individual identities. On the other hand, a smaller number of obfuscated trajectories means that fewer candidates whose movement patterns are dissimilar to the actual trajectory are chosen as (final) obfuscated trajectories. Therefore, $N'$ is set as a moderate trade-off between these two aspects.

Let $\mathcal{O}_T$ denote the final obfuscated trajectory set. For each actual trajectory $T \in \mathcal{D}$, we independently select the obfuscated trajectories with the same privacy budget $\epsilon_3$. According to the parallel composition property of differential privacy in Theorem 4, the privacy budgets do not accumulate. In the end, we merge all of the obfuscated trajectory sets into a single set, i.e., the obfuscated trajectory dataset $\mathcal{D}' = \bigcup_{T \in \mathcal{D}} \mathcal{O}_T$.