AraConv: Developing an Arabic Task-Oriented Dialogue System Using Multi-Lingual Transformer Model mT5

Abstract

:1. Introduction

- Development of the first Arabic task-oriented dialogue dataset (Arabic-TOD) with 1500 dialogues. By translating the English BiToD dataset [1], we produced a valuable benchmark for further exploring Arabic task-oriented DS. Furthermore, Arabic-TOD is the first code-switching dialogue dataset for Arabic task-oriented DS.

- Introduction of the first Arabic end-to-end generative model, the AraConv model, short for Arabic Conversation, that achieves both DST and response generation tasks together in an end-to-end setting.

2. Related Works

2.1. English Task Oriented Dialogue Systems

2.2. Arabic Task-Oriented Dialogue Systems

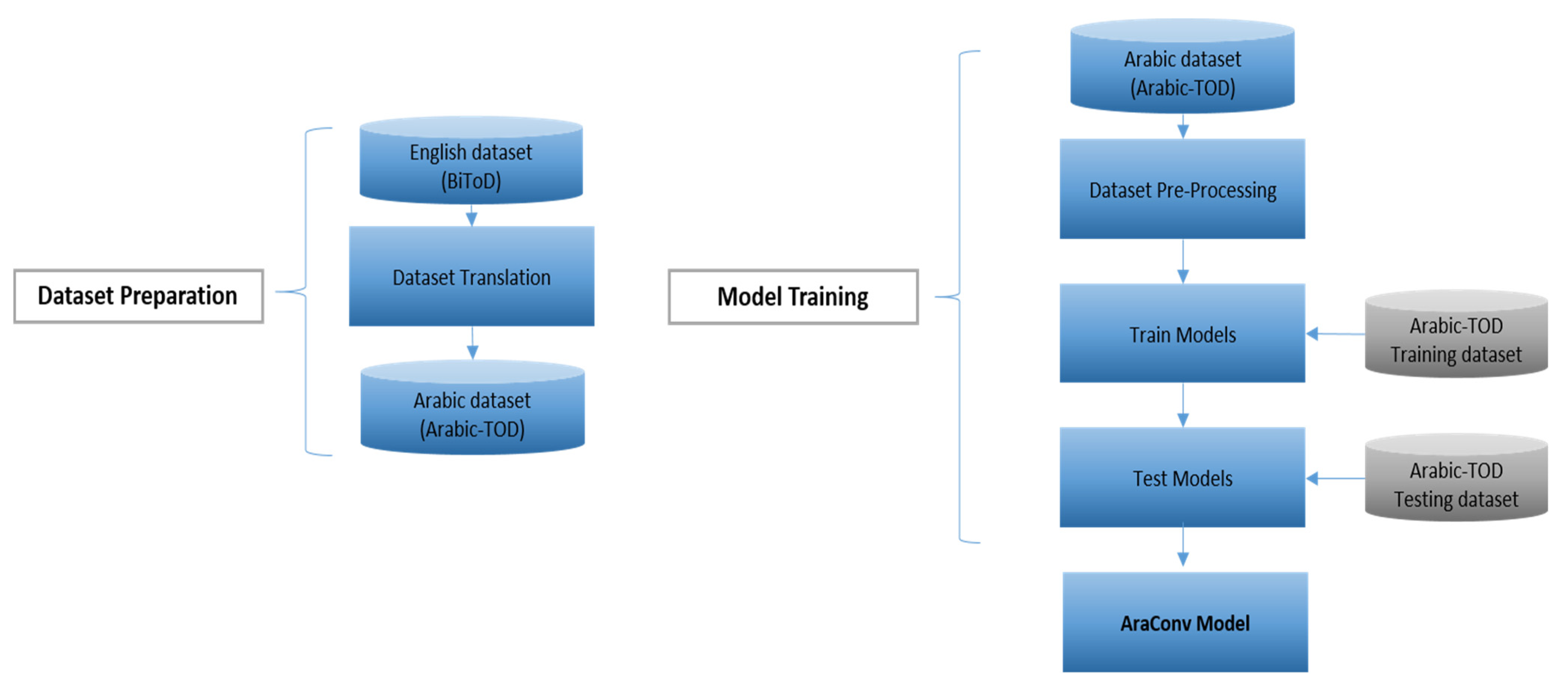

3. Method

3.1. Arabic Task-Oriented DS Dataset

3.2. Structure and Organization of Arabic-TOD Dataset

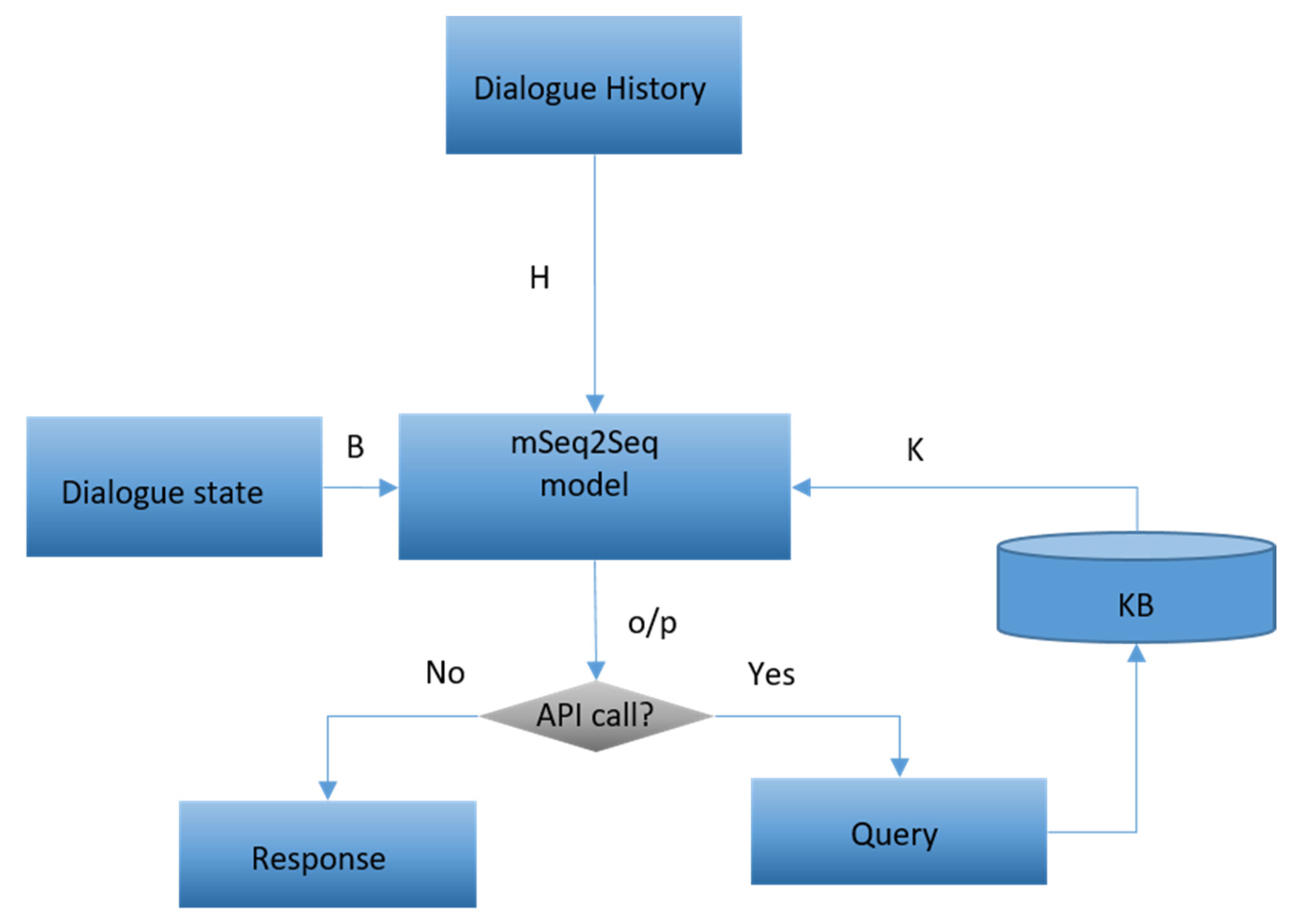

3.3. Model Architecture

4. Experiments

4.1. Evaluation Metrics

- the BLEU metric to assess the generated response fluency;

- the API call accuracy (APIAcc) metric to assess if the system generates the correct API call;

- the task success rate (TSR) metric to assess whether the system finds the correct entity and provides all of the requested information for a particular task. TSR can be defined as

- the dialogue success rate (DSR) metric to evaluate whether the system accomplishes all of the dialogue tasks. DSR can be defined as

- the language understanding score to indicate the extent to which the system understands user inputs; and

- the response appropriateness score to indicate whether the response is appropriate and human-like.

4.2. Experimental Setup

4.3. Baseline

4.4. Experiments

- Non-equivalent (NQ): The size of the Arabic-TOD dataset is not equal to the English BiToD dataset. We trained the model with 1000 Arabic dialogues and 2952 English dialogues.

- Equivalent (Q): The size of the Arabic-TOD dataset and the English BiToD dataset are equal (1000 dialogues for training).

- Non-equivalent (NQ): The size of the Arabic-TOD dataset is not equal to the English or Chinese BiToD dataset. We trained the model with 1000 Arabic dialogues, 2952 English dialogues, and 2835 Chinese dialogues.

- Equivalent (Q): The size of the Arabic-TOD dataset, the English BiToD dataset, and the Chinese BiToD are equal (1000 dialogues for training).

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lin, Z.; Madotto, A.; Winata, G.I.; Xu, P.; Jiang, F.; Hu, Y.; Shi, C.; Fung, P. BiToD: A Bilingual Multi-Domain Dataset For Task-Oriented Dialogue Modeling. arXiv 2021, arXiv:2106.02787. [Google Scholar]

- Huang, M.; Zhu, X.; Gao, J. Challenges in building intelligent open-domain dialog systems. ACM Trans. Inf. Syst. 2019, 38, 1–32. [Google Scholar] [CrossRef]

- AlHagbani, E.S.; Khan, M.B. Challenges facing the development of the Arabic chatbot. First Int. Work. Pattern Recognit. Int. Soc. Opt. Photonics 2016, 10011, 7. [Google Scholar] [CrossRef]

- Darwish, K.; Habash, N.; Abbas, M.; Al-Khalifa, H.; Al-Natsheh, H.T.; Bouamor, H.; Bouzoubaa, K.; Cavalli-Sforza, V.; El-Beltagy, S.R.; El-Hajj, W.; et al. A Panoramic Survey of Natural Language Processing in the Arab World. Commun. ACM 2021, 64, 72–81. [Google Scholar] [CrossRef]

- Xue, L.; Constant, N.; Roberts, A.; Kale, M.; Al-Rfou’, R.; Siddhant, A.; Barua, A.; Raffel, C. mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, online, 15–20 June 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 483–498. [Google Scholar] [CrossRef]

- McTear, M. Conversational AI: Dialogue Systems, Conversational Agents, and Chatbots; Morgan & Claypool Publishers LLC: San Rafael, CA, USA, 2020; Volume 13. [Google Scholar]

- Qin, L.; Xu, X.; Che, W.; Zhang, Y.; Liu, T. Dynamic Fusion Network for Multi-Domain End-to-end Task-Oriented Dialog. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, online, 5–10 July 2020; pp. 6344–6354. [Google Scholar] [CrossRef]

- Lei, W.; Jin, X.; Ren, Z.; He, X.; Kan, M.Y.; Yin, D. Sequicity: Simplifying task-oriented dialogue systems with single sequence-to-sequence architectures. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 1437–1447. [Google Scholar] [CrossRef] [Green Version]

- Budzianowski, P.; Vulić, I. Hello, It’s GPT-2-How Can I Help You? Towards the Use of Pretrained Language Models for Task-Oriented Dialogue Systems. In Proceedings of the 3rd Workshop on Neural Generation and Translation (WNGT 2019), Hong Kong, China, 4 November 2019; pp. 15–22. [Google Scholar] [CrossRef] [Green Version]

- Ham, D.; Lee, J.-G.; Jang, Y.; Kim, K.-E. End-to-End Neural Pipeline for Goal-Oriented Dialogue Systems using GPT-2. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, online, 5–10 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; Volume 2, pp. 583–592. [Google Scholar] [CrossRef]

- Hosseini-Asl, E.; McCann, B.; Wu, C.S.; Yavuz, S.; Socher, R. A simple language model for task-oriented dialogue. Adv. Neural Inf. Process. Syst. 2020, 33, 20179–20191. [Google Scholar]

- Peng, B.; Li, C.; Li, J.; Shayandeh, S.; Liden, L.; Gao, J. SOLOIST: Building Task Bots at Scale with Transfer Learning and Machine Teaching. Trans. Assoc. Comput. Linguist. 2021, 9, 807–824. [Google Scholar] [CrossRef]

- Yang, Y.; Li, Y.; Quan, X. UBAR: Towards Fully End-to-End Task-Oriented Dialog Systems with GPT-2. arXiv 2020, arXiv:2012.03539. [Google Scholar]

- Lin, Z.; Madotto, A.; Winata, G.I.; Fung, P. MinTL: Minimalist Transfer Learning for Task-Oriented Dialogue Systems. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 3391–3405. [Google Scholar] [CrossRef]

- Wang, W.; Zhang, Z.; Guo, J.; Dai, Y.; Chen, B.; Luo, W. Task-Oriented Dialogue System as Natural Language Generation. arXiv 2021, arXiv:2108.13679. [Google Scholar]

- Peng, B.; Zhu, C.; Li, C.; Li, X.; Li, J.; Zeng, M.; Gao, J. Few-shot Natural Language Generation for Task-Oriented Dialog. arXiv 2020, arXiv:2002.12328. [Google Scholar]

- Wu, C.-S.; Hoi, S.C.H.; Socher, R.; Xiong, C. TOD-BERT: Pre-trained Natural Language Understanding for Task-Oriented Dialogue. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 917–929. [Google Scholar] [CrossRef]

- Madotto, A.; Liu, Z.; Lin, Z.; Fung, P. Language Models as Few-Shot Learner for Task-Oriented Dialogue Systems. arXiv 2020, arXiv:2008.06239. [Google Scholar]

- Campagna, G.; Foryciarz, A.; Moradshahi, M.; Lam, M. Zero-Shot Transfer Learning with Synthesized Data for Multi-Domain Dialogue State Tracking. arXiv 2020, arXiv:2005.00891. [Google Scholar]

- Zhang, Y.; Ou, Z.; Hu, M.; Feng, J. A Probabilistic End-To-End Task-Oriented Dialog Model with Latent Belief States towards Semi-Supervised Learning. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 9207–9219. [Google Scholar] [CrossRef]

- Kulhánek, J.; Hudeček, V.; Nekvinda, T.; Dušek, O. AuGPT: Dialogue with Pre-trained Language Models and Data Augmentation. arXiv 2021, arXiv:2102.05126. [Google Scholar]

- Gao, S.; Takanobu, R.; Peng, W.; Liu, Q.; Huang, M. HyKnow: End-to-End Task-Oriented Dialog Modeling with Hybrid Knowledge Management. In Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 1591–1602. [Google Scholar] [CrossRef]

- Lee, H.; Lee, J.; Kim, T.Y. SUMBT: Slot-utterance matching for universal and scalable belief tracking. arXiv 2019, arXiv:1907.07421. [Google Scholar]

- Chao, G.L.; Lane, I. BERT-DST: Scalable end-to-end dialogue state tracking with bidirectional encoder representations from transformer. Proc. Annu. Conf. Int. Speech Commun. Assoc. Interspeech 2019, 2019, 1468–1472. [Google Scholar] [CrossRef] [Green Version]

- Kim, S.; Yang, S.; Kim, G.; Lee, S.-W. Efficient Dialogue State Tracking by Selectively Overwriting Memory. arXiv 2020, arXiv:1911.03906. [Google Scholar] [CrossRef]

- Kumar, A.; Ku, P.; Goyal, A.; Metallinou, A.; Hakkani-Tur, D. MA-DST: Multi-Attention-Based Scalable Dialog State Tracking. Proc. Conf. AAAI Artif. Intell. 2020, 34, 8107–8114. [Google Scholar] [CrossRef]

- Heck, M.; van Niekerk, C.; Lubis, N.; Geishauser, C.; Lin, H.-C.; Moresi, M.; Gašić, M. TripPy: A Triple Copy Strategy for Value Independent Neural Dialog State Tracking. arXiv 2020, arXiv:2005.02877. [Google Scholar]

- Li, S.; Yavuz, S.; Hashimoto, K.; Li, J.; Niu, T.; Rajani, N.; Yan, X.; Zhou, Y.; Xiong, C. CoCo: Controllable Counterfactuals for Evaluating Dialogue State Trackers. arXiv 2020, arXiv:2010.12850. [Google Scholar]

- Wang, D.; Lin, C.; Liu, Q.; Wong, K.-F. Fast and Scalable Dialogue State Tracking with Explicit Modular Decomposition. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Mexico City, Mexico, 6–11 June 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 289–295. [Google Scholar] [CrossRef]

- Schuster, S.; Shah, R.; Gupta, S.; Lewis, M. Cross-lingual transfer learning for multilingual task oriented dialog. In Proceedings of the NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 3795–3805. [Google Scholar] [CrossRef] [Green Version]

- Liu, Z.; Winata, G.I.; Lin, Z.; Xu, P.; Fung, P. Attention-informed mixed-language training for zero-shot cross-lingual task-oriented dialogue systems. Proc. AAAI Conf. Artif. Intell. 2020, 34, 8433–8440. [Google Scholar] [CrossRef]

- Zhang, Y.; Ou, Z.; Yu, Z. Task-oriented dialog systems that consider multiple appropriate responses under the same context. Proc. AAAI Conf. Artif. Intell. 2020, 34, 9604–9611. [Google Scholar] [CrossRef]

- Wang, K.; Tian, J.; Wang, R.; Quan, X.; Yu, J. Multi-Domain Dialogue Acts and Response Co-Generation. arXiv 2020, arXiv:2004.12363. [Google Scholar]

- Bashir, A.M.; Hassan, A.; Rosman, B.; Duma, D.; Ahmed, M. Implementation of A Neural Natural Language Understanding Component for Arabic Dialogue Systems. Procedia Comput. Sci. 2018, 142, 222–229. [Google Scholar] [CrossRef]

- Elmadany, A.R.A.; Abdou, S.M.; Gheith, M. Improving dialogue act classification for spontaneous Arabic speech and instant messages at utterance level. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018), European Language Resources Association (ELRA), Miyazaki, Japan, 7–12 May 2018; pp. 128–134. [Google Scholar]

- Joukhadar, A.; Saghergy, H.; Kweider, L.; Ghneim, N. Arabic Dialogue Act Recognition for Textual Chatbot Systems. In Proceedings of the First International Workshop on NLP Solutions for Under Resourced Languages (NSURL 2019), Trento, Italy, 11–12 September 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 43–49. [Google Scholar]

- Al-Ajmi, A.H.; Al-Twairesh, N. Building an Arabic Flight Booking Dialogue System Using a Hybrid Rule-Based and Data Driven Approach. IEEE Access 2021, 9, 7043–7053. [Google Scholar] [CrossRef]

- Hijjawi, M.; Bandar, Z.; Crockett, K.; McLean, D. ArabChat: An arabic conversational agent. In Proceedings of the 2014 6th International Conference on Computer Science and Information Technology, CSIT 2014-Proceedings, Amman, Jordan, 26 March 2014; pp. 227–237. [Google Scholar] [CrossRef]

- Almurtadha, Y. LABEEB: Intelligent Conversational Agent Approach to Enhance Course Teaching and Allied Learning Outcomes attainment. J. Appl. Comput. Sci. Math. 2019, 13, 9–12. [Google Scholar] [CrossRef]

- Aljameel, S.; O’shea, J.; Crockett, K.; Latham, A.; Kaleem, M. LANA-I: An Arabic Conversational Intelligent Tutoring System for Children with ASD. Adv. Intell. Syst. Comput. 2019, 997, 498–516. [Google Scholar] [CrossRef]

- Moubaiddin, A.; Shalbak, O.; Hammo, B.; Obeid, N. Arabic dialogue system for hotel reservation based on natural language processing techniques. Comput. Sist. 2015, 19, 119–134. [Google Scholar] [CrossRef] [Green Version]

- Bendjamaa, F.; Nora, T. A Dialogue-System Using a Qur’anic Ontology. In Proceedings of the 2020 Second International Conference on Embedded & Distributed Systems (EDiS), Oran, Algeria, 3 November 2020; pp. 167–171. [Google Scholar]

- Fadhil, A.; AbuRa’Ed, A. Ollobot-Towards a text-based Arabic health conversational agent: Evaluation and results. In Proceedings of the Recent Advances in Natural Language Processing (RANLP), Varna, Bulgaria, 2–4 September 2019; pp. 295–303. [Google Scholar] [CrossRef]

- Al-Ghadhban, N.; Al-Twairesh, D. Nabiha: An Arabic dialect chatbot. Int. J. Adv. Comput. Sci. Appl. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 452–459. [Google Scholar] [CrossRef] [Green Version]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5999–6009. [Google Scholar]

- Mozannar, H.; Maamary, E.; el Hajal, K.; Hajj, H. Neural Arabic Question Answering. arXiv 2019, arXiv:1906.05394. [Google Scholar]

- Mayeesha, T.T.; Sarwar, A.M.; Rahman, R.M. Deep learning based question answering system in Bengali. J. Inf. Telecommun. 2020, 5, 145–178. [Google Scholar] [CrossRef]

- Naous, T.; Hokayem, C.; Hajj, H. Empathy-driven Arabic Conversational Chatbot. In Proceedings of the Fifth Arabic Natural Language Processing Workshop, Barcelona, Spain, 8 December 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 58–68. [Google Scholar]

- Razumovskaia, E.; Glavaš, G.; Majewska, O.; Ponti, E.M.; Korhonen, A.; Vulić, I. Crossing the Conversational Chasm: A Primer on Natural Language Processing for Multilingual Task-Oriented Dialogue Systems. arXiv 2021, arXiv:2104.08570. [Google Scholar]

- He, X.; Deng, L.; Hakkani-Tur, D.; Tur, G. Multi-style adaptive training for robust cross-lingual spoken language understanding. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8342–8346. [Google Scholar]

- Mrkšić, N.; Vulić, I.; Séaghdha, D.; Leviant, I.; Reichart, R.; Gašić, M.; Korhonen, A.; Young, S. Semantic Specialization of Distributional Word Vector Spaces using Monolingual and Cross-Lingual Constraints. Trans. Assoc. Comput. Linguist. 2017, 5, 309–324. [Google Scholar] [CrossRef] [Green Version]

- Castellucci, G.; Bellomaria, V.; Favalli, A.; Romagnoli, R. Multi-lingual Intent Detection and Slot Filling in a Joint BERT-based Model. arXiv 2019, arXiv:1907.02884. [Google Scholar]

- Bellomaria, V.; Castellucci, G.; Favalli, A.; Romagnoli, R. Almawave-SLU: A new dataset for SLU in Italian. arXiv 2019, arXiv:1907.07526. [Google Scholar]

- Liu, Z.; Shin, J.; Xu, Y.; Winata, G.I.; Xu, P.; Madotto, A.; Fung, P. Zero-shot cross-lingual dialogue systems with transferable latent variables. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 11 November 2019; pp. 1297–1303. [Google Scholar] [CrossRef]

- Dao, M.H.; Truong, T.H.; Nguyen, D.Q. Intent Detection and Slot Filling for Vietnamese. arXiv 2021, arXiv:2104.02021. [Google Scholar]

- Deriu, J.; Rodrigo, A.; Otegi, A.; Echegoyen, G.; Rosset, S.; Agirre, E.; Cieliebak, M. Survey on evaluation methods for dialogue systems. Artif. Intell. Rev. 2020, 54, 755–810. [Google Scholar] [CrossRef]

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67. [Google Scholar]

- Huggingface/Transformers? Transformers: State-of-the-Art Natural Language Processing for Pytorch, TensorFlow, and JAX. Available online: https://github.com/huggingface/transformers (accessed on 17 November 2021).

- PyTorch. Available online: https://pytorch.org/ (accessed on 17 November 2021).

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101. [Google Scholar]

- Siblini, W.; Pasqual, C.; Lavielle, A.; Challal, M.; Cauchois, C. Multilingual Question Answering from Formatted Text applied to Conversational Agents. arXiv 2019, arXiv:1910.04659. [Google Scholar]

- Hsu, T.Y.; Liu, C.L.; Lee, H.Y. Zero-shot Reading Comprehension by Cross-lingual Transfer Learning with Multi-lingual Language Representation Model. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 5933–5940. [Google Scholar] [CrossRef]

- Upadhyay, S.; Faruqui, M.; Tür, G.; Dilek, H.-T.; Heck, L. (Almost) Zero-shot cross-lingual spoken language understanding. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 6034–6038. [Google Scholar]

- Fleiss, J.L. Measuring nominal scale agreement among many raters. Psychol. Bull. 1971, 76, 378–382. [Google Scholar] [CrossRef]

- Liu, Y.; Gu, J.; Goyal, N.; Li, X.; Edunov, S.; Ghazvininejad, M.; Lewis, M.; Zettlemoyer, L. Multilingual Denoising Pre-training for Neural Machine Translation. Trans. Assoc. Comput. Linguist. 2020, 8, 726–742. [Google Scholar] [CrossRef]

- Nagoudi, E.M.B.; Elmadany, A.; Abdul-Mageed, M. AraT5: Text-to-Text Transformers for Arabic Language Understanding and Generation. arXiv 2021, arXiv:2109.12068. [Google Scholar]

| Model | Dataset | Back-Bone Models | Performance Metrics | |||

|---|---|---|---|---|---|---|

| BLEU | Inform Rate | Success Rate | JGA | |||

| DAMD [32] | MultiWOZ 2.1 | multi-decoder seq2seq | 16.6 | 76.4 | 60.4 | 51.45 |

| Ham [10] | MultiWOZ 2.1 | GPT-2 | 6.01 | 77.00 | 69.20 | 44.03 |

| SimpleToD [11] | MultiWOZ 2.1 | GPT-2 | 15.23 | 85.00 | 70.05 | 56.45 |

| SC-GPT [16] | MultiWOZ | GPT-2 | 30.76 | - | - | - |

| SOLOIST [12] | MultiWOZ 2.0 | GPT-2 | 16.54 | 85.50 | 72.90 | - |

| MARCO [33] | MultiWOZ 2.0 | - | 20.02 | 92.30 | 78.60 | - |

| UBAR [13] | MultiWOZ 2.1 | GPT-2 | 17.0 | 95.4 | 80.7 | 56.20 |

| ToD-BERT [17] | MultiWOZ 2.1 | BERT | - | - | - | 48.00 |

| MinTL [14] | MultiWOZ 2.0 | T5-small | 19.11 | 80.04 | 72.71 | 51.24 |

| T5-base | 18.59 | 82.15 | 74.44 | 52.07 | ||

| BART-large | 17.89 | 84.88 | 74.91 | 52.10 | ||

| LABES-S2S [20] | MultiWOZ 2.1 | A copy-augmented Seq2Seq | 18.3 | 78.1 | 67.1 | 51.45 |

| AuGPT [21] | MultiWOZ 2.1 | GPT-2 | 17.2 | 91.4 | 72.9 | - |

| GPT-CAN [15] | MultiWOZ 2.0 | GPT-2 | 17.02 | 93.70 | 76.70 | 55.57 |

| HyKnow [22] | MultiWOZ 2.1 | multi-stage Seq2Seq | 18.0 | 82.3 | 69.4 | 49.2 |

| Dataset | Task | Language | Domains |

|---|---|---|---|

| Chinese ATIS [50] | Intent classification Slot extraction | ZH | Flight bookings |

| Multi-lingual WOZ 2.0 [51] | DST | EN, DE, IT | Restaurant bookings |

| SLU-IT [52] | Intent classification Slot extraction | IT | 7 domains (Restaurant, Weather, Music, …) |

| Almawave-SLU [53] | Intent classification Slot extraction | IT | 7 domains (Restaurant, Weather, Music, …) |

| S. Schuster et al. [30] | Task-oriented DS | ES, TH | 3 domains (Weather, Alarm, and Reminder) |

| Z. Liu et al. [54] | Task-oriented DS | ES, TH | 3 domains (Weather, Alarm, and Reminder) |

| Z. Liu et al. [31] | DST | EN, DE, IT | Restaurant booking |

| Task-oriented DS | ES, TH | 3 domains (Weather, Alarm, and Reminder) | |

| Vietnamese ATIS [55] | Intent classification Slot extraction | VI | Flight bookings |

| Dataset | Languages | Number of Dialogues | Avg. Turn Length | Number of Domains (Tasks) | Deterministic API | Mixed-Language Context |

|---|---|---|---|---|---|---|

| BiToD | EN, ZH | 7232 | 19.98 | 5 | Yes | Yes |

| MultiWoZ | EN | 8438 | 13.46 | 7 | No | No |

| Askmaster | EN | 13,215 | 22.9 | 6 | No | No |

| MetaLWOZ | EN | 37,884 | 11.4 | 47 | No | No |

| TM-1 | EN | 13,215 | 21.99 | 6 | No | No |

| Schema | EN | 22,825 | 20.3 | 17 | No | No |

| SGD | EN | 16,142 | 20.44 | 16 | No | No |

| STAR | EN | 5820 | 21.71 | 13 | No | No |

| Frames | EN | 1369 | 14.6 | 3 | No | No |

| Multi-lingual WOZ 2.0 | EN, DE, IT | 3600 | _ | 1 | No | Yes |

| Arabic-TOD | AR | 1500 | 19.98 | 4 | Yes | Yes |

| TSR | DSR | APIAcc | BLEU | JGA | |

|---|---|---|---|---|---|

| Arabic | |||||

| Baseline | 3.95 | 1.16 | 4.30 | 3.37 | 8.21 |

| AraConv | 45.07 | 18.60 | 48.86 | 31.05 | 34.82 |

| Other languages | |||||

| EN [1] | 69.13 | 47.51 | 67.92 | 38.48 | 69.19 |

| ZH [1] | 53.77 | 31.09 | 63.25 | 19.03 | 67.35 |

| TSR | DSR | APIAcc | BLEU | JGA | |

|---|---|---|---|---|---|

| Arabic | |||||

| AraConvBi-NQ (AR, EN) | 45.57 | 21.90 | 56.23 | 30.41 | 37.35 |

| AraConvBi-Q (AR, EN) | 44.62 | 16.98 | 46.32 | 27.36 | 35.58 |

| Other languages | |||||

| ZH, EN [1] | 71.18 | 51.13 | 71.87 | 40.71 | 72.16 |

| ZH, EN [1] | 57.24 | 34.78 | 65.54 | 22.45 | 68.70 |

| TSR | DSR | APIAcc | BLEU | JGA | |

|---|---|---|---|---|---|

| AraConvM-NQ (AR, EN, ZH) | 51.27 | 20.00 | 55.44 | 32.58 | 37.68 |

| AraConvM-Q (AR, EN, ZH) | 47.17 | 16.98 | 53.07 | 31.05 | 36.13 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fuad, A.; Al-Yahya, M. AraConv: Developing an Arabic Task-Oriented Dialogue System Using Multi-Lingual Transformer Model mT5. Appl. Sci. 2022, 12, 1881. https://doi.org/10.3390/app12041881

Fuad A, Al-Yahya M. AraConv: Developing an Arabic Task-Oriented Dialogue System Using Multi-Lingual Transformer Model mT5. Applied Sciences. 2022; 12(4):1881. https://doi.org/10.3390/app12041881

Chicago/Turabian StyleFuad, Ahlam, and Maha Al-Yahya. 2022. "AraConv: Developing an Arabic Task-Oriented Dialogue System Using Multi-Lingual Transformer Model mT5" Applied Sciences 12, no. 4: 1881. https://doi.org/10.3390/app12041881

APA StyleFuad, A., & Al-Yahya, M. (2022). AraConv: Developing an Arabic Task-Oriented Dialogue System Using Multi-Lingual Transformer Model mT5. Applied Sciences, 12(4), 1881. https://doi.org/10.3390/app12041881