Towards an Assembly Support System with Dynamic Bayesian Network

Abstract

1. Introduction

2. Related Work

3. Next Assembly Step Prediction through Dynamic Bayesian Network

3.1. The Target Product

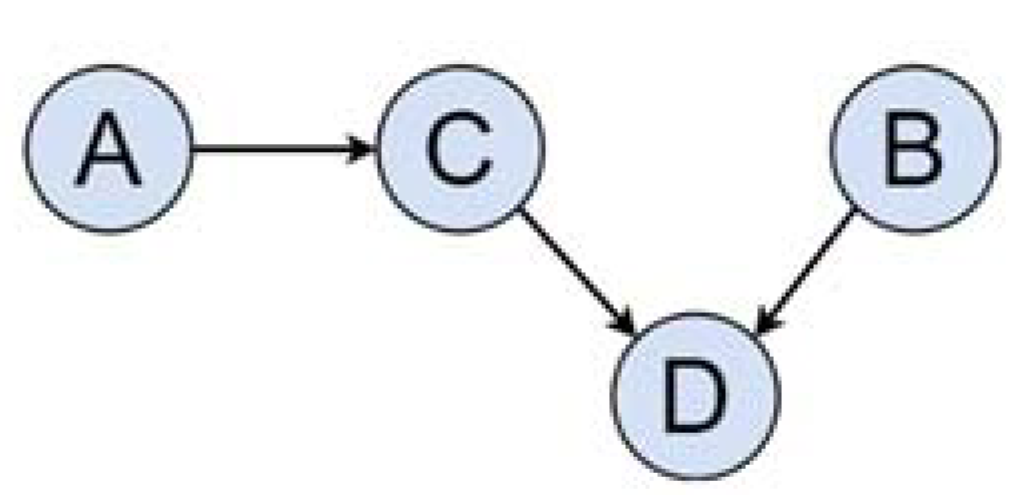

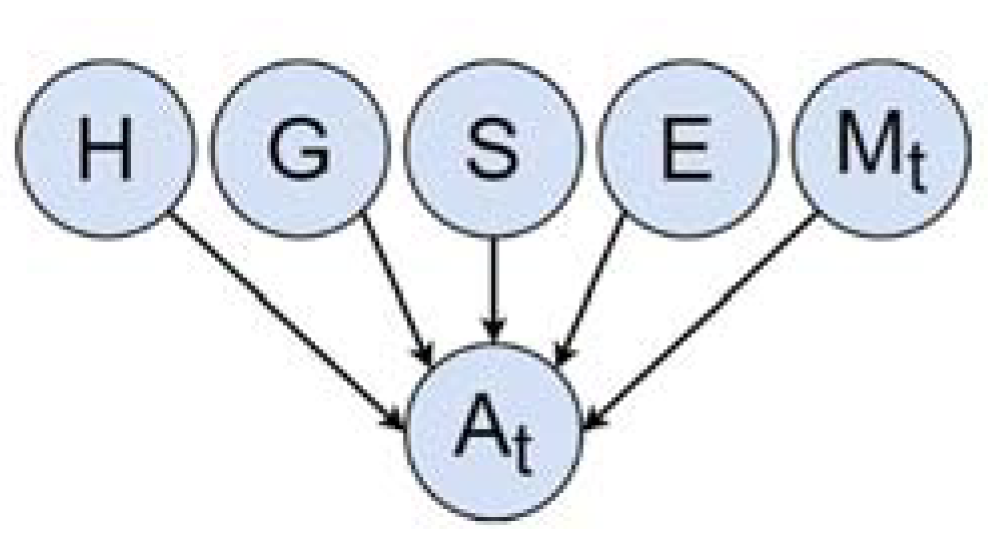

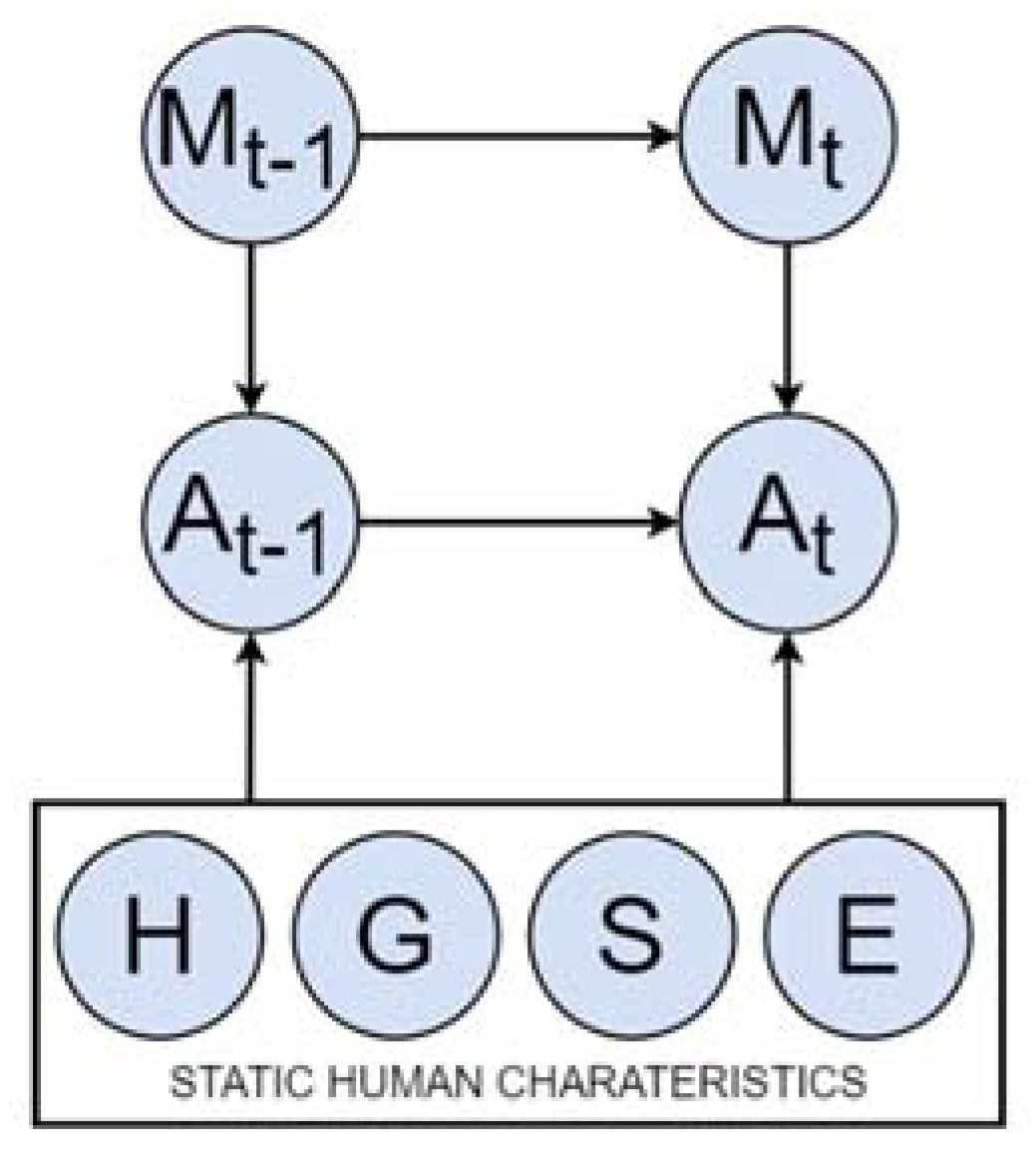

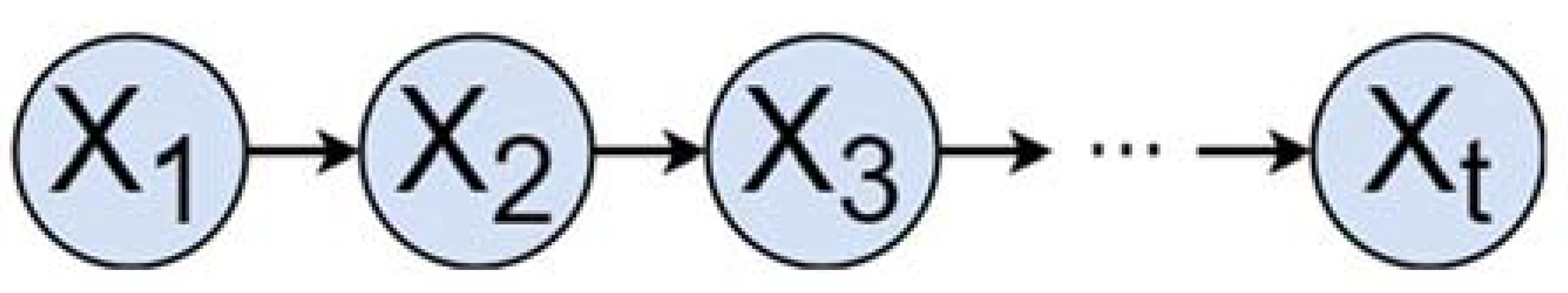

3.2. The DBN as a Prediction Model

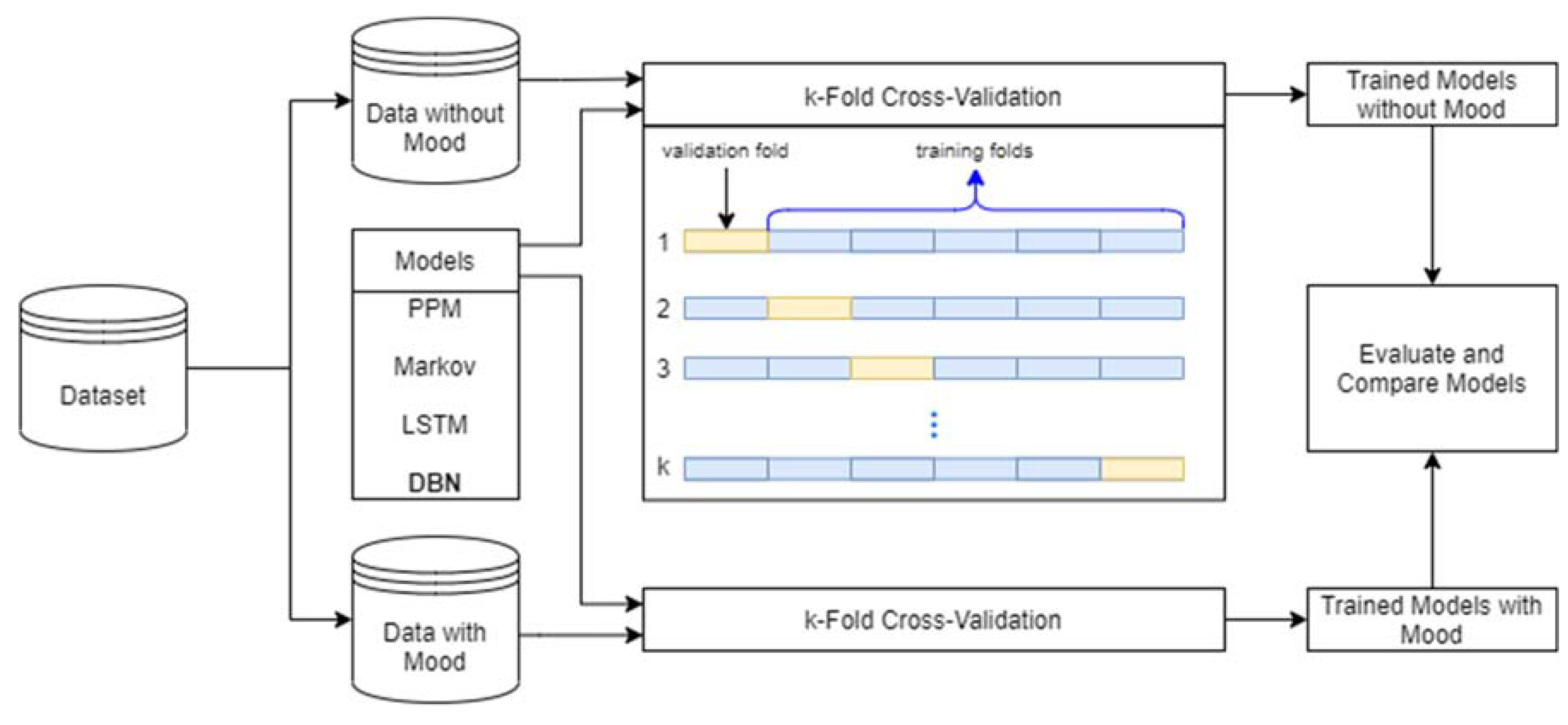

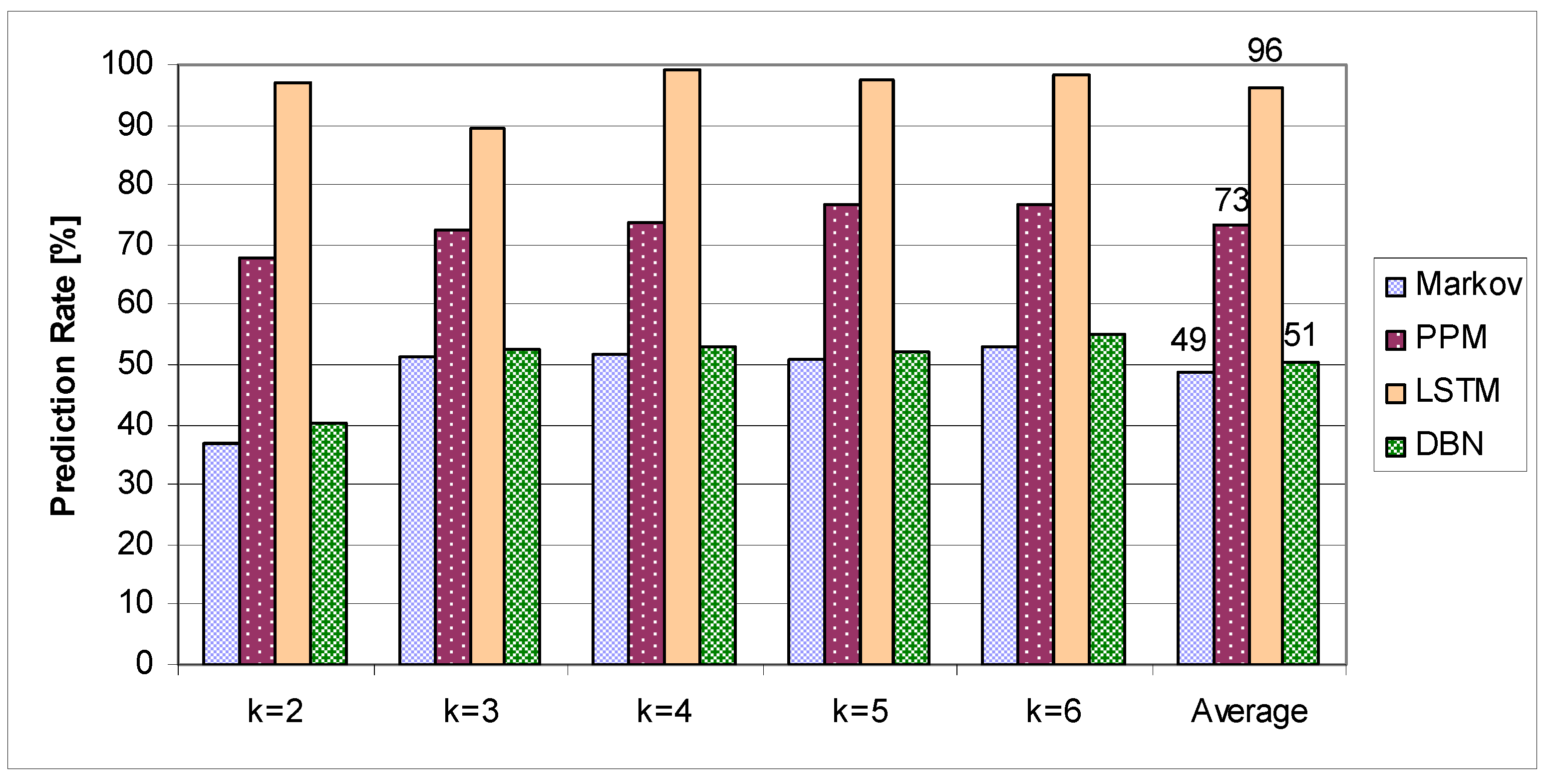

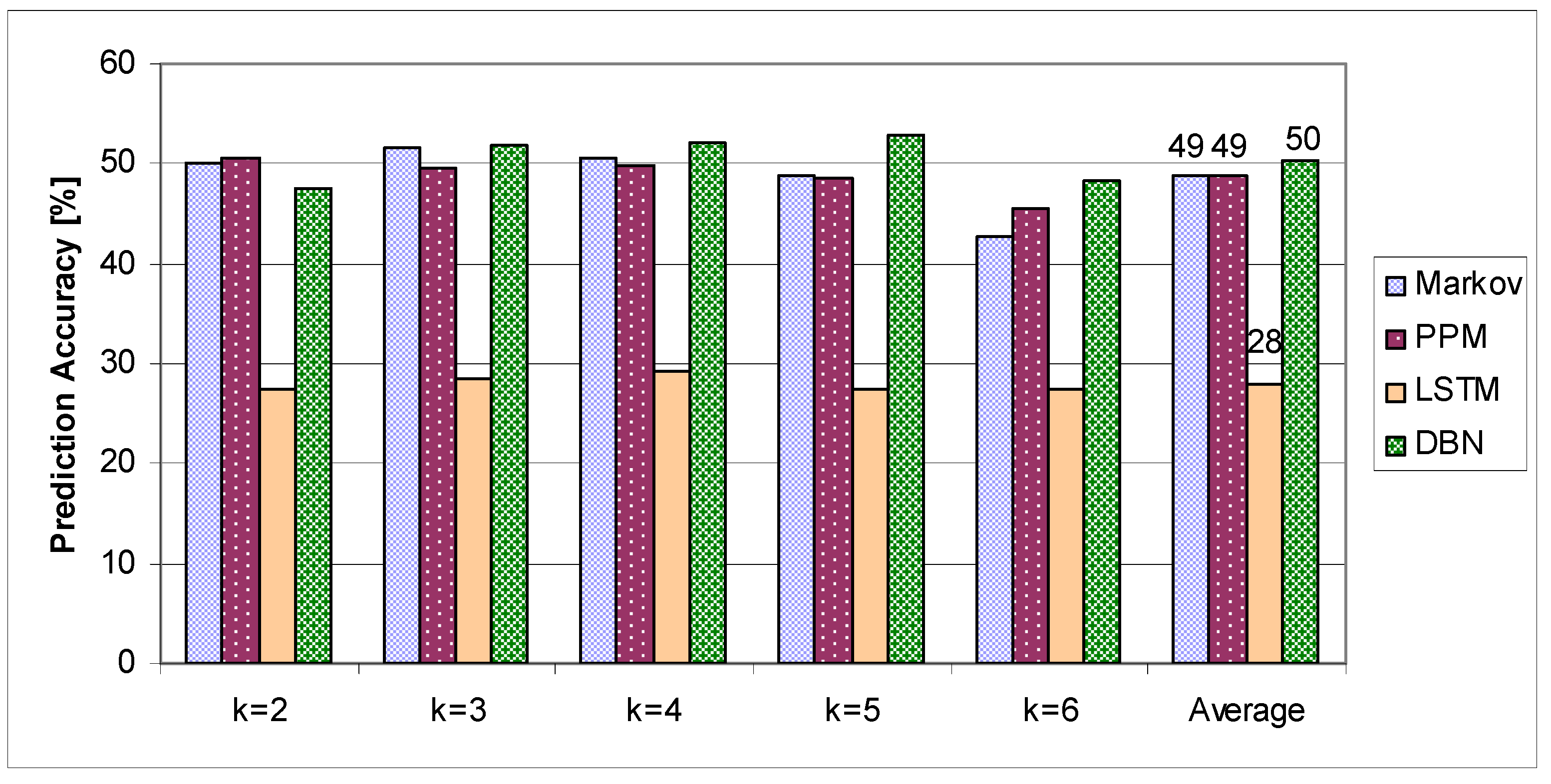

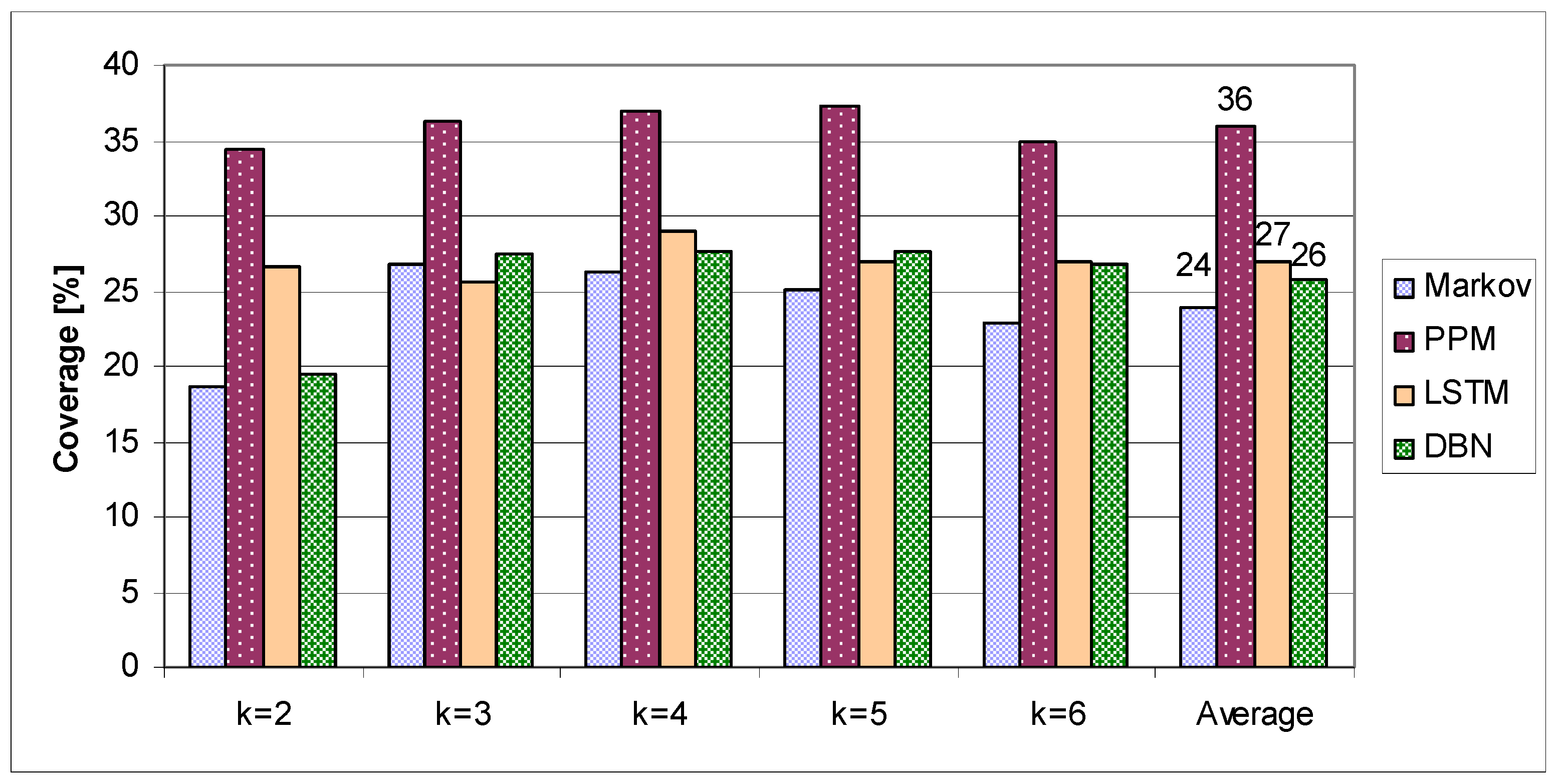

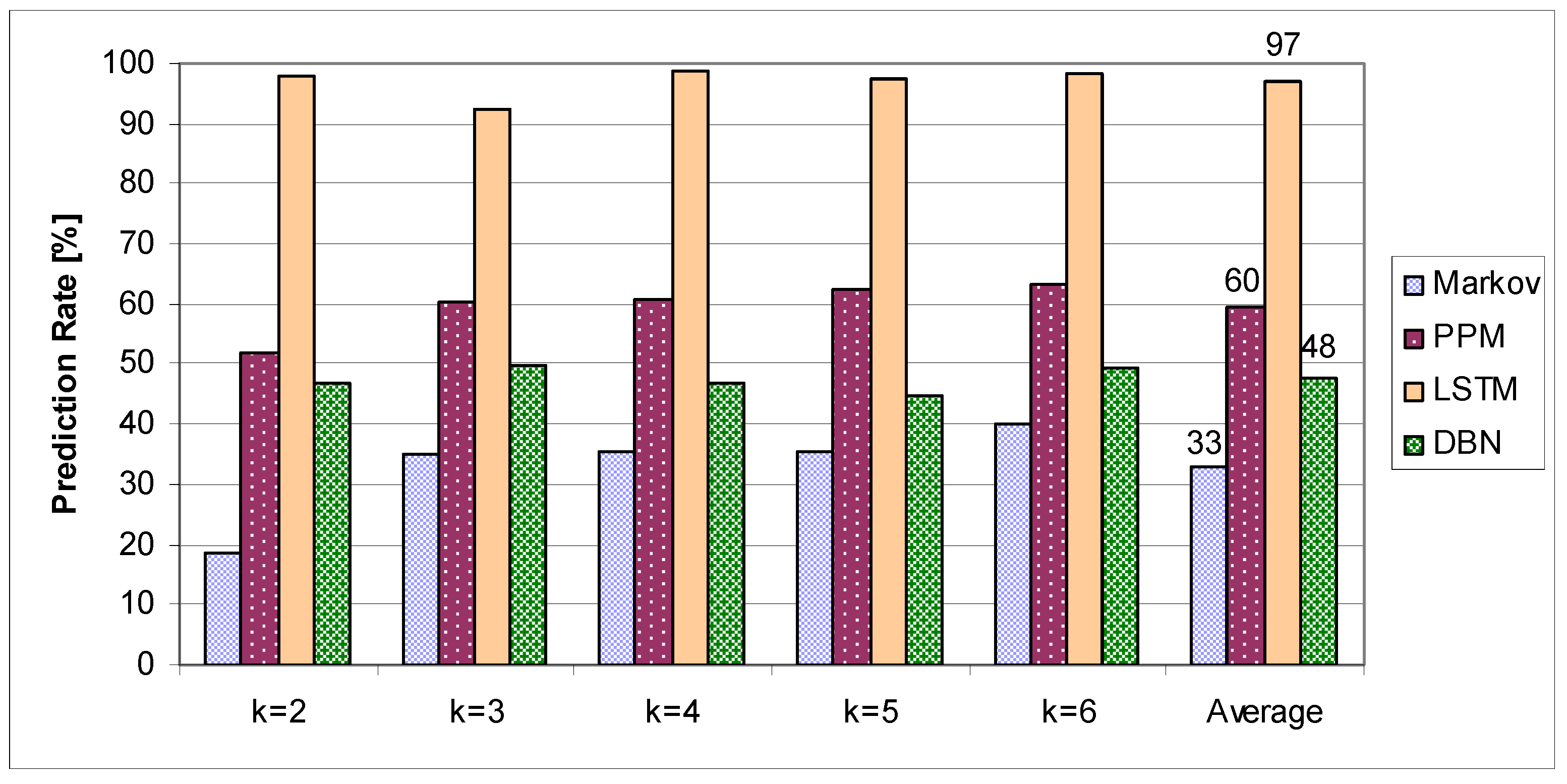

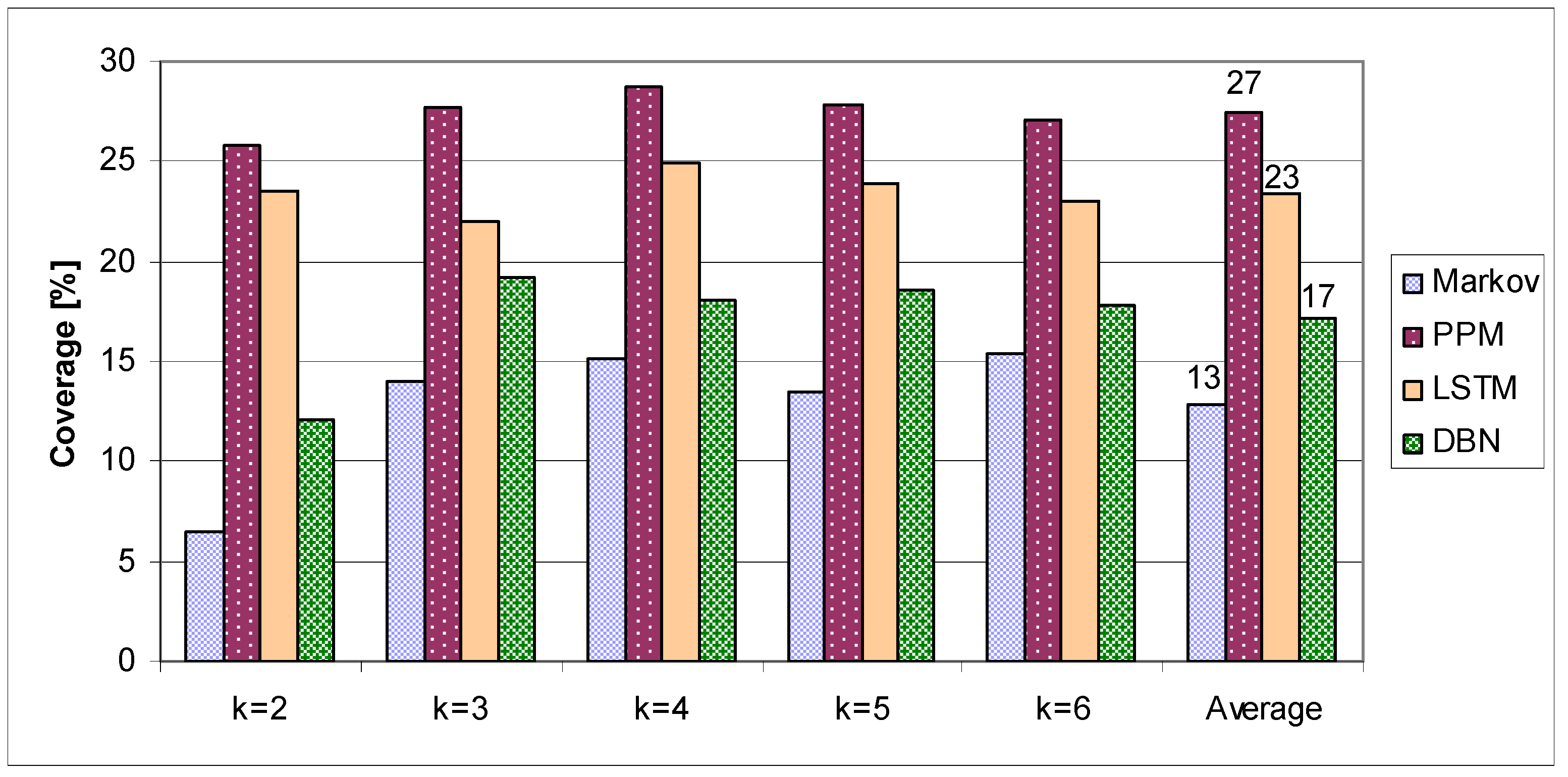

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Feature | F-Value | p-Value |
|---|---|---|
| Assembly Experience | 0.00079 | 0.97762 |
| Age | 0.10553 | 0.74631 |
| Stress level before the assembly | 0.36950 | 0.54535 |
| Hungry | 0.55439 | 0.45917 |
| Under influence of medication | 0.69261 | 0.40827 |
| Preferred hand | 2.40527 | 0.12570 |
| Gender | 2.86426 | 0.09528 |
| Sleep quality | 2.87701 | 0.09456 |
| Eyeglass wearer | 3.99500 | 0.04975 |
| Height | 6.98954 | 0.01023 |
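One way to read the table above is to rank the features by F-value and keep those whose p-value falls below a chosen significance level. The sketch below does this in plain Python with the values copied from the table. The threshold of 0.05 and the helper name `significant_features` are illustrative assumptions; the text here does not state which cut-off, if any, was applied.

```python
# Rows copied from the table above: (feature, F-value, p-value).
ROWS = [
    ("Assembly Experience", 0.00079, 0.97762),
    ("Age", 0.10553, 0.74631),
    ("Stress level before the assembly", 0.36950, 0.54535),
    ("Hungry", 0.55439, 0.45917),
    ("Under influence of medication", 0.69261, 0.40827),
    ("Preferred hand", 2.40527, 0.12570),
    ("Gender", 2.86426, 0.09528),
    ("Sleep quality", 2.87701, 0.09456),
    ("Eyeglass wearer", 3.99500, 0.04975),
    ("Height", 6.98954, 0.01023),
]

# Assumed significance level (conventional choice, not taken from the text).
ALPHA = 0.05


def significant_features(rows, alpha=ALPHA):
    """Return the names of features with p-value < alpha, highest F-value first."""
    kept = [(name, f_value) for name, f_value, p_value in rows if p_value < alpha]
    kept.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in kept]


if __name__ == "__main__":
    print(significant_features(ROWS))        # features passing the 0.05 level
    print(significant_features(ROWS, 0.10))  # a looser, equally hypothetical level
```

Under the assumed 0.05 level only Height and Eyeglass wearer pass; relaxing the level to 0.10 would also admit Sleep quality and Gender. Eyeglass wearer sits very close to the 0.05 boundary (p = 0.04975), so the selection is sensitive to the chosen threshold.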

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Precup, S.-A.; Gellert, A.; Matei, A.; Gita, M.; Zamfirescu, C.-B. Towards an Assembly Support System with Dynamic Bayesian Network. Appl. Sci. 2022, 12, 985. https://doi.org/10.3390/app12030985
