Abstract

This paper presents a machine learning method for solving NP-hard discrete optimization problems, especially planning and scheduling problems. The method utilizes a special multistage decision process modeling paradigm referred to as the Algebraic Logical Metamodel of Multistage Decision Processes (ALMM). Hence, the presented method is named the ALMM Based Learning method. It utilizes a specifically built local multicriterion optimization problem that is solved by means of scalarization. This paper describes both the development of such local optimization problems and the concept of the learning process with the fractional derivative mechanism. It includes proofs of theorems showing that the ALMM Based Learning method can be defined for a much broader problem class than initially assumed, which significantly extends the range of applications of the prime learning method. New generalizations of the prime ALMM Based Learning method, as well as some essential comments on a comparison of Reinforcement Learning with ALMM Based Learning, are also presented.

1. Introduction

Curiosity is the main motivation for any human, a researcher in particular. Whenever a new method or algorithm is developed, multiple questions emerge. Could the method be applied under weaker assumptions, thus broadening its area of use? Could it be improved? In which ways? How does the method relate to the ones that came earlier? This paper concerns a learning method (a metaheuristic) called the ALMM Based Learning method. The objective of the method is to solve discrete (combinatorial) optimization problems, especially NP-hard ones. This paper presents further results of research on the method and thus provides answers to the questions above. The method utilizes a special multistage decision process modeling paradigm referred to as the Algebraic Logical Metamodel of Multistage Decision Processes (abbreviated as ALMM of MDP, or simply ALMM), which is presented in Section 2. Based on the ALMM paradigm, one can develop mathematical models for discrete optimization problems, the so-called AL models. Even though a problem model may be known, its analytic solution is not available. Moreover, as the model has a recursive character, it is difficult to infer the consequences of a decision more than a single step ahead. Initial attempts to use algebraic-logical models to optimize discrete process control were presented in [1,2]. They used a simplified algebraic-logical description of the properties of the process that could be used to control its course. This approach had a strong limitation because it did not define a mathematical model of the process, but only relationships between its properties that could be used to make decisions controlling it [3,4,5]. In this paper, the authors define a generalized mathematical notation of the process model described in the ALMM paradigm, which takes into account the new mechanism of the learning strategy.

The objective of this paper is twofold:

- To present new generalizations of ALMM Based Learning methods involving a fractional derivative mechanism;

- To present essential remarks related to a comparison of Reinforcement Learning vs the ALMM Based Learning method.

Many types of learning have been explored for discrete optimization problems [6,7,8,9,10,11,12,13,14], especially scheduling: rote learning, inductive learning, neural network learning, case-based learning, classifier systems, and others. Each methodology has its strengths and weaknesses. However, none of the mentioned learning concepts uses a mathematical model of the problem to be solved. The novelty of the machine learning method presented in this paper is that it is based on a special mathematical model.

The paper is organized as follows. The Algebraic Logical Metamodel of Multistage Decision Processes is presented in Section 2. Section 3 presents definitions of some criterion properties that are used in the further discussion. The learning method, together with its extension by the fractional derivative mechanism, is recalled in Section 4. Generalizations of the prime ALMM Based Learning method, as well as essential comments on a comparison of Reinforcement Learning with ALMM Based Learning, are given in Section 5 and Section 7, respectively. In Section 6, an example is presented.

2. Algebraic-Logical Meta-Model of Multistage Decision Processes

The discrete process, which is implemented in the form of a sequence of control decisions, is an effective and practical approach that allows for monitoring various production processes. The key problem in constructing models of such process control is the need for expert knowledge regarding the nature of the process (whether it is deterministic or stochastic), as well as the degree to which the models fit real systems and the related confidence level of the conducted control of the system. In this paper, the authors propose a mechanism of abstract mathematical modeling to describe a deterministic process using the paradigm named ALMM of MDP (abbreviated as ALMM). The idea of the ALMM paradigm was proposed and developed by Dudek-Dyduch E. in [1] and recalled in many other papers [5,15,16]. It has also been put to use in multiple cases [1,17,18,19,20,21,22]. Based on ALMM, formal models, the so-called AL models, may be established for a very broad class of discrete optimization problems from a variety of application areas (especially for the modeling and control of discrete manufacturing and logistics processes), hence the meta-model designation. The description and definition of the ALMM paradigm given in the aforementioned articles are quoted below. “ALMM is a general model development paradigm for deterministic problems, for which solutions can be presented as a sequence (or a set) of decisions, usually complex ones (i.e., composed of some single decisions). It facilitates convenient representation of all kinds of information regarding the problem to be solved, in particular the information defining a structure of states and decisions, algorithm used to generate consecutive states and various temporal relationships and restrictions of the problem. Furthermore, ALMM enables us to define various problem properties, in particular ones for which a particular heuristic method may be applied”.
ALMM provides a structured way of recording knowledge of the goal and all relevant restrictions that exist within the modeled problems. Using this paradigm, the author has provided, e.g., in [3,16], the definitions of two base types of multistage decision processes: a common process (cMDP) and a dynamic process (MDDP). The definition of MDDP quoted below refers to processes wherein both the constraints and the transition function depend on time. Therefore, the concept of the so-called “generalized state” has been introduced, defined as a pair containing both the state and the time instant.

Definition 1.

The multistage dynamic decision process is a process that is specified by the sextuple $MDDP = (U, S, s_0, f, S_N, S_G)$, where $U$ is a set of decisions, $S = X \times T$ is a set of generalized states, $X$ is a set of proper states, $T \subset \mathbb{R}_{+} \cup \{0\}$ is a subset of non-negative real numbers representing the time instants, $f: U \times S \to S$ is a partial function called a transition function (it does not have to be determined for all elements of the set $U \times S$), and $s_0 = (x_0, t_0)$, $S_N \subset S$, and $S_G \subset S$ are, respectively, an initial generalized state, a set of not admissible generalized states, and a set of goal generalized states, i.e., the states in which we want the process to be at the end. Subsets $S_G$ and $S_N$ are disjoint, i.e., $S_G \cap S_N = \emptyset$.

The transition function is defined by means of two functions, $f = (f_x, f_t)$, where $f_x: U \times X \times T \to X$ and $f_t: U \times X \times T \to T$ determine the next state and the next time instant, respectively. It is assumed that the difference $\Delta t = f_t(u, x, t) - t$ has a value that is both finite and positive. Because not all decisions defined formally make sense in certain situations, the transition function $f$ is defined as a partial one. As a result, all limitations concerning the decisions in a given state $s$ can be defined in a convenient way by means of so-called sets of possible decisions $U_p(s)$, defined as $U_p(s) = \{u \in U : (u, s) \in \mathrm{Dom}\, f\}$.
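The definition above can be illustrated with a minimal Python sketch. It is a toy under stated assumptions rather than the authors' implementation: the class name `MDDP`, the finite decision set, the use of `None` to mark arguments for which the partial function $f$ is undefined, and the single-machine job data are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List, Optional, Tuple

# Generalized state s = (x, t): proper state x (here: set of unfinished jobs) and time t.
State = Tuple[FrozenSet[str], float]


@dataclass(frozen=True)
class MDDP:
    """Toy container for (U, S, s0, f, S_N, S_G); S is implicit in the State type."""
    decisions: Tuple[str, ...]                            # U, assumed finite here
    initial: State                                        # s0 = (x0, t0)
    transition: Callable[[str, State], Optional[State]]   # partial f; None = undefined
    not_admissible: Callable[[State], bool]               # membership test for S_N
    goal: Callable[[State], bool]                         # membership test for S_G

    def possible_decisions(self, s: State) -> List[str]:
        """U_p(s): decisions u for which f(u, s) is defined."""
        return [u for u in self.decisions if self.transition(u, s) is not None]


# Hypothetical data: job -> (processing time, due date) on a single machine.
JOBS = {"a": (2.0, 5.0), "b": (3.0, 5.0)}


def f(u: str, s: State) -> Optional[State]:
    x, t = s
    if u not in x:                       # a finished job cannot be started again
        return None
    return (x - {u}, t + JOBS[u][0])     # time advances by a finite, positive amount


def in_SN(s: State) -> bool:
    x, t = s
    return any(JOBS[j][1] < t for j in x)    # an unfinished job is already overdue


def in_SG(s: State) -> bool:
    return not s[0] and not in_SN(s)         # all jobs done; disjoint from S_N


process = MDDP(tuple(JOBS), (frozenset(JOBS), 0.0), f, in_SN, in_SG)
```

Representing the partial function by a `None` return value keeps the set of possible decisions a derived quantity, so restrictions on sensible decisions live in one place, the transition function.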

The cMDP is obtained by reducing a generalized state to a proper state, with a transition function $f: U \times X \to X$. For both defined types of multistage decision processes, in the most general case, sets $U$ and $X$ may be presented as Cartesian products $U = U^1 \times U^2 \times \dots \times U^m$, $X = X^1 \times X^2 \times \dots \times X^n$, i.e., $u = (u^1, u^2, \dots, u^m)$, $x = (x^1, x^2, \dots, x^n)$.

In particular, $u^i$ ($i = 1, 2, \dots, m$) represent separate decisions that must or may be taken simultaneously and relate to particular objects.

There are no limitations imposed on the type of elements of the sets; in particular, they do not have to be numerical. Thus, values of particular coordinates of a state or a decision may be names of elements (symbols) as well as some objects (e.g., a finite set, a sequence, etc.). The sets $S_N$ and $S_G$ are formally defined with the use of both algebraic and logical formulae, hence the algebraic-logical model descriptor.

The most significant characteristic, unique for the proposed ALMM of MDP paradigm, is the fact that:

- Proper state coordinates can be higher order variables;

- Decision u can take a complex form, consisting of individual decisions related to various issues/objects; these individual decisions may or have to be taken or executed at the same time;

- The transition function is defined as a partial one, which allows taking into account various different restrictions on sensible decisions in different states.

Based on the meta-model recalled herein, AL models may be created for individual problems consisting of seeking admissible or optimal solutions. In the case of an admissible solution, an AL model is equivalent to a suitable multistage decision process, hence it is denoted as process $\mathcal{P}$. An optimization problem is then denoted as a pair $(\mathcal{P}, Q)$, where $Q$ is a criterion. An optimization task (an instance of the problem) is denoted as a pair $(P, Q)$, where $P$ is an instance of the process $\mathcal{P}$ and is named an individual process. At the same time, an individual process $P$ is represented by a set of its trajectories. A finite trajectory is a sequence of consecutive states from the initial state to a final state (a goal, not admissible or blind one), computed by the transition function. Though trajectories may be finite or infinite, for further consideration we assume only finite ones. It is assumed that no state of a trajectory, apart from the last one, may belong to the set $S_N$ or $S_G$. Only a trajectory that ends in the set of goal states is admissible (feasible). The decision sequence determining an admissible trajectory is an admissible decision sequence. Obviously, the set of finite trajectories corresponds to the state graph of the process $P$.
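How a decision sequence determines a trajectory, and how the trajectory is classified by its last state, can be sketched as follows. The counter process, the step sizes, the time limit and the function names are hypothetical choices made only for this illustration; blind states are not modeled.

```python
from typing import List, Optional, Sequence, Tuple

State = Tuple[int, int]   # toy generalized state (x, t): a counter x and a time instant t


def f(u: int, s: State) -> Optional[State]:
    """Toy partial transition function: only steps of 1 or 2 are defined."""
    x, t = s
    return (x + u, t + 1) if u in (1, 2) else None


def in_SN(s: State) -> bool:
    """Not admissible: the counter overshoots 4 or more than 3 time units pass."""
    return s[0] > 4 or s[1] > 3


def in_SG(s: State) -> bool:
    """Goal: the counter equals 4 in time (kept disjoint from S_N)."""
    return s[0] == 4 and not in_SN(s)


def trajectory(decisions: Sequence[int], s0: State = (0, 0)) -> Tuple[List[State], str]:
    """Compute the states visited by a decision sequence and classify the last one."""
    states = [s0]
    for u in decisions:
        s = states[-1]
        if in_SN(s) or in_SG(s):      # a trajectory stops at its first final state
            break
        nxt = f(u, s)
        if nxt is None:               # u does not belong to U_p(s)
            raise ValueError(f"decision {u!r} is not possible in state {s}")
        states.append(nxt)
    last = states[-1]
    if in_SG(last):
        return states, "admissible"
    if in_SN(last):
        return states, "not admissible"
    return states, "unfinished"
```

For instance, `trajectory([2, 2])` ends in the goal state `(4, 2)`, whereas `trajectory([1, 1, 1, 1])` reaches the counter value 4 too late and is classified as not admissible.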

3. Properties of Problems

The vast majority of methods and algorithms described in the literature use (with or without overt declaration) some properties of criteria that facilitate the solving process. Some of these properties, defined based on the ALMM paradigm, were presented in [3,19], recalled in [23], and are briefly recalled below. A broad class of criteria can be defined by recurrence and computed in parallel to the calculation of trajectories; the author named this class separable criteria (Definition 2). It is worth remembering, though, that these are not the only criterion classes that may be used. Let us denote by $P$ a fixed multistage decision process, by $\tilde{S}$ the set of all states of trajectories of the process, by $\bar{i}$ the number of the last state of a finite trajectory $\tilde{s}$, by $\tilde{U}$ the set of all decision sequences of the process $P$, and by $\mathbb{R}$ the set of real numbers.

Definition 2.

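Criterion $Q$ is separable for the process $P$ if, for every decision sequence $\tilde{u} \in \tilde{U}$, the value $Q(\tilde{u})$ can be recursively calculated as follows:

$$Q(\tilde{u}) = Q_{\bar{i}} \tag{1}$$

where $Q_i$ denotes a partial value of criterion $Q$ calculated for the $i$-th state of the considered trajectory, defined as follows:

$$Q_{i+1} = Q(\tilde{u}_{i+1}) = f_Q(Q_i, u_i, s_i), \quad i = 0, 1, \dots, \bar{i} - 1 \tag{2}$$

where $\tilde{u}_i$ is the initial part of the sequence $\tilde{u}$ leading to the $i$-th state, and $f_Q$ is some partial function such that:

$$f_Q: \mathbb{R} \times U \times S \to \mathbb{R} \tag{3}$$

Separability is a property of an algorithm which calculates a quality criterion for a sequence of decisions $\tilde{u}$, and thus for the designated trajectory $\tilde{s}$. The criterion is separable if we can calculate its value for the next state of a trajectory knowing only its value in the previous state, that state, and the decision taken in it.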

Particularly useful is the property of additive separability of a criterion. Let $Q$ be a separable criterion and let $\Delta Q_i$ denote the change of the criterion between two consecutive states on any trajectory, i.e., $\Delta Q_i = Q_{i+1} - Q_i$.

Definition 3.

Separable criterion $Q$ is additive for a process $P$ iff for each trajectory $\tilde{s}$ of the process $P$ and for each $i = 0, 1, \dots, \bar{i} - 1$ the following holds:

$$\Delta Q_i = \Delta Q(u_i, s_i) \tag{4}$$

i.e., $\Delta Q_i$ depends on the state and decision only.

Definition 4.

Separable criterion $Q$ changes multiplicatively for a process $P$ iff for each trajectory $\tilde{s}$ of the process $P$ and for each $i = 0, 1, \dots, \bar{i} - 1$ the following is true:

$$Q_{i+1} = Q_i \cdot g(u_i, s_i) \tag{5}$$

where $g$ is a certain function depending on the decision and the state only [16].
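The three definitions above can be made concrete with a short sketch that evaluates a criterion in parallel with a trajectory. The function names (`evaluate`, `additive`, `multiplicative`, `running_max`) and the numeric data are hypothetical; the update function plays the role of the recursion described in Definition 2.

```python
from typing import Callable, Sequence


def evaluate(f_Q: Callable[[float, float, float], float],
             q0: float,
             decisions: Sequence[float],
             states: Sequence[float]) -> float:
    """Recursive evaluation of a separable criterion: Q_{i+1} = f_Q(Q_i, u_i, s_i)."""
    q = q0
    for u, s in zip(decisions, states):
        q = f_Q(q, u, s)
    return q


def additive(q: float, u: float, s: float) -> float:
    """Additive case: the increment depends on (u, s) only, here u + s."""
    return q + (u + s)


def multiplicative(q: float, u: float, s: float) -> float:
    """Multiplicative case: Q_{i+1} = Q_i * g(u, s), here g(u, s) = u."""
    return q * u


def running_max(q: float, u: float, s: float) -> float:
    """Separable but neither additive nor multiplicative (a makespan-like maximum)."""
    return max(q, u + s)
```

With decisions `[2, 3, 5]` taken in states `[0, 1, 2]`, the additive criterion accumulates `2 + 4 + 7`, while the running maximum keeps only the largest of these terms; in each case the next value is obtained from the previous value, the state and the decision alone.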

4. Machine Learning Based on ALMM

In the work [3], the authors proposed a machine learning method that used the dynamics of the criterion changes and the weighted sum of partial criteria to determine the local quality criterion $q$. The learning process was reduced to determining a set of trajectories of process states leading to goal states and sequentially searching for suitable control sequences, taking into account the selection of the weight vector $a$. To avoid unnecessary redundancy, we briefly recall only the basic form of the local criterion, which is as follows:

$$q(u, x, t) = \Delta Q(u, x, t) + \hat{Q}(u, x, t) + a_1 \varphi_1(u, x, t) + \dots + a_n \varphi_n(u, x, t) \tag{6}$$

where $\Delta Q(u, x, t)$ is the change of the criterion value as a result of decision $u$ undertaken in the state $s = (x, t)$, $\hat{Q}(u, x, t)$ is the estimation of the quality index value for the final trajectory section after the decision $u$ has been realized, $\varphi_i(u, x, t)$ are the components reflecting additional limitations or additional requirements in the space of states, $i = 1, 2, \dots, n$, and $a_i$ is the coefficient which defines the weight of the $i$-th component in the criterion $q(u, x, t)$. The sum of the weight coefficients $a_i$ for $i = 1, 2, \dots, n$ is equal to 1.

The role of the criterion components connected with the considered subsets should be strengthened for the next trajectory, i.e., the weights (priorities) of these components are increased. When the generated trajectory is admissible, the role of the components responsible for the trajectory quality can be strengthened, i.e., their weights can be increased. Based on the gained information, the local optimization task is improved during simulation experiments. This process is treated as a learning or intelligent searching algorithm. As the coefficients of the criterion $q$ change in consecutive iterations, the criterion assumes the following form:

$$q^k(u, x, t) = \Delta Q(u, x, t) + \hat{Q}(u, x, t) + a_1^k \varphi_1(u, x, t) + \dots + a_n^k \varphi_n(u, x, t) \tag{7}$$

where $k$ denotes the iteration number.

Typically, the same initial coefficient values are assumed. Changes to the coefficients depend on the real maximum distances of the last generated trajectory from the reachable parts of the not-admissible state set $S_N$ and the remaining special sets.

In the work [24], the fractional backpropagation (FBP) algorithm was proposed, which uses a fractional-order derivative according to the Grünwald–Letnikov (GL) theory. Generalizing the definition of the integer-order derivative, the GL fractional derivative can be written as:

$$D^{v} f(x) = \lim_{h \to 0} \frac{1}{h^{v}} \sum_{j=0}^{\left[\frac{x - a}{h}\right]} (-1)^{j} \binom{v}{j} f(x - jh) \tag{8}$$

where $\binom{v}{j}$ denotes the Newton binomial, $v$ the order of the fractional derivative of the basis function $f(x)$, $(a, x)$ the interval range, and $h$ the discretization step in the state space of the given process. Then, we can take the backward difference of fractional order $v$ as $\Delta^{v} f_k$, where $f_k = f(kh)$:

$$\Delta^{v} f_k = \sum_{j=0}^{N-1} c_j^{(v)} f_{k-j} \tag{9}$$

where the consecutive coefficients $c_j^{(v)} = (-1)^{j} \binom{v}{j}$ are defined as follows:

$$c_0^{(v)} = 1, \qquad c_j^{(v)} = c_{j-1}^{(v)} \left(1 - \frac{1 + v}{j}\right), \quad j = 1, 2, \dots, N - 1 \tag{10}$$

$N$ denotes the number of discrete measurements of $f$. The $\Delta Q$ in Equation (7) is therefore the special case with $v = 1$ and $N = 2$. Taking into account the above, it is possible to formulate a generalized form of the considered relationship, in which the change of the criterion is estimated by an approximation of the fractional derivative of order $v$ in the following form:

$$\Delta^{v} Q_k = \sum_{j=0}^{N-1} c_j^{(v)} Q_{k-j} \tag{11}$$

Thus, with the formula adopted above, it becomes possible to search for a set of trajectories taking into account a variable length of the state vector history. When determining the successive coefficients of the fractional-order derivative approximation, it should be remembered that the accuracy of the approximation is determined by the length $N$ of the coefficient vector. Moreover, the value of $N$ for successive sequences of process states cannot exceed the length of the generated trajectory. Using the above interpretation and the results of the research carried out for standard feedforward neural network structures using backpropagation with the fractional-order derivative, it is possible to indicate the following features and properties of the proposed machine learning method:

- It is possible to smoothly control the fractional-order derivative approximation algorithm, using as parameters the order $v$ of the derivative and the number $N$ of elements of the coefficient vector;

- For $v = 1$, the algorithm works as the method described in [24], calculating the difference $\Delta Q$ using property (10), such that for $v = 1$, $c_j^{(v)} = 0$, where $j \geq 2$;

- For a value of $v$ in the range $(0, 1)$, a smooth selection of the derivative approximation is possible, allowing for the search for the trajectories of optimal solutions in the full spectrum of the space of permissible states.
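The properties listed above can be checked numerically with a short sketch of the Grünwald–Letnikov coefficients and the resulting fractional backward difference. The function names and the sample history are hypothetical; the coefficient recursion used is the standard one, $c_0 = 1$, $c_j = c_{j-1}\left(1 - \frac{1 + v}{j}\right)$, which equals $(-1)^j \binom{v}{j}$.

```python
from typing import List, Sequence


def gl_coefficients(v: float, n: int) -> List[float]:
    """First n Grünwald–Letnikov coefficients c_j = (-1)^j * binom(v, j)."""
    c = [1.0]
    for j in range(1, n):
        c.append(c[-1] * (1.0 - (1.0 + v) / j))
    return c


def fractional_difference(history: Sequence[float], v: float, h: float = 1.0) -> float:
    """Approximate the order-v derivative at the newest sample.

    `history` holds criterion values Q_0, ..., Q_k, oldest first; all of them are
    used, so N equals len(history) and cannot exceed the trajectory length.
    """
    c = gl_coefficients(v, len(history))
    newest_first = reversed(history)                 # pairs c_j with Q_{k-j}
    return sum(cj * q for cj, q in zip(c, newest_first)) / h ** v
```

For `v = 1` the coefficients are `1, -1, 0, 0, ...`, so the result reduces to the ordinary difference of the two newest values; for `v = 0.5` older values keep a nonzero, slowly decaying weight (`1, -0.5, -0.125, -0.0625, ...`). Since the coefficients depend only on $v$ and $N$, they can be computed once and reused for every state.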

The proposed algorithm is a generalization of the machine learning method proposed in [3]. The numerical stability of determining the approximation of the derivative of the criterion using the coefficient vector depends on the number of its elements. For sufficiently large values of $N$, the coefficients can be determined in advance, also with the use of the Gamma function, and stored in a static structure, which reduces the computational complexity of the algorithm at the stage of the sequential search for the solution trajectory of a given optimization problem. The obtained solutions, in the form of optimal discrete process trajectories, can be used as suboptimal controls for other, more complex optimization problems. At the current stage of the research, the authors plan to use the above machine learning model with a fractional-order derivative for processes described with ALMM, namely to control a fleet of unmanned aerial vehicles (UAVs) under dynamically changing system resources, in particular changes in the number of UAVs, airspace availability and the intensity of the stream of logistic tasks carried out by the fleet.

5. Generalized ALMM Based Learning Method

Generalizations of the method may take two basic directions:

- Modification of the local criterion and the learning process;

- Weakening assumptions under which the method can be applied.

5.1. Criterion Modifications

First of all, let us notice that for certain problems a sufficiently accurate estimation of the criterion for the remaining part of the trajectory may be difficult or not even possible. In such cases, the learning method can be applied with the criterion $q$ omitting the $\hat{Q}$ addend, i.e., formula (7) becomes:

$$q^k(u, x, t) = \Delta Q(u, x, t) + a_1^k \varphi_1(u, x, t) + \dots + a_n^k \varphi_n(u, x, t) \tag{12}$$

Secondly, let us notice that the reliability of the addends of the criterion $q$ is not uniform. The value $\Delta Q$ is certain, but the other parts are merely estimates or predictions of unknown values, meaning they are not as reliable, with specifics depending on the problem instance under analysis. What is more, the reliability may even vary for individual parts of a local criterion. This suggests replacing the deterministic choice of the best decision for a given state with a stochastic choice. The probability of selecting decision u in state s is proposed to be proportional to the value. The proposed approach, using the probabilistic choice, is also beneficial because exploration of the solution space is necessary too.
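One possible realization of such a stochastic choice is sketched below. It assumes, purely for illustration, that the selection weight of a decision is $\exp(-q/\tau)$, so that decisions with a lower local criterion value are more likely; this mapping, the temperature parameter `temperature` and the function names are hypothetical and are not taken from the method's definition.

```python
import math
import random
from typing import Dict


def selection_probabilities(q_values: Dict[str, float],
                            temperature: float = 1.0) -> Dict[str, float]:
    """Map local-criterion values q(u, s) to probabilities (lower q, higher probability).

    Assumption of this sketch: weight(u) = exp(-q(u, s) / temperature).
    """
    q_min = min(q_values.values())        # shift by the minimum for numerical stability
    weights = {u: math.exp(-(q - q_min) / temperature) for u, q in q_values.items()}
    total = sum(weights.values())
    return {u: w / total for u, w in weights.items()}


def choose_decision(q_values: Dict[str, float],
                    temperature: float = 1.0,
                    rng=random) -> str:
    """Draw one decision from U_p(s) according to the probabilities above."""
    probs = selection_probabilities(q_values, temperature)
    decisions = list(probs)
    return rng.choices(decisions, weights=[probs[u] for u in decisions], k=1)[0]
```

A small temperature makes the choice nearly deterministic (the decision with the minimal criterion value is almost always taken), while a larger one increases exploration of the solution space.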

5.2. Weakening the Assumptions

Although the learning concepts using ALMM technology, in combination with a separable additive steering quality criterion, have been discussed by the authors in previous works, we will show below that the method can also be defined for a weaker assumption, namely for a merely separable criterion.

Theorem 1.

Assumption: let the ALMM Based Learning method be defined for a problem $(\mathcal{P}, Q)$, where $Q$ is an additively separable criterion.

Thesis: The ALMM Based Learning method can be defined for the problem $(\mathcal{P}, Q')$, where $Q'$ is any separable criterion.

Proof.

Let us analyze the local criterion $q$ for a problem $(\mathcal{P}, Q')$.

As the restrictions represented by the process $\mathcal{P}$ remain unchanged (and, as a consequence, the restrictions of all the instances of the problem remain unchanged), one has to consider only the first two addends in formula (7) or the first addend in formula (12). It results from Definition 2 that:

$$\Delta Q'_i = Q'_{i+1} - Q'_i = f_{Q'}(Q'_i, u_i, s_i) - Q'_i \tag{13}$$

As the value of $Q'_i$ is known at the state $s_i$, one can compute $\Delta Q'_i$, and this component can be used in formula (7) or formula (12). If a sufficiently accurate estimation $\hat{Q}'$ is difficult or not even possible, formula (12) should be applied instead of formula (7) for the criterion $q$. Q.E.D. □

Theorem 2.

Assumption: let the ALMM Based Learning method be defined for a problem $(\mathcal{P}, Q)$.

Thesis: The ALMM Based Learning method can be defined for the problem $(\mathcal{P}, Q')$, where $Q'$ is any multiplicative criterion.

Proof.

It results from Definition 4 that the multiplicative criterion is a separable one. Thus, the thesis of Theorem 1 applies, so:

$$\Delta Q'_i = Q'_{i+1} - Q'_i = Q'_i \cdot \left(g(u_i, s_i) - 1\right) \tag{14}$$

These types of criteria are common in investment problems involving term securities that may not be redeemed early. □

6. Exemplary Problem

An example of a process that is difficult to control, due to the exponential complexity of selecting control decisions, is a fleet of drones performing transport tasks in a network of airport destinations. Assume that the destination network is a weighted undirected graph in which the set of edges denotes the air routes J and the set of nodes denotes the landing sites I, partially connected by air corridors as described by an interconnection relation. This relation includes only those connections that are defined in the considered airspace structure of routes. In the considered example, it is assumed that the drone fleet is the counterpart of a set of parallel machines, which may differ in their operating parameters, in particular cruising speed, transported weight and range. Airport tasks are characterized by due times and deadlines. It is also assumed that the operational time of the fleet is not subject to restrictions related to airspace access and that the routes of individual aircraft can be determined independently while maintaining adequately safe air separation. A flight along a given route during the execution of a task is not subject to stoppages, and individual tasks may be assigned weights reflecting the priority of their implementation. The main task of the system is to generate the trajectory of decisions that control the process, taking into account the costs of the work of individual machines, the waiting times for assigning tasks and the total time of the execution of all tasks.

The preliminary idea of the learning algorithm for this problem was given in [2]. Other examples that refer to simplified models of discrete processes can be indicated in other studies [23,25]. The specification of individual elements of the AL model is very extensive. Below we will provide only those elements of the model that are necessary to explain the structure of the local criterion and methods of learning. The process state at any instant t is defined as a vector , where . A coordinate represents a set of corridors, namely routes to be traveled by drones to the moment t. The other coordinates describe the state of the m-th drone, where . A state belongs to the set of not-admissible states if there is a route corridor whose job flight is not completed yet and whose due date is earlier than t. The definition of is as follows:

A state is a goal if all the jobs have been completed, i.e.:

A decision determines the flights that should be started at the moment t, the machines that are in the air, the machines that should be serviced or prepared to be operational, and the machines that should wait for weather reasons. Thus, the decision is a complex one: its m-th coordinate refers to the m-th drone and includes all the needed information. Based on the current state and the decision u taken in this state, the subsequent state is generated by means of the transition function f.

In the course of trajectory generation, in each state of the process the decision with the lowest value of the local criterion is taken. The local criterion takes into account a component connected with the cost of work and a component connected with the necessity for the trajectory to omit the states of set $S_N$. The first component is the sum of $\Delta Q$ and $\hat{Q}$, where $\Delta Q$ denotes the increase of the work cost as a result of realizing decision u and $\hat{Q}$ is the lower estimation of the cost of finishing the set of headings matching the final section of the trajectory after the decision u has been realized. As presented in Section 4, the fractional order of the discrete difference may be used to smoothly scan the space of the process states.

The next component is connected with the necessity for the trajectory to omit the states of set , i.e., it takes into account the consequences of the decision u from the due date’s limitations point of view. Let estimate the minimal distance between the new state and the subset of not-admissible set that is reachable from the state . The estimation is defined in detail in [16,26]. Thus, involving the GL fractional derivative, the local criterion is of the form:

where a is a parameter that is changed during consecutive experiments (trajectory generations). The value of criterion q is computed for each $u \in U_p(s)$. The decision for which the criterion value is minimal is chosen. Then, the new state is generated and the next best decision is chosen. If a newly generated trajectory is admissible and, for most of its states, the distances to the set $S_N$ are relatively large, the parameter a can be decreased. In such a situation, the role of the optimization components is strengthened. Conversely, when the generated trajectory is not admissible, the parameter a should be increased, because greater emphasis should then be put on the due date limitations.
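The interplay between greedy trajectory generation and the adaptation of the parameter a can be sketched on a deliberately small single-machine example. Everything specific here is hypothetical: the three jobs, the total completion time used as the cost, the penalty `phi` standing in for the distance-based component, the omission of the estimate of the remaining cost, the integer-order cost increment, and the fixed step by which a is increased. Only the branch that increases a after a not-admissible trajectory is exercised.

```python
# Hypothetical jobs: name -> (processing time, due date).
JOBS = {"A": (1, 10), "B": (2, 10), "C": (3, 3)}


def phi(t_next, remaining):
    """Penalty: total lateness the remaining jobs would incur if started at t_next."""
    return sum(max(0, t_next + JOBS[j][0] - JOBS[j][1]) for j in remaining)


def generate_trajectory(a):
    """Greedy generation: in each state take the decision minimizing q = dQ + a * phi."""
    t, remaining, order, total = 0, set(JOBS), [], 0
    while remaining:
        def q(job):
            t_next = t + JOBS[job][0]
            delta_q = t_next                      # increase of total completion time
            return delta_q + a * phi(t_next, remaining - {job})
        job = min(sorted(remaining), key=q)       # ties broken alphabetically
        t += JOBS[job][0]
        if t > JOBS[job][1]:                      # due date violated: not admissible
            return order + [job], None
        total += t
        order.append(job)
        remaining.remove(job)
    return order, total


def learn(a=0.0, step=1.0, max_iter=20):
    """Repeat trajectory generation, increasing a after each not-admissible trajectory."""
    for _ in range(max_iter):
        order, total = generate_trajectory(a)
        if total is not None:                     # admissible trajectory found
            return a, order, total
        a += step
    return a, None, None
```

Starting from `a = 0`, the greedy rule first schedules the short jobs and misses the due date of job C; after three increases of a the penalty dominates in the initial state, job C is taken first, and the admissible order C, A, B with a total completion time of 13 is obtained.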

In a typical process of optimizing logistic tasks for unmanned aircraft, the process is affected by problems related to downtime (e.g., vessel failures or delays in flight tasks), continuous control of fleet vessels, their availability, optimal task planning, optimal use of the available capacity, and available airspace capacity. In current air task planning systems (such as the European ATM Master Plan), this is still a manual process that is supported by additional technical means. Intelligent technology is used to control the process of executing dynamically incoming air tasks by a fleet of drones that move concurrently according to the distribution of nodal points—individual air destinations. For such a use of algebraic–logical description, the following assumptions are defined:

- The task of controlling the process of executing dynamically incoming aerial tasks by a fleet of drones that move concurrently according to the distribution of nodal points—individual aerial destinations—is defined.

- The aviation destinations for individual tasks can dynamically appear in the system, according to the properties of the process state vector.

- The drone fleet consisted of six unmanned aerial vehicles (UAV1–UAV6).

- The UAVs take off and land at a dedicated airport; the nodal points are known.

- The tasks to be executed arrive in real time and are respectively defined as , having the structure of single-stage and multi-stage target nodes, respectively.

- The execution of the assigned individual tasks for the UAV is carried out, respectively, at specific time intervals depending on the currently prevailing conditions and external interference, as well as taking into account the conditions related to possible dangerous/collision situations of the UAV.

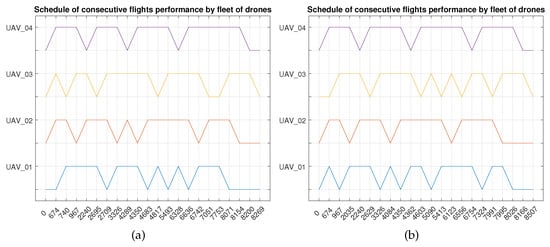

A number of studies have been conducted for different trajectory classes, number of drones and tasks. The example presented below is for a fleet of four drones that had to perform a set of 21 random tasks for a single-stage trajectory (i.e., the number of target nodes is 1). Table 1 shows the obtained results; in the Drones column, the obtained values in the form of A × B describe the number of steps in which the tasks were completed with the initial zero state (A) and the number of drones performing the tasks (B). For the cases considered, the shortest time for the drone fleet to complete incoming tasks is , and the longest is .

Table 1.

Results of simulations with four UAVs.

Table 2 shows the trajectories in which the individual drones carried out the assigned tasks (value 0—means the completion of the task, 1–21—task numbers) for which was found, respectively.

Table 2.

Assigned tasks for the UAV fleet.

Figure 1 shows the next steps in the tasks performed by the drone fleet.

Figure 1.

Schedule of consecutive flights’ performance by fleet of four drones. (a) the shortest and (b) the longest time.

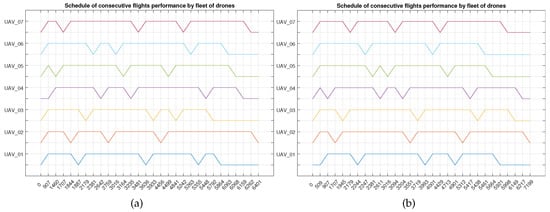

Further simulations were carried out for a randomly determined fleet of seven drones and 29 tasks, yielding solutions with a shortest time of 6401 and a longest time of 7199 (see Table 3).

Table 3.

Results of simulations with seven UAVs.

Table 4 shows the assigned tasks for the drone fleet for which a target solution has been received (value 0–task completion, 1–29—task numbers).

Table 4.

Assigned tasks for the UAV fleet.

Figure 2 shows the schedule of tasks carried out by the supervised drone fleet of seven drones.

Figure 2.

Schedule of consecutive flights performance by fleet of seven drones. (a) the shortest and (b) the longest time.

The above results take into account only the obtained sets of acceptable, or so-called admissible, trajectories. These are only those trajectories that allow the process to reach a goal state. Unacceptable trajectories, also referred to as non-admissible trajectories, which bring the process to forbidden states (for example, as a result of exceeding the due time of a task to be executed), have been omitted. However, they can provide additional information in the search for acceptable solutions and help protect the process from entering a forbidden state.

7. Reinforcement Learning vs. ALMM Based Learning

ALMM Based Learning exhibits certain similarities to a more general and much better known method, namely Reinforcement Learning (RL) [6,8,9,11,15,27]. Let us attempt to review these similarities as well as the differences. It is worth remembering, though, that these remarks do not constitute a complete comparison of the two methods. Such a comparison would not be possible anyway due to the large variety of RL variants available. A more comprehensive comparative discussion will be the subject of a separate study. Similarities may be noticed more clearly when uniform terminology is used, which is why this paper attempts to provide equivalent terms for both methods.

7.1. Application of Learning

Reinforcement Learning is a much more general method, which is why its applications cover multiple fields such as controlling robots, planning and scheduling problems, various games and many more. ALMM Based Learning was developed to deliver approximate solutions of NP-hard discrete optimization problems. It can be noticed, however, that the approach can be utilized for a broader spectrum of applications.

7.2. Environment

Both methods utilize the state space and both build the best possible trajectory (or its parts). In both cases the ultimate goal of an algorithm is to find the most profitable (overall) sequence of actions/decisions. The environment for the ALMM Based Learning method, however, is defined by the Algebraic Logical Metamodel of Multistage Decision Processes, while for the RL method it is defined as a Markov Decision Process. Thus, the ALMM Based Learning environment is deterministic, while the Reinforcement Learning one is stochastic. The next difference stems from the fact that ALMM Based Learning may utilize the so-called generalized state space $S = X \times T$, with $X$ corresponding to the space of proper states and $T$ representing the space of time instants. As a consequence, ALMM Based Learning suits non-stationary environments well. All components defining the environment may be non-stationary, including the transition function as well as the sets of possible decisions $U_p(s)$ (matching the subsets of actions available in particular states) and the constraints defining the goal state set $S_G$ and the not-admissible state set $S_N$.

7.3. Completeness of Information

ALMM Based Learning utilizes a mathematical model of the problem (or instance) to be solved. One may say that it has complete information on the environment beforehand. Owing to the recursive character of AL models, however, such information can only be utilized locally. A certain degree of analysis of the environment properties is possible, but it requires estimating the distance to the not-admissible state set and to other sets. In contrast to ALMM Based Learning, the RL scheme implies that there is little need for human expert knowledge about the domain of application.

7.4. Learning Scheme

In the RL method, the agent is supposed to choose the best action based on the current state. The selection relies on suitably built value functions, which take different forms in different RL variants. In ALMM Based Learning, the decision (which corresponds to the RL method’s ‘action’) is calculated based on a parametric local criterion function q. One may therefore say that the agent takes a decision based on the function q, similarly to the RL method.
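The selection of a decision by means of a parametric local criterion can be sketched as follows. This is only an illustration under stated assumptions: the weighted-sum form of q, the two criterion components (`step_cost`, `work_left`), the choice of minimization and the helper names are hypothetical and are not taken from the paper.

```python
from typing import Callable, Iterable, Sequence, Tuple

State = Tuple[int, int]  # generalized state (x, t)
Component = Callable[[State, int], float]


def local_criterion(components: Sequence[Component],
                    coefficients: Sequence[float],
                    state: State, decision: int) -> float:
    """q(s, u): weighted sum of criterion components (scalarization)."""
    return sum(c * comp(state, decision)
               for c, comp in zip(coefficients, components))


def choose_decision(state: State, decisions: Iterable[int],
                    components: Sequence[Component],
                    coefficients: Sequence[float]) -> int:
    """Return the decision minimizing q; ties go to the smallest decision."""
    return min(sorted(decisions),
               key=lambda u: local_criterion(components, coefficients, state, u))


# Assumed components: cost of the step itself and the work left after it.
def step_cost(state: State, u: int) -> float:
    return float(u ** 2)


def work_left(state: State, u: int) -> float:
    return float(max(state[0] - u, 0))


components = (step_cost, work_left)
state = (4, 0)

# Only the immediate cost matters: the cheaper decision is chosen.
print(choose_decision(state, {1, 2}, components, (1.0, 0.0)))
# The remaining work dominates: the faster decision is chosen.
print(choose_decision(state, {1, 2}, components, (0.1, 1.0)))
```

The coefficients play the role that learned value estimates play in RL: changing them changes which decision looks best in the same state.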

7.5. Aim of Learning

Let us now review certain similarities in the indirect “aim” of the learning processes in both methods. First of all, both methods share a common assumption: the agent selects an action/decision that will maximize the reward in the long term, not only in the immediate future. Such algorithms are known to have an infinite horizon, though in practice the heuristic philosophy is applied to a finite time range or finite trajectories (so-called episodic problems). The objective of an RL based algorithm is to find a policy π with a maximum expected return. A policy is a mapping that assigns to each state a probability distribution over actions, from which the action to be taken is selected. The objective of an algorithm utilizing ALMM Based Learning is to find coefficients for the local criterion function in a way that optimizes the value of the global criterion Q. The selection of a decision in a given state is performed based on the local criterion q. Roughly speaking, a policy, or more precisely an action value function under policy π, corresponds to the local criterion q of ALMM Based Learning, while the expected reward criterion corresponds to the global criterion Q.
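The correspondence can be written side by side. The notation below is an assumption introduced only for this comparison: λ denotes the vector of coefficients of the local criterion, s̃(λ) the trajectory generated with these coefficients, U(s_k) the set of decisions possible in state s_k, r the reward, K the episode length, and minimization is assumed for both q and Q.

```latex
% RL (episodic case): choose a policy maximizing the expected return
\pi^{*} \in \arg\max_{\pi} \; \mathbb{E}_{\pi}\!\left[ \sum_{k=0}^{K-1} r_{k+1} \right]

% ALMM Based Learning: choose coefficients \lambda of the local criterion q
% so that the trajectory they generate optimizes the global criterion Q
\lambda^{*} \in \arg\min_{\lambda} \; Q\bigl(\tilde{s}(\lambda)\bigr),
\qquad
u_k \in \arg\min_{u \in U(s_k)} q(s_k, u; \lambda),
\qquad
s_{k+1} = f(s_k, u_k)
```

In the deterministic setting no expectation appears: a coefficient vector determines one trajectory, and Q is evaluated on that trajectory directly.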

7.6. Learning Process

In an RL method, the learning process utilizes “reward feedback” from which the agent learns its behavior. This is known as a reinforcement signal. The exact reinforcement algorithms vary depending on the RL variant. The main novelty of the presented study is the learning process performed by the ALMM Based Learning strategy. An iteration of ALMM Based Learning consists of generating a whole trajectory (admissible or not-admissible). The algorithm analyzes the whole trajectory. Knowledge of the environment is applied to generate the most suitable local criterion. The knowledge gained is aggregated in the form of optimized coefficient values that determine the influence of the individual criterion components. The coefficients are then improved in consecutive iterations based on the analysis of individual trajectories.
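The iteration scheme described above (generate a whole trajectory, analyze it, adjust the coefficients, repeat) can be sketched as a short loop. The update rule used here is a deliberately simple stand-in and not the rule proposed in the paper; the toy task, the two criterion components and the function names are likewise assumptions.

```python
from typing import List, Optional, Sequence, Tuple

State = Tuple[int, int]  # generalized state (x, t): work left, time instant
DEADLINE = 3             # assumed: work must be finished before t reaches 3


def generate_trajectory(s0: State, c: Sequence[float]) -> Tuple[List[int], State]:
    """Build one whole trajectory by greedily minimizing the local criterion q."""
    s, decisions = s0, []
    while s[0] > 0 and s[1] < DEADLINE:
        x, t = s
        # q(s, u) = c[0] * (cost of the step) + c[1] * (work left after the step)
        u = min((1, 2), key=lambda u: c[0] * u ** 2 + c[1] * max(x - u, 0))
        decisions.append(u)
        s = (max(x - u, 0), t + 1)
    return decisions, s


def global_criterion(decisions: Sequence[int]) -> int:
    """Q: total cost of the decisions taken along the trajectory."""
    return sum(u ** 2 for u in decisions)


def learn(s0: State, coefficients: Sequence[float], iterations: int = 10,
          step: float = 1.0) -> Optional[Tuple[int, List[int], Tuple[float, ...]]]:
    """Return (Q, decisions, coefficients) of the best admissible trajectory found."""
    c = list(coefficients)
    best = None
    for _ in range(iterations):
        decisions, final = generate_trajectory(s0, c)
        if final[0] > 0:
            # Not-admissible trajectory: work remained at the deadline, so raise
            # the weight of the "work left" component (hypothetical rule).
            c[1] += step
            continue
        value = global_criterion(decisions)
        if best is None or value < best[0]:
            best = (value, decisions, tuple(c))
    return best


print(learn((4, 0), [1.0, 0.0]))
```

In this toy run the first trajectories miss the deadline, the weight of the second component grows, and the loop eventually produces an admissible trajectory. The knowledge gained from failed trajectories is stored only in the coefficient values, which mirrors the aggregation described in the text.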

8. Conclusions

This paper presents new generalizations of the learning approach named the ALMM Based Learning method. The learning method is applied mainly to solve NP-hard discrete optimization problems; however, the proposed approach can be used efficiently in algorithms for a broad area of applications. The paper includes proofs of theorems showing that the ALMM Based Learning method can be defined for much broader problem classes than initially assumed, including multiplicatively separable criteria. It then proposes and discusses essential modifications to the local criterion. The novelty of a machine learning method based on ALMM is that it uses formal algebraic–logical models of the problems to be solved. Moreover, the measure of the dynamics of changes of the criterion was generalized by means of a fractional derivative of the Grünwald–Letnikov (GL) type. This allows for fluent searching of the state space for optimal and suboptimal solutions. Although initial knowledge is delivered by the model, it cannot be utilized easily. Thus, additional knowledge is acquired and gathered during successive experiments, which consist of generating subsequent trajectories. Compared with other machine learning methods [8,28,29,30], the presented approach is similar to Reinforcement Learning, although it is not a typical member of that class. A large number of difficult problems can be efficiently solved by means of the presented method. The method is especially useful for difficult scheduling problems with state-dependent resources. The management of projects, especially software projects, belongs to this class. Multiple experiments were carried out, confirming the efficiency of the presented method. At the same time, the experiments indicated the need for further research on coefficient fine-tuning algorithms. A more detailed classification of problems based on the properties of AL problem models is also necessary, to be followed by studies of the individual classes.
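For readers unfamiliar with the GL fractional derivative mentioned above, the following sketch shows the standard discrete approximation applied to a sequence of criterion values. How the paper couples this quantity with the coefficient update is not reproduced here; the function names, the sample values and the unit step `h` are assumptions.

```python
from typing import List, Sequence


def gl_weights(alpha: float, n: int) -> List[float]:
    """Weights (-1)^k * binom(alpha, k) for k = 0..n, computed recursively."""
    w = [1.0]
    for k in range(1, n + 1):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / k))
    return w


def gl_derivative(values: Sequence[float], alpha: float, h: float = 1.0) -> float:
    """Approximate the GL derivative of order alpha at the last sample of values."""
    n = len(values) - 1
    w = gl_weights(alpha, n)
    return sum(w[k] * values[n - k] for k in range(n + 1)) / h ** alpha


# Assumed criterion values recorded along consecutive steps or iterations.
history = [1.0, 4.0, 9.0]

print(gl_derivative(history, 1.0))  # order 1 reduces to the backward difference
print(gl_derivative(history, 0.0))  # order 0 returns the last value itself
print(gl_derivative(history, 0.5))  # fractional order uses the whole history
```

For orders between 0 and 1 every earlier sample contributes with a decaying weight, so the measure of change retains a memory of the whole history rather than of the last step only.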

Author Contributions

Conceptualization, Z.G., E.D.-D. and E.Z.; methodology, Z.G., E.D.-D. and E.Z.; software, Z.G., E.D.-D. and E.Z.; validation, Z.G., E.D.-D. and E.Z.; formal analysis, Z.G., E.D.-D. and E.Z.; investigation, Z.G., E.D.-D. and E.Z.; resources, Z.G., E.D.-D. and E.Z.; data curation, Z.G., E.D.-D. and E.Z.; writing–original draft preparation, Z.G., E.D.-D. and E.Z.; writing–review and editing, Z.G., E.D.-D. and E.Z.; visualization, Z.G., E.D.-D. and E.Z.; supervision, Z.G., E.D.-D. and E.Z.; project administration, Z.G., E.D.-D. and E.Z.; funding acquisition, Z.G., E.D.-D. and E.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Symbol | Meaning |
|---|---|
| ALMM | Algebraic Logical Metamodel of Multistage Decision Processes |
| cMDP | common multistage decision processes |
| MDDP | dynamic multistage decision process |
| U | The set of decisions |
| S | initial generalized state |
| | initial state |
| f | transition function |
| | The set of not admissible generalized states |
| | The set of goal generalized states |
| | The set of possible decisions |
| X | The set of proper states |
| | The set of proper not admissible states |
| | The set of proper goal states |
| | process |
| | The optimization problem |
| | criterion |
| | The optimization task (instance of the problem) |
| P | The instance of the process and named an individual process |
| | The set of all states of trajectories of the process |
| | The number of the last state of a finite trajectory |
| | The set of all decision sequences of the process P |
| | The set of real numbers |
| | The state sets that are advantageous |
| | The state sets that are disadvantageous |
| UAV | Unmanned Aerial Vehicle |

References

1. Dudek-Dyduch, E. Learning based algorithm in scheduling. J. Intell. Manuf. 2000, 11, 135–143 (accepted for publication 1998).
2. Dudek-Dyduch, E.; Dyduch, T. Learning Based Algorithm in Scheduling; EPM: Lyon, France, 1997; Volume 1, pp. 119–128.
3. Dudek-Dyduch, E. Algebraic logical meta-model of decision processes – new metaheuristics. In Artificial Intelligence and Soft Computing; Springer: Berlin/Heidelberg, Germany, 2015; Volume 9119, pp. 541–554.
4. Dudek-Dyduch, E.; Dyduch, T. Learning algorithms for scheduling using knowledge based model. In Proceedings of the ICAISC 2006, Zakopane, Poland, 25–29 June 2006; Springer: Berlin/Heidelberg, Germany, 2006; Volume 4029, pp. 1091–1100.
5. Dudek-Dyduch, E.; Kucharska, E. Learning method for co-operations. In Proceedings of the ICCCI 2011, Gdynia, Poland, 21–23 September 2011; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6923, pp. 290–300.
6. Dorigo, M.; Gambardella, L.M. Ant-Q: A Reinforcement Learning Approach to the Traveling Salesman Problem. In Machine Learning Proceedings 1995; Morgan Kaufmann: Burlington, MA, USA, 1995; pp. 252–260.
7. Han, X.; Han, Y.; Zhang, B.; Qin, H.; Li, J.; Liu, Y.; Gong, D. An effective iterative greedy algorithm for distributed blocking flowshop scheduling problem with balanced energy costs criterion. Appl. Soft Comput. 2022, 129, 109502.
8. Mazyavkina, N.; Sviridov, S.; Ivanov, S.; Burnaev, E. Reinforcement learning for combinatorial optimization: A survey. Comput. Oper. Res. 2021, 134, 105400.
9. Priore, P.; de la Fuente, D.; Puente, J.; Parre, J. A comparison of machine-learning algorithms for dynamic scheduling of flexible manufacturing systems. Eng. Appl. Artif. Intell. 2006, 19, 247–255.
10. Wen, X.; Lian, X.; Qian, Y.; Zhang, Y.; Wang, H.; Li, H. Dynamic scheduling method for integrated process planning and scheduling problem with machine fault. Robot. Comput. Integr. Manuf. 2022, 77, 102334.
11. Zhang, W.; Dietterich, T.G. High-Performance Job-Shop Scheduling With A Time-Delay TD(lambda) Network. Adv. Neural Inf. Process. Syst. 1996, 8, 1024–1030.
12. Bocewicz, G.; Wojcik, R.; Banaszak, Z.A.; Pawełewski, P.B. Multimodal Processes Rescheduling: Cyclic Steady States Space Approach. Math. Probl. Eng. 2013, 2013, 407096.
13. Bożejko, W.; Rajba, P.; Wodecki, M. Robustness on Diverse Data Disturbance Levels of Tabu Search for a Single Machine Scheduling. In New Advances in Dependability of Networks and Systems. DepCoS-RELCOMEX 2022; Lecture Notes in Networks and Systems; Zamojski, W., Mazurkiewicz, J., Sugier, J., Walkowiak, T., Kacprzyk, J., Eds.; Springer: Cham, Switzerland, 2022; Volume 484.
14. Lobato, F.S.; Steffen, V., Jr. A New Multi-objective Optimization Algorithm Based on Differential Evolution and Neighborhood Exploring Evolution Strategy. J. Artif. Intell. Soft Comput. Res. 2011, 1, 4.
15. Dietterich, T.G. Hierarchical reinforcement learning with the MAXQ value function decomposition. J. Artif. Intell. Res. 2000, 13, 227–303.
16. Dudek-Dyduch, E. Modeling Manufacturing Processes with Disturbances – A New Method Based on Algebraic-Logical Meta-Model. In Proceedings of the ICAISC 2015, Part II, LNCS, Zakopane, Poland, 14–28 June 2015; Rutkowski, L., Korytkowski, M., Scherer, R., Tadeusiewicz, R., Zadeh, L., Zurada, J., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; Volume 9120, pp. 353–363.
17. Grobler-Debska, K.; Kucharska, E.; Dudek-Dyduch, E. Idea of switching algebraic-logical models in flow-shop scheduling problem with defects. In Proceedings of the MMAR 2013: 18th International Conference on Methods and Models in Automation and Robotics, Miedzyzdroje, Poland, 26–29 August 2013.
18. Grobler-Dębska, K.; Kucharska, E.; Baranowski, J. Formal scheduling method for zero-defect manufacturing. Int. J. Adv. Manuf. Technol. 2022, 118, 4139–4159.
19. Gomolka, Z.; Twarog, B.; Zeslawska, E.; Dudek-Dyduch, E. Knowledge Base component of Intelligent ALMM System based on the ontology approach. Expert Syst. Appl. 2022, 199, 116975.
20. Kucharska, E.; Grobler-Debska, K.; Raczka, K. Algebraic-logical meta-model based approach for scheduling manufacturing problem with defects removal. Adv. Mech. Eng. 2017, 9, 1–18.
21. Kucharska, E. Heuristic Method for Decision-Making in Common Scheduling Problems. Appl. Sci. 2017, 7, 1073.
22. Kucharska, E. Dynamic Vehicle Routing Problem – Predictive and Unexpected Customer Availability. Symmetry 2019, 11, 546.
23. Kucharska, E.; Dudek-Dyduch, E. Extended Learning Method for Designation of Cooperation. In Transactions on Computational Collective Intelligence XIV; Springer: Berlin/Heidelberg, Germany, 2014; pp. 136–157.
24. Gomolka, Z. Fractional Backpropagation Algorithm – Convergence for the Fluent Shapes of the Neuron Transfer Function. In Neural Information Processing; Yang, H., Pasupa, K., Leung, A.C.S., Kwok, J.T., Chan, J.H., King, I., Eds.; Springer: Cham, Switzerland, 2020; Volume 1333, pp. 580–588.
25. Dudek-Dyduch, E.; Dutkiewicz, L. Substitution tasks method for discrete optimization. In Proceedings of the ICAISC 2013, Zakopane, Poland, 29 April–3 May 2013; Part II, LNCS; Springer: Berlin/Heidelberg, Germany, 2013; Volume 7895, pp. 419–430.
26. Dudek-Dyduch, E.; Dyduch, T. Formal Approach to Optimization of Discrete Manufacturing Processes. In Proceedings of the 12th IASTED International Conference Modelling, Identification and Control, Innsbruck, Austria, 15–17 February 1993; Acta Press: Zurich, Switzerland, 1993.
27. Wang, X.; Zhang, L.; Lin, T.; Zhao, C.; Wang, K.; Chen, Z. Solving job scheduling problems in a resource preemption environment with multi-agent reinforcement learning. Robot. Comput. Integr. Manuf. 2022, 77, 102324.
28. Cherkassky, V.; Mulier, F. Learning from Data: Concepts, Theory, and Methods; John Wiley & Sons: Hoboken, NJ, USA, 2007.
29. Flach, P. Machine Learning. The Art and Science of Algorithms that Make Sense of Data; Cambridge University Press: Cambridge, UK, 2012.
30. Popper, J.; Ruskowski, M. Using Multi-Agent Deep Reinforcement Learning For Flexible Job Shop Scheduling Problems. Procedia CIRP 2022, 112, 63–67.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).