Detection of Miss-Seeding of Sweet Corn in a Plug Tray Using a Residual Attention Network

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Materials

2.2. Experimental Method

2.3. Description of Datasets

2.4. The Residual Attention Network Model

2.4.1. The CBAM-ResNet45 Model

2.4.2. Experimental Parameter Setting

2.4.3. Model Evaluation

3. Results

3.1. The Impact of the Number of Filters on Model Performance

3.2. The Impact of the BN Module Location on Model Performance

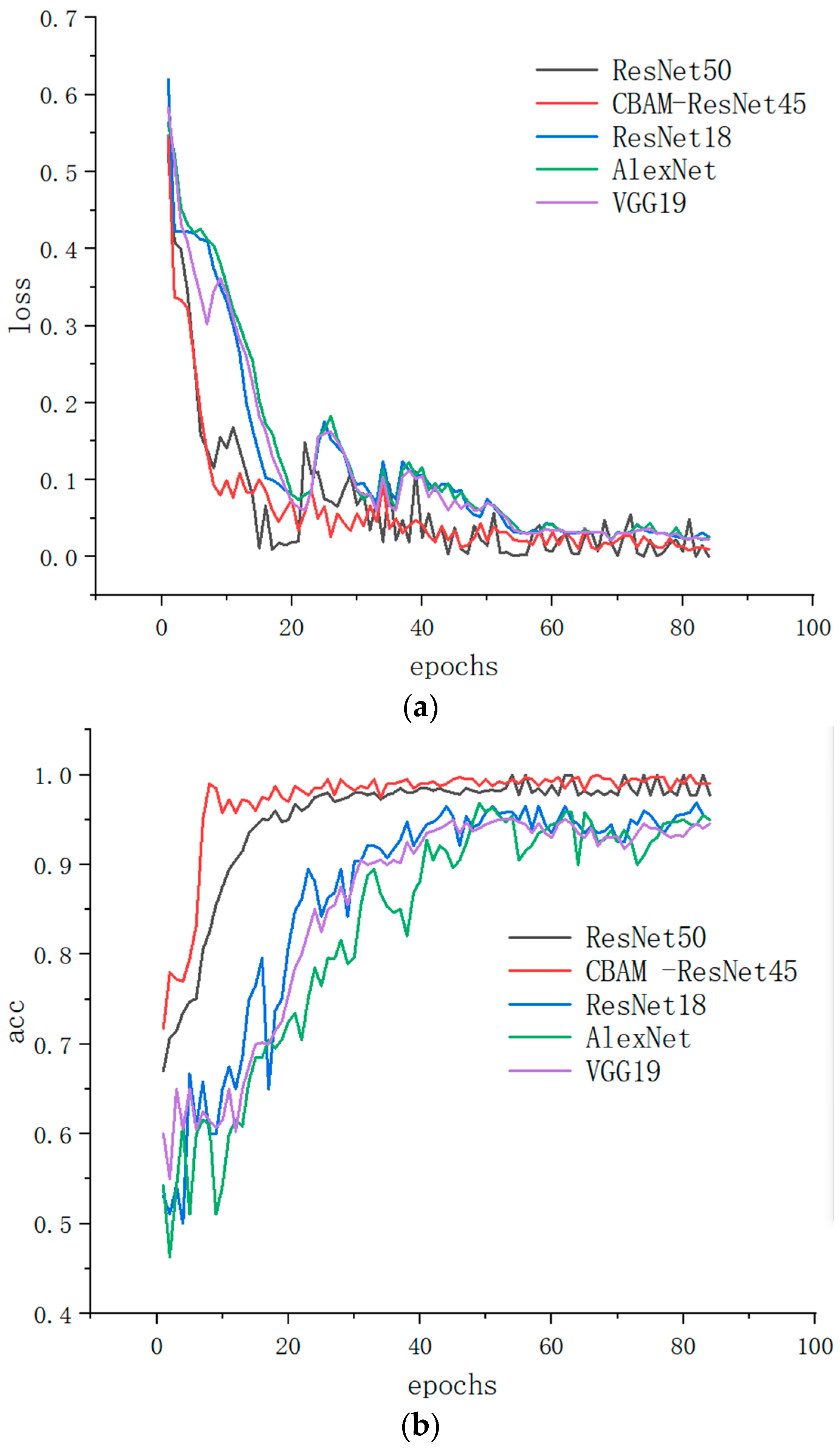

3.3. The Performance Comparison of Different Convolutional Neural Network Models

3.4. Areas of Concern in the Network Analysis Category

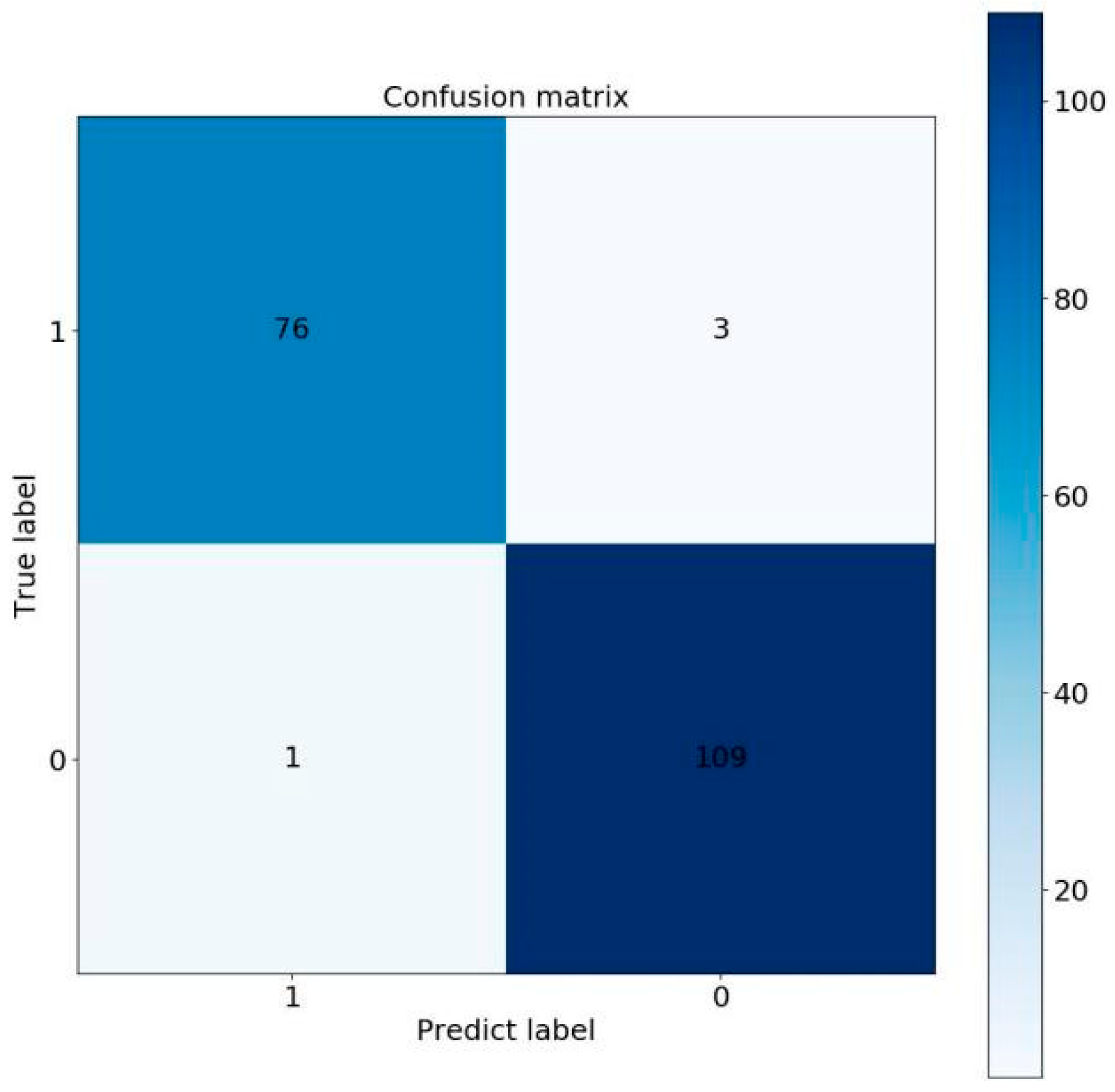

3.5. Confusion Matrix of the Model

3.6. Analysis of Classification Error

4. Conclusions

- Low cost and robustness to interference: The proposed method requires no special instruments to collect the images, and it classifies the original images directly after simple pre-processing. Unlike traditional machine vision-based classification, it does not rely on manually chosen pixel-value thresholds for segmentation, which removes the influence of subjective factors and gives the model a strong anti-interference ability.

- With the training time and testing time unchanged, the CBAM-ResNet45 model enabled the neural network to adjust its weights along the channel and spatial dimensions, extract more information about objects from both, and thereby improve the accuracy of recognition and classification.

- The results of the visualization experiments further verified that CBAM-ResNet45 focused more on the concentrated feature areas. The model also has a limitation: the corn seed occupies only a small area of the image. In addition, when the plug tray moves with the conveyor belt, the vibration of the belt shakes part of the soil loose in the tray, and this soil covers part of the seed area or buries the seed completely, so that the exposed seed area becomes even smaller. This leads to image misrecognition. Since soil loss and seed covering caused by conveyor vibration are difficult to avoid, the CBAM-ResNet45 model needs to be improved so that it correctly classifies images with small corn seed areas.
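
The channel and spatial reweighting mentioned in the conclusions can be illustrated with a small sketch. The code below is a dependency-free toy version of a CBAM-style block (channel attention followed by spatial attention), not the authors' CBAM-ResNet45 implementation. The function names, the toy tensor sizes, the reduction ratio `r = 2`, the 3 x 3 spatial kernel, and the random weights are all assumptions chosen for illustration; a real model would use a deep learning framework and learned weights.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def channel_attention(x, w1, w2):
    """x: C channels, each an H x W nested list. Returns one weight in (0, 1) per channel."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]  # global average pool
    mx = [max(map(max, ch)) for ch in x]                            # global max pool

    def mlp(v):  # shared two-layer MLP: C -> C/r -> C, ReLU in between
        hidden = [max(0.0, sum(w * vi for w, vi in zip(row, v))) for row in w1]
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    return [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]

def spatial_attention(x, kernel):
    """kernel: 2 x k x k weights over the [channel-mean, channel-max] maps."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    avg = [[sum(x[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(x[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    maps = [avg, mx]
    k = len(kernel[0])
    pad = k // 2
    out = []
    for i in range(H):
        row = []
        for j in range(W):
            acc = 0.0
            for m in range(2):
                for di in range(k):
                    for dj in range(k):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < H and 0 <= jj < W:  # zero padding at the borders
                            acc += kernel[m][di][dj] * maps[m][ii][jj]
            row.append(sigmoid(acc))
        out.append(row)
    return out

def cbam(x, w1, w2, kernel):
    """Scale each channel by its channel weight, then each pixel by its spatial weight."""
    ca = channel_attention(x, w1, w2)
    x = [[[ca[c] * v for v in r] for r in ch] for c, ch in enumerate(x)]
    sa = spatial_attention(x, kernel)
    return [[[sa[i][j] * v for j, v in enumerate(r)] for i, r in enumerate(ch)] for ch in x]

# Toy input and random (untrained) weights, for illustration only.
random.seed(0)
C, H, W, r, k = 4, 5, 5, 2, 3
x = [[[random.uniform(-1, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
w1 = [[random.uniform(-1, 1) for _ in range(C)] for _ in range(C // r)]
w2 = [[random.uniform(-1, 1) for _ in range(C // r)] for _ in range(C)]
kernel = [[[random.uniform(-1, 1) for _ in range(k)] for _ in range(k)] for _ in range(2)]
y = cbam(x, w1, w2, kernel)
print(len(y), len(y[0]), len(y[0][0]))  # output keeps the input shape
```

Because both attention maps pass through a sigmoid, every output value is the input value scaled by a factor between 0 and 1, so the block can only emphasize features relative to one another; it never changes the feature map shape.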

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | SE-ResNet-128 | SE-ResNet-256 | SE-ResNet-512 |
|---|---|---|---|
| Accuracy | 0.900 | 0.930 | 0.890 |

| Model | CBAM-ResNet-128 | CBAM-ResNet-256 | CBAM-ResNet-512 |
|---|---|---|---|
| Accuracy | 0.860 | 0.980 | 0.780 |

| Model | conv-BN-relu | conv-relu-BN |
|---|---|---|
| Accuracy | 0.980 | 0.870 |
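
The two layer orderings compared in this table differ only in where batch normalization (BN) sits relative to the ReLU activation. The sketch below shows, on a made-up vector of convolution outputs, how the two orderings produce different activations. It is an illustration under simplifying assumptions, not the authors' code: the helper names are hypothetical, the input values are invented, and the learnable scale and shift parameters of BN are omitted.

```python
import statistics

def batch_norm(values, eps=1e-5):
    """Normalize to zero mean and unit variance (no learnable scale or shift)."""
    mean = statistics.fmean(values)
    var = statistics.pvariance(values)
    return [(v - mean) / (var + eps) ** 0.5 for v in values]

def relu(values):
    return [max(0.0, v) for v in values]

# Invented convolution outputs for one channel across a small batch.
conv_out = [-2.0, -1.0, 0.5, 3.0]

bn_then_relu = relu(batch_norm(conv_out))   # conv-BN-relu ordering
relu_then_bn = batch_norm(relu(conv_out))   # conv-relu-BN ordering

print("conv-BN-relu:", [round(v, 3) for v in bn_then_relu])
print("conv-relu-BN:", [round(v, 3) for v in relu_then_bn])
```

In the conv-BN-relu ordering the next layer receives non-negative activations computed from normalized inputs, while in the conv-relu-BN ordering the next layer receives zero-mean values that include negatives. The sketch only shows that the orderings are not equivalent; it does not by itself explain the accuracy gap reported in the table.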

| Model | Precision (P) | Recall (R) | Accuracy (A) | F Score (F) |
|---|---|---|---|---|
| AlexNet | 0.8750 | 0.8640 | 0.7900 | 0.8690 |
| VGG19 | 0.9060 | 0.8170 | 0.8730 | 0.8592 |
| ResNet18 | 1.0000 | 0.8440 | 0.8800 | 0.9150 |
| ResNet50 | 0.9540 | 0.9460 | 0.9420 | 0.9500 |
| CBAM-ResNet45 | 0.9968 | 0.9675 | 0.9800 | 0.9819 |
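
As a quick consistency check on this table, the F score column can be recomputed from the precision and recall columns. The sketch below assumes that the F score is the usual F1 measure, the harmonic mean of precision and recall, $F = 2PR/(P+R)$; that definition is an assumption here, although the recomputed values agree with the reported ones to within rounding. The function name `f_score` is a hypothetical helper.

```python
def f_score(precision, recall):
    """F1 measure: harmonic mean of precision and recall (assumed definition)."""
    return 2 * precision * recall / (precision + recall)

# (precision, recall, reported F score) copied from the table above.
reported = {
    "AlexNet": (0.8750, 0.8640, 0.8690),
    "VGG19": (0.9060, 0.8170, 0.8592),
    "ResNet18": (1.0000, 0.8440, 0.9150),
    "ResNet50": (0.9540, 0.9460, 0.9500),
    "CBAM-ResNet45": (0.9968, 0.9675, 0.9819),
}

for name, (p, r, f) in reported.items():
    print(f"{name}: recomputed F = {f_score(p, r):.4f}, reported F = {f:.4f}")
```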

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, L.; Bai, J.; Xu, J.; Du, B.; Zhao, J.; Ma, D.; Hao, F. Detection of Miss-Seeding of Sweet Corn in a Plug Tray Using a Residual Attention Network. Appl. Sci. 2022, 12, 12604. https://doi.org/10.3390/app122412604
