A Cross-Attention Mechanism Based on Regional-Level Semantic Features of Images for Cross-Modal Text-Image Retrieval in Remote Sensing

Abstract

Featured Application


1. Introduction

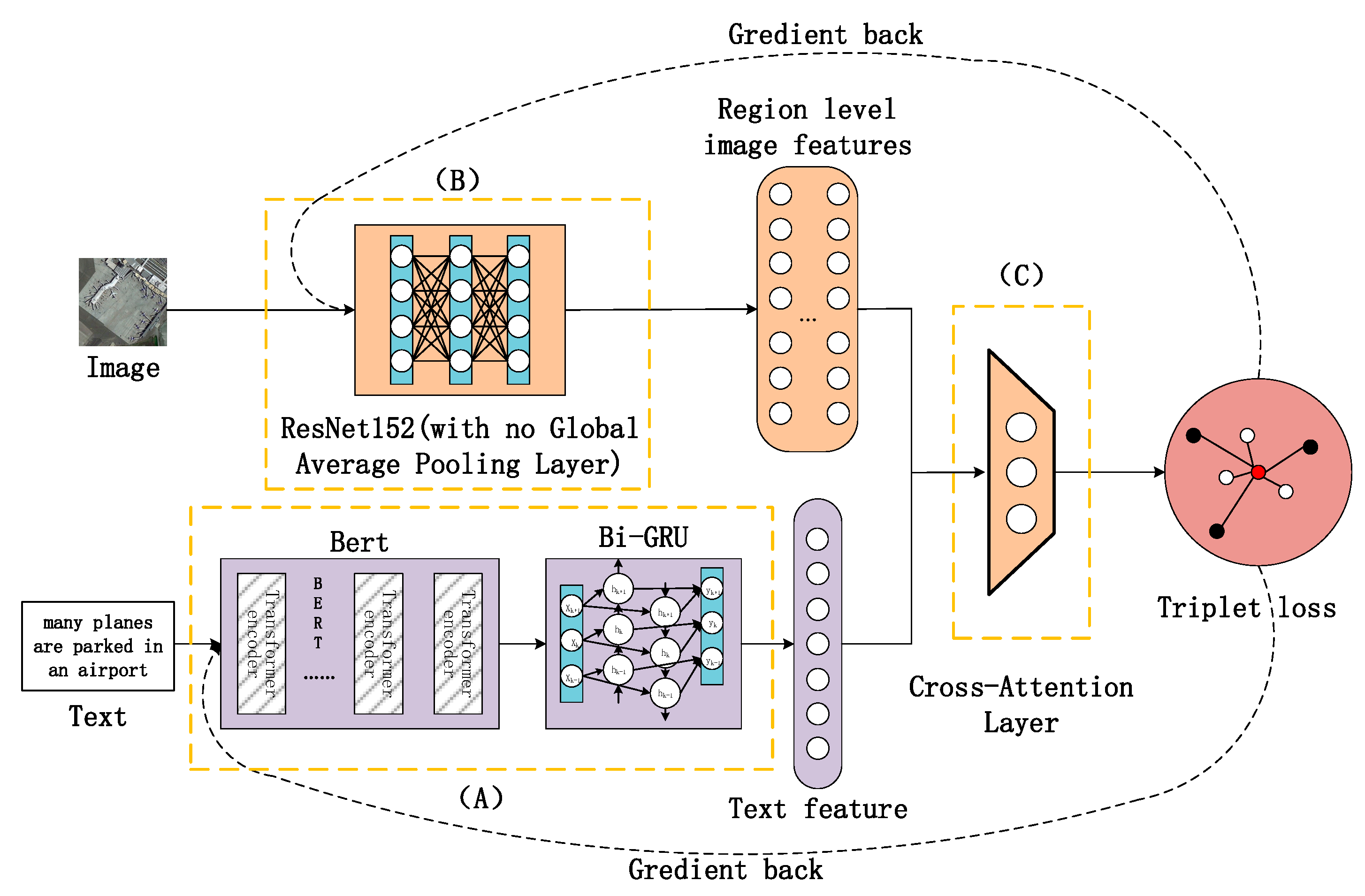

- First, we propose a cross-modal network framework based on a cross-attention mechanism to tackle the mutual interference between the different scene semantics contained in multi-scene RS images.

- Second, unlike previous cross-attention models, which combine word-level textual information with region-level image information, we exploit the correspondence between statement-level textual information and region-level image information, and we propose a method for generating statement-level text features that combines a pretrained BERT model with a Bi-GRU.
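The statement-level text feature described in the second contribution can be sketched as follows: contextual token vectors (as BERT would produce) are read by a forward GRU and a backward GRU, and the two final hidden states are concatenated into one sentence vector. This is a minimal NumPy sketch under stated assumptions, not the authors' implementation: the BERT outputs are replaced by random stand-in vectors, the weights are randomly initialised rather than trained, and concatenating the final states is an assumed pooling choice. The names `gru_step`, `run_gru`, `sentence_feature`, and `init_params` are hypothetical.

```python
import numpy as np

def gru_step(x, h, W, U, b):
    """One GRU step. W: (3H, D), U: (3H, H), b: (3H,); gate order: update, reset, candidate."""
    H = h.shape[0]
    gx = W @ x + b   # input contributions to all three gates
    gh = U @ h       # recurrent contributions to all three gates
    z = 1.0 / (1.0 + np.exp(-(gx[:H] + gh[:H])))            # update gate
    r = 1.0 / (1.0 + np.exp(-(gx[H:2 * H] + gh[H:2 * H])))  # reset gate
    n = np.tanh(gx[2 * H:] + r * gh[2 * H:])                 # candidate state
    return (1.0 - z) * n + z * h

def run_gru(seq, params, reverse=False):
    """Run a GRU over a (T, D) sequence and return the final hidden state."""
    W, U, b = params
    h = np.zeros(U.shape[1])
    steps = reversed(range(len(seq))) if reverse else range(len(seq))
    for t in steps:
        h = gru_step(seq[t], h, W, U, b)
    return h

def sentence_feature(token_states, fwd, bwd):
    """Bi-GRU pooling (assumed): concatenate final forward and backward states."""
    h_f = run_gru(token_states, fwd)
    h_b = run_gru(token_states, bwd, reverse=True)
    return np.concatenate([h_f, h_b])

def init_params(D, H, rng):
    """Hypothetical random initialisation; a real model would learn these weights."""
    return (rng.normal(0, 0.1, (3 * H, D)), rng.normal(0, 0.1, (3 * H, H)), np.zeros(3 * H))

rng = np.random.default_rng(0)
D, H, T = 768, 16, 12                # 768 = BERT-base hidden size; H and T are illustrative
tokens = rng.normal(size=(T, D))     # stand-in for BERT token outputs of one caption
fwd, bwd = init_params(D, H, rng), init_params(D, H, rng)
feat = sentence_feature(tokens, fwd, bwd)
print(feat.shape)
```

In a full model this 2H-dimensional vector would still need a learned projection into the joint image-text space; that step is omitted here.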

2. Methodology

2.1. Problem Analysis

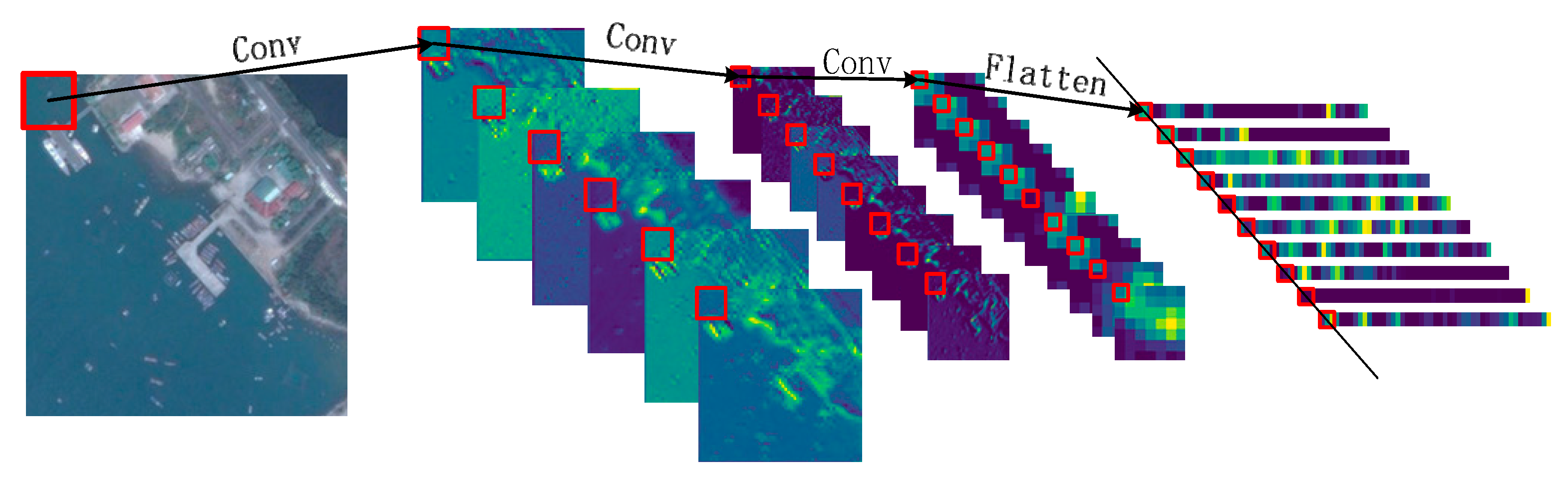

2.2. Image Feature Extraction

2.3. Text Feature Extraction

2.4. Cross-Attention Layer (CAL)

- The temperature control function should be close to zero at the initial stage of network training, so that the weights assigned to the image regions are close to one another; the attention then effectively degenerates towards the global average pooling strategy.

- After a certain number of training rounds, the function should increase and converge to a constant, which prevents its value from becoming so large that a minority of the image regions take up an extremely large proportion of the weight.

In the definition of the function, h denotes the training round, u is the constant to which the function ultimately converges, and r denotes the round at which the function should reach its midpoint. The graph of the function is shown in Figure 5.
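The two requirements above can be illustrated with a small sketch. The exact formula is not recoverable from this text, so a logistic curve is assumed here because it is near zero early, equals u/2 at round r, and converges to u; the steepness `k` is a hypothetical extra parameter. It is also assumed that the function's value multiplies the text-region similarity scores inside a softmax, which reproduces the behaviour described: a value near zero gives almost equal region weights (global average pooling), and a larger value sharpens the weights. Function names and all numbers are illustrative.

```python
import math

def temperature(h, u=10.0, r=5.0, k=1.0):
    """Hypothetical logistic schedule: small when h << r, u/2 at h = r, tends to u."""
    return u / (1.0 + math.exp(-k * (h - r)))

def attention_pool(scores, regions, lam):
    """Softmax over lam * score for each region, then a weighted sum of region vectors."""
    m = max(lam * s for s in scores)                  # subtract max for numerical stability
    exps = [math.exp(lam * s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(regions[0])
    pooled = [sum(w * v[d] for w, v in zip(weights, regions)) for d in range(dim)]
    return weights, pooled

scores = [0.9, 0.1, 0.2, 0.8]                                # hypothetical text-region similarities
regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]   # toy 2-D region features
for h in (0, 5, 50):
    w, _ = attention_pool(scores, regions, temperature(h))
    print(h, [round(x, 3) for x in w])
```

At h = 0 the weights are all close to 0.25, so the pooled vector is close to the plain average of the regions; by h = 50 most of the weight sits on the two best-matching regions without collapsing entirely onto one.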

2.5. Objective Function

3. Experimental Results and Analysis

3.1. About the Datasets and Evaluation Indexes

3.2. Details in Training

3.3. Experiment Design

3.3.1. Basic Experiments Design

- VSE++ [34]: a cross-modal retrieval model for natural images that combines data augmentation and fine-tuning with an improved triplet loss function that emphasizes hard negatives.

- MTFN [40]: a model that designs its own similarity function, based on multi-modal tensor fusion, and trains the network with a ranking loss function.

- SCAN [27]: a model that uses a Stacked Cross Attention mechanism to explore fine-grained semantic correspondence between images and texts; it is a successful application of the cross-attention mechanism to natural images. In this paper, CABIR is compared with both SCAN variants, SCAN i2t and SCAN t2i.
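For reference, the hard-negative triplet loss associated with the VSE++ baseline [34] can be sketched as below: for every matched image-caption pair in a batch, only the hardest negative caption and the hardest negative image contribute a hinge term. This NumPy sketch describes the baseline's loss, not CABIR's objective function; the margin value 0.2 and the toy similarity matrices are illustrative choices.

```python
import numpy as np

def vsepp_loss(sim, margin=0.2):
    """Max-of-hinges triplet loss. sim[i, j]: similarity of image i and caption j;
    matched pairs lie on the diagonal."""
    pos = np.diag(sim)
    mask = np.eye(sim.shape[0], dtype=bool)
    # Image i as anchor: caption j is a negative; hinge against s(i, i).
    cost_caption = np.clip(margin + sim - pos[:, None], 0, None)
    # Caption j as anchor: image i is a negative; hinge against s(j, j).
    cost_image = np.clip(margin + sim - pos[None, :], 0, None)
    cost_caption[mask] = 0.0   # positives are not negatives
    cost_image[mask] = 0.0
    # Keep only the hardest negative per anchor, then sum over the batch.
    return cost_caption.max(axis=1).sum() + cost_image.max(axis=0).sum()

easy = np.array([[0.9, 0.1], [0.3, 0.8]])   # every positive beats its negatives by > margin
hard = np.array([[0.5, 0.6], [0.2, 0.7]])   # caption 1 outscores caption 0 for image 0
print(vsepp_loss(easy), vsepp_loss(hard))
```

Summing over all negatives instead of taking the maximum gives the conventional sum-of-hinges triplet loss; focusing on the hardest negative is what the VSE++ authors identify as the main improvement.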

3.3.2. Ablative Experiments Design

3.3.3. Multi-Scene Semantic Image Retrieval Experiments Design

3.4. Experimental Results

3.4.1. Results of the Basic Experiments

3.4.2. Results of the Ablative Experiments

- the CAL, which was replaced with a global average pooling layer, hence the name “NO Attention” for the variant resulting from this change;

- the pretrained BERT network, which was removed and replaced with an embedding matrix that maps each word to a 300-dimensional vector, hence the name “NO BERT” for the variant resulting from this change;

- the GRU module, which was removed; the [CLS] vector of the BERT output sequence was used directly as the statement-level text feature and mapped into a 2048-dimensional vector space via a fully connected layer, hence the name “NO GRU” for the variant resulting from this change;

- the temperature control function, which was removed, hence the name “NO Temperature” for the variant resulting from this change;

- the parameters of pretrained RSE152, which were frozen during training, hence the name “Freeze Rse152” for the variant resulting from this change;

- the parameters of pretrained BERT, which were frozen during training, hence the name “Freeze BERT” for the variant resulting from this change.

3.4.3. Results of the Multi-Scene Semantic Image Retrieval Experiments

4. Discussion

4.1. Further Analysis

4.2. Influences of Temperature Control Function Parameter Settings on Model Precision

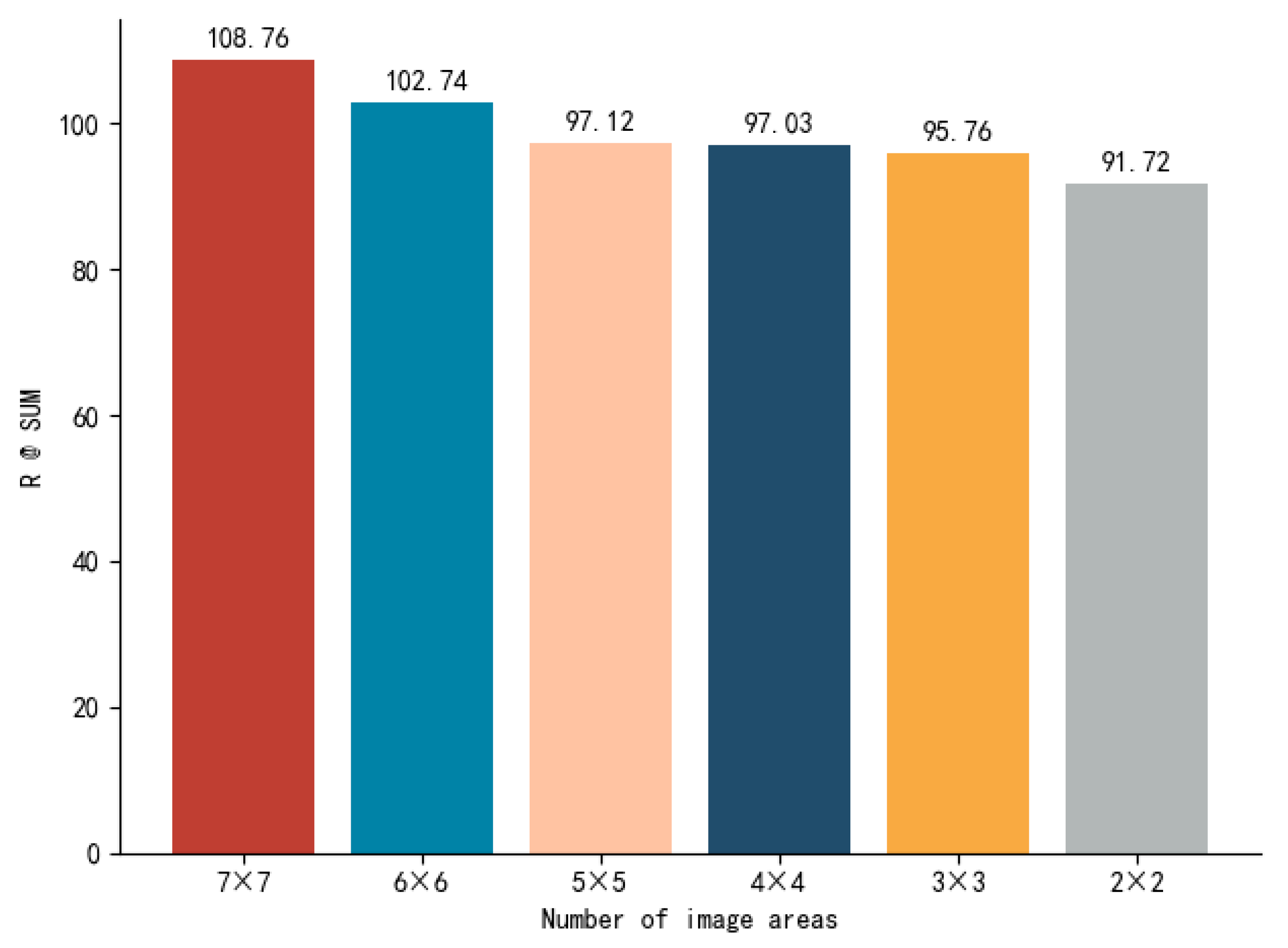

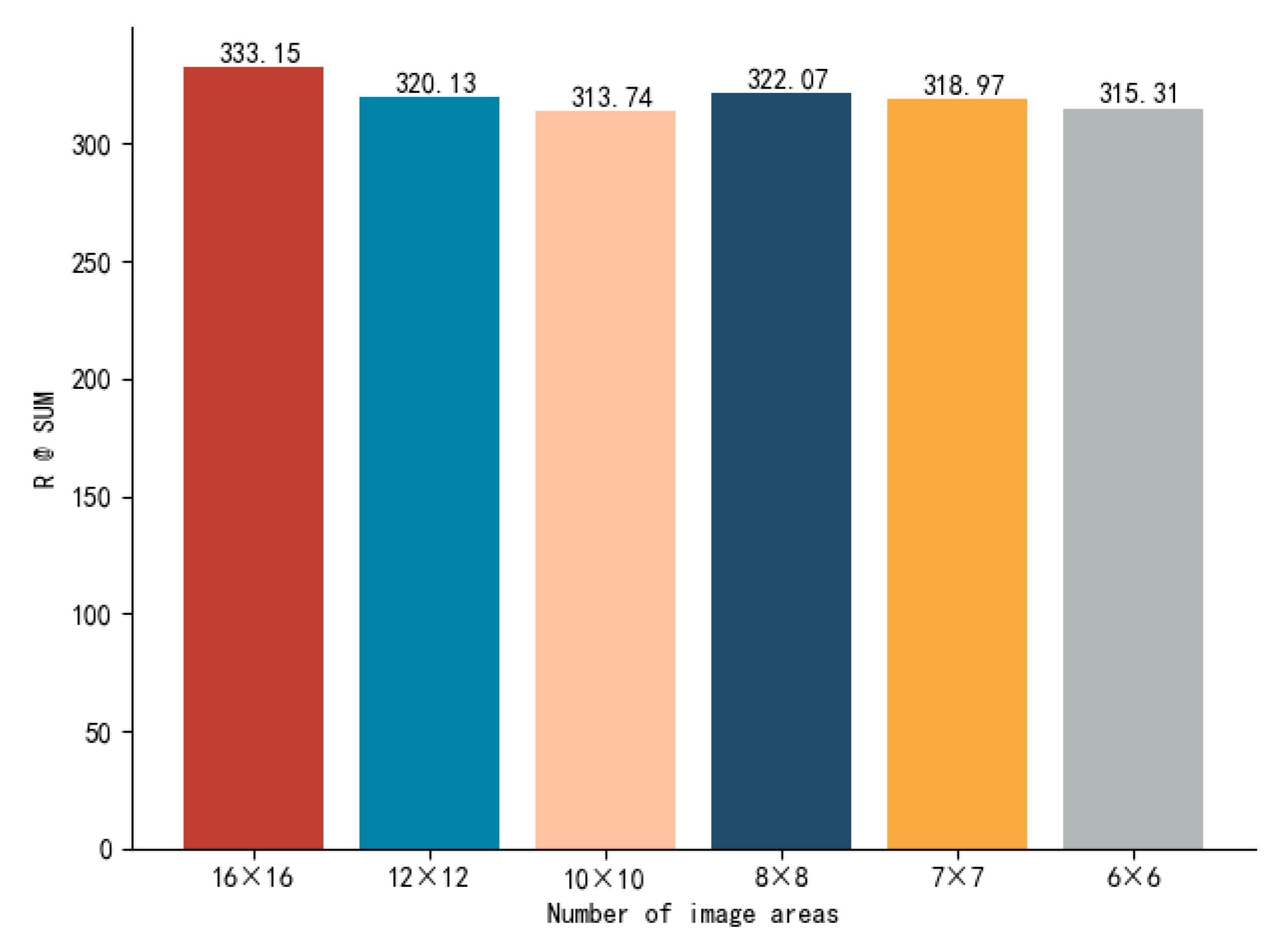

4.3. Influence of Different Image Region Divisions on Model Precision

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ma, J.; Zhou, H.; Zhao, J.; Gao, Y.; Jiang, J.; Tian, J. Robust Feature Matching for Remote Sensing Image Registration via Locally Linear Transforming. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6469–6481.

2. Scott, G.J.; Klaric, M.N.; Davis, C.H.; Shyu, C.-R. Entropy-Balanced Bitmap Tree for Shape-Based Object Retrieval From Large-Scale Satellite Imagery Databases. IEEE Trans. Geosci. Remote Sens. 2011, 49, 1603–1616.

3. Demir, B.; Bruzzone, L. Hashing-Based Scalable Remote Sensing Image Search and Retrieval in Large Archives. IEEE Trans. Geosci. Remote Sens. 2016, 54, 892–904.

4. Chi, M.; Plaza, A.; Benediktsson, J.A.; Sun, Z.; Shen, J.; Zhu, Y. Big Data for Remote Sensing: Challenges and Opportunities. Proc. IEEE 2016, 104, 2207–2219.

5. Li, P.; Ren, P. Partial Randomness Hashing for Large-Scale Remote Sensing Image Retrieval. IEEE Geosci. Remote Sens. Lett. 2017, 14, 464–468.

6. Li, Y.; Zhang, Y.; Huang, X.; Zhu, H.; Ma, J. Large-Scale Remote Sensing Image Retrieval by Deep Hashing Neural Networks. IEEE Trans. Geosci. Remote Sens. 2018, 56, 950–965.

7. Cheng, Q.; Zhou, Y.; Fu, P.; Xu, Y.; Zhang, L. A Deep Semantic Alignment Network for the Cross-Modal Image-Text Retrieval in Remote Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4284–4297.

8. Tobin, K.W.; Bhaduri, B.L.; Bright, E.A.; Cheriyadat, A.; Karnowski, T.P.; Palathingal, P.J.; Potok, T.E.; Price, J.R. Automated Feature Generation in Large-Scale Geospatial Libraries for Content-Based Indexing. Photogramm. Eng. Remote Sens. 2006, 72, 531–540.

9. Mikriukov, G.; Ravanbakhsh, M.; Demir, B. Deep Unsupervised Contrastive Hashing for Large-Scale Cross-Modal Text-Image Retrieval in Remote Sensing. arXiv 2022, arXiv:2201.08125.

10. Cao, R. Enhancing remote sensing image retrieval using a triplet deep metric learning network. Int. J. Remote Sens. 2020, 41, 740–751.

11. Sumbul, G.; Demir, B. Informative and Representative Triplet Selection for Multilabel Remote Sensing Image Retrieval. IEEE Trans. Geosci. Remote Sens. 2022, 60, 11.

12. Yun, M.-S.; Nam, W.-J.; Lee, S.-W. Coarse-to-Fine Deep Metric Learning for Remote Sensing Image Retrieval. Remote Sens. 2020, 12, 219.

13. Roy, S.; Sangineto, E.; Demir, B.; Sebe, N. Metric-Learning-Based Deep Hashing Network for Content-Based Retrieval of Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2021, 18, 226–230.

14. Han, L.; Li, P.; Bai, X.; Grecos, C.; Zhang, X.; Ren, P. Cohesion Intensive Deep Hashing for Remote Sensing Image Retrieval. Remote Sens. 2019, 12, 101.

15. Shan, X.; Liu, P.; Gou, G.; Zhou, Q.; Wang, Z. Deep Hash Remote Sensing Image Retrieval with Hard Probability Sampling. Remote Sens. 2020, 12, 2789.

16. Kong, J.; Sun, Q.; Mukherjee, M.; Lloret, J. Low-Rank Hypergraph Hashing for Large-Scale Remote Sensing Image Retrieval. Remote Sens. 2020, 12, 1164.

17. Ye, D.; Li, Y.; Tao, C.; Xie, X.; Wang, X. Multiple Feature Hashing Learning for Large-Scale Remote Sensing Image Retrieval. ISPRS Int. J. Geo.-Inf. 2017, 6, 364.

18. Yuan, Z.; Zhang, W.; Fu, K.; Li, X.; Deng, C.; Wang, H.; Sun, X. Exploring a Fine-Grained Multiscale Method for Cross-Modal Remote Sensing Image Retrieval. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–19.

19. Chen, Y.; Lu, X. A Deep Hashing Technique for Remote Sensing Image-Sound Retrieval. Remote Sens. 2019, 12, 84.

20. Rahhal, M.M.A.; Bazi, Y.; Abdullah, T.; Mekhalfi, M.L.; Zuair, M. Deep Unsupervised Embedding for Remote Sensing Image Retrieval Using Textual Cues. Appl. Sci. 2020, 10, 8931.

21. Socher, R.; Karpathy, A.; Le, Q.V.; Manning, C.D.; Ng, A.Y. Grounded Compositional Semantics for Finding and Describing Images with Sentences. Trans. Assoc. Comput. Linguist. 2014, 2, 207–218.

22. Karpathy, A.; Joulin, A.; Fei-Fei, L. Deep Fragment Embeddings for Bidirectional Image Sentence Mapping. Adv. Neural Inf. Process. Syst. 2014, 27, 9.

23. Gu, W.; Gu, X.; Gu, J.; Li, B.; Xiong, Z.; Wang, W. Adversary Guided Asymmetric Hashing for Cross-Modal Retrieval. In Proceedings of the 2019 on International Conference on Multimedia Retrieval, Ottawa, ON, Canada, 10–13 June 2019.

24. Ning, H.; Zhao, B.; Yuan, Y. Semantics-Consistent Representation Learning for Remote Sensing Image–Voice Retrieval. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

25. Mao, G.; Yuan, Y.; Lu, X. Deep Cross-Modal Retrieval for Remote Sensing Image and Audio. In Proceedings of the 2018 10th IAPR Workshop on Pattern Recognition in Remote Sensing (PRRS), Beijing, China, 19–20 August 2018; pp. 1–7.

26. Cheng, Q.; Huang, H.; Ye, L.; Fu, P.; Gan, D.; Zhou, Y. A Semantic-Preserving Deep Hashing Model for Multi-Label Remote Sensing Image Retrieval. Remote Sens. 2021, 13, 4965.

27. Lee, K.-H.; Chen, X.; Hua, G.; Hu, H.; He, X. Stacked Cross Attention for Image-Text Matching. arXiv 2018, arXiv:1803.08024.

28. Huang, Y.; Wang, W.; Wang, L. Instance-Aware Image and Sentence Matching with Selective Multimodal LSTM. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7254–7262.

29. Wang, Y.; Yang, H.; Bai, X.; Qian, X.; Ma, L.; Lu, J.; Li, B.; Fan, X. PFAN++: Bi-Directional Image-Text Retrieval With Position Focused Attention Network. IEEE Trans. Multimed. 2021, 23, 3362–3376.

30. Nam, H.; Ha, J.-W.; Kim, J. Dual Attention Networks for Multimodal Reasoning and Matching. arXiv 2016, arXiv:1611.00471.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

32. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

33. Schuster, M.; Paliwal, K.K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

34. Faghri, F.; Fleet, D.J.; Kiros, J.R.; Fidler, S. VSE++: Improving visual-semantic embeddings with hard negatives. arXiv 2017, arXiv:1707.05612.

35. Lu, X.; Wang, B.; Zheng, X.; Li, X. Exploring Models and Data for Remote Sensing Image Caption Generation. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2183–2195.

36. Qu, B.; Li, X.; Tao, D.; Lu, X. Deep semantic understanding of high resolution remote sensing image. In Proceedings of the 2016 International Conference on Computer, Information and Telecommunication Systems (CITS), Kunming, China, 6–8 July 2016; pp. 1–5.

37. Yang, Y.; Newsam, S. Bag-of-visual-words and spatial extensions for land-use classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems—GIS ’10, San Jose, CA, USA, 2–5 November 2010; p. 270.

38. Zhang, F.; Du, B.; Zhang, L. Saliency-Guided Unsupervised Feature Learning for Scene Classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2175–2184.

39. Huang, Y.; Wu, Q.; Song, C.; Wang, L. Learning Semantic Concepts and Order for Image and Sentence Matching. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6163–6171.

40. Wang, T.; Xu, X.; Yang, Y.; Hanjalic, A.; Shen, H.T.; Song, J. Matching Images and Text with Multi-modal Tensor Fusion and Re-ranking. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019.

RSICD Dataset

| Method | Text R@1 | Text R@5 | Text R@10 | Image R@1 | Image R@5 | Image R@10 | mR | R@sum |
|---|---|---|---|---|---|---|---|---|
| VSE++ | 3.38 | 9.51 | 17.46 | 2.82 | 11.32 | 18.1 | 10.43 | 62.59 |
| SCAN t2i | 4.39 | 10.9 | 17.64 | 3.91 | 16.2 | 26.49 | 13.25 | 79.53 |
| SCAN i2t | 5.85 | 12.89 | 19.84 | 3.71 | 16.4 | 26.73 | 14.23 | 85.42 |
| MTFN | 5.02 | 12.52 | 19.74 | 4.9 | 17.17 | 29.49 | 14.81 | 88.84 |
| AMFMN-soft | 5.05 | 14.53 | 21.57 | 5.05 | 19.74 | 31.04 | 16.02 | 96.98 |
| AMFMN-fusion | 5.39 | 15.08 | 23.4 | 4.9 | 18.28 | 31.44 | 16.42 | 98.49 |
| AMFMN-sim | 5.21 | 14.72 | 21.57 | 4.08 | 17 | 30.6 | 15.53 | 93.18 |
| CABIR | 8.59 | 16.27 | 24.13 | 5.42 | 20.77 | 33.58 | 18.12 | 108.76 |

UCM Dataset

| Method | Text R@1 | Text R@5 | Text R@10 | Image R@1 | Image R@5 | Image R@10 | mR | R@sum |
|---|---|---|---|---|---|---|---|---|
| VSE++ | 12.38 | 44.76 | 65.71 | 10.1 | 31.8 | 56.85 | 36.93 | 221.6 |
| SCAN t2i | 14.29 | 45.71 | 67.62 | 12.76 | 50.38 | 77.24 | 44.67 | 268 |
| SCAN i2t | 12.85 | 47.14 | 69.52 | 12.48 | 46.86 | 71.71 | 43.43 | 260.56 |
| MTFN | 10.47 | 47.62 | 64.29 | 14.19 | 52.38 | 78.95 | 44.65 | 267.9 |
| AMFMN-soft | 12.86 | 51.9 | 66.67 | 14.19 | 51.71 | 78.48 | 45.97 | 275.81 |
| AMFMN-fusion | 16.67 | 45.71 | 68.57 | 12.86 | 53.24 | 79.43 | 46.08 | 276.48 |
| AMFMN-sim | 14.76 | 49.52 | 68.1 | 13.43 | 51.81 | 76.48 | 45.68 | 274.1 |
| CABIR | 15.17 | 45.71 | 72.85 | 12.67 | 54.19 | 89.23 | 48.3 | 289.82 |

UCM Dataset

| Method | Text R@1 | Text R@5 | Text R@10 | Image R@1 | Image R@5 | Image R@10 | mR | R@sum |
|---|---|---|---|---|---|---|---|---|
| VSE++ | 24.14 | 53.45 | 67.24 | 6.21 | 33.56 | 51.03 | 39.27 | 235.63 |
| SCAN t2i | 18.97 | 51.72 | 74.14 | 17.59 | 56.9 | 76.21 | 49.26 | 295.53 |
| SCAN i2t | 20.69 | 55.17 | 67.24 | 15.52 | 57.59 | 76.21 | 48.74 | 292.42 |
| MTFN | 20.69 | 51.72 | 68.97 | 13.79 | 55.51 | 77.59 | 48.05 | 288.27 |
| AMFMN-soft | 20.69 | 51.72 | 74.14 | 15.17 | 58.62 | 80 | 50.06 | 300.34 |
| AMFMN-fusion | 24.14 | 51.72 | 75.86 | 14.83 | 56.55 | 77.89 | 50.17 | 300.99 |
| AMFMN-sim | 29.31 | 58.62 | 67.24 | 13.45 | 60 | 81.72 | 51.72 | 310.34 |
| CABIR | 32.76 | 65.52 | 79.31 | 19.66 | 57.59 | 78.51 | 55.53 | 333.15 |
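The Text Retrieval and Image Retrieval columns in the tables above are Recall@K values: the percentage of queries whose ground-truth match appears among the top K results. The sketch below computes them from an image-caption similarity matrix, together with mR (the mean of the six recalls) and R@sum (their sum). It is a minimal NumPy illustration under assumptions: each image has the same number of captions, stored contiguously so that caption j belongs to image j // caps_per_image, and for text retrieval an image query counts as a hit if any of its captions is ranked within the top K. The function name `recall_at_k` and the toy matrix are hypothetical.

```python
import numpy as np

def recall_at_k(sim, caps_per_image=5, ks=(1, 5, 10)):
    """sim: (N_images, N_images * caps_per_image) similarity matrix.
    Returns (text-retrieval recalls, image-retrieval recalls, mR, R@sum) in percent."""
    n_img, n_cap = sim.shape
    owner = np.arange(n_cap) // caps_per_image   # image index of each caption
    # Text retrieval (image query): 0-based rank of the best-ranked correct caption.
    i2t_ranks = np.empty(n_img, dtype=int)
    for i in range(n_img):
        order = np.argsort(-sim[i])
        i2t_ranks[i] = np.where(owner[order] == i)[0].min()
    # Image retrieval (caption query): 0-based rank of the single correct image.
    t2i_ranks = np.empty(n_cap, dtype=int)
    for j in range(n_cap):
        order = np.argsort(-sim[:, j])
        t2i_ranks[j] = np.where(order == owner[j])[0][0]
    i2t = [100.0 * np.mean(i2t_ranks < k) for k in ks]
    t2i = [100.0 * np.mean(t2i_ranks < k) for k in ks]
    r_sum = sum(i2t) + sum(t2i)
    return i2t, t2i, r_sum / (2 * len(ks)), r_sum

# Toy example: 2 images with 2 captions each, evaluated at K = 1 and K = 2.
sim = np.array([[0.9, 0.8, 0.1, 0.95],
                [0.2, 0.3, 0.7, 0.6]])
i2t, t2i, mr, rsum = recall_at_k(sim, caps_per_image=2, ks=(1, 2))
print(i2t, t2i, mr, rsum)
```

As a consistency check on the R@sum definition, the six RSICD recalls of CABIR in the first table (8.59, 16.27, 24.13, 5.42, 20.77, 33.58) sum to 108.76, the reported R@sum.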

RSICD Dataset

| Method | Text R@1 | Text R@5 | Text R@10 | Image R@1 | Image R@5 | Image R@10 | mR | R@sum |
|---|---|---|---|---|---|---|---|---|
| NO Attention | 4.39 | 10.24 | 17 | 3.27 | 15.04 | 28.12 | 13.01 | 78.06 |
| NO BERT | 9.05 | 15.9 | 22.03 | 4.86 | 16.98 | 29.52 | 16.39 | 98.34 |
| NO GRU | 7.86 | 14.63 | 22.21 | 4.92 | 18.88 | 31.3 | 16.63 | 99.8 |
| NO Temperature | 6.3 | 14.16 | 21.85 | 5.13 | 19.07 | 32.34 | 16.48 | 98.85 |
| Freeze Rse152 | 6.39 | 12.34 | 21.76 | 3.91 | 15.87 | 27.77 | 14.67 | 88.04 |
| Freeze BERT | 7.13 | 11.33 | 16.73 | 2.72 | 12.27 | 29.83 | 12 | 80.01 |
| CABIR | 8.59 | 16.27 | 24.13 | 5.42 | 20.77 | 33.58 | 18.12 | 108.76 |
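One way to read the ablation table is as the drop in R@sum that each modification causes relative to the full CABIR model. The short script below simply copies the R@sum column from the table above and ranks the variants by that drop; it adds no new measurements.

```python
# R@sum values copied from the ablation table (RSICD dataset).
r_sum = {
    "CABIR": 108.76,
    "NO Attention": 78.06,
    "NO BERT": 98.34,
    "NO GRU": 99.80,
    "NO Temperature": 98.85,
    "Freeze Rse152": 88.04,
    "Freeze BERT": 80.01,
}

full = r_sum["CABIR"]
# Drop in R@sum caused by each change, rounded to the table's precision.
drops = {name: round(full - value, 2) for name, value in r_sum.items() if name != "CABIR"}

for name, drop in sorted(drops.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<15} -{drop:.2f}")
```

Sorted this way, replacing the CAL with global average pooling costs the most (30.70), followed by freezing BERT (28.75) and freezing Rse152 (20.72), while removing the GRU costs the least (8.96).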

| Text for Retrieval | Target Image | Results Ranking (No Attention) | Results Ranking (With Attention) |
|---|---|---|---|
| a plane is parked on the apron | Figure 6a | 3 | 3 |
| there are some roads in the large brown farmland | Figure 6a | 6 | 5 |
| there is a large area of neat red buildings in the residential area | Figure 6b | 4 | 2 |
| a playground with several basketball fields | Figure 6b | 5 | 5 |
| beside the highway is a dense residential area | Figure 6c | 13 | 9 |
| next to the sea is a large port | Figure 6c | 5 | 4 |
| There are many vehicles parked in the parking lot around | Figure 6d | 10 | 9 |
| The center is a gray square building | Figure 6d | 1 | 1 |
| There is a forest beside the large farmland | Figure 6e | 22 | 7 |
| a large number of tall trees are planted on both sides of the river | Figure 6e | 3 | 2 |
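A quick tally of the rankings in the table above summarises the effect of attention on these multi-scene queries. The rank lists are copied from the table in row order; a lower rank means the target image was retrieved closer to the top.

```python
# Rank of the target image for each of the ten queries, in table row order.
no_attention = [3, 6, 4, 5, 13, 5, 10, 1, 22, 3]
with_attention = [3, 5, 2, 5, 9, 4, 9, 1, 7, 2]

mean_no = sum(no_attention) / len(no_attention)
mean_with = sum(with_attention) / len(with_attention)
improved = sum(w < n for w, n in zip(with_attention, no_attention))
unchanged = sum(w == n for w, n in zip(with_attention, no_attention))

print(f"mean rank without attention: {mean_no:.1f}")
print(f"mean rank with attention:    {mean_with:.1f}")
print(f"improved: {improved}, unchanged: {unchanged}")
```

The mean rank falls from 7.2 to 4.7; seven of the ten queries improve, three keep the same rank, and none gets worse.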

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, F.; Li, W.; Wang, X.; Wang, L.; Zhang, X.; Zhang, H. A Cross-Attention Mechanism Based on Regional-Level Semantic Features of Images for Cross-Modal Text-Image Retrieval in Remote Sensing. Appl. Sci. 2022, 12, 12221. https://doi.org/10.3390/app122312221
