Deep Transformer Language Models for Arabic Text Summarization: A Comparison Study

Abstract

1. Introduction

- A thorough comparison study of all existing abstractive TLM-based Arabic and Arabic-supporting multilingual ATS systems, using various evaluation metrics.

- Empirically studying the impact of fine-tuning the TLMs for Arabic ATS on the resulting output summary.

- Empirically studying the performance of TLMs and deep-learning-based Arabic ATS systems.

2. Background and Related Work

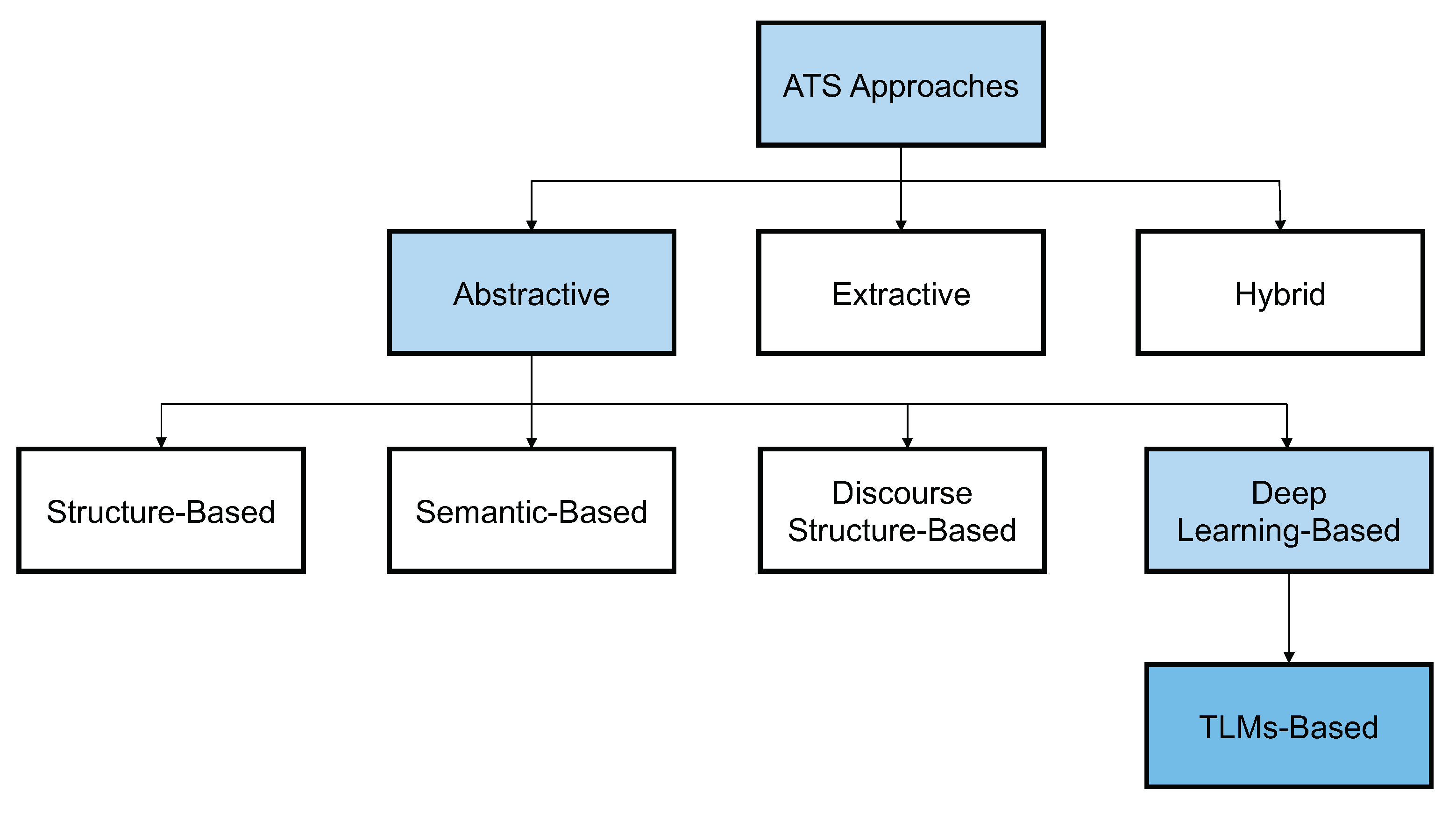

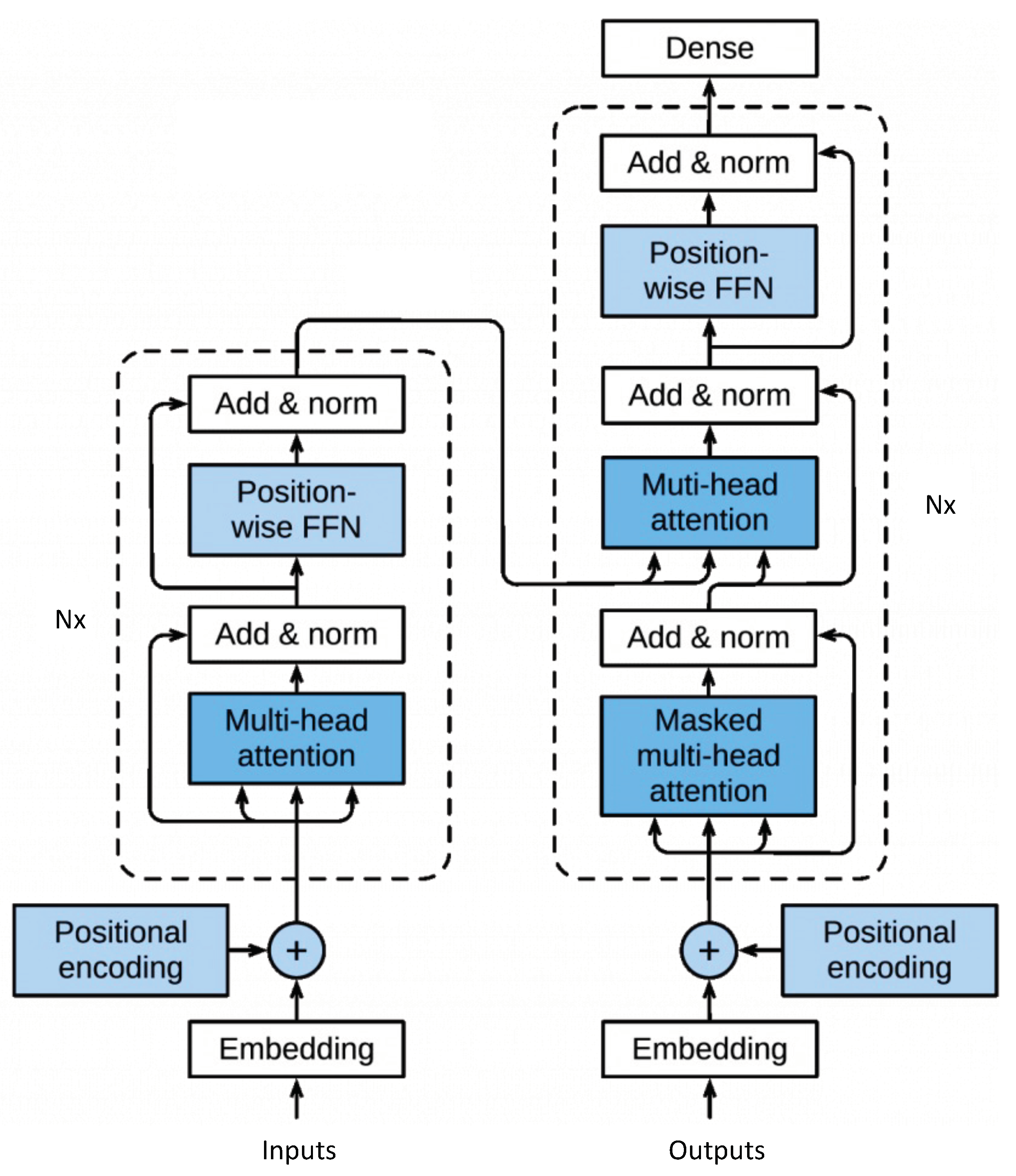

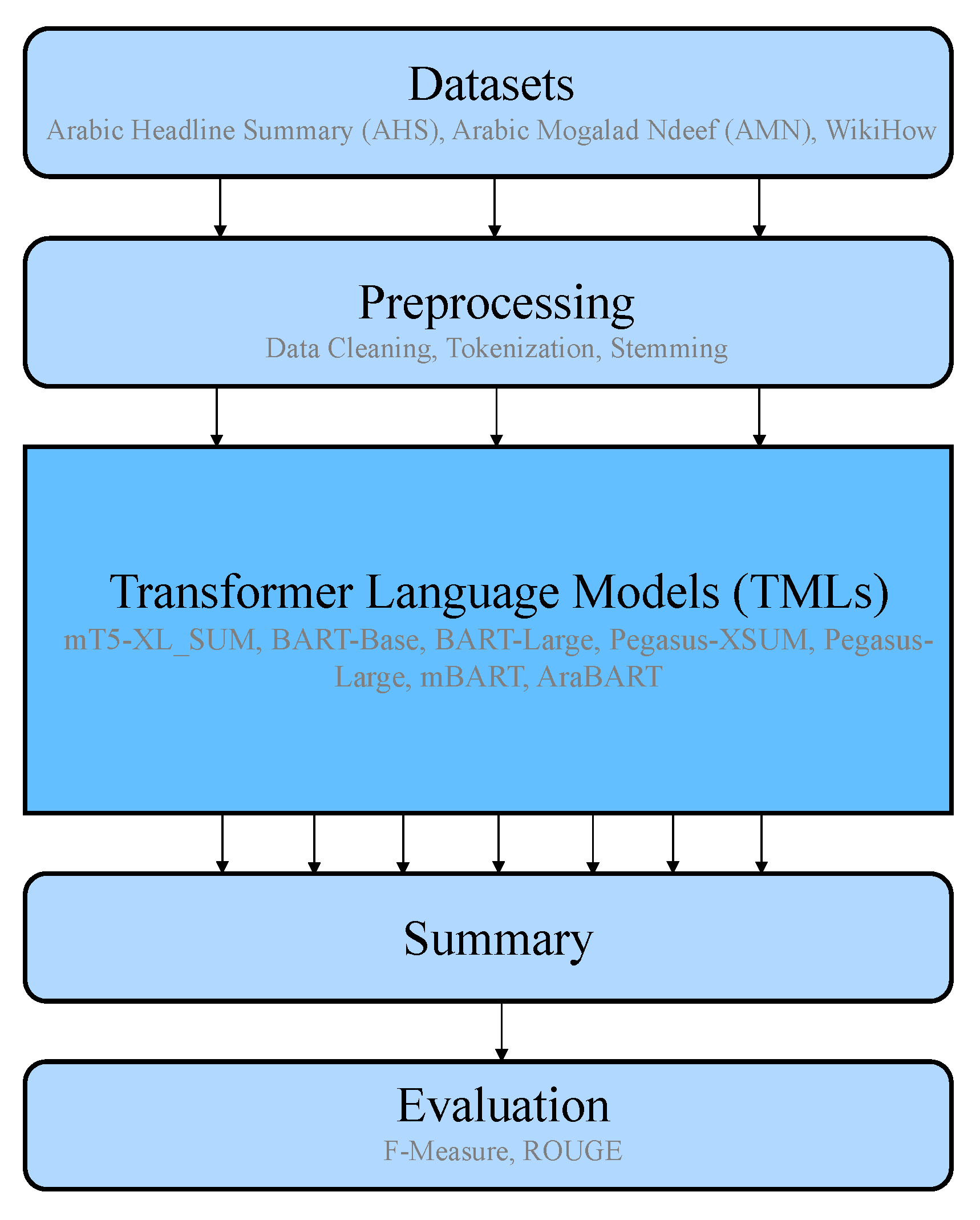

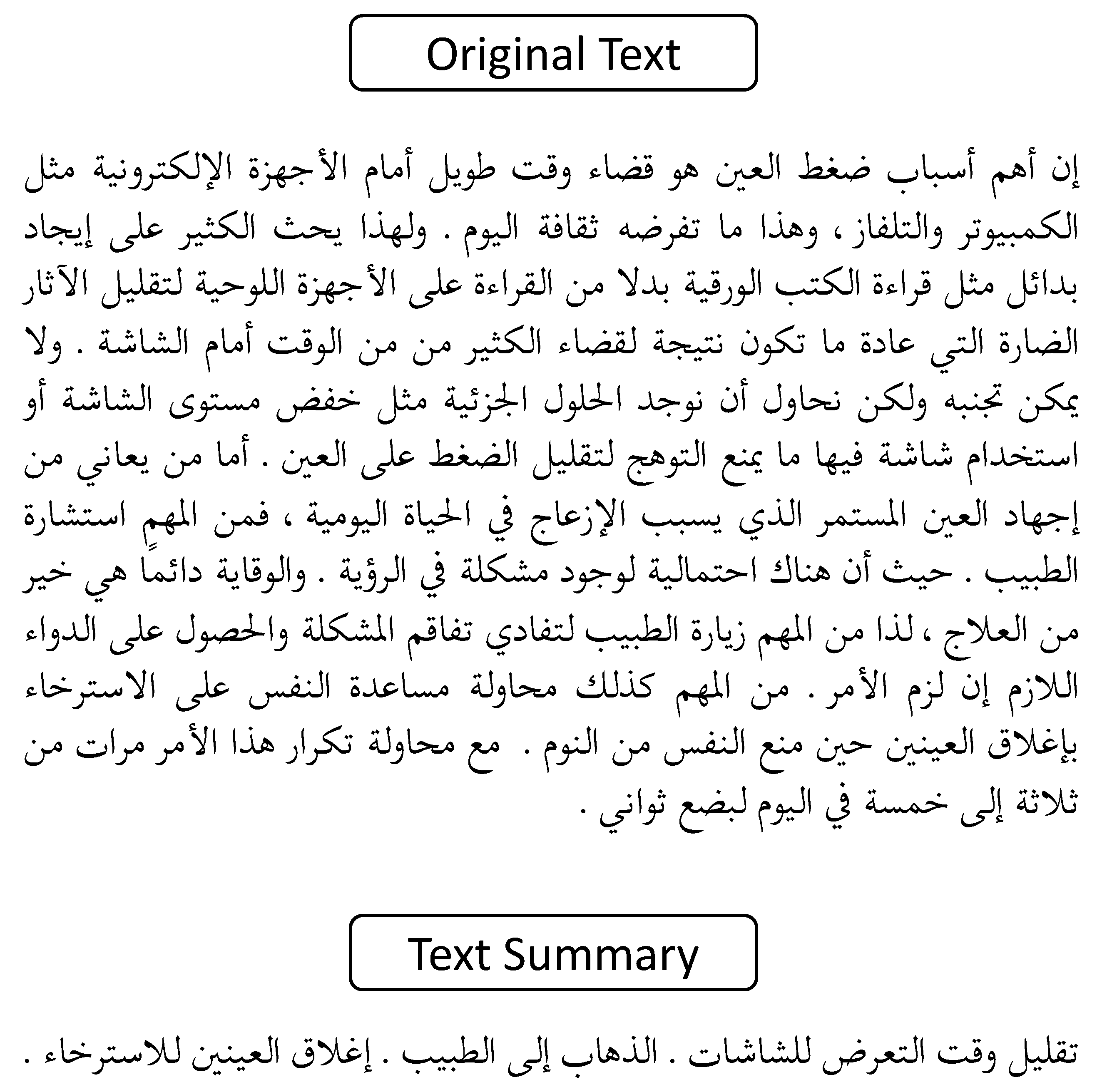

3. Text Summarization Methodology

4. Experiments

4.1. Arabic ATS Datasets

- Arabic Headline Summary (AHS) [13]. It is used for single-document abstractive summarization. Its source is news from the Mawdoo3 website [15], and it contains 300k texts. The opening sentences (introduction paragraph) of each text are treated as the source document, and the title serves as the summary.

- WikiHow Dataset [50]. It includes 770,000 WikiHow article and summary pairs in 18 different languages. It targets single-document abstractive summarization and contains 29,229 Arabic texts.

- Arabic News Articles (ANA) [26]. This large dataset of 265k news articles combines multiple Arabic news datasets (Arabic News and Saudi Newspapers). Each article in the dataset has one summary.
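The AHS convention described above (introduction paragraph as the source, title as the summary) can be sketched as follows. This is a minimal illustration only: the `SummaryPair` type, the `make_headline_pair` helper, and the sample article are hypothetical and are not taken from the dataset's own tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SummaryPair:
    document: str  # text given to the summarizer
    summary: str   # reference summary


def make_headline_pair(title: str, body: str) -> SummaryPair:
    """Use the first non-empty paragraph as the document and the title as the summary."""
    paragraphs = [p.strip() for p in body.split("\n\n") if p.strip()]
    if not paragraphs:
        raise ValueError("article body has no text")
    return SummaryPair(document=paragraphs[0], summary=title.strip())


# Hypothetical article record, for illustration only.
article = {
    "title": " A short headline ",
    "body": "Opening paragraph of the article.\n\nSecond paragraph.",
}
pair = make_headline_pair(article["title"], article["body"])
print(pair)
```

Paragraphs are assumed to be separated by a blank line; a real corpus may need a different splitting rule.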

4.2. Used Transformer Language Models (TLMs)

- mBART: Following BART, mBART [47] is a seq2seq model pre-trained with a denoising objective. Its architecture is a standard seq2seq Transformer that combines an encoder and a decoder. The pre-training task corrupts the input in two ways: spans of text are replaced with a single mask token, and the order of the original sentences is randomly permuted. The model is trained to reconstruct the original text, so the training target is the input itself and the model input is an altered copy of it. The encoder receives the embedding of the input sequence, and the decoder produces the output autoregressively, which allows the model to be fine-tuned for sequence generation tasks such as text summarization. BART was pre-trained only on English, whereas mBART investigated the effect of pre-training on many languages (e.g., Japanese, French, German, and Arabic). It uses a standard sequence-to-sequence Transformer with 12 encoder layers, 12 decoder layers, and 16 attention heads (around 680 M parameters). A layer-normalization layer added on top of both the encoder and the decoder stabilizes training.

- mT5: The mT5 [51] model is an extended, multilingual version of T5 and rests on the same principle of transfer learning. T5 was first pre-trained on a text-rich task and then fine-tuned on a downstream task, so that the model develops general-purpose abilities and knowledge that carry over to tasks such as summarization. T5 uses a sequence-to-sequence generation approach: the encoded input is fed to the decoder through cross-attention layers, and the decoder produces the output autoregressively. T5 was pre-trained only on English, whereas mT5 examined the effect of pre-training on a wide range of natural languages, including Arabic.

- PEGASUS: PEGASUS [48] is a sequence-to-sequence model whose pre-training objective removes important sentences from the input text and generates them together as a single output sequence from the remaining text. Selecting important sentences works better than selecting sentences at random. Because this objective resembles reading a complete document and producing a summary, it is well suited to abstractive summarization.

- AraBART: The architecture of AraBART [11] is based on that of BART Base, with 6 encoder layers, 6 decoder layers, and a hidden dimension of 768, for a total of 139 M parameters. To stabilize training, it has a normalization layer on top of the encoder and the decoder. AraBART builds its vocabulary with SentencePiece; the SentencePiece model was trained on a randomly chosen 20 GB subset of the pre-training corpus. The vocabulary contains 50 K tokens.
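To make the PEGASUS pre-training idea concrete, the sketch below builds one gap-sentence training example. It is a simplified illustration, not the PEGASUS implementation: sentence importance is approximated here by a unigram-overlap F1 between each sentence and the rest of the document (a stand-in for ROUGE-1), the `\w+` tokenizer is an assumption, and the names `MASK`, `unigram_f1`, and `gap_sentence_example` are hypothetical.

```python
import re
from collections import Counter

MASK = "<mask_1>"  # placeholder string chosen for this sketch


def tokens(text):
    """Lowercased word tokens; a deliberately simple tokenizer."""
    return re.findall(r"\w+", text.lower())


def unigram_f1(candidate, reference):
    """F1 of the unigram overlap between two token lists."""
    c, r = Counter(candidate), Counter(reference)
    overlap = sum((c & r).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(c.values())
    recall = overlap / sum(r.values())
    return 2 * precision * recall / (precision + recall)


def gap_sentence_example(sentences, num_gaps=1):
    """Mask the highest-scoring sentences; return (source, target) strings."""
    tokenized = [tokens(s) for s in sentences]
    scores = []
    for i, sent in enumerate(tokenized):
        rest = [t for j, other in enumerate(tokenized) if j != i for t in other]
        scores.append(unigram_f1(sent, rest))
    # Highest score first; ties are broken by document order.
    ranked = sorted(range(len(sentences)), key=lambda i: (-scores[i], i))
    chosen = sorted(ranked[:num_gaps])
    source = " ".join(MASK if i in chosen else s for i, s in enumerate(sentences))
    target = " ".join(sentences[i] for i in chosen)
    return source, target


doc = [
    "The model reads the document.",
    "The model writes a summary of the document.",
    "A summary is short.",
]
source, target = gap_sentence_example(doc, num_gaps=1)
print(source)  # The model reads the document. <mask_1> A summary is short.
print(target)  # The model writes a summary of the document.
```

The second sentence shares the most words with the rest of the document, so it is the one that is masked and becomes the generation target.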

4.3. Experimental Setup

- Tokenization, to split the input texts into tokens.

- The document is then corrupted by replacing spans of text with the “MASK” token.

- Every token is mapped to an index according to the pre-trained model's vocabulary.
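The three steps above can be sketched in plain Python. This is a toy illustration under stated assumptions: whitespace splitting stands in for the subword tokenizers that the actual models use, the span positions are fixed by hand rather than sampled, and the vocabulary, the `<unk>` symbol, and all function names are hypothetical.

```python
MASK = "MASK"   # mask symbol, as named in the steps above
UNK = "<unk>"   # hypothetical symbol for out-of-vocabulary tokens


def tokenize(text):
    """Step 1: split the input text into tokens (whitespace split as a stand-in)."""
    return text.split()


def mask_spans(tokens, spans):
    """Step 2: replace each non-overlapping [start, end) span with one MASK token."""
    out, pos = [], 0
    for start, end in sorted(spans):
        out.extend(tokens[pos:start])
        out.append(MASK)
        pos = end
    out.extend(tokens[pos:])
    return out


def to_ids(tokens, vocab):
    """Step 3: map every token to its index in the vocabulary."""
    return [vocab.get(t, vocab[UNK]) for t in tokens]


# Toy vocabulary, for illustration only.
vocab = {UNK: 0, MASK: 1, "the": 2, "fox": 3, "jumps": 4, "over": 5}

tokens = tokenize("the quick brown fox jumps over the lazy dog")
corrupted = mask_spans(tokens, spans=[(1, 3), (6, 8)])
ids = to_ids(corrupted, vocab)
print(corrupted)  # ['the', 'MASK', 'fox', 'jumps', 'over', 'MASK', 'dog']
print(ids)        # [2, 1, 3, 4, 5, 1, 0]
```

Each masked span collapses to a single token regardless of its length, so the corrupted sequence is shorter than the original.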

4.4. Evaluation
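The result tables report ROUGE-1, ROUGE-2, and ROUGE-L F-scores. As a rough guide to what these numbers measure, the sketch below computes them for one candidate and one reference summary. It is a simplified illustration rather than the evaluation code used in the study: it assumes whitespace tokens, a single reference, a balanced F-score, and no stemming, and it does not cover ROUGE-LSUM.

```python
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def f_score(overlap, cand_total, ref_total):
    """Balanced F-score from an overlap count and the two totals."""
    if overlap == 0 or cand_total == 0 or ref_total == 0:
        return 0.0
    precision = overlap / cand_total
    recall = overlap / ref_total
    return 2 * precision * recall / (precision + recall)


def rouge_n(candidate, reference, n):
    """ROUGE-N: F-score over clipped n-gram matches."""
    c, r = ngrams(candidate, n), ngrams(reference, n)
    overlap = sum((c & r).values())
    return f_score(overlap, sum(c.values()), sum(r.values()))


def lcs_length(a, b):
    """Length of the longest common subsequence (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, 1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rouge_l(candidate, reference):
    """ROUGE-L: F-score based on the longest common subsequence."""
    return f_score(lcs_length(candidate, reference), len(candidate), len(reference))


reference = "the cat sat on the mat".split()
candidate = "the cat lay on the mat".split()
r1 = rouge_n(candidate, reference, 1)
r2 = rouge_n(candidate, reference, 2)
rl = rouge_l(candidate, reference)
print(f"ROUGE-1 {r1:.2%}  ROUGE-2 {r2:.2%}  ROUGE-L {rl:.2%}")
```

In this example five of six unigrams and three of five bigrams match, and the longest common subsequence has length five.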

5. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Saggion, H.; Poibeau, T. Automatic text summarization: Past, present and future. In Multi-Source, Multilingual Information Extraction and Summarization; Springer: Berlin/Heidelberg, Germany, 2013; pp. 3–21.

- Rahul; Rauniyar, S.; Monika. A survey on deep learning based various methods analysis of text summarization. In Proceedings of the 2020 International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 26–28 February 2020; pp. 113–116.

- Fejer, H.N.; Omar, N. Automatic Arabic text summarization using clustering and keyphrase extraction. In Proceedings of the 6th International Conference on Information Technology and Multimedia, Putrajaya, Malaysia, 18–20 November 2014; pp. 293–298.

- Syed, A.A.; Gaol, F.L.; Matsuo, T. A survey of the state-of-the-art models in neural abstractive text summarization. IEEE Access 2021, 9, 13248–13265.

- Siragusa, G.; Robaldo, L. Sentence Graph Attention For Content-Aware Summarization. Appl. Sci. 2022, 12, 10382.

- Allahyari, M.; Pouriyeh, S.; Assefi, M.; Safaei, S.; Trippe, E.D.; Gutierrez, J.B.; Kochut, K. Text summarization techniques: A brief survey. arXiv 2017, arXiv:1707.02268.

- Witte, R.; Krestel, R.; Bergler, S. Generating update summaries for DUC 2007. In Proceedings of the Document Understanding Conference, Rochester, NY, USA, 26–27 April 2007; pp. 1–5.

- Fatima, Z.; Zardari, S.; Fahim, M.; Andleeb Siddiqui, M.; Ibrahim, A.; Ag, A.; Nisar, K.; Naz, L.F. A Novel Approach for Semantic Extractive Text Summarization. Appl. Sci. 2022, 12, 4479.

- Elsaid, A.; Mohammed, A.; Fattouh, L.; Sakre, M. A Comprehensive Review of Arabic Text summarization. IEEE Access 2022, 10, 38012–38030.

- Boudad, N.; Faizi, R.; Thami, R.O.H.; Chiheb, R. Sentiment analysis in Arabic: A review of the literature. Ain Shams Eng. J. 2018, 9, 2479–2490.

- Kamal Eddine, M.; Tomeh, N.; Habash, N.; Le Roux, J.; Vazirgiannis, M. AraBART: A Pretrained Arabic Sequence-to-Sequence Model for Abstractive Summarization. arXiv 2022, arXiv:2203.10945.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; Volume 27.

- Al-Maleh, M.; Desouki, S. Arabic text summarization using deep learning approach. J. Big Data 2020, 7, 1–17.

- See, A.; Liu, P.J.; Manning, C.D. Get to the point: Summarization with pointer-generator networks. arXiv 2017, arXiv:1704.04368.

- Wazery, Y.M.; Saleh, M.E.; Alharbi, A.; Ali, A.A. Abstractive Arabic Text Summarization Based on Deep Learning. Comput. Intell. Neurosci. 2022, 2022, 1566890.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 7871–7880.

- Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.R.; Le, Q.V. XLNet: Generalized autoregressive pretraining for language understanding. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

- Xu, S.; Zhang, X.; Wu, Y.; Wei, F. Sequence level contrastive learning for text summarization. Proc. AAAI Conf. Artif. Intell. 2022, 36, 11556–11565.

- González, J.Á.; Hurtado, L.F.; Pla, F. Transformer based contextualization of pre-trained word embeddings for irony detection in Twitter. Inf. Process. Manag. 2020, 57, 102262.

- Meškelė, D.; Frasincar, F. ALDONAr: A hybrid solution for sentence-level aspect-based sentiment analysis using a lexicalized domain ontology and a regularized neural attention model. Inf. Process. Manag. 2020, 57, 102211.

- Kahla, M.; Yang, Z.G.; Novák, A. Cross-lingual fine-tuning for abstractive Arabic text summarization. In Proceedings of the International Conference on Recent Advances in Natural Language Processing (RANLP 2021), Online, 1–3 September 2021; pp. 655–663.

- Zaki, A.M.; Khalil, M.I.; Abbas, H.M. Deep architectures for abstractive text summarization in multiple languages. In Proceedings of the 2019 14th International Conference on Computer Engineering and Systems (ICCES), Cairo, Egypt, 17–18 December 2019; pp. 22–27.

- Edmundson, H.P. New methods in automatic extracting. J. ACM 1969, 16, 264–285.

- Mohan, M.J.; Sunitha, C.; Ganesh, A.; Jaya, A. A study on ontology based abstractive summarization. Procedia Comput. Sci. 2016, 87, 32–37.

- El-Kassas, W.S.; Salama, C.R.; Rafea, A.A.; Mohamed, H.K. Automatic text summarization: A comprehensive survey. Expert Syst. Appl. 2021, 165, 113679.

- Hou, L.; Hu, P.; Bei, C. Abstractive document summarization via neural model with joint attention. In Proceedings of the National CCF Conference on Natural Language Processing and Chinese Computing, Dalian, China, 8–12 November 2017; pp. 329–338.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Chen, Q.; Zhu, X.; Ling, Z.; Wei, S.; Jiang, H. Distraction-based neural networks for document summarization. arXiv 2016, arXiv:1610.08462.

- Gu, J.; Lu, Z.; Li, H.; Li, V.O. Incorporating copying mechanism in sequence-to-sequence learning. arXiv 2016, arXiv:1603.06393.

- Hu, B.; Chen, Q.; Zhu, F. LCSTS: A Large Scale Chinese Short Text Summarization Dataset. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1967–1972.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Elmadani, K.N.; Elgezouli, M.; Showk, A. BERT Fine-tuning For Arabic Text Summarization. arXiv 2020, arXiv:2004.14135.

- Abu Nada, A.M.; Alajrami, E.; Al-Saqqa, A.A.; Abu-Naser, S.S. Arabic text summarization using AraBERT model using extractive text summarization approach. Int. J. Acad. Inf. Syst. Res. 2020, 4, 6–9.

- El-Haj, M.; Koulali, R. KALIMAT a multipurpose Arabic Corpus. In Proceedings of the Second Workshop on Arabic Corpus Linguistics (WACL-2), Lancaster, UK, 22 January 2013; pp. 22–25.

- Al-Abdallah, R.Z.; Al-Taani, A.T. Arabic single-document text summarization using particle swarm optimization algorithm. Procedia Comput. Sci. 2017, 117, 30–37.

- Bhat, I.K.; Mohd, M.; Hashmy, R. Sumitup: A hybrid single-document text summarizer. In Soft Computing: Theories and Applications; Springer: Berlin/Heidelberg, Germany, 2018; pp. 619–634.

- Martin, L.; Muller, B.; Suárez, P.J.O.; Dupont, Y.; Romary, L.; de La Clergerie, É.V.; Seddah, D.; Sagot, B. CamemBERT: A tasty French language model. arXiv 2019, arXiv:1911.03894.

- Safaya, A.; Abdullatif, M.; Yuret, D. KUISAIL at SemEval-2020 Task 12: BERT-CNN for Offensive Speech Identification in Social Media. In Proceedings of the Fourteenth Workshop on Semantic Evaluation, Barcelona, Spain, 12–13 December 2020.

- Antoun, W.; Baly, F.; Hajj, H. AraBERT: Transformer-based model for Arabic language understanding. arXiv 2020, arXiv:2003.00104.

- Inoue, G.; Alhafni, B.; Baimukan, N.; Bouamor, H.; Habash, N. The interplay of variant, size, and task type in Arabic pre-trained language models. arXiv 2021, arXiv:2103.06678.

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving language understanding by generative pre-training. Technical Report, OpenAI. 2018, pp. 1–12. Available online: https://s3-us-west-2.amazonaws.com/openai-assets/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 14 November 2022).

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

- Liu, Y.; Gu, J.; Goyal, N.; Li, X.; Edunov, S.; Ghazvininejad, M.; Lewis, M.; Zettlemoyer, L. Multilingual denoising pre-training for neural machine translation. Trans. Assoc. Comput. Linguist. 2020, 8, 726–742.

- Zhang, J.; Zhao, Y.; Saleh, M.; Liu, P. PEGASUS: Pre-training with extracted gap-sentences for abstractive summarization. In Proceedings of the International Conference on Machine Learning, Virtual Event, 13–18 July 2020; pp. 11328–11339.

- Hasan, T.; Bhattacharjee, A.; Islam, M.S.; Samin, K.; Li, Y.F.; Kang, Y.B.; Rahman, M.S.; Shahriyar, R. XL-Sum: Large-scale multilingual abstractive summarization for 44 languages. arXiv 2021, arXiv:2106.13822.

- Ladhak, F.; Durmus, E.; Cardie, C.; McKeown, K. WikiLingua: A new benchmark dataset for cross-lingual abstractive summarization. arXiv 2020, arXiv:2010.03093.

- Xue, L.; Constant, N.; Roberts, A.; Kale, M.; Al-Rfou, R.; Siddhant, A.; Barua, A.; Raffel, C. mT5: A massively multilingual pre-trained text-to-text transformer. arXiv 2020, arXiv:2010.11934.

- Lin, C.Y. ROUGE: A package for automatic evaluation of summaries. In Text Summarization Branches Out; ACL Anthology: Barcelona, Spain, 2004; pp. 74–81.

- Lin, C.Y. ROUGE: A package for automatic evaluation of summaries. In Proceedings of the Workshop on Text Summarization of ACL, Barcelona, Spain, 25–26 July 2004.

- Duchi, J.; Hazan, E.; Singer, Y. Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 2011, 12, 2121–2159.

| Models | Layers | Parameters | Vocab Size | Epochs | Batch Size |
|---|---|---|---|---|---|
| mT5 | 6 | 600 M | 250 K | 3 | 6 |
| PEGASUS-XSum | 16 | 568 M | 96 K | 3 | 6 |
| PEGASUS-Large | 16 | 568 M | 96 K | 3 | 6 |
| mBART-Large | 12 | 680 M | 250 K | 3 | 6 |
| AraBART | 6 | 139 M | 50 K | 3 | 6 |

| Models | ROUGE-1 (F-score, %) | ROUGE-2 (F-score, %) | ROUGE-L (F-score, %) | ROUGE-LSUM (F-score, %) |
|---|---|---|---|---|
| mT5 | 83.34 | 58.58 | 76.85 | 77.78 |
| PEGASUS-XSum | 88.89 | 75.75 | 84.57 | 84.88 |
| PEGASUS-Large | 88.27 | 73.74 | 83.95 | 84.25 |
| mBART-Large | 26.23 | 8.82 | 25.92 | 25.92 |
| AraBART | 85.83 | 70.90 | 85.01 | 85.01 |

| Models | ROUGE-1 (F-score, %) | ROUGE-2 (F-score, %) | ROUGE-L (F-score, %) | ROUGE-LSUM (F-score, %) |
|---|---|---|---|---|
| mT5 | 48.74 | 31.22 | 46.45 | 46.58 |
| PEGASUS-XSum | 66.70 | 58.41 | 66.50 | 66.50 |
| PEGASUS-Large | 58.37 | 48.63 | 58.16 | 58.16 |
| mBART-Large | 27.70 | 9.81 | 27.70 | 27.70 |
| AraBART | 34.74 | 17.50 | 34.08 | 34.08 |

| Models | ROUGE-1 (F-score, %) | ROUGE-2 (F-score, %) | ROUGE-L (F-score, %) | ROUGE-LSUM (F-score, %) |
|---|---|---|---|---|
| mT5 | 53.16 | 25.54 | 50.66 | 51.00 |
| PEGASUS-XSum | 94.49 | 88.63 | 94.45 | 94.41 |
| PEGASUS-Large | 94.62 | 88.72 | 94.58 | 94.58 |
| mBART-Large | 31.91 | 5.90 | 30.45 | 30.58 |
| AraBART | 44.12 | 12.18 | 42.16 | 42.12 |

| Models | ROUGE-1 (F-score, %) | ROUGE-2 (F-score, %) | ROUGE-L (F-score, %) |
|---|---|---|---|
| BiLSTM | 51.49 | 12.27 | 34.37 |
| PEGASUS-XSum | 66.70 | 58.41 | 66.50 |

| Models | ROUGE-1 (F-score, %) | ROUGE-2 (F-score, %) | ROUGE-L (F-score, %) |
|---|---|---|---|
| BiLSTM | 44.28 | 18.35 | 32.46 |
| PEGASUS-XSum | 88.89 | 75.75 | 84.57 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chouikhi, H.; Alsuhaibani, M. Deep Transformer Language Models for Arabic Text Summarization: A Comparison Study. Appl. Sci. 2022, 12, 11944. https://doi.org/10.3390/app122311944
