Abstract

Hand gesturing is one of the most useful non-verbal behaviors in the classroom, and can help students activate multi-sensory channels to complement teachers’ verbal behaviors and ultimately enhance teaching effectiveness. The existing mainstream detection algorithms that can be used to recognize hand gestures suffer from low recognition accuracy under complex backgrounds and different backlight conditions. This study proposes an improved hand gesture recognition framework based on key point statistical transformation features. The proposed framework can effectively reduce the sensitivity of images to background and light conditions. We extract key points of the image and establish a weak classifier to enhance the anti-interference ability of the algorithm in the case of noise and partial occlusion. Then, we use a deep convolutional neural network model with multi-scale feature fusion to recognize teachers’ hand gestures. A series of experiments were conducted on different human gesture datasets to verify the performance of the proposed framework. The results show that the proposed framework achieves better detection and recognition rates than the you only look once (YOLO) algorithm, YOLOv3, and other counterpart algorithms. The proposed framework not only achieved an F1 score of 98.43% for human gesture images in low-light conditions, but also showed good robustness in complex lighting environments. We used the proposed framework to recognize teacher gestures in a case classroom setting, and found that it outperformed the YOLO and YOLOv3 algorithms on small gesture images with respect to recognition performance and robustness.

1. Introduction

Classroom teaching is a kind of educational activity in which teachers use verbal and non-verbal means to articulate their views, transmit learning-related information, and manage classroom interactions, so as to influence students’ knowledge acquisition and create a classroom atmosphere. Studies have shown that classroom success is largely achieved through teachers’ non-verbal behaviors, rather than their verbal behaviors [1]. Non-verbal behaviors generally include teachers’ eye contact, facial expressions, voice variations, and hand gestures, etc. Non-verbal behaviors can help students activate multiple sensory channels to complement teachers’ verbal behaviors, thereby stimulating students’ learning interest, optimizing the teaching atmosphere, enhancing teacher–student interaction, and ultimately improving classroom outcomes [2,3]. In the family of non-verbal cues of the classroom, hand gestures of teachers are a frequently-used non-verbal means for communication compared to other behaviors, because they represent the most common, natural, and expressive form of body language to convey emotions and meanings via human interaction [4]. Thus, it is easy to associate gesture movements with speech and cognitive effort during class [5]. Teachers’ hand gestures in the classroom can be divided into symbolic gestures that indicate standing up or being quiet, depictive gestures that describe the size or shape of an object, and emotional gestures that praise somebody or express feeling happy about something. With the empowerment of information technology and new computing capacity, many scholars have claimed that the estimation of teachers’ hand gestures can be used to identify the specific pedagogical implications of teachers’ behaviors in the classroom. It has been realized that hand gestures can provide a link between representations and ideas, make abstract concepts more concrete, and direct learner attention [6].

Early hand gesture recognition was mainly based on data gloves and computer vision [7]. Data-glove-based hand gesture recognition requires a large number of sensors, which is difficult to achieve in real classroom scenarios. Vision-based hand gesture recognition mainly extracts the features of gesture and then classifies them through supervised machine learning algorithms. In order to improve the accuracy of gesture classification, it is generally imperative to derive information from gesture images, such as shape, texture, and context, that can describe the gestures as much as possible. However, the extraction of gesture features is easily affected by the surrounding environment, resulting in a lot of noise in the geometry, texture, and contextual features of gestures. Therefore, although vision-based hand gesture recognition is free from the shackles of data gloves, there are still problems, such as the low recognition accuracy and weak generalization ability of the model. In recent years, some scholars have transformed the hand gesture recognition problem into a target detection problem, and proposed a regional convolutional neural network (R-CNN) [8], which improves the recognition speed and accuracy. Authors in the literature [9] used deep neural networks to detect human gestures and achieved good results. Since they used the gradient descent method, all parameters needed to be adjusted continuously during the training process, so the training time of this method was too long, and the practicability was not very satisfactory. To reduce the training time, Howard et al. in the literature [10] and Zhang et al. in the literature [11] proposed the MobileNet framework and the ShuffleNet framework, respectively, to improve the running speed. However, the design of the relevant network structure paid specific attention to reducing the number of network parameters to improve the detection speed, while ignoring the feature expression ability of the network. 
Authors in the literature [12] combined extreme machine learning and principal component analysis. Although the accuracy of target classification was improved to a certain extent, the algorithm was less robust to noise and had insufficient performance for low-brightness target detection. An Adaboost face detection algorithm based on statistical transformation was proposed in the literature [13]. The algorithm built a weak classifier for each pixel for ensemble training, which can effectively segment similar areas of the image. Since all pixels needed to be trained, the training time of the algorithm increased sharply with the increase in the input image resolution. Furthermore, when the background of the input image is abruptly changed, the accuracy of the algorithm decreases significantly. More recently, scholars proposed you only look once (YOLO), YOLOv2, YOLOv3 deep learning frameworks [14]. These frameworks improved the speed of detection while taking into account the accuracy of small target recognition. However, they often extract deep features by designing deeper network structures, which require high computing power of hardware and cannot be applied to real classroom scenarios.

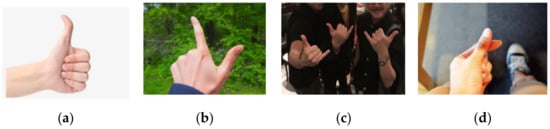

Successful estimation of teachers’ hand gestures is a difficult task because the complexity of teachers’ surrounding environment and backlight differences can significantly affect hand gesture recognition accuracy. This imposes severe restrictions on teachers’ hand gesture recognition in the classroom environment. First, hand gesture pictures taken in complex environments contain a lot of noise, which will be extracted into the feature space and interfere with the recognition of normal images. For example, a gesture with a clean background is shown in Figure 1a, and gestures with complex backgrounds are shown in Figure 1b–d. Clearly, recognizing hand gestures against a complex background is much more difficult than recognizing hand gestures against a clean background. In addition, the recognition accuracy of teachers’ hand gestures is also affected by the light intensity. The current algorithms are mainly divided into color-based detection algorithms, shape-based detection algorithms, and deep-learning-based detection algorithms [15]. Color-based detection is mainly based on RGB and HSV (hue, saturation, value) color spaces, creating a saliency map by segmenting specific colors, which can detect candidate regions quickly and efficiently. However, color-based detection is easily affected by light, and cannot meet the requirements of hand gesture recognition under different backlight conditions [16]. Shape-based detection utilizes the particularity of human gestures in shape to detect their edges. However, when the teacher’s hand gesture is blurred or occluded by motion, the accuracy of the algorithm will decrease greatly.

Figure 1. Gesture images with different backgrounds. (a) presents a gesture with a clean background and (b–d) present gestures with complex backgrounds.

In order to effectively solve the problem of hand gesture recognition under complex backgrounds and different backlight conditions, this paper proposes an improved Adaboost hand gesture recognition algorithm based on key point statistical transformation features, namely modified census transform (MCT) features. First, we extract the MCT features of the image to increase the robustness of the algorithm to illumination changes, enabling the estimation of teachers’ hand gestures under poor lighting. Second, we extract the key points of the image to construct the Adaboost ensemble classifier, which can effectively shorten the training time and meet the needs of real-time detection while improving the algorithm’s anti-occlusion ability. Finally, the extracted target features are fused through a multi-scale neural network, so as to improve the accuracy of teachers’ hand gesture recognition under different backlights.

2. Related Works

2.1. Hand Gestures in Class

Hand gesturing is an effective form of non-verbal communication in the classroom. Many researchers believe that the quality of teacher–student interactions can be promoted if teachers make proper use of hand gestures [17]. Students’ cognitive abilities or learning motivations can be improved when teachers use hand gestures to compensate for the limitations of oral presentation [18]. Hand gestures of teachers are usually defined as spontaneous movements of hands and arms that enhance the student learning process by clarifying and describing the facts. Additionally, teachers can use hand gestures to direct the students’ classroom attention, making their eyes focus on specific instructional elements and course materials to enhance learning achievements. Scholars have suggested that the combination of words and hand gestures can make the class lively and dynamic, and can also change the cognitive resources recruited while learning [19]. Previous studies have used hand gestures as an integral element of instructional communication when teachers performed mathematical instructions [20]. Some studies have examined the relationship between learning efficiency and the number of gestures used. Authors in the literature [21] have reported that adult students used hand gestures more frequently than the native students in the context of language learning. Teachers and students usually increased the number of hand gestures when they experienced difficulty in expressing themselves in the classroom [22]. Other evidence indicates that a class with teachers’ hand gestures is more effective than a class without them [23]. Students tend to take up more instructional information and perform deeper knowledge reasoning. With the help of teachers’ hand gestures, students in the class can better understand the materials and learning activities [24].

Hand gesturing occurs not only in traditional face-to-face instruction, but also in physical–virtual immersive instruction. Authors in the literature [25] used computer vision technology to recognize students’ hand gestures from live images to enable intuitive interactions for interactive cultural learning. Students in the learning environment could mark route maps in 2D and translate, rotate, and scale architecture models in 3D using hand gestures, which enhanced interpersonal communication between teachers and students. Hand gesturing in an immersive learning environment is a fundamental component of human–computer interaction. Authors in the literature [26] developed a hand gesture recognition technology to help teachers to control the multi-screen touchable teaching tools. Teachers can use these tools to slide and call the eraser tool to rub out incorrect content. Authors in the literature [27] investigated the effectiveness of using gesture-based instruction to teach matching skills to school-aged students, and found that students acquired the target skills and maintained them at a high level.

2.2. Recognition of Hand Gestures

Hand gesture recognition can be divided into a static approach and a dynamic approach. The static approach concentrates on the inner information of an image representing the shape or size of an object. This approach typically uses a general classifier or a template matcher, which may include linear learners and non-linear learners depending on whether the data are linearly separable [28]. The recognition of static hand gestures is mainly based on relatively differentiable gestures, which can be quite similar when viewed in two dimensions [29]. The dynamic approach is more complicated than the static approach, and aims to explore the characteristics of spatial–temporal movements, requiring techniques that handle motion-based gesture representation [30]. Both approaches provide facilitative communication and natural interaction means in a diversity of real scenarios. However, they can be significantly different in terms of recognizing static and dynamic hand gestures. The choice mainly relies on the specific hand gesture representation. For example, authors in the literature [31] suggested the use of a contour line Fourier descriptor of a segmentation image obtained by the mean shift algorithm to classify static hand gestures. Other authors in the literature [32] proposed classifying the given hand gesture, represented by the hand contour, into a predefined finite number of gesture classes, such as Open, Close, Cut, Paste, Maximize, and Minimize. In contrast, dynamic hand gestures can be modeled as state transitions with temporal or probabilistic constraints. Therefore, authors in the literature [33] proposed a hidden Gauss–Markov model with a short-term Fourier transform to identify the best likelihood gesture model for a given pattern.

To recognize a hand gesture from images more efficiently, many scholars have concentrated on the power of traditional machine learning algorithms. Recursive induction learning was proposed to use extended variable-valued logic to induce rules from sets of feature vectors [34]. The locally linear embedding (LLE) algorithm was proposed in the literature [35] to map high-dimensional data to low-dimensional space, and tried to discover the intrinsic structure and neighborhood relationship of the data based on similarity measurements. A probabilistic neural network (PNN) was used to classify different gestures based on the adaptive region-based active contour [36]. PNN has been noted for its sound training speed, classification accuracy, and negligible retraining time. Authors in the literature [37] proposed a multi-scale and multi-angle hand gesture recognition algorithm using the single Gaussian model and SVM classifier. They processed the shape and contour of the hand gesture with the distribution of intensity gradients and edge directions so that the algorithm could capture the texture information and improve the rotation invariance of the image. Authors in the literature [38] integrated Bayesian attention, used the visual cortex model to perform feature extraction on the detected gesture regions, and then utilized the SVM classifier for hand gesture recognition. However, these methods are computationally complicated and have a large time overhead, resulting in poor practicability. Most of them fuse hand geometric features, palm prints, and hand veins to represent the hand gestures, but manually designed features are difficult to adapt to the diversity of gestures, resulting in a low gesture recognition accuracy.
Another significant limitation of these methods is that the algorithms are less robust in complex contexts [39], which means the algorithms may work appropriately in one scenario but may not obtain good performance in other scenarios. Although authors in the literature [40] extracted the histogram of oriented gradients (HOG) of an image and used neural networks for gesture recognition, this will affect the gradient at the contour of the gesture when there is a skin-like or skin-colored background in the image, thereby reducing the recognition rate of the algorithm. Authors in the literature [32] used a moment algorithm to describe hand gestures, and used a multi-layer neural network classifier with backpropagation for hand gesture recognition. However, since the extracted features are relatively homogeneous and the classifier structure is simple, the accuracy reported by the authors was not satisfactory in applications with complex backgrounds.

In recent years, the convolutional neural network (CNN) has achieved remarkable results in the field of computer vision. Authors in the literature [41] have shown that the CNN and stacked denoising autoencoder are capable of learning the complex hand gesture classification task with lower error rates. Examples of current research using CNNs in the field of hand gesture recognition are as follows. Authors in the literature [42] used CNN and autoencoder techniques to recognize gestures by converting the image into binary form and using threshold division to obtain gesture regions. Authors in the literature [43] used the skin color model and reconstructed the obtained gesture region into a grayscale image. Finally, they input the gesture region into the CNN for feature extraction and gesture recognition. These works either used threshold detection or skin color detection, which are susceptible to the surrounding environment. When there are complex background disturbances such as illumination and skin color in the image, the performance of the algorithm is seriously degraded. In brief, when there are complex background disturbances such as human skin color in the image, most CNN-based methods cannot learn effective patterns. It should be noted that scholars in the literature [44] have recently studied hand gesture datasets and reported high accuracy based on a hybrid network architecture combined with ensemble classifiers. The architecture used VGG16 as a feature extractor, where the weights for the convolutional layers were adopted from the pre-trained model. However, it may suffer from overfitting as the layers go deeper and more features are extracted in convolutional layers. In addition, random forest was used for ensemble learners. When the noise in the extracted features increases, the reliability of the results of random forest classification will be sharply reduced. 
As a consequence, their proposed architecture cannot work properly on images that have both complex backgrounds and different backlight conditions.

3. Proposed Framework

Both artificial feature extraction and deep-learning-based methods fail to completely improve the recognition of gestures in complex backgrounds. One of the important reasons for this is that complex backgrounds greatly interfere with gesture shape detection. Therefore, the gesture recognition algorithm proposed in this study includes gesture shape detection and gesture recognition.

3.1. Shape Detection Based on Illumination Invariance

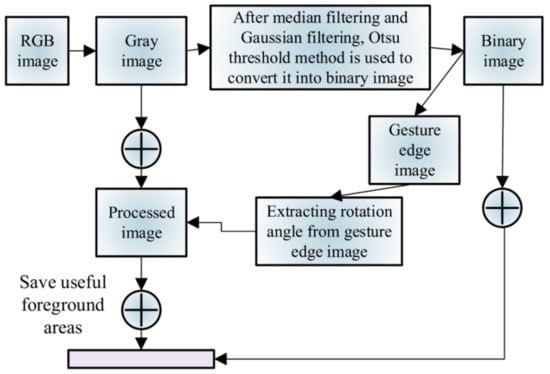

The preprocessing steps of the gesture image are shown in Figure 2. The aim of preprocessing is to deal with the shape detection problem under different lighting conditions. Through this, we can uniformly process the data to align the shape of the gesture for efficient input into the DCNN model. First, we convert the RGB image to a grayscale image to remove the influence of color features; then, we convert the grayscale image to a binary image and select the foreground of the binary image as the extraction region of the gesture. The target data after the preprocessing step are input into the proposed CNN model, which is represented by the purple block in Figure 2.

Figure 2. Preprocessing of gesture images.
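The preprocessing chain described above (RGB to grayscale, grayscale to binary, foreground selection) can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the luma weights, the mean-value threshold, and the bounding-box foreground selection are all assumptions, since the text does not specify how the binarization threshold or the foreground region is chosen. The function names are hypothetical.

```python
from typing import Optional, Tuple

import numpy as np


def rgb_to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 RGB array to grayscale (BT.601 luma weights, assumed)."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb.astype(np.float64) @ weights


def binarize(gray: np.ndarray, threshold: Optional[float] = None) -> np.ndarray:
    """Threshold the grayscale image; the mean gray value is an assumed default."""
    if threshold is None:
        threshold = float(gray.mean())
    return (gray > threshold).astype(np.uint8)


def foreground_bbox(mask: np.ndarray) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, bottom, left, right) of the tightest box around foreground pixels."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return None  # no foreground pixel found
    return int(rows[0]), int(rows[-1]) + 1, int(cols[0]), int(cols[-1]) + 1


def extract_gesture_region(rgb: np.ndarray) -> np.ndarray:
    """Grayscale -> binary -> crop the grayscale image to the foreground box."""
    gray = rgb_to_gray(rgb)
    box = foreground_bbox(binarize(gray))
    if box is None:
        return gray
    top, bottom, left, right = box
    return gray[top:bottom, left:right]
```

In practice, a data-driven threshold (for example Otsu's method) would likely be more robust than the mean; the mean is used here only to keep the sketch dependency-free.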

We use the local binary pattern (LBP) to describe the texture features of gesture images. According to the literature [45], the LBP operator compares the gray value of the center pixel with those of the adjacent pixels in a 3 × 3 window. The local area texture calculation based on LBP is shown in Formula (1).

$$\mathrm{LBP}(x_c, y_c) = \sum_{p=0}^{7} s(i_p - i_c)\, 2^{p} \tag{1}$$

where $i_p$ and $i_c$ represent the gray values of the adjacent points and the center point, respectively, $(x_c, y_c)$ represents the coordinates of the center point, and $s(z) = 1$ if $z \geq 0$ and $s(z) = 0$ otherwise.
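As an illustration of the 3 × 3 LBP operator, the sketch below computes an 8-bit code for every interior pixel by comparing each neighbor with the center. It assumes the common convention that a neighbor greater than or equal to the center contributes a 1 bit; the clockwise neighbor ordering and the function name `lbp_3x3` are arbitrary choices made for this example.

```python
import numpy as np

# Clockwise neighbor offsets (row, col), starting at the top-left pixel (assumed order).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_3x3(gray) -> np.ndarray:
    """Return the LBP code of every interior pixel of a 2D grayscale array."""
    gray = np.asarray(gray, dtype=np.int32)
    h, w = gray.shape
    center = gray[1:-1, 1:-1]
    codes = np.zeros_like(center)
    for bit, (dr, dc) in enumerate(OFFSETS):
        # Shifted view of the image that aligns each neighbor with its center pixel.
        neighbor = gray[1 + dr : h - 1 + dr, 1 + dc : w - 1 + dc]
        codes += (neighbor >= center).astype(np.int32) << bit
    return codes
```

Because only the sign of each difference is kept, adding a constant brightness offset to the whole image leaves the codes unchanged.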

For image samples under different lighting conditions, the brightness at pixel $x$ can be expressed by Formula (2).

$$I(x) = g\,L(x)\,R(x) + p \tag{2}$$

where $I(x)$ is the brightness of pixel $x$, $L(x)$ and $R(x)$ are the light intensity and surface reflectivity, respectively, and $g$ and $p$ are the camera parameters. It can be seen that the brightness of pixel $x$ is determined by the light intensity of the light source and the reflectivity of the object. If the light intensity is kept at a certain value, the relationship between pixel brightness and object reflectivity can be computed by Formula (3).

$$I(x) = c\,R(x) + b \tag{3}$$

where $c$ is the constant factor that results from fixing the light intensity and $b$ is the bias constant. In this case, the brightness of pixel $x$ depends only on the reflectivity of the object. Therefore, the influence of different light intensities in complex environments can be reduced by normalizing the pixels of the input image. In this study, MCT is used as the feature descriptor of image pixels. MCT is a local texture description operator without parameter adjustment. It can detect candidate regions of human gestures, capture the overall structural information of the image, and has strong robustness to illumination and detail transformation.

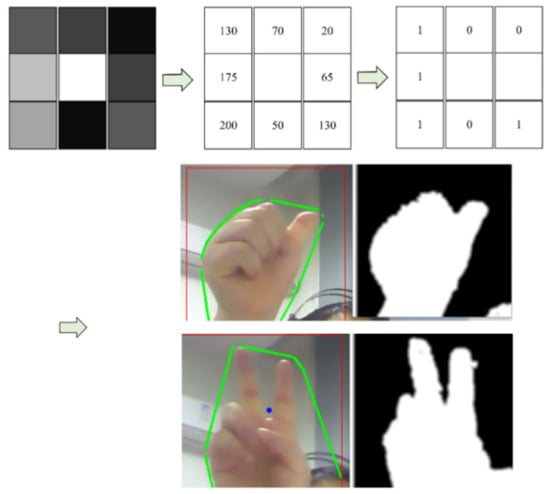

For a 3 × 3 pixel window, MCT compares the gray value of the eight adjacent pixels of the central pixel with the regional average pixel. If the gray value of the pixel is greater than the average gray value, we set it to 1, otherwise we set it to 0; then, we convert any adjacent local block to binary, keeping its value consistent with the central block. Unlike traditional statistical transformation algorithms, MCT compares each pixel value with the average pixel value of a local area, and is not significantly affected by lighting. At the same time, since only the difference and the average value of eight adjacent pixels in the local area are considered, the use of MCT can reduce the influence of noise at the central pixel on image features, which gives strong robustness to complex illumination changes. The MCT calculation process of pixel $x$ is shown in Formula (4).

$$\Gamma(x) = \bigotimes_{i=1}^{N} \zeta\big(I(y_i), \bar{I}(x)\big) \tag{4}$$

where $\Gamma(x)$ is the MCT feature expression, $N$ is the number of adjacent pixels $y_i$ of the central pixel $x$, and $\bar{I}(x)$ is the average pixel gray value of the $N$ pixels. When the comparison $I(y_i) > \bar{I}(x)$ is true, the function $\zeta$ returns 1, otherwise it returns 0. $\bigotimes$ is the arrangement of the conversion results of the $N$ pixels. The calculation process of some samples based on MCT feature expression is shown in Figure 3.

Figure 3. MCT process and results demonstration.
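The MCT variant described in this section can be sketched as follows. The code follows the text literally: the eight neighbors of each pixel are compared with their own average, and a neighbor brighter than the average contributes a 1 bit. The bit ordering and the name `mct_3x3` are assumptions for illustration; note that the classical modified census transform also includes the center pixel in the window, which this sketch deliberately does not.

```python
import numpy as np

# Clockwise neighbor offsets (row, col), starting at the top-left pixel (assumed order).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def mct_3x3(gray) -> np.ndarray:
    """Return an 8-bit MCT-style code for every interior pixel of a 2D array."""
    gray = np.asarray(gray, dtype=np.float64)
    h, w = gray.shape
    # One shifted view per neighbor, each aligned with the interior pixels.
    neighbors = [gray[1 + dr : h - 1 + dr, 1 + dc : w - 1 + dc] for dr, dc in OFFSETS]
    mean = sum(neighbors) / len(neighbors)  # average gray value of the N = 8 neighbors
    codes = np.zeros(mean.shape, dtype=np.int32)
    for bit, nb in enumerate(neighbors):
        codes |= (nb > mean).astype(np.int32) << bit
    return codes
```

Since both sides of each comparison scale identically under a brightness change of the form a·I + b with a > 0, the resulting codes are unaffected by such a change, and a noisy center pixel does not influence its own code.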

3.2. Hand Gesture Recognition

In this study, we use a CNN to learn gesture patterns. A CNN has the characteristics of local connectivity and weight sharing, so that the closer the pixels are, the greater their mutual influence. The calculation of the convolutional layer is shown in Formula (5) [46].

$$y = f(W * x + b) \tag{5}$$

where $f$ is the activation function, $x$ is the matrix of the grayscale image, $*$ is the convolution operation, $W$ is the convolution kernel, and $b$ is the bias.
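A single-channel convolutional layer of this kind can be sketched as below. The sketch assumes a ReLU activation and "valid" cross-correlation (the operation most deep learning libraries call convolution); neither choice is stated in the text, and the function names are hypothetical.

```python
import numpy as np


def relu(z: np.ndarray) -> np.ndarray:
    """Rectified linear unit, used here as an assumed activation function."""
    return np.maximum(z, 0.0)


def conv2d_valid(x, kernel, bias: float = 0.0, activation=relu) -> np.ndarray:
    """Apply activation(kernel * x + bias) with no padding and stride 1."""
    x = np.asarray(x, dtype=np.float64)
    kernel = np.asarray(kernel, dtype=np.float64)
    kh, kw = kernel.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # The same kernel weights are reused at every position (weight sharing),
            # and each output depends only on a local window (local connectivity).
            out[i, j] = np.sum(x[i : i + kh, j : j + kw] * kernel) + bias
    return activation(out)
```

Applying two different kernels to the same image, as in Figure 4, simply means calling `conv2d_valid` twice with different `kernel` arguments.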

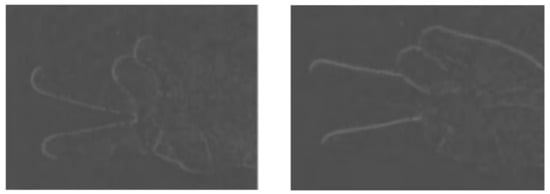

It is worth noting that in Figure 4, we use two different convolution kernels to perform convolution operations. As a consequence, we obtain two exemplified images. However, in a practical setting, we employ multiple convolution kernels to extract image features for further computation. Additional image presentations of convolution kernel operations are omitted due to space limitation.

Figure 4. Extracted image features of convolution kernel operations.

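We use Hu’s invariants for gesture shape matching. Hu’s invariants have the characteristics of rotation, translation, and scale invariance. The 2D $(p + q)$-order statistics of the function $f(x, y)$ can be defined as Formula (6) [47], where $p$, $q$ are non-negative integers.

$$m_{pq} = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x^{p} y^{q} f(x, y)\,dx\,dy \tag{6}$$

When we consider the centroid point $(\bar{x}, \bar{y})$, Equation (6) becomes Equation (7):

$$\mu_{pq} = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} (x - \bar{x})^{p} (y - \bar{y})^{q} f(x, y)\,dx\,dy \tag{7}$$

where $\bar{x} = m_{10}/m_{00}$ and $\bar{y} = m_{01}/m_{00}$.

The translation invariance is obtained from the results of the above statistics, and the scale invariance is calculated by Formula (8) [48].

$$\eta_{pq} = \frac{\mu_{pq}}{\mu_{00}^{\rho}} \tag{8}$$

where $\rho = (p + q)/2 + 1$ is a normalization factor.

In order to satisfy the input of the deep convolutional neural network, we choose the logarithm of the absolute value of the seven Hu’s invariants as the eigenvalue. They can be calculated by Formulas (9)–(15) [49], respectively.

$$\phi_1 = \eta_{20} + \eta_{02} \tag{9}$$

$$\phi_2 = (\eta_{20} - \eta_{02})^2 + 4\eta_{11}^2 \tag{10}$$

$$\phi_3 = (\eta_{30} - 3\eta_{12})^2 + (3\eta_{21} - \eta_{03})^2 \tag{11}$$

$$\phi_4 = (\eta_{30} + \eta_{12})^2 + (\eta_{21} + \eta_{03})^2 \tag{12}$$

$$\phi_5 = (\eta_{30} - 3\eta_{12})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] + (3\eta_{21} - \eta_{03})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] \tag{13}$$

$$\phi_6 = (\eta_{20} - \eta_{02})\left[(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] + 4\eta_{11}(\eta_{30} + \eta_{12})(\eta_{21} + \eta_{03}) \tag{14}$$

$$\phi_7 = (3\eta_{21} - \eta_{03})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] - (\eta_{30} - 3\eta_{12})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] \tag{15}$$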
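To make the feature construction concrete, the sketch below computes the log-absolute Hu invariants of a 2D image with NumPy, assuming the standard definitions of the seven invariants and the discrete (summation) form of the moments. The small constant `eps` that guards against taking the logarithm of zero and the function name `hu_log_features` are additions for this example, not part of the described method.

```python
import numpy as np


def hu_log_features(img, eps: float = 1e-30) -> np.ndarray:
    """Return log(|phi_k| + eps) for the seven Hu invariants of a 2D array."""
    img = np.asarray(img, dtype=np.float64)
    ys, xs = np.mgrid[: img.shape[0], : img.shape[1]]
    m00 = img.sum()
    x_bar = (xs * img).sum() / m00  # centroid column
    y_bar = (ys * img).sum() / m00  # centroid row

    def eta(p: int, q: int) -> float:
        """Scale-normalized central moment of order (p, q)."""
        mu = ((xs - x_bar) ** p * (ys - y_bar) ** q * img).sum()
        return mu / m00 ** ((p + q) / 2 + 1)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03          # recurring sums
    c, d = n30 - 3 * n12, 3 * n21 - n03  # recurring differences
    phi = np.array([
        n20 + n02,
        (n20 - n02) ** 2 + 4 * n11 ** 2,
        c ** 2 + d ** 2,
        a ** 2 + b ** 2,
        c * a * (a ** 2 - 3 * b ** 2) + d * b * (3 * a ** 2 - b ** 2),
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,
        d * a * (a ** 2 - 3 * b ** 2) - c * b * (3 * a ** 2 - b ** 2),
    ])
    return np.log(np.abs(phi) + eps)
```

Shifting the shape within the image changes the raw moments but not the central ones, so the returned feature vector should stay the same up to floating-point error.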

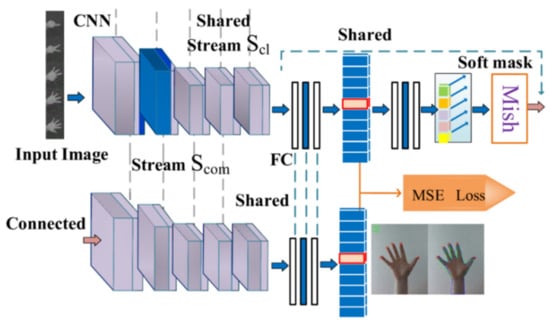

3.3. Improved CNN Based on Multi-Scale Feature Fusion

Deep convolutional neural networks (DCNNs) have complex network structures and powerful feature learning capabilities [50], which motivated us to use a DCNN as a classifier to solve the gesture recognition problem. The improvements in the convolutional network used in this study can be found in Figure 5. Overall, we designed a multi-scale fusion framework to implement multi-channel data input; then, we made the latent space of the neural network shareable based on the ResNet architecture; and finally, we used the soft mask operation to select data features to improve the performance of the model.

Figure 5. Multi-scale fusion framework.

Specifically, the proposed network model mainly includes two channels. The parameters of the network structure of the first channel are the same as those of the traditional DCNN; the convolutional layer of the second channel adopts a convolution kernel with a size of 5 × 5. Because the two channels use convolution kernels of different sizes, the output size of the convolution block will be different when the edge padding remains unchanged. In order to facilitate the feature fusion of the final network and the display of results, this study uses the output size of the convolution block of the 3 × 3 convolution kernel channel as the benchmark, and uses pads and strides of different sizes in the second channel. The output of each convolution and pooling of the second channel is consistent with the convolution and pooling of the first channel. In order to compare with the final output results, the original DCNN network of the first channel only outputs the results of eightfold up-sampling. However, only using convolution kernels of different sizes to enhance the extraction of feature information is not sufficient. This is because larger convolution kernels can extract the global information of the input image, but the local information of the image is easily lost; on the other hand, the convolution kernel with smaller size can extract the local information of the input image, but it is not sensitive enough to the global information of the image, and it is easy to cause blurred contour boundaries. In order to supplement the missing feature information of the two channels due to different convolution kernel sizes, the network model in Figure 5 uses a skip structure to make up for the missing feature information of the respective channels.
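The size mismatch between the two channels follows from the usual output-size relation for a convolution, floor((n + 2·padding − kernel) / stride) + 1. The short sketch below uses a hypothetical 224-pixel input (the input resolution is not stated in the text) to show how a larger padding lets the 5 × 5 channel match the 3 × 3 channel.

```python
def conv_output_size(n: int, kernel: int, padding: int, stride: int = 1) -> int:
    """Spatial output size of a convolution along one dimension."""
    return (n + 2 * padding - kernel) // stride + 1


# Hypothetical input width; the kernel sizes are the ones named in the text.
size_3x3 = conv_output_size(224, kernel=3, padding=1)        # first channel
size_5x5_same_pad = conv_output_size(224, kernel=5, padding=1)  # unchanged padding
size_5x5_adjusted = conv_output_size(224, kernel=5, padding=2)  # adjusted padding
```

With the padding left unchanged, the 5 × 5 channel produces a smaller feature map; increasing its padding by one restores the size of the 3 × 3 channel, which is what allows the two outputs to be fused element by element.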

To use the skip structure in the convolution process, we add a feature fusion step in the proposed framework. When the number of channels remains unchanged, this step increases the amount of information describing the image features, which is calculated as follows:

where Z represents the output result of a single channel, X and Y represent the inputs of the two channels, and K represents the coefficient that weights the input features of the different channels in the feature fusion process. The larger the value of K, the greater the weight of the corresponding feature.
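One plausible reading of this fusion step is an element-wise weighted sum of the two channel outputs, which keeps the number of channels unchanged while combining information from both. The sketch below illustrates that reading only; the exact form of the fusion formula is not recoverable from the text, and the function name and the per-channel weights `k_x` and `k_y` are hypothetical.

```python
import numpy as np


def fuse_add(x, y, k_x: float = 0.5, k_y: float = 0.5) -> np.ndarray:
    """Weighted element-wise fusion Z = k_x * X + k_y * Y (assumed form)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("both channels must produce feature maps of the same shape")
    return k_x * x + k_y * y
```

A larger weight makes the corresponding channel dominate the fused map; the shape check reflects the requirement that both channels produce outputs of identical size.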

If there are too few samples during training, the model parameters may overfit the training dataset, which harms the generalization performance of the model and produces incorrect classification results. In order to reduce the possibility of overfitting, this study adopts the “dropout” method, which randomly ignores a certain proportion of node responses in the hidden layer during training, and uses the recent Mish activation function to improve the robustness of the network.
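The two regularization ingredients mentioned here can be sketched in a few lines of NumPy. The sketch assumes that the activation function referred to is Mish, defined as x · tanh(softplus(x)), and uses the common "inverted" dropout formulation in which the surviving activations are rescaled during training; both are assumptions, as the text gives no formulas.

```python
import numpy as np


def mish(x) -> np.ndarray:
    """Mish activation: x * tanh(softplus(x)), with a numerically stable softplus."""
    x = np.asarray(x, dtype=np.float64)
    return x * np.tanh(np.logaddexp(0.0, x))  # logaddexp(0, x) = log(1 + e^x)


def dropout(a: np.ndarray, rate: float, rng: np.random.Generator,
            training: bool = True) -> np.ndarray:
    """Inverted dropout: zero a fraction `rate` of responses and rescale the rest."""
    if not training or rate == 0.0:
        return a
    keep = rng.random(a.shape) >= rate
    return a * keep / (1.0 - rate)
```

At inference time (`training=False`) the activations pass through unchanged, which is why the rescaling is applied during training.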

4. Experiments

4.1. Experimental Design

As described in this section, we conducted a series of experiments to validate the proposed framework. The experiments were divided into three phases. In the first phase, we examined to what extent the proposed framework detected the shapes of hand gestures with complex backgrounds compared to other algorithms. In the second phase, we examined whether or not the proposed framework can be used to precisely recognize the specific meanings of the hand gestures under different backlights. In the last phase, we reviewed a case study in a classroom to determine whether the proposed framework can effectively work in a real classroom to establish the relationships between the teacher and students. All the experiments were conducted on a machine with a Xeon E5-1620 v3 CPU, 8 GB of memory, and the Ubuntu 16.04 operating system, with a Darknet-11 configuration for coding.

The machine learning models in these experiments were implemented with Keras and TensorFlow. The optimizer we chose is Adam, and the activation function is Mish. We set the dropout rate to 0.002, the batch size to 128, the learning rate to 0.0001, and the number of iterations to 5000. The dataset was split 7:2:1 into training, testing, and validation sets, respectively. All the models were run three times to obtain the average value.
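The 7:2:1 split can be reproduced with a simple shuffled index partition. The sketch below is one way to do it, assuming a random (non-stratified) split with a fixed seed; the text does not say whether the split was stratified by gesture class, and the function name and seed are hypothetical.

```python
import numpy as np


def split_indices(n: int, ratios=(0.7, 0.2, 0.1), seed: int = 0):
    """Shuffle sample indices and split them into train, test, and validation parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n)
    n_train = int(round(ratios[0] * n))
    n_test = int(round(ratios[1] * n))
    train = order[:n_train]
    test = order[n_train : n_train + n_test]
    val = order[n_train + n_test :]  # remainder, so that no sample is dropped
    return train, test, val
```

For a dataset of 2750 images, for example, this yields 1925 training, 550 testing, and 275 validation samples.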

4.2. Datasets

Because no public datasets were available with respect to teachers’ teaching in classrooms, we utilized datasets that are well known in computer vision. The datasets used in the experiments were the Marcel dataset [51] and the NUS hand posture dataset-II (NUS-II) [52], both of which are public hand gesture datasets. Images in the Marcel dataset were collected in a single indoor scene, and there was no skin-like or skin-colored background. The background of the images in this dataset was relatively simple, and the dataset includes 6 hand gestures and a total of 5494 images. The NUS-II dataset is a 10-class hand gesture dataset produced by the National University of Singapore. Data in the NUS-II dataset were collected under different backlight conditions indoors and outdoors. The background of the images is complex, and the dataset includes 10 hand gestures and 2750 RGB images. The gestures were performed by 40 subjects ranging from 22 to 56 years old, with different ethnicities, against different complex backgrounds. The dataset contained a subset with a natural background and a subset containing the interference of human skin color. A selection of sample images is shown in Figure 6.

Figure 6. Samples from the NUS-II dataset.

4.3. Algorithms and Evaluation Metrics

To verify the effectiveness of the proposed framework in processing images with different backgrounds, we chose two object detection frameworks, YOLO and YOLOv3 [53], to compare with the proposed framework. The network structure of YOLO is based on the GoogLeNet network, adding four convolutional layers and two fully connected layers. It divides the image into 7 × 7 grid cells. When the center of an object falls into a grid cell, that cell predicts two bounding boxes for the object, and the network finally outputs a 7 × 7 × 30-dimensional tensor. YOLO converts target detection into a regression problem, and the loss function uses the mean square error. YOLOv3 is an improved version of the YOLO algorithm. It uses a deeper network structure called Darknet-53, which alternately uses 3 × 3 and 1 × 1 convolutions and residual structures. YOLOv3 includes feature pyramid networks for object detection to achieve multi-scale detection. The settings of all parameters were consistent with the original documents. In addition, we selected the Bayesian attention model [38], the deformable part model (DPM) based on object detection [54], and the skin-color-based HSV [55] model as representatives for additional comparison.
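As a quick check of the YOLO output shape quoted above, each of the $S \times S$ grid cells predicts $B$ bounding boxes with five values each (four coordinates and one confidence score) plus $C$ class probabilities. Assuming the original YOLO configuration of $C = 20$ object classes, which is not stated in this section, the numbers work out as:

```latex
S \times S \times (5B + C) = 7 \times 7 \times (5 \cdot 2 + 20) = 7 \times 7 \times 30
```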

Some algorithms, e.g., [44], simply define gesture recognition as a supervised machine learning problem, which introduces a lot of background noise when the feature sets are built. Such methods cannot handle gesture images of poor shooting quality, caused for example by the constant movement of the teacher and by variation in light intensity under different weather conditions. To overcome this problem, we first detect the shape of the hand to remove the influence of backgrounds and backlights, keeping the extracted gesture features almost pure. Hand gesture detection is reformulated as a single-target detection task in which each pixel of the input image is assigned to the target or non-target class; thus, hand gesture detection can be regarded as a binary classification problem. A pixel is placed in the target class if the key points of the hand gesture fit most of the geometric and statistical features; otherwise, it is placed in the non-target class. We use the receiver operating characteristic (ROC) curve [56] as the evaluation metric because it is often used to evaluate the quality of a binary classifier. The x axis of the ROC curve represents the false positive rate, and the y axis represents the true positive rate. The false positive rate is calculated as FP/(FP + TN), and the true positive rate as TP/(TP + FN), where FP, TP, TN, and FN are the numbers of false positives, true positives, true negatives, and false negatives, respectively. The closer the ROC curve of an algorithm is to the upper left corner, the more accurate the algorithm is.

In addition, because the algorithms first detect the shape of hand gestures and then recognize their specific meaning, we evaluate both stages using the detection accuracy and localization accuracy from the evaluation system of object detection. The detection accuracy means the extent to which algorithms detect the shapes and boundaries of the object, and the localization accuracy means how frequently the algorithms estimate labels of the object successfully. The higher the detection accuracy and localization accuracy scores, the more accurate the algorithm is. Since YOLO is an object detection algorithm, its evaluation index differs from that of a classification problem. To allow comparison with the proposed framework, we use the F1 value as the evaluation index, which is suitable for both object classification and object detection.
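The rates defined above, together with the F1 value, can be computed directly from the four confusion counts. The sketch below is illustrative only: the precision and F1 formulas are the standard definitions (they are not spelled out in the text), the function name is hypothetical, and the example counts are made up rather than taken from the experiments.

```python
def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute FPR, TPR, precision, and F1 from binary confusion counts."""
    fpr = fp / (fp + tn) if (fp + tn) else 0.0        # false positive rate
    tpr = tp / (tp + fn) if (tp + fn) else 0.0        # true positive rate (recall)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # F1 is the harmonic mean of precision and recall (standard definition).
    denom = precision + tpr
    f1 = 2 * precision * tpr / denom if denom else 0.0
    return {"fpr": fpr, "tpr": tpr, "precision": precision, "f1": f1}


# Made-up counts for illustration; these are not results from the paper.
metrics = binary_metrics(tp=90, fp=10, tn=190, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```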

4.4. Experimental Results

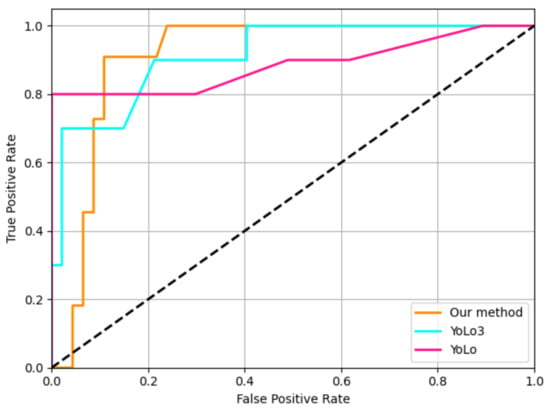

4.4.1. Detection of Hand Gestures with Complex Backgrounds

The results of the proposed framework, YOLO, and YOLOv3 are shown in Figure 7. The ROC curves were obtained by continuously reducing the probability threshold of the predicted gesture area. YOLO performs worst at hand gesture detection, and YOLOv3 improves on it. The proposed framework achieves higher detection accuracy than both YOLO and YOLOv3 under different probability thresholds.
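Lowering the probability threshold step by step traces out one ROC point per threshold. The following is a minimal sketch of that procedure on toy data; the scores and labels are invented for illustration, the function name is hypothetical, and this is not the evaluation code used in the experiments.

```python
def roc_points(scores, labels):
    """Return (FPR, TPR) pairs obtained by lowering the decision threshold."""
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative sample")
    points = [(0.0, 0.0)]  # threshold above every score: nothing is predicted positive
    # Visit the distinct scores from highest to lowest; each acts as a threshold.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


# Toy predicted probabilities and ground-truth labels (1 = gesture, 0 = background).
toy_scores = [0.9, 0.8, 0.6, 0.4, 0.2]
toy_labels = [1, 1, 0, 1, 0]
for fpr, tpr in roc_points(toy_scores, toy_labels):
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

A curve that reaches a high true positive rate while the false positive rate is still low lies closer to the upper left corner.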

Figure 7.

ROC curves of YOLO, YOLOv3, and the proposed framework.

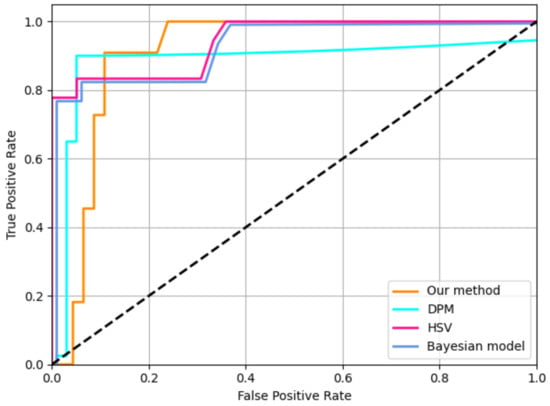

The ROC curves of the proposed framework, Bayesian attention model, DPM model, and skin-color-based HSV model are shown in Figure 8. The proposed framework obtains higher detection accuracy than the other models. The DPM model and the skin-color-based HSV model initially obtain poor detection accuracy but gradually surpass the Bayesian attention model. The recognition accuracy of the Bayesian attention model is more stable than that of the other models. The detection accuracy of the DPM model appears to be worse than that of the skin-color-based HSV model.

Figure 8.

ROC curves of Bayesian attention model, DPM model, skin-color-based HSV model, and the proposed framework.

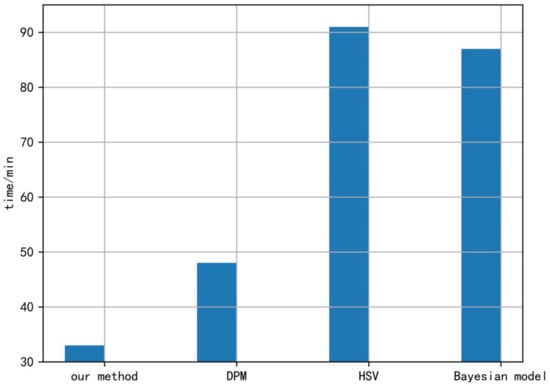

The running times of the proposed framework, Bayesian attention model, DPM model, and skin-color-based HSV model are shown in Figure 9. On the dataset, the proposed framework needs no more than 35 min, whereas the skin-color-based HSV model needs more than 90 min and the Bayesian attention model needs about 88 min. The proposed framework therefore needs the least running time to obtain the result.

Figure 9.

Running times of the Bayesian attention model, DPM model, skin-color-based HSV model, and the proposed framework.

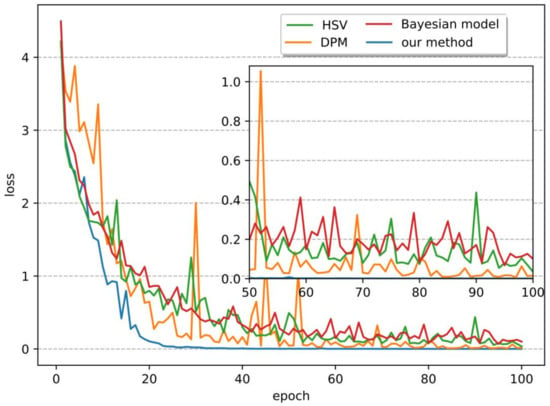

The convergence of the proposed framework, Bayesian attention model, DPM model, and skin-color-based HSV model is shown in Figure 10. The Bayesian attention model, DPM model, and skin-color-based HSV model converge at around 30 epochs, whereas the proposed framework converges faster than the other models.

Figure 10.

Convergence of the Bayesian attention model, DPM model, skin-color-based HSV model, and the proposed framework.

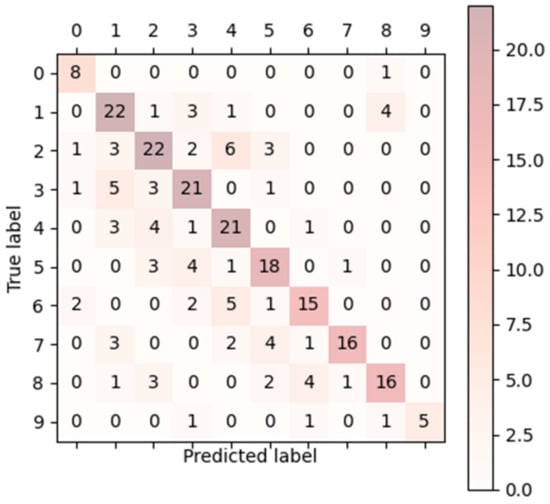

The confusion matrix for NUS-II is provided in Figure 11. The data in Table 1 show that YOLOv3 improves on YOLO in both detection accuracy and localization accuracy, but the proposed framework achieves the best hand gesture detection accuracy and localization accuracy among the algorithms. Table 1 also shows the detection accuracy and localization accuracy of the Bayesian attention model, DPM model, skin-color-based HSV model, and the proposed framework. Because the skin-color-based HSV model and the Bayesian attention model are detection algorithms designed specifically for human hands, they do not belong to the category of general object detection algorithms and have no localization accuracy indicators.

Since the dataset contains many skin-colored regions, and the skin color of the human body and face is very similar to that of the hand, it is difficult for the skin-color-based HSV model to distinguish the gesture area, resulting in poor detection accuracy. The DPM model is based on HOG features. The skin-like and skin-colored backgrounds in the dataset reduce the gradient of pixel intensities at the gesture contour, which seriously affects the detection performance of DPM. The human body in the image also produces many gradients and complex textures around the gesture, which is another reason the DPM cannot accurately identify the gesture area.

The Bayesian attention model uses the human visual cortex mechanism [57] to extract features from the shape and texture of gestures in images, and then uses the Bayesian model for hand gesture detection. The visual cortex model responsible for feature extraction has only a four-layer network structure, and its number of filters is not sufficient. As a consequence, the gesture features learned by the visual cortex model are incomplete. The visual cortex model is prone to producing erroneous results, especially when the image contains complex background disturbances such as skin color and human body parts.
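The difficulty that skin-color thresholding has with faces and other body parts can be illustrated with a small sketch. The threshold ranges and the sample RGB values below are hypothetical and chosen only for illustration; this is not the HSV model of [55]. Only the standard-library `colorsys` module is used.

```python
import colorsys

# Hypothetical skin-tone ranges in HSV, with all channels scaled to [0, 1].
HUE_MAX = 50 / 360           # hues between red and yellow-orange
SAT_MIN, SAT_MAX = 0.2, 0.7
VAL_MIN = 0.35


def is_skin(rgb) -> bool:
    """Classify one RGB pixel (0-255 per channel) with a fixed HSV threshold."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return h <= HUE_MAX and SAT_MIN <= s <= SAT_MAX and v >= VAL_MIN


# Illustrative pixels: a hand, a face, and a blue wall.
samples = {"hand": (210, 160, 130), "face": (200, 150, 120), "wall": (40, 80, 200)}
for name, rgb in samples.items():
    print(name, is_skin(rgb))
```

With these assumed ranges, the hand and face pixels both pass the threshold while the wall pixel does not, so color alone cannot separate the gesture area from other skin regions.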

Figure 11.

Confusion matrix of NUS-II dataset.

Table 1.

Comparison of detection accuracy and localization accuracy.

Table 2 shows the F1 values and running times of YOLO, YOLOv3, and the proposed framework on the NUS-II dataset. Whereas the YOLO algorithm detects different hand gestures as multiple targets, the proposed framework goes on to recognize the hand gesture area accurately after detection, and obtains a higher F1 score than both YOLO and YOLOv3. As for the time the three algorithms need to process the same image, the proposed framework achieves a shorter running time because its network structure improves on that of the YOLO algorithm.

Table 2.

F1 values and running times of YOLO and the proposed framework.

4.4.2. Recognition of Hand Gestures with Different Backlights

To verify the effectiveness of the proposed algorithm for hand gesture recognition under different backlight conditions, we used common normalization methods [50,58] to process the gesture regions obtained in the previous stage. Table 3 shows that normalization to three scales (32 × 32, 64 × 64, and 96 × 96) gives almost the same hand gesture recognition results. Each normalization causes a loss of image information and can affect the recognition rate. The proposed framework, however, preserves the original size of the gesture region during the recognition stage, which significantly improves the hand gesture recognition rate. The choice of normalization scale did not seem to influence the recognition rate under limited image information and different backlights. This validates that the framework proposed in this study can effectively reduce the influence of different backlights on hand gesture recognition.
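Normalizing a detected gesture region to a fixed scale amounts to resampling it onto a square grid. The sketch below uses nearest-neighbor sampling as a stand-in, since the normalization methods of [50,58] are not detailed here; the region size, pixel values, and function name are hypothetical.

```python
def resize_nearest(image, out_h, out_w):
    """Resize a 2D list of pixel values using nearest-neighbor sampling."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


# Hypothetical 48 x 80 grayscale gesture region filled with a simple pattern.
region = [[(r * 80 + c) % 256 for c in range(80)] for r in range(48)]

# Normalize the same region to the three scales compared in Table 3.
normalized = {size: resize_nearest(region, size, size) for size in (32, 64, 96)}
for size, img in normalized.items():
    print(size, len(img), len(img[0]))
```

Downsampling to 32 × 32 keeps only 1024 of the 3840 source pixels in this toy region, which illustrates how normalization can discard image information.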

Table 3.

Normalized hand gesture recognition rate of the proposed framework.

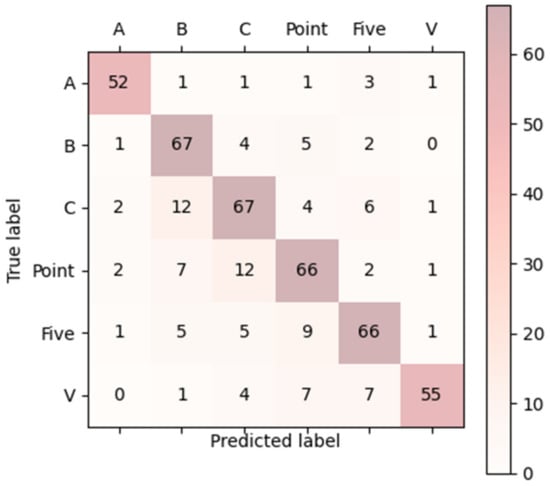

To verify the generalization ability and robustness of the proposed framework under different backlight conditions, we ran Skin color + CNN, CNN + SVM, the Gaussian model, and the proposed framework on the Marcel dataset. The results are presented in Table 4. Since the backgrounds of the images in the Marcel dataset are relatively simple, all of the algorithms obtained high recognition rates on it. Nevertheless, the framework proposed in this study achieved the highest recognition rate. We therefore conclude that the proposed framework achieves better recognition performance and robustness against the interference of various backgrounds and backlights than its counterparts. The confusion matrix is presented in Figure 12.

Table 4.

Recognition accuracy of different algorithms on the Marcel dataset.

Figure 12.

Confusion matrix of Marcel dataset.

4.4.3. A Case Study in the Classroom Environment

To verify the actual detection performance of the proposed framework on small target gestures in the classroom environment, we asked a teacher to make different hand gestures according to normal habits and recorded a video in which half of the body was visible. The teacher was required to make three different gestures at a freely movable podium, one for each category of classroom hand gesture mentioned before: symbolic gesture, descriptive gesture, and emotional gesture. We collected 60 images in total, with 20 images for each gesture, and cropped the images to 320 × 240 pixels. We used the proposed framework first to detect the shape of the hand gestures and then to recognize the meaning of the gestures.

The experimental results, produced by the algorithm proposed in this paper, are shown in Figure 13. The proposed framework could still detect the gesture when the gesture size in the image was small. It can detect the shape of the gesture and generate a virtual gesture that is nearly identical to the real one, even if there is a skin-like background in the image. Two images in the emotional gesture category were incorrectly classified into the descriptive gesture category, whereas all images in the symbolic gesture and descriptive gesture categories were classified correctly. This is probably because it is not easy to distinguish the specific pedagogical meaning of a descriptive gesture from that of an emotional gesture. Nevertheless, this preliminary result indicates that the proposed framework can effectively detect gestures under different image backgrounds and gesture changes, which shows that it has good usability and robustness for small target gestures.
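The counts stated above (20 images per category, with two emotional gestures classified as descriptive) determine a 3 × 3 confusion matrix. The sketch below derives the overall accuracy and per-class recall and precision from those counts; these derived figures are simple arithmetic on the stated counts and are not values reported separately in the study. The function names are illustrative.

```python
CLASSES = ["symbolic", "descriptive", "emotional"]

# Rows are true classes and columns are predicted classes, in CLASSES order.
confusion = [
    [20, 0, 0],   # symbolic: all correct
    [0, 20, 0],   # descriptive: all correct
    [0, 2, 18],   # emotional: two images predicted as descriptive
]


def overall_accuracy(matrix):
    """Fraction of all images on the diagonal (correctly classified)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def recall(matrix, k):
    """Correct predictions for class k divided by true members of class k."""
    return matrix[k][k] / sum(matrix[k])


def precision(matrix, k):
    """Correct predictions for class k divided by all predictions of class k."""
    return matrix[k][k] / sum(row[k] for row in matrix)


print(f"accuracy: {overall_accuracy(confusion):.4f}")
for k, name in enumerate(CLASSES):
    print(f"{name}: recall={recall(confusion, k):.3f}, "
          f"precision={precision(confusion, k):.3f}")
```

On these counts, 58 of the 60 images are classified correctly, the emotional category has a recall of 18/20, and the descriptive category has a precision of 20/22.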

Figure 13.

A sample extracted from the video.

Given the recognition results of the proposed framework, we asked the teacher to what extent the results reflected his intentions in communicating with students while teaching the class. The teacher thought that the results were nearly consistent with his intentions, and felt that this kind of non-verbal cue seemed to improve the level of class management and teacher–student interaction. He could describe class material more fully and convey his emotions, which could lead to greater student engagement.

5. Conclusions

In this study, we proposed a hand gesture recognition framework based on deep convolutional neural network models for complex backgrounds and different backlight conditions. The proposed framework incorporates deep convolutional neural networks as classifiers into hand gesture recognition tasks, and can be used for non-verbal interaction between teachers and students in the classroom. To improve recognition accuracy and robustness, we established a two-phase machine learning task: we detected the shape of the hand gesture in the first stage and determined its specific meaning in the second stage. A series of experiments showed that the proposed framework can outperform many existing algorithms, including YOLO, YOLOv3, and the Bayesian attention model. The encouraging results can be attributed to the multi-scale feature fusion in the DCNN. As the complexity of the fused features increases, the “dropout” method not only avoids overfitting in the DCNN, but also effectively improves the recognition rate and greatly shortens the recognition time compared to probabilistic neural networks and support vector machines. Moreover, as the number of deep convolutional neural network layers increases, the “dropout” method improves the robustness of gesture recognition in complex scenes by randomly ignoring a certain proportion of node responses in each network layer. It is worth noting that although the proposed framework includes both a preprocessing step and an improved CNN architecture, the main performance improvement should be attributed to the improved CNN architecture, because all experiments on the algorithmic architectures used the preprocessed data rather than the raw data. In summary, the proposed framework is expected to be applicable to real classroom scenarios for the recognition of teachers’ hand gestures because of its running speed, accuracy, and robustness.

However, one main limitation of this study is that we did not run the proposed framework directly on a mature dataset of teachers’ hand gestures collected in real classroom settings. Rather, we adopted public datasets that satisfy the established conditions as a simulation of real classroom teaching. We believe the results are nevertheless credible and meaningful in the absence of available datasets of teachers’ hand gestures. In future work, we will incorporate the proposed framework into the cloud platforms of universities and validate its real performance in the classroom. We will also extend the framework to a distributed implementation to meet the requirements of large-scale classroom videos.

Author Contributions

Conceptualization, Z.P.; methodology, Z.P. and Z.Y.; software, J.X.; validation, Z.P., Z.Y. and J.X.; formal analysis, Z.Y.; investigation, T.X.; resources, Z.Y.; data curation, J.X.; writing—original draft preparation, Z.P.; writing—review and editing, T.X.; visualization, Z.P.; supervision, Z.P.; project administration, T.X.; funding acquisition, Z.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Quanzhou Fengze District Science and Technology Plan Project (2021FZ23), Fujian Social and Science Project (FJ2022B067), Fujian Undergraduate University Teaching Reform Project (FBJG20200087, FBJG20210264), and Quanzhou Normal University New Liberal Arts Reform Project (2021XWK006).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Paranduk, R.; Karisi, Y. The Effectiveness of Non-Verbal Communication in Teaching and Learning English: A Systematic Review. J. Engl. Cult. Lang. Lit. Educ. 2021, 8, 145–159.

2. Brey, E.; Pauker, K. Teachers’ nonverbal behaviors influence children’s stereotypic beliefs. J. Exp. Child Psychol. 2019, 188, 104671.

3. Kamiya, N. What Factors Affect Learners’ Ability to Interpret Nonverbal Behaviors in EFL Classrooms? J. Nonverbal Behav. 2019, 43, 283–307.

4. Mahmoud, A.G.; Hasan, A.M.; Hassan, N.M. Convolutional neural networks framework for human hand gesture recognition. Bull. Electr. Eng. Inform. 2021, 10, 2223–2230.

5. Alibali, M.W.; Nathan, M.J.; Wolfgram, M.S.; Church, R.B.; Jacobs, S.A.; Martinez, C.J.; Knuth, E.J. How Teachers Link Ideas in Mathematics Instruction Using Speech and Gesture: A Corpus Analysis. Cogn. Instr. 2013, 32, 65–100.

6. Nathan, M.J.; Yeo, A.; Boncoddo, R.; Hostetter, A.B.; Alibali, M.W. Teachers’ attitudes about gesture for learning and instruction. Gesture 2019, 18, 31–56.

7. Berman, S.; Stern, H. Sensors for Gesture Recognition Systems. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2011, 42, 277–290.

8. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

9. Lee, H.-C.; Shih, C.-Y.; Lin, T.-M. Computer-Vision Based Hand Gesture Recognition and Its Application in Iphone. In Advances in Intelligent Systems and Applications-Volume 2; Springer: Berlin/Heidelberg, Germany, 2013; pp. 487–497.

10. Zhou, H.; Wu, T.; Sun, K.; Zhang, C. Towards High Accuracy Pedestrian Detection on Edge GPUs. Sensors 2022, 22, 5980.

11. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

12. Bai, Y.; Park, S.-Y.; Kim, Y.-S.; Jeong, I.-G.; Ok, S.-Y.; Lee, E.-J. Hand Tracking and Hand Gesture Recognition for Human Computer Interaction. J. Korea Multimed. Soc. 2011, 14, 182–193.

13. Li, S.Z.; Chu, R.; Liao, S.; Zhang, L. Illumination invariant face recognition using near-infrared images. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 627–639.

14. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

15. Jiang, J.; Li, Y.; Xu, S.; Zhang, X.; Yan, C.; Li, L. A panoramic survey method based on gesture recognition. In Proceedings of the 2017 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Montreal, QC, Canada, 14–16 November 2017; pp. 677–681.

16. Fan, D.; Lu, H.; Xu, S.; Cao, S. Multi-Task and Multi-Modal Learning for RGB Dynamic Gesture Recognition. IEEE Sensors J. 2021, 21, 27026–27036.

17. Enfield, N.; Kita, S.; de Ruiter, J. Primary and secondary pragmatic functions of pointing gestures. J. Pragmat. 2007, 39, 1722–1741.

18. Rahmat, A. Teachers’ Gesture in Teaching EFL Classroom of Makassar State University. Metathes. J. Engl. Lang. Lit. Teach. 2018, 2, 236–252.

19. Aldugom, M.; Fenn, K.; Cook, S.W. Gesture during math instruction specifically benefits learners with high visuospatial working memory capacity. Cogn. Res. Princ. Implic. 2020, 5, 1–12.

20. Flevares, L.M.; Perry, M. How many do you see? The use of nonspoken representations in first-grade mathematics lessons. J. Educ. Psychol. 2001, 93, 330.

21. Sime, D. What Do Learners Make of Teachers’ Gestures in the Language Classroom? Int. Rev. Appl. Linguist. Lang. Teach. (IRAL) 2006, 44, 211–230.

22. Lim, V.F. Analysing the teachers’ use of gestures in the classroom: A systemic functional multimodal discourse analysis approach. Social Semiotics 2019, 29, 83–111.

23. Stam, G.; Tellier, M. Gesture helps second and foreign language learning and teaching. In Gesture in Language: Development across the Lifespan; American Psychological Association: Washington, DC, USA, 2022; pp. 335–363.

24. Wang, J.; Liu, T.; Wang, X. Human hand gesture recognition with convolutional neural networks for K-12 double-teachers instruction mode classroom. Infrared Phys. Technol. 2020, 111, 103464.

25. Yang, M.-T.; Liao, W.-C. Computer-Assisted Culture Learning in an Online Augmented Reality Environment Based on Free-Hand Gesture Interaction. IEEE Trans. Learn. Technol. 2014, 7, 107–117.

26. Liu, T.; Chen, Z.; Liu, H.; Zhang, Z.; Chen, Y. Multi-modal hand gesture designing in multi-screen touchable teaching system for human-computer interaction. In Proceedings of the 2nd International Conference on Advances in Image Processing, Chengdu, China, 16–18 June 2018; pp. 198–202.

27. Hu, X.; Han, Z.R. Effects of gesture-based match-to-sample instruction via virtual reality technology for Chinese students with autism spectrum disorders. Int. J. Dev. Disabil. 2019, 65, 327–336.

28. Rautaray, S.S.; Agrawal, A. Vision based hand gesture recognition for human computer interaction: A survey. Artif. Intell. Rev. 2012, 43, 1–54.

29. Ghosh, D.K.; Ari, S. On an algorithm for Vision-based hand gesture recognition. Signal, Image and Video Processing 2016, 10, 655–662.

30. Lu, W.; Tong, Z.; Chu, J. Dynamic Hand Gesture Recognition with Leap Motion Controller. IEEE Signal Process. Lett. 2016, 23, 1188–1192.

31. Fan, H.; Zhu, H. Separation of vehicle detection area using Fourier descriptor under internet of things monitoring. IEEE Access 2018, 6, 47600–47609.

32. Hasan, H.; Abdul-Kareem, S. RETRACTED ARTICLE: Static hand gesture recognition using neural networks. Artif. Intell. Rev. 2012, 41, 147–181.

33. Wang, Z.; Li, G.; Yang, L. Dynamic Hand Gesture Recognition Based on Micro-Doppler Radar Signatures Using Hidden Gauss–Markov Models. IEEE Geosci. Remote Sens. Lett. 2020, 18, 291–295.

34. Zhao, M.; Quek, F.; Wu, X. RIEVL: Recursive induction learning in hand gesture recognition. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1174–1185.

35. Ge, S.; Yang, Y.; Lee, T. Hand gesture recognition and tracking based on distributed locally linear embedding. Image Vis. Comput. 2008, 26, 1607–1620.

36. Dubey, A.K. Enhanced hand-gesture recognition by improved beetle swarm optimized probabilistic neural network for human–computer interaction. J. Ambient Intell. Humaniz. Comput. 2022, 1–14.

37. Li, J.; Li, C.; Han, J.; Shi, Y.; Bian, G.; Zhou, S. Robust Hand Gesture Recognition Using HOG-9ULBP Features and SVM Model. Electronics 2022, 11, 988.

38. Ma, D.; Lan, G.; Hassan, M.; Hu, W.; Upama, M.B.; Uddin, A.; Youssef, M. Solargest: Ubiquitous and battery-free gesture recognition using solar cells. In Proceedings of the 25th Annual International Conference on Mobile Computing and Networking, Los Cabos, Mexico, 21–25 October 2019; pp. 1–15.

39. Montulet, R.; Briassouli, A.; Maastricht, N. Deep Learning for Robust end-to-end Tone Mapping. In Proceedings of the British Machine Vision Conference BMVC, Cardiff, UK, 9–12 September 2019; Volume 2, p. 4.

40. Li, G.; Tang, H.; Sun, Y.; Kong, J.; Jiang, G.; Jiang, D.; Tao, B.; Xue, S.; Liu, H. Hand gesture recognition based on convolution neural network. Clust. Comput. 2019, 22, 2719–2729.

41. Oyedotun, O.; Khashman, A. Deep learning in vision-based static hand gesture recognition. Neural Comput. Appl. 2016, 28, 3941–3951.

42. Zhang, D.; Shen, D.; Alzheimer’s Disease Neuroimaging Initiative. Multi-modal multi-task learning for joint prediction of multiple regression and classification variables in Alzheimer’s disease. NeuroImage 2012, 59, 895–907.

43. Melo, D.F.Q.; Silva, B.M.C.; Pombo, N.; Xu, L. Internet of Things Assisted Monitoring Using Ultrasound-Based Gesture Recognition Contactless System. IEEE Access 2021, 9, 90185–90194.

44. Ewe, E.L.R.; Lee, C.P.; Kwek, L.C.; Lim, K.M. Hand Gesture Recognition via Lightweight VGG16 and Ensemble Classifier. Appl. Sci. 2022, 12, 7643.

45. Yeo, H.S.; Lee, B.G.; Lim, H. Hand tracking and gesture recognition system for human-computer interaction using low-cost hardware. Multimed. Tools Appl. 2015, 74, 2687–2715.

46. Liu, G.; Guo, J. Bidirectional LSTM with attention mechanism and convolutional layer for text classification. Neurocomputing 2019, 337, 325–338.

47. Koide, K.; Miura, J. Identification of a specific person using color, height, and gait features for a person following robot. Robot. Auton. Syst. 2016, 84, 76–87.

48. Han, Y.; Roig, G.; Geiger, G.; Poggio, T. Scale and translation-invariance for novel objects in human vision. Sci. Rep. 2020, 10, 1411–1413.

49. Zhao, J.; Wang, X. Vehicle-logo recognition based on modified HU invariant moments and SVM. Multimed. Tools Appl. 2017, 78, 75–97.

50. Hsieh, J.-W.; Chen, L.-C.; Chen, D.-Y. Symmetrical SURF and Its Applications to Vehicle Detection and Vehicle Make and Model Recognition. IEEE Trans. Intell. Transp. Syst. 2014, 15, 6–20.

51. Mohanty, A.; Rambhatla, S.S.; Sahay, R.R. Deep gesture: Static hand gesture recognition using CNN. In Proceedings of the International Conference on Computer Vision and Image Processing, Roorkee, India, 26–28 February 2016; pp. 449–461.

52. Pisharady, P.; Vadakkepat, P.; Loh, A.P. Attention Based Detection and Recognition of Hand Postures against Complex Backgrounds. Int. J. Comput. Vis. 2012, 101, 403–419.

53. You, S.H.; Hwang, M.; Kim, K.H.; Cho, C.S. Implementation of an Autostereoscopic Virtual 3D Button in Non-contact Manner Using Simple Deep Learning Network. J. Inf. Process. Syst. 2021, 17, 505–517.

54. Song, P.; Qi, L.; Qian, X.; Lu, X. Detection of ships in inland river using high-resolution optical satellite imagery based on mixture of deformable part models. J. Parallel Distrib. Comput. 2019, 132, 1–7.

55. Espejel-Cabrera, J.; Cervantes, J.; García-Lamont, F.; Castilla, J.S.R.; Jalili, L.D. Mexican sign language segmentation using color based neuronal networks to detect the individual skin color. Expert Syst. Appl. 2021, 183, 115295.

56. Sabaghi, A.; Oghbaie, M.; Hashemifard, K.; Akbari, M. Deep Learning meets Liveness Detection: Recent Advancements and Challenges. arXiv 2021, arXiv:2112.14796.

57. Castaldi, E.; Lunghi, C.; Morrone, M.C. Neuroplasticity in adult human visual cortex. Neurosci. Biobehav. Rev. 2020, 112, 542–552.

58. Byun, S.; Lim, H.; Yu, S.; Paik, J. Contrast Enhancement of Mobile Phone Camera Using Multi-Scale Feature Map. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Taoyuan, Taiwan, 28–30 September 2020; pp. 1–2.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).