Learning Motion Constraint-Based Spatio-Temporal Networks for Infrared Dim Target Detections

Abstract

1. Introduction

- This paper introduces a module that learns spatio-temporal constraints, built on Conv-LSTM cells. Experiments show that the model can be applied to long-range infrared target detection.

- This paper fuses multiscale features along the temporal dimension, which makes detection more efficient and robust.

- This paper applies a state-aware module that switches dynamically between local search and global re-detection.

- Real datasets are used to evaluate the detection speed and robustness of the model.
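
The switching behaviour named in the third contribution can be pictured with a minimal sketch. This is an illustration only, not the authors' implementation: the names `SearchState`, `next_state`, and `search_window`, the confidence threshold of 0.5, and the 32-pixel half-window are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class SearchState:
    mode: str = "global"                      # "global" re-detection or "local" search
    center: Optional[Tuple[int, int]] = None  # last confirmed target position (x, y)


def next_state(detection, conf_threshold=0.5):
    """Choose the search mode for the next frame.

    `detection` is (x, y, confidence) or None. The threshold is a
    hypothetical value, not one taken from the paper.
    """
    if detection is not None and detection[2] >= conf_threshold:
        return SearchState("local", (detection[0], detection[1]))
    return SearchState("global", None)  # target lost: search the whole frame again


def search_window(state, frame_size, half=32):
    """Return the region (x1, y1, x2, y2) to search in the next frame."""
    width, height = frame_size
    if state.mode == "global" or state.center is None:
        return (0, 0, width, height)
    x, y = state.center
    return (max(0, x - half), max(0, y - half),
            min(width, x + half), min(height, y + half))


state = next_state((100, 50, 0.9))
print(state.mode, search_window(state, (640, 512)))
```

A confident detection narrows the next search to a window around the target, while a missing or weak detection falls back to the full frame.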

2. Materials and Methods

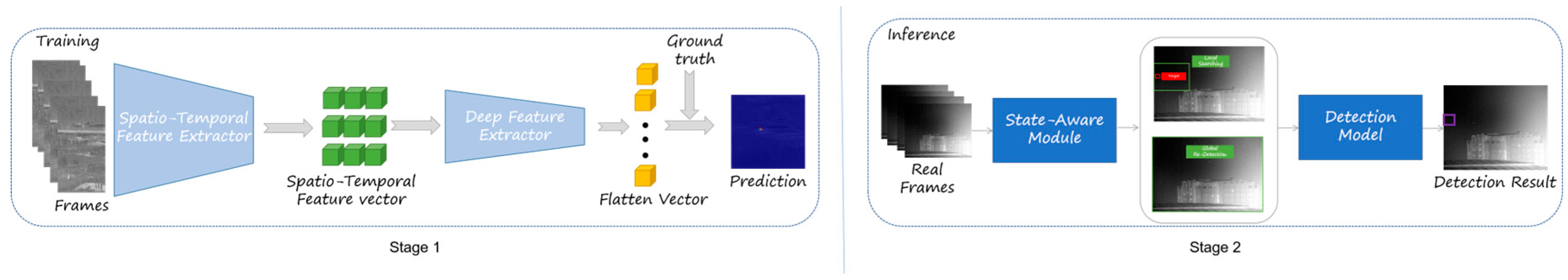

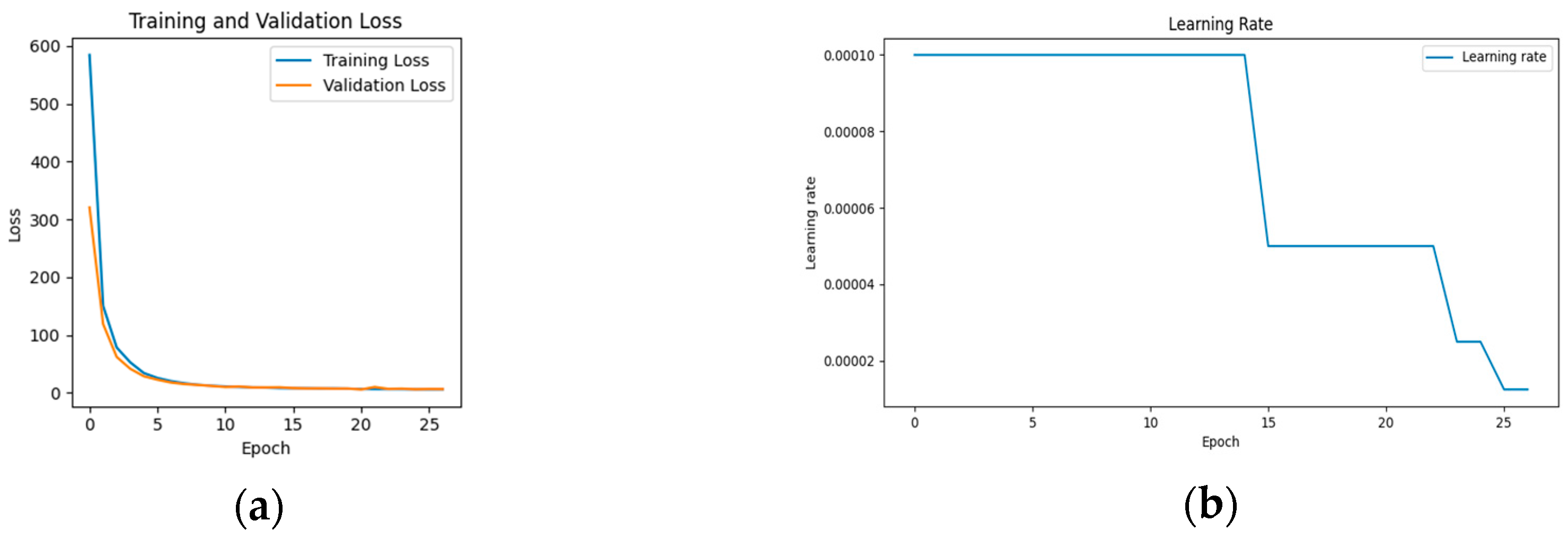

2.1. Training Phase

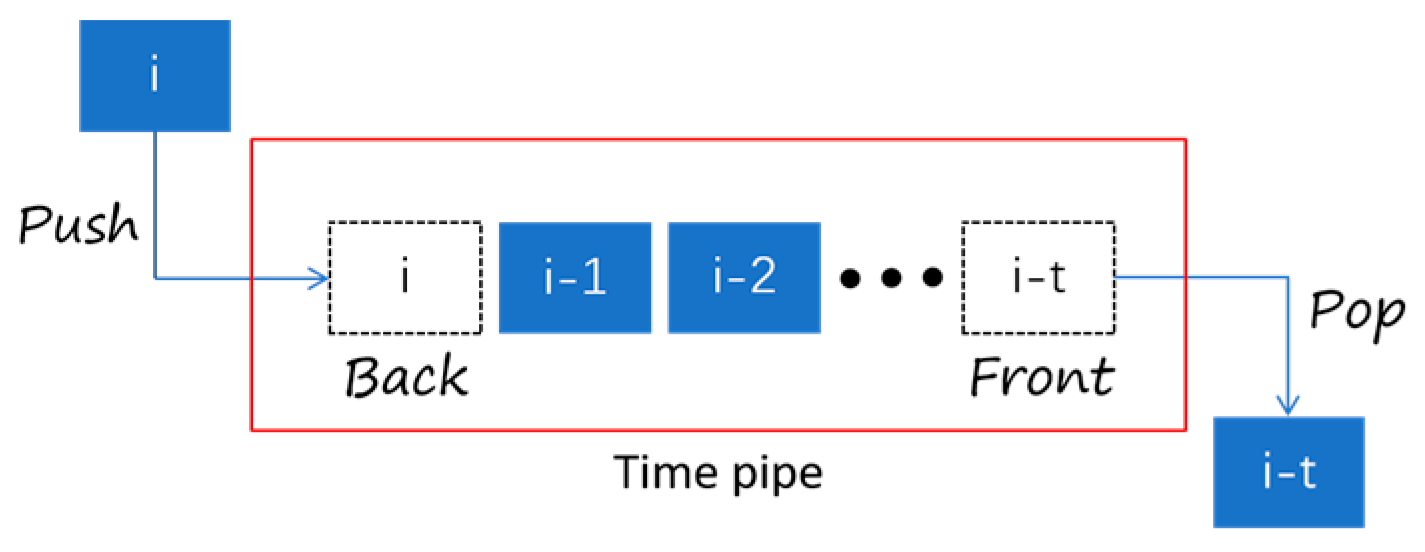

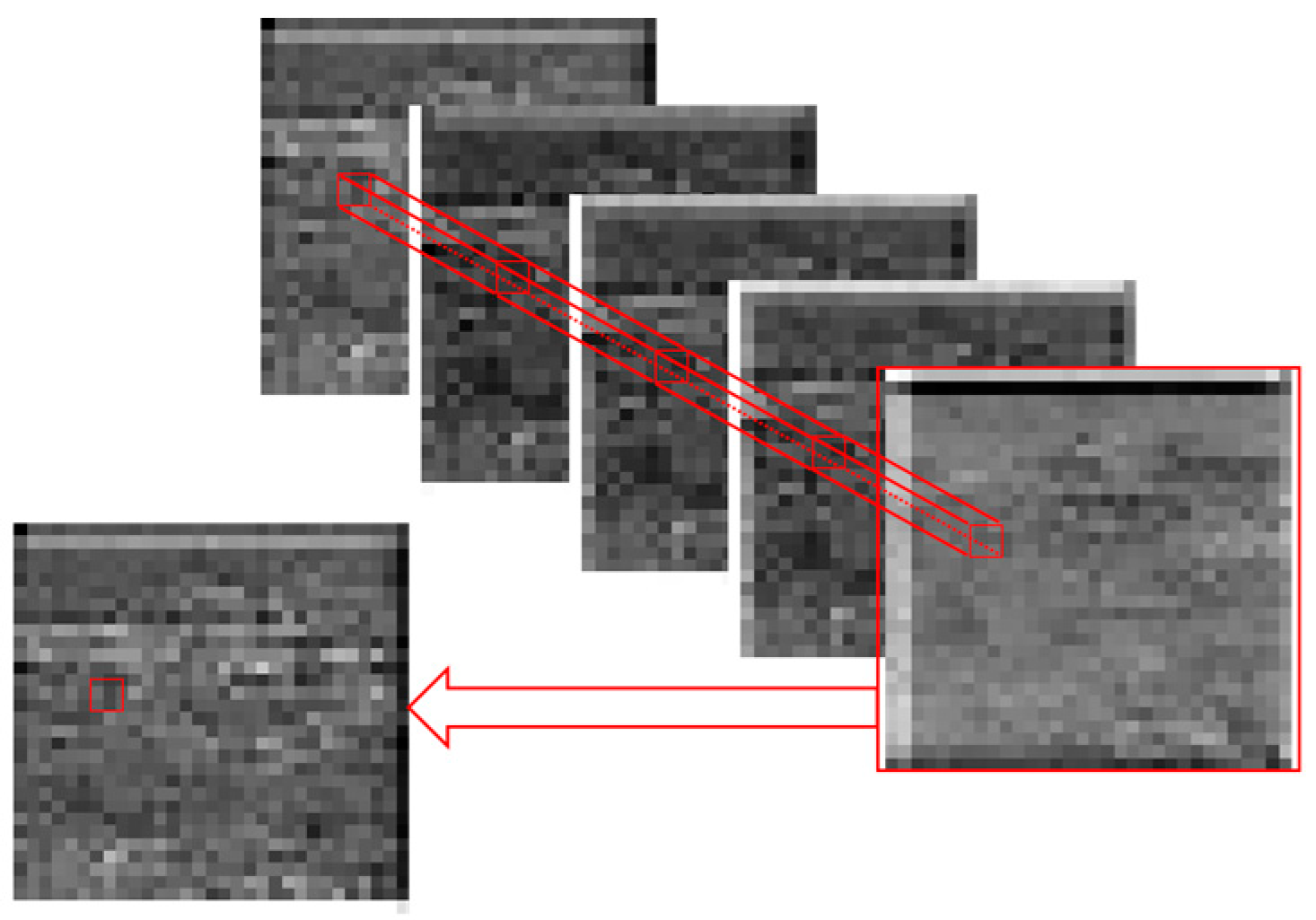

2.1.1. Time Stream Handling

2.1.2. Network Details

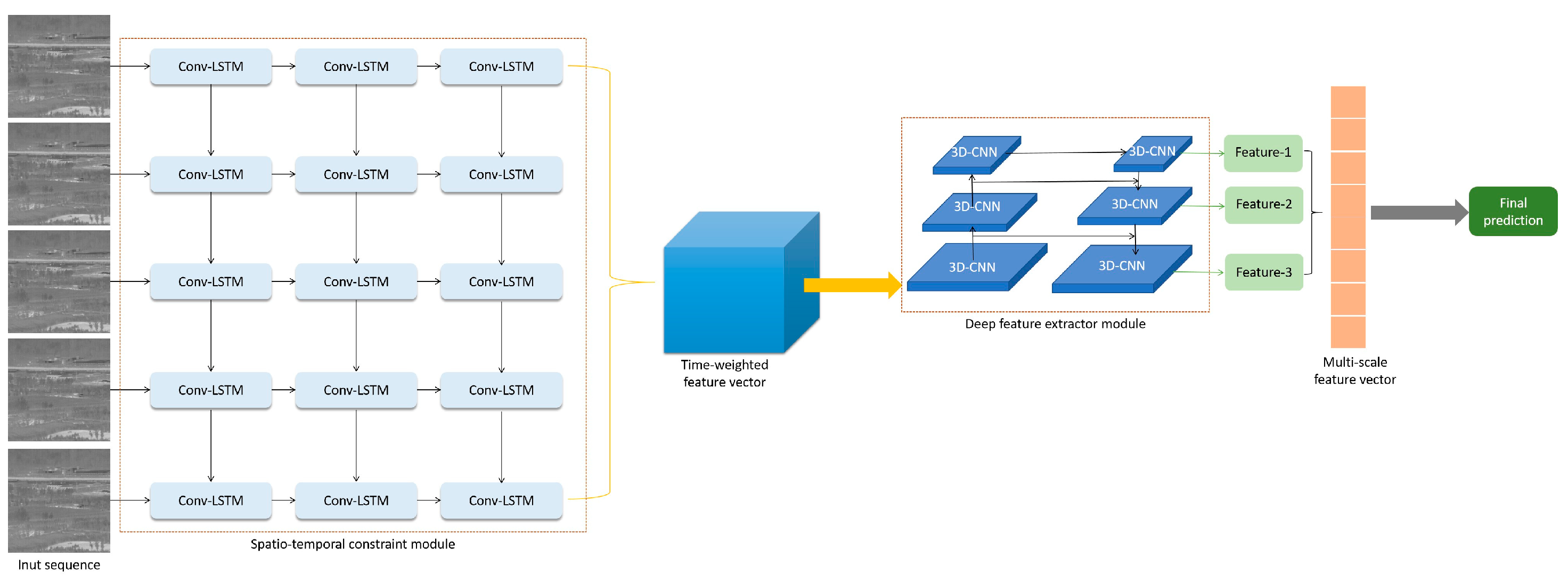

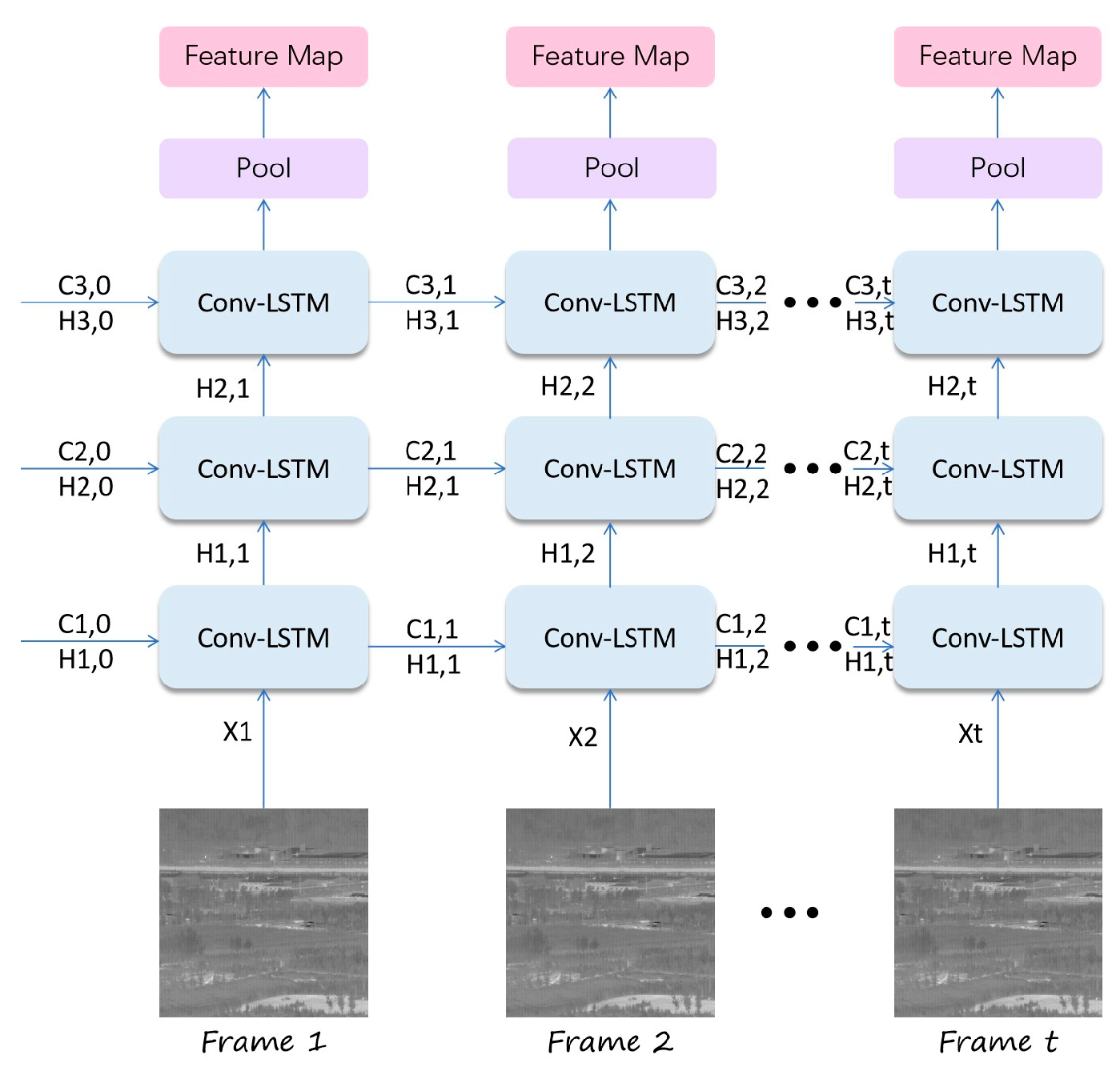

2.1.3. Learning Spatio-Temporal Constraint Module

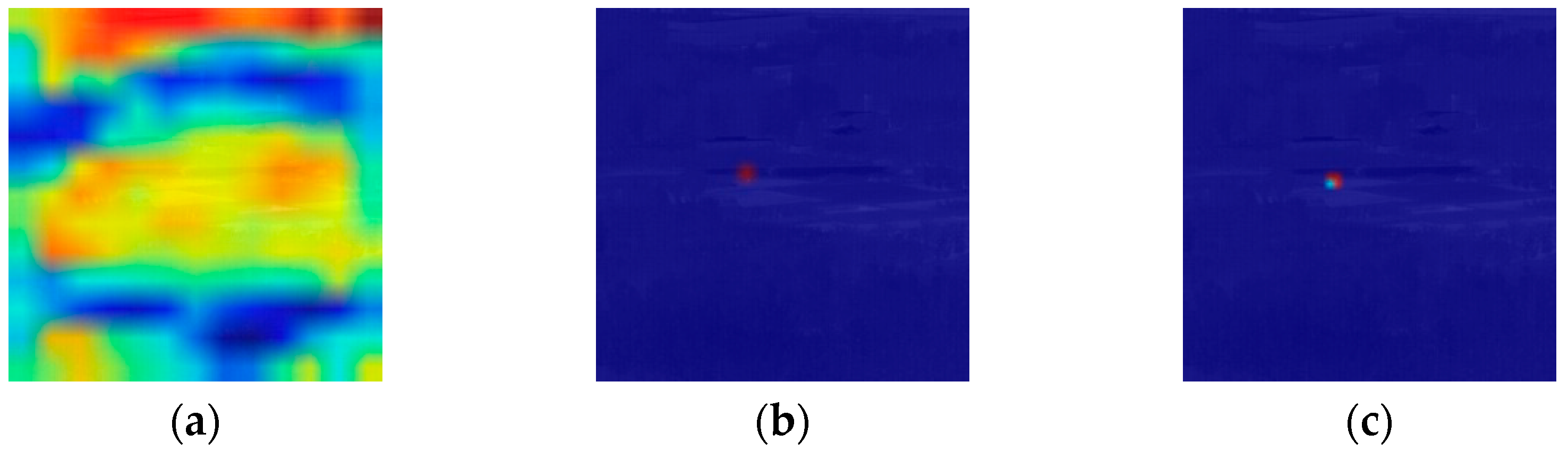

2.1.4. Extracting Deep Spatial Features Module

2.2. Inference Phase

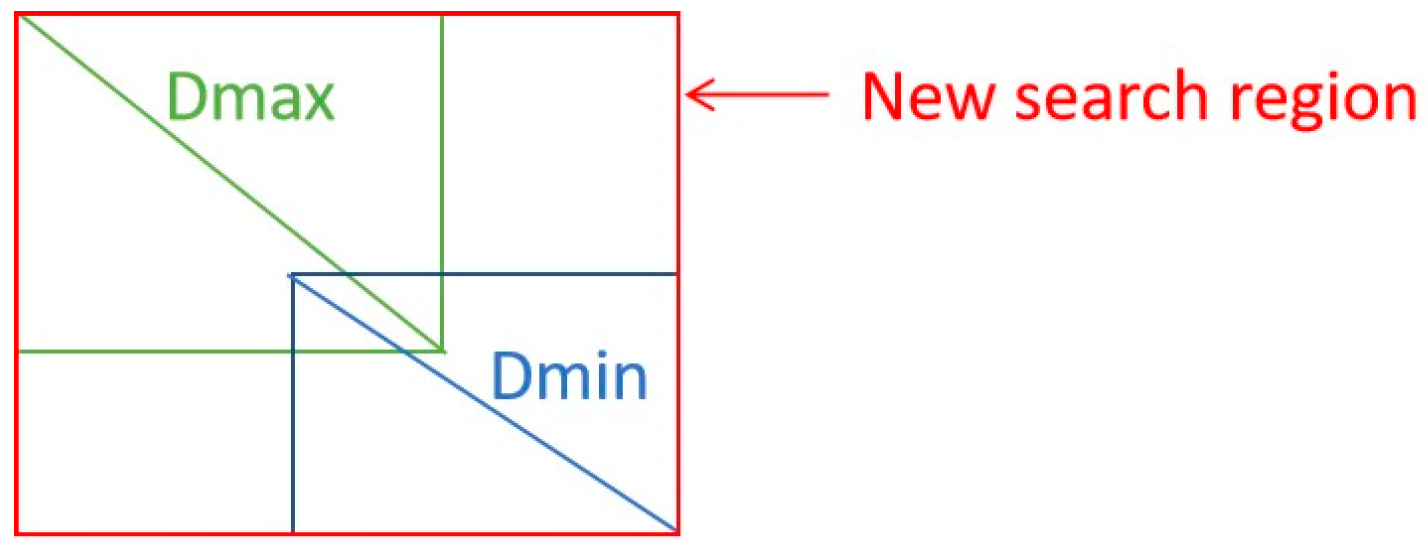

State-Aware Module

3. Results and Discussion

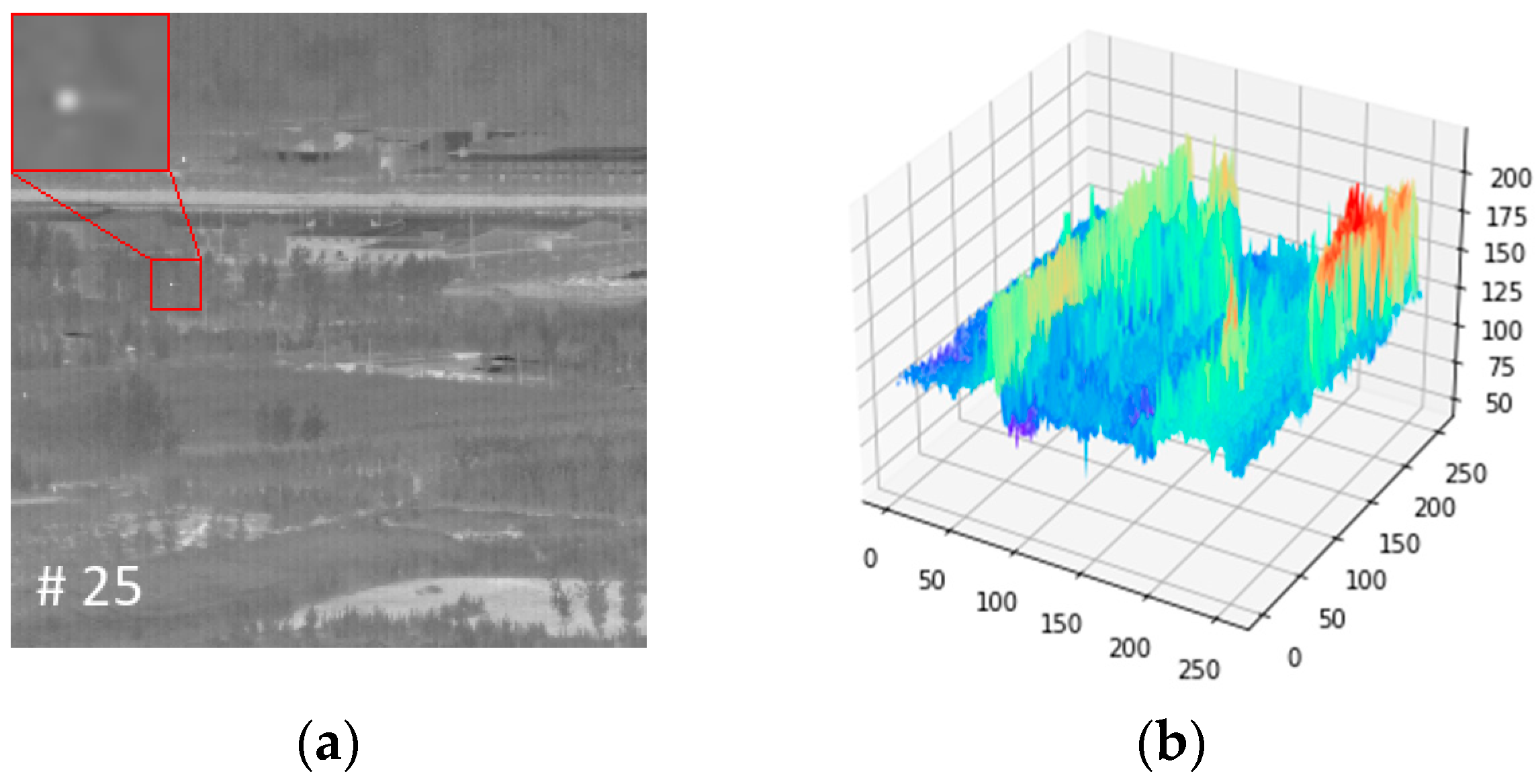

3.1. Introduction to Datasets

3.2. Evaluation Metrics

3.3. Performance Comparison and Discussion

3.3.1. Comparison to State-of-the-Art Methods

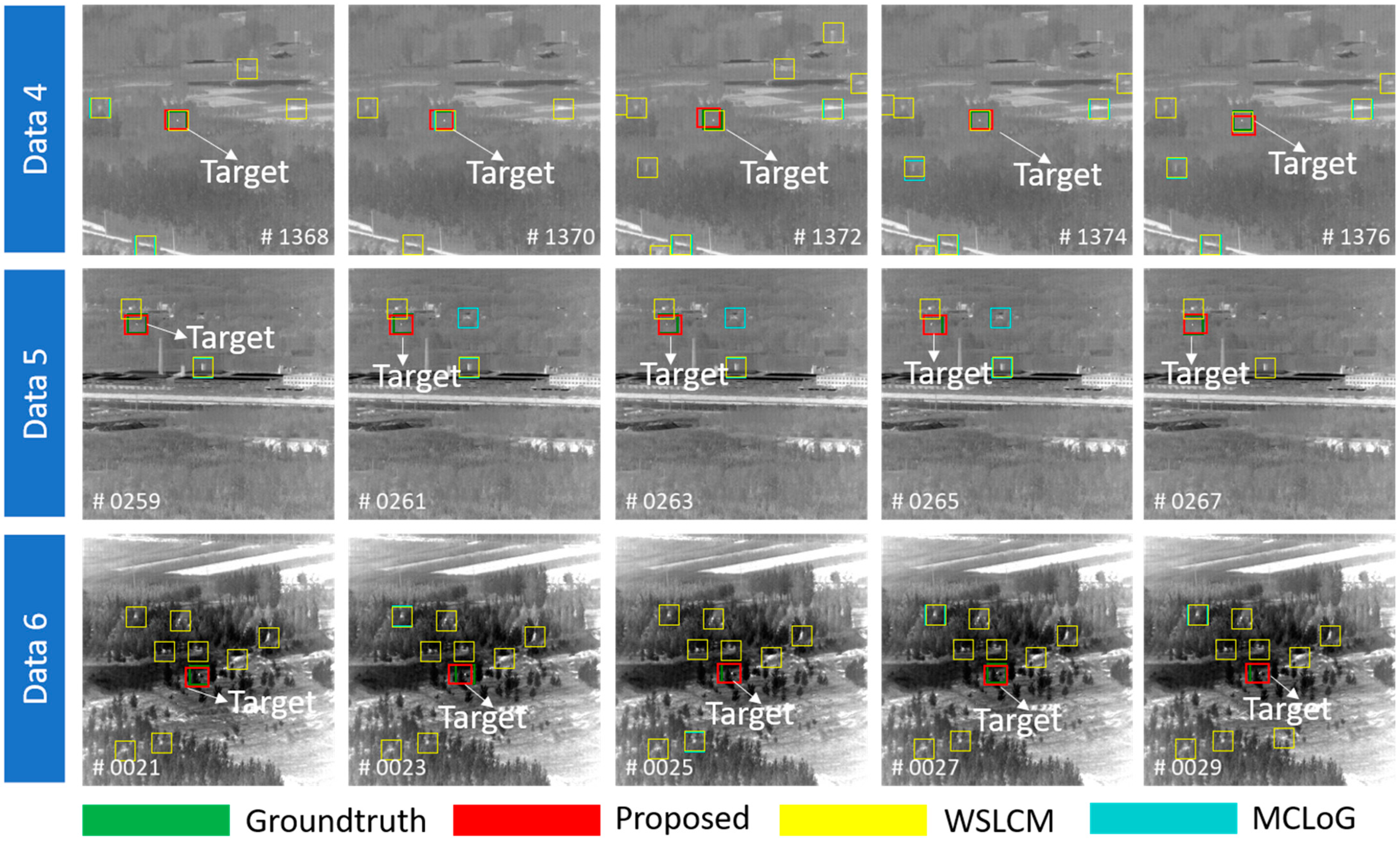

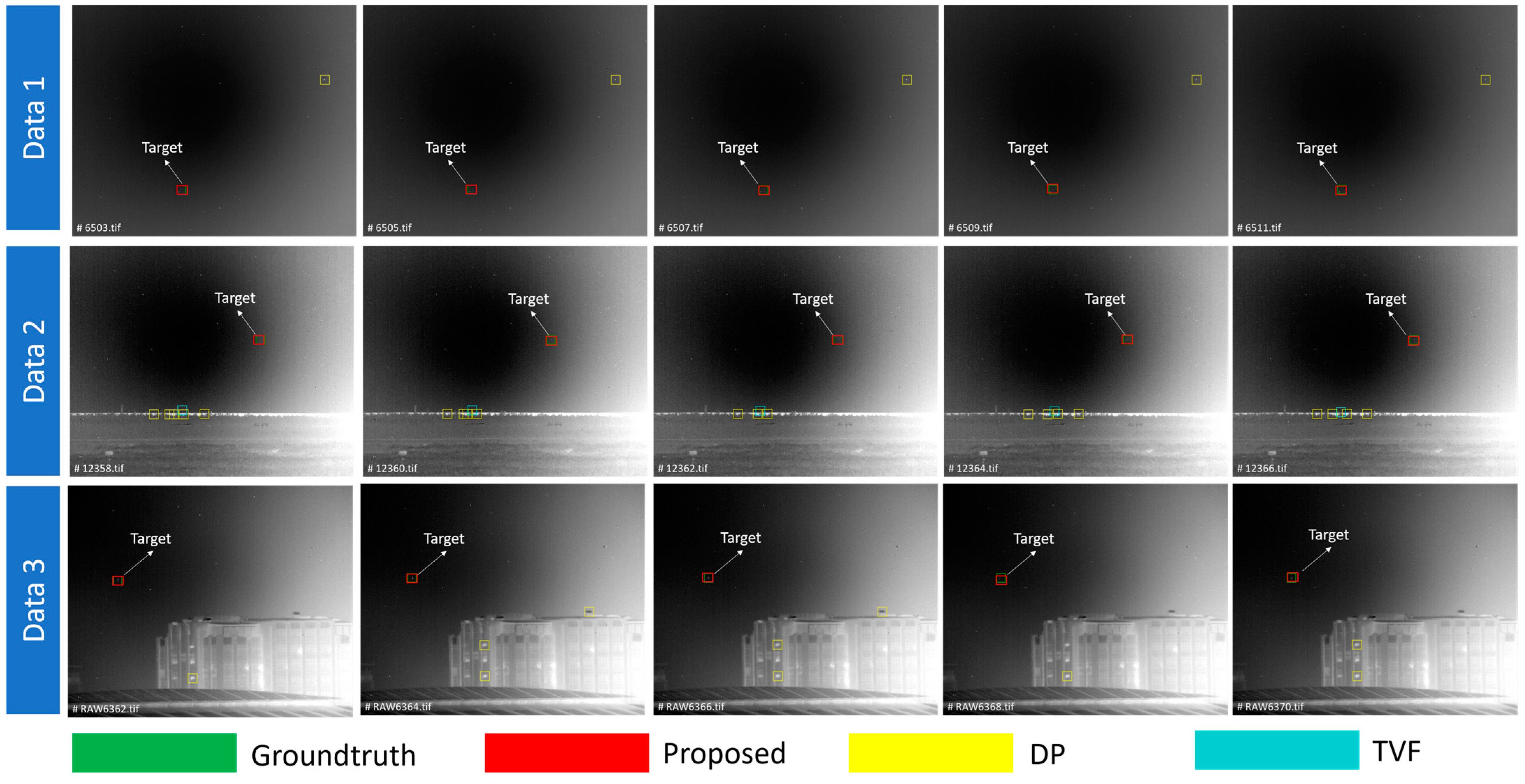

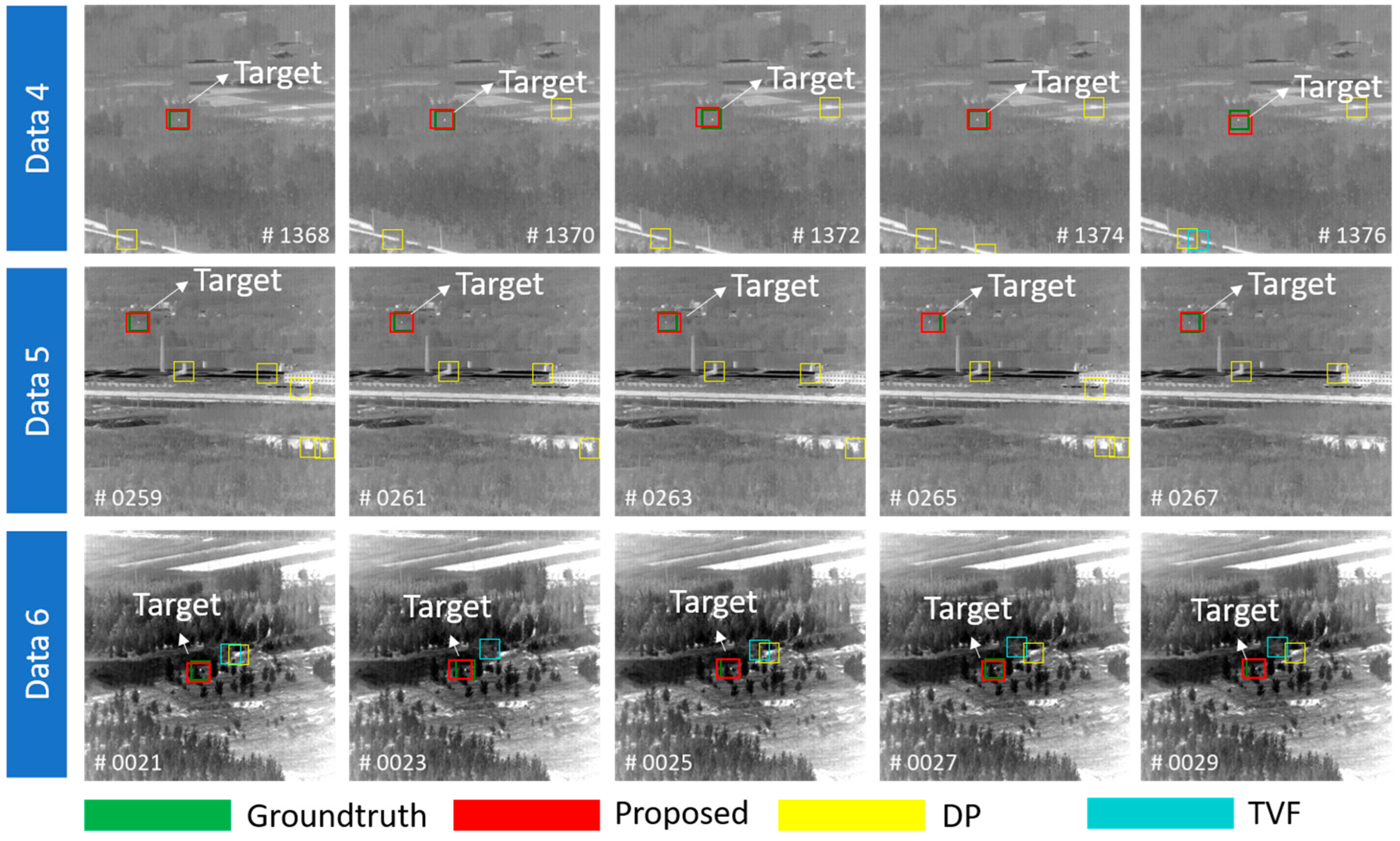

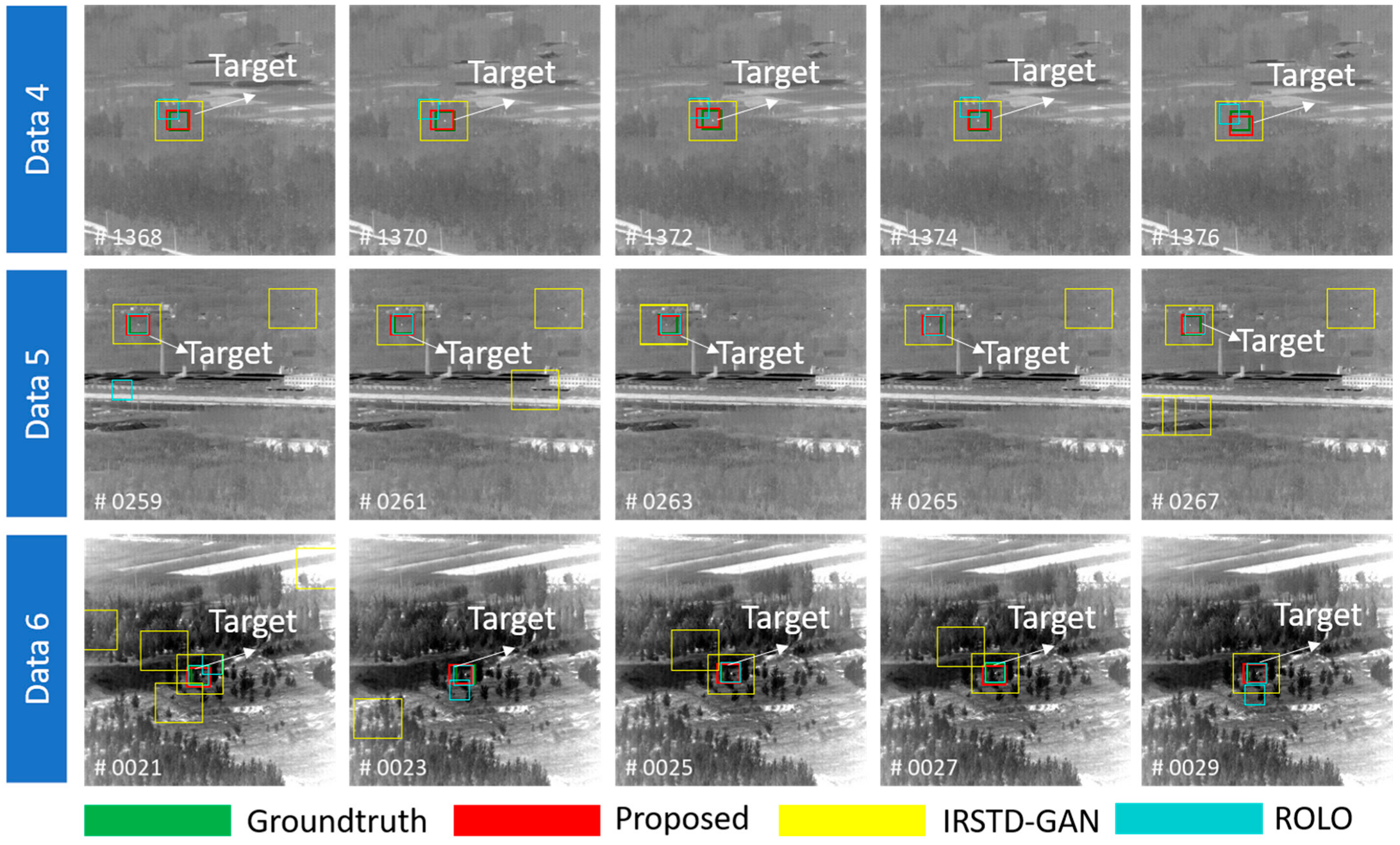

3.3.2. Qualitative Evaluation

3.3.3. Ablation Study

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hu, Y.; Xiao, M.; Li, S.; Yang, Y. Aerial infrared target tracking based on a Siamese network and traditional features. Infrared Phys. Technol. 2020, 111, 103505.

- Liu, R.; Wang, D.; Jia, P.; Sun, H. An Omnidirectional Morphological Method for Aerial Point Target Detection Based on Infrared Dual-Band Model. Remote Sens. 2018, 10, 1054.

- Guan, X.; Zhang, L.; Huang, S.; Peng, Z. Infrared Small Target Detection via Non-Convex Tensor Rank Surrogate Joint Local Contrast Energy. Remote Sens. 2020, 12, 1520.

- Zhang, Y.; Zheng, L.; Zhang, Y. Small Infrared Target Detection via a Mexican-Hat Distribution. Appl. Sci. 2019, 9, 5570.

- Rao, J.; Mu, J.; Li, F.; Liu, S. Infrared Small Target Detection Based on Weighted Local Coefficient of Variation Measure. Sensors 2022, 22, 3462.

- Pan, S.D.; Zhang, S.; Zhao, M.; An, B.W. Infrared Small Target Detection Based on Double-layer Local Contrast Measure. Acta Photonica Sin. 2020, 49, 110003.

- Wu, L.; Ma, Y.; Fan, F.; Wu, M.; Huang, J. A Double-Neighborhood Gradient Method for Infrared Small Target Detection. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1476–1480.

- Sun, Y.; Yang, J.; Li, M.; An, W. Infrared Small-Faint Target Detection Using Non-i.i.d. Mixture of Gaussians and Flux Density. Remote Sens. 2019, 11, 2831.

- Kwan, C.; Budavari, B. Enhancing Small Moving Target Detection Performance in Low-Quality and Long-Range Infrared Videos Using Optical Flow Techniques. Remote Sens. 2020, 12, 4024.

- Lv, P.-Y.; Lin, C.-Q.; Sun, S.-L. Dim small moving target detection and tracking method based on spatial-temporal joint processing model. Infrared Phys. Technol. 2019, 102, 102973.

- Yi, W.; Fang, Z.; Li, W.; Hoseinnezhad, R.; Kong, L. Multi-Frame Track-Before-Detect Algorithm for Maneuvering Target Tracking. IEEE Trans. Veh. Technol. 2020, 69, 4104–4118.

- Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083. Available online: https://ui.adsabs.harvard.edu/abs/2015arXiv150408083G (accessed on 1 April 2015).

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Lecture Notes in Computer Science, Proceedings of the Computer Vision–ECCV, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016.

- Lin, T.-Y.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Ryu, J.; Kim, S. Heterogeneous Gray-Temperature Fusion-Based Deep Learning Architecture for Far Infrared Small Target Detection. J. Sens. 2019, 2019, 4658068.

- Wang, H.; Li, H.; Zhou, H.; Chen, X. Low-altitude infrared small target detection based on fully convolutional regression network and graph matching. Infrared Phys. Technol. 2021, 115, 103738.

- Shi, M.; Wang, H. Infrared Dim and Small Target Detection Based on Denoising Autoencoder Network. Mob. Netw. Appl. 2019, 25, 1469–1483.

- Ju, M.; Luo, J.; Liu, G.; Luo, H. ISTDet: An efficient end-to-end neural network for infrared small target detection. Infrared Phys. Technol. 2021, 114, 103659.

- Miller, J.L.; Kim, S. Small infrared target detection by data-driven proposal and deep learning-based classification. In Proceedings of the Infrared Technology and Applications XLIV, Orlando, FL, USA, 23 May 2018; Volume 10624.

- Liu, M.; Du, H.; Zhao, Y.; Dong, L.; Hui, M.; Wang, S.X. Image Small Target Detection based on Deep Learning with SNR Controlled Sample Generation. Curr. Trends Comput. Sci. Mech. Autom. 2017, 1, 211–220.

- Yao, S.; Zhu, Q.; Zhang, T.; Cui, W.; Yan, P. Infrared Image Small-Target Detection Based on Improved FCOS and Spatio-Temporal Features. Electronics 2022, 11, 933.

- Wang, P.; Wang, H.; Li, X.; Zhang, L.; Di, R.; Lv, Z. Small Target Detection Algorithm Based on Transfer Learning and Deep Separable Network. J. Sens. 2021, 2021, 9006288.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

- Zhou, A.; Xie, W.; Pei, J. Background Modeling in the Fourier Domain for Maritime Infrared Target Detection. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 2634–2649.

- Wu, Y.; Wang, Y.; Liu, P.; Luo, H.; Cheng, B.; Sun, H. Infrared LSS-Target Detection Via Adaptive TCAIE-LGM Smoothing and Pixel-Based Background Subtraction. Photonic Sens. 2018, 9, 179–188.

- Kim, S.; Hong, S.; Joh, M.; Song, S.-K. DeepRain: ConvLSTM Network for Precipitation Prediction using Multichannel Radar Data. arXiv 2017, arXiv:1711.02316.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, no. 07, pp. 12993–13000.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Eysa, R.; Hamdulla, A. Issues on Infrared Dim Small Target Detection and Tracking. In Proceedings of the 2019 International Conference on Smart Grid and Electrical Automation (ICSGEA), Xiangtan, China, 10–11 August 2019; pp. 452–456.

- Du, J.; Lu, H.; Zhang, L.; Hu, M.; Chen, S.; Deng, Y.; Shen, X.; Zhang, Y. A Spatial-Temporal Feature-Based Detection Framework for Infrared Dim Small Target. IEEE Trans. Geosci. Remote Sens. 2021, 60, 3000412.

- Yang, L.; Liu, S.; Zhao, Y. Deep-Learning Based Algorithm for Detecting Targets in Infrared Images. Appl. Sci. 2022, 12, 3322.

- Huang, B.; Chen, J.; Xu, T.; Wang, Y.; Jiang, S.; Wang, Y.; Wang, L.; Li, J. SiamSTA: Spatio-Temporal Attention based Siamese Tracker for Tracking UAVs. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 1204–1212.

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

- Xiao, S.; Ma, Y.; Fan, F.; Huang, J.; Wu, M. Tracking small targets in infrared image sequences under complex environmental conditions. Infrared Phys. Technol. 2020, 104, 103102.

- Zhu, Z.; Lou, K.; Ge, H.; Xu, Q.; Wu, X. Infrared target detection based on Gaussian model and Hungarian algorithm. Enterp. Inf. Syst. 2021, 16, 1573–1586.

- Hui, B.; Song, Z.; Fan, H.; Zhong, P.; Hu, W.; Zhang, X.; Ling, J. A dataset for infrared image dim-small aircraft target detection and tracking under ground / air background. Sci. Data Bank 2019, 5, 12.

- Ning, G.; Zhang, Z.; Huang, C.; Ren, X.; Wang, H.; Cai, C.; He, Z. Spatially supervised recurrent convolutional neural networks for visual object tracking. In Proceedings of the 2017 IEEE International Symposium on Circuits and Systems (ISCAS), Baltimore, MD, USA, 28–31 May 2017; pp. 1–4.

- Zhao, B.; Wang, C.; Fu, Q.; Han, Z. A Novel Pattern for Infrared Small Target Detection With Generative Adversarial Network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 4481–4492.

- Han, J.; Moradi, S.; Faramarzi, I.; Zhang, H.; Zhao, Q.; Zhang, X.; Li, N. Infrared Small Target Detection Based on the Weighted Strengthened Local Contrast Measure. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1670–1674.

- Moradi, S.; Moallem, P.; Sabahi, M.F. A false-alarm aware methodology to develop robust and efficient multi-scale infrared small target detection algorithm. Infrared Phys. Technol. 2018, 89, 387–397.

| Dataset | Resolution (pixels) | SNR | Frame Number | Scene Description |
|---|---|---|---|---|
| Dataset 1 | 640 × 512 | 1.06 | 1500 | Sky background |
| Dataset 2 | 640 × 512 | 1.75 | 1000 | Asphalt road and grassy background |
| Dataset 3 | 640 × 512 | 2.09 | 4000 | Buildings background |
| Dataset 4 | 256 × 256 | 5.45 | 3000 | Ground background |
| Dataset 5 | 256 × 256 | 3.42 | 750 | Field background |
| Dataset 6 | 256 × 256 | 2.20 | 500 | Vegetation background |

| Layer | Parameters |
|---|---|
| conv-lstm-2D_0 (ConvLSTM) | 9856 |
| batch_normalization_0 (BatchNormalization) | 64 |
| conv-lstm-2D_1 (ConvLSTM) | 55,424 |
| batch_normalization_1 (BatchNormalization) | 128 |
| conv-lstm-2D_2 (ConvLSTM) | 27,712 |
| batch_normalization_2 (BatchNormalization) | 64 |
| conv3d_0 (Conv3D) | 6928 |
| batch_normalization_3 (BatchNormalization) | 64 |
| conv3d_1 (Conv3D) | 3464 |
| batch_normalization_4 (BatchNormalization) | 32 |
| conv3d_2 (Conv3D) | 3472 |
| batch_normalization_5 (BatchNormalization) | 64 |
| up_sampling3d_0 (UpSampling3D) | 0 |
| up_sampling3d_1 (UpSampling3D) | 0 |
| concatenate (Concatenate) | 0 |
| concatenate (Concatenate) | 0 |
| Total params | 107,272 |
| Trainable params | 107,064 |
| Non-trainable params | 208 |
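
The parameter counts in this table can be cross-checked with the standard formulas for ConvLSTM, 3D convolution, and batch normalization layers. The sketch below is a consistency check, not the authors' code. The channel widths in `LAYERS`, the 3 × 3 (and 3 × 3 × 3) kernels, the single-channel input, and the presence of bias terms are assumptions inferred only from the counts; they are not stated in the table, and other configurations could in principle give the same numbers.

```python
def convlstm2d_params(in_channels, filters, kernel=3):
    """Weights of a 2D ConvLSTM layer: four gates, each convolving the
    concatenated input and hidden state, plus one bias per gate filter."""
    return 4 * filters * (kernel * kernel * (in_channels + filters) + 1)


def conv3d_params(in_channels, filters, kernel=3):
    """Weights of a 3D convolution with a cubic kernel and one bias per filter."""
    return filters * (kernel ** 3 * in_channels + 1)


def batchnorm_params(channels):
    """Return (trainable, non_trainable): gamma and beta are trained,
    the moving mean and moving variance are not."""
    return 2 * channels, 2 * channels


# (kind, in_channels, filters): ASSUMED values, inferred only from the counts.
LAYERS = [
    ("convlstm", 1, 16),
    ("convlstm", 16, 32),
    ("convlstm", 32, 16),
    ("conv3d", 16, 16),
    ("conv3d", 16, 8),
    ("conv3d", 8, 16),
]


def count_params(layers):
    trainable = non_trainable = 0
    for kind, in_ch, filters in layers:
        fn = convlstm2d_params if kind == "convlstm" else conv3d_params
        trainable += fn(in_ch, filters)
        bn_train, bn_fixed = batchnorm_params(filters)  # one BN after each layer
        trainable += bn_train
        non_trainable += bn_fixed
    return trainable, non_trainable


trainable, non_trainable = count_params(LAYERS)
print(trainable + non_trainable, trainable, non_trainable)
# prints: 107272 107064 208
```

Under these assumptions, the totals match the table. The 208 non-trainable parameters are the moving statistics of the six batch normalization layers.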

| Method | Parameter Setting |
|---|---|
| TVF | T = 16, S_Z = 8 |
| DP | T = 8 |
| WSLCM | K = 9, λ = 0.8 |
| MCLoG | K = 4 |
| Proposed Method | T = 5 |

| Method | IoU (×10⁻²), author-collected dataset | DR (×10⁻²), author-collected dataset | FA (×10⁻²), author-collected dataset | IoU (×10⁻²), public dataset | DR (×10⁻²), public dataset | FA (×10⁻²), public dataset |
|---|---|---|---|---|---|---|
| WSLCM [32] | 2.81 | 32.97 | 19.40 | 3.78 | 45.96 | 13.76 |
| MCLoG [33] | 9.78 | 45.35 | 26.78 | 19.78 | 53.16 | 7.04 |
| DP [11] | 60.75 | 66.97 | 7.93 | 73.65 | 77.94 | 17.9 |
| TVF [10] | 59.54 | 61.81 | 17.18 | 65.32 | 75.16 | 8.49 |
| ROLO [30] | 50.32 | 73.97 | 0.732 | 65.08 | 84.57 | 0.45 |
| IRSTD-GAN [40] | 45.68 | 76.31 | 3.45 | 75.32 | 86.16 | 1.38 |
| Proposed | 86.99 | 95.87 | 0.10 | 88.65 | 97.96 | 0.02 |
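
The IoU columns report intersection over union, scaled by 10⁻². As a reminder of how the standard metric works, here is a minimal sketch for two axis-aligned bounding boxes. The `(x1, y1, x2, y2)` box format and the function name `box_iou` are assumptions made for this example. The definitions of DR and FA are not given in this section, so they are not sketched here.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2),
    with x1 < x2 and y1 < y2 (an assumed coordinate convention)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b

    # Width and height of the overlap; zero if the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# Two 2 x 2 boxes overlapping in a 1 x 1 square: IoU = 1 / (4 + 4 - 1) = 1/7.
print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

For targets only a few pixels wide, a one-pixel offset changes the IoU substantially, which is one reason the metric is demanding for dim, small targets.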

| Number of enabled submodules (of STM, DFM, STAM) | IoU (×10⁻²) | DR (×10⁻²) | FA (×10⁻²) |
|---|---|---|---|
| 1 | 43.60 | 60.15 | 4.63 |
| 1 | 56.27 | 77.33 | 7.87 |
| 2 | 85.69 | 90.28 | 1.03 |
| 2 | 48.73 | 69.45 | 2.32 |
| 2 | 55.69 | 78.64 | 1.11 |
| 3 | 86.99 | 95.87 | 0.10 |

| Number of enabled submodules (of Spatio-Temporal Module, Multiscale Feature Module, State-Aware Module) | IoU (×10⁻²) | DR (×10⁻²) | FA (×10⁻²) |
|---|---|---|---|
| 1 | 50.92 | 75.43 | 7.35 |
| 1 | 48.37 | 80.79 | 4.36 |
| 2 | 83.68 | 92.86 | 0.51 |
| 2 | 51.78 | 83.59 | 1.36 |
| 2 | 50.36 | 81.54 | 1.29 |
| 3 | 88.65 | 97.96 | 0.02 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Liu, P.; Huang, X.; Cui, W.; Zhang, T. Learning Motion Constraint-Based Spatio-Temporal Networks for Infrared Dim Target Detections. Appl. Sci. 2022, 12, 11519. https://doi.org/10.3390/app122211519
