Communication in Human–AI Co-Creation: Perceptual Analysis of Paintings Generated by Text-to-Image System

Abstract

1. Introduction

2. Literature Review

2.1. Text-to-Image Systems

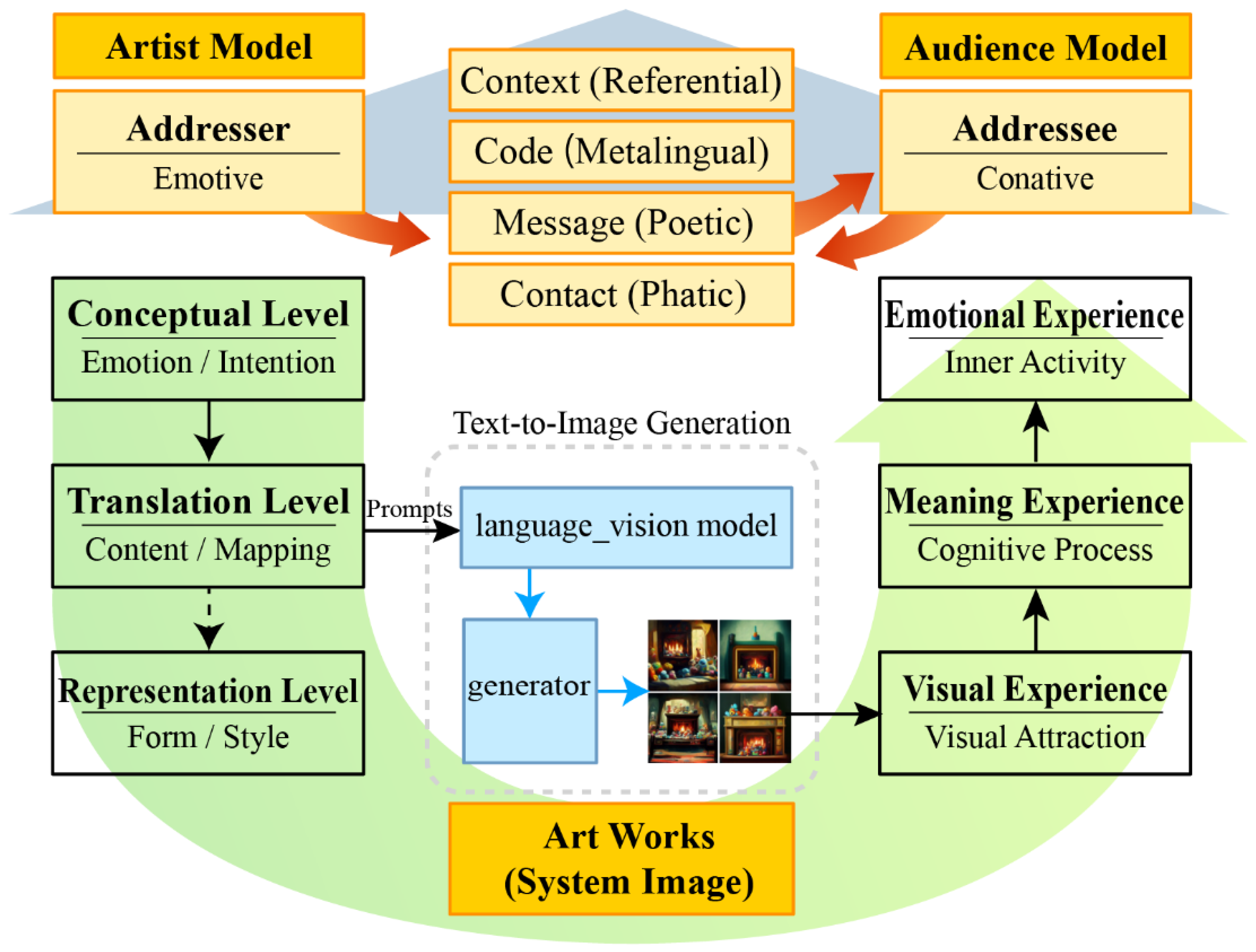

2.2. Communication between Artists and Audiences

2.3. Artworks Generated by Human–AI Co-Creation

3. Materials and Methods

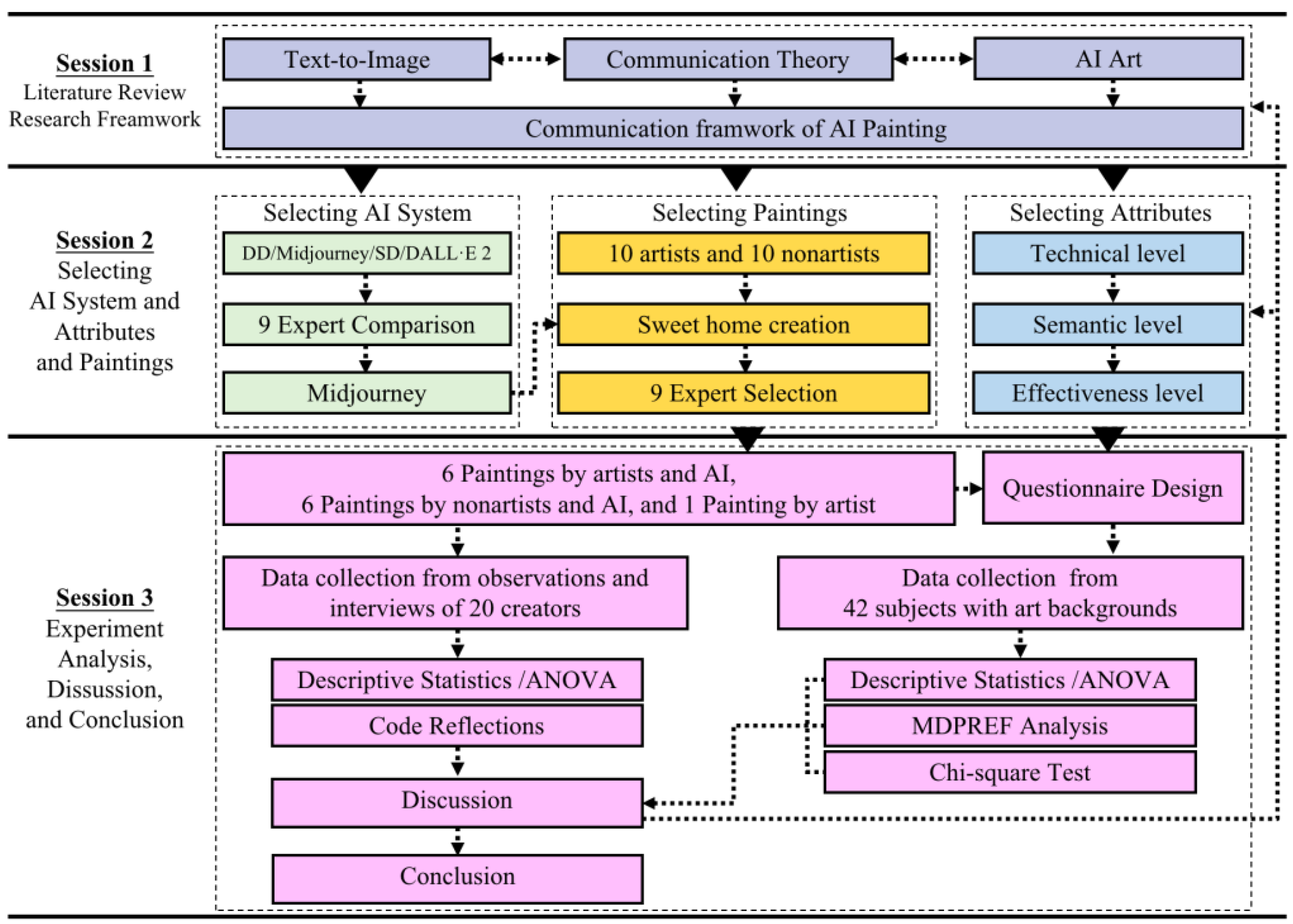

3.1. Research Framework

3.2. Stimuli

3.3. Experiment Procedures

3.4. Questionnaire Participants

3.5. Questionnaire Design

3.6. Statistical Analysis

4. Results

4.1. Coding of Reflections in Human–AI Co-Creation

4.2. Descriptive Statistics and ANOVA Analysis of Rating Data

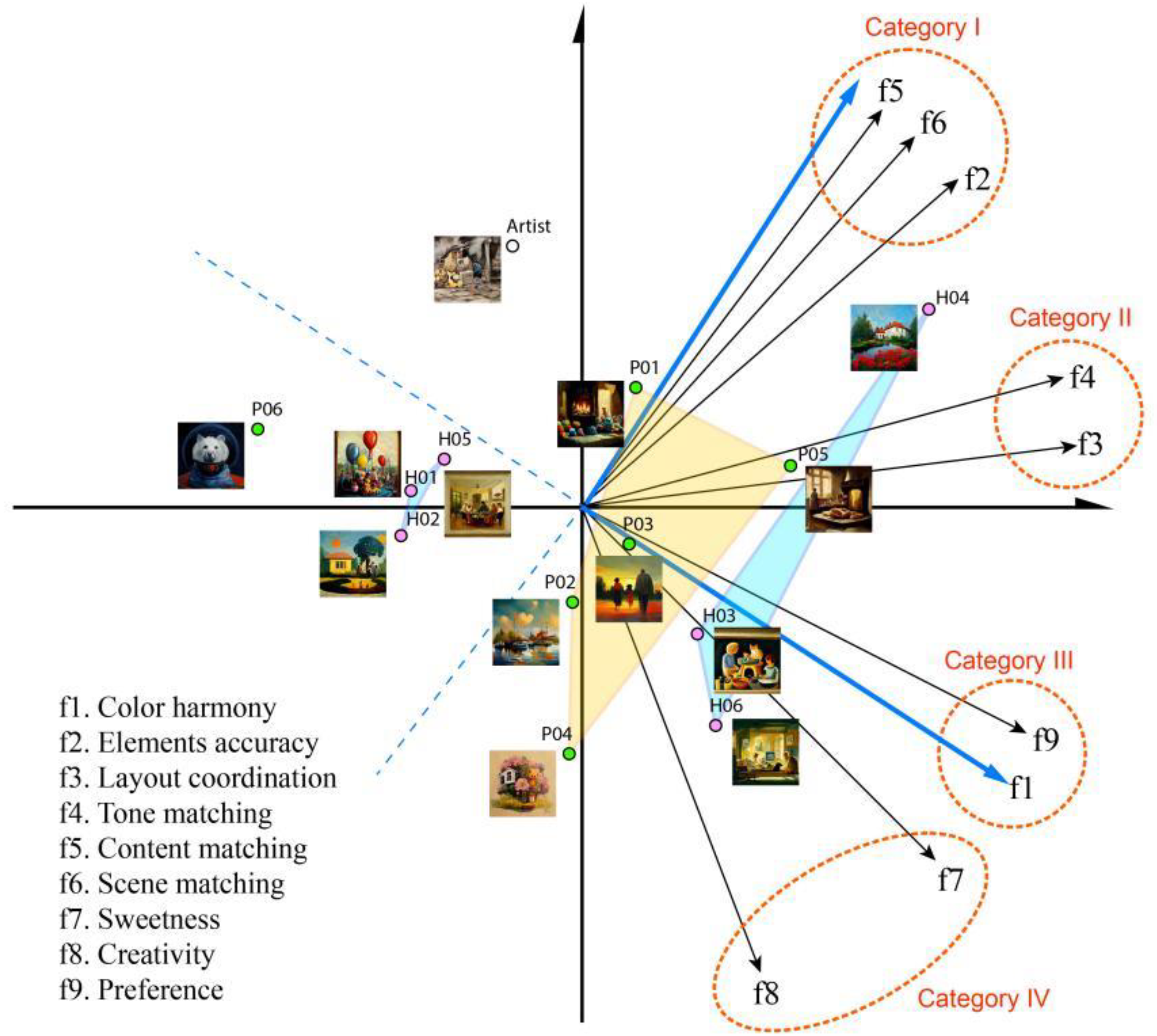

4.3. MDPREF Analysis of Rating Data in Attribute Vectors

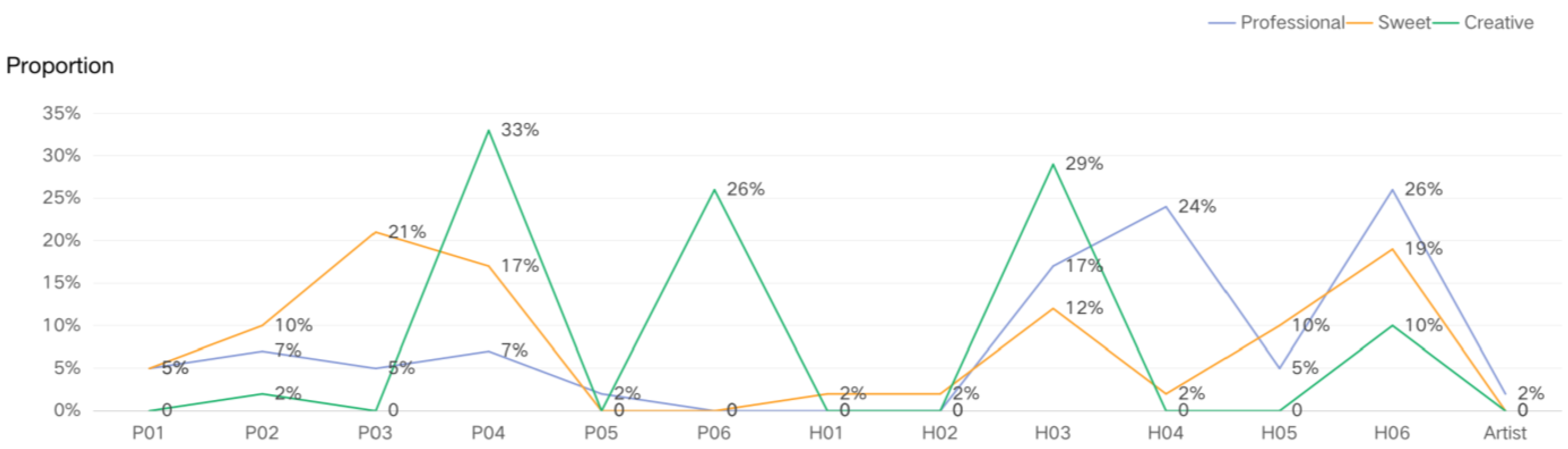

4.4. Analysis of Subjective Ranking

5. Discussion

5.1. Differences of Coding in Co-Creation with AI

5.2. Differences in Decoding in Communication with Creators

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Methods | Disco Diffusion | Midjourney | Stable Diffusion | DALL·E 2 |
|---|---|---|---|---|
| Source | https://github.com/alembics/disco-diffusion (accessed on 10 June 2022) | www.midjourney.com (accessed on 25 August 2022) | https://beta.dreamstudio.ai/dream (accessed on 2 September 2022) | https://labs.openai.com (accessed on 29 September 2022) |
| Prompt 1 | An oil painting of a room full of toys by the fireplace. | | | |
| Result 1 | | | | |
| Prompt 2 | An oil painting of a father reading a newspaper in front of the computer, a mother cooking in the kitchen, a little son sitting on the sofa watching the cartoon named tom and jerry, and a big daughter just bringing a golden retriever into the room. | | | |
| Result 2 | | | | |

| Artists | AP01 | AP02 | AP03 | AP04 | AP05 | AP06 | AP07 | AP08 | AP09 | AP10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Age (Years) | 39 | 41 | 22 | 22 | 40 | 23 | 23 | 35 | 41 | 43 |
| Painting Experience (Years) | 9 | 15 | 12 | 12 | 24 | 7 | 4 | 13 | 20 | 23 |
| Nonartists | NH01 | NH02 | NH03 | NH04 | NH05 | NH06 | NH07 | NH08 | NH09 | NH10 |
| Age (Years) | 38 | 67 | 40 | 42 | 49 | 23 | 22 | 26 | 25 | 42 |

| Type | Midjourney + Artist Paintings | | |
|---|---|---|---|
| No. | P01 | P02 | P03 |
| Prompt | An oil painting of a room full of toys by the fireplace. | An oil painting of love harbor full of laughter and warmth. | An oil painting of parents happily walking in the park hand in hand, and an active dog is chasing me. |
| Painting | | | |
| No. | P04 | P05 | P06 |
| Prompt | A warm tone oil painting of a little pink bear holding a honey jar to enjoy the cool under the shade of the big tree in front of the yellow wooden house, and beautiful flowers and grass, and gurgling streams beside the wooden house on a bright summer day. | A warm tone oil painting of mother toasting bread for her daughter in a Europe style room. | An oil painting of a Samoyed dog with a space helmet and a space suit floating in outer space. |
| Painting | | | |
| Type | Midjourney + Nonartist Paintings | | |
| No. | H01 | H02 | H03 |
| Prompt | An oil painting of one family, balloons, toys and food in amusement park. | An oil painting of a family playing in the yard of a house, also including trees, sun, birds. | An oil painting of kids playing, cat napping, and parents cooking while chatting. |
| Painting | | | |
| No. | H04 | H05 | H06 |
| Prompt | An oil painting of a two-and-a-half floor house with red roofs and gray walls, surrounding with a beautiful garden full of plants and flowers, and a crystal-clear stream flowing through the garden. | An oil painting of a family having dinner and a fish in the center of the table. | An oil painting of a father reading a newspaper in front of the computer, a mother cooking in the kitchen, a little son sitting on the sofa watching the cartoon named tom and jerry, and a big daughter just bringing a golden retriever into the room. |
| Painting | | | |
| Type | Artist Painting | | |
| Painting | | Description | Smoke curls up from the kitchen, roosters look for food, and the simple open-air kitchen emits the smell of cooking. |

| Painting | Attributes | 1 2 3 4 5 |
|---|---|---|
| P01: An oil painting of a room full of toys by the fireplace. | f1. Color harmony | |
| | f2. Element accuracy | |
| | f3. Layout coordination | |
| | f4. Tone matching | |
| | f5. Content matching | |
| | f6. Scene matching | |
| | f7. Sweetness | |
| | f8. Creativity | |
| | f9. Preference | |
| Please subjectively rate each painting according to visual attributes, with a maximum of 5 points and a minimum of 1 point. | | |

| Please Select One Painting | Paintings |
|---|---|
| Which one is the most professional? | |
| Which one is the sweetest? | |
| Which one is the most creative? | |
| Which ones are the creations of artists? | |

| | Two Groups of Creators | | |
|---|---|---|---|
| | Artists (n = 10) | Nonartists (n = 10) | Significance |
| Time spent (Min.) | 22 ± 4.25 | 14 ± 4.25 | *** |
| Number of modified prompts | 6 ± 2.16 | 4 ± 2.46 | * |
| Number of U-button clicks | 10 ± 2.95 | 3 ± 1.52 | *** |
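
The group comparison above can be approximately re-derived from the reported summary values alone. The following is a minimal sketch, not the authors' analysis: it assumes the ± values are standard deviations, that the two groups are independent, and that a Welch two-sample t-test is an acceptable stand-in for whichever test produced the significance marks (the table does not name it). The function name `welch_t` and the dictionary `ROWS` are illustrative.

```python
import math


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom
    computed from summary statistics (means, SDs, group sizes)."""
    var_a = sd_a ** 2 / n_a  # squared standard error of group A's mean
    var_b = sd_b ** 2 / n_b  # squared standard error of group B's mean
    t = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    df = (var_a + var_b) ** 2 / (
        var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1)
    )
    return t, df


# (artist mean, artist SD, nonartist mean, nonartist SD), copied from the table.
# Assumption: the values after "±" are standard deviations.
ROWS = {
    "Time spent (min)": (22, 4.25, 14, 4.25),
    "Number of modified prompts": (6, 2.16, 4, 2.46),
    "Number of U-button clicks": (10, 2.95, 3, 1.52),
}
N = 10  # participants per group, as stated in the table header

results = {
    name: welch_t(mean_a, sd_a, N, mean_b, sd_b, N)
    for name, (mean_a, sd_a, mean_b, sd_b) in ROWS.items()
}

for name, (t, df) in results.items():
    print(f"{name}: t = {t:.2f}, df = {df:.1f}")
```

Because the reported means and deviations are rounded, the statistics obtained this way are only indicative; converting them to p-values requires a t-distribution routine such as the one in SciPy.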

| Core Category | Selective Coding | Open Coding |
|---|---|---|
| Visual performance | Artistic style | ▲ Paintings generated by AI can be identified because of high standardization (AP05, AP09–10). ▲ The visual style lacks uniqueness (AP05, AP08, AP10). |
| | Techniques | ▲ The color is very harmonious (AP01, AP06–07). ▲ The strokes are rich and vivid (AP04). |
| Semantic matching | Element accuracy | ■ Did not generate elements accurately based on the prompt (NH04). |
| | Space attributes | ● There are some mistakes in element positions (AP01, AP06–07, AP09, NH06). ● When prompts are complex, some elements are usually lost (AP09, NH02, NH06). ● The generated spatial layout deviates from the prompt (AP04, AP09, NH06). |
| | Expression characters | ▲ In some results, the animal's state was a little decadent, which did not match the prompt (AP06). |
| Human–AI interaction | Prompt restrictions | ● Some naughty words are banned (AP03, NH06). |
| | Subject control | ▲ Unlike traditional brushes and paints, they can help you realize that what you think is what you get, and it is completely under your control (AP01, AP05–07). ▲ More iterations can make the results closer to inner thoughts (AP03–05). |
| | Prompt grammar rules | ● Prompting rules affect the final generated result to a great extent (AP02–04, AP06, NH02–04). ● Any small difference in prompts can cause disparate generations (AP01–08, NH01–10). |
| Creation experience | Creation assistance | ▲ There are still differences in using language to express emotions instead of painting, even though all the elements described are generated (AP07–09). ■ Generated some fantastic images that I can only imagine but cannot draw (NH01, NH04–08, NH09). |
| | Creative generation | ▲ An unexpected surprise is preferred over a result that merely matches the prompt (AP02, AP04). ▲ It is like Pandora’s Box. If it is not a surprise, it may be a shock (AP01, AP06). |
| Culture cognition | Cross-cultural differences | ■ The originally generated image is full of Indian-style home decorations with cultural differences (NH03). |
| Technological ethics | Work displacement | ▲ AI cannot generate my unique styles and cannot replace senior painters (AP10). ▲ A little confused about my own core competitiveness (AP06–07). ● Maybe some work related to painting will be impacted by AI (AP06, NH05). |
| | Copyright issues | ▲ Due to the mixture and collage of painting styles, the ownership of copyright is a complex issue (AP01, AP03–07). |

| Subjective Questionnaire (1–5 Points) | Sweet Home Paintings | | | |
|---|---|---|---|---|
| | Midjourney + Creator with Art Background | Midjourney + Creator without Art Background | Artist | Significance |
| F1. Color harmony | 3.96 | 4.00 | 3.79 | |
| F2. Element accuracy | 3.76 | 3.71 | 3.89 | |
| F3. Layout coordination | 3.69 | 3.71 | 3.66 | |
| F4. Tone matching | 3.83 | 3.83 | 3.95 | |
| F5. Content matching | 3.64 | 3.74 | 3.97 | |
| F6. Scene matching | 3.66 | 3.80 | 4.05 | |
| F7. Sweetness | 3.43 a | 3.54 a | 2.68 b | *** |
| F8. Creativity | 3.38 | 3.38 | 3.32 | |
| F9. Preference | 3.36 | 3.36 | 3.03 | |
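
As a rough cross-check, the group means above can be compared with averages of the per-painting means that this article reports for P01–P06 and H01–H06. The sketch below is illustrative only and covers two attributes. It assumes that averaging per-painting means is an acceptable approximation of the group mean; small differences from the table are expected because the published values are rounded and the study presumably averaged raw responses. The names `PER_PAINTING` and `group_means` are hypothetical.

```python
from statistics import mean

COLUMNS = ["P01", "P02", "P03", "P04", "P05", "P06",
           "H01", "H02", "H03", "H04", "H05", "H06", "Artist"]

# Per-painting mean ratings copied from the article's per-painting table
# (only two attributes are included to keep the sketch short).
PER_PAINTING = {
    "F1": [3.91, 4.10, 3.76, 4.12, 4.21, 3.74,
           3.86, 3.55, 4.19, 4.07, 3.93, 4.38, 3.83],
    "F7": [3.45, 3.45, 3.67, 3.76, 3.67, 2.48,
           3.31, 3.55, 3.48, 3.55, 3.29, 3.83, 2.64],
}


def group_means(values):
    """Average per-painting means within each creator group."""
    by_label = dict(zip(COLUMNS, values))
    return {
        # P01-P06: Midjourney paintings prompted by creators with an art background
        "artist_ai": mean(v for k, v in by_label.items() if k.startswith("P")),
        # H01-H06: Midjourney paintings prompted by creators without one
        "nonartist_ai": mean(v for k, v in by_label.items() if k.startswith("H")),
        # The single painting made by an artist without AI
        "artist": by_label["Artist"],
    }


summary = {attr: group_means(vals) for attr, vals in PER_PAINTING.items()}

for attr, groups in summary.items():
    print(attr, {name: round(value, 2) for name, value in groups.items()})
```

The averages land close to, but not exactly on, the tabulated group means, which is consistent with rounding in the published figures.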

| | P01 | P02 | P03 | P04 | P05 | P06 | H01 | H02 | H03 | H04 | H05 | H06 | Artist |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | 3.91 | 4.10 | 3.76 | 4.12 | 4.21 | 3.74 | 3.86 | 3.55 | 4.19 | 4.07 | 3.93 | 4.38 | 3.83 |
| F2 | 3.52 | 3.71 | 3.83 | 3.62 | 4.05 | 3.69 | 3.69 | 3.45 | 3.55 | 4.43 | 3.50 | 3.52 | 3.88 |
| F3 | 3.76 | 3.93 | 3.76 | 3.60 | 3.79 | 3.31 | 3.41 | 3.33 | 3.81 | 4.31 | 3.55 | 3.83 | 3.67 |
| F4 | 3.91 | 3.69 | 3.91 | 3.86 | 4.05 | 3.60 | 3.55 | 3.64 | 3.86 | 4.29 | 3.76 | 3.95 | 3.91 |
| F5 | 4.02 | 3.45 | 3.69 | 3.17 | 3.95 | 3.45 | 3.55 | 3.62 | 3.76 | 4.36 | 3.55 | 3.43 | 4.00 |
| F6 | 3.98 | 3.50 | 3.64 | 3.31 | 4.02 | 3.41 | 3.60 | 3.67 | 3.76 | 4.21 | 3.76 | 3.69 | 4.05 |
| F7 | 3.45 | 3.45 | 3.67 | 3.76 | 3.67 | 2.48 | 3.31 | 3.55 | 3.48 | 3.55 | 3.29 | 3.83 | 2.64 |
| F8 | 3.14 | 3.24 | 3.41 | 3.71 | 3.31 | 3.52 | 3.14 | 3.17 | 3.88 | 3.29 | 3.02 | 3.79 | 3.29 |
| F9 | 3.26 | 3.36 | 3.31 | 3.48 | 3.62 | 3.02 | 3.00 | 3.14 | 3.64 | 3.64 | 3.00 | 3.71 | 3.00 |
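
MDPREF is a vector model that is commonly computed as a principal-component (singular value) decomposition of a row-centered preference matrix. The following is a minimal sketch of that idea applied to the mean ratings above, not a reproduction of the authors' analysis: it assumes the nine attributes play the role of "judges", that centering each attribute row is appropriate, and it uses plain power iteration with deflation instead of a statistics package. All function names are illustrative.

```python
import math

PAINTINGS = ["P01", "P02", "P03", "P04", "P05", "P06",
             "H01", "H02", "H03", "H04", "H05", "H06", "Artist"]

# Mean ratings per attribute (rows) and painting (columns), copied from the table above.
RATINGS = {
    "F1": [3.91, 4.10, 3.76, 4.12, 4.21, 3.74, 3.86, 3.55, 4.19, 4.07, 3.93, 4.38, 3.83],
    "F2": [3.52, 3.71, 3.83, 3.62, 4.05, 3.69, 3.69, 3.45, 3.55, 4.43, 3.50, 3.52, 3.88],
    "F3": [3.76, 3.93, 3.76, 3.60, 3.79, 3.31, 3.41, 3.33, 3.81, 4.31, 3.55, 3.83, 3.67],
    "F4": [3.91, 3.69, 3.91, 3.86, 4.05, 3.60, 3.55, 3.64, 3.86, 4.29, 3.76, 3.95, 3.91],
    "F5": [4.02, 3.45, 3.69, 3.17, 3.95, 3.45, 3.55, 3.62, 3.76, 4.36, 3.55, 3.43, 4.00],
    "F6": [3.98, 3.50, 3.64, 3.31, 4.02, 3.41, 3.60, 3.67, 3.76, 4.21, 3.76, 3.69, 4.05],
    "F7": [3.45, 3.45, 3.67, 3.76, 3.67, 2.48, 3.31, 3.55, 3.48, 3.55, 3.29, 3.83, 2.64],
    "F8": [3.14, 3.24, 3.41, 3.71, 3.31, 3.52, 3.14, 3.17, 3.88, 3.29, 3.02, 3.79, 3.29],
    "F9": [3.26, 3.36, 3.31, 3.48, 3.62, 3.02, 3.00, 3.14, 3.64, 3.64, 3.00, 3.71, 3.00],
}


def row_center(row):
    """Subtract the row mean so each attribute is expressed relative to its own average."""
    m = sum(row) / len(row)
    return [x - m for x in row]


def mat_vec(matrix, vec):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vec)) for row in matrix]


def dominant_eigenpair(matrix, start, iterations=5000):
    """Power iteration for the largest eigenvalue and unit eigenvector of a symmetric matrix."""
    vec = list(start)
    for _ in range(iterations):
        nxt = mat_vec(matrix, vec)
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    eigenvalue = sum(v * w for v, w in zip(vec, mat_vec(matrix, vec)))
    return eigenvalue, vec


def mdpref_2d(ratings, labels):
    """Return 2-D painting points, attribute vectors, and explained-variance shares."""
    names = list(ratings)
    n = len(names)
    centered = [row_center(ratings[name]) for name in names]
    # Gram matrix between attributes; its eigenvectors are the attribute directions.
    gram = [[sum(a * b for a, b in zip(ri, rj)) for rj in centered] for ri in centered]
    start = [float(i + 1) for i in range(n)]  # arbitrary non-uniform start vector
    lam1, u1 = dominant_eigenpair(gram, start)
    # Deflation removes the first component so the second becomes dominant.
    deflated = [[gram[i][j] - lam1 * u1[i] * u1[j] for j in range(n)] for i in range(n)]
    lam2, u2 = dominant_eigenpair(deflated, start)
    total = sum(gram[i][i] for i in range(n))
    vectors = {name: (u1[i], u2[i]) for i, name in enumerate(names)}
    points = {
        label: (sum(u1[i] * centered[i][k] for i in range(n)),
                sum(u2[i] * centered[i][k] for i in range(n)))
        for k, label in enumerate(labels)
    }
    return points, vectors, (lam1 / total, lam2 / total)


points, vectors, explained = mdpref_2d(RATINGS, PAINTINGS)
print("Share of variance on axes 1 and 2:", [round(s, 3) for s in explained])
for label, (x, y) in points.items():
    print(f"{label}: ({x:.2f}, {y:.2f})")
```

In this formulation, projecting a painting's point onto an attribute vector approximates that painting's centered rating on the attribute. The sign of each axis is arbitrary, so the layout may be mirrored relative to a published plot.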

| Question | The Top Three | | |
|---|---|---|---|
| Which one is the creation by an artist? | H04 (21%) | P03 (13%) | Artist (13%) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lyu, Y.; Wang, X.; Lin, R.; Wu, J. Communication in Human–AI Co-Creation: Perceptual Analysis of Paintings Generated by Text-to-Image System. Appl. Sci. 2022, 12, 11312. https://doi.org/10.3390/app122211312
